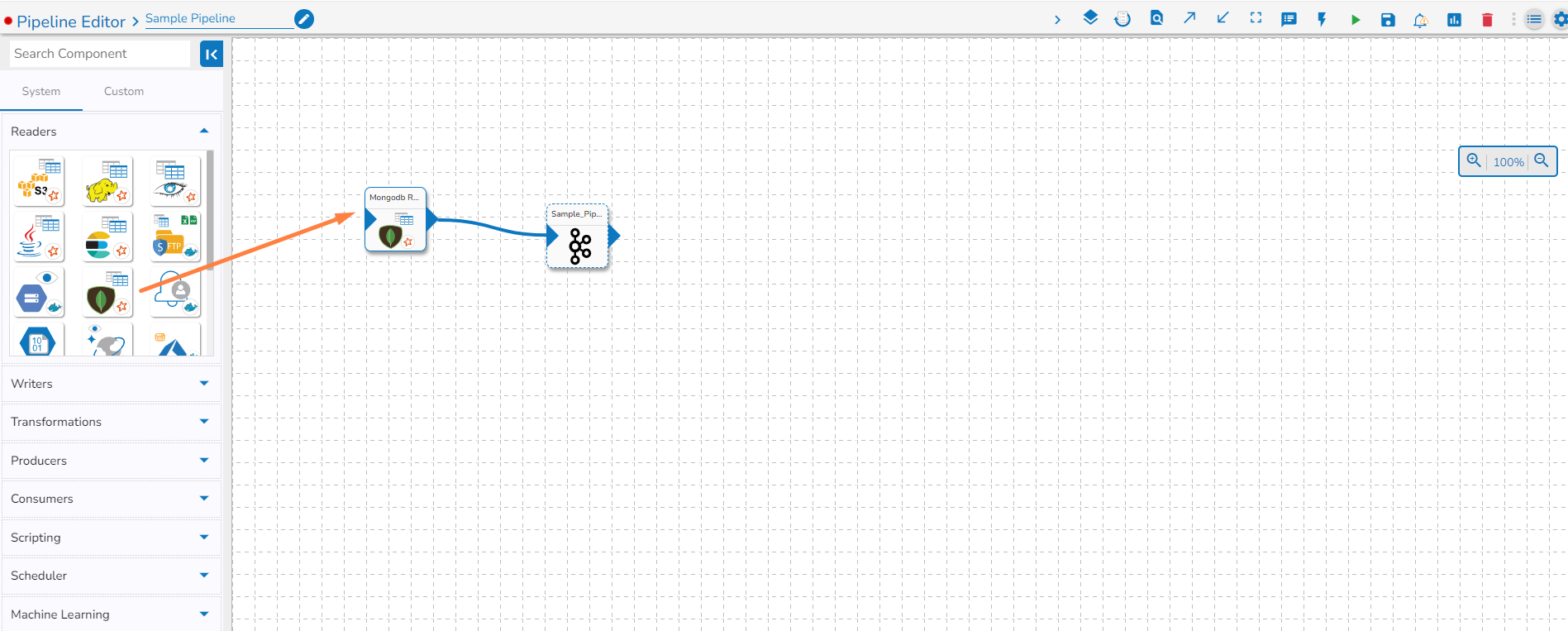

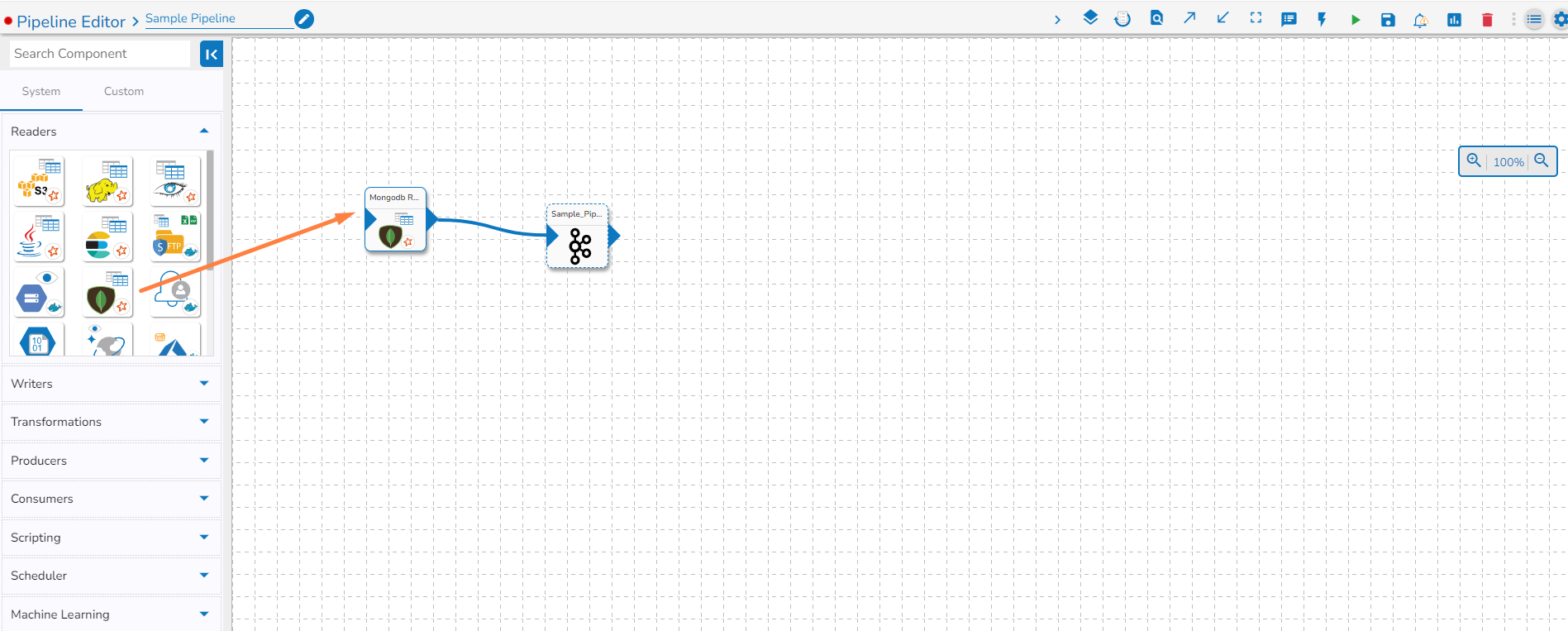

Connecting Components

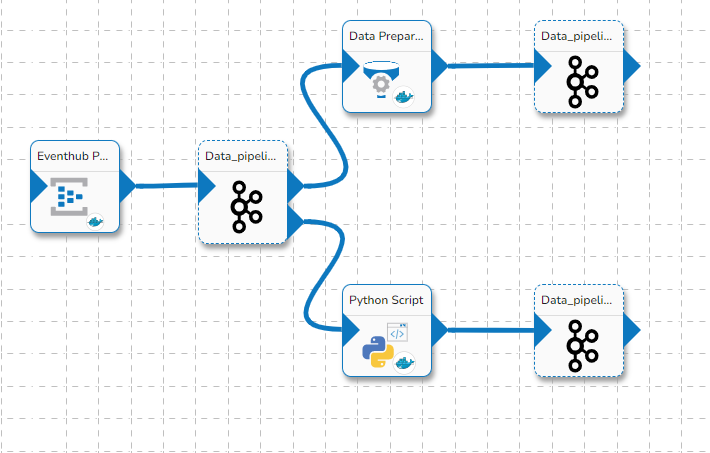

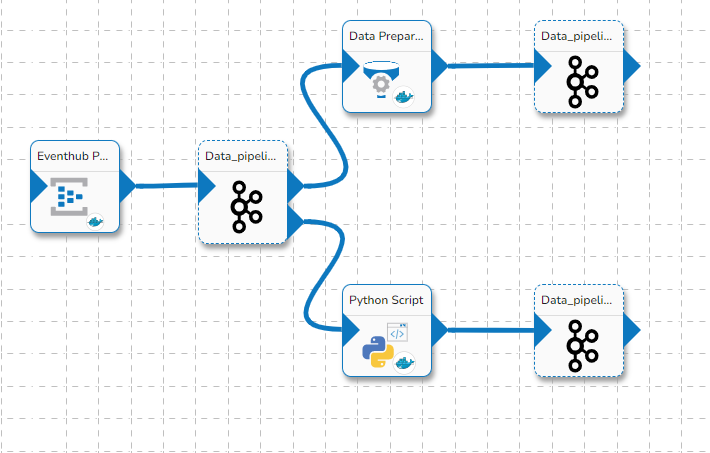

An event-driven architecture uses events to trigger and communicate between decoupled services and is common in modern applications built with microservices.
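As a rough illustration of the idea, the sketch below shows two services reacting to the same event without knowing about each other. It is a minimal in-process example, not a production design: the `EventBus` class, the `order_placed` event name, and the payload shape are all hypothetical, and a real system would typically put a managed message broker or event router between the services.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# A handler receives the event payload; it knows nothing about the publisher.
Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-process event bus, for illustration only."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Register a consumer for one event type.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        # Deliver the event to every subscriber; return how many were notified.
        handlers = list(self._handlers.get(event_type, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
shipped: List[str] = []   # stands in for a shipping service
emailed: List[str] = []   # stands in for a notification service

# Each "service" subscribes independently (assumed event name and payload).
bus.subscribe("order_placed", lambda event: shipped.append(event["order_id"]))
bus.subscribe("order_placed", lambda event: emailed.append(event["order_id"]))

# The publisher only emits the event; it never calls the services directly.
delivered = bus.publish("order_placed", {"order_id": "A-100"})
```

The publisher depends only on the event name and payload, so a new consumer can be added with another `subscribe` call and no change to the publishing code. That is the decoupling the definition above refers to.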