RabbitMQ Producer

The RabbitMQ Producer component publishes pipeline data to RabbitMQ, enabling reliable message-based communication and data flow within a data pipeline.

RabbitMQ is an open-source message broker that enables communication between different applications or services. It implements the Advanced Message Queuing Protocol (AMQP), which is a standard protocol for messaging middleware. RabbitMQ is designed to handle large volumes of message traffic and to support multiple messaging patterns such as point-to-point, publish/subscribe, and request/reply. In a RabbitMQ system, messages are produced by a sender application and delivered to a message queue. Consumers subscribe to the queue to receive messages and process them accordingly. RabbitMQ provides reliable message delivery, scalability, and fault tolerance through features such as message acknowledgement, durable queues, and clustering.

In RabbitMQ, a producer is also referred to as a "publisher" because it publishes messages to a particular exchange. The exchange then routes the message to one or more queues, which can be consumed by one or more consumers (or "subscribers").

All component configurations are broadly classified into the following sections:

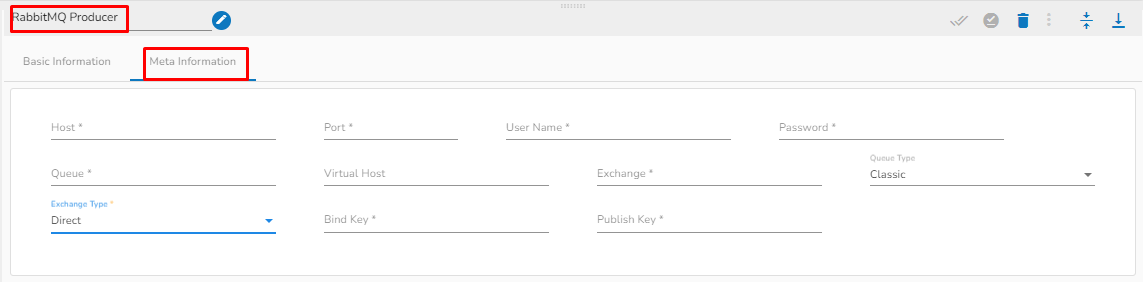

Meta Information

Configure the Meta Information tab of the RabbitMQ Producer using the following fields:

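Host: Enter the hostname or IP address of the RabbitMQ server.

Port: Enter the port on which RabbitMQ accepts AMQP connections (5672 by default, or 5671 for TLS connections).

Username: Enter the username for RabbitMQ.

Password: Enter the password used to authenticate with RabbitMQ.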

Queue: Provide the queue name. In RabbitMQ, a queue is a buffer that holds messages until they are processed by one or more consumers. For a producer, the queue is the destination to which the exchange routes the published messages.

Virtual host: Provide a virtual host. In RabbitMQ, a virtual host is a logical grouping of resources such as queues, exchanges, and bindings, which allows you to isolate and segregate different parts of your messaging system.

Exchange: Provide an exchange name. An exchange is a named entity in RabbitMQ that receives messages from producers and routes them to queues based on a set of rules called bindings. An exchange has one of several types, including "direct", "fanout", "topic", and "headers", each of which defines a different set of routing rules.
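The connection fields above (Host, Port, Username, Password, and Virtual host) correspond to the parts of a standard AMQP URI, which many RabbitMQ client libraries accept. The following is a minimal sketch of that mapping, not the component's own implementation; the helper name `build_amqp_uri`, the host name, and the credentials are hypothetical placeholders.

```python
from urllib.parse import quote


def build_amqp_uri(host, port, username, password, virtual_host="/"):
    """Assemble an AMQP URI from the Meta Information connection fields.

    Hypothetical helper for illustration only.
    """
    # Percent-encode each component. safe="" also encodes "/", so the
    # default virtual host "/" becomes "%2F" in the URI path.
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    vhost = quote(virtual_host, safe="")
    return f"amqp://{user}:{pwd}@{host}:{port}/{vhost}"


# Placeholder values; replace them with the values entered in the tab.
uri = build_amqp_uri("rabbitmq.example.internal", 5672, "pipeline_user", "s3cr/et", "/")
print(uri)
```

Encoding the virtual host matters because the default virtual host is named "/", which would otherwise be read as a path separator.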

Query Type: Select the queue type from the drop-down. Three (3) options are available:

Classic: Classic queues are the most basic type of queue in RabbitMQ, and they work in a "first in, first out" (FIFO) manner. In classic queues, messages are stored on a single node, and consumers can retrieve messages from the head of the queue.

Stream: In stream queues, messages are stored in a replicated, append-only log across multiple nodes in a cluster for fault tolerance. Messages are not removed when they are consumed, so multiple consumers can read the same messages in parallel. Stream queues can handle much higher message rates than classic queues.

Quorum: In quorum queues, messages are stored across multiple nodes in a cluster, with each message being replicated across a configurable number of nodes for fault tolerance. Quorum queues prioritize data safety and are a better fit than classic queues when messages must survive node failures.
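At the protocol level, RabbitMQ selects the queue type through the optional `x-queue-type` queue argument supplied when the queue is declared. The sketch below shows how the drop-down value could translate into declaration options. The helper name `queue_declare_options` and the queue name are hypothetical, and the returned keys assume a client whose declare call accepts `queue`, `durable`, and `arguments` parameters (as the `queue_declare` method of the pika library does). How this component declares the queue internally is not documented here.

```python
VALID_QUEUE_TYPES = {"classic", "quorum", "stream"}


def queue_declare_options(queue_name, queue_type="classic"):
    """Build keyword arguments for a queue declaration (illustrative only)."""
    if queue_type not in VALID_QUEUE_TYPES:
        raise ValueError(f"unsupported queue type: {queue_type!r}")
    return {
        "queue": queue_name,
        # Quorum queues and streams must be durable. Declaring the classic
        # queue as durable too is an assumption made for this sketch.
        "durable": True,
        # The broker reads the queue type from this optional argument.
        "arguments": {"x-queue-type": queue_type},
    }


options = queue_declare_options("pipeline.events", "quorum")
print(options)
```

With a pika channel, these options could be passed as `channel.queue_declare(**options)`.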

Exchange Type: Select the exchange type from the drop-down. Four (4) exchange types are supported:

Direct: A direct exchange routes a message to one or more queues based on an exact match between the routing key specified by the producer and the binding key used by the queue. The message is routed to a queue only if the two keys are identical.

Fanout: A fanout exchange routes every message it receives to all queues bound to it, regardless of any routing keys or binding keys. In effect, it broadcasts each message to every bound queue.

Topic: This type of exchange routes messages to one or more queues by matching the routing key against a binding pattern. Keys are made of words separated by dots, and a pattern may contain wildcards: "*" matches exactly one word and "#" matches zero or more words.

Two fields will be displayed when Direct, Fanout, or Topic is selected as the Exchange type:

Bind Key: Provide the Bind key. The binding key is used on the consumer (queue) side to determine how messages are routed from an exchange to a specific queue.

Publish Key: Enter the Publish key. The Publish key is used by the producer (publisher) when sending a message to an exchange.
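To make the relationship between the Bind Key and the Publish Key concrete, the following sketch simulates the routing decision for the direct, fanout, and topic exchange types in plain Python. It models the broker's documented matching rules and does not connect to RabbitMQ; the function names and the sample keys are hypothetical.

```python
def topic_matches(bind_key, publish_key):
    """Return True if a topic binding pattern matches a routing key."""
    pattern = bind_key.split(".")
    words = publish_key.split(".")

    def match(p, w):
        if p == len(pattern):
            # Pattern exhausted: match only if every word was consumed.
            return w == len(words)
        if pattern[p] == "#":
            # "#" may absorb zero or more words.
            return any(match(p + 1, k) for k in range(w, len(words) + 1))
        if w == len(words):
            return False
        if pattern[p] == "*" or pattern[p] == words[w]:
            # "*" matches exactly one word; literals must be equal.
            return match(p + 1, w + 1)
        return False

    return match(0, 0)


def is_routed(exchange_type, bind_key, publish_key):
    """Decide whether a message reaches a queue bound with bind_key."""
    if exchange_type == "fanout":
        return True  # keys are ignored
    if exchange_type == "direct":
        return bind_key == publish_key  # exact match required
    if exchange_type == "topic":
        return topic_matches(bind_key, publish_key)
    raise ValueError(f"unsupported exchange type: {exchange_type!r}")


print(is_routed("direct", "orders.created", "orders.created"))
print(is_routed("topic", "orders.*", "orders.eu.created"))
print(is_routed("topic", "orders.#", "orders.eu.created"))
```

In this sketch the second call does not match because "*" stands for exactly one word, while the third call matches because "#" can span several words.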

Header: This type of exchange routes messages based on header attributes instead of routing keys. A queue is bound with a set of header key-value pairs, and a message is routed to that queue when its headers match the binding according to the X-Match setting.

X-Match: This field will only appear if Header is selected as Exchange type. There are two options in it:

Any: When X-Match is set to any, the message will be delivered to a queue if it matches any of the header fields in the binding. This means that if a binding has multiple headers, the message will be delivered if it matches at least one of them.

All: When X-Match is set to all, the message will only be delivered to a queue if it matches all of the header fields in the binding. This means that if a binding has multiple headers, the message will only be delivered if it matches all of them.

Binding Headers: This field will only appear if Header is selected as Exchange type. Enter the Binding headers key and value. Binding headers are used to create a binding between an exchange and a queue based on header attributes. You can specify a set of headers in a binding, and only messages that have matching headers will be routed to the bound queue.

Publishing Headers: This field will only appear if Header is selected as Exchange type. Enter the Publishing headers key and value. Publishing headers are used to attach header attributes to messages when they are published to an exchange.
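The interaction between X-Match, Binding Headers, and Publishing Headers can be sketched as a small matching function. This is a simplified model of the behavior described above, not the broker's code; the function name `headers_match` and the sample header values are hypothetical.

```python
_MISSING = object()  # sentinel for headers absent from the message


def headers_match(binding_headers, publishing_headers, x_match="all"):
    """Return True if a message's headers satisfy a headers-exchange binding."""
    if x_match not in ("any", "all"):
        raise ValueError(f"x_match must be 'any' or 'all', got {x_match!r}")
    # A binding header matches when the message carries the same key
    # with an equal value.
    results = (
        publishing_headers.get(key, _MISSING) == value
        for key, value in binding_headers.items()
    )
    return all(results) if x_match == "all" else any(results)


binding = {"format": "pdf", "type": "report"}
message = {"format": "pdf", "type": "log"}

print(headers_match(binding, message, x_match="any"))
print(headers_match(binding, message, x_match="all"))
```

Here the message shares only the "format" header with the binding, so it is delivered under any but not under all.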