Synthetic Data Generator

The Synthetic Data Generator component generates synthetic data based on a JSON Schema (Draft-07) that describes the data to be generated.

Users can upload sample data in CSV or XLSX format, and the component generates the Draft-07 schema for that data.

The following steps show how to create and use the Synthetic Data Generator component in a Pipeline workflow.

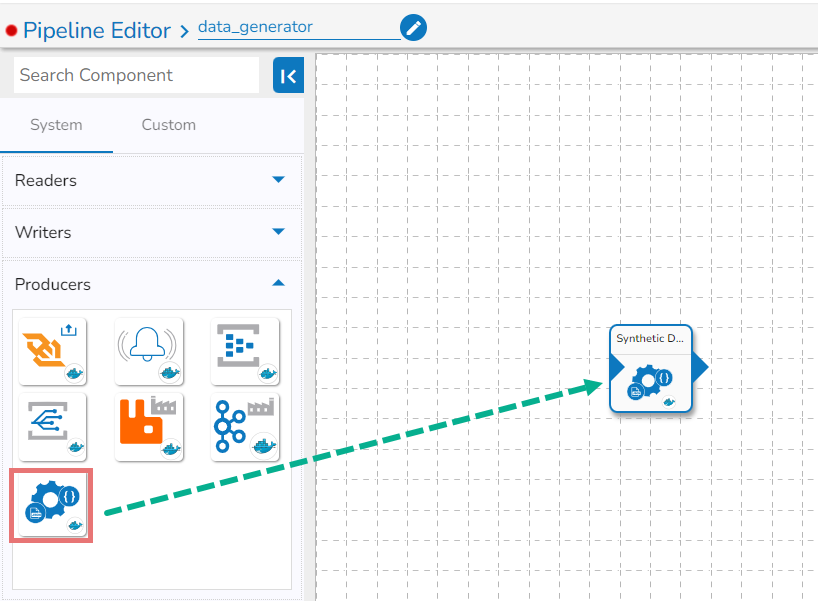

Drag and Drop the Component

Drag and drop the Synthetic Data Generator component onto the Workflow Editor.

Click the dragged Synthetic Data Generator component to open the component properties tabs.

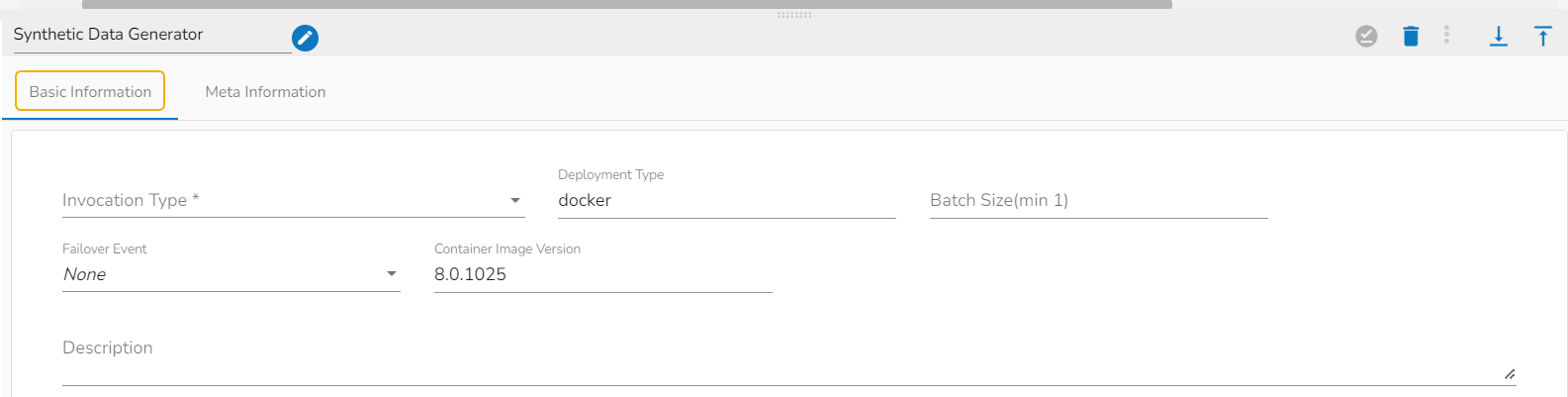

Basic Information Tab

Configure the Basic Information tab.

Invocation Type: Select an invocation type from the drop-down menu to set the running mode of the component. Select the Real-Time option.

Deployment Type: It displays the deployment type for the component. This field comes pre-selected.

Container Image Version: It displays the image version for the Docker container. This field comes pre-selected.

Failover Event: Select a failover event from the drop-down menu.

Batch Size (min 10): Provide the maximum number of records to be processed in one execution cycle. The minimum value for this field is 10.

Meta Information Tab

Configure the following information:

Iteration: Number of iterations for producing the data.

Delay (sec): Delay between each iteration in seconds.

Batch Size: Number of records to be produced in each iteration.

Upload Sample File: Upload a file containing sample data. The supported file formats are CSV, Excel (XLSX), and JSON. Once the file is uploaded, the Draft-07 schema for it is generated in the Schema tab.

Schema: The generated Draft-07 schema is displayed in this tab in an editable format.

Upload Schema: Upload a Draft-07 schema in JSON format directly. Alternatively, paste the Draft-07 schema into the Schema tab.
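To illustrate what schema generation from a sample file involves, here is a minimal Python sketch that turns CSV text into a Draft-07 object schema. It is not the component's implementation: the helpers `csv_to_draft07` and `infer_type` are hypothetical, and mapping whole-number columns to "number" and decimal columns to "float" is an assumption based on the types listed under Types and their properties.

```python
import csv
import io
import json


def infer_type(values: list) -> str:
    """Guess a field type from the non-empty string values of one CSV column."""

    def all_parse(cast) -> bool:
        try:
            for value in values:
                cast(value)
            return True
        except ValueError:
            return False

    if not values:
        return "string"  # no evidence: fall back to string
    if all_parse(int):
        return "number"  # assumption: whole numbers map to "number"
    if all_parse(float):
        return "float"  # assumption: decimals map to the component's "float" type
    return "string"


def csv_to_draft07(csv_text: str) -> dict:
    """Build a minimal Draft-07 object schema from CSV text (hypothetical helper)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    properties = {
        # Skip empty cells ("" or None for short rows) when inferring the type.
        column: {"type": infer_type([row[column] for row in rows if row.get(column)])}
        for column in (reader.fieldnames or [])
    }
    return {
        "$schema": "http://json-schema.org/draft-07/schema#",
        "type": "object",
        "properties": properties,
    }


sample = "name,age,score\nAsha,34,81.5\nLi,29,90\n"
print(json.dumps(csv_to_draft07(sample), indent=2))
```

A real generator would likely infer more (formats such as 'date' or 'email', enums for low-cardinality columns); the sketch only shows the basic column-to-type mapping.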

Saving the Component Configuration

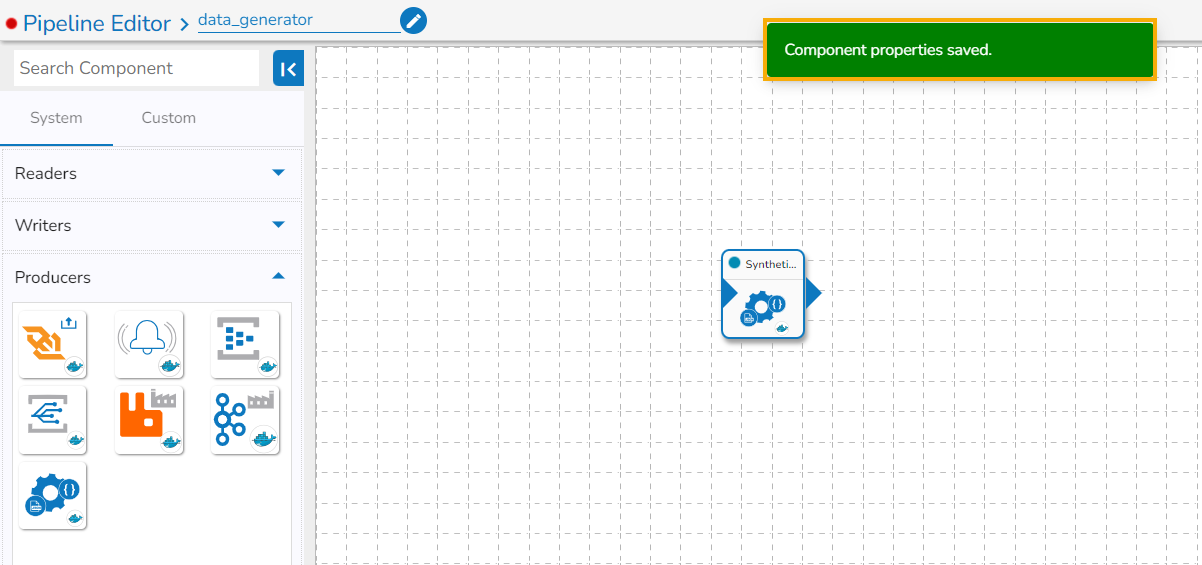

After completing the configuration, click the Save Component in Storage icon in the configuration panel to save the component.

A notification message appears to confirm that the component configuration has been saved.

Please Note: Total number of generated records = Iteration × Batch Size (as set in the Meta Information tab).
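As a worked example of this formula, the hypothetical helper below computes the record count for a given configuration. It also estimates the total waiting time added by Delay (sec), assuming the delay is applied only between iterations and not after the last one; that timing behavior is an assumption, not documented behavior.

```python
def generation_plan(iterations: int, batch_size: int, delay_sec: float) -> tuple:
    """Return (total records, total delay in seconds) for one run."""
    # Total records = Iteration * Batch Size.
    total_records = iterations * batch_size
    # Assumption: Delay (sec) is applied only between iterations,
    # so a run has (iterations - 1) pauses.
    total_delay = max(iterations - 1, 0) * delay_sec
    return total_records, total_delay


# Hypothetical settings: 5 iterations, 100 records per iteration, 2-second delay.
print(generation_plan(iterations=5, batch_size=100, delay_sec=2))  # (500, 8)
```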

Sample Schema File

A sample schema file is provided below for users to explore the component.

Please Note: Weights can be assigned to enum values to control the bias (distribution) of the generated data. The weights must add up to exactly 1, as in the following example:

"age": { "type": "string", "enum": ["Young", "Middle","Old"], "weights":[0.6,0.2,0.2]}
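The effect of weights can be sketched with Python's `random.choices`, which samples from a list with relative weights. This is a minimal illustration, not the component's code: `sample_weighted_enum` is a hypothetical helper, and it checks the "sum to 1" rule with `math.isclose` (a tolerance chosen here so that float rounding does not reject valid weights).

```python
import math
import random
from collections import Counter
from typing import Optional


def sample_weighted_enum(enum: list, weights: list, k: int, seed: Optional[int] = None) -> list:
    """Draw k values from `enum`, biased by `weights`."""
    if len(enum) != len(weights):
        raise ValueError("enum and weights must have the same length")
    # The weights must add up to 1; isclose() tolerates float rounding such as 0.1 + 0.2.
    if not math.isclose(sum(weights), 1.0):
        raise ValueError(f"weights must sum to 1, got {sum(weights)}")
    rng = random.Random(seed)
    return rng.choices(enum, weights=weights, k=k)


# The "age" field from the snippet above.
age = {"type": "string", "enum": ["Young", "Middle", "Old"], "weights": [0.6, 0.2, 0.2]}
values = sample_weighted_enum(age["enum"], age["weights"], k=1000, seed=42)
print(Counter(values))  # counts follow roughly a 60/20/20 split, up to sampling noise
```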

Types and their properties

Type: "string"

Properties:

maxLength: Maximum length of the string.

minLength: Minimum length of the string.

enum: A list of values that the string can take.

weights: Weights for each value in the enum list.

format: Available formats include 'date', 'date-time', 'name', 'country', 'state', 'email', 'uri', and 'address'.
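A minimal sketch of how these string properties could drive value generation is shown below. It is an assumption-laden illustration, not the component's logic: `generate_string` is hypothetical, `enum` takes precedence over the length bounds, the default cap of 10 characters when `maxLength` is absent is invented for the example, and `format` is not handled.

```python
import random
import string


def generate_string(field: dict, rng: random.Random) -> str:
    """Produce one value for a "string" field (no `format` handling)."""
    if "enum" in field:
        # enum values, optionally biased by weights (None means uniform).
        return rng.choices(field["enum"], weights=field.get("weights"), k=1)[0]
    min_len = field.get("minLength", 0)
    # Assumption: when maxLength is absent, cap the length at max(minLength, 10).
    max_len = field.get("maxLength", max(min_len, 10))
    length = rng.randint(min_len, max_len)
    return "".join(rng.choice(string.ascii_letters) for _ in range(length))


rng = random.Random(7)
print(generate_string({"type": "string", "minLength": 3, "maxLength": 8}, rng))
print(generate_string({"type": "string", "enum": ["red", "green"], "weights": [0.9, 0.1]}, rng))
```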

For 'date' and 'date-time' formats, the following properties can be set:

minimum: Minimum date or date-time value.

maximum: Maximum date or date-time value.

interval: For the 'date' format, the interval is the number of days. For the 'date-time' format, the interval is the time difference in seconds.

occurrence: Indicates how many times a date or date-time value repeats in the data. It should only be used together with the 'interval' and 'start' keywords.
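One plausible reading of how 'start', 'interval', and 'occurrence' combine for the 'date' format is sketched below: begin at `start`, step forward `interval` days up to `maximum`, and repeat each date `occurrence` times in a row. These semantics, the ISO-8601 date strings, and the helper name `generate_dates` are assumptions for illustration; a 'date-time' variant would step by `timedelta(seconds=interval)` instead.

```python
from datetime import date, timedelta


def generate_dates(start: str, maximum: str, interval: int, occurrence: int = 1) -> list:
    """List dates from `start` to `maximum`, stepping `interval` days,
    with each date repeated `occurrence` times (assumed semantics)."""
    if interval <= 0 or occurrence <= 0:
        raise ValueError("interval and occurrence must be positive")
    current = date.fromisoformat(start)
    end = date.fromisoformat(maximum)
    step = timedelta(days=interval)
    values = []
    while current <= end:
        values.extend([current.isoformat()] * occurrence)
        current += step
    return values


print(generate_dates("2024-01-01", "2024-01-05", interval=2, occurrence=2))
# ['2024-01-01', '2024-01-01', '2024-01-03', '2024-01-03', '2024-01-05', '2024-01-05']
```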

The string type also supports the 'current_datetime' format, which populates the field with the current date-time.

Type: "number"

Properties:

minimum: The minimum value for the number.

maximum: The maximum value for the number.

exclusiveMinimum: Indicates whether the minimum value is exclusive.

exclusiveMaximum: Indicates whether the maximum value is exclusive.

unique: Determines whether the field generates unique values (True/False).

start: Used with unique values; sets the starting point for the unique values.

enum: A list of values that the number can take.

weights: Weights for each value in the enum list.
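The sketch below shows one way these number properties could interact; it is not the component's implementation. The helper `number_values` is hypothetical, it assumes "number" produces integers (since "float" is a separate type), that unique values count up by 1 from `start`, and that the exclusive properties are True/False flags as described above. Note that in standard JSON Schema Draft-07, `exclusiveMinimum` and `exclusiveMaximum` are numeric bounds rather than booleans, so the component's usage may differ from the specification.

```python
import itertools
import random


def number_values(field: dict, rng: random.Random):
    """Yield an endless stream of values for a "number" field (simplified sketch)."""
    if field.get("unique"):
        # Assumption: unique values are consecutive integers counting up from `start`.
        yield from itertools.count(field.get("start", 0))
    elif "enum" in field:
        while True:
            yield rng.choices(field["enum"], weights=field.get("weights"), k=1)[0]
    else:
        low = field.get("minimum", 0)
        high = field.get("maximum", 100)  # assumed defaults when bounds are absent
        # Assumption: True/False exclusive flags exclude the bound itself.
        if field.get("exclusiveMinimum"):
            low += 1
        if field.get("exclusiveMaximum"):
            high -= 1
        while True:
            yield rng.randint(low, high)


rng = random.Random(1)
ids = number_values({"type": "number", "unique": True, "start": 1000}, rng)
print(list(itertools.islice(ids, 3)))  # [1000, 1001, 1002]
```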

Type: "float"

Properties:

minimum: The minimum float value.

maximum: The maximum float value.

Note: Draft-07 schemas support if-then-else conditions on fields, enabling complex validations and logical checks. Additionally, mathematical computations can be performed by specifying an expression within the schema.
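To make the if-then-else idea concrete, the sketch below uses a hypothetical schema in which the allowed "age" range depends on "age_group", plus a small helper that picks the `then` or `else` branch for a record. The field names and helpers are invented for illustration, and the `if` check supports only `required` and `const`, not the full Draft-07 vocabulary; a generator could use the selected branch to decide which bounds apply.

```python
def if_matches(if_schema: dict, record: dict) -> bool:
    """Check a record against an `if` sub-schema (only `required` and `const` are supported)."""
    for name in if_schema.get("required", []):
        if name not in record:
            return False
    for name, rule in if_schema.get("properties", {}).items():
        # As in Draft-07, `properties` only constrains keys that are present.
        if name in record and "const" in rule and record[name] != rule["const"]:
            return False
    return True


def applicable_branch(schema: dict, record: dict) -> dict:
    """Return the `then` or `else` sub-schema that applies to `record`."""
    if "if" not in schema:
        return {}
    return schema.get("then", {}) if if_matches(schema["if"], record) else schema.get("else", {})


# Hypothetical schema: the allowed "age" range depends on "age_group".
schema = {
    "type": "object",
    "properties": {
        "age_group": {"type": "string", "enum": ["Young", "Old"]},
        "age": {"type": "number"},
    },
    "if": {"properties": {"age_group": {"const": "Young"}}, "required": ["age_group"]},
    "then": {"properties": {"age": {"minimum": 18, "maximum": 35}}},
    "else": {"properties": {"age": {"minimum": 36, "maximum": 90}}},
}

print(applicable_branch(schema, {"age_group": "Young"}))  # {'properties': {'age': {'minimum': 18, 'maximum': 35}}}
print(applicable_branch(schema, {"age_group": "Old"}))  # {'properties': {'age': {'minimum': 36, 'maximum': 90}}}
```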

Example: Here, the value of number3 is calculated with the following expression:

"$eval": "data.number1 + data.number2 * 2"
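How such an expression might be evaluated can be sketched with Python's `ast` module, which parses the expression and lets you allow only arithmetic on `data.<field>` references instead of calling `eval` on untrusted text. This is an illustrative assumption, not the component's evaluator: `eval_expression` is hypothetical, supports only `+`, `-`, `*`, `/`, numeric literals, and `data.<field>`, and follows Python's usual operator precedence.

```python
import ast
import operator

# Arithmetic operators the sketch accepts; anything else is rejected.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def eval_expression(expression: str, data: dict):
    """Safely evaluate arithmetic over `data.<field>` references."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.Attribute) and isinstance(node.value, ast.Name) and node.value.id == "data":
            return data[node.attr]  # e.g. data.number1 -> data["number1"]
        raise ValueError(f"unsupported expression element: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))


record = {"number1": 3, "number2": 4}
# Multiplication binds tighter than addition: 3 + (4 * 2) = 11.
record["number3"] = eval_expression("data.number1 + data.number2 * 2", record)
print(record)  # {'number1': 3, 'number2': 4, 'number3': 11}
```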
