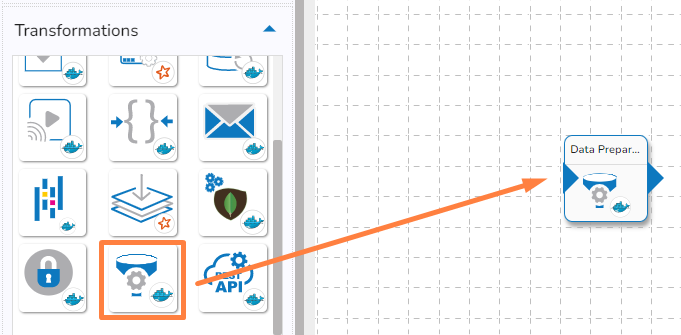

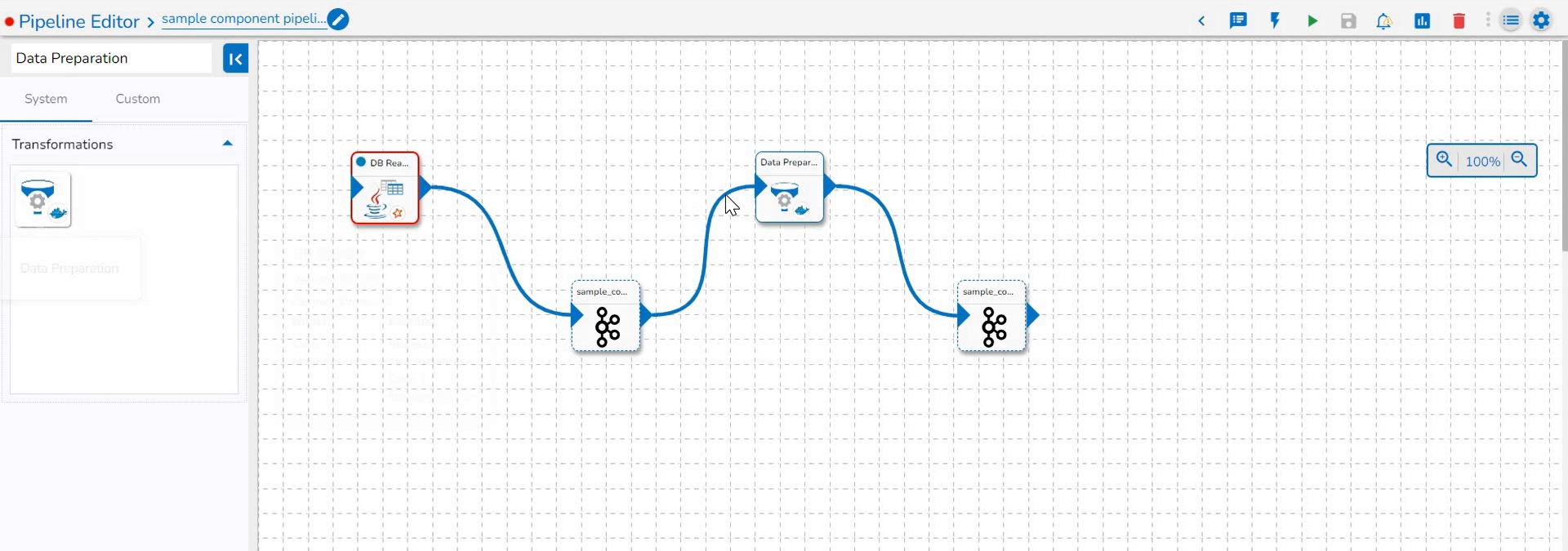

Data Preparation (Docker)

Steps to configure the Data Preparation component

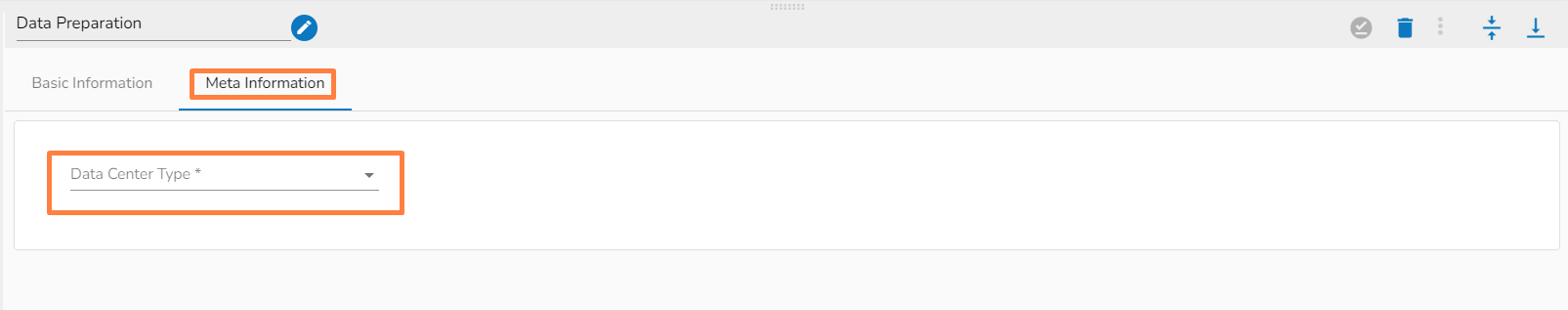

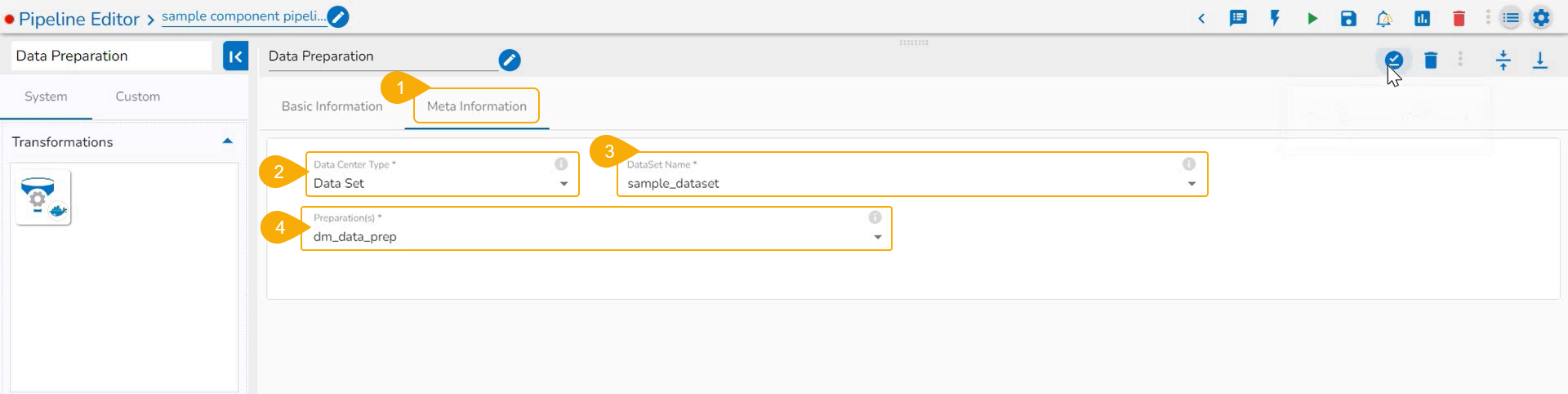

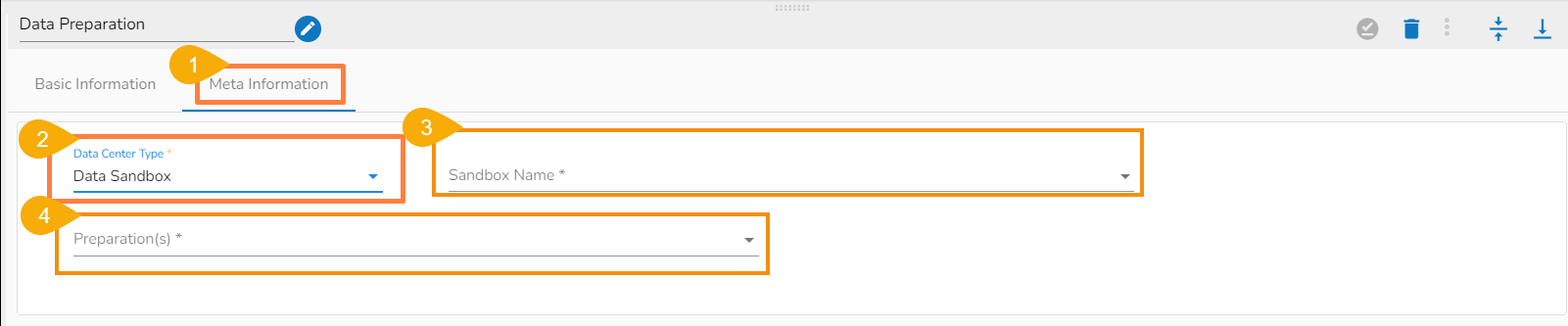

Meta Information

When Data Set is selected as Data Center Type

When Data Sandbox is selected as Data Center Type

Saving the Component
