AutoML Runner

The AutoML Runner automates the entire workflow of creating, training, and deploying machine learning models. It integrates with the DS Lab module, so models built there can be imported into the pipeline.

All component configurations are broadly classified into three sections. The Basic Information and Meta Information sections are described below.

Steps to Configure the Auto ML Runner

Drag and drop the Auto ML Runner component to the Workflow Editor.

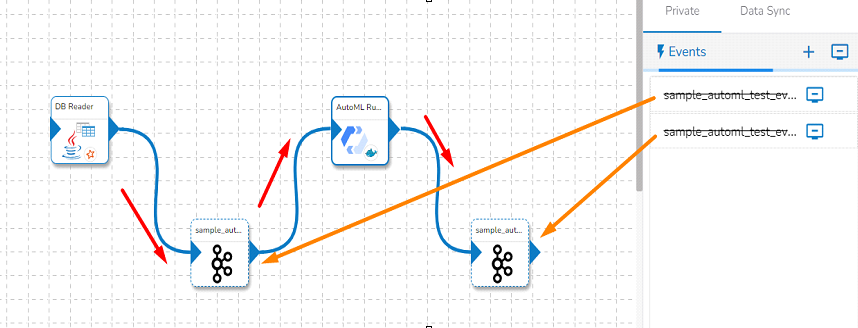

The Auto ML Runner reads input data from one Event and sends the processed data to another Event. Create two Events and drag them onto the Workspace.

Connect the input and output Events to the Auto ML Runner component.

The data in the input Event can come from any Ingestion or Reader component, any script from the DS Lab module, or a shared Event.

Click the Auto ML Runner component to open its component properties tabs.

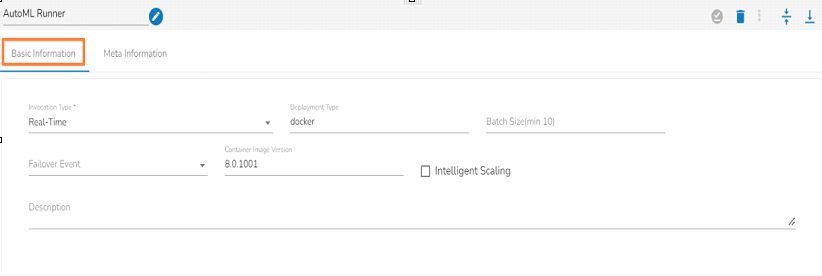

Basic Information

This tab opens by default when you click the component.

Invocation Type: Select Real-Time or Batch from the drop-down menu to set the running mode of the component.

Please Note: If you select Batch as the Invocation Type, the Grace Period (in sec)* field appears. Use it to specify the time, in seconds, after which the component shuts down gracefully.

Deployment Type: It displays the deployment type for the component. This field comes pre-selected.

Container Image Version: It displays the image version for the docker container. This field comes pre-selected.

Failover Event: Select a failover Event from the drop-down menu.

Batch Size (min 10): Provide the maximum number of records to process in one execution cycle. The minimum value for this field is 10.

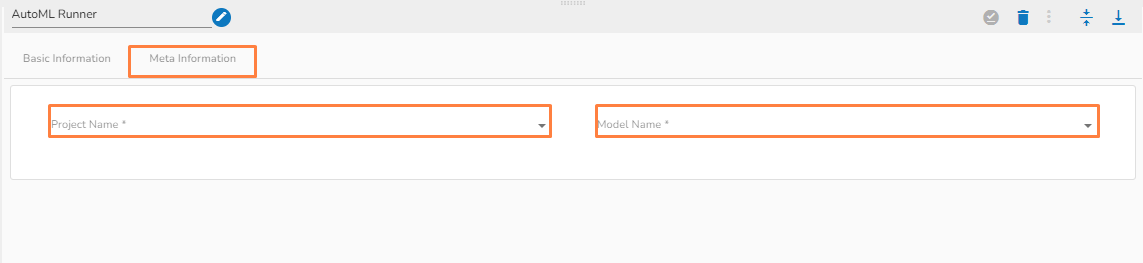
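The rules stated for the Basic Information tab (two invocation types, a required grace period for Batch, and a minimum batch size of 10) can be summarized as a small validation sketch. This is illustrative only: the class name `BasicInformation` and its field names are hypothetical and do not come from the product, which enforces these rules in its UI.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model of the Basic Information tab; names are illustrative,
# not the product's API.
VALID_INVOCATION_TYPES = {"Real-Time", "Batch"}
MIN_BATCH_SIZE = 10  # documented minimum for the Batch Size field


@dataclass
class BasicInformation:
    invocation_type: str
    batch_size: int
    failover_event: Optional[str] = None
    grace_period_sec: Optional[int] = None  # shown only for Batch invocation

    def validate(self) -> None:
        """Raise ValueError if the settings break the documented rules."""
        if self.invocation_type not in VALID_INVOCATION_TYPES:
            raise ValueError(f"unknown invocation type: {self.invocation_type!r}")
        if self.batch_size < MIN_BATCH_SIZE:
            raise ValueError(f"batch size must be at least {MIN_BATCH_SIZE}")
        # The Grace Period (in sec)* field is marked required when Batch is chosen.
        if self.invocation_type == "Batch" and self.grace_period_sec is None:
            raise ValueError("Batch invocation requires a grace period")
```

For example, `BasicInformation("Batch", batch_size=50, grace_period_sec=30).validate()` passes, while omitting `grace_period_sec` for a Batch configuration raises `ValueError`.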

Meta Information

Project Name: Name of the DS Lab project in which you created the model.

Model Name: Name of the model saved in that DS Lab project.
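The two Meta Information fields work together to identify a single saved model. The sketch below shows that idea as a lookup in a hypothetical in-memory registry keyed by `(project_name, model_name)`. It assumes that model names need only be unique within a project; `ModelReference`, `resolve_model`, and the registry are illustrative and are not part of DS Lab.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class ModelReference:
    """Hypothetical pairing of the Project Name and Model Name fields."""
    project_name: str
    model_name: str


def resolve_model(ref: ModelReference, registry: Dict[Tuple[str, str], Any]) -> Any:
    """Look up a saved model in a hypothetical (project, model) registry.

    Assumption: the same model name may exist in different projects,
    so both fields are needed to select exactly one model.
    """
    key = (ref.project_name, ref.model_name)
    if key not in registry:
        raise KeyError(
            f"model {ref.model_name!r} not found in project {ref.project_name!r}"
        )
    return registry[key]
```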

Saving the Auto ML Runner Component

Click the Save Component in Storage icon. A success notification message appears when the component gets saved.

The Auto ML Runner component reads the data arriving at the input Event, runs the model on it, and sends the output data, with the predicted columns added, to the output Event.
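The read, predict, and write cycle described above can be sketched as a loop that groups incoming records into batches (as set by Batch Size), scores each batch, and emits each record with a prediction column appended. This is a conceptual sketch under stated assumptions, not the component's implementation: records are modeled as dictionaries, the `predict` callable stands in for the DS Lab model, and the function names and the `prediction` column name are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Record = Dict[str, Any]  # assumption: one event record as a dict of columns


def batched(records: Iterable[Record], batch_size: int) -> Iterator[List[Record]]:
    """Yield lists of at most batch_size records; the last may be shorter."""
    batch: List[Record] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_auto_ml(
    records: Iterable[Record],
    predict: Callable[[List[Record]], List[Any]],  # stand-in for the DS Lab model
    batch_size: int = 10,
    prediction_column: str = "prediction",  # hypothetical output column name
) -> Iterator[Record]:
    """Hypothetical runner loop: score each batch and emit enriched records."""
    for batch in batched(records, batch_size):
        predictions = predict(batch)
        if len(predictions) != len(batch):
            raise ValueError("model returned a different number of predictions")
        for record, value in zip(batch, predictions):
            # Build a new dict so the input record is left unchanged.
            yield {**record, prediction_column: value}
```

With 25 input records and `batch_size=10`, the model is called three times (on 10, 10, and 5 records), and every output record carries the original columns plus the prediction.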