Data Science Lab Quick Start Flow

This page outlines the major steps a user needs to get started with Data Science Experiments.

The Data Science module allows the user to create Data Science Experiments and productionize them. The sections below summarize the entire Data Science flow so that the user can begin experimenting quickly.

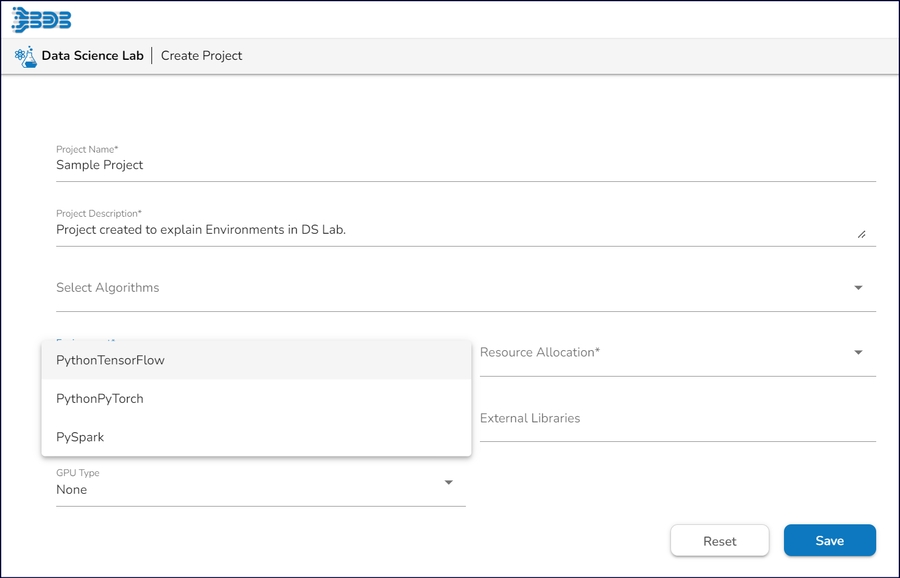

Project Creation

A Data Science Project created inside the Data Science Lab is like a Workspace inside which the user can create and store multiple data science experiments.

Prerequisite: The DS Lab Settings option must be configured before creating a Data Science Project. In addition, use the Algorithms field in the DS Lab Settings section to select the algorithms you wish to use inside your Data Science Lab project.

Creating a New Project

Open the Data Science Lab module and use the Create Project option to begin creating a Project. Refer to the Creating a Project page for the detailed steps.

Project List

Once a Data Science Project is created, it is listed on the Projects page. The following Actions can be applied to each Project in the list:

Push to VCS (only available for an activated Project)

Pull from VCS (only available for an activated Project)

Please Note: Refer to the Project List page to understand all the above-listed options in detail.

Supported Environment

The following environments are supported inside a Data Science Lab Project.

TensorFlow: Users can execute Sklearn commands by default in the notebook. If users select the TensorFlow environment, they do not need to install packages such as TensorFlow and Keras explicitly in the notebook. These packages can simply be imported inside the notebook.

PyTorch: If users select the PyTorch environment, they do not need to install packages such as Torch and Torchvision explicitly in the notebook. These packages can simply be imported inside the notebook.

PySpark: If users select the PySpark environment, they do not need to install the PySpark package explicitly in the notebook. It can simply be imported inside the notebook.
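As a rough way to confirm that the packages named above can be imported in a given notebook, the following sketch uses only the Python standard library. The `ENVIRONMENT_PACKAGES` mapping and the `missing_packages` helper are illustrative assumptions, not part of the Data Science Lab itself; the import names (`tensorflow`, `keras`, `torch`, `torchvision`, `pyspark`, `sklearn`) are the usual top-level module names for these libraries.

```python
import importlib.util

# Top-level import names for the packages each environment is described as
# providing. This mapping is an illustrative assumption, not a platform API.
ENVIRONMENT_PACKAGES = {
    "Sklearn": ["sklearn"],
    "TensorFlow": ["tensorflow", "keras"],
    "PyTorch": ["torch", "torchvision"],
    "PySpark": ["pyspark"],
}


def missing_packages(environment):
    """Return the packages for `environment` that cannot be imported here."""
    return [
        name
        for name in ENVIRONMENT_PACKAGES[environment]
        # find_spec returns None when a top-level module cannot be located;
        # it does not import the module itself.
        if importlib.util.find_spec(name) is None
    ]


for env in ENVIRONMENT_PACKAGES:
    missing = missing_packages(env)
    status = "ready" if not missing else "missing: " + ", ".join(missing)
    print(f"{env}: {status}")
```

Run inside a notebook, a line reading "ready" suggests the corresponding packages can be imported without an explicit install step.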

Please Note:

The Sklearn environment is the default environment for a Data Science Lab Project.

The Project-level tabs provided for the TensorFlow and PyTorch environments are the same, so this document presents their content together.

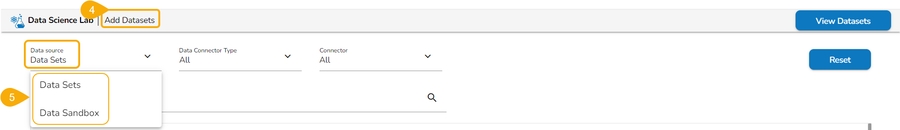

Dataset

Data is the first requirement for any Data Science Project. The user can add the required datasets and view the added datasets under a specific Project by using the Dataset tab.

Click the Dataset tab on the Project List page to access the Add Datasets option.

Adding Data Sets

This tab lists the Data Sets and Data Sandbox entries that have been uploaded through the Data Center module.

The Add Datasets page offers the following Data service options to add as datasets:

Data Sets – These are the uploaded data sets (data services) from the Data Center module.

Data Sandbox – This option lists all the available (uploaded) Data Sandbox entries.

Check out the given illustrations to understand the Adding Dataset (Data Service) and Adding Data Sandbox steps in detail.

Please Note:

The user can add Datasets by using either the Dataset tab or the Notebook page.

The Data Set types that can be added to a Project or Notebook depend on the selected environment. E.g., the PySpark environment does not support a Data Service as a Dataset.

Refer to the Adding Data Sets section and its sub-pages for details.

Refer to the Data Preparation page to understand how to apply the required Data Preparation steps to a specific dataset from the Data Set List page.

Data Set List

Each uploaded or added dataset offers Actions that help users create more accurate Data Science Experiments. The following major Actions are provided for an added Data Set:

Preview - Opens preview of the selected dataset.

Data Profile - Displays a detailed profile of the data, covering data quality, structure, and consistency.

Create Experiment - Creates an AutoML experiment on the selected Dataset.

Data Preparation - Cleans the data to improve its quality and accuracy, which directly affects the reliability of the results.

Delete - Deletes the selected Dataset.

Please Note: The user can click each of the above Action options to open its detailed information.
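To give a feel for the kind of information a data profile summarizes, here is a minimal sketch in plain Python. It is not the platform's Data Profile implementation; the sample CSV, the `profile` function, and the chosen statistics (missing cells, distinct values, numeric mean) are assumptions made for illustration only.

```python
import csv
import io
from statistics import mean

# Hypothetical sample data; in practice the rows would come from a dataset
# that has been added to the Project.
SAMPLE_CSV = """age,city,income
34,Pune,52000
,Delhi,61000
29,Pune,
41,,58000
"""


def profile(rows):
    """Summarize each column: missing cells, distinct values, numeric mean."""
    summary = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        present = [v for v in values if v != ""]
        try:
            numeric_mean = mean(float(v) for v in present)
        except ValueError:
            # Raised for non-numeric text and for columns with no values.
            numeric_mean = None
        summary[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "mean": numeric_mean,
        }
    return summary


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for column, stats in profile(rows).items():
    print(column, stats)
```

Counts of missing and distinct values speak to quality and consistency, while the per-column mean (or its absence for text columns) hints at structure.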

Data Science Experiment

Once the user creates a Project and adds the required Data Sets to it, the Project is ready to hold a Data Science Experiment. The Data Science Lab user can proceed with Data Science Experiments in the following ways:

Use the Notebook infrastructure provided under the Project to create a script, save it as a model or script, load a model, and predict with it. It is also possible to save the Artifacts for a Saved Model. Refer to the Notebook section for more details.

Data Science Model

The Notebook Operations section of this documentation provides details on the above-stated aspects of Data Science Models.
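The save, load, and predict cycle mentioned above can be pictured with a small generic sketch. It does not use the Data Science Lab's own Notebook Operations, whose function names are not shown on this page; the `MeanRegressor` class and its JSON-based `save` and `load` methods are hypothetical stand-ins chosen so that the example runs with the standard library alone.

```python
import json


class MeanRegressor:
    """Toy model that always predicts the mean of its training targets."""

    def __init__(self, mean=0.0):
        self.mean = mean

    def fit(self, targets):
        self.mean = sum(targets) / len(targets)
        return self

    def predict(self, rows):
        # One prediction per input row; the row contents are ignored here.
        return [self.mean for _ in rows]

    def save(self):
        """Serialize the learned parameters to a JSON string."""
        return json.dumps({"mean": self.mean})

    @classmethod
    def load(cls, payload):
        """Rebuild a model from a string produced by `save`."""
        return cls(**json.loads(payload))


# Train on hypothetical targets, then save, load, and predict.
model = MeanRegressor().fit([10.0, 20.0, 30.0])
saved = model.save()
restored = MeanRegressor.load(saved)
predictions = restored.predict([[4], [5]])
print(predictions)
```

The point of the sketch is the order of operations: a model trained in one step is persisted, restored later, and only then used for prediction.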

Algorithms and Transforms are also available for the Data Science models inside the Notebook Page as Notebook Operations.

The Notebook Page may contain a customized list of Notebook Operations based on the selected environment. E.g., the Data Science Projects created using the PySpark environment contain the following Notebook Operations:

Refer to the environment-specific Notebook Operations by using the following options:

Notebook List Page

The Notebook List page lists all the Notebooks created and saved inside a Data Science Project. The user can apply the following Actions to a Notebook from the Notebook List page:

Use the AutoML functionality to get auto-trained Data Science models. The user can use the Create Experiment option provided on the Dataset List page to begin AutoML model creation. Refer to the AutoML section of this documentation for more details.

Data Science Experiment

Access the Create Experiment option under the Dataset List for Projects created under a supported environment, such as PyTorch or TensorFlow.

Once the AutoML experiment is created successfully, the user is directed to the AutoML List.

The following Actions are provided on the AutoML List page:

Details

Models - This option provides the detailed model explanation.

The View Explanation option is provided for both manually created Data Science models and AutoML generated models.

Model Explainability

The Model Explainer dashboard for a Data Science Lab model.

The View Explanation option follows the flow below to explain a model.

Model Summary - The Model Summary/Run Summary displays basic information about the top trained model.

Model Interpretation - This option provides the Model Explainer dashboards for an AutoML model.

Classification Model Explainer - This page provides the explainer dashboards for Classification Models.

Regression Model Explainer - This page provides the explainer dashboards for Regression Models.

Forecasting Model Explainer - This page provides model explainer dashboards for Forecasting Models.

Dataset Explainer - The Dataset Explainer tab provides a high-level preview of the dataset that has been used for the experiment.

Please Note:

The AutoML functionality is not supported at present for Projects created within the PySpark environment.

Model As API functionality is not available for the AutoML Models.

Model Explainability and Model As API functionalities are not available for the Imported models.
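The restrictions in the notes above can be written down as a small lookup, which may help when deciding up front which path suits a model. This is only a sketch: the string labels (`"automl"`, `"imported"`, `"model_as_api"`, `"model_explainability"`) and both helper functions are illustrative names, not identifiers used by the platform, and the lookup encodes nothing beyond the three notes.

```python
# Features that the notes above rule out, keyed by how the model was obtained.
# All labels are illustrative, not platform identifiers.
UNAVAILABLE_BY_MODEL = {
    "automl": {"model_as_api"},
    "imported": {"model_explainability", "model_as_api"},
}

# Environments in which AutoML is stated to be unsupported at present.
AUTOML_UNSUPPORTED_ENVIRONMENTS = {"PySpark"}


def is_ruled_out(feature, model_origin):
    """True if the notes explicitly exclude `feature` for this kind of model."""
    return feature in UNAVAILABLE_BY_MODEL.get(model_origin, set())


def automl_supported(environment):
    """False only for environments the notes list as unsupported."""
    return environment not in AUTOML_UNSUPPORTED_ENVIRONMENTS


print(is_ruled_out("model_as_api", "automl"))
print(is_ruled_out("model_explainability", "automl"))
print(automl_supported("PySpark"))
```

A `False` from `is_ruled_out` means only that the notes do not exclude the combination; it is not a guarantee that the feature is offered.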

Repo Sync Project

Refer to the page links below for the various functionalities provided under the Repo Sync Project:

Repo Sync Project in the Python Environment (TensorFlow or PyTorch Environment)
