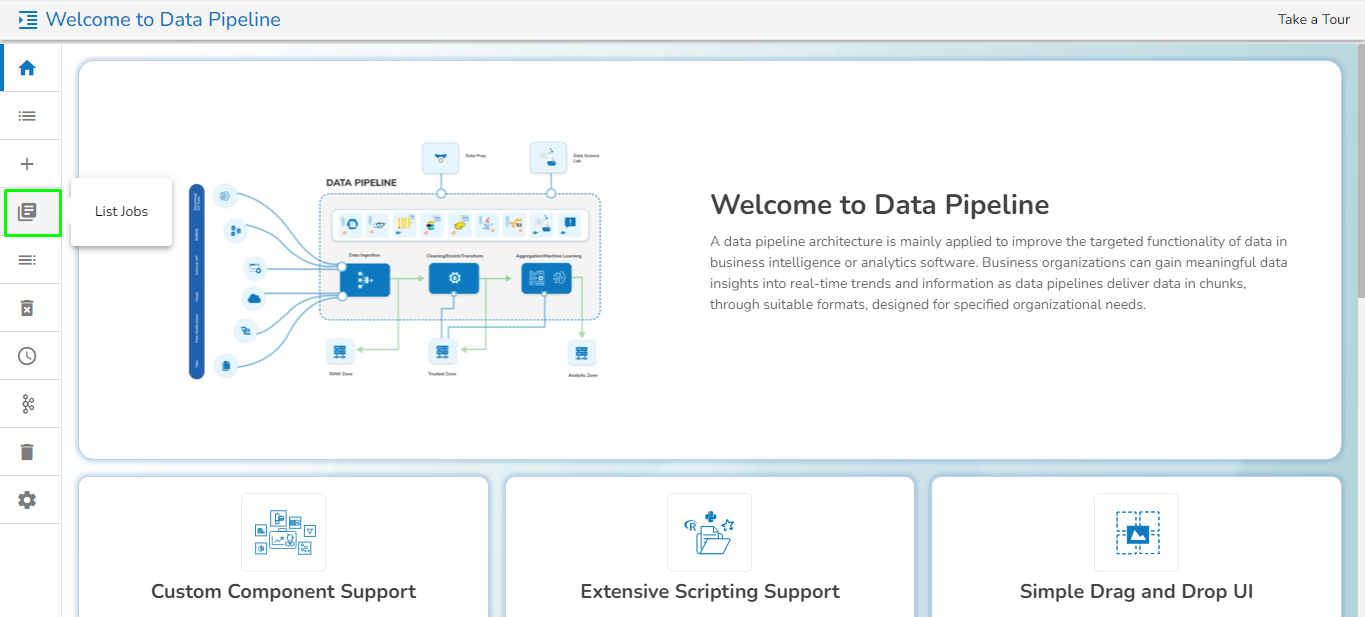

List Jobs

This option lists all the Jobs saved by the logged-in user.

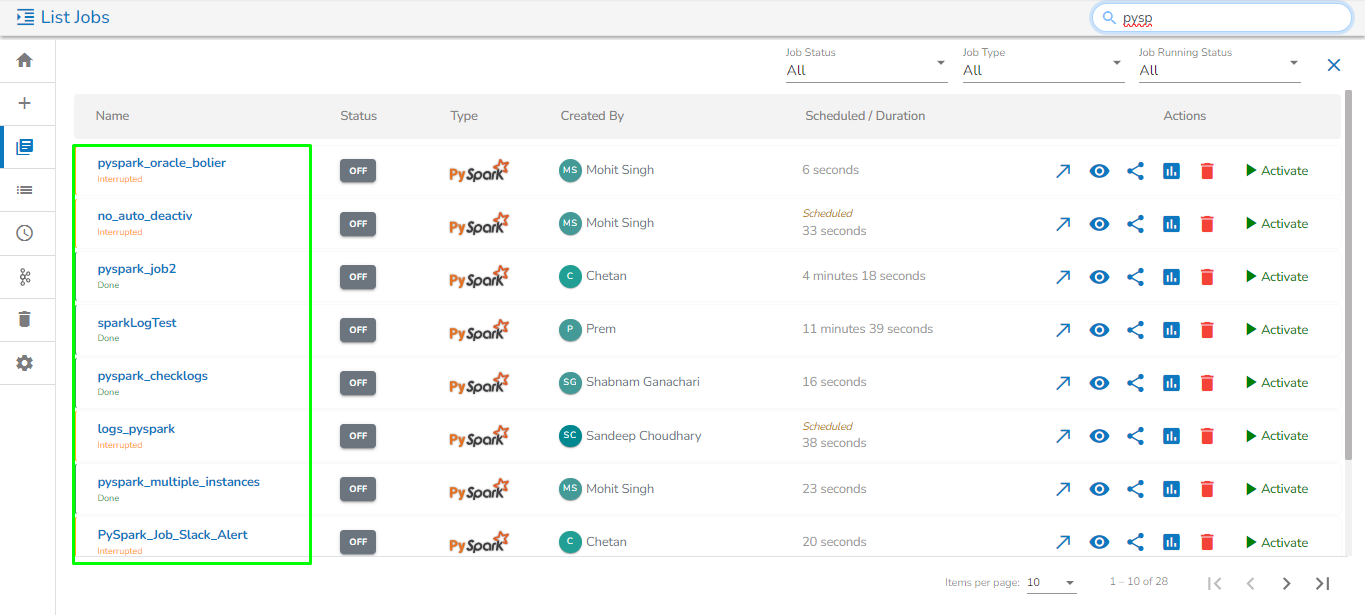

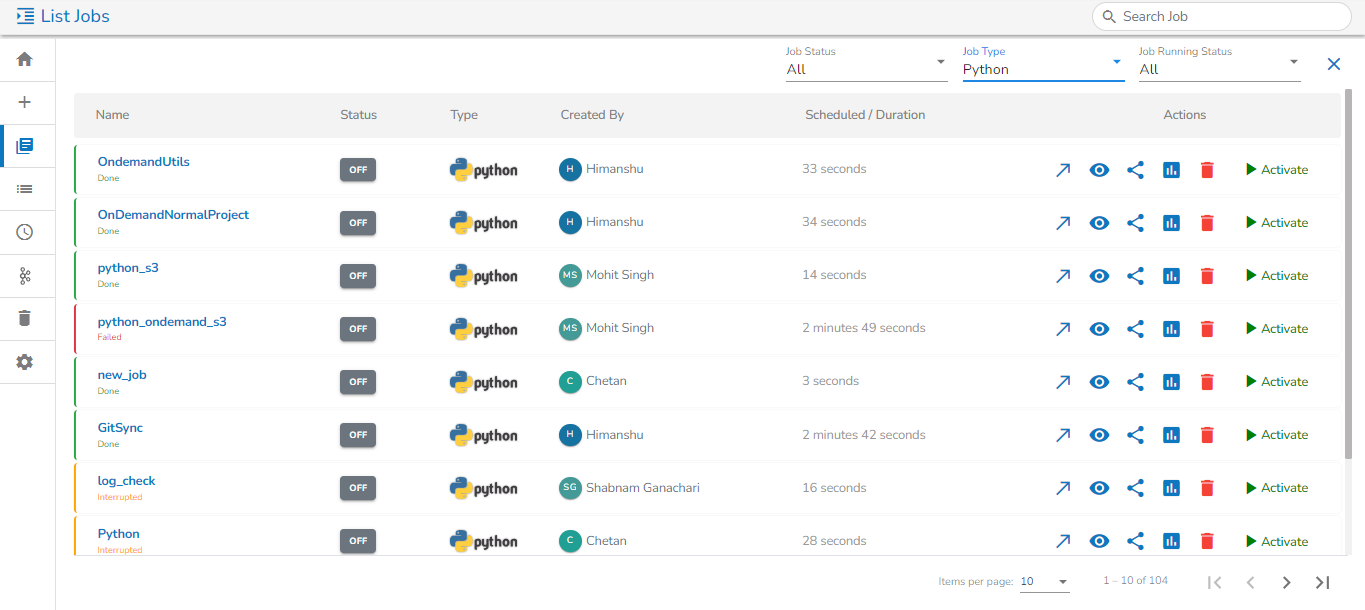

The List Jobs option opens the Job List for the logged-in user, which shows every Job that the user has saved. Clicking a Job name displays the Details tab on the right side of the page with the basic details of the selected Job.

Job List

Navigate to the Data Pipeline Homepage.

Click on the List Jobs option.

The List Jobs page opens, displaying the created jobs.

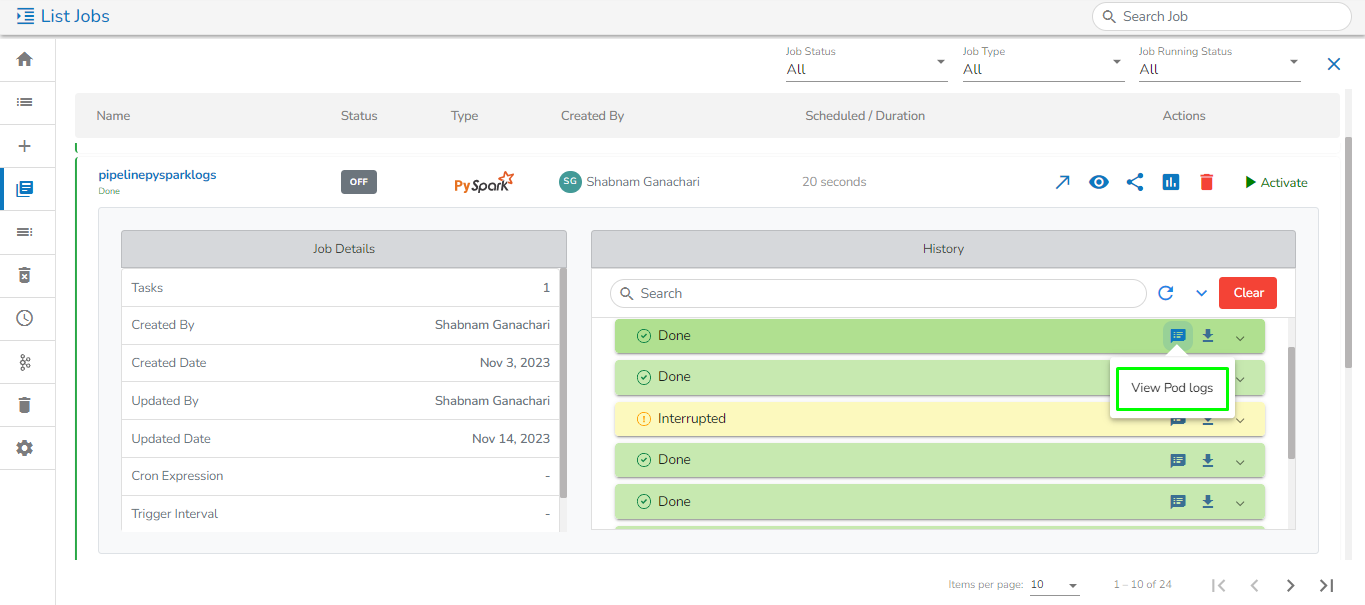

Job Details & History Tabs

Click a Job in the displayed list.

This will open a panel containing three tabs:

Job Details:

Tasks: Indicates the number of tasks used in the job.

Created By: Indicates the name of the user who created the job.

Created Date: Date when the job was created.

Updated By: Indicates the name of the user who updated the job.

Updated Date: Date when the job was last updated.

Cron Expression: A string representing a schedule specifying when the job should run.

Trigger Interval: Interval at which the job is triggered (e.g., every 5 minutes).

Next Trigger: Date and time of the next scheduled trigger for the job.

Description: Description of the job provided by the user.
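The relationship between the Trigger Interval and Next Trigger fields can be illustrated with a minimal sketch. It assumes a fixed-interval schedule anchored at a known run time; the function name `next_trigger` and its parameters are hypothetical and are not part of the product. For a cron-based schedule, `*/5 * * * *` means "every five minutes" in standard five-field cron syntax, although this page does not state which cron dialect the platform accepts.

```python
from datetime import datetime, timedelta


def next_trigger(anchor: datetime, interval: timedelta, now: datetime) -> datetime:
    """Return the first scheduled time strictly after `now`.

    `anchor` is any known trigger time (hypothetical input); the schedule
    is assumed to repeat every `interval` from that point onward.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    if now < anchor:
        # The schedule has not started yet, so the anchor is the next run.
        return anchor
    # Number of whole intervals elapsed since the anchor, plus one more.
    periods = (now - anchor) // interval + 1
    return anchor + periods * interval


if __name__ == "__main__":
    anchor = datetime(2024, 1, 1, 10, 0)
    now = datetime(2024, 1, 1, 10, 7)
    # With a 5-minute interval, the next run after 10:07 is 10:10.
    print(next_trigger(anchor, timedelta(minutes=5), now))
```

The value shown in the Next Trigger field is computed by the platform itself; the sketch only shows how such a value follows from an interval.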

Total Job Config:

Total Allocated CPU: Total allocated CPU cores.

Total Allocated Memory: Total allocated memory in megabytes (MB).

Total Allocated Min Memory: Total minimum allocated memory (MB).

Total Allocated Max Memory: Total maximum allocated memory (MB).

Total Allocated Max CPU: Total maximum allocated CPU (cores).

Total Allocated Min CPU: Total minimum allocated CPU (cores).

History:

Provides relevant information about the selected job's past runs, including success or failure status.

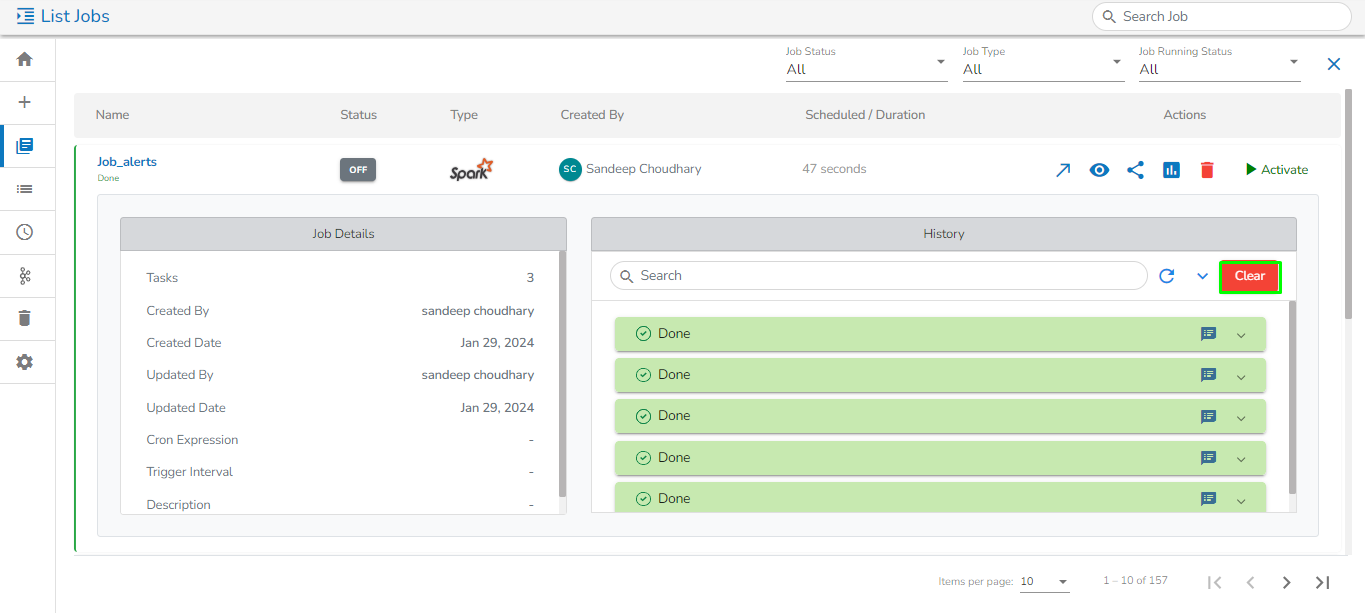

Clear: Clears the job history.

Refresh: Refreshes the displayed job history.

View: Redirects the user to the Job workspace.

Share: Allows the user to share the selected job with any user.

Job Monitoring: Redirects the user to the Job monitoring page.

Edit: Enables the user to edit the job's information. This option is disabled while the job is active.

Delete: Allows the user to delete the job. The deleted job is moved to Trash.

On the List Jobs page, the user can view and download the pod logs for all instances by clicking the View System Logs option in the Job History tab. For reference, please see the image below.

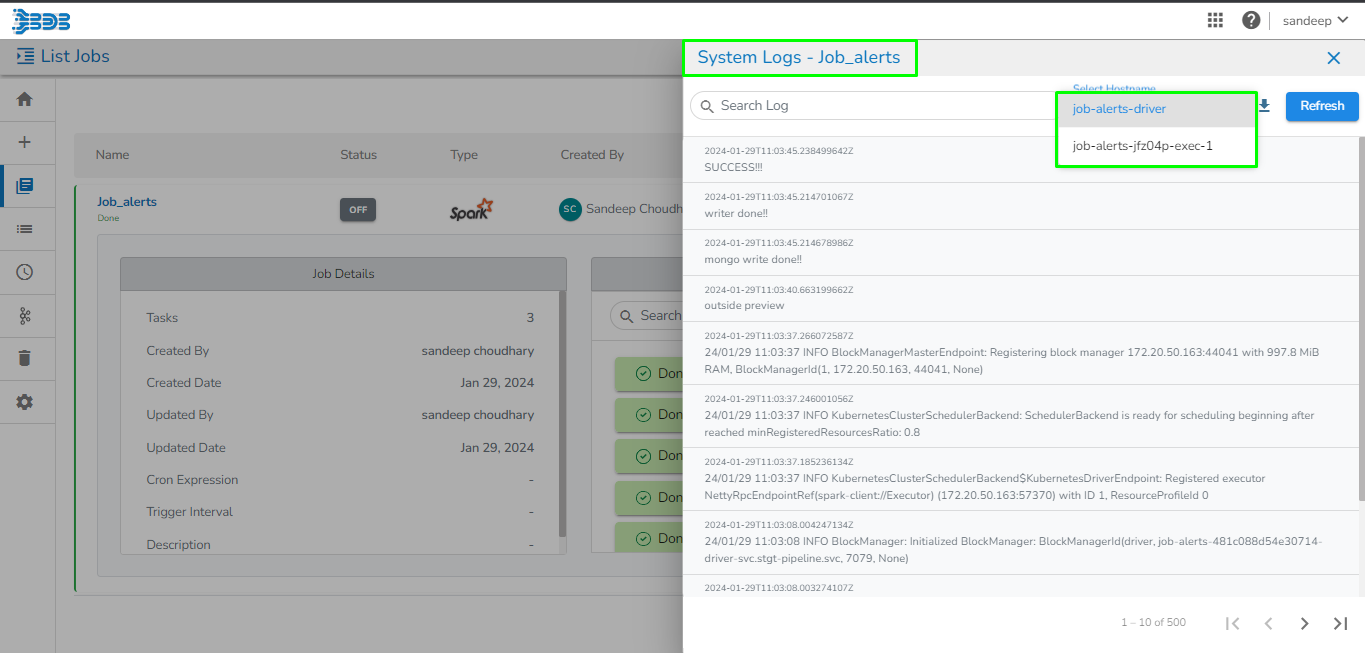

When the user clicks the View System Logs option, a drawer panel opens from the right side of the window. From the Select Hostname drop-down, the user can select the instance whose System logs are to be downloaded. For reference, please see the image below.

Clear: Clears all the job run history from the History tab. Please refer to the image below.

Searching Job

The user can search for a specific Job by using the Search bar on the Job List. Typing a word lists all the existing Jobs whose names contain it. For example, typing sand lists all the existing Jobs with the word sand in their names, as displayed in the following image:

The user can also customize the Job List by choosing from the available filters, such as Job Status, Job Type, and Job Running Status.
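The search and filter behavior described above can be approximated with a short sketch. It assumes case-insensitive substring matching on the job name and exact matching on the filters; the `Job` record, its field names, and the sample values are all hypothetical and do not come from the product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Job:
    # Hypothetical record; the field names are illustrative only.
    name: str
    status: str
    job_type: str


def search_jobs(jobs: List[Job], term: str,
                status: Optional[str] = None,
                job_type: Optional[str] = None) -> List[Job]:
    """Return jobs whose name contains `term`, narrowed by optional filters."""
    needle = term.strip().lower()
    return [
        job for job in jobs
        if needle in job.name.lower()  # assumed case-insensitive match
        and (status is None or job.status == status)
        and (job_type is None or job.job_type == job_type)
    ]


if __name__ == "__main__":
    jobs = [
        Job("sandbox_loader", "Active", "Python"),
        Job("Sand_report", "Inactive", "Spark"),
        Job("daily_export", "Active", "Python"),
    ]
    # Matches the two jobs whose names contain "sand".
    print([job.name for job in search_jobs(jobs, "sand")])
    # Adding a filter narrows the result to the active job only.
    print([job.name for job in search_jobs(jobs, "sand", status="Active")])
```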

Please Note:

The user can open the Job Editor for the selected Job from the list by clicking the View icon.

The user can view and download logs only for Jobs that have either run successfully or failed. Logs for interrupted Jobs cannot be viewed or downloaded.
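The log availability rule in the note above can be written as a small check. This is a sketch only: the status labels `succeeded`, `failed`, and `interrupted` are assumed names, and the product may label these run states differently.

```python
# Assumed labels for run states whose logs can be viewed or downloaded.
LOG_VIEWABLE_STATUSES = {"succeeded", "failed"}


def can_view_logs(run_status: str) -> bool:
    """Return True when logs are available for a run in the given state."""
    return run_status.strip().lower() in LOG_VIEWABLE_STATUSES


if __name__ == "__main__":
    for status in ("Succeeded", "Failed", "Interrupted"):
        print(status, can_view_logs(status))
```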
