Job Editor Page

The Job Editor Page provides the options and components needed to add Tasks and build a Job workflow.

Adding a Task to the Job Workflow

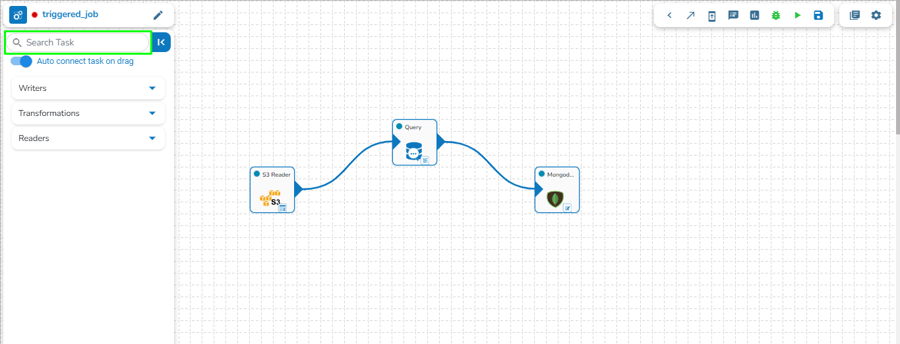

Once the Job is saved to the Job list, the user can add Tasks to the canvas. Drag the required Tasks onto the canvas and configure them to build a Job workflow (dataflow).

The Job Editor opens and displays the Task Palette, which lists the available components (Tasks).

Task Palette

The Task Palette is located on the left side of the user interface. Its Task tab lists the available Tasks.

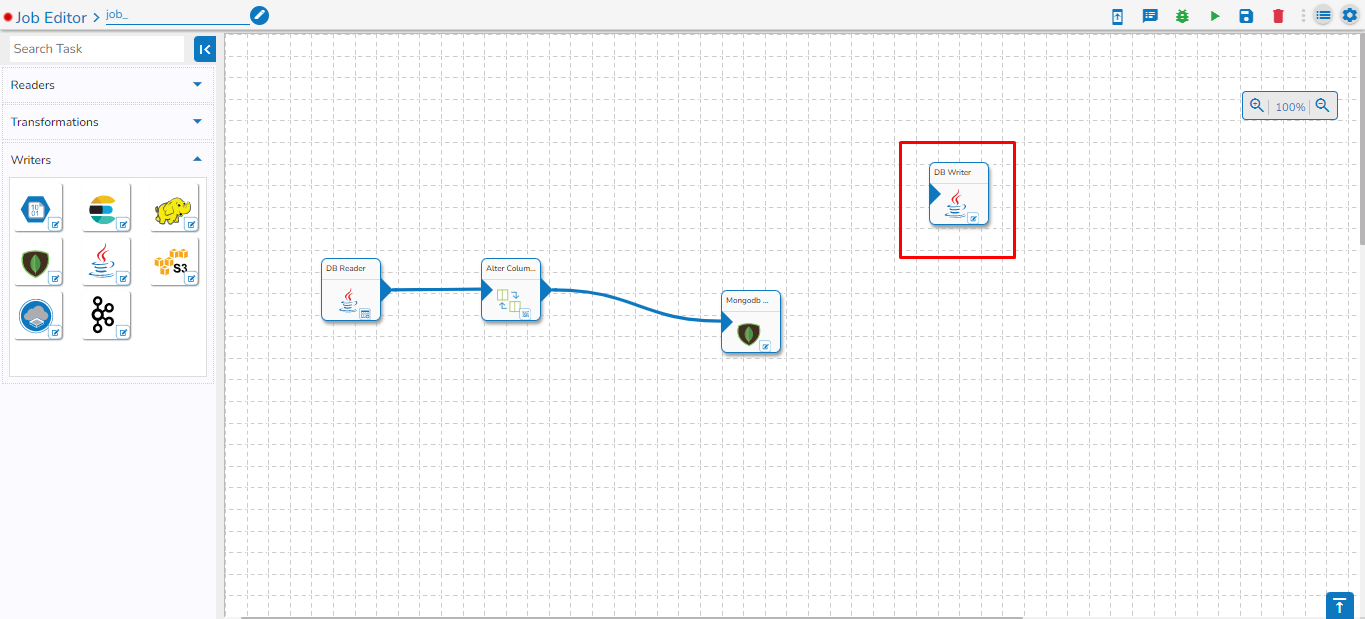

The available Tasks are shown in the image.

Task Components

The components in the Task Palette are broadly classified into the following categories:

Please Note: Refer to the Task Components page for more details.

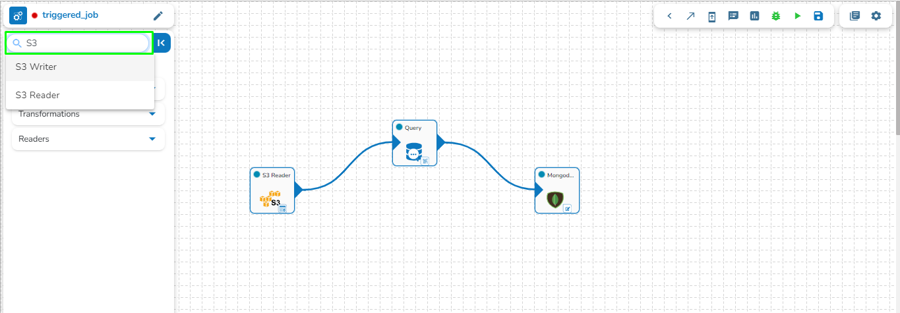

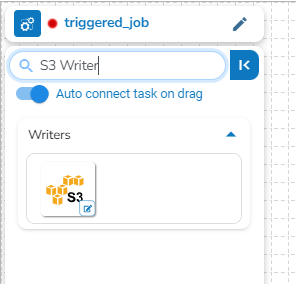

Searching a Task

A Search Task field is provided in the Task Panel.

Type in the field to search for a Task; matching Tasks appear as suggestions.

Select a suggestion, and the Task Panel displays a filtered view based on the searched Task.
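The search behaves like a filter over the list of Tasks. As a rough sketch only, assuming case-insensitive substring matching (the actual matching rule is not documented on this page), the suggestion step might look like the following. The function `suggest_tasks` and the palette contents are hypothetical; only DB Reader and DB Writer are Task names taken from this page.

```python
def suggest_tasks(query: str, task_names: list[str]) -> list[str]:
    """Return Task names containing the query, ignoring case (assumed matching rule)."""
    needle = query.strip().lower()
    if not needle:
        # In this sketch, an empty search produces no suggestions.
        return []
    return [name for name in task_names if needle in name.lower()]


# Hypothetical palette contents; "File Reader" and "Python Script" are invented examples.
PALETTE = ["DB Reader", "DB Writer", "File Reader", "Python Script"]

print(suggest_tasks("db", PALETTE))      # ['DB Reader', 'DB Writer']
print(suggest_tasks("reader", PALETTE))  # ['DB Reader', 'File Reader']
```

Selecting one of the returned names would then narrow the Task Panel view to that Task.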

Steps to create a Job Workflow

Navigate to the Job List page.

The page lists all Jobs, and the Type column shows whether each Job is a Spark Job or a PySpark Job.

Select a Job from the displayed list.

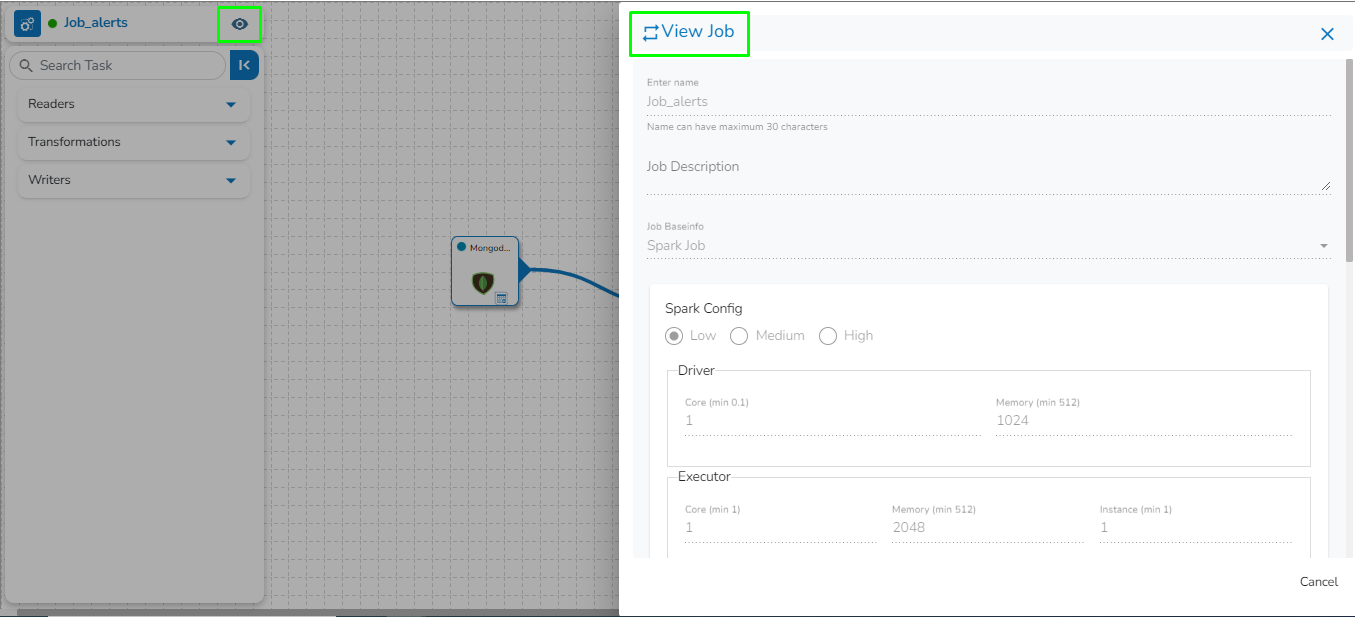

Click the View icon for the Job.

Please Note: Generally, the user performs this step in continuation of Job creation. If the user has exited the Job Editor, the above steps can be used to open it again.

The Job Editor opens for the selected Job.

Drag and drop the required new Task, or modify an existing Task's meta information or configuration as required (e.g., a DB Reader Task is dragged to the workspace in the image below):

Click the dragged Task icon.

The Task-specific fields open, prompting for the meta information of the dragged component.

Open the Meta Information tab and configure the required information for the dragged component.

Click the given icon to validate the connection.

Click the Save Task in Storage icon.

A notification message appears.

Click the Activate Job icon to activate the Job (this icon appears only after the newly created Job has been updated successfully). A dialog window opens to confirm the Job activation.

Click the YES option to activate the job.

A success message appears confirming the activation of the job.

Once the Job is activated, its Edit option is replaced by the View icon. Click the View icon to see the Job details while the Job is running.
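The activation rules in the steps above (the Activate Job icon is available only after a successful update, activation needs the YES confirmation, and an active Job shows View instead of Edit) can be summarized as a small state model. This is a hypothetical sketch for illustration; the `Job` class and its methods are not part of any platform API.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical model of the Job states described on this page."""
    name: str
    updated: bool = False  # True once the Job has been updated successfully
    active: bool = False

    def update(self) -> None:
        self.updated = True

    def activate(self, confirmed: bool) -> bool:
        """Activate only if the Job was updated and the user clicked YES."""
        if not self.updated or not confirmed:
            return False
        self.active = True
        return True

    def action_icon(self) -> str:
        # After activation, the Edit option is replaced by the View icon.
        return "View" if self.active else "Edit"


job = Job("example_job")
print(job.activate(confirmed=True))   # False: not updated yet
job.update()
print(job.activate(confirmed=False))  # False: dialog not confirmed
print(job.activate(confirmed=True), job.action_icon())  # True View
```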

Please Note: Jobs can also be run in Development mode. In Development mode, the preview tab of a Task shows only 10 records, and any writer Task used in the Job writes only 10 records to the table of the given database. If the Job is not running in Development mode, the preview tab of the Tasks shows no data.
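The Development mode behavior described in the note can be expressed as a simple record cap. The sketch below is a hypothetical model, not the platform's implementation: the function names are invented, the limit of 10 comes from the note, and it assumes that a Job outside Development mode writes every record it receives.

```python
from itertools import islice
from typing import Iterable

# Documented cap on records previewed or written in Development mode.
DEV_MODE_LIMIT = 10


def preview_records(records: Iterable[dict], dev_mode: bool) -> list[dict]:
    """Records shown in a Task's preview tab (hypothetical model)."""
    if not dev_mode:
        # Outside Development mode, the preview tab shows no data.
        return []
    return list(islice(records, DEV_MODE_LIMIT))


def records_written(records: Iterable[dict], dev_mode: bool) -> list[dict]:
    """Records a writer Task sends to its target table (hypothetical model).

    Assumes a Job outside Development mode writes every record it receives.
    """
    if dev_mode:
        return list(islice(records, DEV_MODE_LIMIT))
    return list(records)


rows = [{"id": i} for i in range(25)]
print(len(preview_records(rows, dev_mode=True)))   # 10
print(len(preview_records(rows, dev_mode=False)))  # 0
print(len(records_written(rows, dev_mode=True)))   # 10
print(len(records_written(rows, dev_mode=False)))  # 25
```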

The Job's Status on the Job List page changes when the Job is running in Development mode or has been activated.

Please Note: Click the Delete icon on the Job Editor page to delete the selected Job. The deleted Job is removed from the Job list.

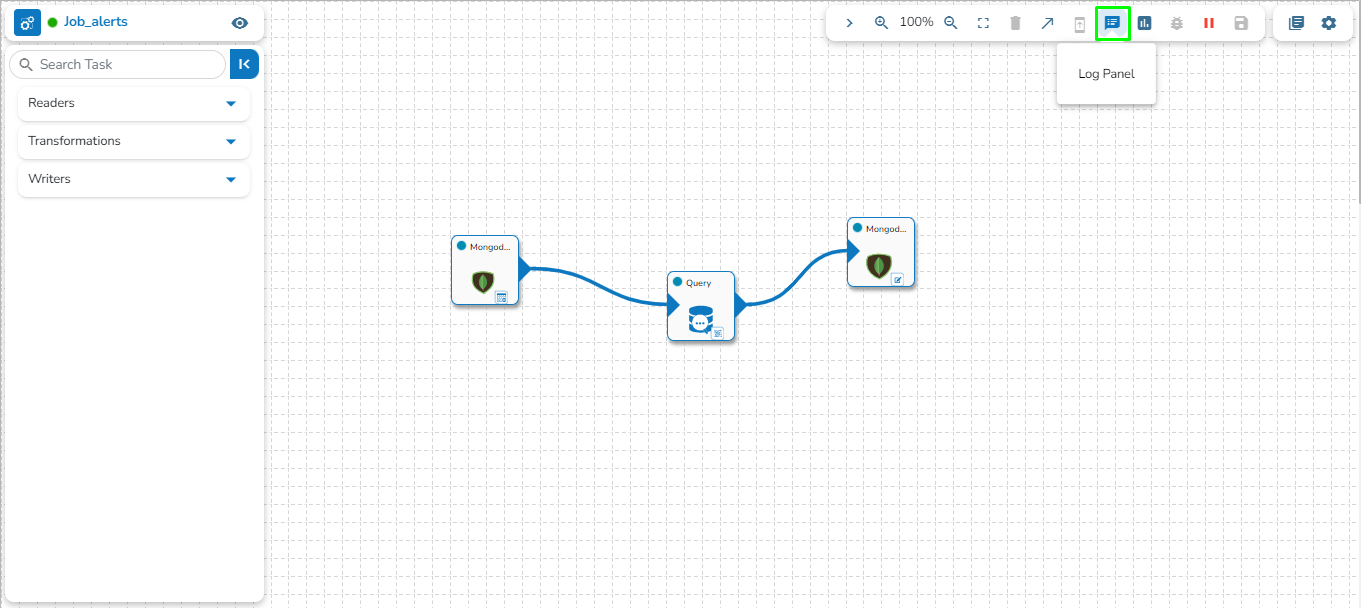

Toggle Log Panel

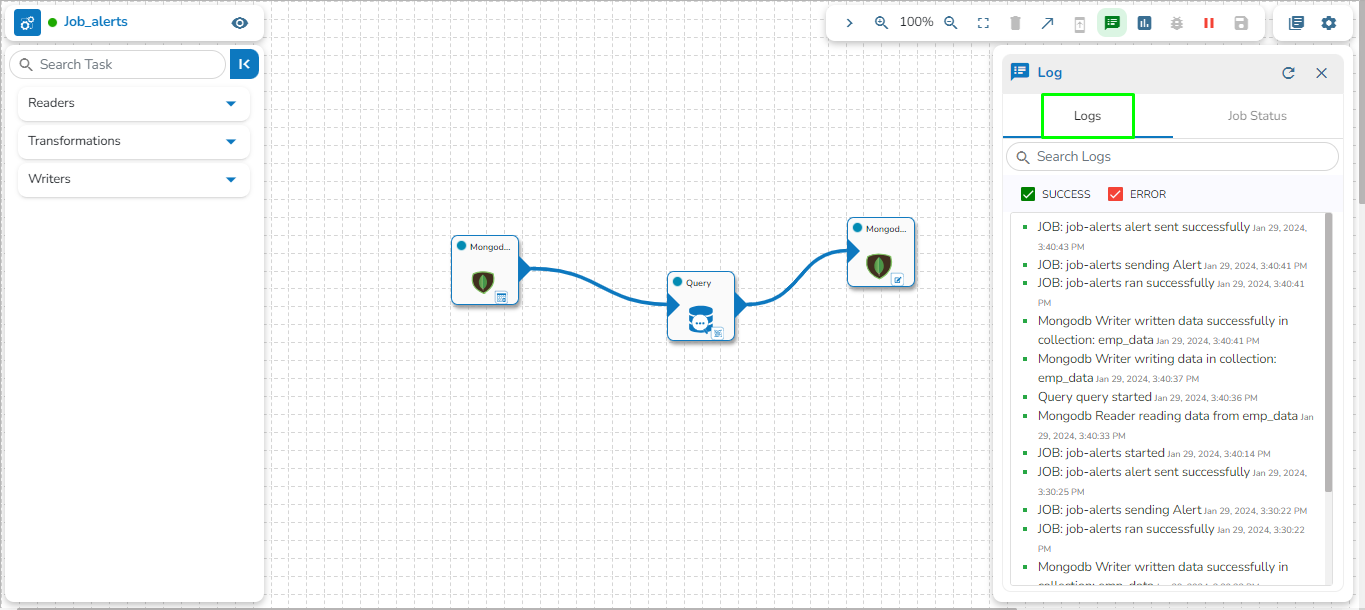

The Toggle Log Panel displays the Logs and Advanced Logs tabs for the Job Workflows.

Navigate to the Job Editor page.

Click the Toggle Log Panel icon on the header.

A panel opens, displaying the collective logs of the Job under the Logs tab.

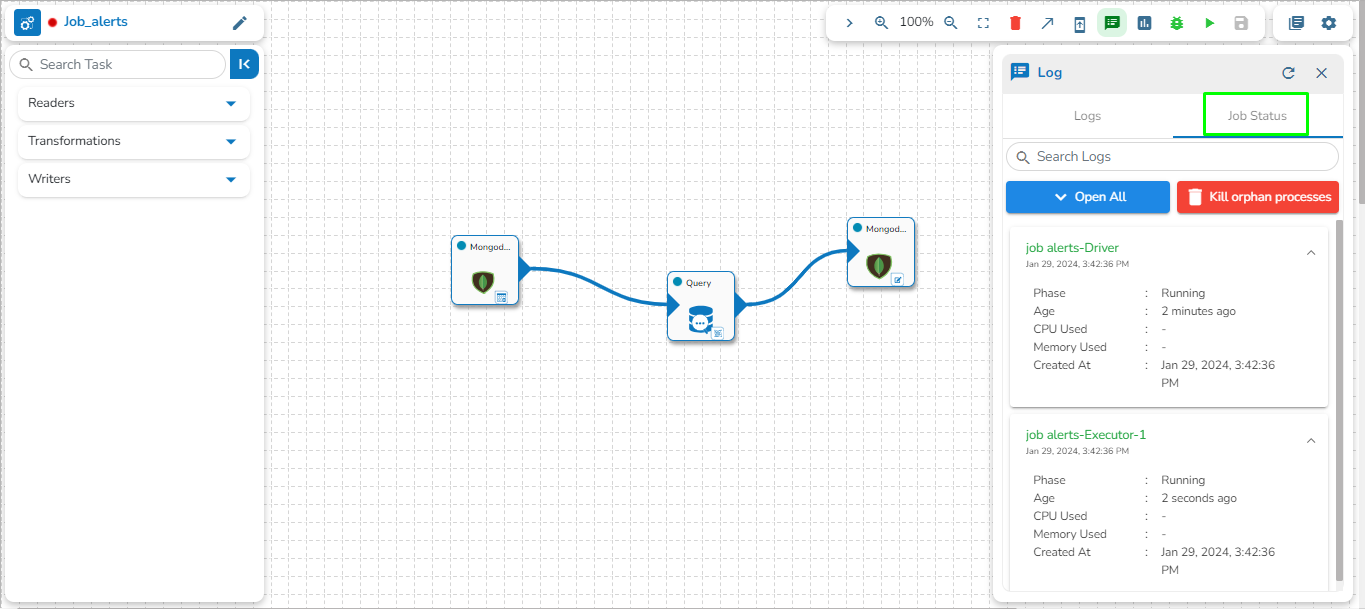

Select the Job Status tab to display the pod status of the complete Job.

Please Note: An orphan Task is a Task placed in the Job Editor workspace but not connected to the rest of the workflow. Any orphan Task causes the entire Job to fail. For example, in the image below, the highlighted DB Writer Task is an orphan: it receives no input, so the Job fails when it is activated. Avoid leaving orphan Tasks in the Job Editor workspace.
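Before activating a Job, it can help to check the workspace for orphan Tasks. The sketch below treats the workflow as a list of (source, target) connections and flags any Task that appears in no connection. The function name, the data model, and the Task labels are hypothetical, and the platform's exact orphan rule may differ.

```python
def find_orphan_tasks(tasks: set[str], connections: list[tuple[str, str]]) -> set[str]:
    """Return Tasks that appear in no connection (no input and no output)."""
    connected = {task for link in connections for task in link}
    return tasks - connected


# Hypothetical workspace: one connected reader/writer pair and one stray writer.
tasks = {"DB Reader 1", "DB Writer 1", "DB Writer 2"}
connections = [("DB Reader 1", "DB Writer 1")]  # (source Task, target Task)

orphans = find_orphan_tasks(tasks, connections)
if orphans:
    print("Remove before activating:", sorted(orphans))
# Remove before activating: ['DB Writer 2']
```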

Icons on the Header panel of the Job Editor Page

Job Version Details: Displays the latest versions available for upgrading the Job.

Development Mode: Runs the Job in Development mode.

Activate Job: Activates the current Job.

Update Job: Updates the current Job.

Edit Job: Edits the Job name and configurations.

Delete Job: Deletes the current Job.

Push Job: Pushes the selected Job to Git.
