Video Stream Consumer

The Video Stream Consumer component consumes .mp4 video as a sequence of frames, either from a real-time source or from video stored at an SFTP location.

All component configurations are classified broadly into the following sections:

Meta Information

Please follow the given demonstration to configure the Video Stream Consumer component.

Please Note:

The Video Stream Consumer component supports only the .mp4 file format. It reads the video frame by frame.

The Testing Pipeline functionality and the Data Metrics option (from the Monitoring Pipeline functionality) are not available for this component.
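To picture what "frame by frame" means here, the following is a minimal sketch and not the component's actual implementation. It assumes a capture object whose `read()` method returns an `(ok, frame)` pair, which is the interface of OpenCV's `cv2.VideoCapture`. The names `iter_frames` and `FakeCapture` are hypothetical and exist only for illustration.

```python
from typing import Any, Iterator, Tuple


def iter_frames(capture: Any) -> Iterator[Tuple[int, Any]]:
    """Yield (index, frame) pairs until the capture reports no more frames.

    `capture` is assumed to expose read() -> (ok, frame), as
    cv2.VideoCapture does. Illustration only.
    """
    index = 0
    while True:
        ok, frame = capture.read()
        if not ok:  # end of file or interrupted stream
            break
        yield index, frame
        index += 1


class FakeCapture:
    """Hypothetical stand-in for a video source, so the sketch runs without a real .mp4 file."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None


frames = list(iter_frames(FakeCapture(["f0", "f1", "f2"])))
print(frames)  # [(0, 'f0'), (1, 'f1'), (2, 'f2')]
```

With OpenCV installed, a `cv2.VideoCapture` opened on a file path or stream URL could be passed to `iter_frames` in place of `FakeCapture`.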

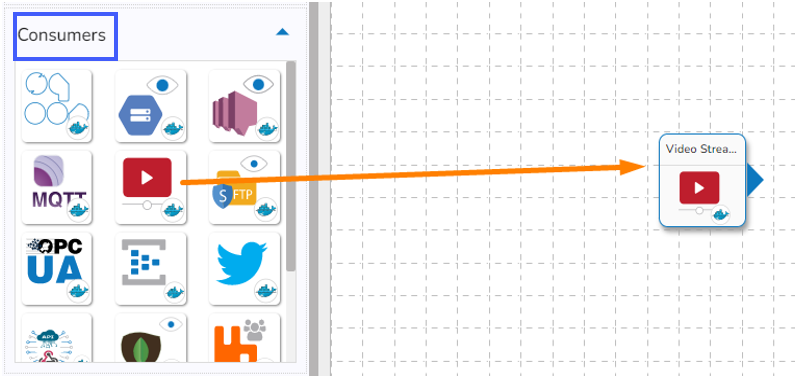

Drag & drop the Video Stream Consumer component to the Workflow Editor.

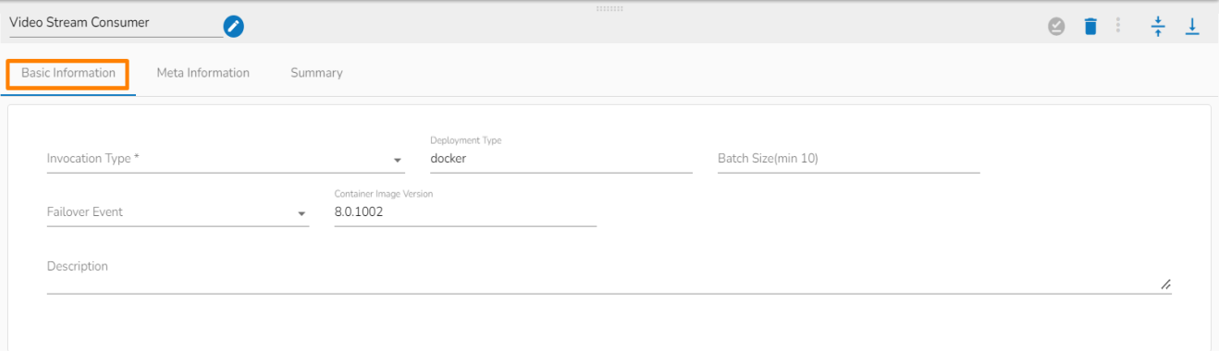

Click the dragged Video Stream Consumer component to open the component properties tabs.

Basic Information

This tab opens by default for the component.

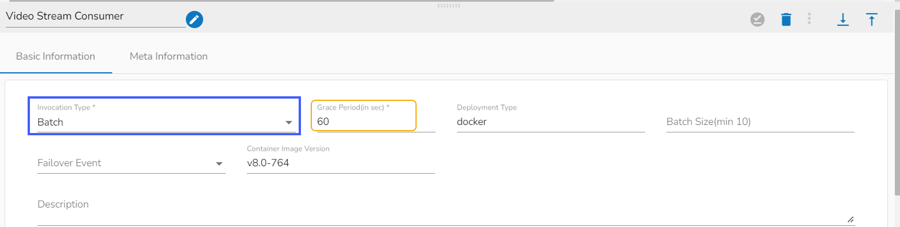

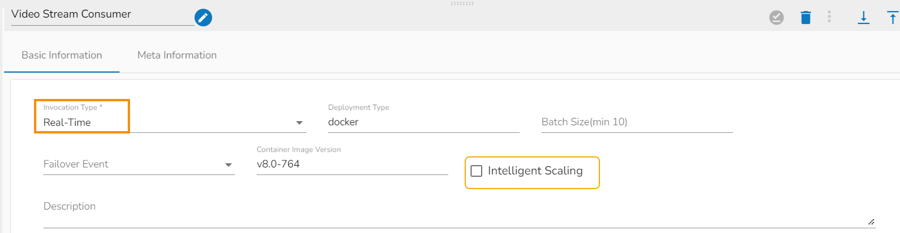

Invocation Type: Select the running mode of the reader component from the drop-down menu, either ‘Real-Time’ or ‘Batch’.

Deployment Type: It displays the deployment type for the component. This field comes pre-selected.

Batch Size (min 10): Provide the maximum number of records to be processed in one execution cycle. The minimum value for this field is 10.

Failover Event: Select a failover Event from the drop-down menu.

Container Image Version: It displays the image version for the docker container. This field comes pre-selected.

Description: Provide a description of the component (optional).

Please Note: If the selected Invocation Type is Batch, the Grace Period (in sec)* field appears. Use it to provide the grace period, in seconds, after which the component goes down gracefully.

Selecting Real-Time as the Invocation Type displays the Intelligent Scaling option.

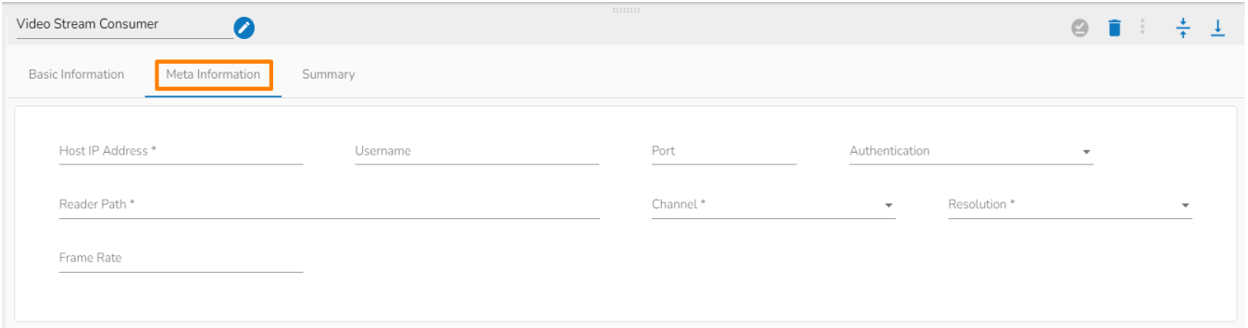
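The Batch Size field described above can be pictured as grouping consumed records into chunks, one chunk per execution cycle. The sketch below is an illustration under assumptions: the names `MIN_BATCH_SIZE` and `batched` are hypothetical, and the component's real batching logic is not documented here.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

MIN_BATCH_SIZE = 10  # the documented minimum for the Batch Size field


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of at most `batch_size` records, one list per cycle."""
    if batch_size < MIN_BATCH_SIZE:
        raise ValueError(f"batch size must be at least {MIN_BATCH_SIZE}")
    batch: List[T] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final, possibly smaller batch
        yield batch


sizes = [len(b) for b in batched(range(25), batch_size=10)]
print(sizes)  # [10, 10, 5]
```

Because `batched` is a generator, the minimum-size check runs when iteration starts rather than when the function is called.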

Meta Information Tab

Select the Meta Information tab and provide the mandatory fields to configure the dragged Video Stream Consumer component.

Host IP Address (*)- Provide IP or URL

The expected input for the Host IP Address field depends on the selected Channel. There are two options available:

SFTP: Consumes stored videos from an SFTP location. Provide the SFTP connection details.

URL: Consumes live video from sources such as cameras. Provide the connection details for the incoming live video.

Username (*)- Provide username

Port (*)- Provide Port number

Authentication- Select one authentication option: Password or PEM/PPK File

Reader Path (*)- Provide reader path

Channel (*)- The supported channels are SFTP and URL

Resolution (*)- Select an option defining the video resolution out of the given options.

Frame Rate- Provide the rate at which frames are to be consumed.

Please Note: The fields for the Meta Information tab change based on the selection of the Authentication option.

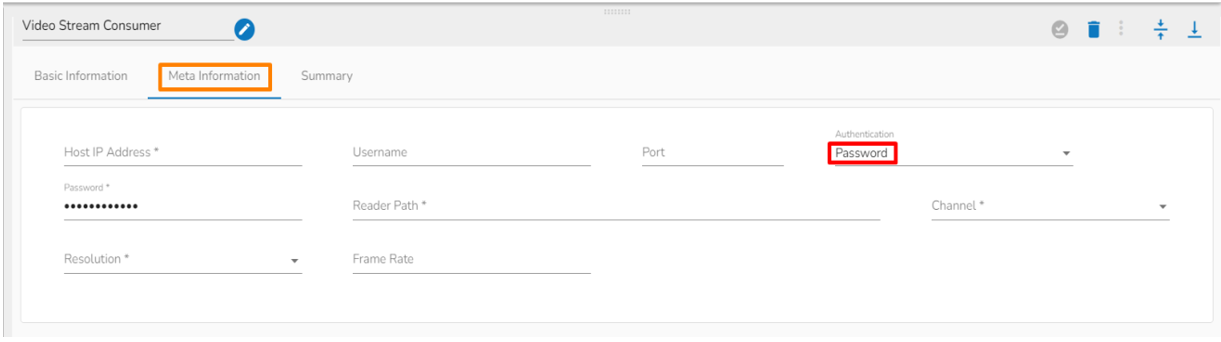

When the authentication option is Password

When Password is selected as the authentication option, a Password field is added to the Meta Information tab.

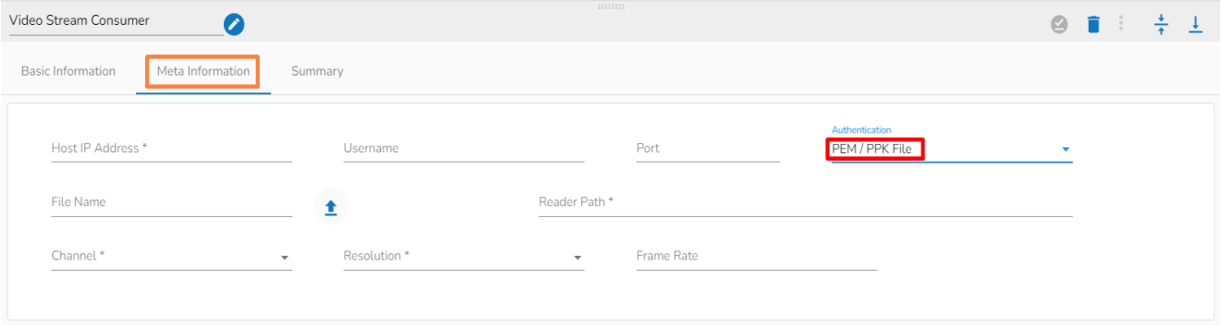

When the authentication option is PEM/PPK File

When the PEM/PPK File authentication option is chosen, select a file using the Choose File option.

Please Note: For the SFTP channel, enter an IP address in the Host IP Address field; for the URL channel, enter a URL.
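The dependencies between Channel, Host IP Address, and Authentication described above can be summarized as a small validation sketch. This is an assumption-based illustration, not the product's own validation: the `MetaInfo` class, its field names, and the `validate` function are hypothetical, and the example host values are placeholders.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional
from urllib.parse import urlparse


@dataclass
class MetaInfo:
    """Hypothetical container mirroring some Meta Information fields."""
    channel: str                          # "SFTP" or "URL"
    host: str                             # IP address for SFTP, URL for URL
    authentication: Optional[str] = None  # "Password" or "PEM/PPK File"
    password: Optional[str] = None
    key_file: Optional[str] = None


def validate(meta: MetaInfo) -> List[str]:
    """Return a list of problems; an empty list means none were found."""
    problems = []
    if meta.channel == "SFTP":
        try:
            ipaddress.ip_address(meta.host)
        except ValueError:
            problems.append("SFTP channel expects an IP address as host")
    elif meta.channel == "URL":
        parsed = urlparse(meta.host)
        if not (parsed.scheme and parsed.netloc):
            problems.append("URL channel expects a URL as host")
    else:
        problems.append("channel must be SFTP or URL")

    if meta.authentication == "Password" and not meta.password:
        problems.append("Password authentication needs a password")
    if meta.authentication == "PEM/PPK File" and not meta.key_file:
        problems.append("PEM/PPK File authentication needs a key file")
    return problems


ok = validate(MetaInfo("SFTP", "192.0.2.10", "Password", password="secret"))
bad = validate(MetaInfo("SFTP", "rtsp://camera.example/stream", "PEM/PPK File"))
print(ok)
print(bad)
```

The first call reports no problems. The second reports two: a URL was given for the SFTP channel, and no key file was supplied for PEM/PPK File authentication.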

Saving the Component

Click the Save Component in Storage icon for the Video Stream Consumer component.

A message appears to notify that the component properties are saved.

The Video Stream Consumer component is now configured to pass data in the Pipeline Workflow.

Please Note: The Video Stream Consumer supports only the Video URL.
