DSLab Runner

The DS Lab (Data Science Lab) Runner manages and executes data science experiments that were created in the DS Lab module and imported into the pipeline.

All component configurations are broadly classified into 3 sections:

Meta Information

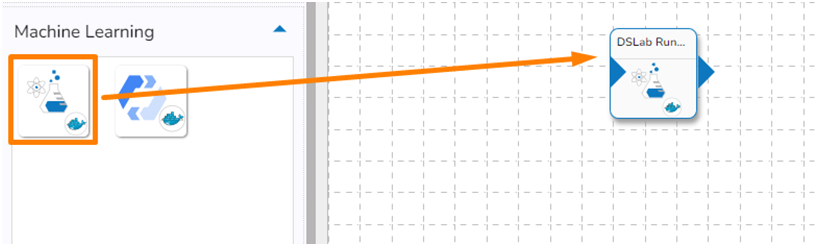

Using a DS Lab Runner Component in the Pipeline Workflow

Drag the DS Lab Runner component to the Pipeline Workflow canvas.

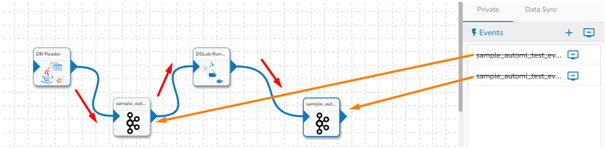

The DS Lab Runner requires input data from an Event and sends the processed data to another Event. Create two Events and drag them onto the Workspace.

Connect the input and output events with the DS Lab Runner component as displayed below.

The data in the input Event can come from any ingestion component, any reader, any script from the DS Lab module, or a shared Event.

Click the DS Lab Runner component to open the component properties tabs below.

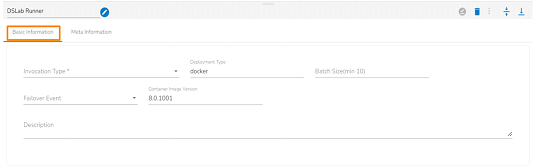

Basic Information

This tab opens by default when you click the component.

Invocation Type: Select an invocation type from the drop-down menu to set the running mode of the component. The available options are Real-Time and Batch.

Please Note: If the selected Invocation Type is Batch, the Grace Period (in sec)* field appears. Use it to set the grace period, in seconds, after which the component shuts down gracefully.

Deployment Type: It displays the deployment type for the component. This field comes pre-selected.

Batch Size (min 10): Provide the maximum number of records to be processed in one execution cycle. The minimum value for this field is 10.

Failover Event: Select a failover Event from the drop-down menu.

Container Image Version: It displays the image version for the docker container. This field comes pre-selected.

Description: Provide a description of the component. This field is optional.
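To make the Batch Size setting concrete, the sketch below splits a stream of records into batches of at most `batch_size` records. It is only an illustration of the idea and not the component's implementation; `iter_batches` and `MIN_BATCH_SIZE` are hypothetical names.

```python
from itertools import islice

MIN_BATCH_SIZE = 10  # mirrors the minimum allowed by the Batch Size field


def iter_batches(records, batch_size):
    """Yield lists holding at most batch_size records each (hypothetical helper)."""
    if batch_size < MIN_BATCH_SIZE:
        raise ValueError(f"batch_size must be at least {MIN_BATCH_SIZE}")
    iterator = iter(records)
    while True:
        # Take up to batch_size records; an empty list means the input is exhausted.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# 25 records with a batch size of 10 are processed as batches of 10, 10, and 5.
batch_lengths = [len(batch) for batch in iter_batches(range(25), batch_size=10)]
```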

Please Note: The DS Lab Runner contains two execution types in its Meta Information tab.

DS Lab Runner as Model Runner

It lets you run a model that you created in the DS Lab module. Register the model to make it available in the pipeline.

DS Lab Runner as Script Runner

It lets you run a script that you exported from the DS Lab module to the pipeline.

Please follow the demonstration to use the DS Lab Runner as a Model Runner.

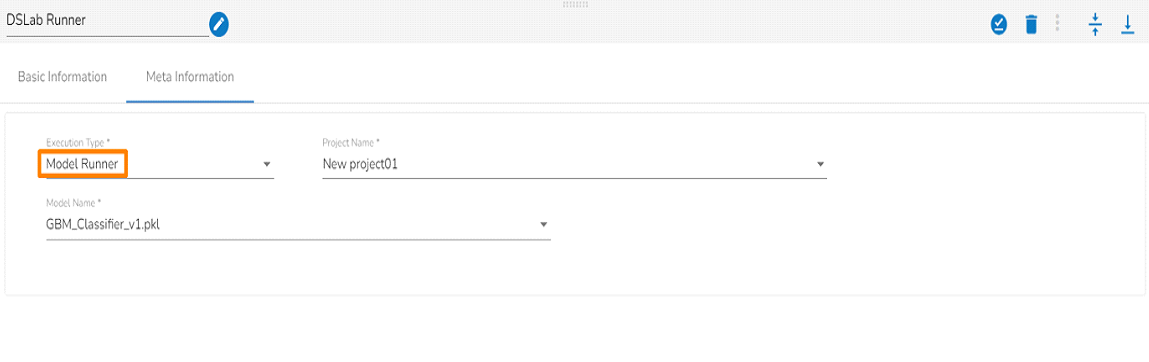

Meta Information Tab (as Model Runner)

Model Runner as Execution Type

Please follow the steps below to configure the Meta Information when Model Runner is selected as the Execution Type:

Project Name: Name of the project in the DS Lab module where you created your model.

Model Name: Name of the saved model in that project under the DS Lab module.

Please follow the demonstration to configure the component with Script Runner as the Execution Type.

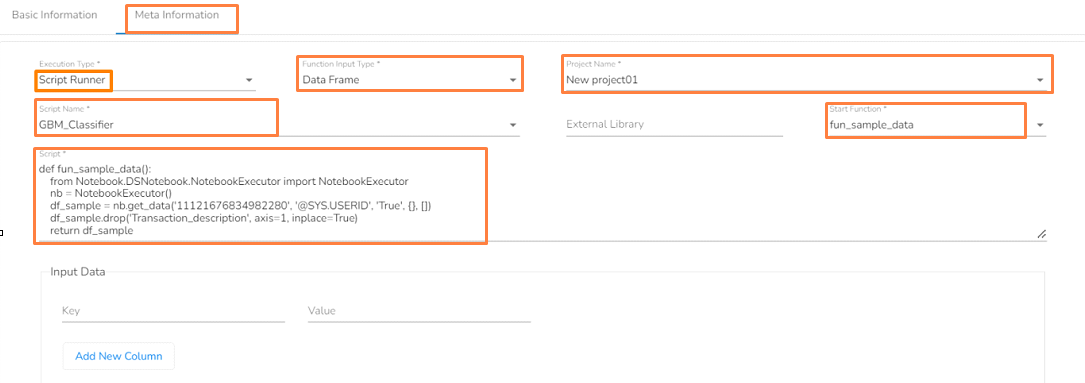

Script Runner as Execution Type

Please follow the steps below to configure the Meta Information when Script Runner is selected as the Execution Type:

Function Input Type: Select the input type from the drop-down. There are two options in this field:

DataFrame

List of dictionary
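The two options differ in the shape of the data handed to the script. The sketch below is a hypothetical helper (`normalize_records` is not part of the DS Lab module) that accepts either shape and returns a list of dictionaries. It assumes that the DataFrame option passes a pandas DataFrame.

```python
def normalize_records(data):
    """Return the input as a list of dictionaries, one per record.

    Hypothetical helper for illustration; not part of the DS Lab module.
    """
    # Assumption: the DataFrame option passes a pandas DataFrame, whose
    # to_dict(orient="records") returns one dictionary per row.
    if hasattr(data, "to_dict"):
        return data.to_dict(orient="records")
    # The list-of-dictionaries option already has the target shape;
    # copy each row so callers can modify the result safely.
    return [dict(row) for row in data]


rows = [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.5}]
normalized = normalize_records(rows)
```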

Project Name: Provide the name of the project in the DS Lab module that contains the exported script.

Script Name: Select the script that has been exported from the notebook in the DS Lab module. The script written in the DS Lab module should be inside a function.

External Library: List any external libraries used in the script. Separate multiple library names with commas (,).

Start Function: Select the name of the function that contains the script.

Script: The exported script appears in this field. For more information about exporting the script from the DSLab module, please refer to the following link: Exporting a Script from DSLab.

Input Data: If the start function takes parameters, provide each parameter name as the Key and the corresponding parameter value as the Value in this field.
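As an illustration of how these fields fit together, here is a minimal sketch of a script written inside a function. It assumes the List of dictionary input type, that the runner passes the incoming records as the first argument, and that each Input Data key is passed as a keyword argument. The function name `flag_large_orders`, the `amount` field, and the `threshold` parameter are hypothetical.

```python
def flag_large_orders(records, threshold=100.0):
    """Mark each record whose amount exceeds the threshold.

    Hypothetical start function; the argument order and the way Input Data
    values are passed are assumptions, not documented behavior.
    """
    output = []
    for record in records:
        enriched = dict(record)  # copy so the input record is left untouched
        # Cast with float() in case the configured value arrives as text.
        enriched["is_large"] = float(record.get("amount", 0)) > float(threshold)
        output.append(enriched)
    return output


# Simulate the configuration locally: Input Data key "threshold", value "100".
input_data = {"threshold": "100"}
sample = [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.5}]
result = flag_large_orders(sample, **input_data)
```

In this sketch, `flag_large_orders` would be selected as the Start Function, and `threshold` would be entered as a Key under Input Data.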

Saving the DS Lab Runner Component

Click the Save Component in the Storage icon.

A success notification message appears when the component is saved.

The DS Lab Runner component reads the data coming to the input event, runs the model, and gives the output data with predicted columns to the output event.
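Conceptually, this flow resembles the sketch below. It is not the component's implementation: `ThresholdModel` is a stand-in for a registered model, and the `predict` method and the `prediction` column name are assumptions made for illustration.

```python
class ThresholdModel:
    """Stand-in for a model registered from the DS Lab module (hypothetical)."""

    def predict(self, rows):
        # Label each row 1 when its amount exceeds 100, otherwise 0.
        return [1 if row["amount"] > 100 else 0 for row in rows]


def run_model(model, input_records, output_column="prediction"):
    """Return copies of the input records with the model's prediction attached."""
    predictions = model.predict(input_records)
    return [
        {**record, output_column: predicted}
        for record, predicted in zip(input_records, predictions)
    ]


input_event = [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.5}]
output_event = run_model(ThresholdModel(), input_event)
```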
