Git Sync

The Git Sync feature lets users import files directly from their Git repository into a DS Lab project, where the files can be used in subsequent pipelines and jobs. To use this feature, the user must first configure the repository in the DS Lab project.

Prerequisites:

To configure GitLab/GitHub credentials, follow these steps in the Admin Settings:

Navigate to Admin >> Configurations >> Version Control.

From the first drop-down menu, select the Version.

Choose 'DsLabs' as the module from the drop-down.

Select either GitHub or GitLab based on the requirement for Git type.

Enter the host for the selected Git type.

Provide the token key associated with the Git account.

Select a Git project.

Choose the branch where the files are located.

After entering all the details, click 'Test'. If the authentication succeeds, a confirmation message appears. Then click 'Save'.

To complete the configuration, navigate to My Account >> Configuration. Enter the Git Token and Git Username, then save the changes.
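If the 'Test' step fails, it can help to check the token against the Git host independently of the platform. The sketch below is an illustration only and makes no claim about how the platform performs its own test. It builds, without sending, a request to the public GitHub or GitLab REST endpoint that returns the user a token belongs to. The function name `build_token_check_request` is hypothetical, and the host is assumed to be a bare hostname such as `github.com`.

```python
from urllib.request import Request


def build_token_check_request(git_type: str, host: str, token: str) -> Request:
    """Build (but do not send) a request asking the Git host which user owns the token.

    Hypothetical helper for illustration; it is not part of the platform.
    """
    kind = git_type.strip().lower()
    if kind == "github":
        # github.com serves its REST API from api.github.com;
        # GitHub Enterprise Server exposes it under /api/v3 on its own host.
        base = "https://api.github.com" if host == "github.com" else f"https://{host}/api/v3"
        return Request(
            f"{base}/user",
            headers={
                "Authorization": f"Bearer {token}",
                "Accept": "application/vnd.github+json",
            },
        )
    if kind == "gitlab":
        # GitLab accepts personal access tokens in the PRIVATE-TOKEN header.
        return Request(f"https://{host}/api/v4/user", headers={"PRIVATE-TOKEN": token})
    raise ValueError(f"Unsupported Git type: {git_type!r}")


if __name__ == "__main__":
    # Placeholder values; replace with the host and token from the configuration.
    request = build_token_check_request("GitHub", "github.com", "<your-token>")
    print(request.full_url)
```

Passing the returned request to `urllib.request.urlopen` sends it. A 200 response means the host accepted the token, while a rejected token raises `urllib.error.HTTPError` with status 401.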

Configuring Git Sync inside a DS Lab Project

Follow these steps to configure Git Sync in a DS Lab project:

Navigate to the DS Lab module.

Click Create Project to start a new project.

Fill in all the required fields for the project.

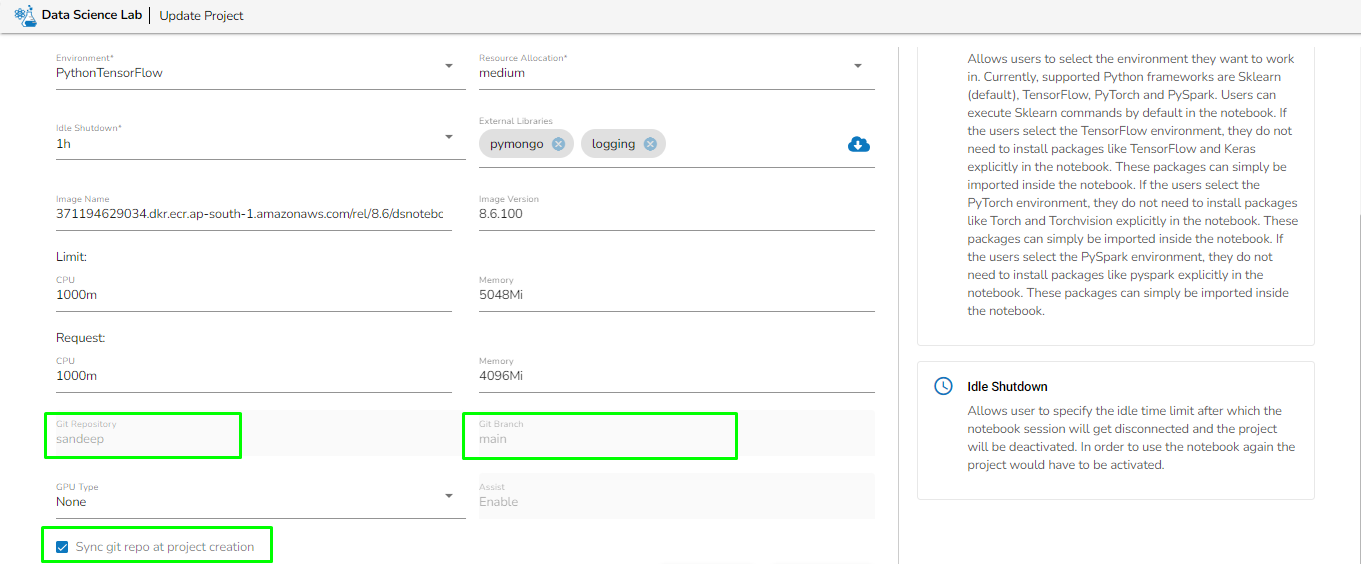

Select the Git Repository and Git Branch.

Enable the Sync git repo at project creation option to gain access to all the files in the selected repository.

Click the Save option to create the project.

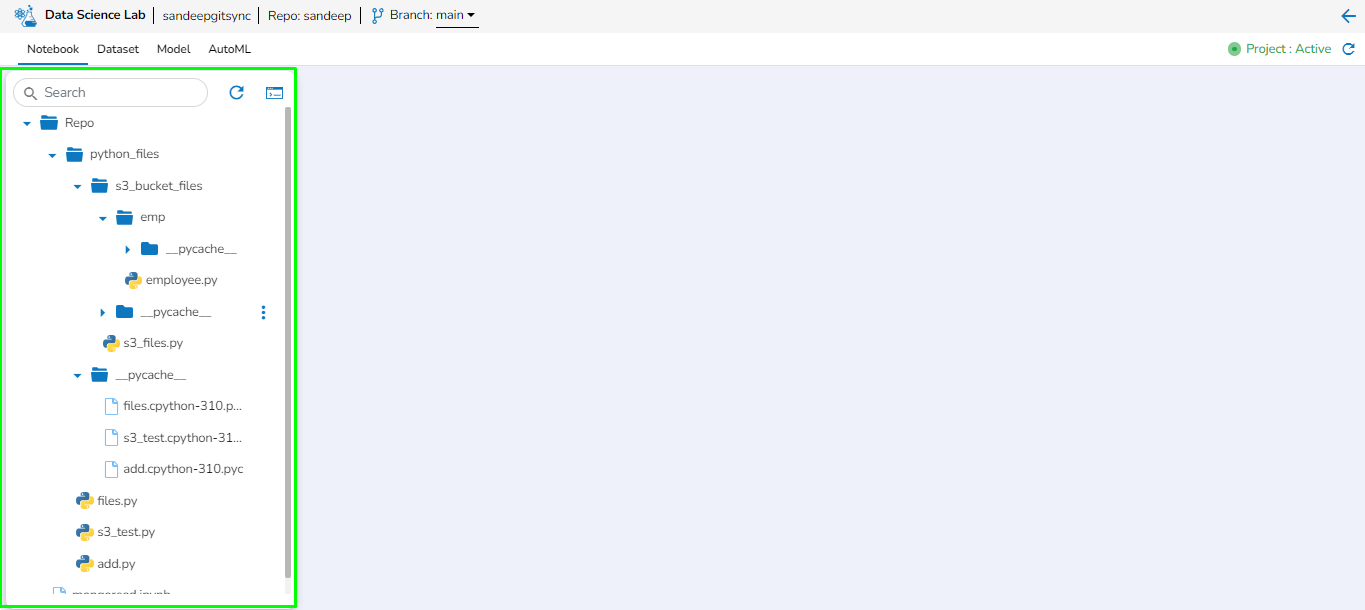

After creating the project, expand the Repo option in the Notebook tab to view all files present in the repository.

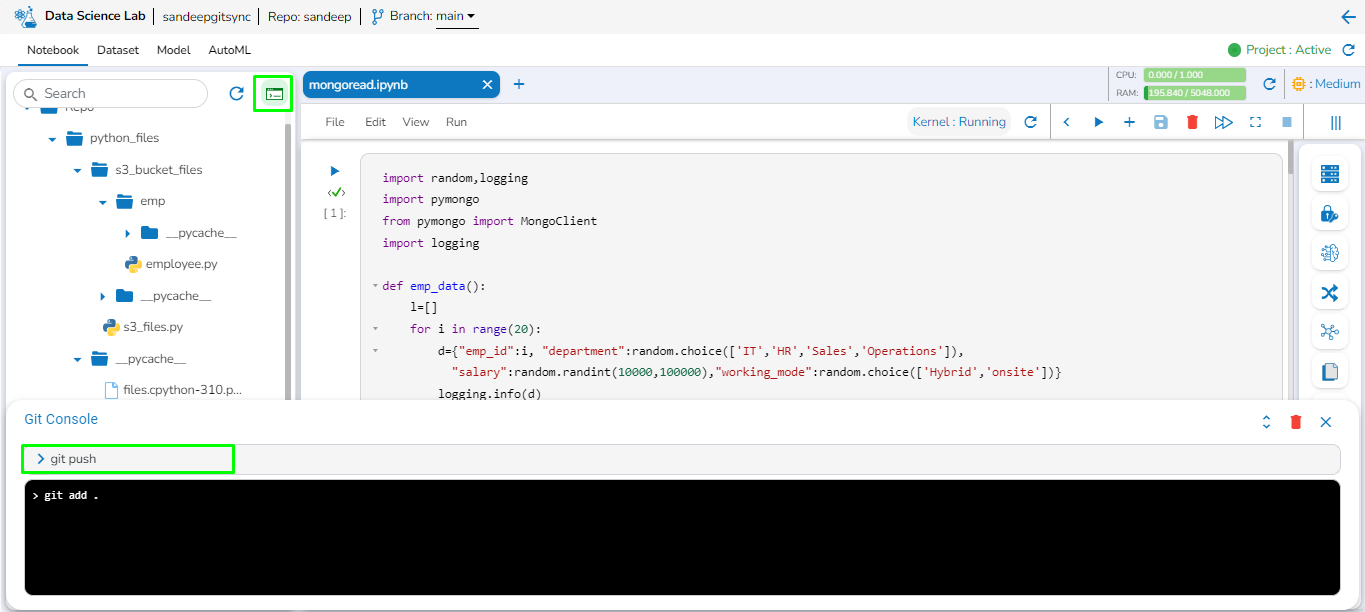

The Git Console, which opens from the bottom of the page, lets the user run any Git command directly against the project repository.

After completing these steps, users can export their scripts to the Data Pipeline module and register them as jobs as required.

For instructions on exporting a script to the pipeline and registering it as a job, please refer to the link provided below:

Sample Git commands
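As a rough illustration, the sketch below lists a few standard Git commands of the kind that could be typed in the Git Console and prints them as console lines. The branch name `main`, the file name `analysis.ipynb`, the commit message, and the helper names are placeholders chosen for this example. The `run_git` helper shows one possible way to run the same commands from Python and assumes Git is installed and that `repo_dir` is a Git working copy.

```python
import shlex
import subprocess

# Placeholder values; replace with the branch and file used in your project.
BRANCH = "main"
NOTEBOOK = "analysis.ipynb"

# Common Git operations, each written as an argument list.
SAMPLE_COMMANDS = [
    ["git", "status"],                                   # show changed and untracked files
    ["git", "branch"],                                   # list local branches
    ["git", "pull", "origin", BRANCH],                   # fetch and merge remote changes
    ["git", "add", NOTEBOOK],                            # stage a file
    ["git", "commit", "-m", "Update analysis notebook"], # record the staged changes
    ["git", "push", "origin", BRANCH],                   # publish local commits
    ["git", "log", "--oneline", "-5"],                   # show the last five commits
]


def as_console_line(command):
    """Render an argument list as the single line one would type in a console."""
    return shlex.join(command)


def run_git(command, repo_dir="."):
    """Run one Git command in repo_dir and return its standard output.

    Raises subprocess.CalledProcessError if Git exits with a non-zero status.
    """
    result = subprocess.run(
        command, cwd=repo_dir, capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    for command in SAMPLE_COMMANDS:
        print(as_console_line(command))
```

Keeping each command as an argument list avoids shell quoting problems when a value such as the commit message contains spaces.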

Please Note: Files with the .ipynb extension can be exported for use in pipelines and jobs, while files with the .py extension can only be used as utility files. For more information on utility files, see the Utility page.
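The rule in the note above can be expressed as a small check on the file extension. This is a minimal sketch for sorting a repository listing before export. The function name and the category labels are hypothetical and do not correspond to any platform API.

```python
from pathlib import PurePosixPath


def classify_repo_file(path: str) -> str:
    """Classify a repository file by its extension, following the note above.

    Returns "exportable" for .ipynb files, "utility" for .py files,
    and "other" for anything else. The labels are illustrative only.
    """
    suffix = PurePosixPath(path).suffix.lower()
    if suffix == ".ipynb":
        return "exportable"
    if suffix == ".py":
        return "utility"
    return "other"


if __name__ == "__main__":
    # Hypothetical file names used only to demonstrate the check.
    for name in ["notebooks/train.ipynb", "utils/helpers.py", "README.md"]:
        print(name, classify_repo_file(name))
```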
