Utility

This feature enables users to upload their files (in .py format) to the Utility section of a DS Lab project. Users can then import these files as modules in a DS Lab notebook and use the uploaded utility functions in their scripts.

Prerequisite: To use this feature, the user needs to create a project under the DS Lab module.

Check out the video below on how to use utility scripts in the DS Lab module.

Activate and open the project under the DS Lab module.

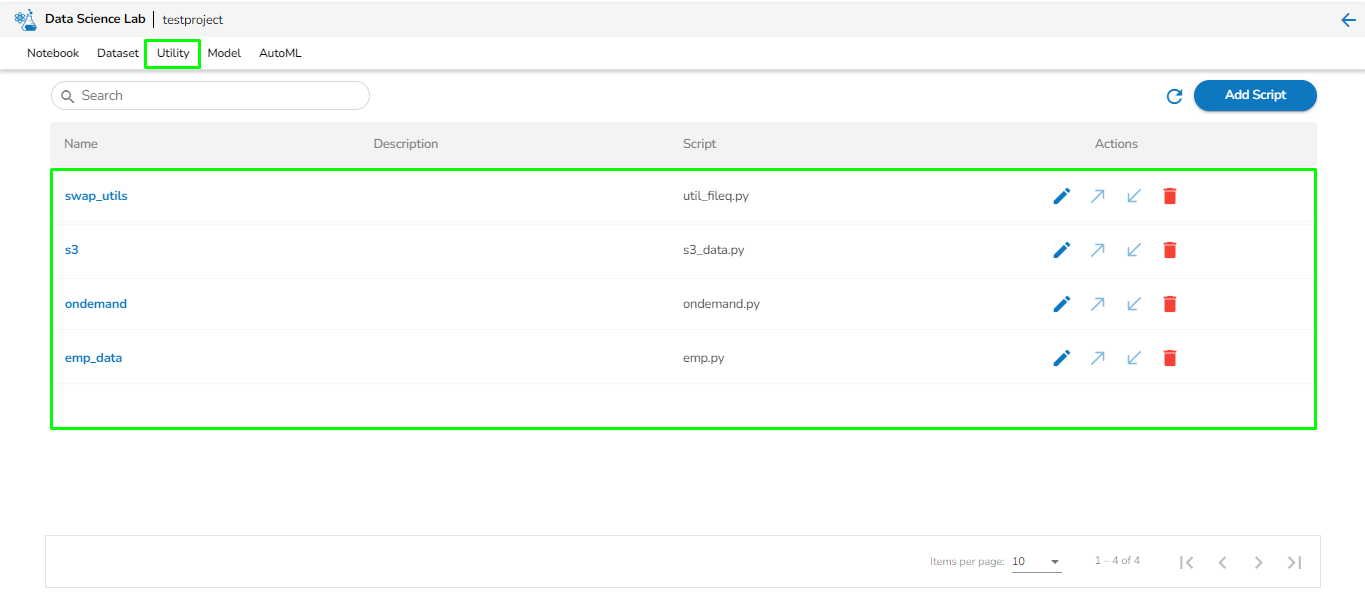

Navigate to the Utility tab in the project.

Click the Add Scripts option.

After that, the user will find two options:

Import Utility: Enables users to upload their own file (.py format) that contains the script.

Pull from Git: Enables users to pull their scripts directly from their Git repository. To use this option, the user needs to configure their Git branch while creating the project in DS Lab. Detailed information on configuring Git in a DS Lab project can be found here: Git Sync

Import Utility

Utility Name: Enter the utility name.

Utility Description: Enter the description for the utility.

Utility Script: Upload the script file in .py format.

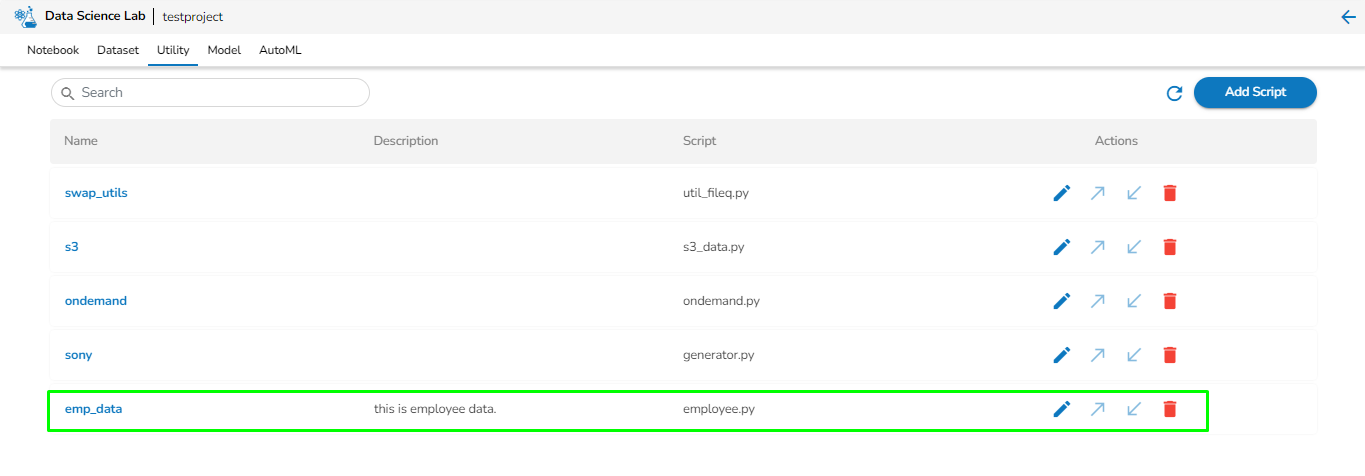

Click the Save option after entering all the required information on the Add utility script page. The uploaded file will then be listed under the Utility tab of the selected project.

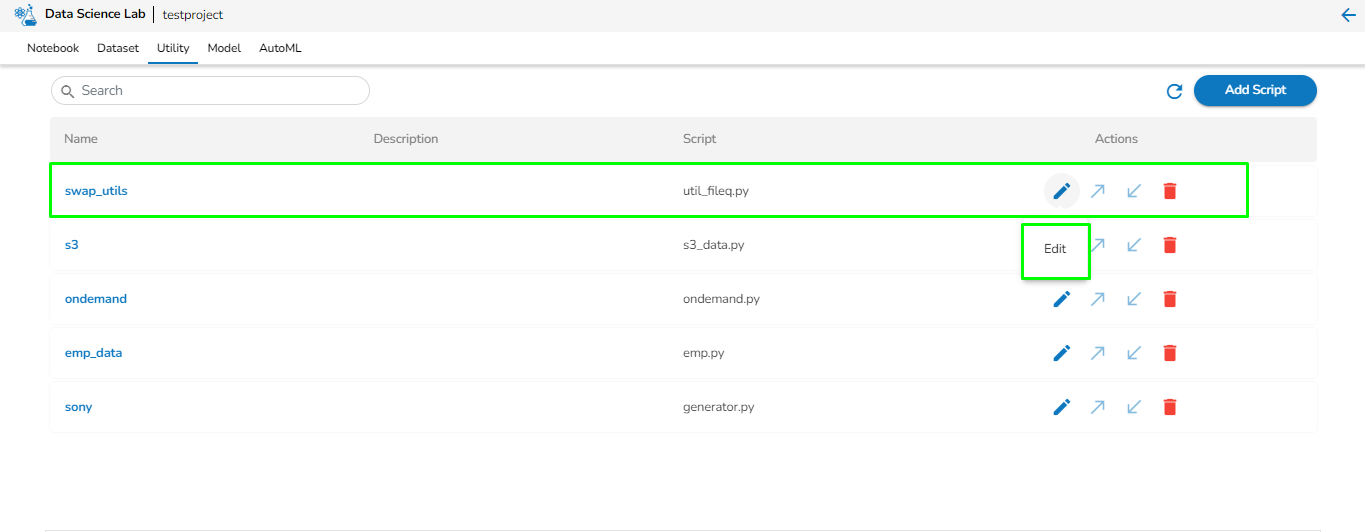

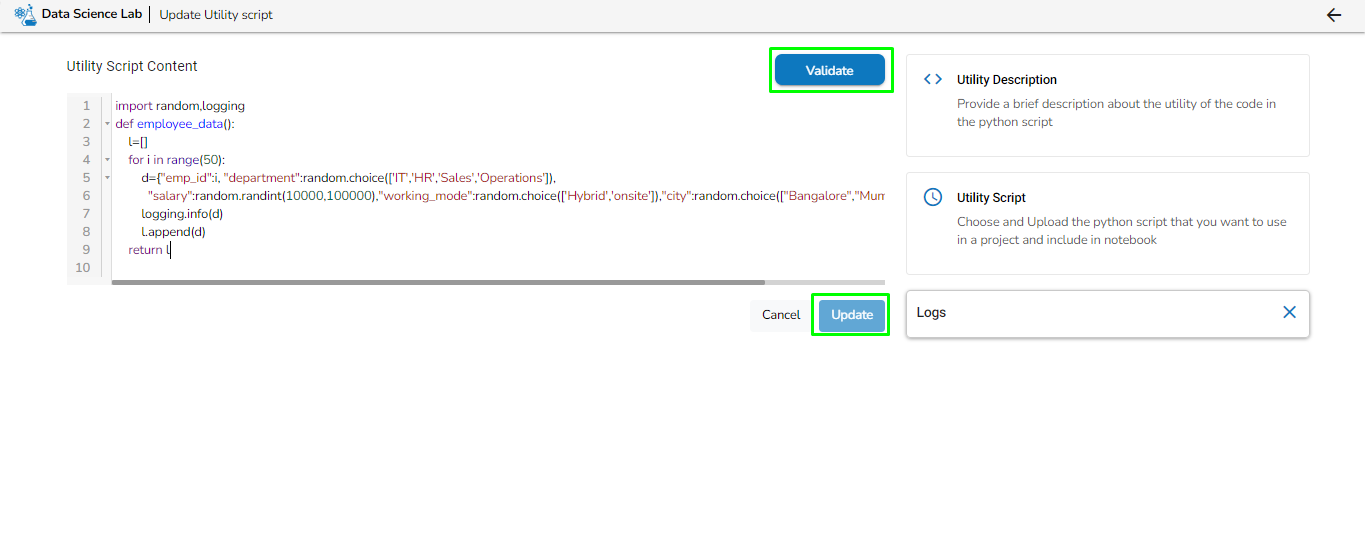

Once the file is uploaded to the Utility tab, the user can edit its contents by clicking the Edit icon next to the file name.

After making changes, the user can validate the script by clicking the Validate icon at the top right.

Click the Update option to save the latest changes to the utility file.

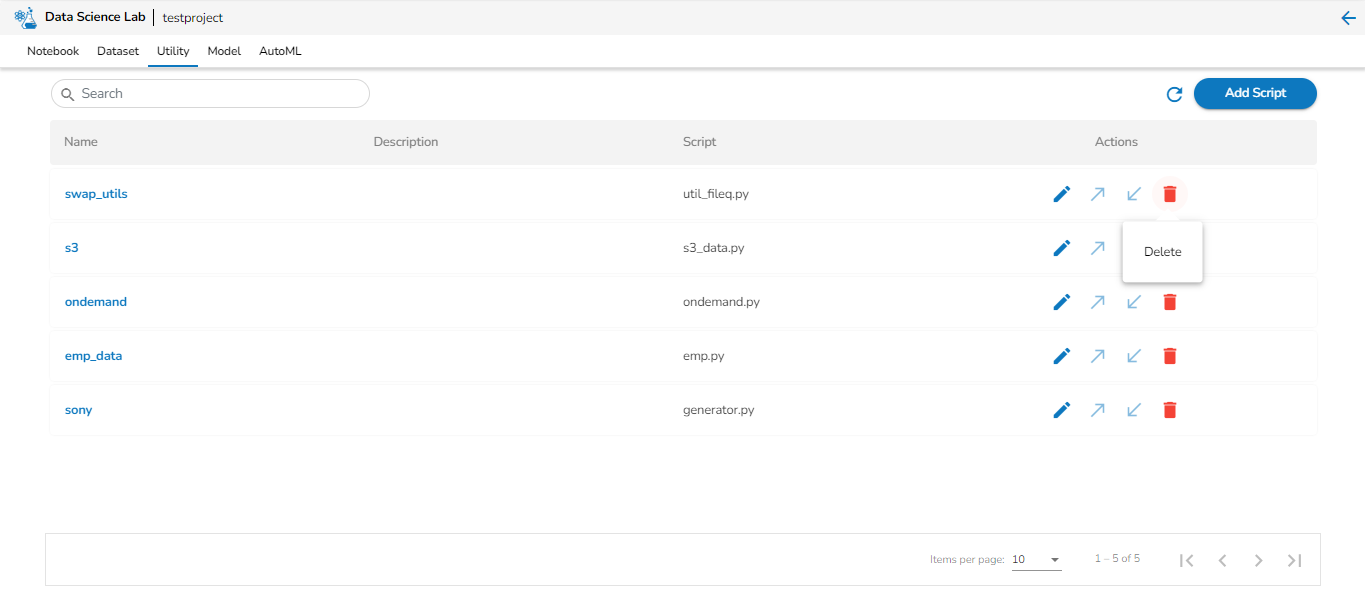
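What the Validate icon checks is not described here. As a local pre-check before uploading or updating a utility, a plain Python syntax check can catch obvious mistakes. The sketch below assumes only the standard library; the helper name `check_syntax` and the sample sources are hypothetical and are not part of DS Lab.

```python
import ast


def check_syntax(source: str, filename: str = "utility.py"):
    """Return (True, "") if the source parses as Python, else (False, message).

    Hypothetical helper for local use; it is not a DS Lab API.
    """
    try:
        ast.parse(source, filename=filename)
    except SyntaxError as exc:
        return False, f"{filename}:{exc.lineno}: {exc.msg}"
    return True, ""


# Two hypothetical utility sources: one valid, one missing a colon.
good_source = "def add(a, b):\n    return a + b\n"
bad_source = "def add(a, b)\n    return a + b\n"

good_result = check_syntax(good_source)
bad_result = check_syntax(bad_source)

print(good_result)
print(bad_result)
```

A syntax check only confirms that the file parses. It does not run the code, so errors such as a missing import would still surface only when the utility is used in a notebook.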

If the user wants to delete a particular Utility, they can do so by clicking on the Delete icon.

Importing the Utility File to a DS Lab Notebook

The user can import the uploaded utility file as a module in the DS Lab notebook and use it accordingly.

The image above shows that the "employee.py" file has been uploaded to the Utility tab. This file can now be imported into the DS Lab notebook and used in the script.

Sample Utility Script

Use the sample code below for reference and explore the utility file features yourself inside the DS Lab notebook.

In the sample script above, the utility file (employee.py) is imported with import employee and then used in the script for further processing.
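As a minimal sketch of this import pattern, the example below assumes a hypothetical employee.py that defines two small functions, `full_name` and `annual_salary`. These names and the file contents are illustrative assumptions, not the actual utility. So that the sketch runs outside DS Lab, it writes the file to a temporary folder and adds that folder to the import path. Inside a DS Lab notebook, the uploaded utility is expected to be importable without that step.

```python
import sys
import tempfile
from pathlib import Path

# Hypothetical contents of employee.py (illustrative only).
EMPLOYEE_SOURCE = '''
def full_name(first, last):
    """Return a display name for an employee."""
    return f"{first} {last}"


def annual_salary(monthly_salary):
    """Return the yearly salary for a monthly amount."""
    return monthly_salary * 12
'''

# Stand-in for the upload step: write the utility to a temp folder
# and make that folder importable in this interpreter.
utility_dir = Path(tempfile.mkdtemp())
(utility_dir / "employee.py").write_text(EMPLOYEE_SOURCE)
sys.path.insert(0, str(utility_dir))

# The same statement a notebook cell would use.
import employee

name = employee.full_name("Ada", "Lovelace")
salary = employee.annual_salary(5000)
print(name, salary)
```

Keeping helper functions in a utility file and importing them by module name lets several notebooks in the project share one copy of the logic.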

After completing this process, the user can export this script to the Data Pipeline module and register it as a job according to their specific requirements.

Please Note:

Refer to the links below for instructions on exporting to the pipeline and registering it as a job:

After any modification to the utility file, the user must restart the notebook's kernel to apply the changes and get the latest results.
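The restart requirement is consistent with how Python caches imported modules: once a module is loaded, a repeated import returns the cached object instead of re-reading the file. The sketch below illustrates this general Python behavior with a hypothetical module named demo_utility written to a temporary folder. It is not a description of DS Lab internals.

```python
import sys
import tempfile
from pathlib import Path

# Hypothetical utility module standing in for an uploaded file.
utility_dir = Path(tempfile.mkdtemp())
utility_path = utility_dir / "demo_utility.py"
utility_path.write_text("VERSION = 1\n")
sys.path.insert(0, str(utility_dir))

import demo_utility

first_seen = demo_utility.VERSION

# Edit the file on disk, as if the utility had been updated.
utility_path.write_text("VERSION = 2\n")

# A repeated import returns the module already cached in sys.modules,
# so the edited source is not re-read by the running interpreter.
import demo_utility

second_seen = demo_utility.VERSION

print(first_seen, second_seen)
```

Both values reflect the version loaded first, even though the file on disk has changed. Restarting the kernel starts a fresh interpreter with an empty module cache, so the next import reads the updated file.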