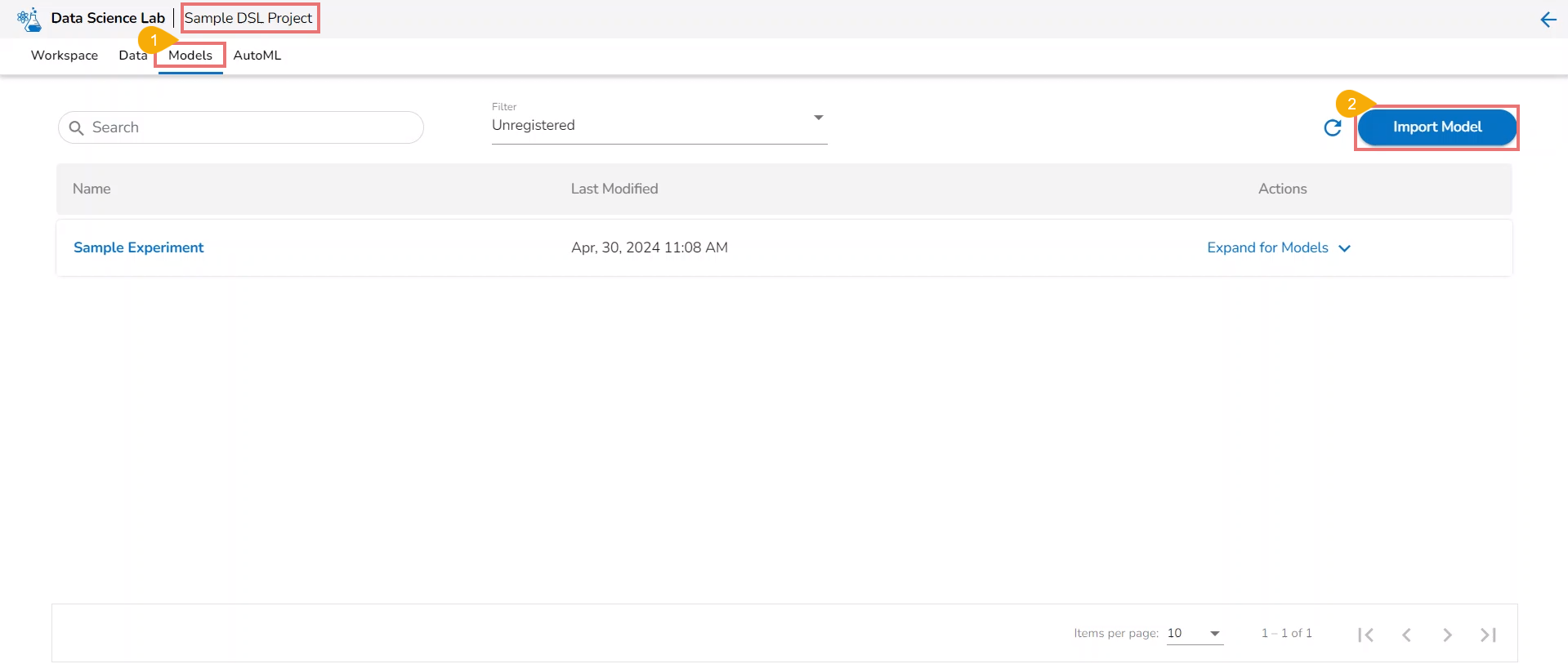

Import Model

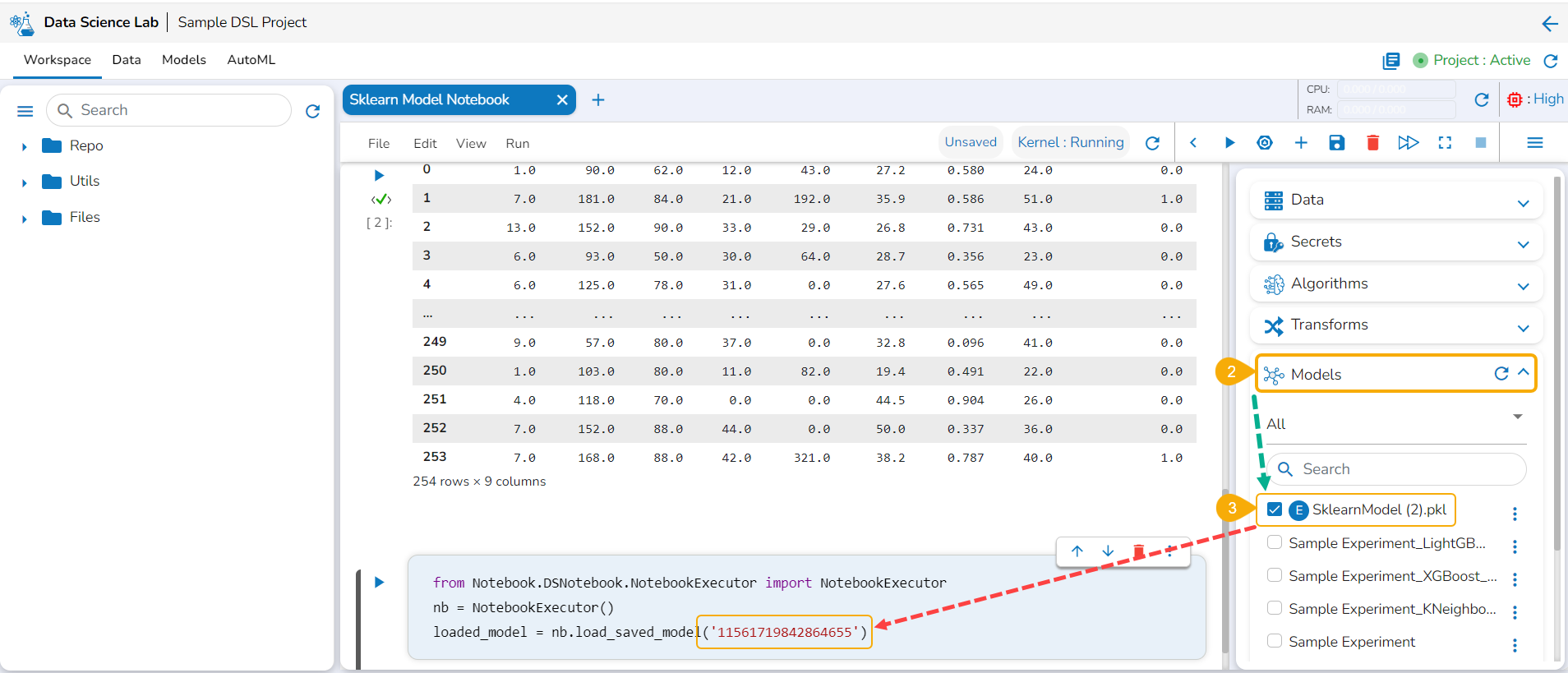

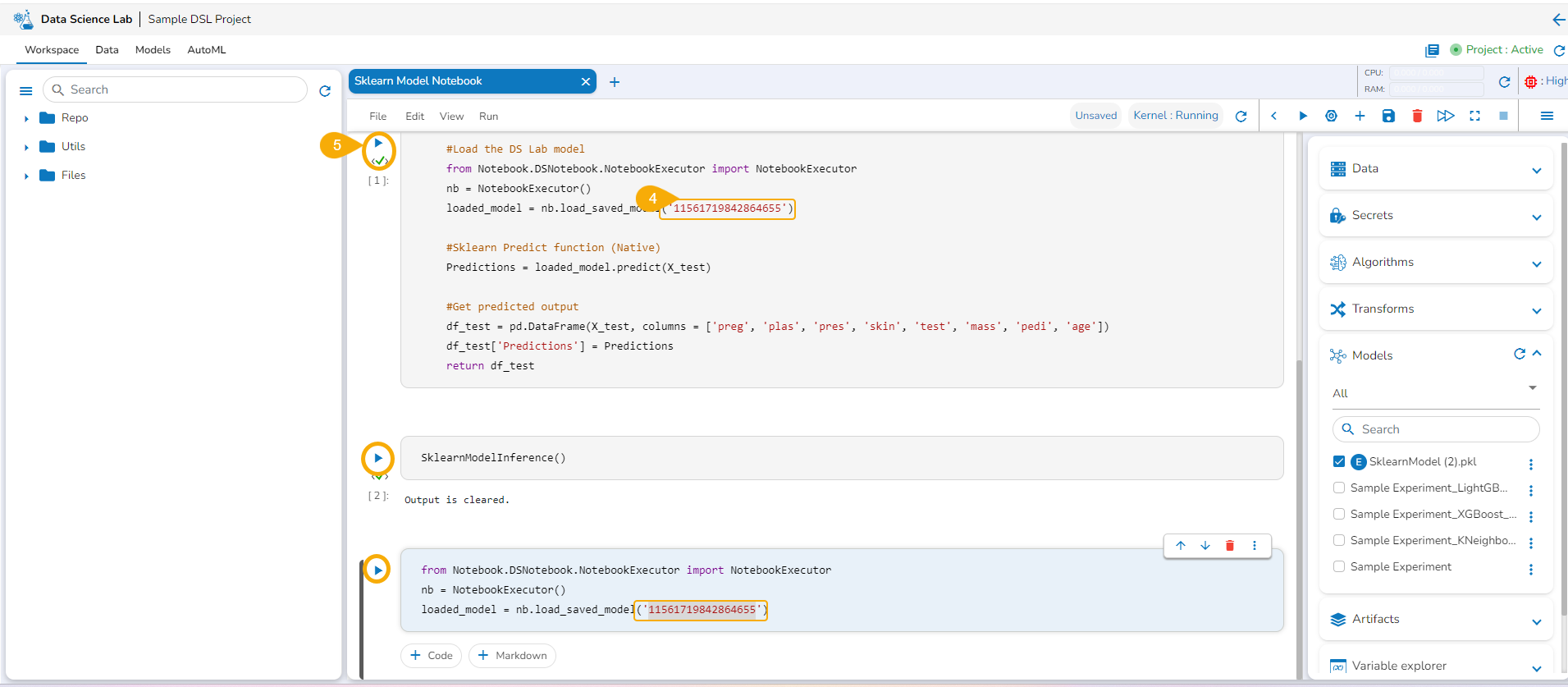

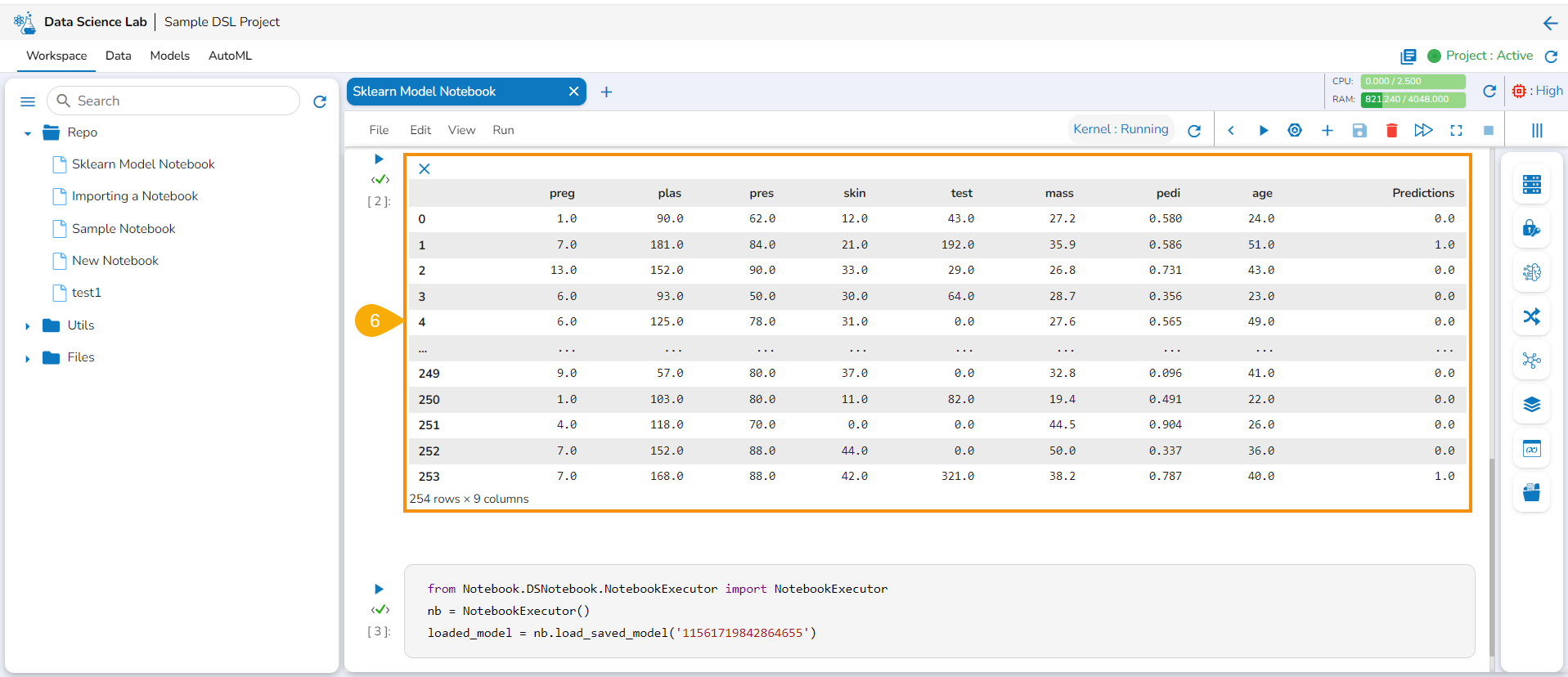

External models can be imported into the Data Science Lab and experimented with inside Notebooks using the Import Model functionality.
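An external model is usually a file serialized outside the lab. The sketch below shows one common way to produce such a file for a scikit-learn model. It assumes that the Import Model dialog accepts a pickled scikit-learn estimator; this page does not state the accepted file formats. The file name `iris_model.pkl` is hypothetical.

```python
import pickle

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Train a small classifier on scikit-learn's bundled Iris dataset.
X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)
model.fit(X, y)

# Serialize the fitted estimator to disk.
# Assumption: a pickled estimator is an importable format; "iris_model.pkl" is a hypothetical name.
with open("iris_model.pkl", "wb") as f:
    pickle.dump(model, f)

# Reload the file to confirm the round trip preserves the model's predictions.
with open("iris_model.pkl", "rb") as f:
    restored = pickle.load(f)
```

Pickle files can execute arbitrary code when loaded, so only import model files from trusted sources. Loading a pickled model also generally requires the same (or a compatible) scikit-learn version as the one used to save it.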

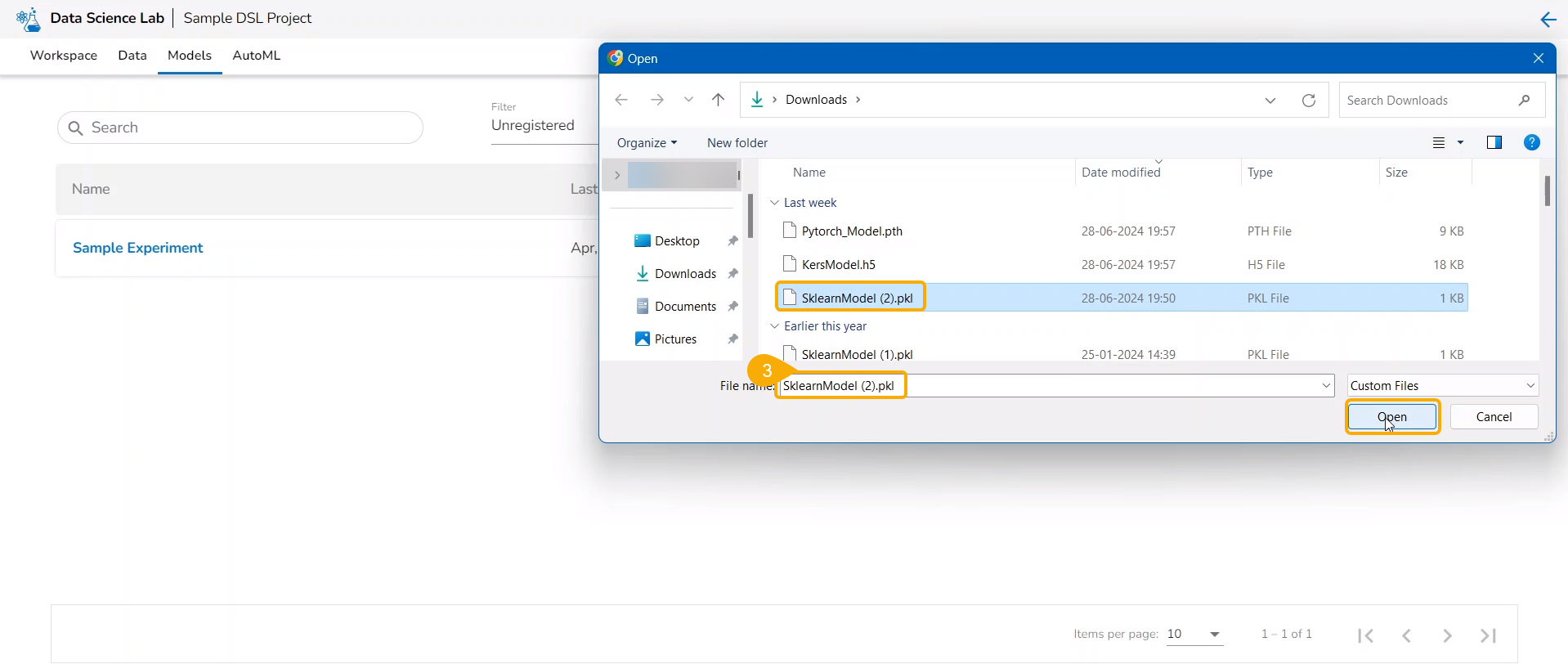

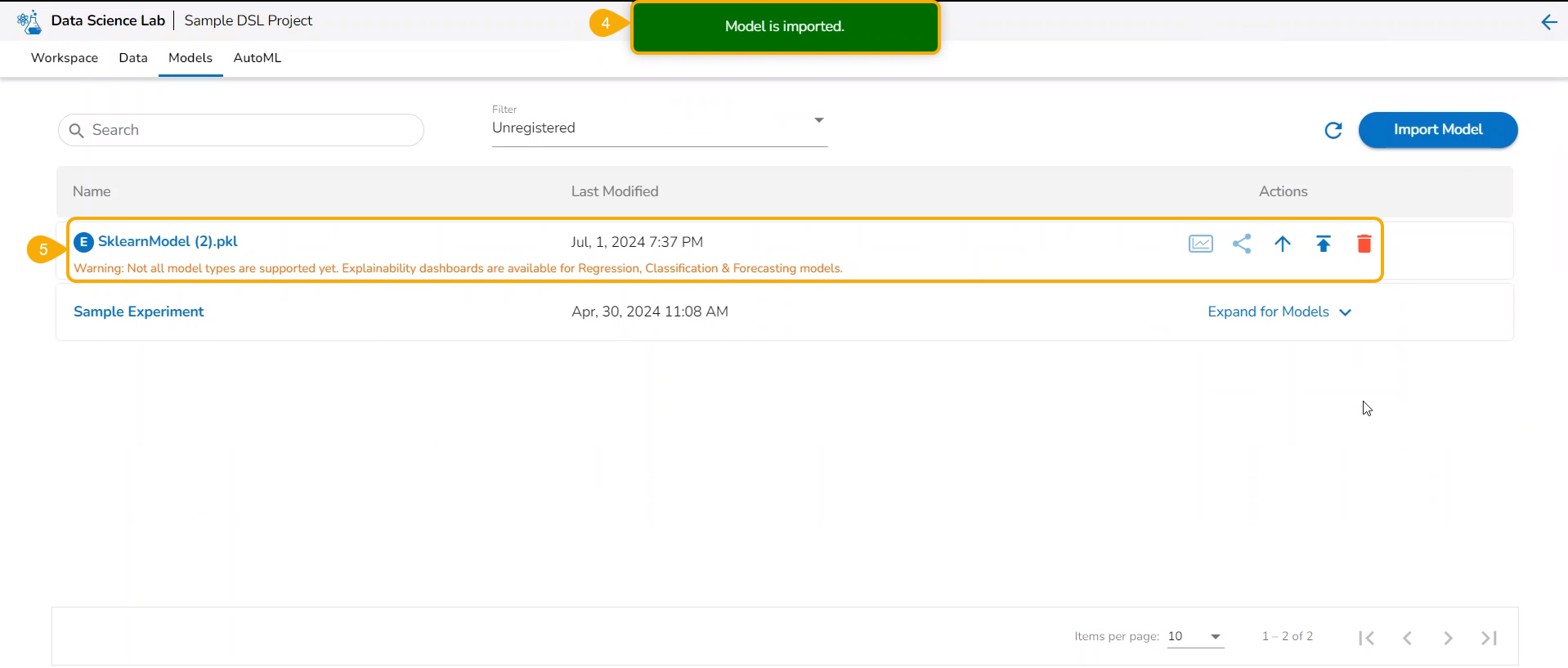

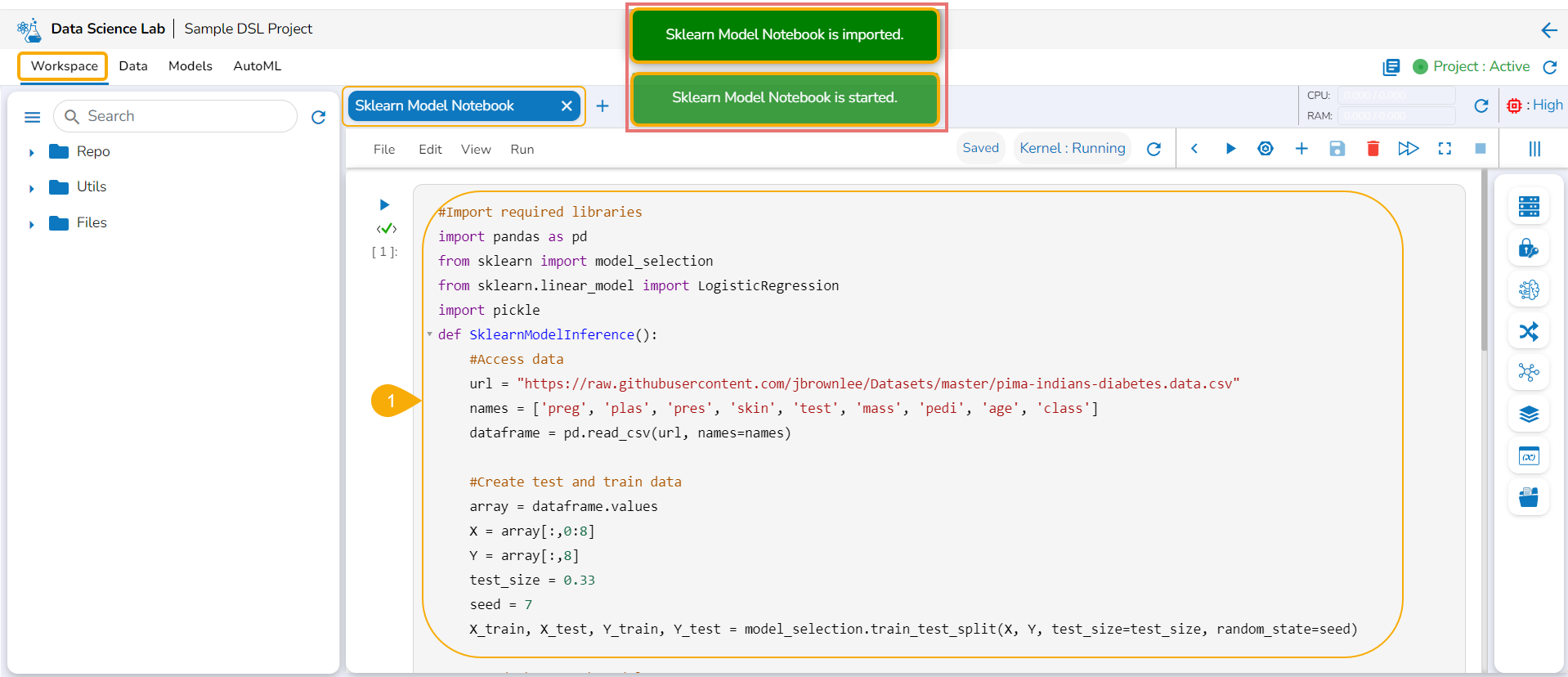

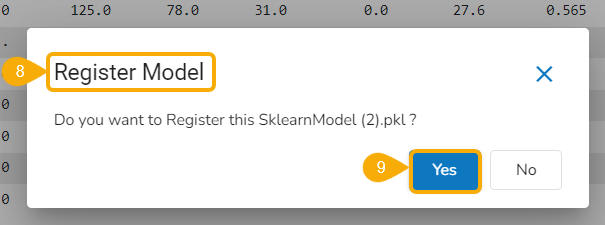

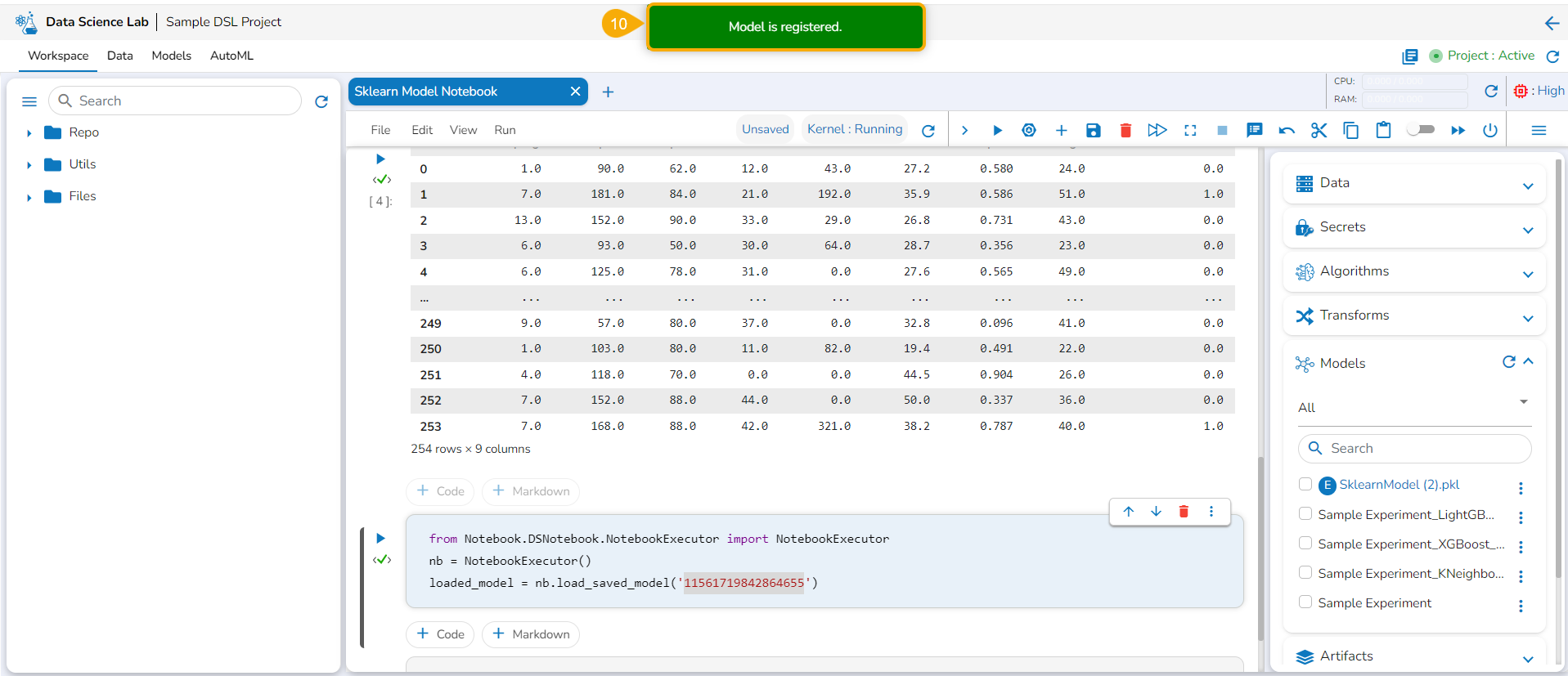

Importing a Model

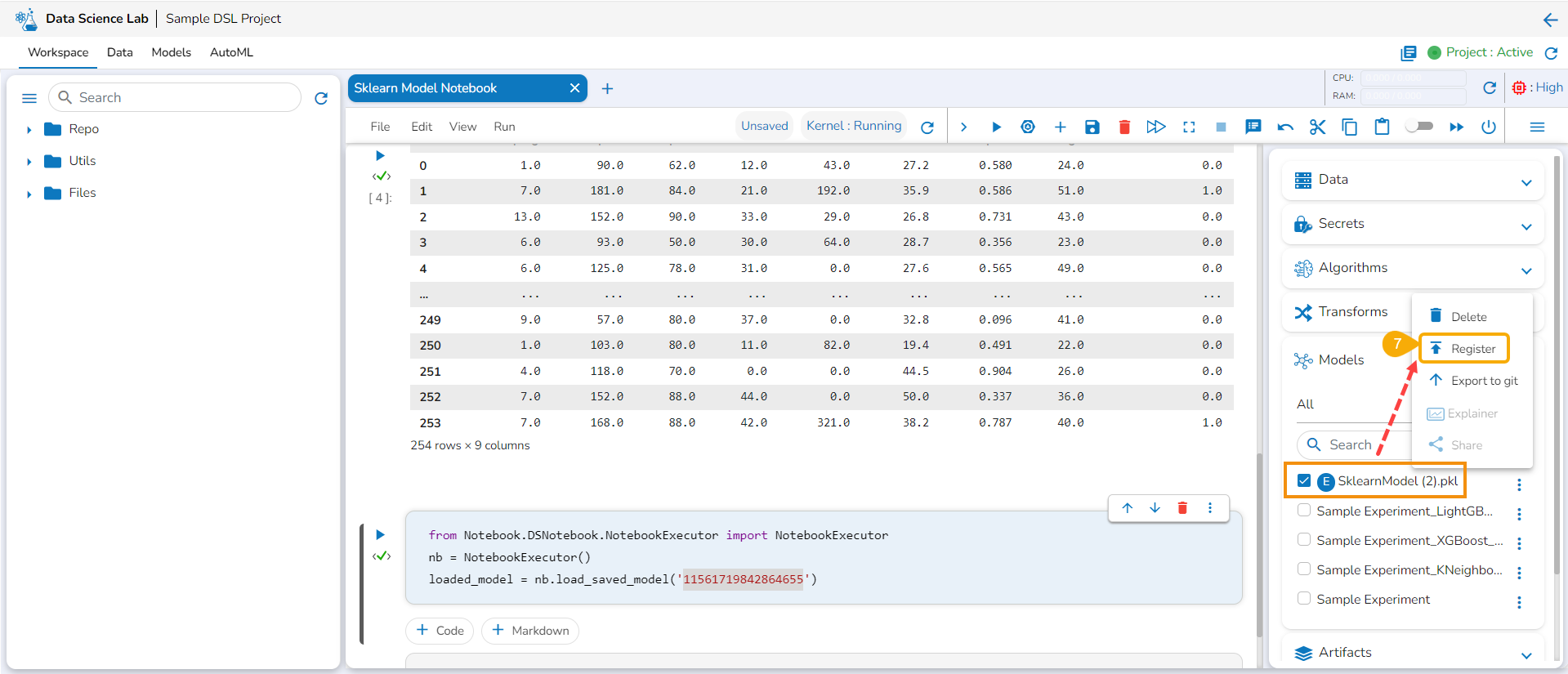

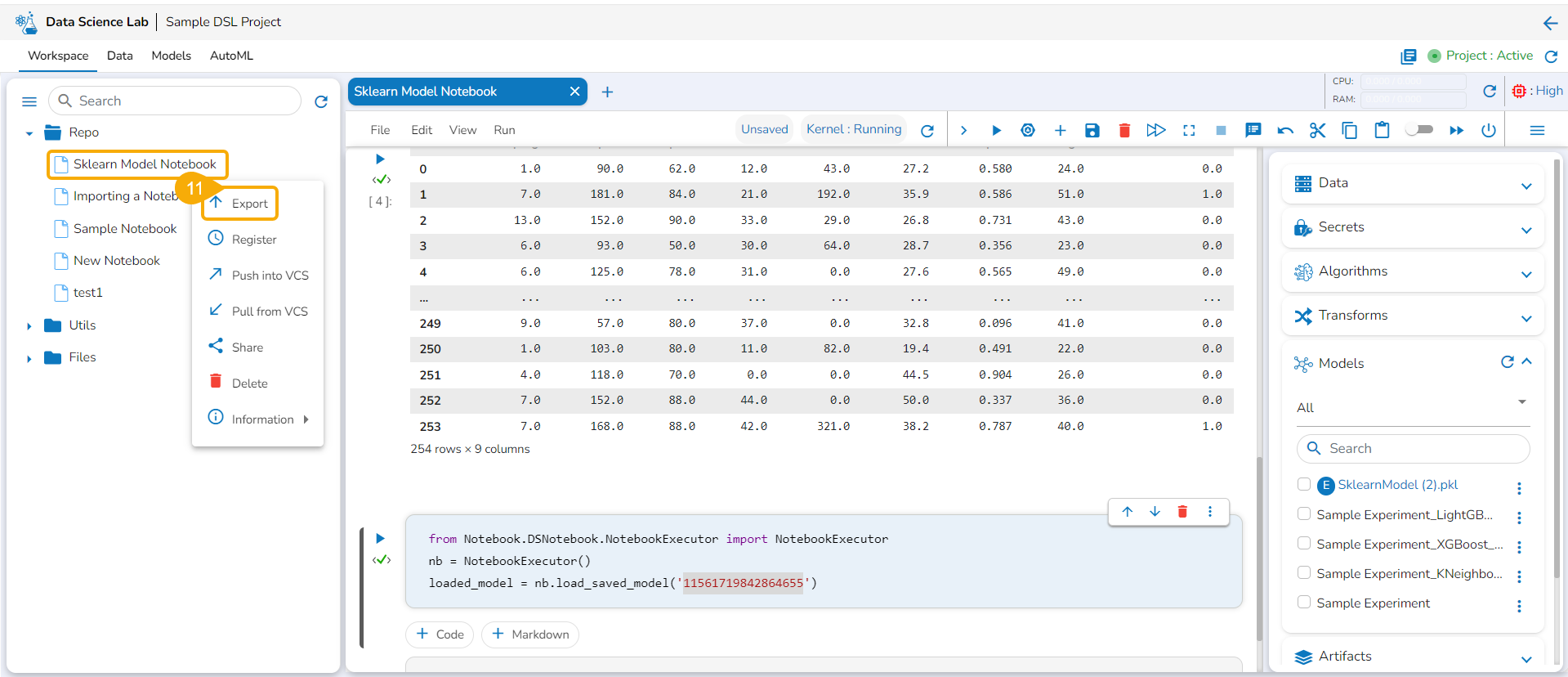

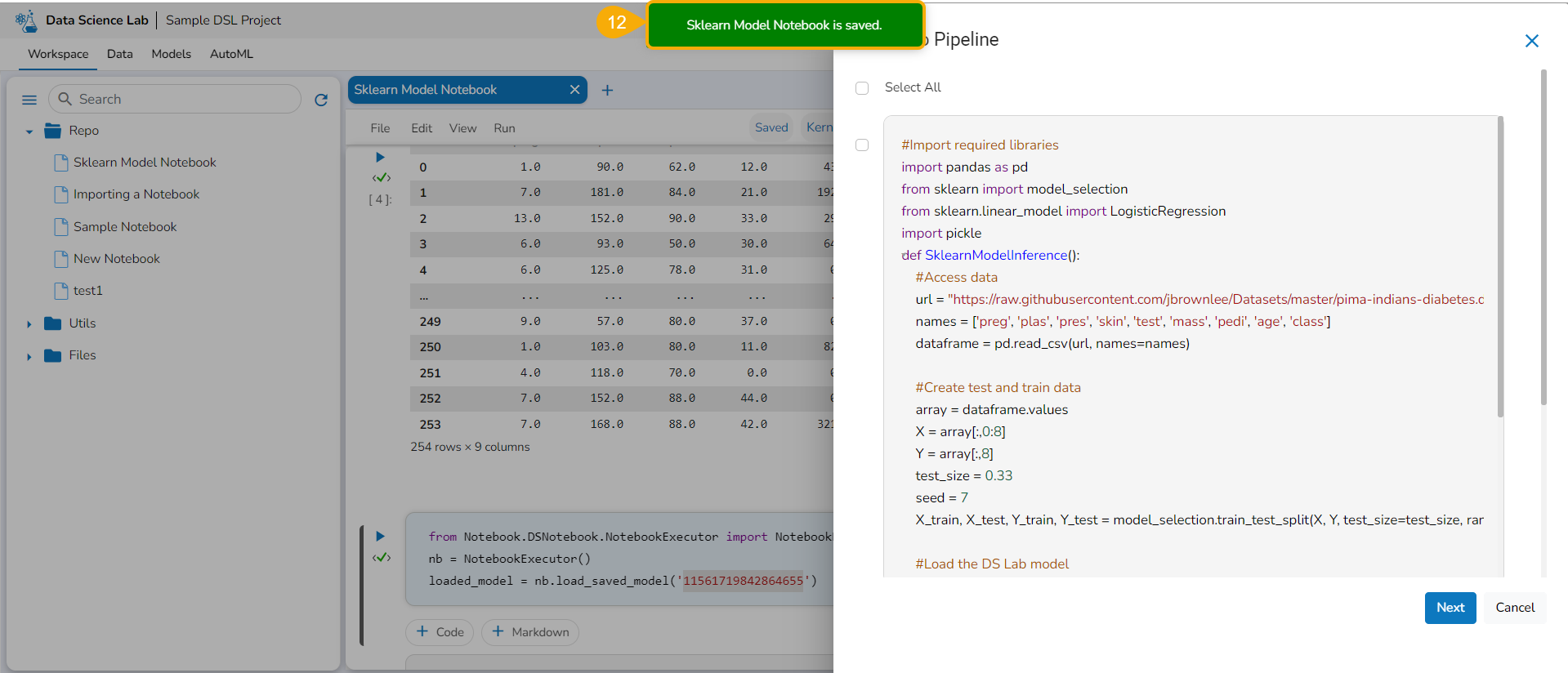

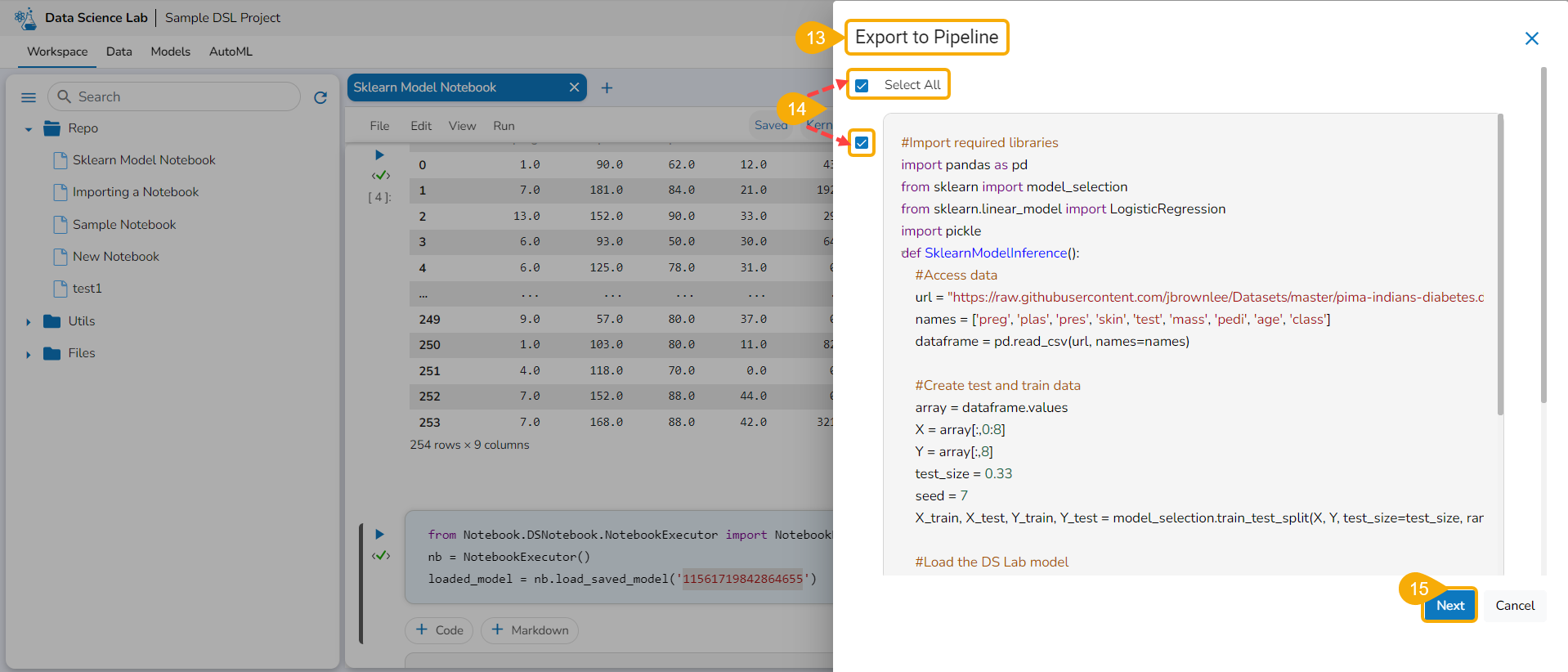

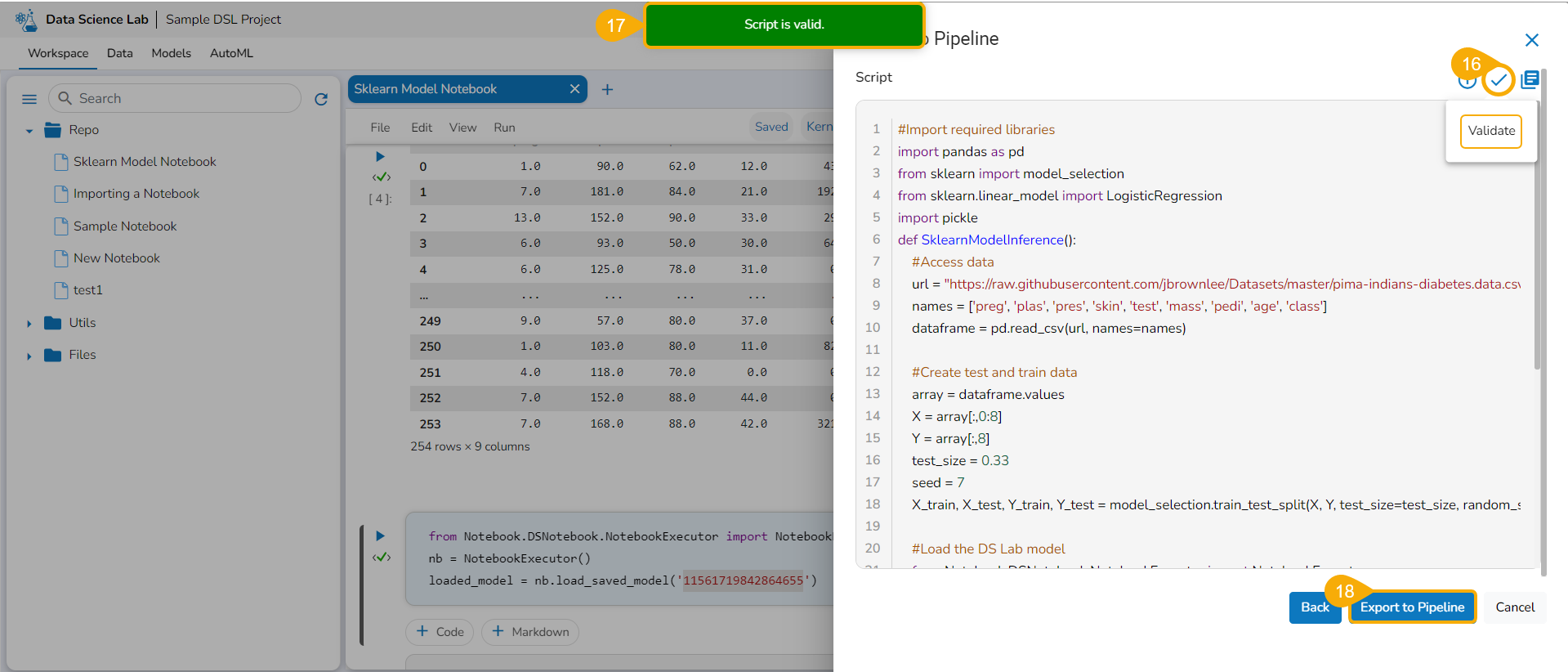

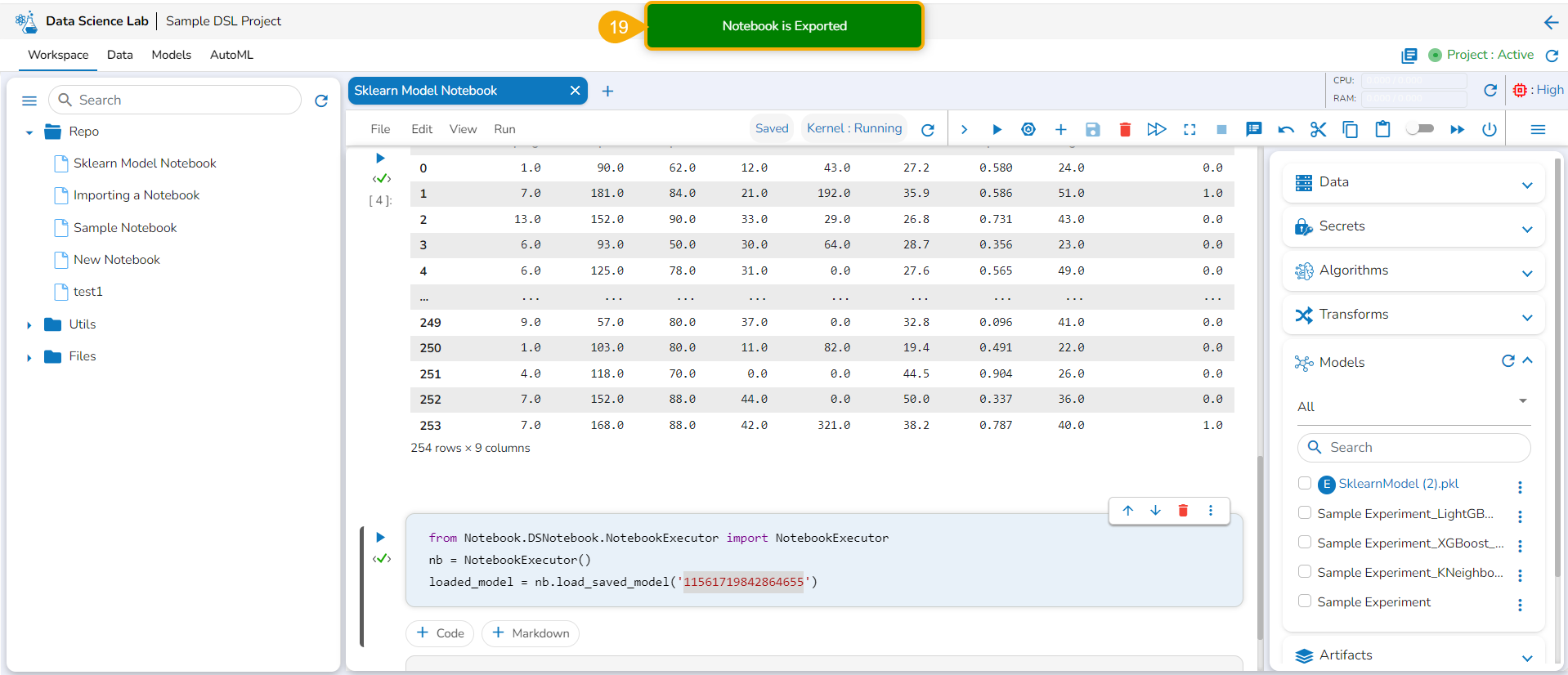

Exporting a Model to the Data Pipeline

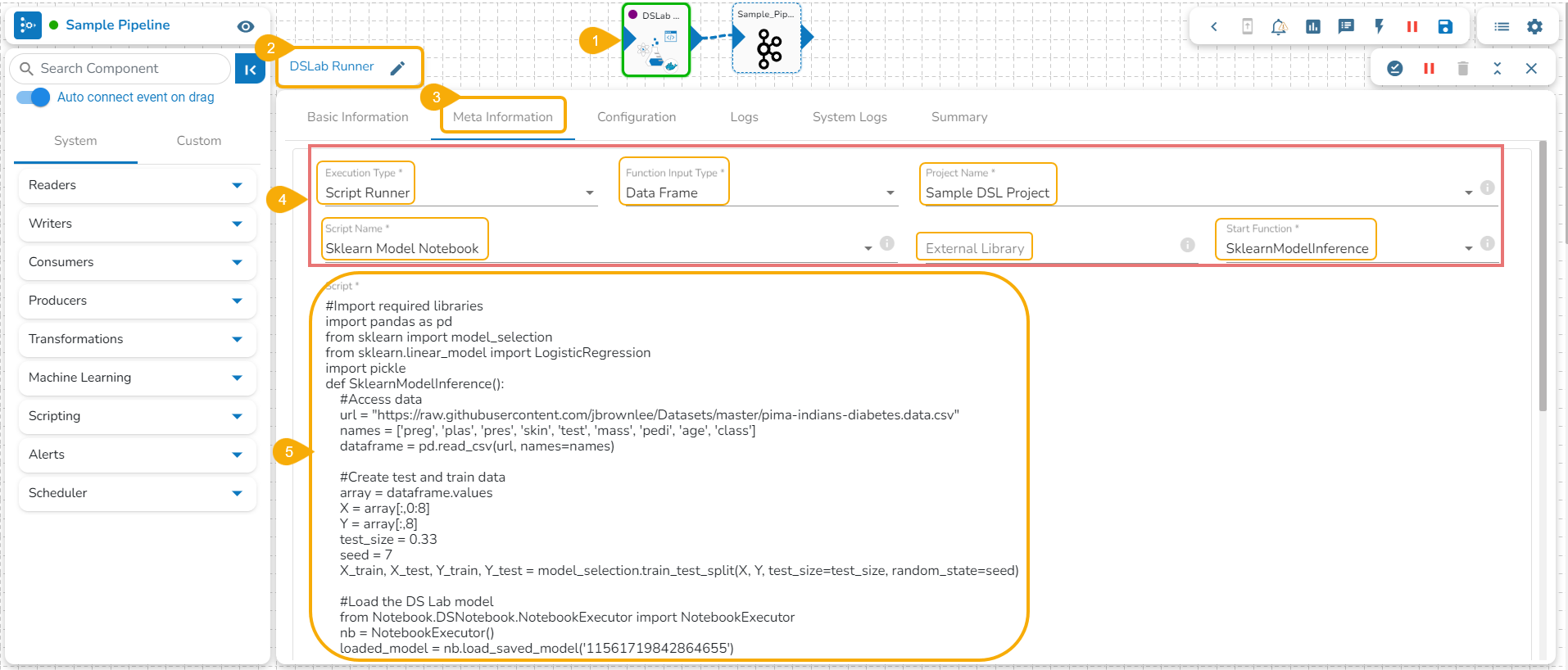

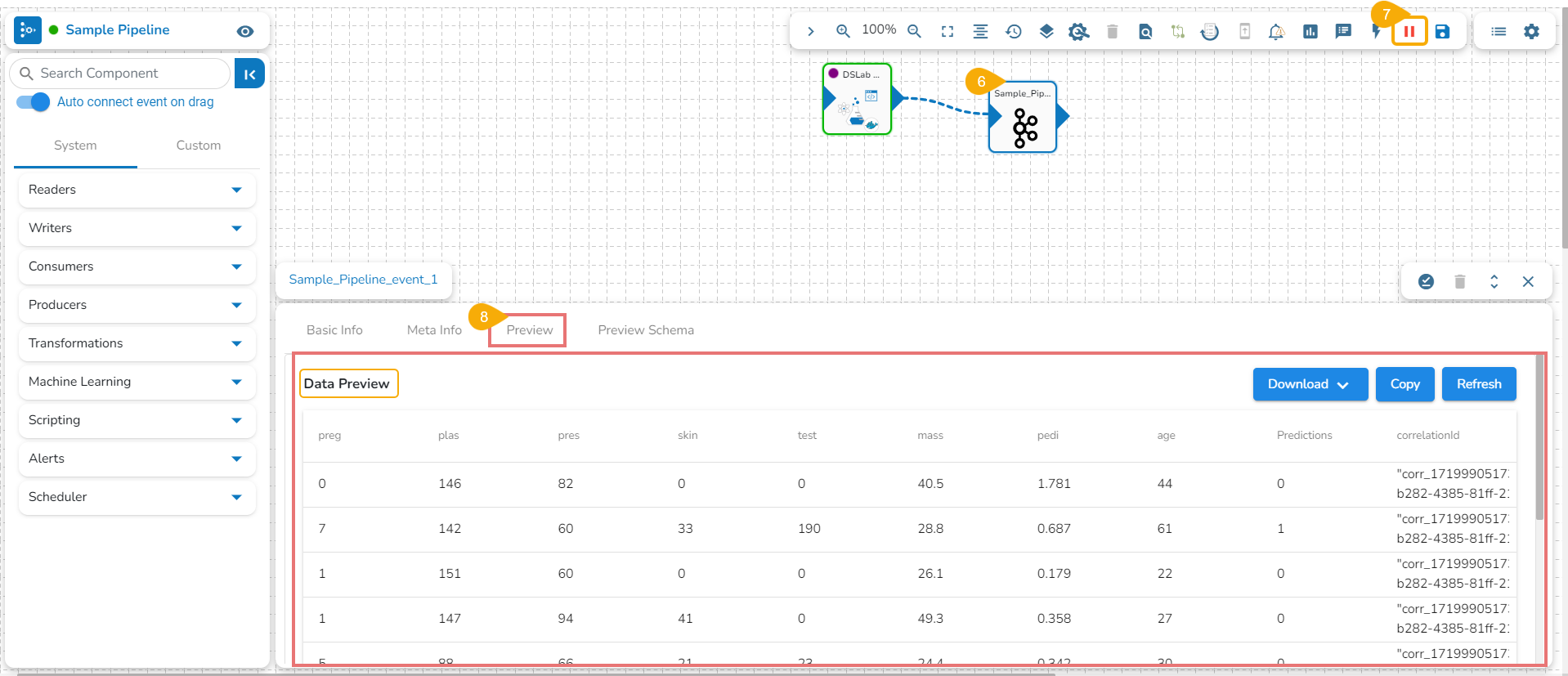

Accessing the Exported Model within the Pipeline User Interface

Sample files for Sklearn

Sample files for Keras

Sample files for PyTorch
