This page describes the steps to export a DSL script and register it as a Job.

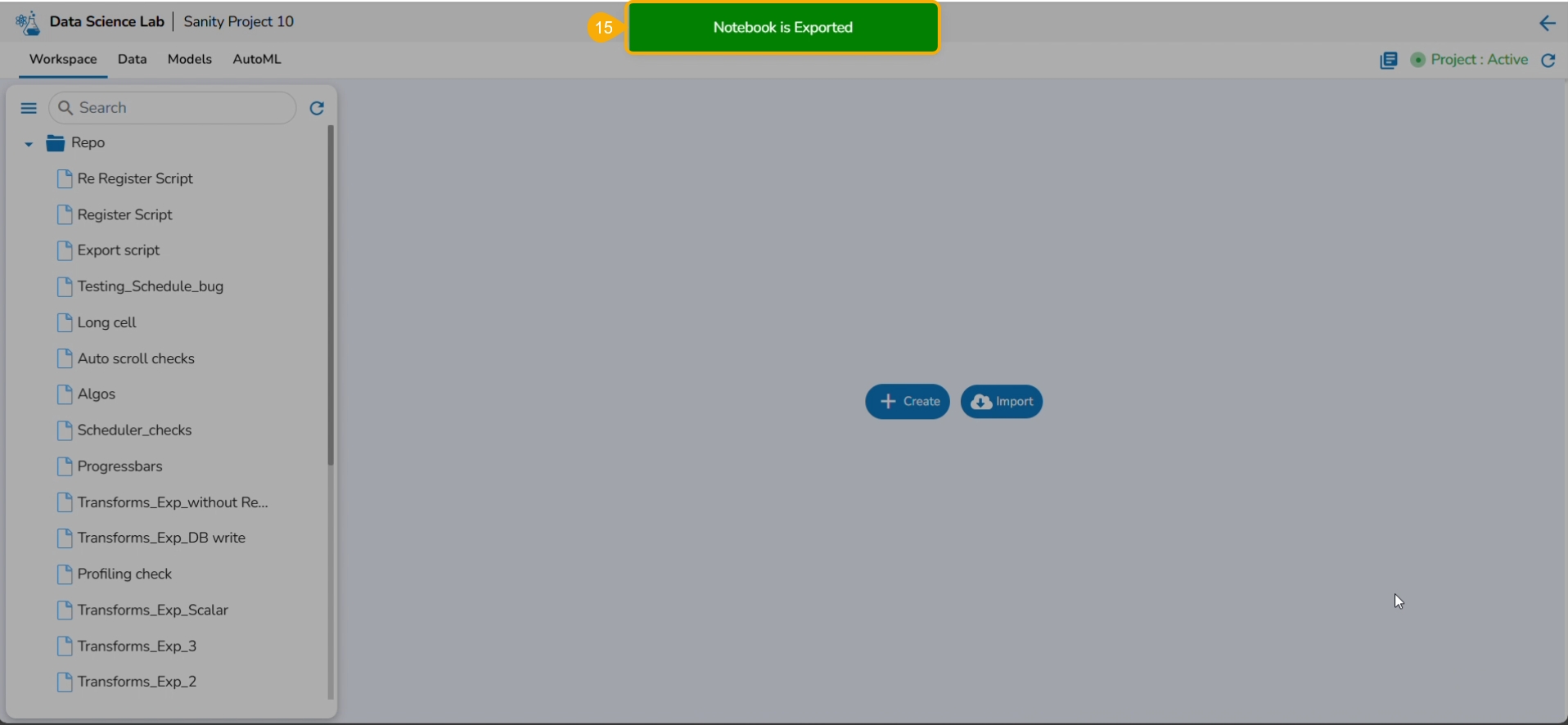

The Export icon provided for a Notebook lets the user export the Notebook as a script to the Data Pipeline module or to the Git repository.

A Notebook can be exported to the Data Pipeline module using this option.

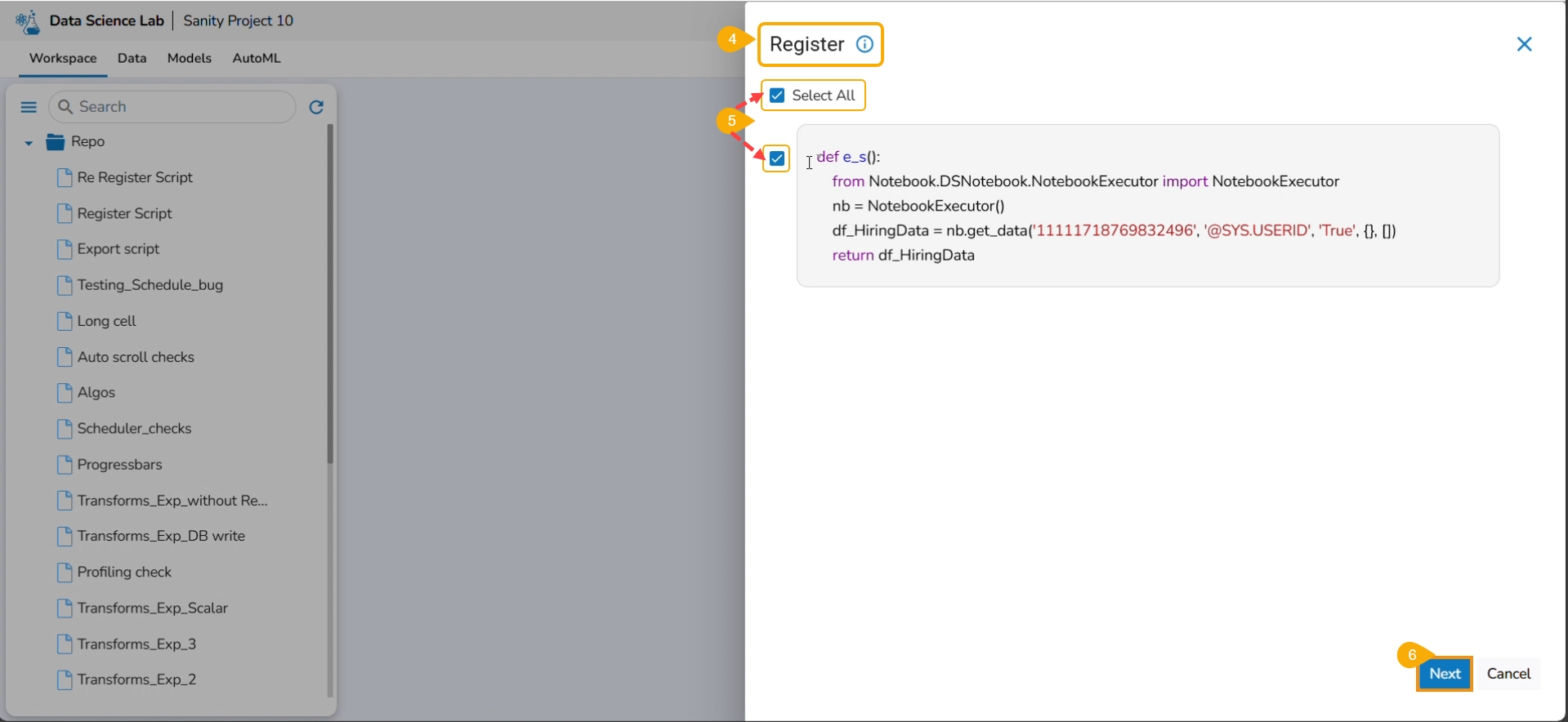

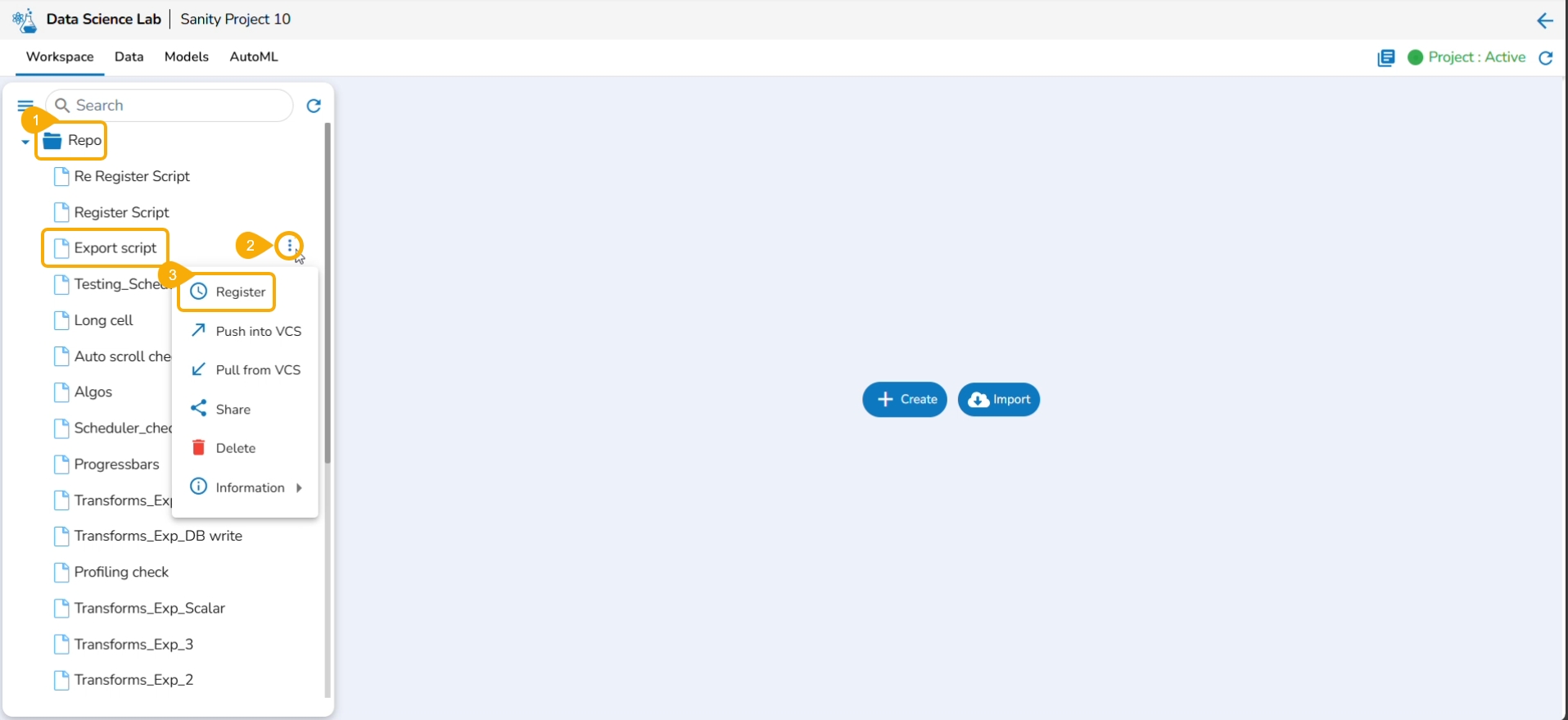

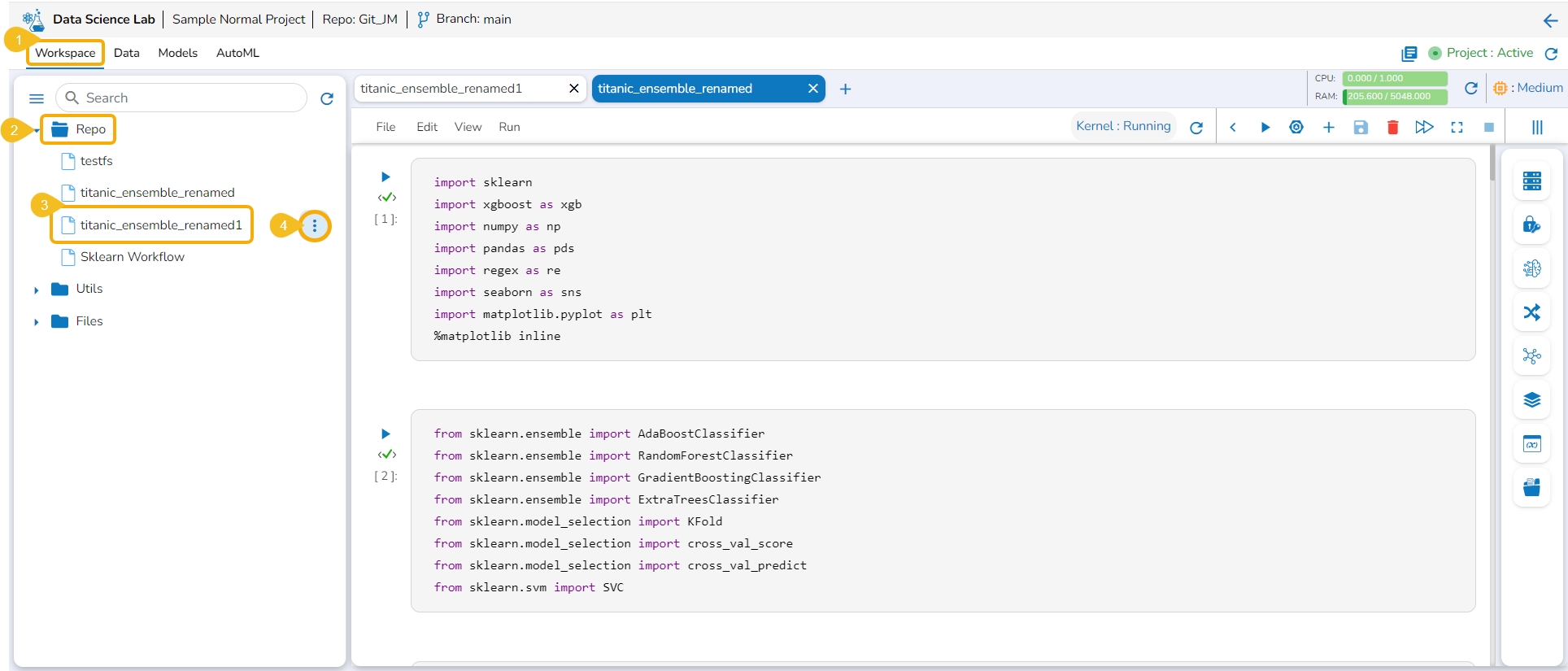

Navigate to the Repo folder and select a Notebook from the Workspace tab.

Click the Ellipsis icon for the selected Notebook to open the context menu.

Click the Register option for the Notebook.

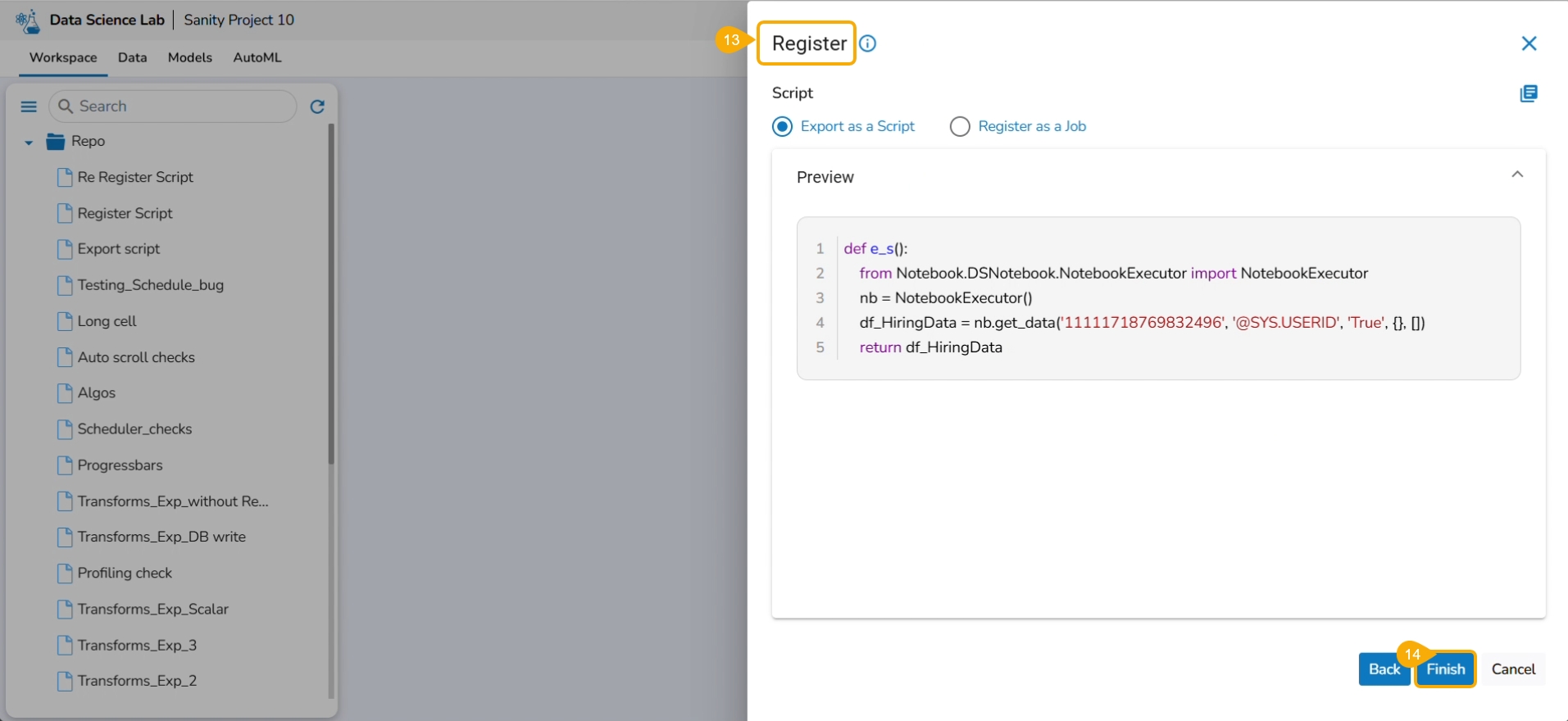

The Register window opens.

Select the required script(s) using the checkboxes, or use the Select All option.

Click the Next option.

Please Note: The script must be written as a function to use the Export to Pipeline functionality.

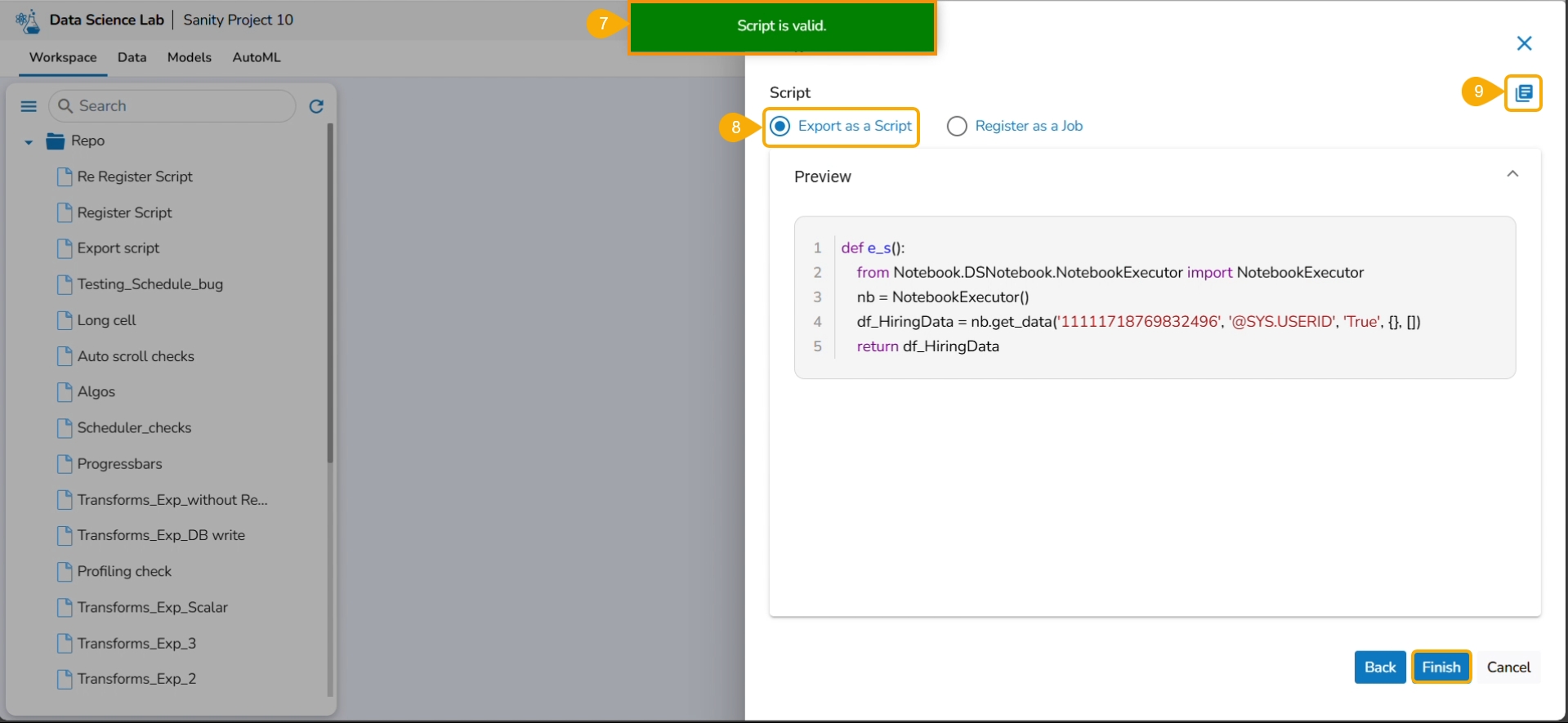

A notification appears stating that the selected script is valid.

Select the Export as a Script option using the checkbox.

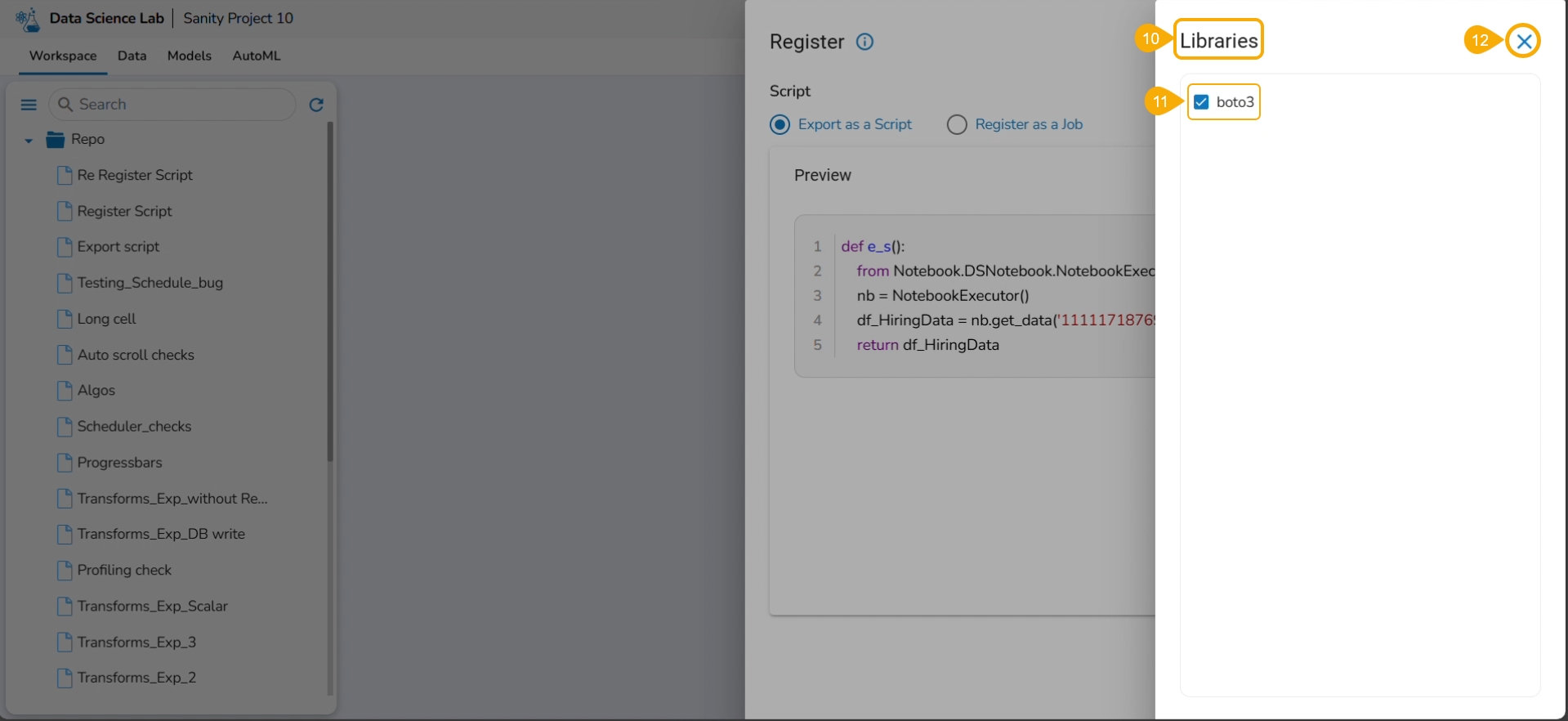

Click the Libraries icon.

The Libraries drawer opens.

Select the required libraries using the checkboxes.

Click the Close icon to close the Libraries drawer.

The user is redirected to the Register page.

Click the Finish option.

A notification message appears confirming that the selected script has been exported.

Please Note: The exported script is made available in the Data Pipeline module, where it can be consumed inside a DS Lab Runner component.
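Because Export to Pipeline requires the script to be written as a function, logic that would otherwise sit in loose Notebook cells has to be wrapped in one. The sketch below is a minimal, hypothetical example: the function name `clean_records` and the `amount` and `is_large` fields are made up, and it assumes the function receives a list of dictionaries (the List input type).

```python
def clean_records(records):
    """Hypothetical start function: keep records that have an 'amount'
    value and flag the large ones.

    `records` is assumed to be a list of dictionaries (List input type).
    """
    cleaned = []
    for record in records:
        if record.get("amount") is None:
            continue  # skip incomplete records
        # Copy the record and add a derived field
        cleaned.append({**record, "is_large": record["amount"] > 100})
    return cleaned


if __name__ == "__main__":
    print(clean_records([{"id": 1, "amount": 150}, {"id": 2}]))
```

Keeping the logic inside a named function means it can be picked later as the Start Function of the DS Lab Runner component.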

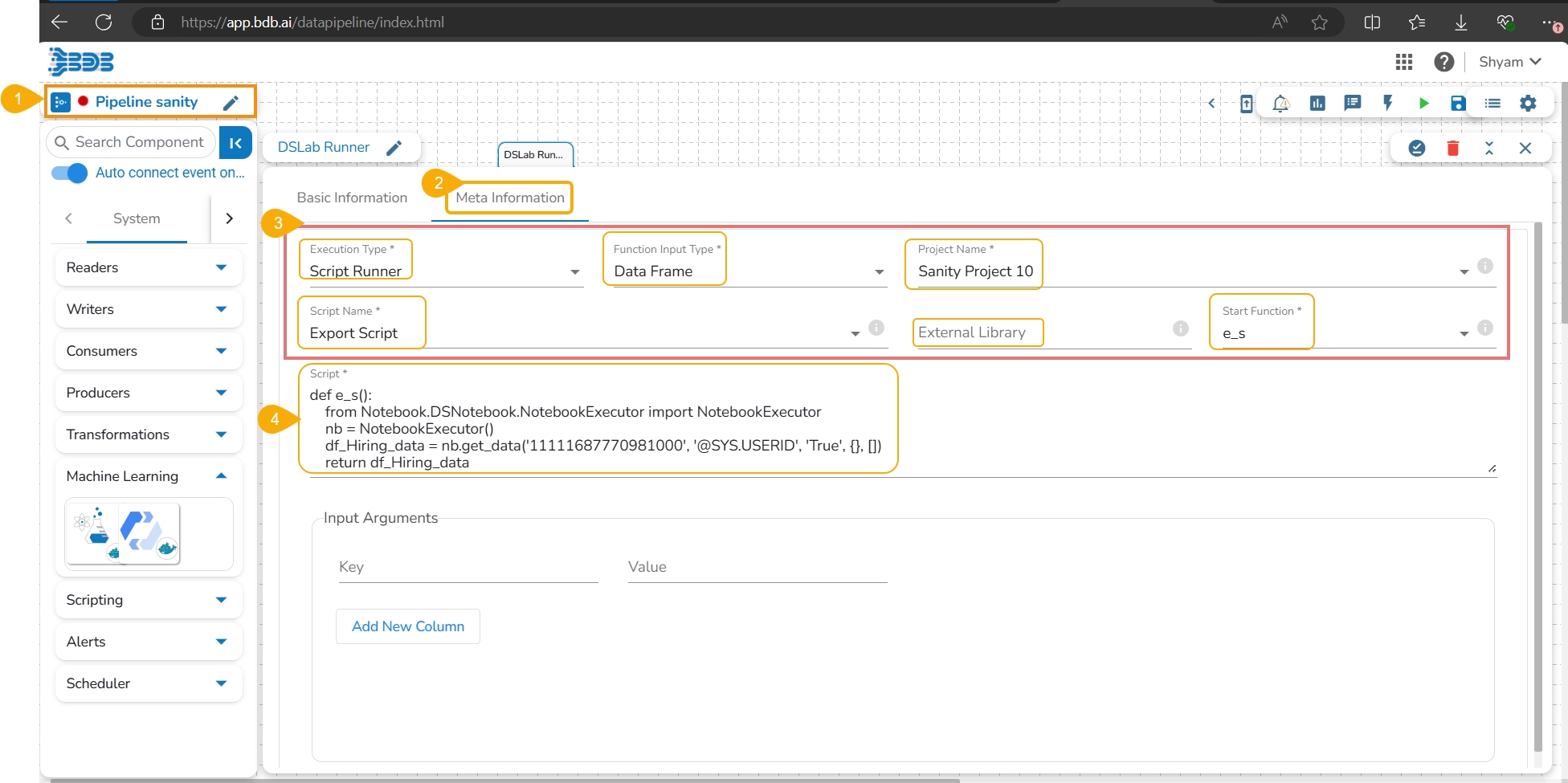

Navigate to a Data Pipeline containing the DS Lab Runner component.

Open the Meta Information tab of the DS Lab Runner component.

Provide the following information to access the exported script:

Execution Type: Select the Script Runner option.

Function Input Type: Select either Data Frame or List.

Project Name: Select the Project name using the drop-down menu.

Script Name: Select the script name using the drop-down menu.

External Library: Enter the external library.

Start Function: Select a function name using the drop-down menu.

The exported script is displayed in the Script section.
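If one start function should work with either Function Input Type setting, one option is to normalize the input first. This sketch assumes the runner passes a pandas DataFrame when Data Frame is selected and a list of dictionaries when List is selected; neither assumption is confirmed by this page, and `to_records_list` and `start` are hypothetical names. `DataFrame.to_dict(orient="records")` is the standard pandas call for turning rows into dictionaries.

```python
def to_records_list(data):
    """Return the input as a list of dicts, whether it is a list or a DataFrame."""
    if isinstance(data, list):
        return data
    # Duck typing for a pandas DataFrame, so this sketch does not import pandas
    if hasattr(data, "to_dict"):
        return data.to_dict(orient="records")
    raise TypeError(f"Unsupported input type: {type(data).__name__}")


def start(data):
    """Hypothetical Start Function: count rows per 'status' value."""
    counts = {}
    for row in to_records_list(data):
        status = row.get("status", "unknown")
        counts[status] = counts.get(status, 0) + 1
    return counts


if __name__ == "__main__":
    print(start([{"status": "ok"}, {"status": "fail"}, {}]))
```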

The options available for a Notebook are explained in this section.

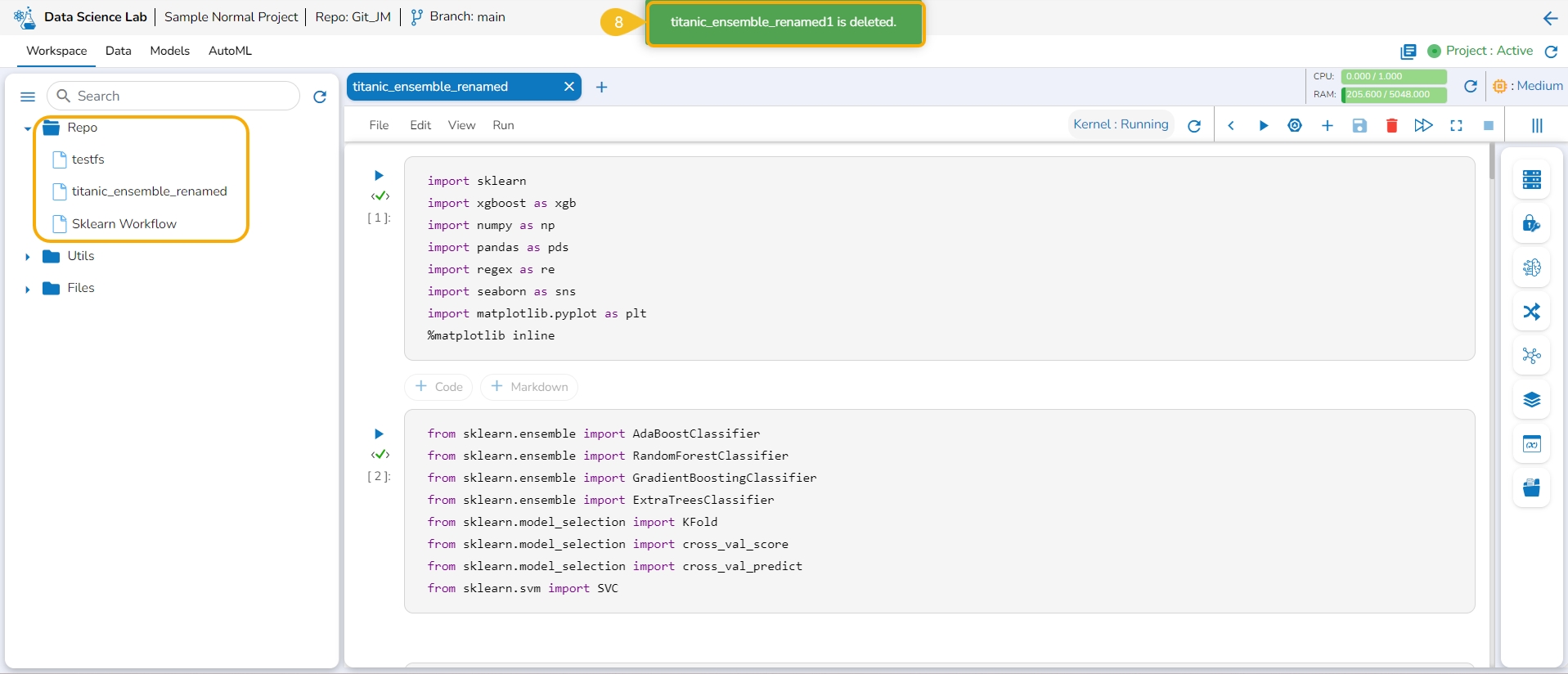

This page explains the steps to delete a Notebook.

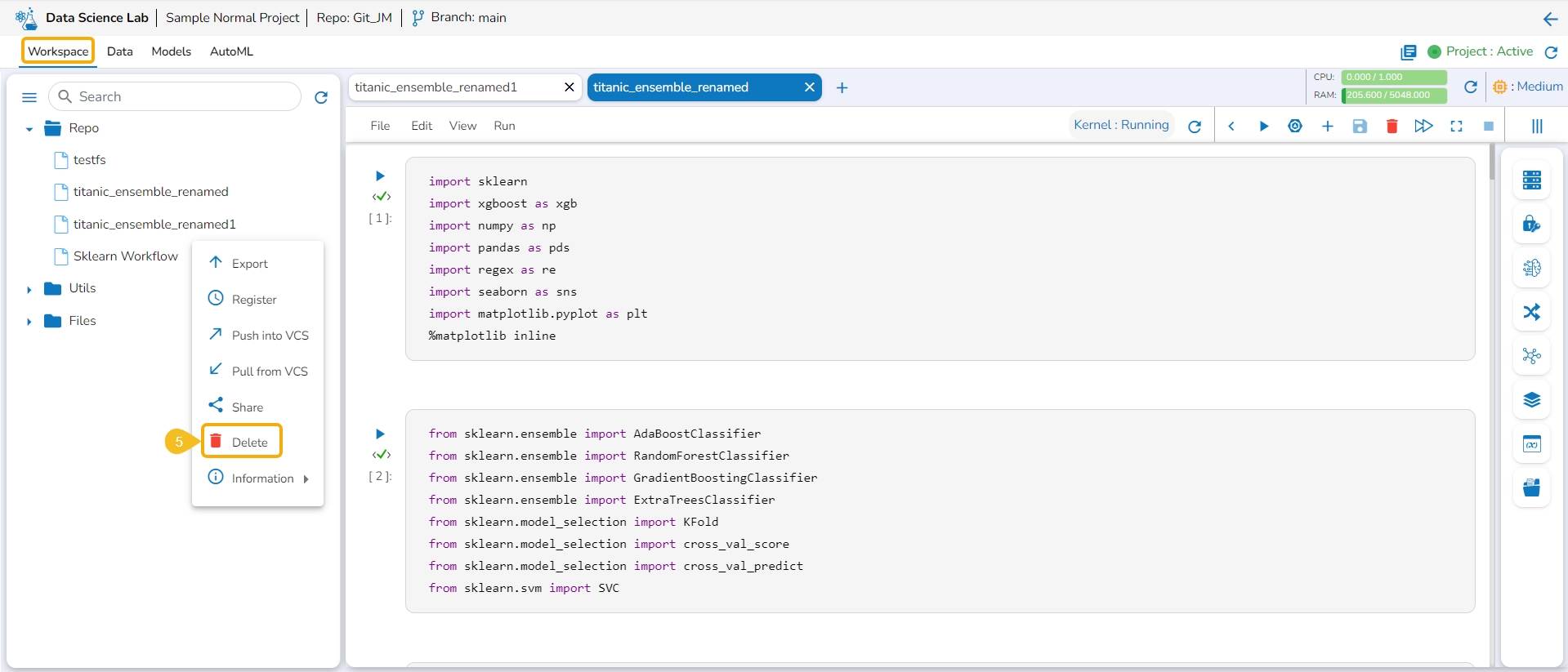

Navigate to the Workspace tab.

Open the Repo folder.

Select a Notebook from the Repo folder.

Click the ellipsis icon for the selected Notebook.

A Context menu appears. Click the Delete option from the Context menu.

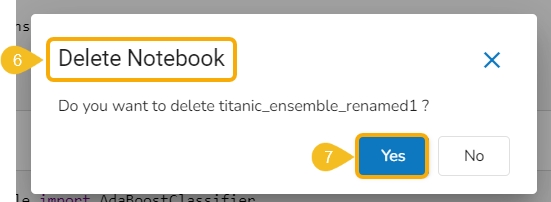

The Delete Notebook dialog box appears for the deletion confirmation.

Click the Yes option.

A notification appears confirming that the selected Notebook has been deleted. The Notebook is removed from the Repo folder.

This page describes the steps to share a Notebook script and to access it as a shared Notebook.

Using this feature, the user can share a DSL Notebook across teams.

Check out the walk-through on sharing a Notebook.

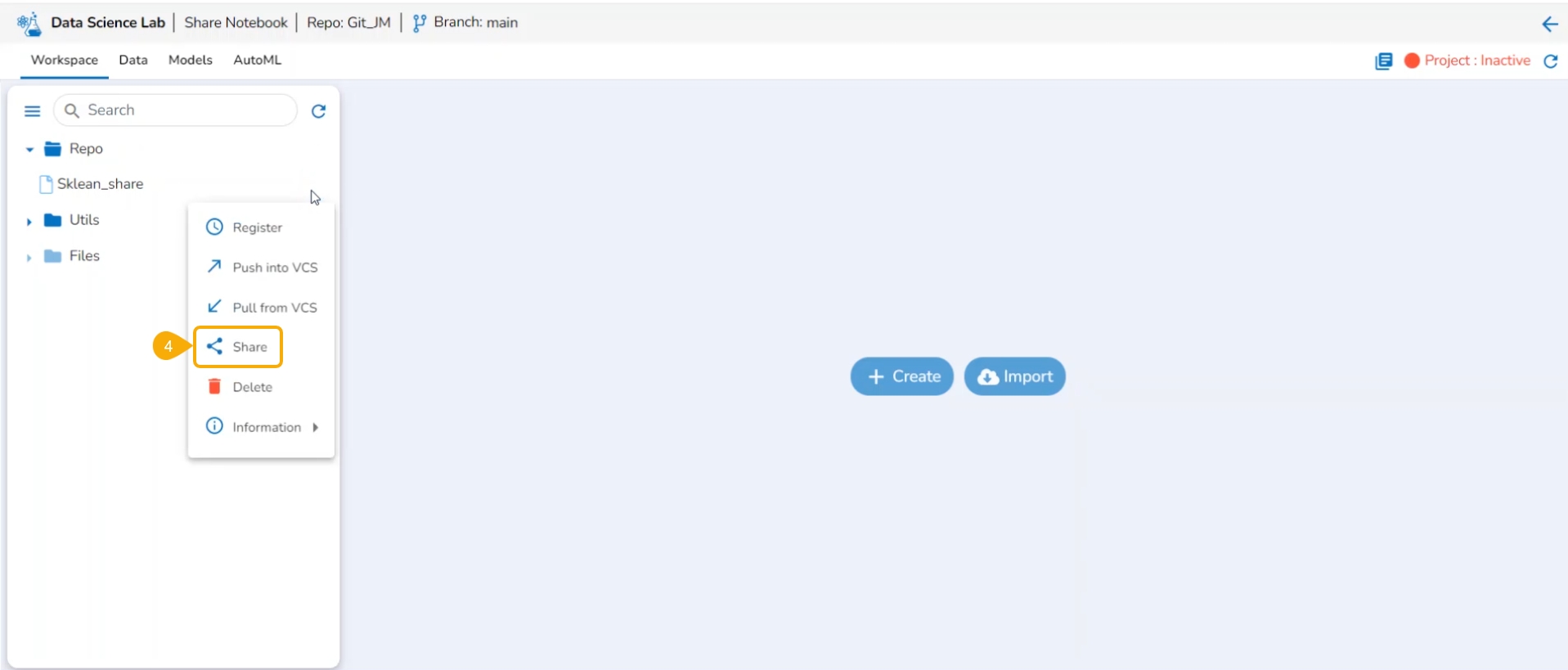

Navigate to the Workspace tab for a DS Lab project.

Select a Notebook from the list.

Click the Ellipsis icon.

A context menu opens for the selected Notebook. Click the Share option from the context menu.

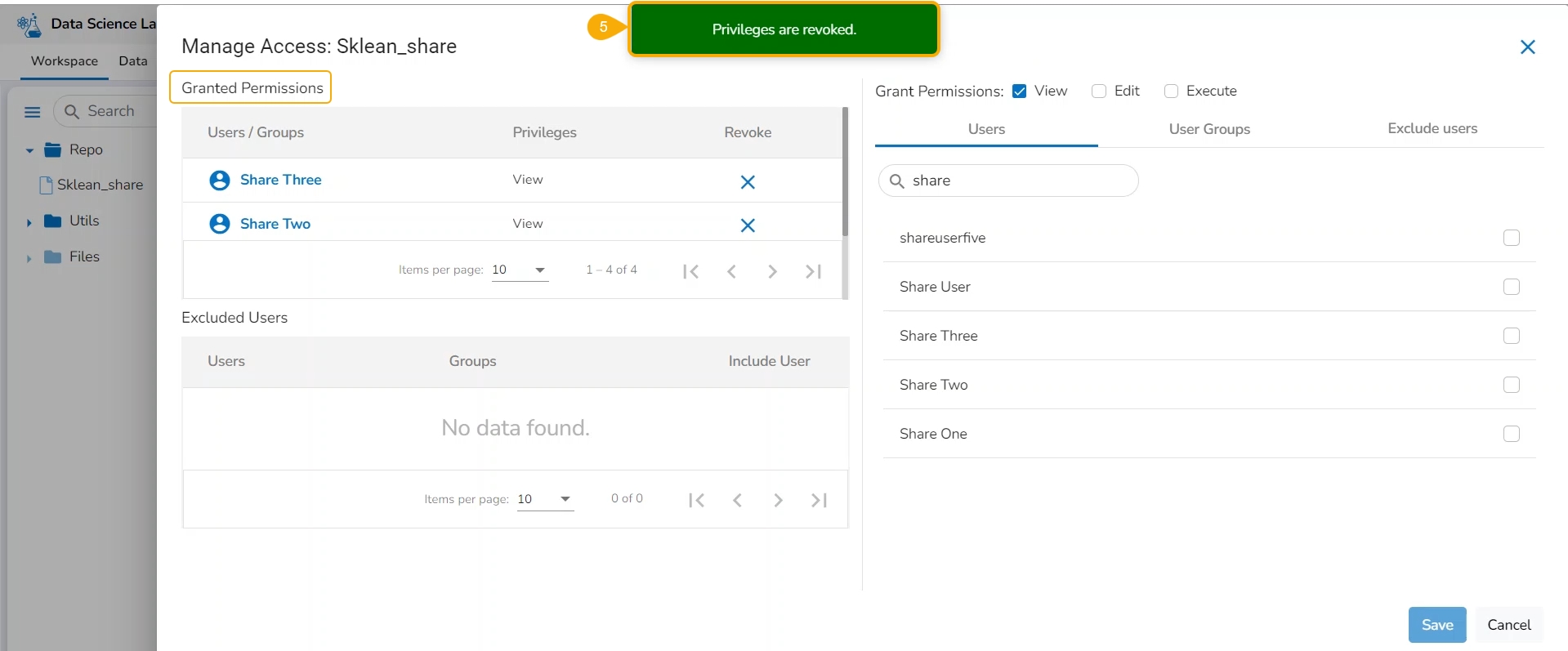

The Manage Access window opens for the selected Notebook.

Select the permissions to be granted to users/groups using the checkboxes.

The Users, User Groups, and Exclude Users tabs appear. Select either the Users tab or the User Groups tab.

Search for a specific user or user group to share the Notebook.

Select a user or user group from the respective tab (the image shows the Users tab).

Click the Save option.

A notification message appears confirming the share action.

The selected user is added to the Granted Permissions section.

Check out the illustration on accessing a shared Notebook.

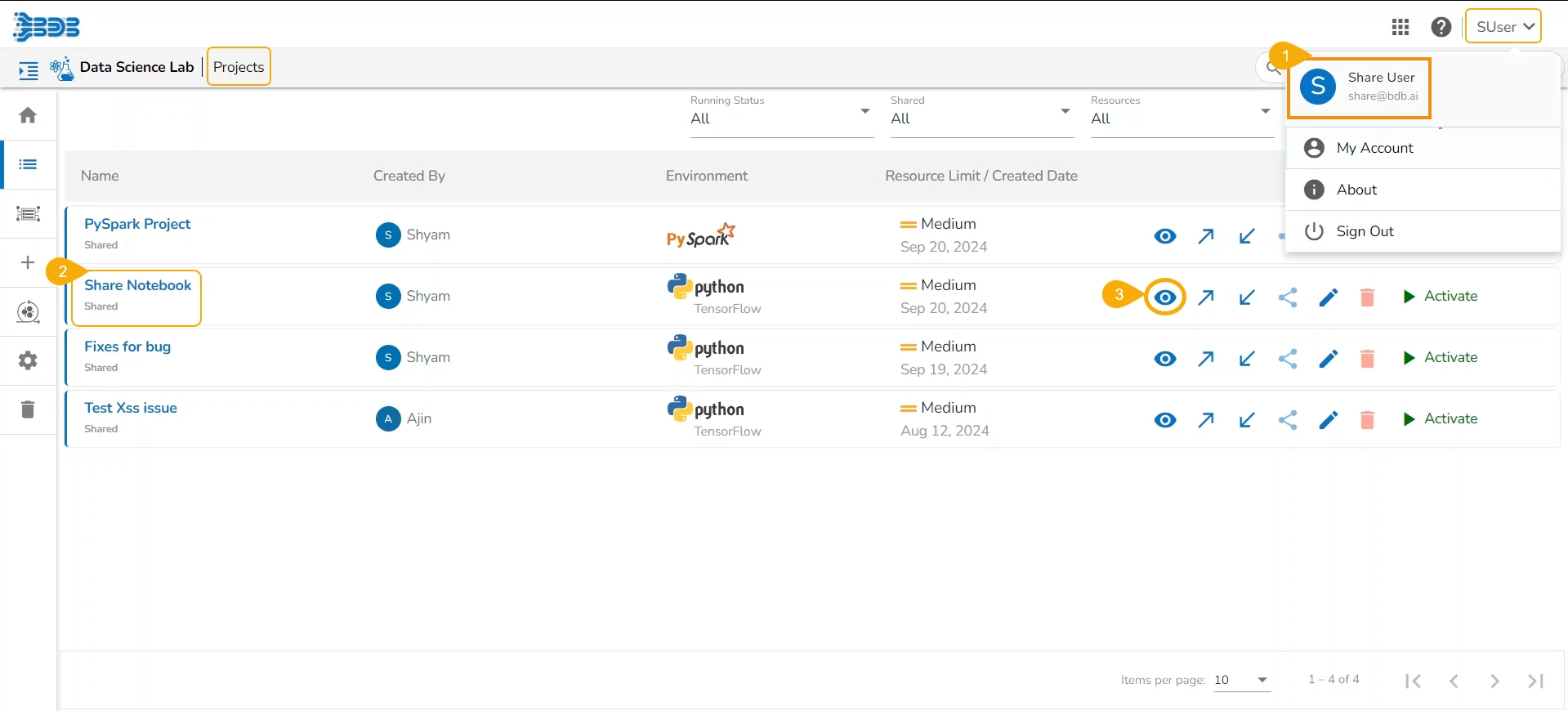

Log in to the Platform with the credentials of the user with whom the Notebook was shared, and navigate to the Projects page of the DS Lab module.

The shared project is marked as shared on the Projects page.

Click the View icon to open the project.

The Workspace tab opens by default for the shared Project.

The shared Notebook is listed under the Repo folder.

Open the Notebook Actions menu. The Share and Delete options are disabled for a shared Notebook.

Please Note: A user with whom a DSL Notebook has been shared cannot re-share or delete it, regardless of the permission level (View/Edit/Execute).

You can revoke the permissions shared with a user or user group by using the Revoke Permissions icon.

Check out the illustration on revoking the granted permissions.

Navigate to the Manage Access window for a shared Notebook.

The Granted Permissions section lists all the users or user groups to whom the Notebook has been shared.

Select a user or user group from the list.

Click the Revoke Privileges icon.

A confirmation dialog box appears.

A notification appears, and the shared privileges are revoked for the selected user/user group. The user/user group is removed from the Granted Permissions list.

The user can exclude specific users from accessing a shared Notebook while keeping the permissions for the other users of the same group.

Check out the illustration on excluding a user/ user group from the shared privileges of a Notebook.

Navigate to the Manage Access window for a shared Notebook.

Grant Permissions to the user(s)/ user group(s) using the checkboxes.

Open the User Groups tab.

Select a User Group from the displayed list.

Use the checkbox to select it for sharing the Notebook.

Navigate to the Exclude Users tab.

Select a user from the displayed list and use the checkbox to exclude that user from the shared permissions.

Click the Save option.

A notification appears confirming the share action.

The selected user is excluded from the shared Notebook permissions.

The Notebook is shared with the rest of the users in that group.

Check out the illustration on including an excluded user again so that they can access a shared Notebook.

Navigate to the Excluded Users section.

Select a user from the displayed list.

Click the Include User icon.

The Include User confirmation dialog box appears.

Click the Yes option.

A notification appears confirming that the action succeeded.

The selected user is included in the group again with the shared permissions for the Notebook, and is removed from the Excluded Users list.
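The exclude and include steps above can be pictured as set arithmetic: members of a shared group get access unless they are on the Excluded Users list, and Include User takes them off that list. This is only an illustrative model of the behavior described on this page, not the platform's implementation; the class, user, and group names are hypothetical, and it assumes exclusion affects only access granted through a group.

```python
from dataclasses import dataclass, field


@dataclass
class NotebookShare:
    """Illustrative model of a Notebook shared with users and user groups."""
    users: set = field(default_factory=set)     # users granted access directly
    groups: dict = field(default_factory=dict)  # group name -> set of members
    excluded: set = field(default_factory=set)  # Excluded Users list

    def exclude(self, user):
        self.excluded.add(user)

    def include(self, user):
        # Include User: remove the user from the Excluded Users list
        self.excluded.discard(user)

    def effective_users(self):
        # Assumption: exclusion only removes access that comes from a group
        group_members = set().union(*self.groups.values())
        return self.users | (group_members - self.excluded)


share = NotebookShare(groups={"analysts": {"asha", "ben", "chen"}})
share.exclude("ben")
print(sorted(share.effective_users()))
```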

Please Note:

If the project is shared with a user group, all the users in that group appear under the Exclude Users tab.

When a Notebook is shared using the Share function, its Project is shared by default as well.

A project that is shared by default along with a Notebook remains active, so the user can access and open the Notebook.

This page explains the step-by-step process for Notebook migration and the Push into VCS functionality.

A Notebook script can be migrated across spaces and servers using the Push into GIT option.

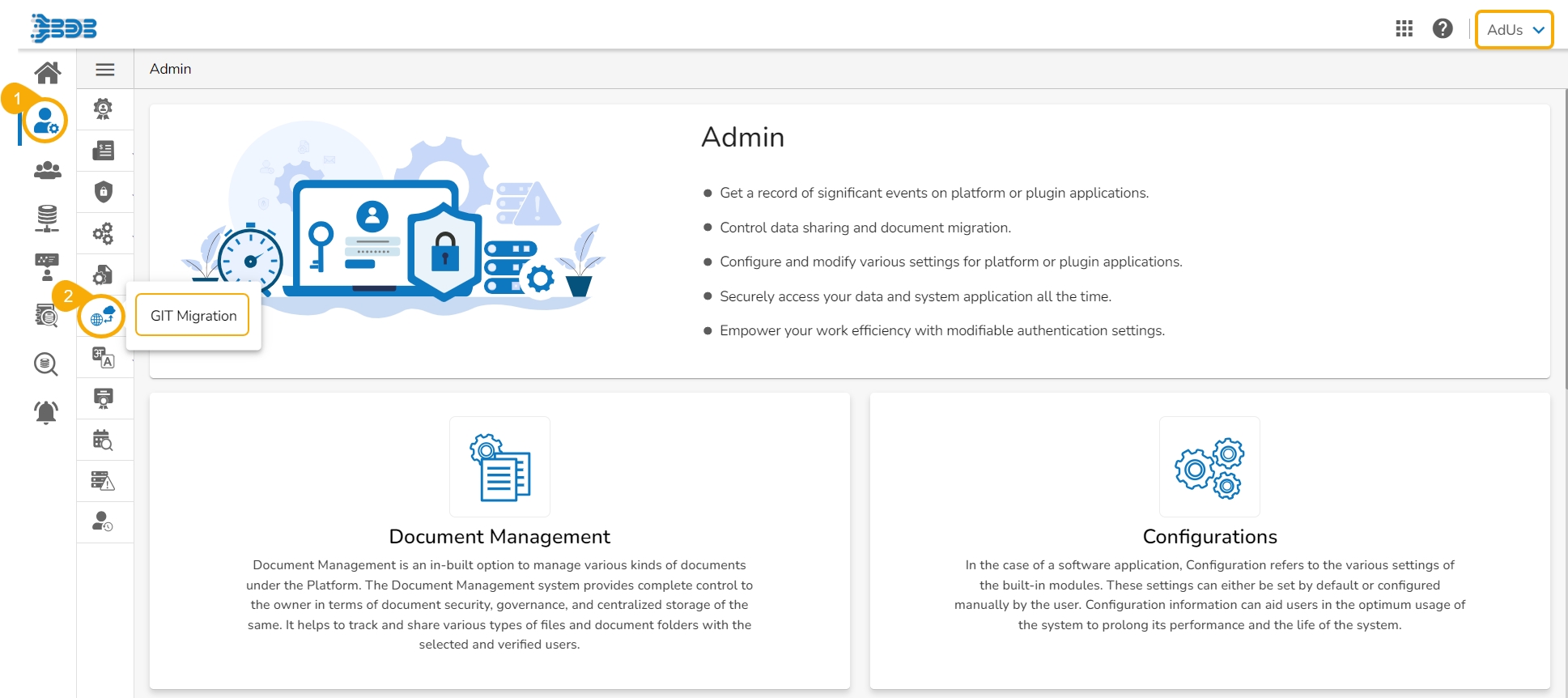

Prerequisites: Before migrating a DS Lab script or model, configure the Data Science Lab Migration settings using the Version Control option in the Admin module.

Check out the walk-through on how to migrate/ export a Notebook script to the GIT Repository.

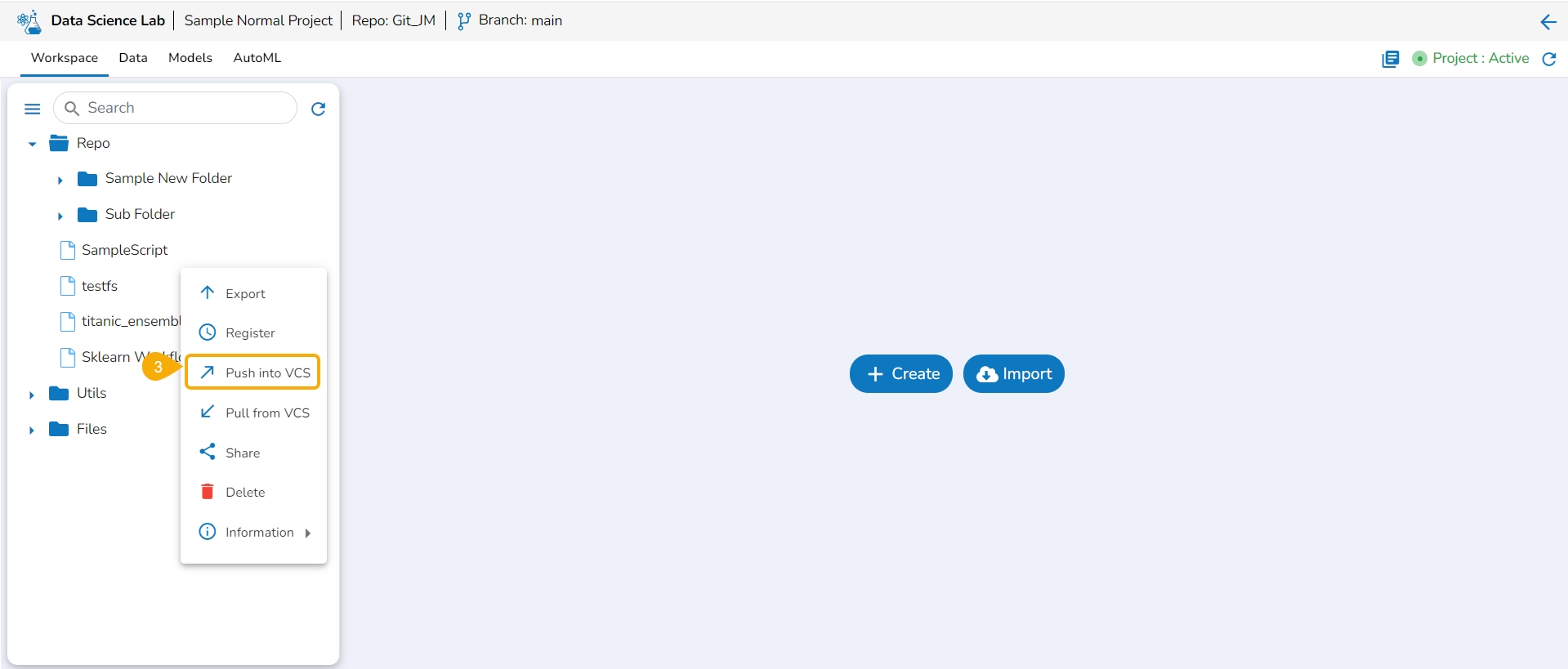

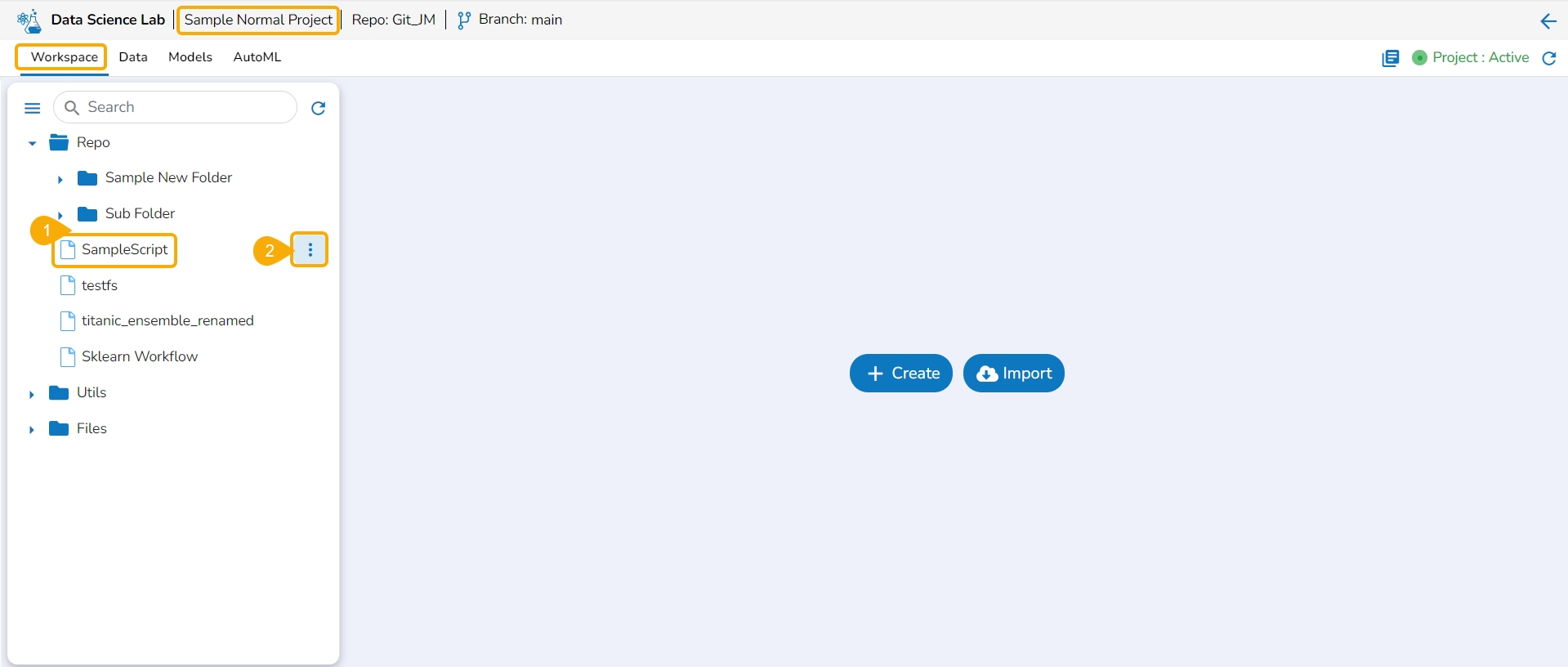

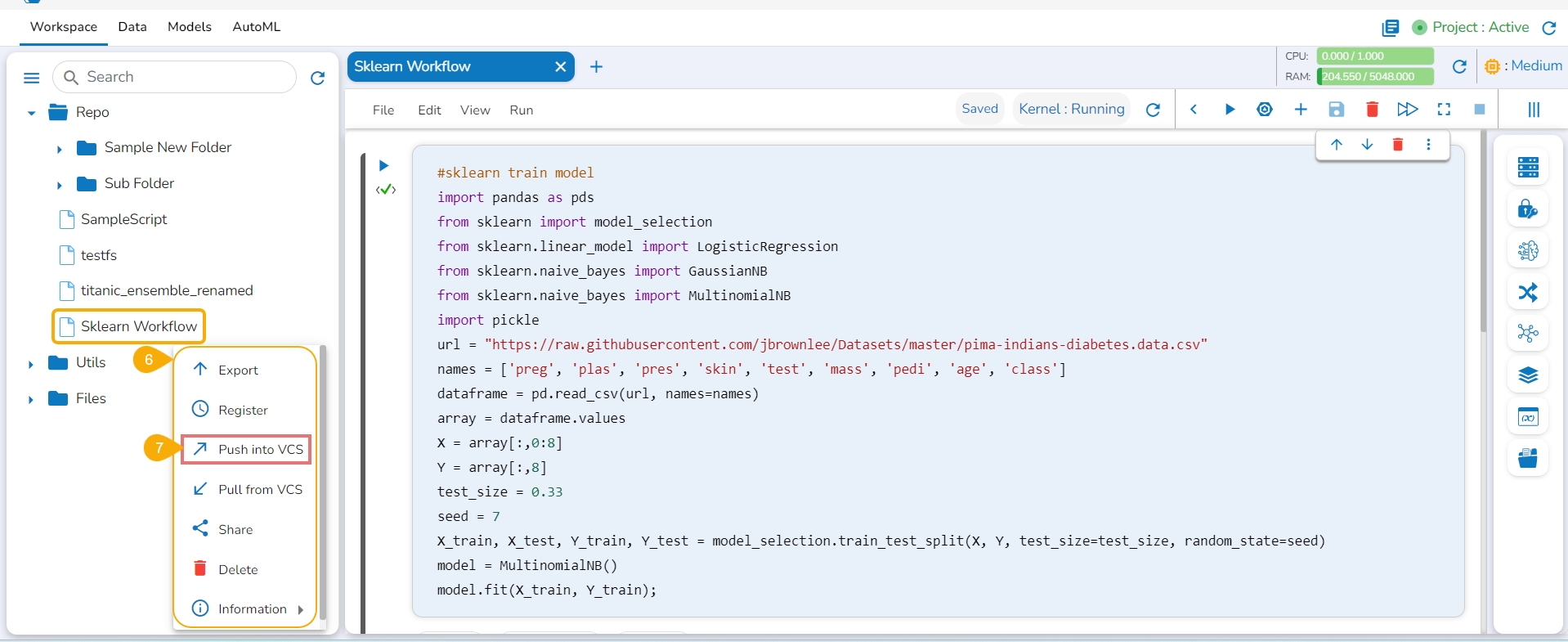

Select a Notebook from the Workspace tab.

Click the Ellipsis icon to open the list of actions for the Notebook.

Click the Push into VCS option for the selected Notebook.

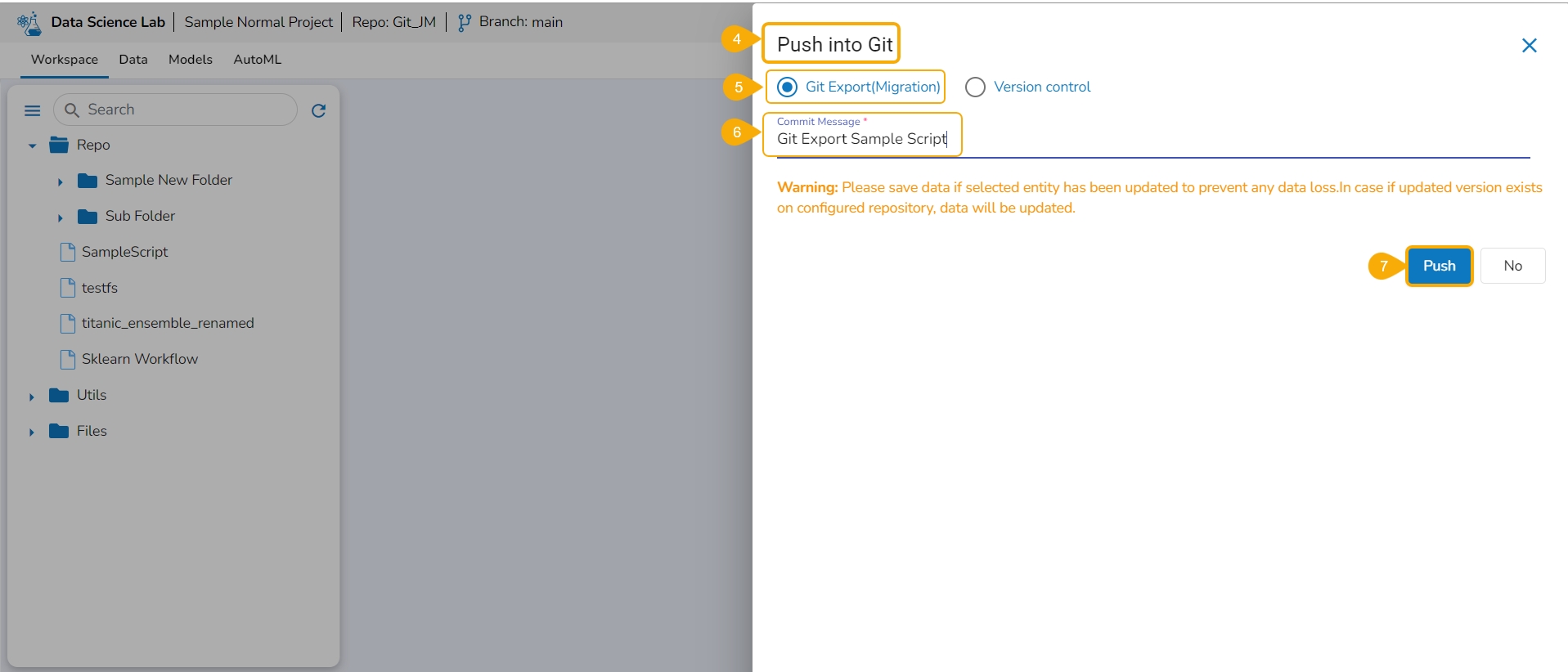

The Push into Git drawer opens.

Select the Git Export (Migration) option.

Provide a Commit Message in the given space.

Click the Push option.

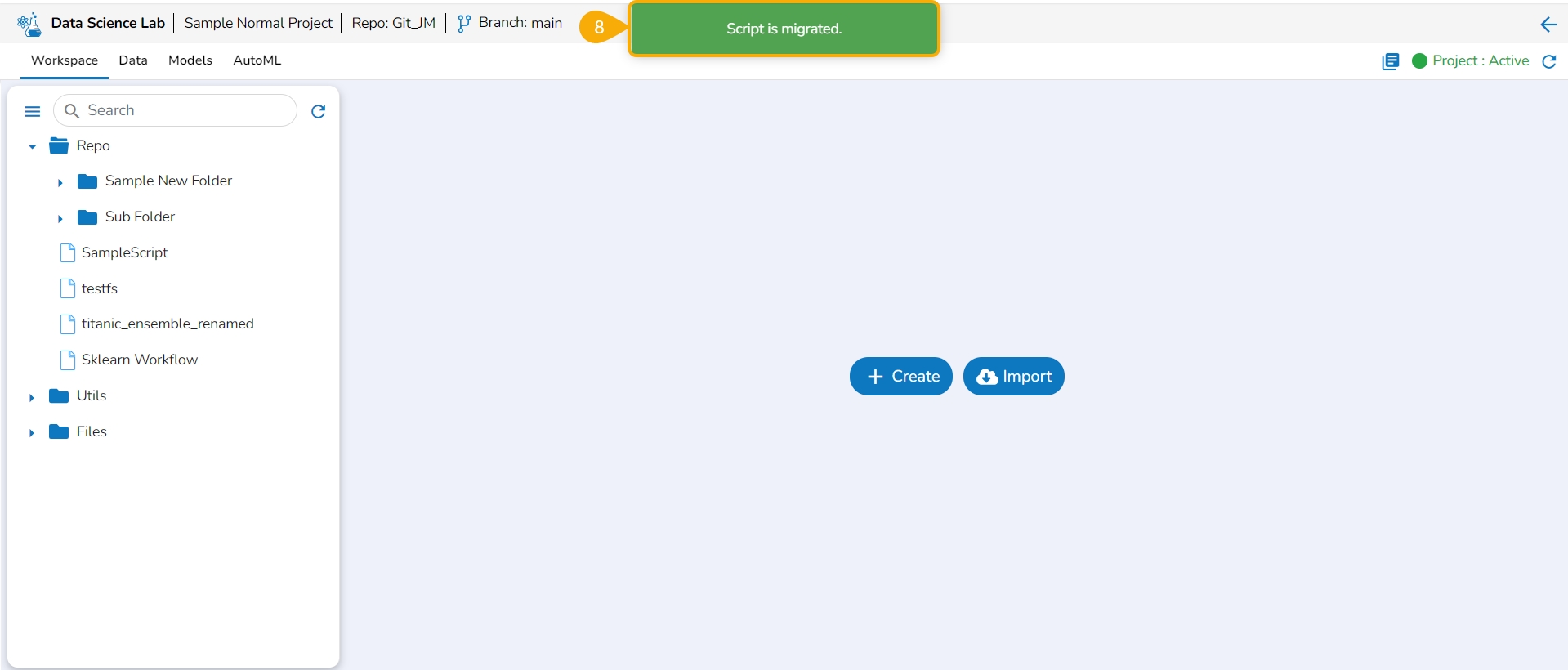

The selected version of the Notebook script is migrated to the Git repository, and a message notifies the user.

After exporting a DSL script, you can sign in to another user account on a different space or server and import the DSL script.

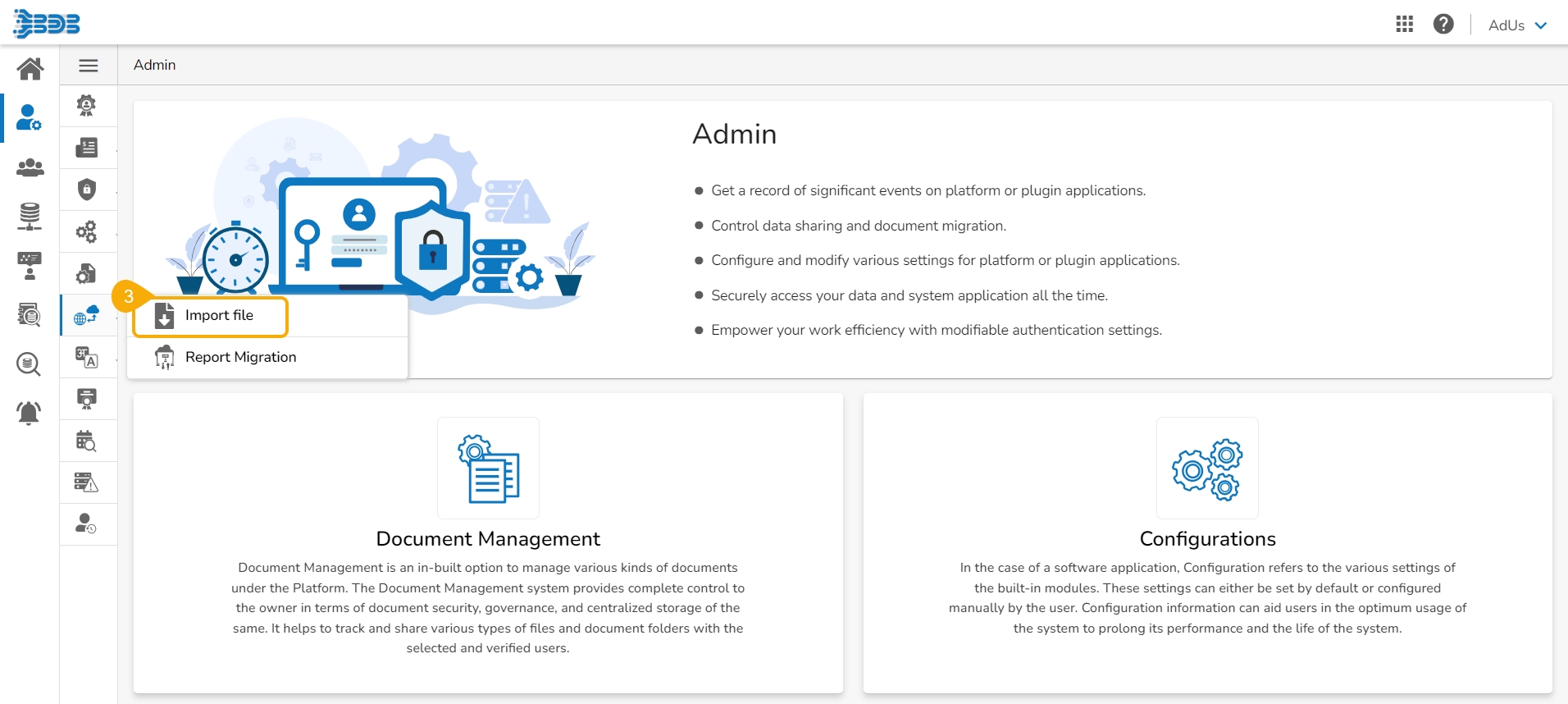

Open the Admin module from the Apps menu.

Select the GIT Migration option from the admin menu panel.

Click the Import File option.

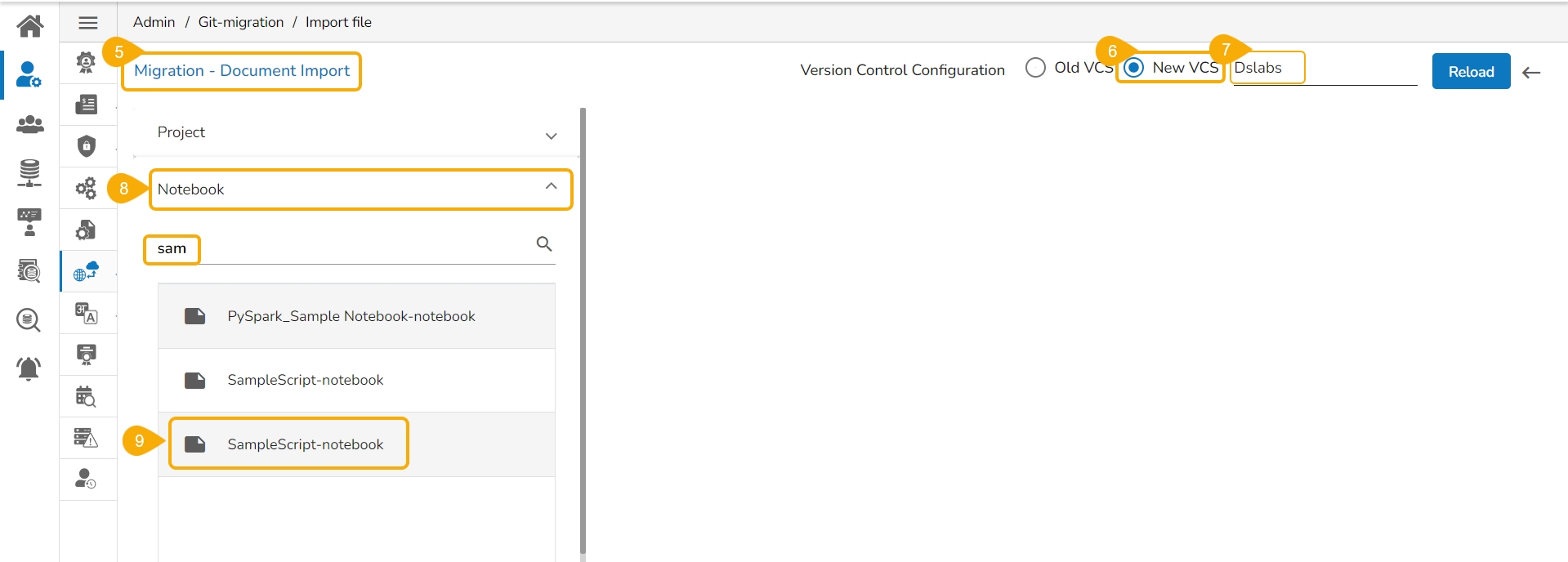

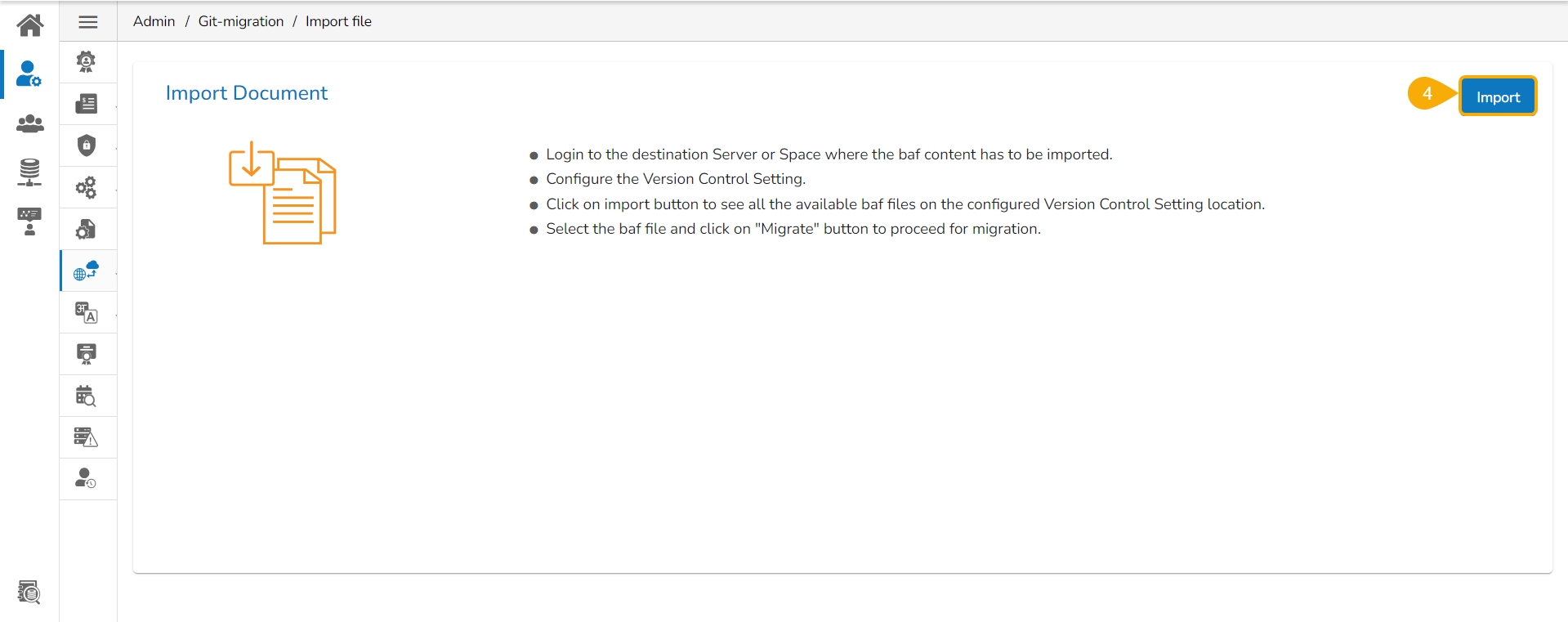

The Import Document page opens. Click the Import option, as shown in the following image.

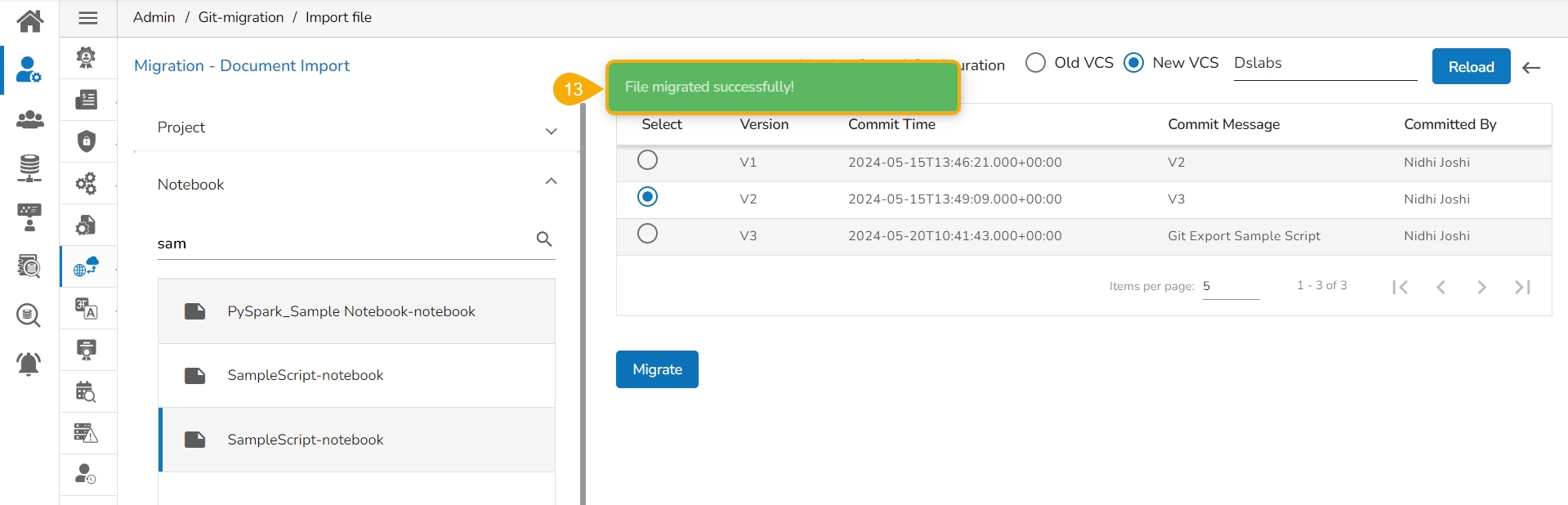

The Migration - Document Import page opens.

Select New VCS as the Version Control Configuration.

Select the DSLab option from the module drop-down menu.

Select the Notebook option from the left side panel.

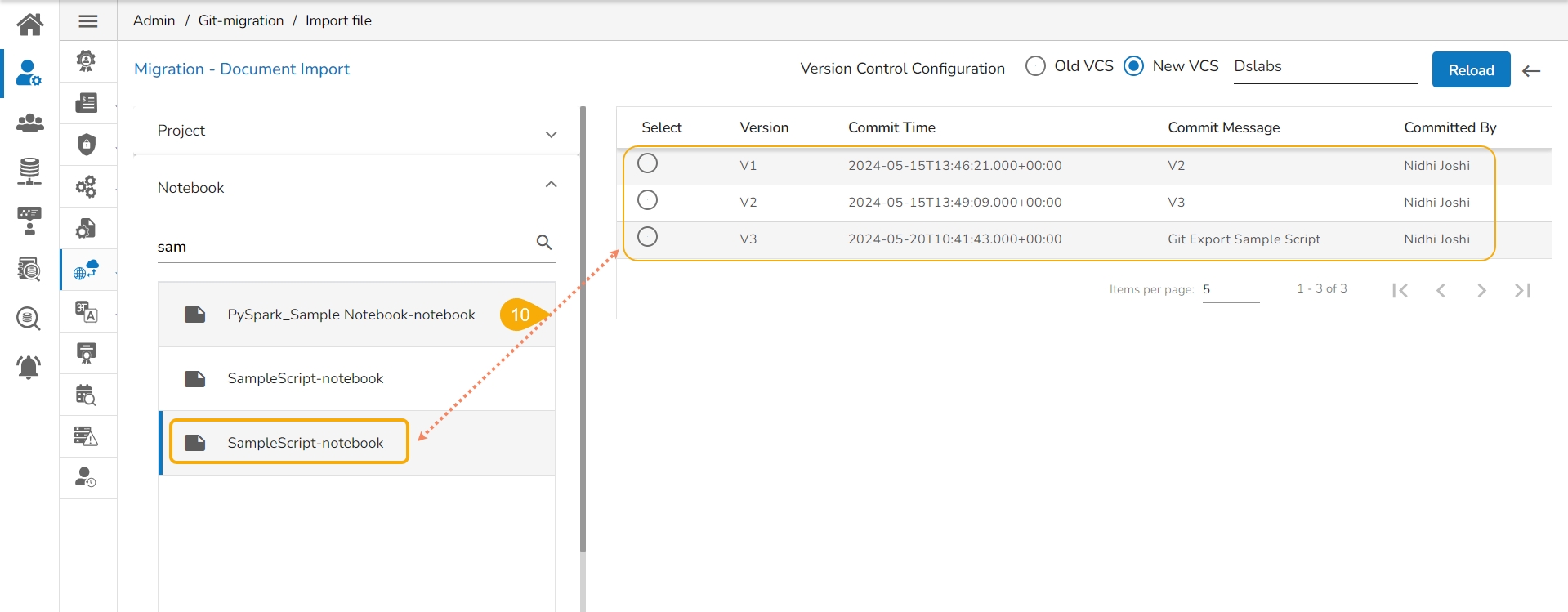

All the migrated Notebooks are listed. The user can use the Search bar to filter the list of exported Notebooks.

Select a Notebook from the displayed list to open the available versions of that Notebook.

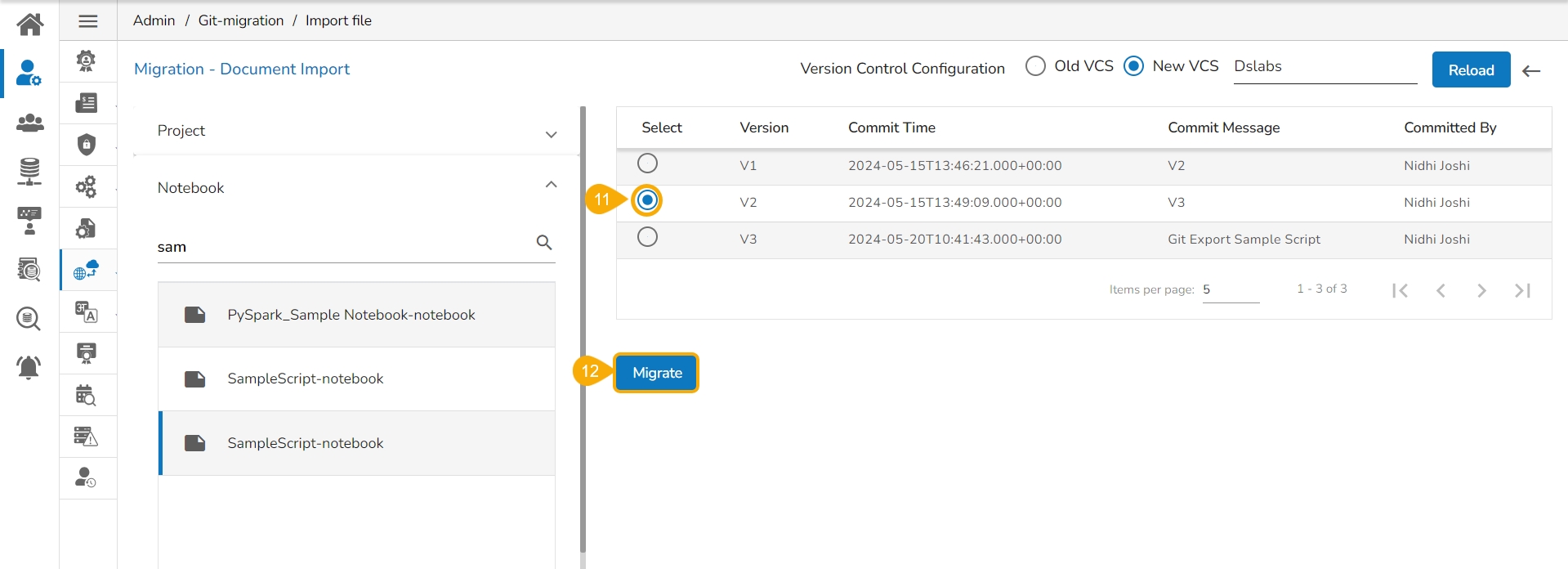

Select a Version that you wish to import.

Click the Migrate option.

A notification message appears informing that the file has been migrated.

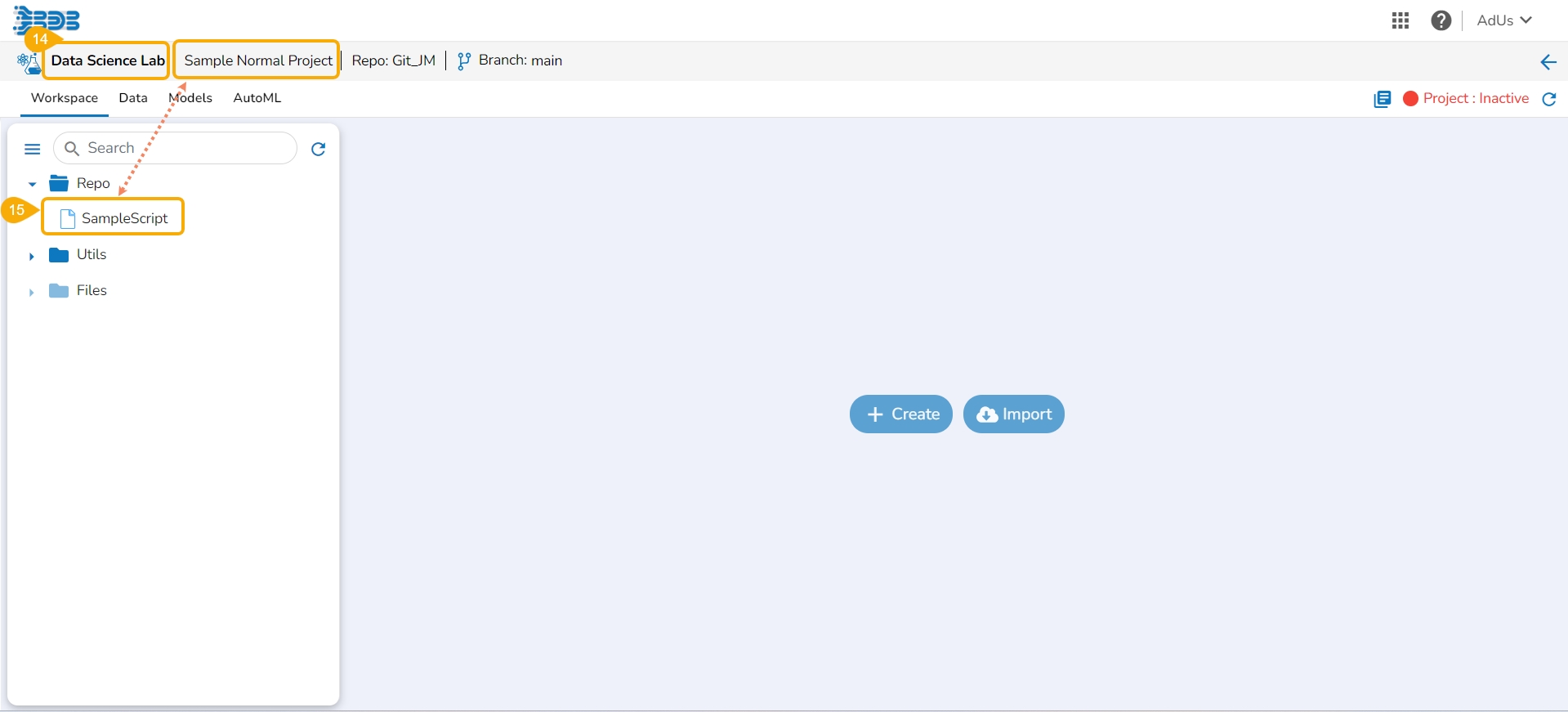

Open the Data Science Lab module and navigate to the List Project page.

The imported Notebook is listed under the corresponding DSL Project.

Please Note:

Only scripts that have been exported to the Data Pipeline can be migrated.

When a DSL Notebook/Script is migrated using the Export to Git functionality, the Project in which the Notebook was created is migrated as well.

When a DSL Notebook is migrated, the utility files that belong to the same Project are migrated as well.

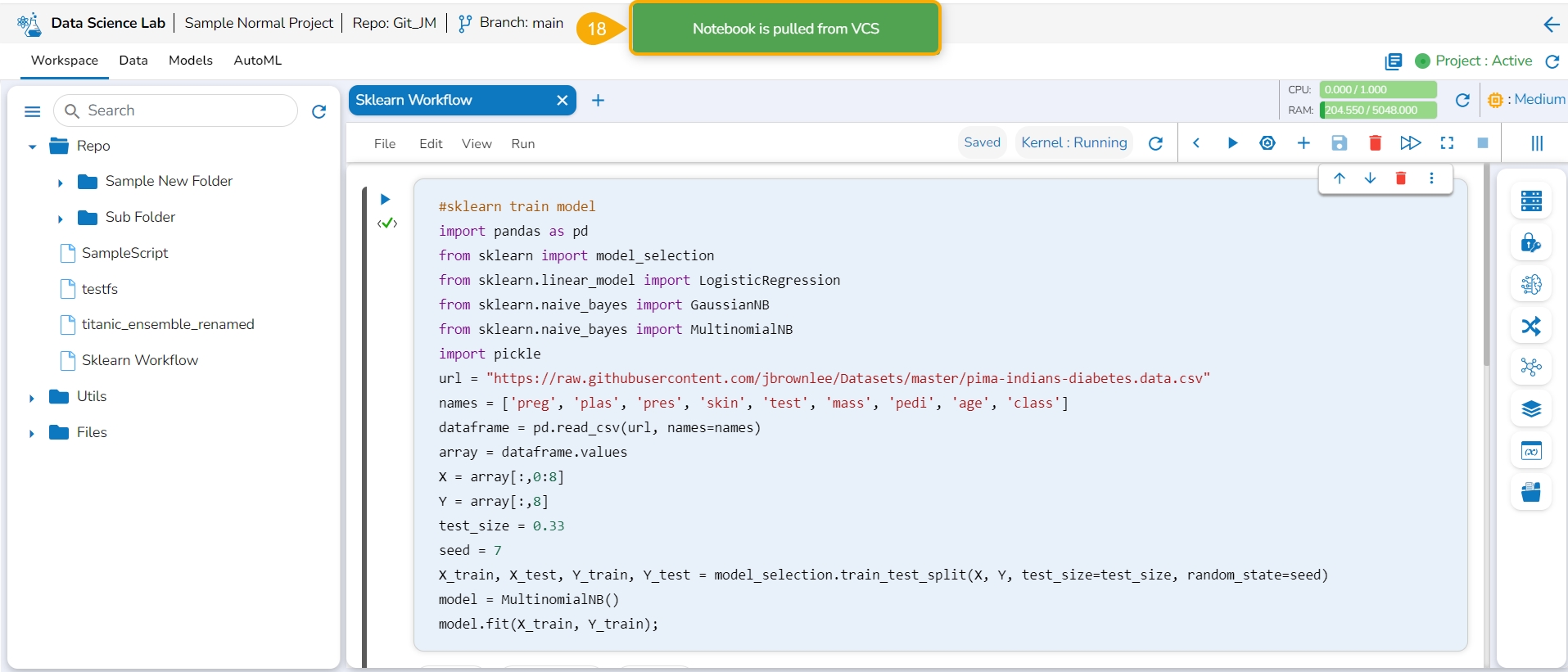

Check out the illustration to understand the Version Controlling steps for a Notebook file.

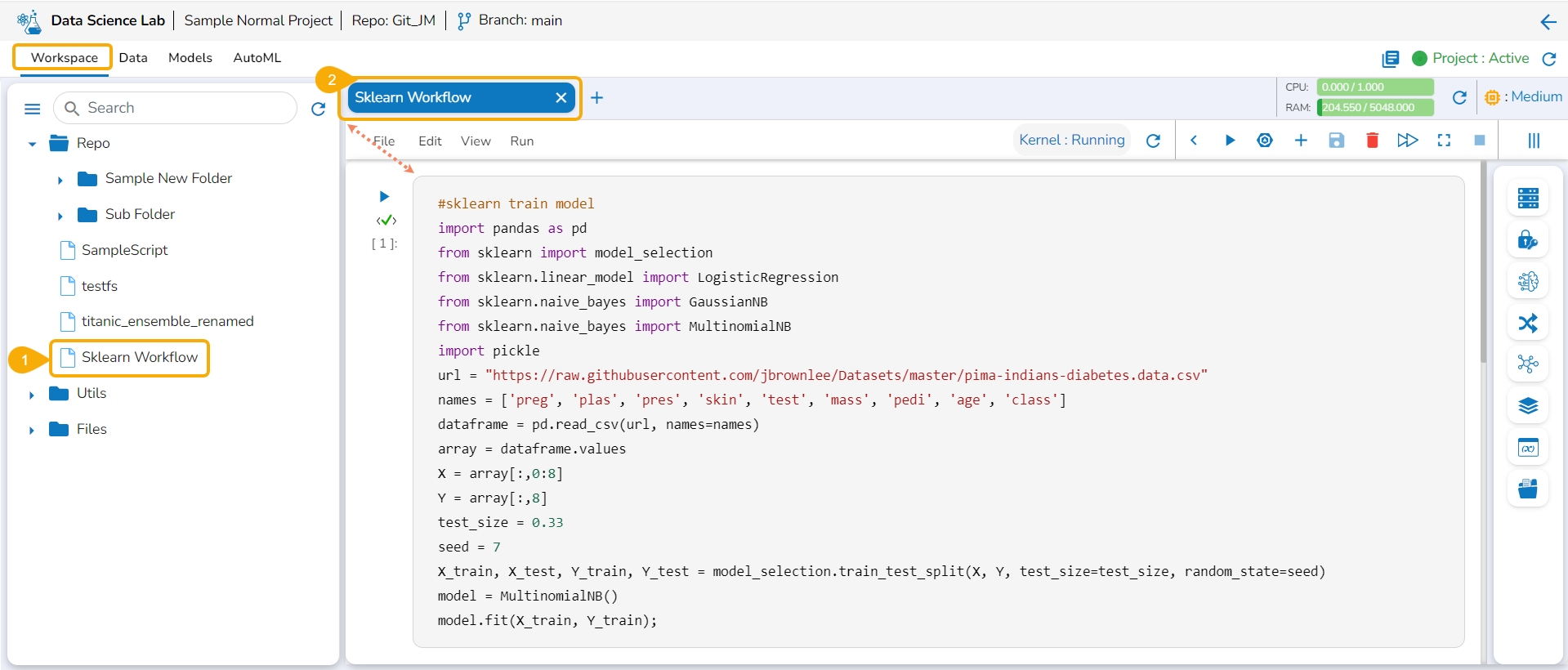

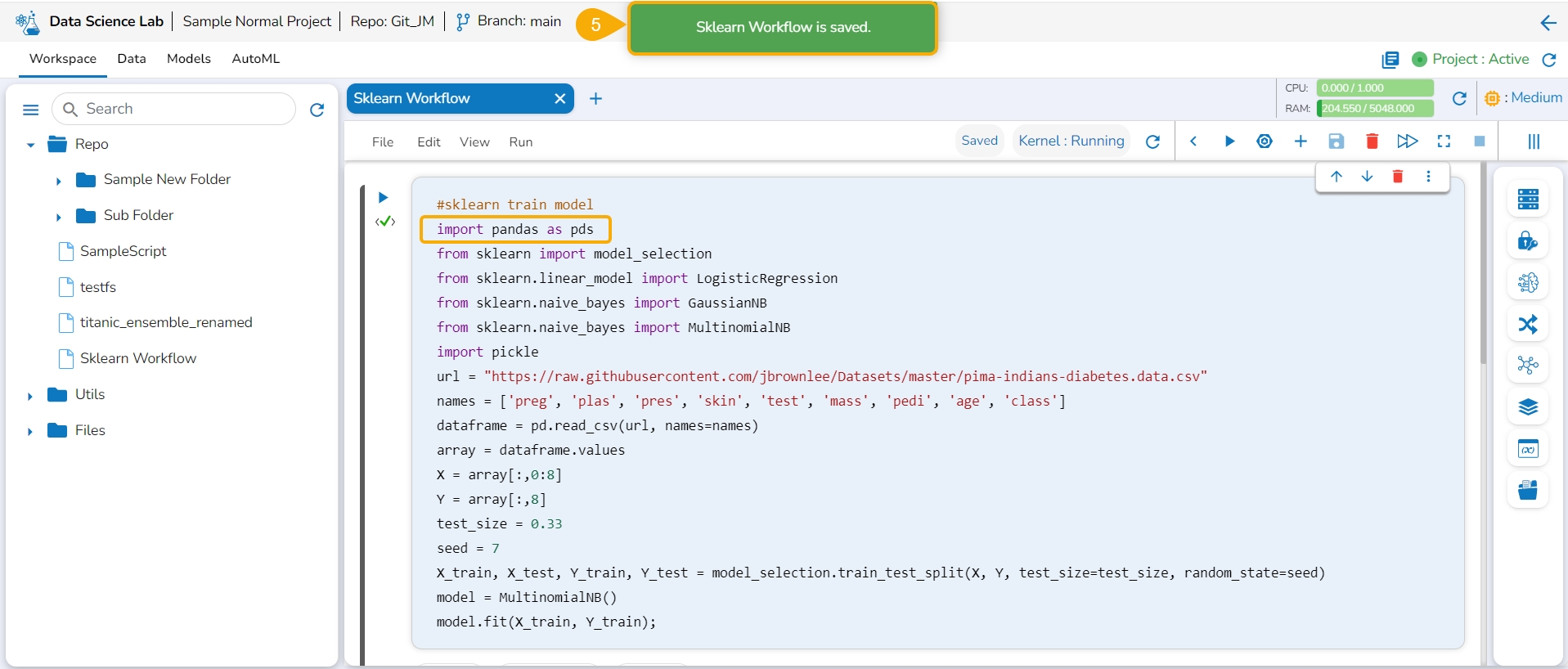

Select a Notebook file from the Workspace tab.

Open the Notebook file.

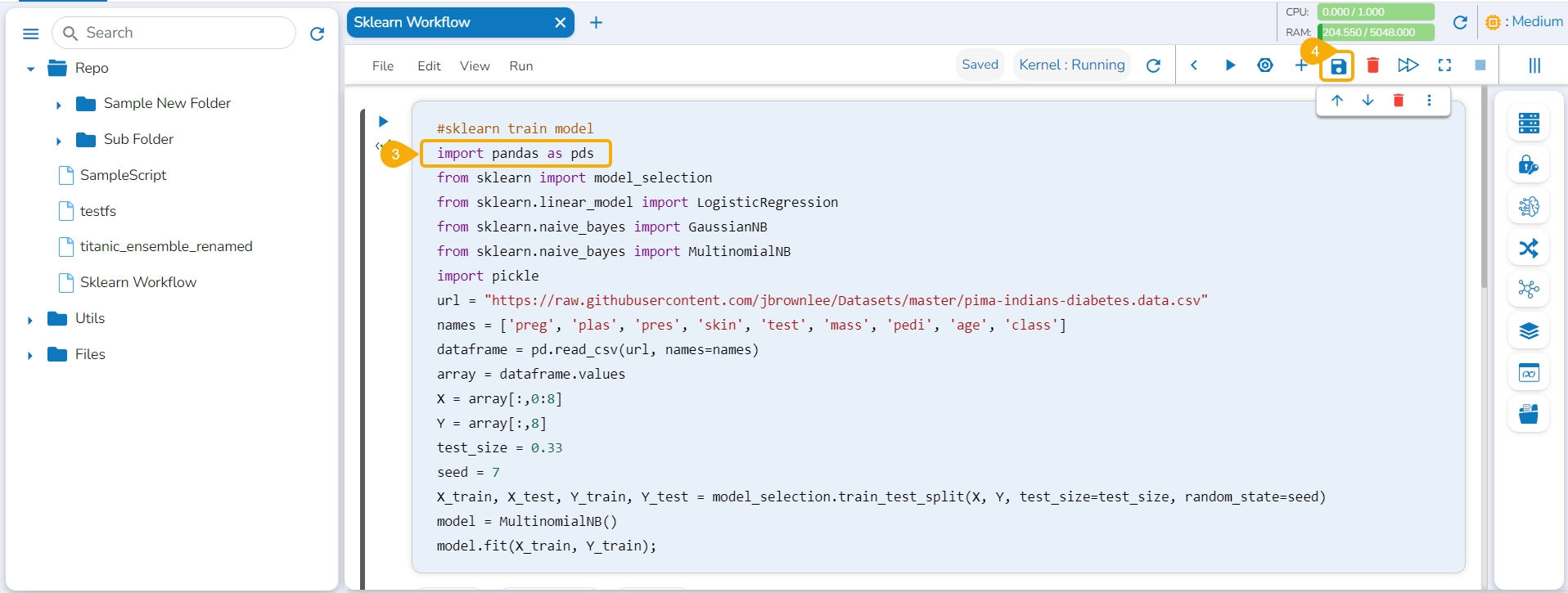

Modify the Notebook script.

Click the Save icon.

A message appears notifying the user that the changes have been saved.

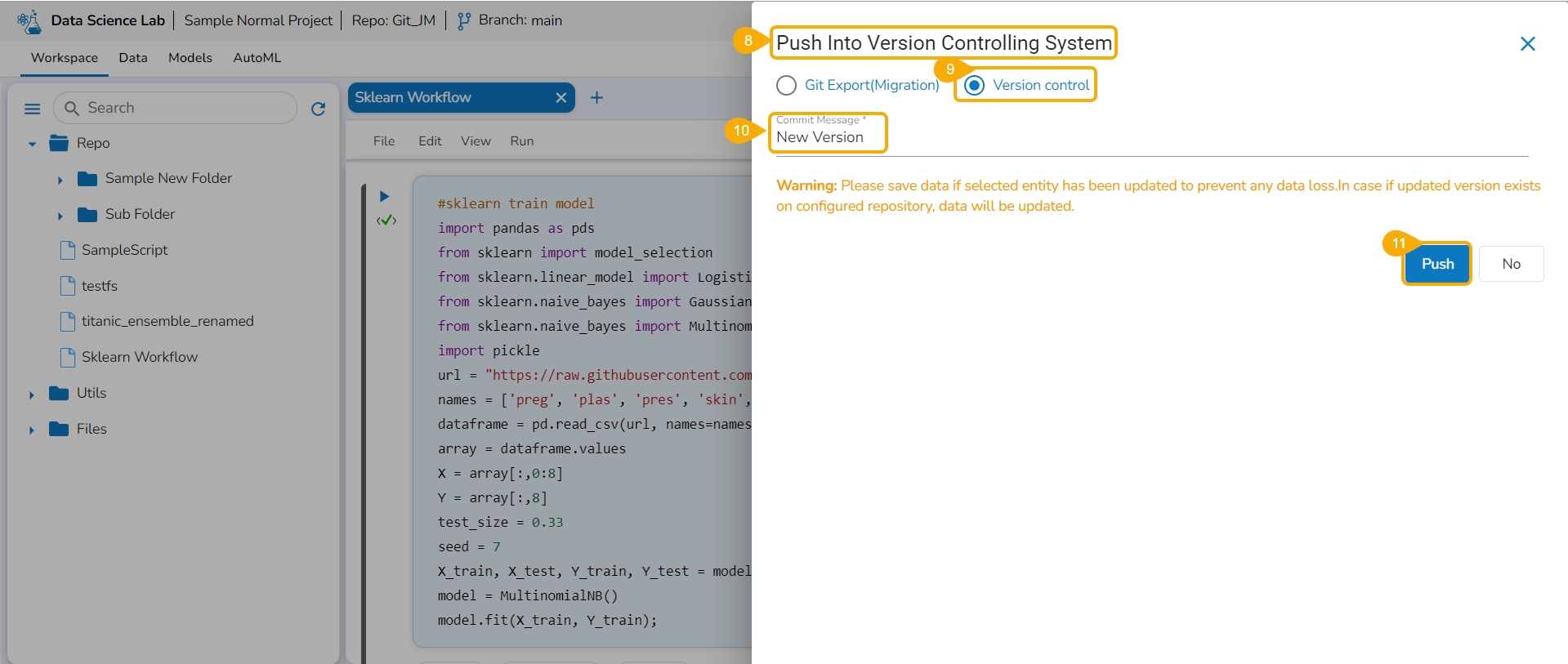

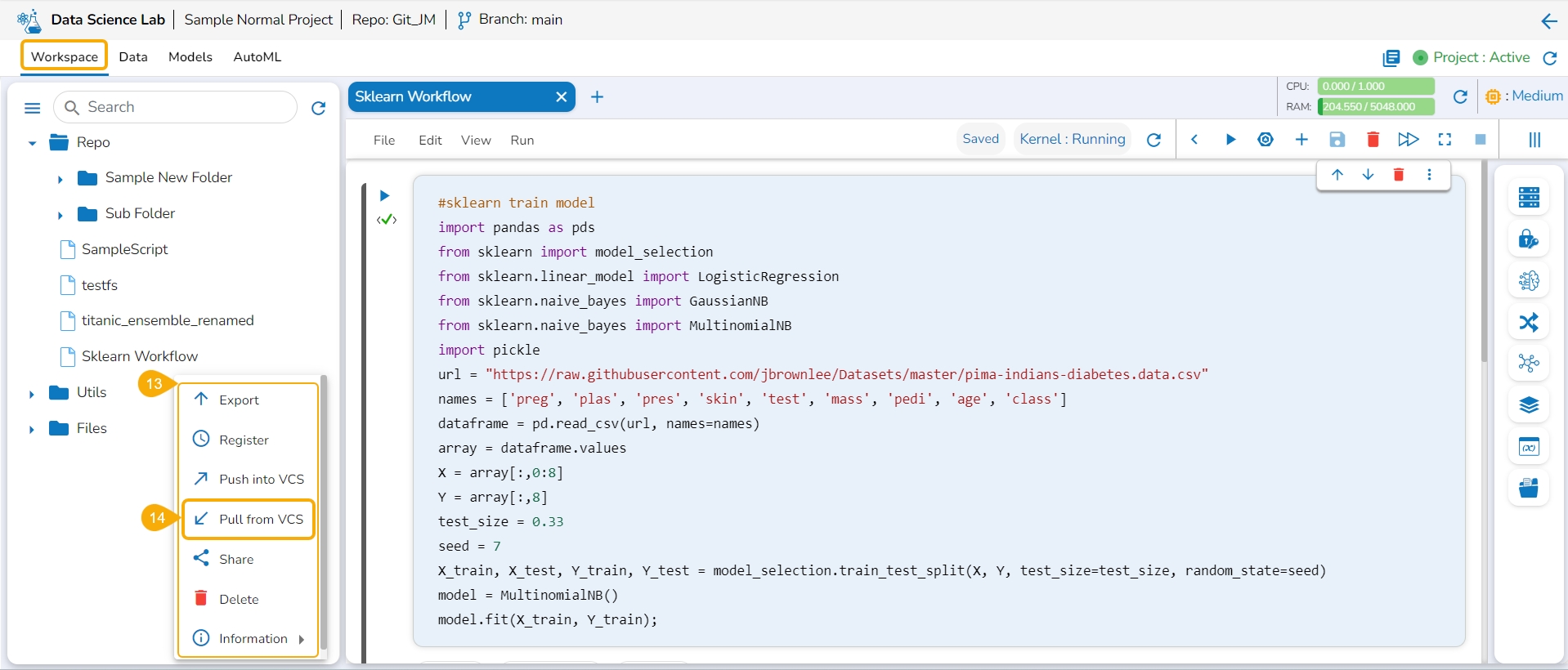

Access the Context menu for the Notebook.

Click the Push into VCS option for the selected Notebook.

The Push into Version Controlling System drawer opens.

Select the Version Control option.

Provide a Commit Message.

Click the Push option.

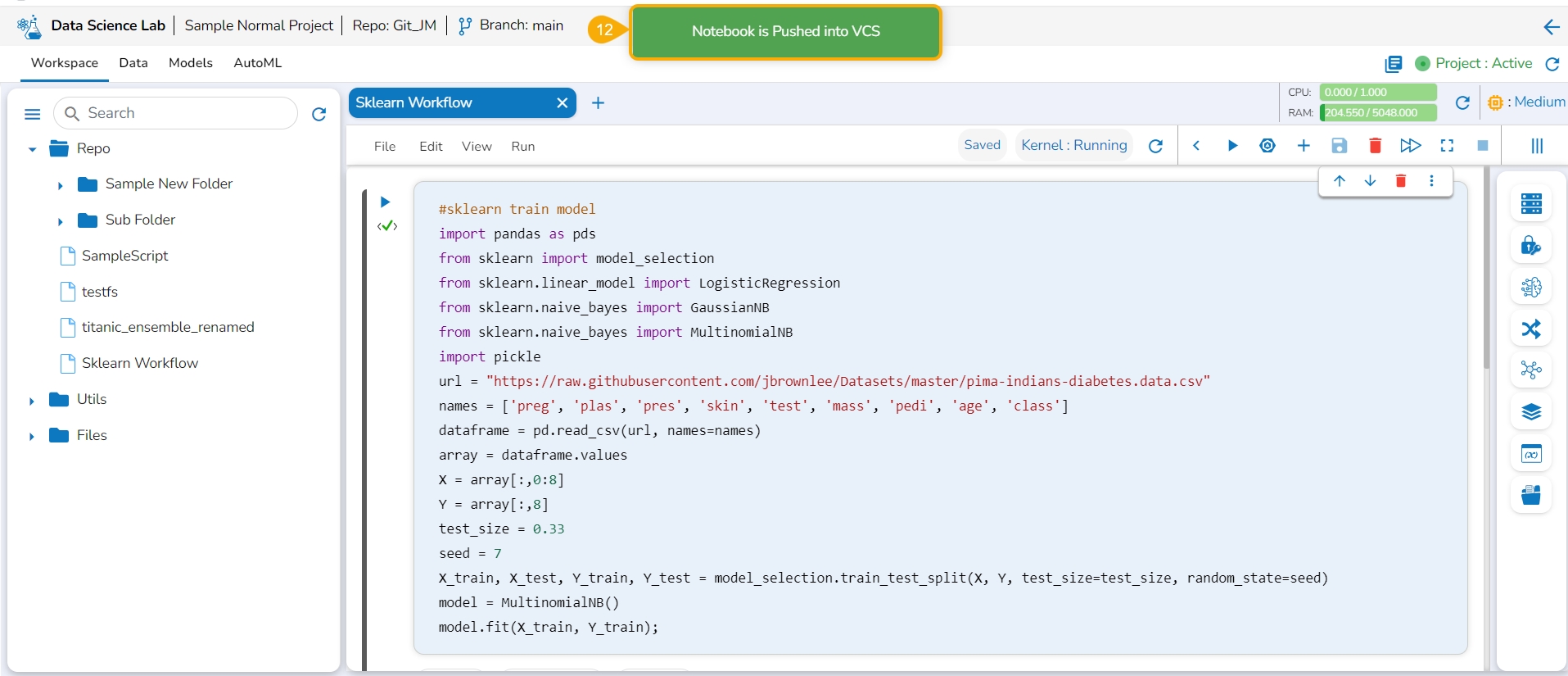

The selected version of the Notebook is pushed to the VCS, and a message confirms the action.

Open the context menu for a Notebook that has multiple versions pushed to the VCS.

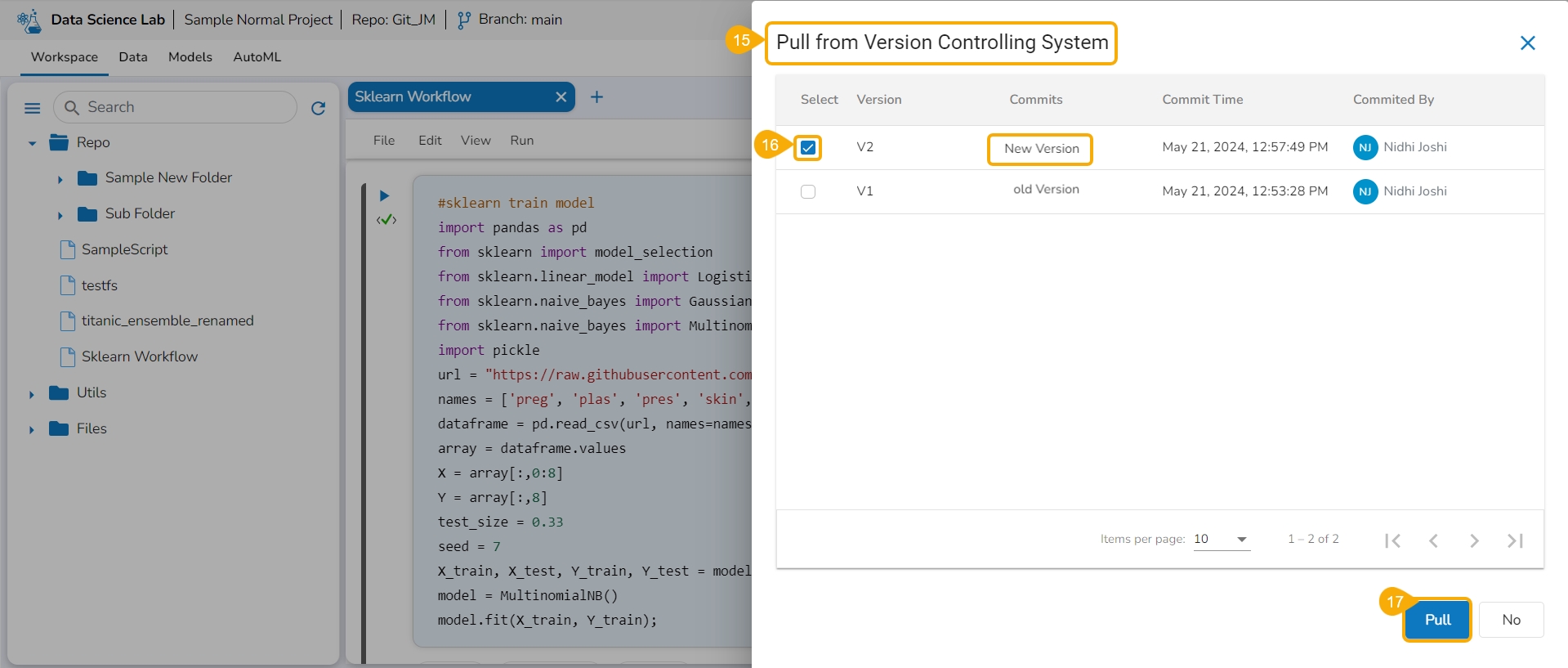

Click the Pull from VCS option from the Context menu.

The Pull from Version Controlling System drawer opens.

Select a version using the checkbox.

Click the Pull option.

A message appears to notify the user that the Notebook is pulled from the VCS.

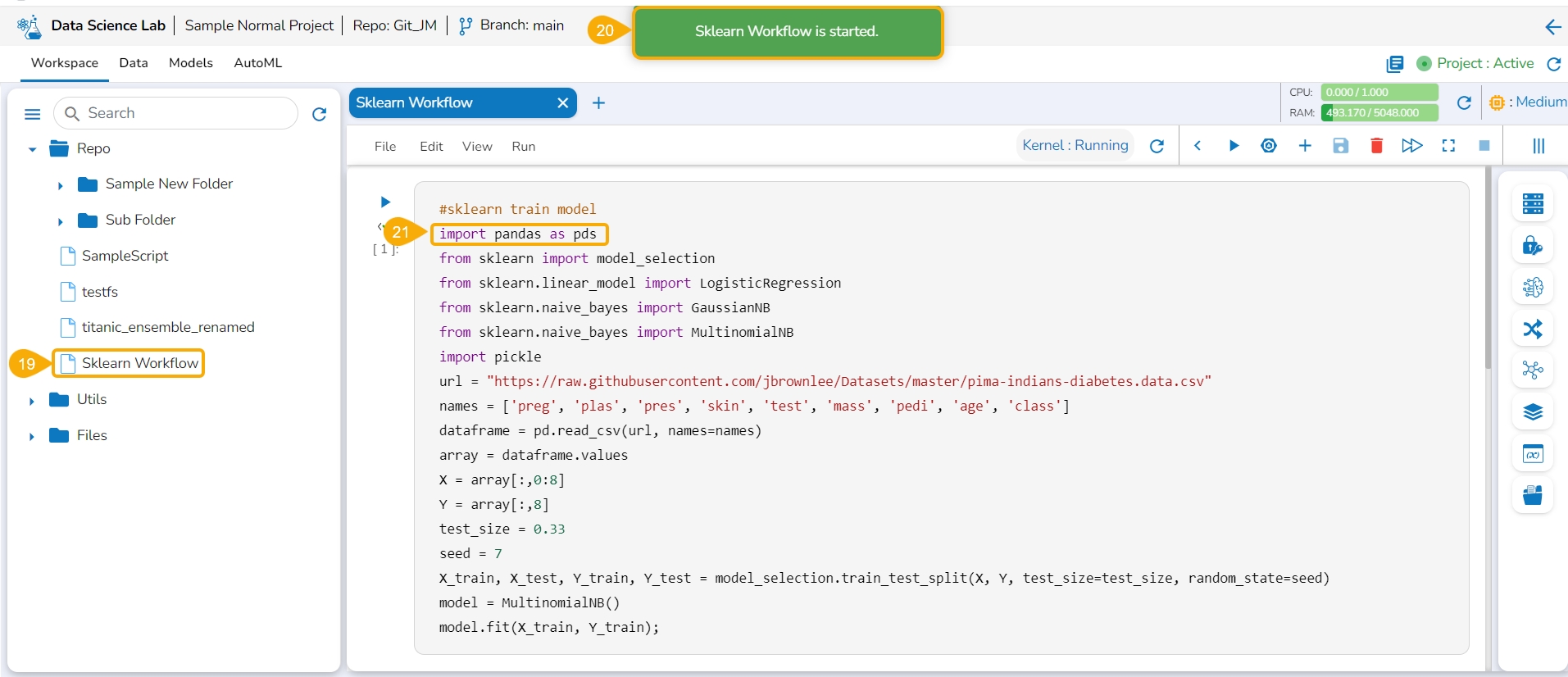

Select the same Notebook file from the Repo folder of the Workspace tab, and open it.

A message appears notifying the user that the selected Notebook has started.

The user can verify that the Notebook script reflects the modifications made in the pulled version of the Notebook.

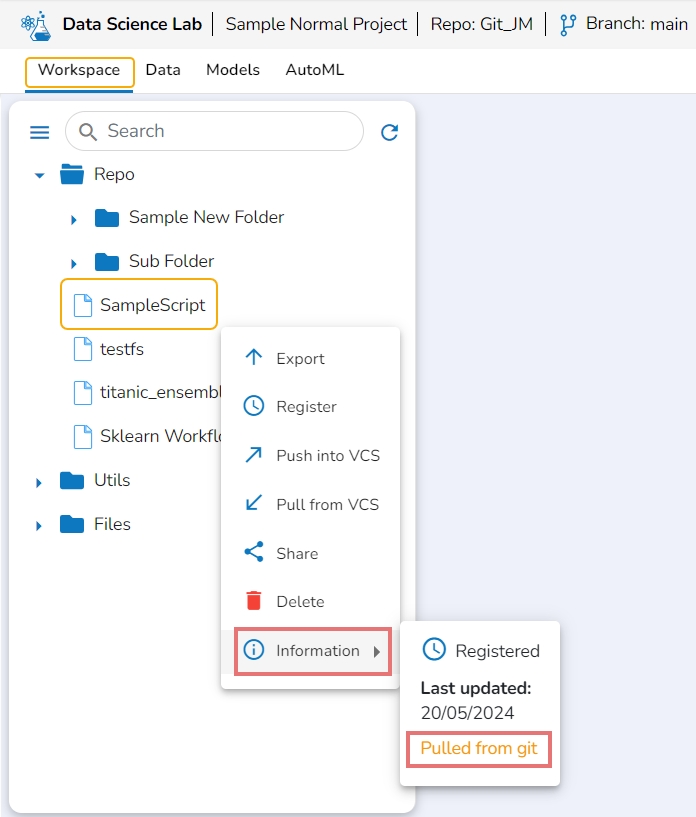

The Version Control process for a Notebook file pulled from Git differs from that for a Notebook file created by the user in the Data Science Lab module.

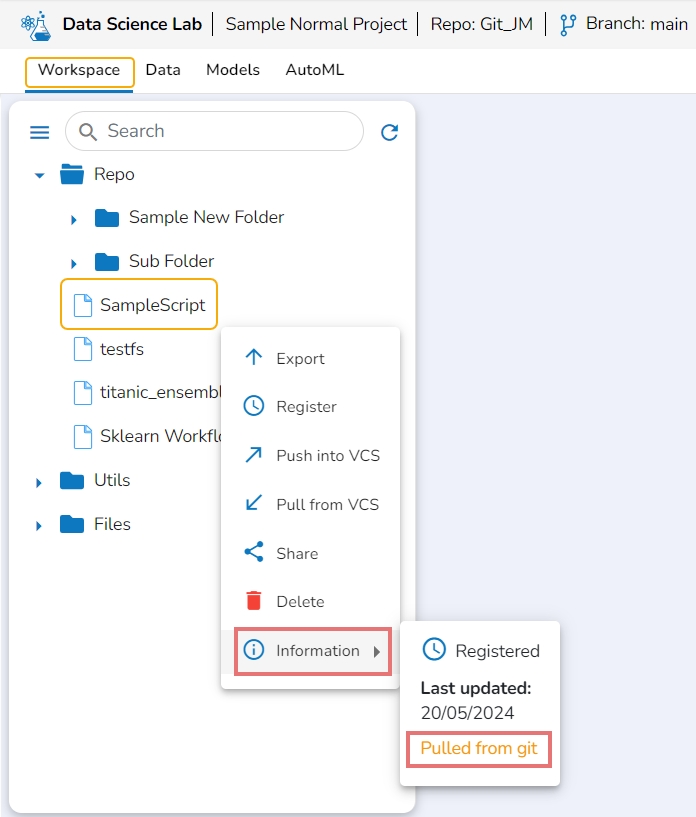

Click the Information option in the context menu for a Notebook. If the selected Notebook was pulled from Git, the information displays 'Pulled from git'.

Please Note: A Notebook file pulled from Git is overwritten each time it is pulled. Therefore, the user cannot select from multiple versions when pulling such a Notebook from the VCS; the latest version is pulled each time the Pull from VCS action is performed.
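The two pull behaviors described above can be summarized in a short sketch: for a Notebook created in the Data Science Lab module, the user picks one of the pushed versions, while for a Notebook pulled from Git the latest version is always taken. The `pull_version` function and the version labels are hypothetical, and the oldest-first ordering of `versions` is an assumption.

```python
def pull_version(versions, pulled_from_git, selected=None):
    """Return the version that a Pull from VCS action would fetch.

    versions: pushed versions, oldest first (assumed ordering).
    pulled_from_git: True if Information shows 'Pulled from git'.
    selected: version chosen in the Pull drawer (ignored for Git notebooks).
    """
    if not versions:
        raise ValueError("No versions have been pushed to the VCS")
    if pulled_from_git:
        # No version choice: the latest version overwrites the local file
        return versions[-1]
    if selected not in versions:
        raise ValueError("Select one of the pushed versions")
    return selected


print(pull_version(["v1", "v2", "v3"], pulled_from_git=True, selected="v1"))
```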

Check out the illustration to understand the Version control steps for a Notebook file that is pulled from the Git Repo.

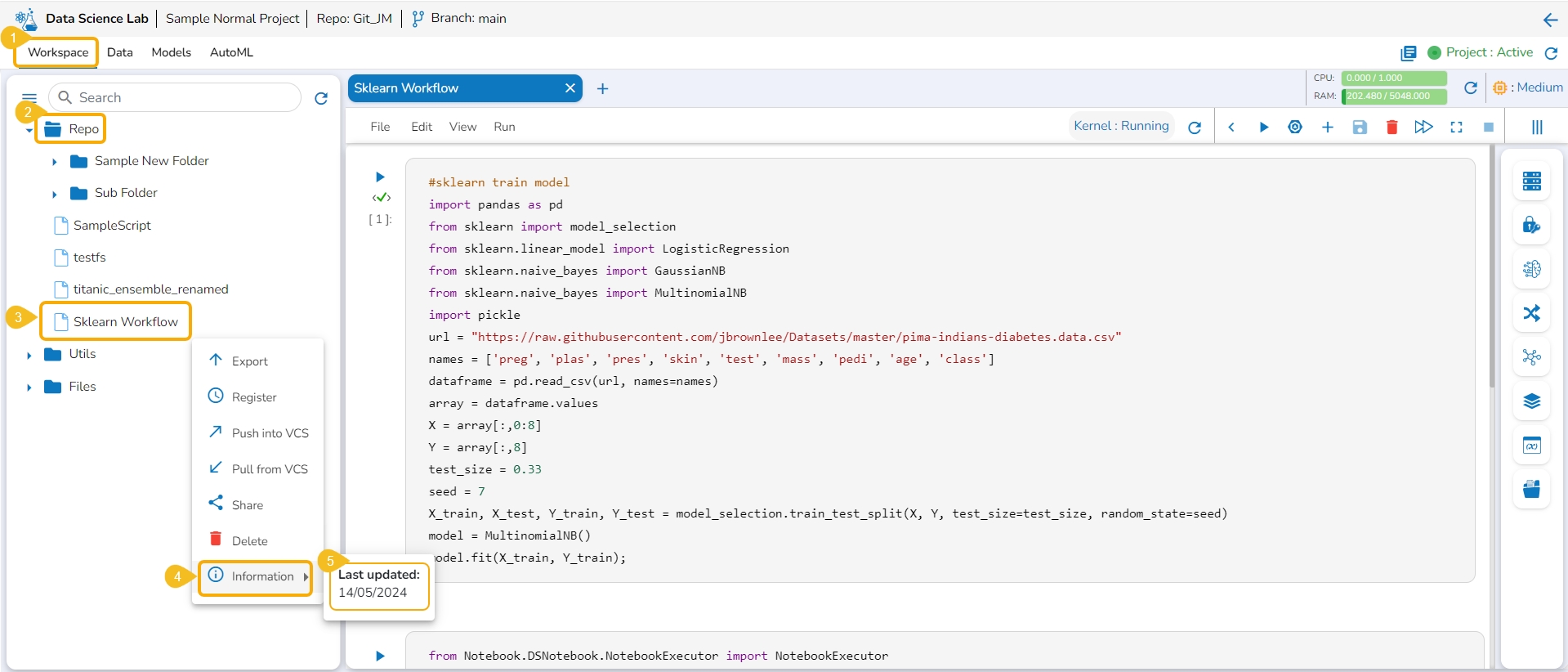

This option displays the last modified date for the selected notebook.

Navigate to the Workspace tab.

Open the Repo folder.

Select a notebook from the Repo folder and click the ellipsis icon for the selected notebook.

A Context Menu opens. Select the Information option from the Context Menu.

The last modified date for the selected notebook is displayed.

For Notebooks pulled from Git, the Information option also displays 'Pulled from git'.

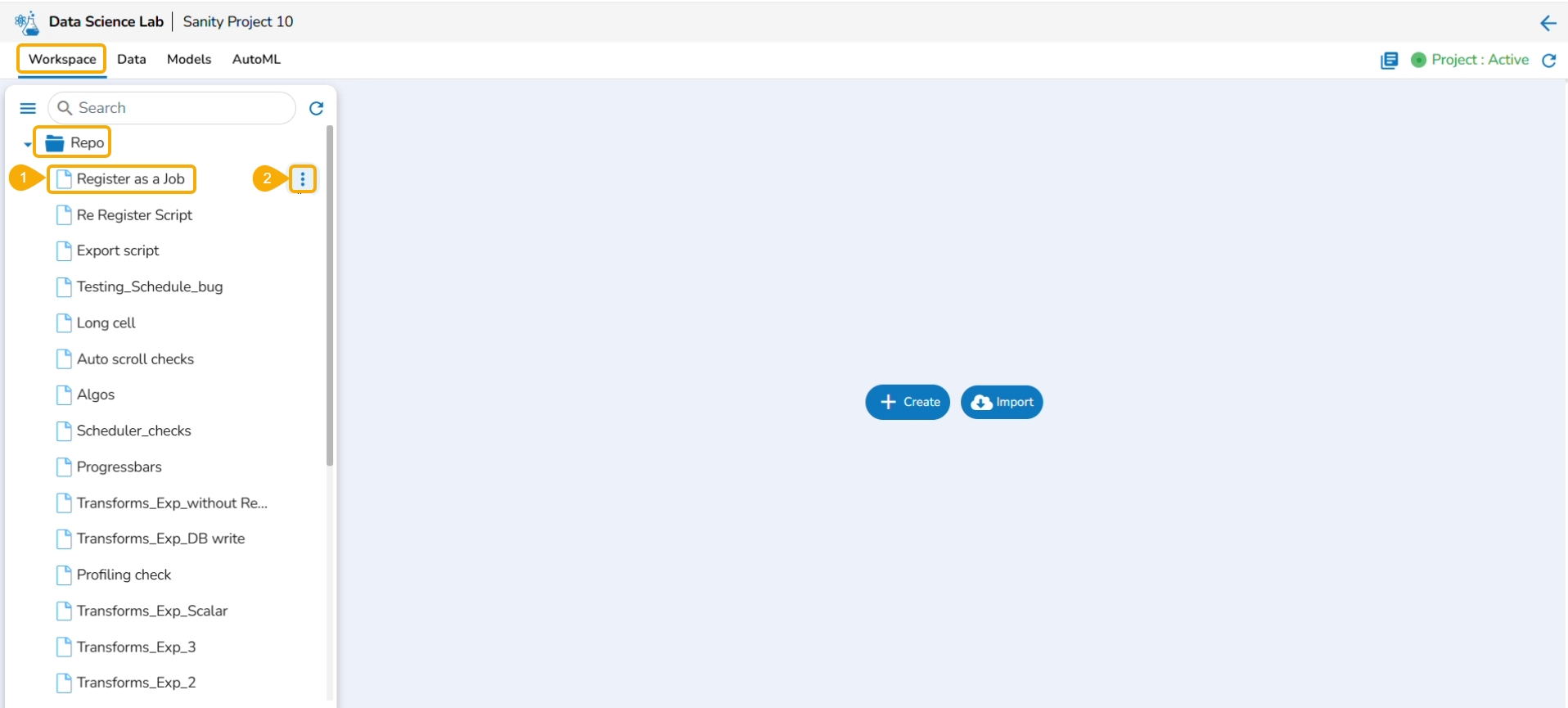

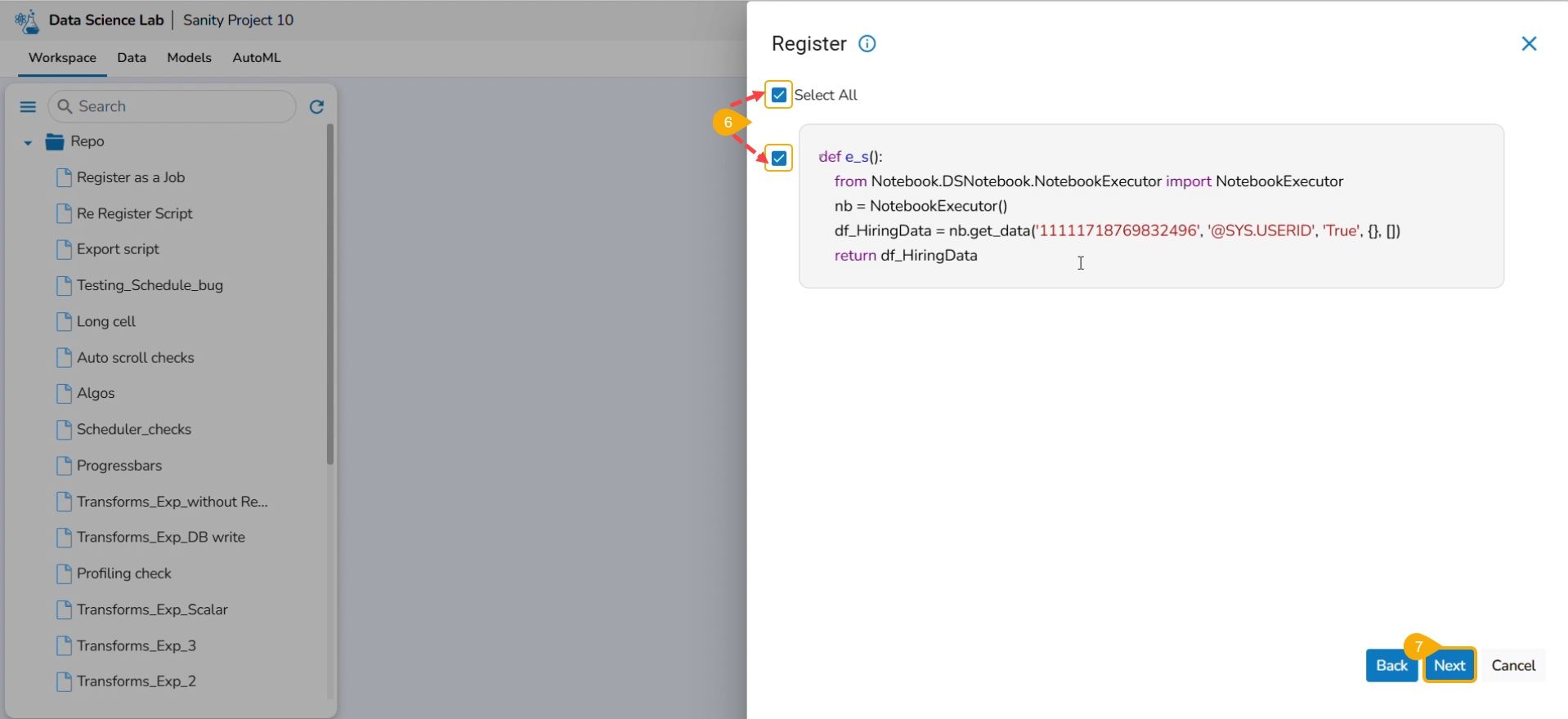

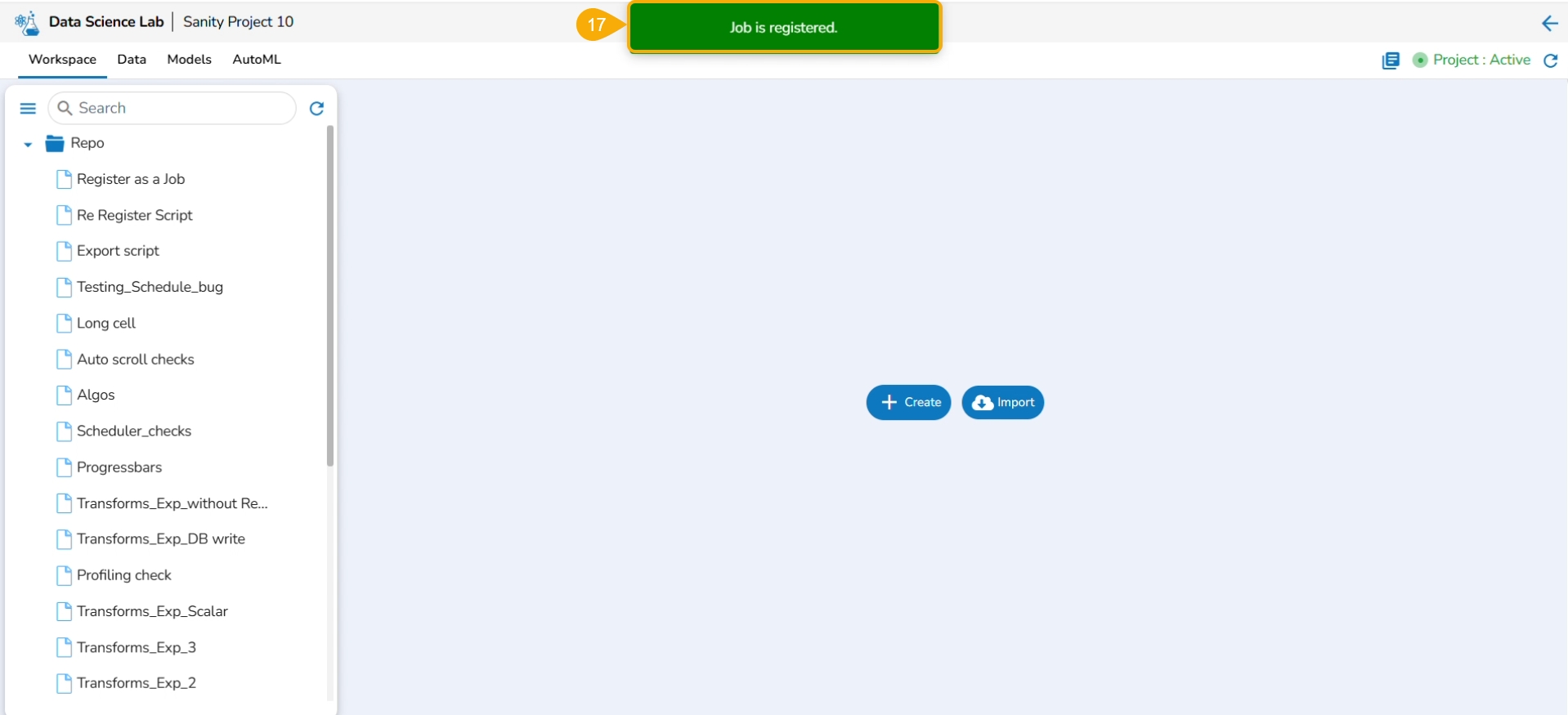

This page describes the steps to register a Data Science Script as a Job.

Check out the illustration on registering a Notebook script as a Job to the Data Pipeline module.

The user can register a Notebook script as a Job using this functionality.

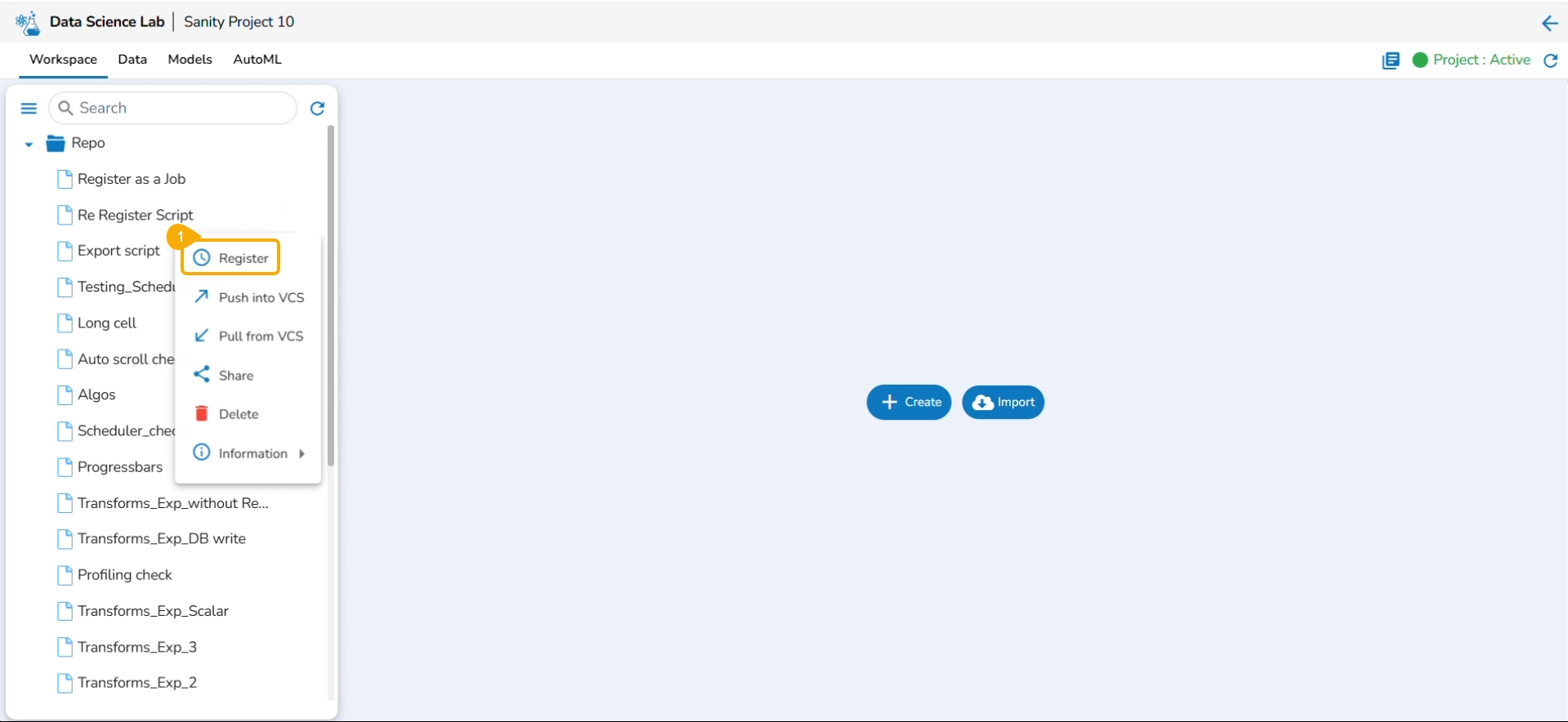

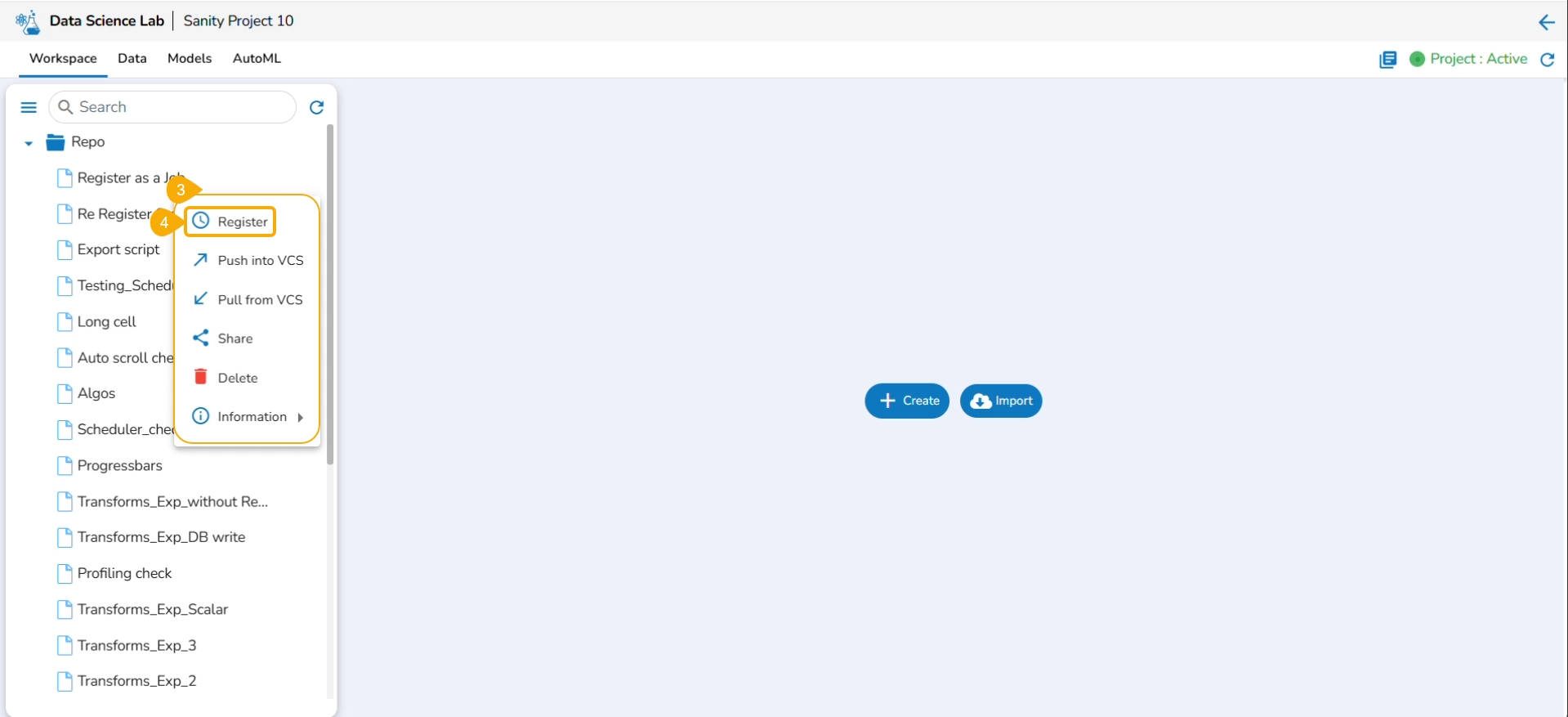

Select a Notebook from the Repo folder in the left side panel.

Click the ellipsis icon.

A context menu opens.

Click the Register option from the context menu.

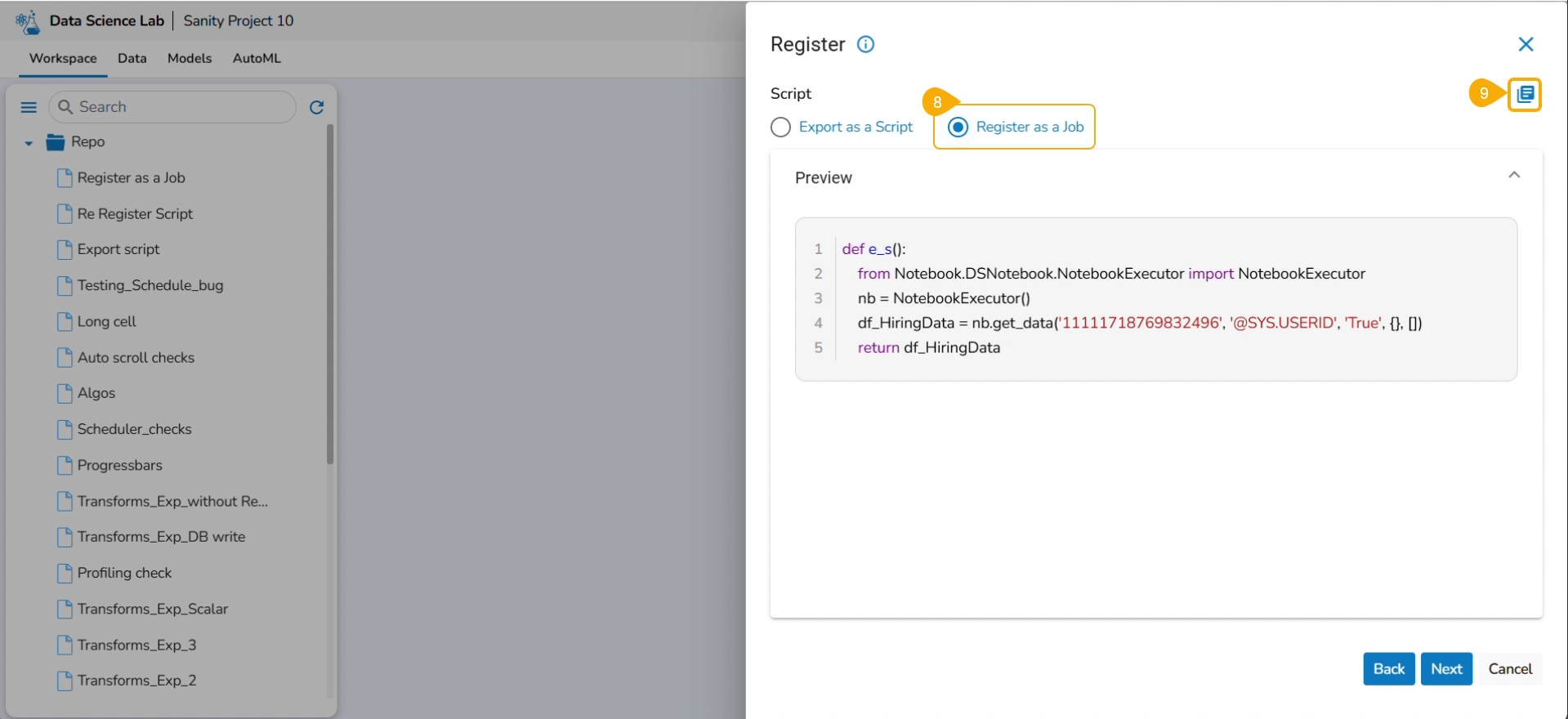

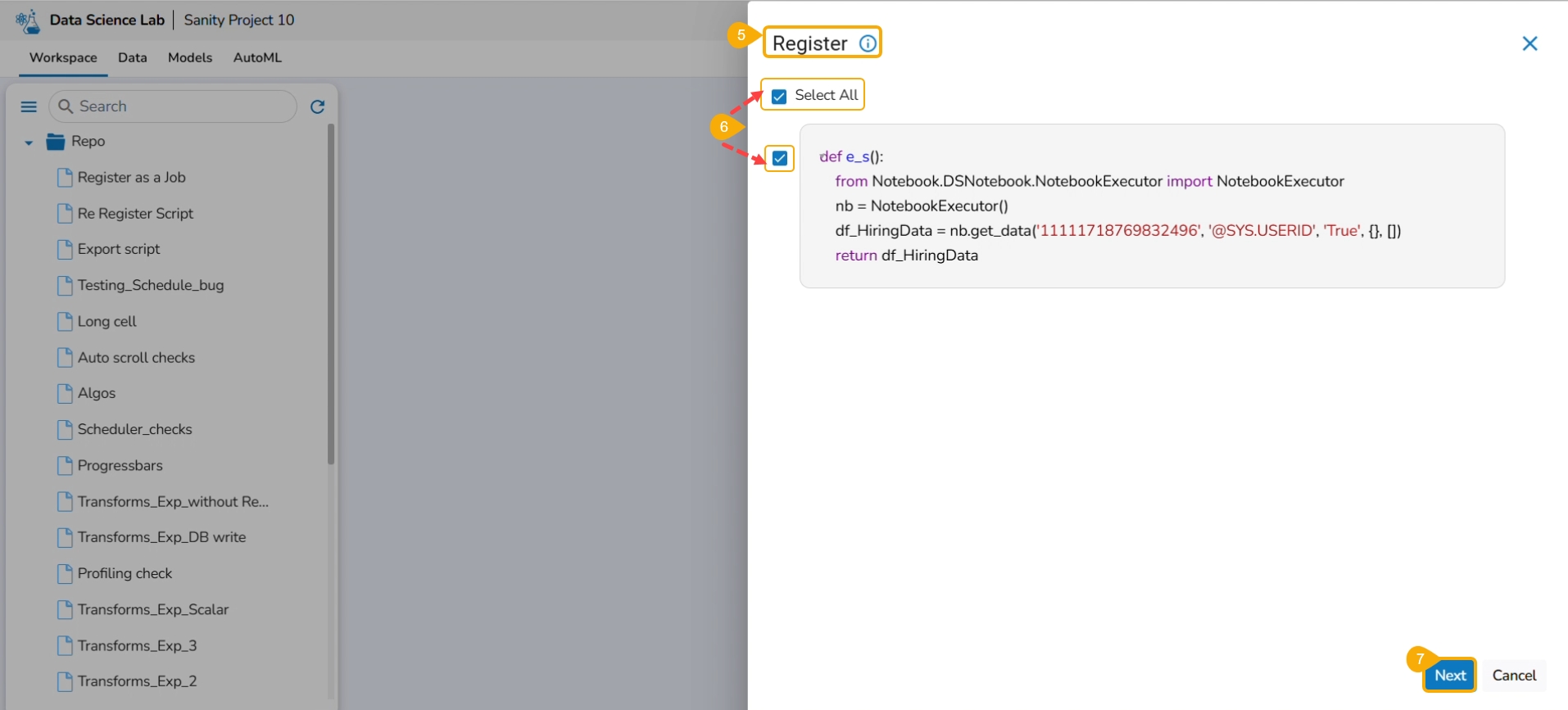

The Register page opens.

Use the Select All option, or select a specific script using its checkbox.

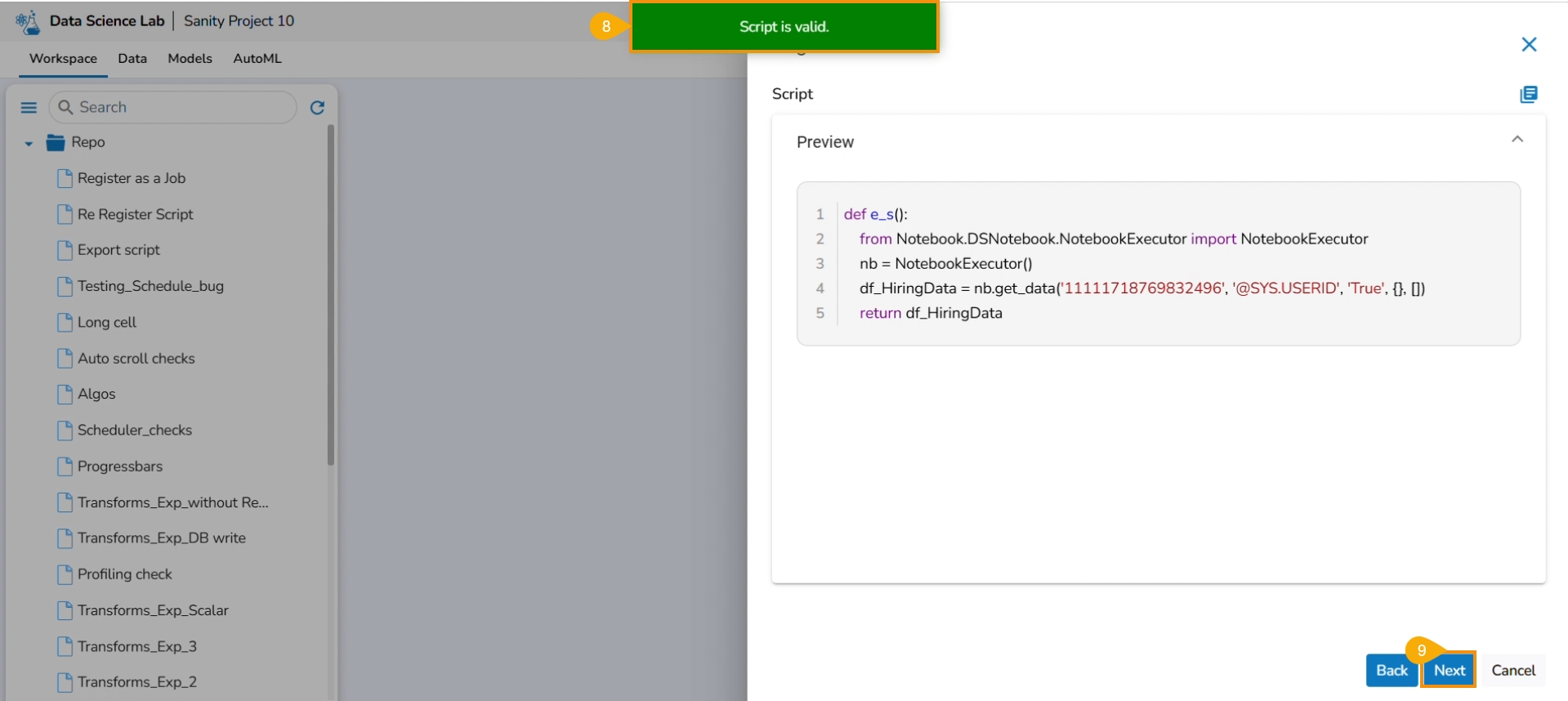

Click the Next option.

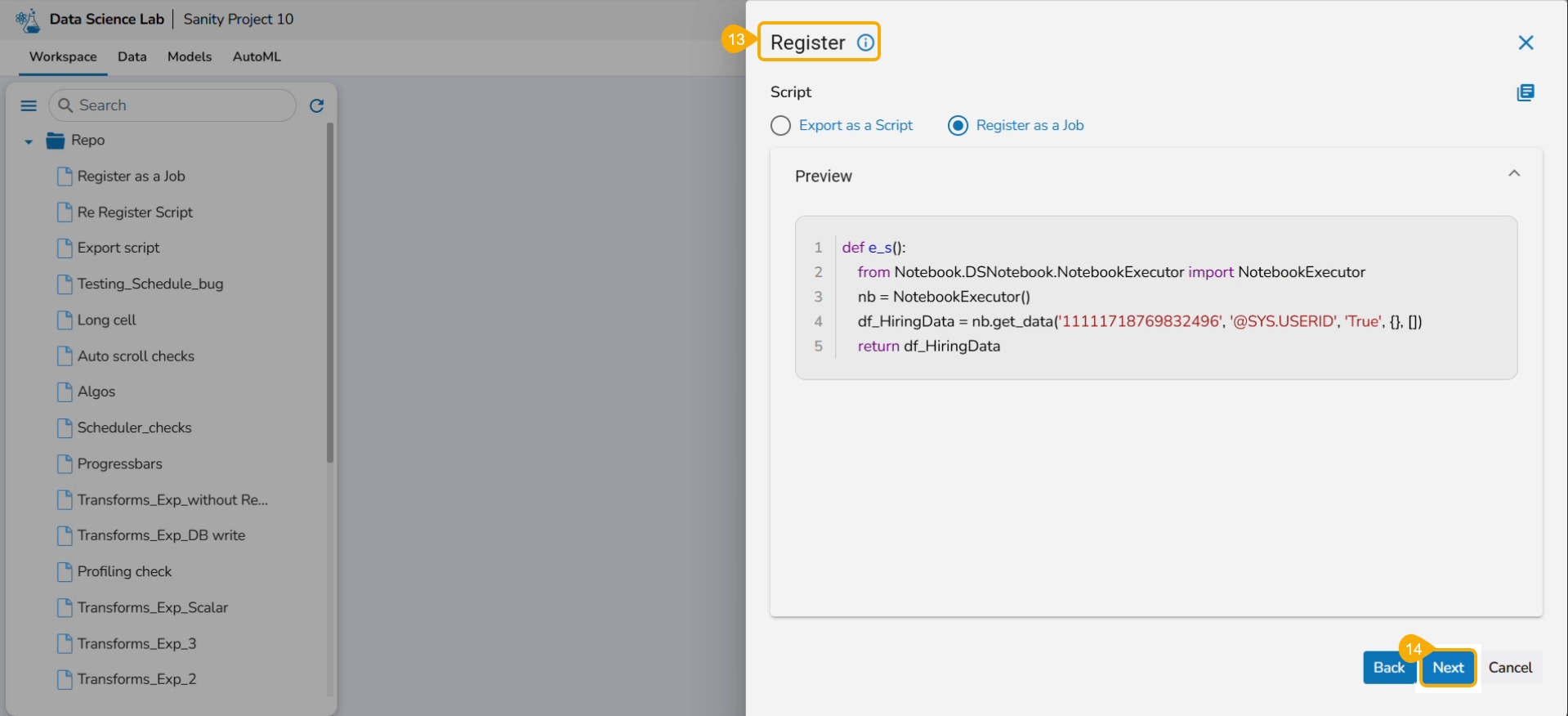

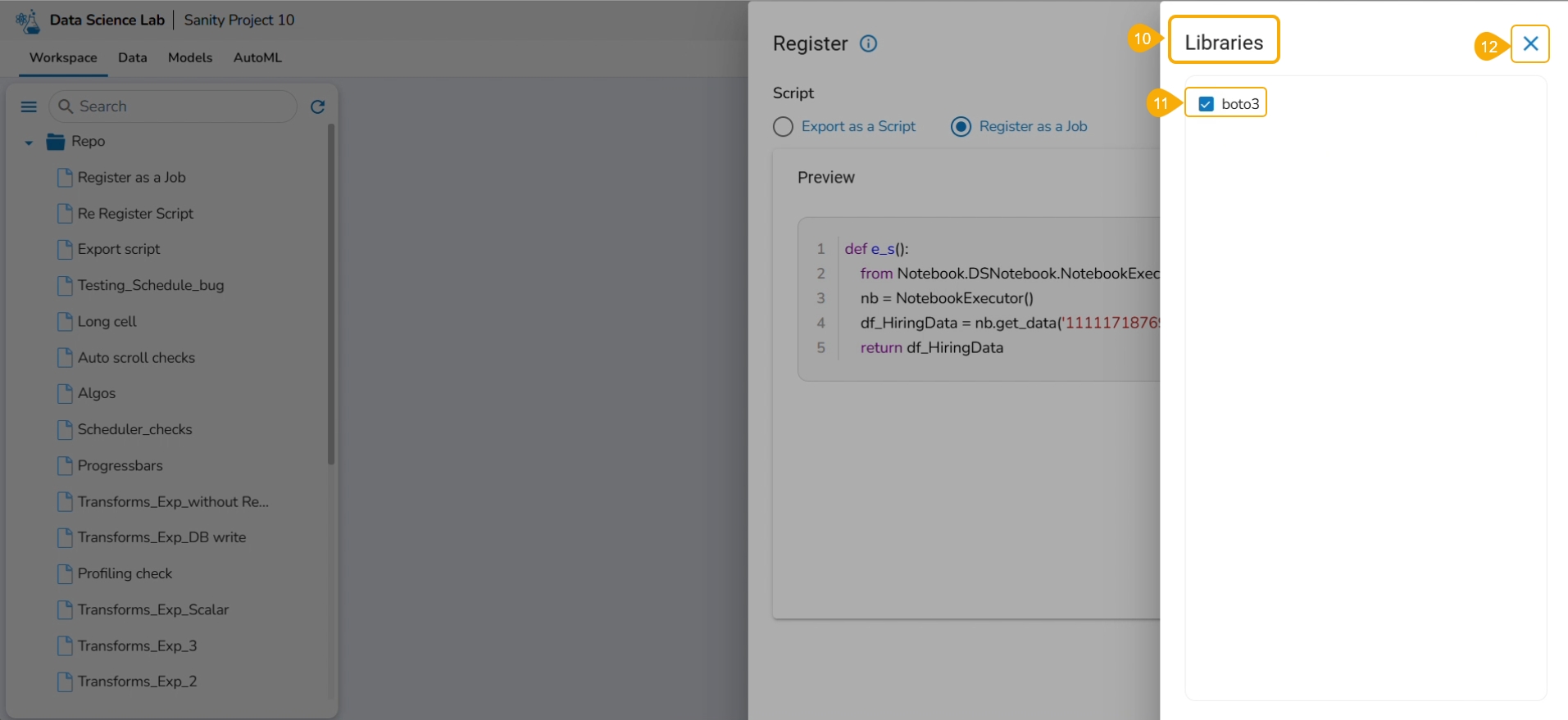

Select the Register as a Job option using the checkbox.

Click the Libraries icon.

The Libraries drawer opens.

Select the required libraries using the checkboxes.

Click the Close icon.

The user is redirected to the Register drawer.

Click the Next option.

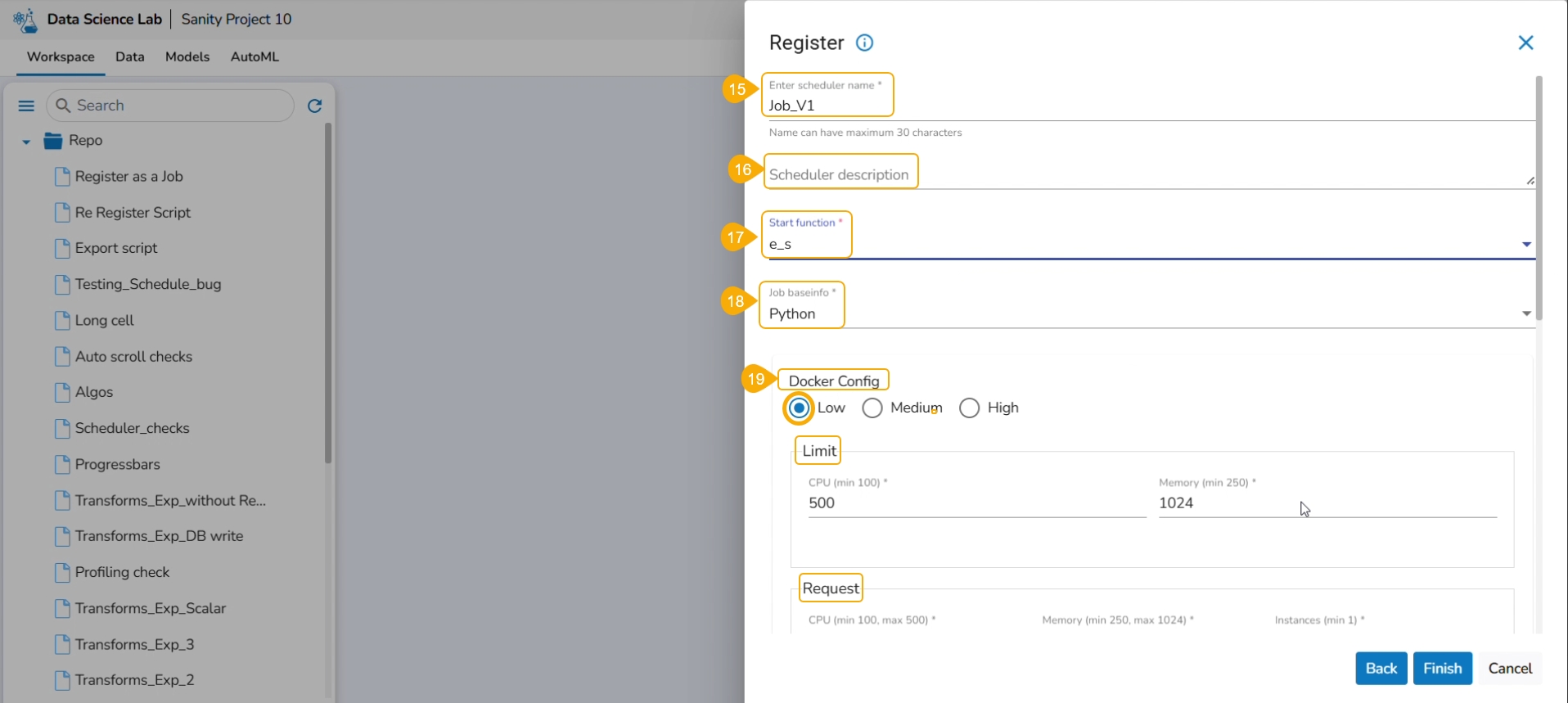

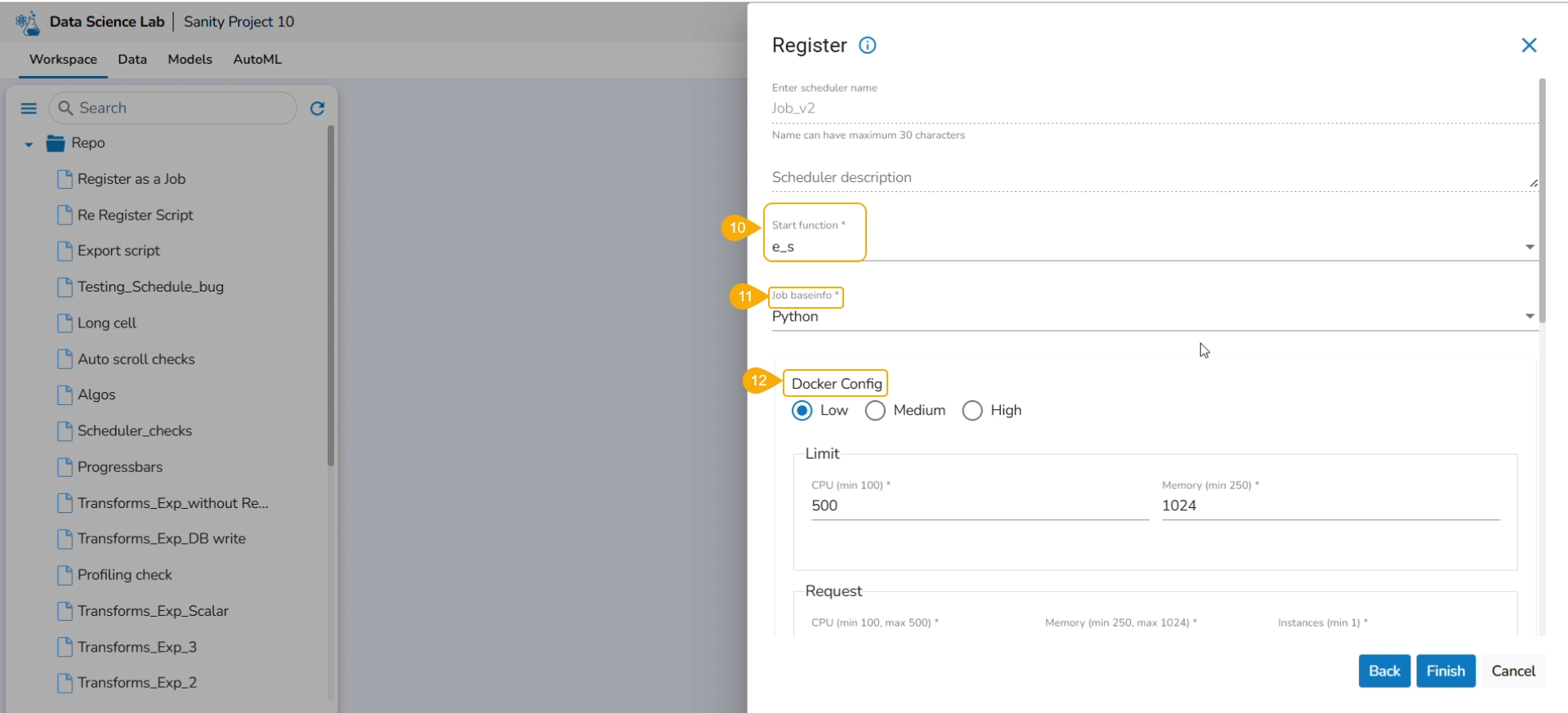

Provide the following information:

Scheduler Name: Enter a name for the scheduler.

Scheduler Description: Enter a description for the scheduler.

Start Function: Select a function from the drop-down menu.

Job basinfo: Select an option from the drop-down menu.

Docker Config: Choose an option out of Low, Medium, and High.

Limit: Based on the selected Docker configuration option (Low/Medium/High), the CPU and Memory limits are displayed.

Request: Provides predefined values for CPU, Memory, and the number of instances.

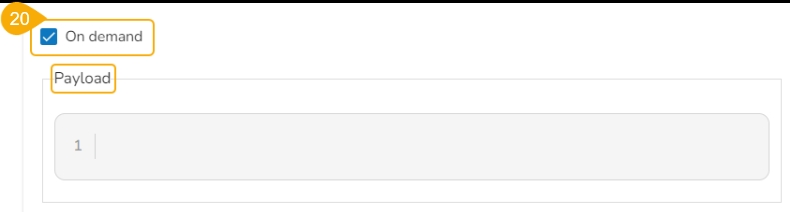

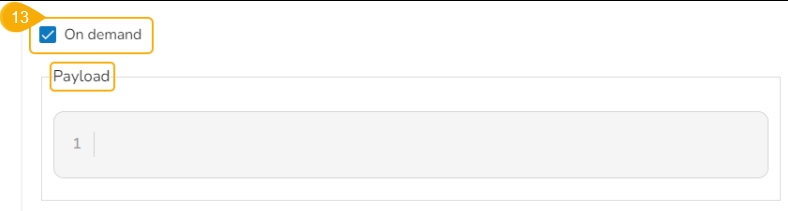

On demand: Check this option if a Python Job (On demand) must be created. In this scenario, the Job will not be scheduled.

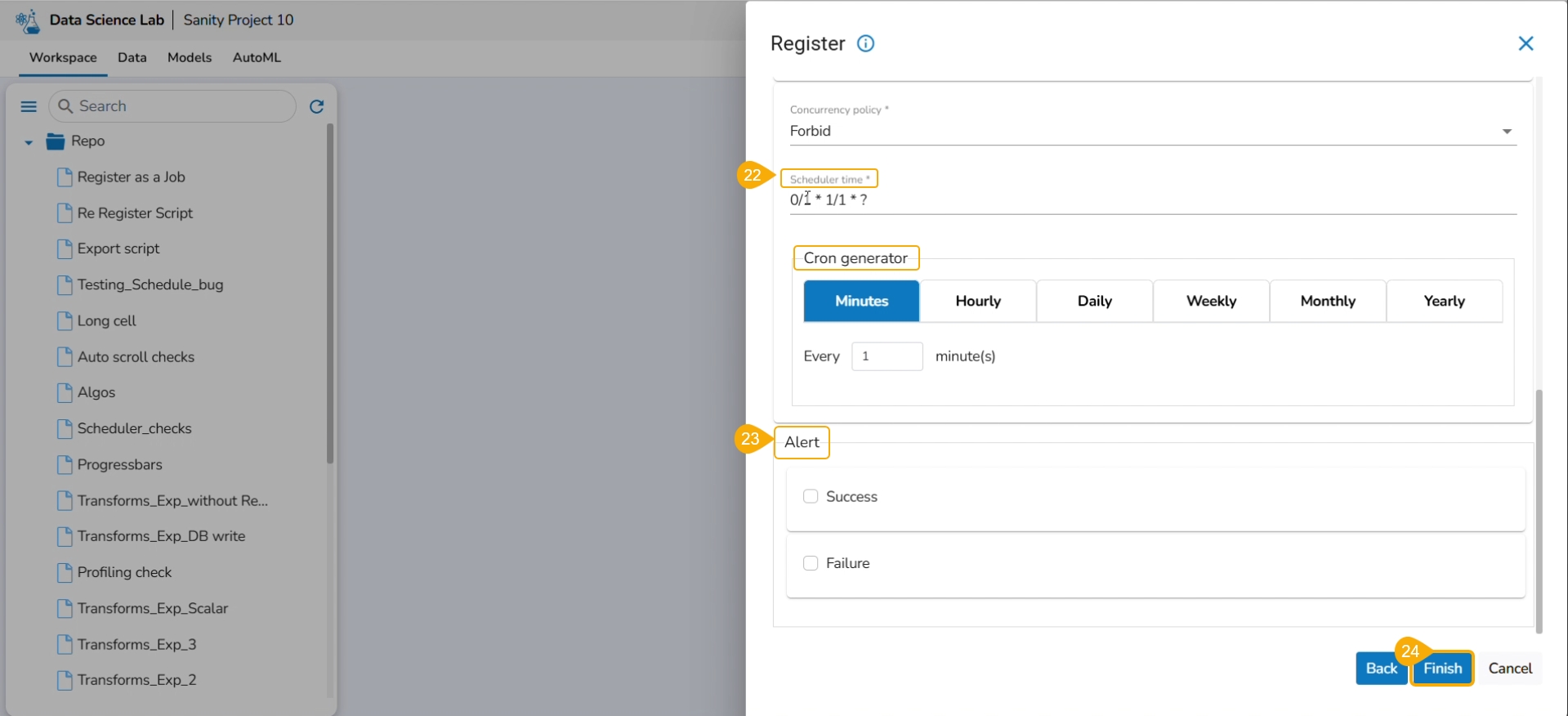

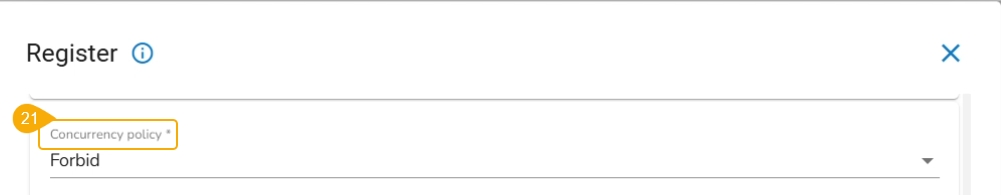

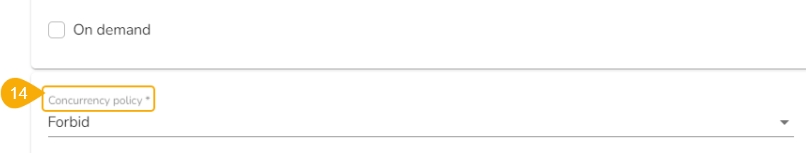

Please Note: The Concurrency Policy option does not appear for On-demand jobs; it is displayed only for jobs for which a scheduler is configured.

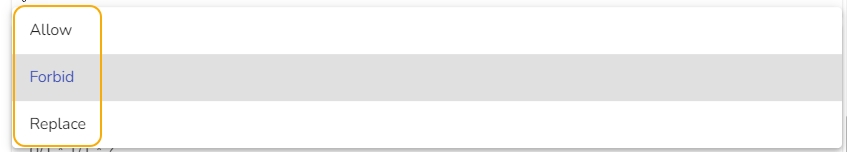

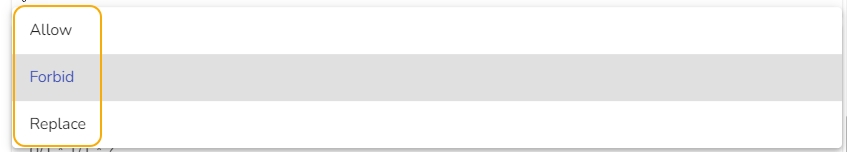

The concurrency policy has three options: Allow, Forbid, and Replace.

Allow: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will run in parallel with the previous task.

Forbid: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will wait until all the previous tasks are completed.

Replace: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the previous task will be terminated and the new task will start processing.
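The three policies can be compared with a small simulation of scheduled runs that all take the same time. This is an illustrative model that follows the wording on this page (including Forbid making the next run wait); `simulate` is a hypothetical helper, and times are plain numbers such as minutes. Note that Kubernetes CronJobs, which use the same three policy names, skip the new run under Forbid instead of queuing it, so it is worth confirming the behavior on your platform.

```python
def simulate(policy, trigger_times, duration):
    """Return (start, end) for each run under Allow, Forbid, or Replace.

    A run terminated under Replace gets the replacement time as its end.
    """
    runs = []
    for t in trigger_times:
        if policy == "Allow":
            # The new run starts on time, in parallel with earlier runs
            runs.append((t, t + duration))
        elif policy == "Forbid":
            # The new run waits until all previous runs have finished
            start = max([t] + [end for _, end in runs])
            runs.append((start, start + duration))
        elif policy == "Replace":
            if runs and runs[-1][1] > t:
                prev_start, _ = runs[-1]
                runs[-1] = (prev_start, t)  # previous run terminated at t
            runs.append((t, t + duration))
        else:
            raise ValueError(f"Unknown policy: {policy}")
    return runs


# Runs scheduled every 10 minutes, each needing 15 minutes
for policy in ("Allow", "Forbid", "Replace"):
    print(policy, simulate(policy, [0, 10, 20], 15))
```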

Scheduler Time: Provide the scheduler time using the Cron generator.
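The Cron generator builds the schedule expression for you. Assuming it emits a standard five-field Unix cron expression (the platform may use another variant, such as the six- or seven-field Quartz format), the fields can be read as in this sketch; `describe_cron` is a hypothetical helper.

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expression):
    """Split a standard five-field cron expression into named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"Expected 5 fields, got {len(parts)}: {expression!r}")
    return dict(zip(CRON_FIELDS, parts))


# "30 2 * * 1-5" means 02:30, Monday to Friday
print(describe_cron("30 2 * * 1-5"))
```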

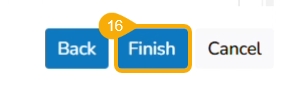

Click the Finish option.

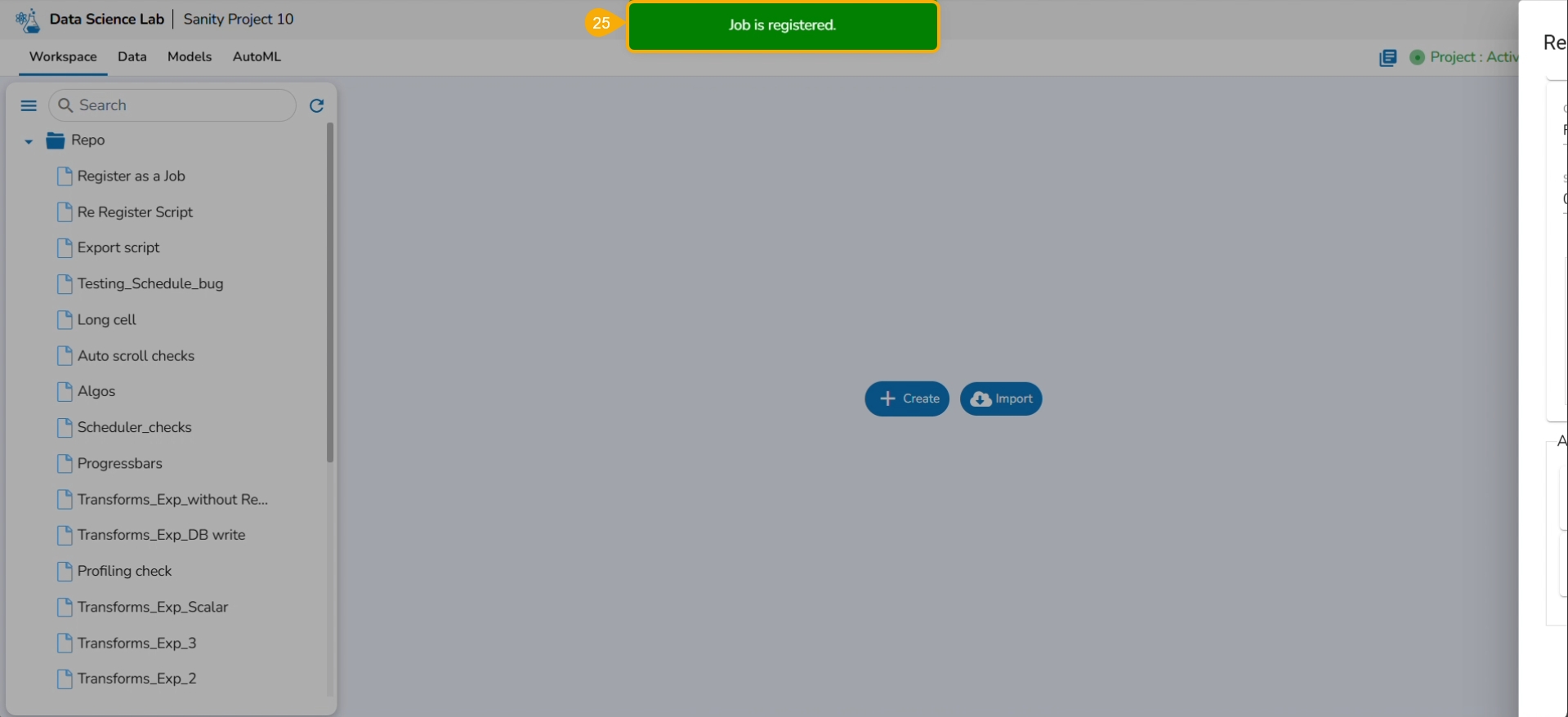

A notification message appears.

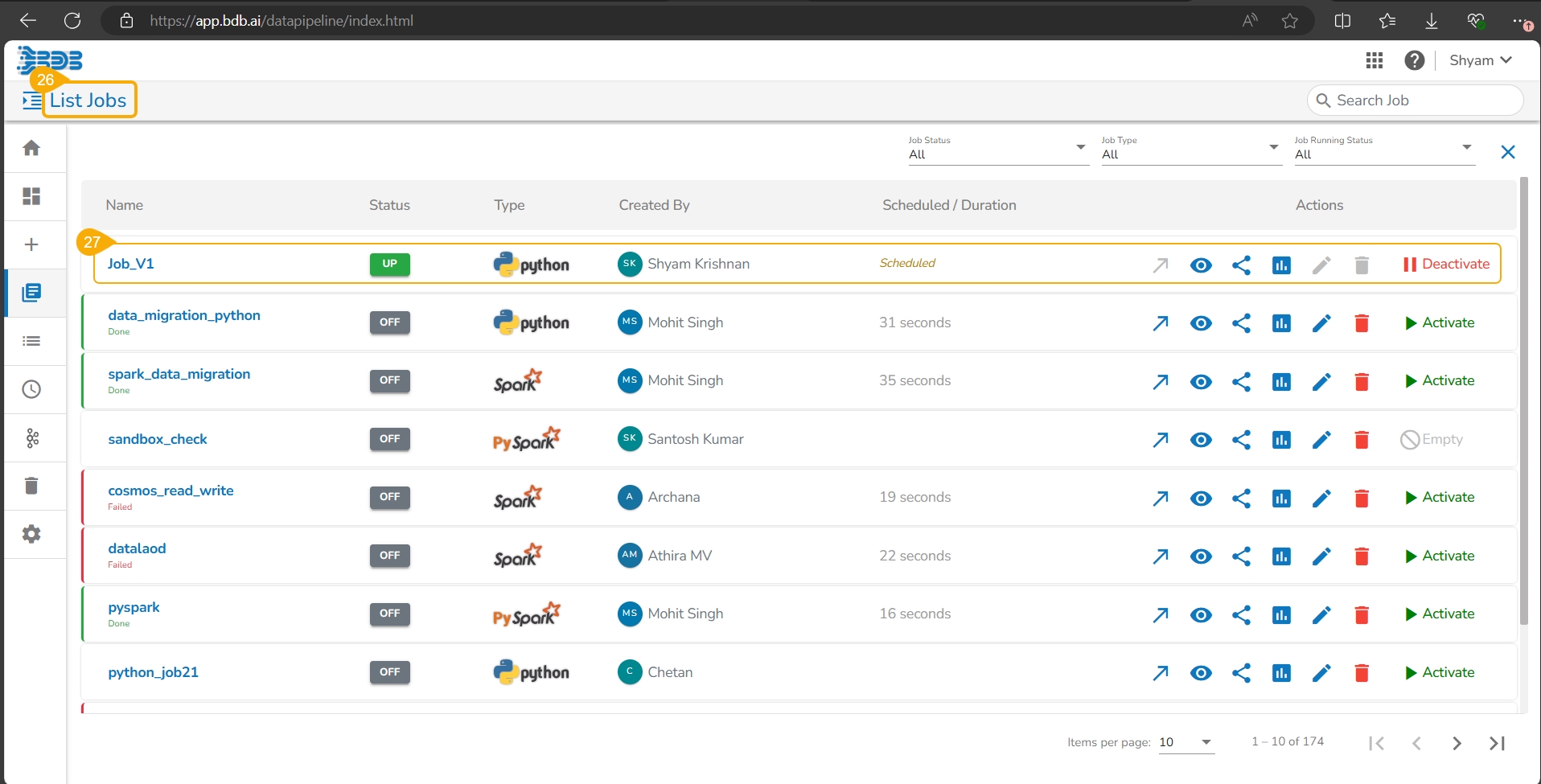

Navigate to the List Jobs page within the Data Pipeline module.

The newly registered DS Script is listed under the Scheduler name provided during registration.

Check out the illustration on re-registering a DS Script as a job.

This option appears for a .ipynb file that has been registered before.

Select the Register option for a .ipynb file that has been registered before.

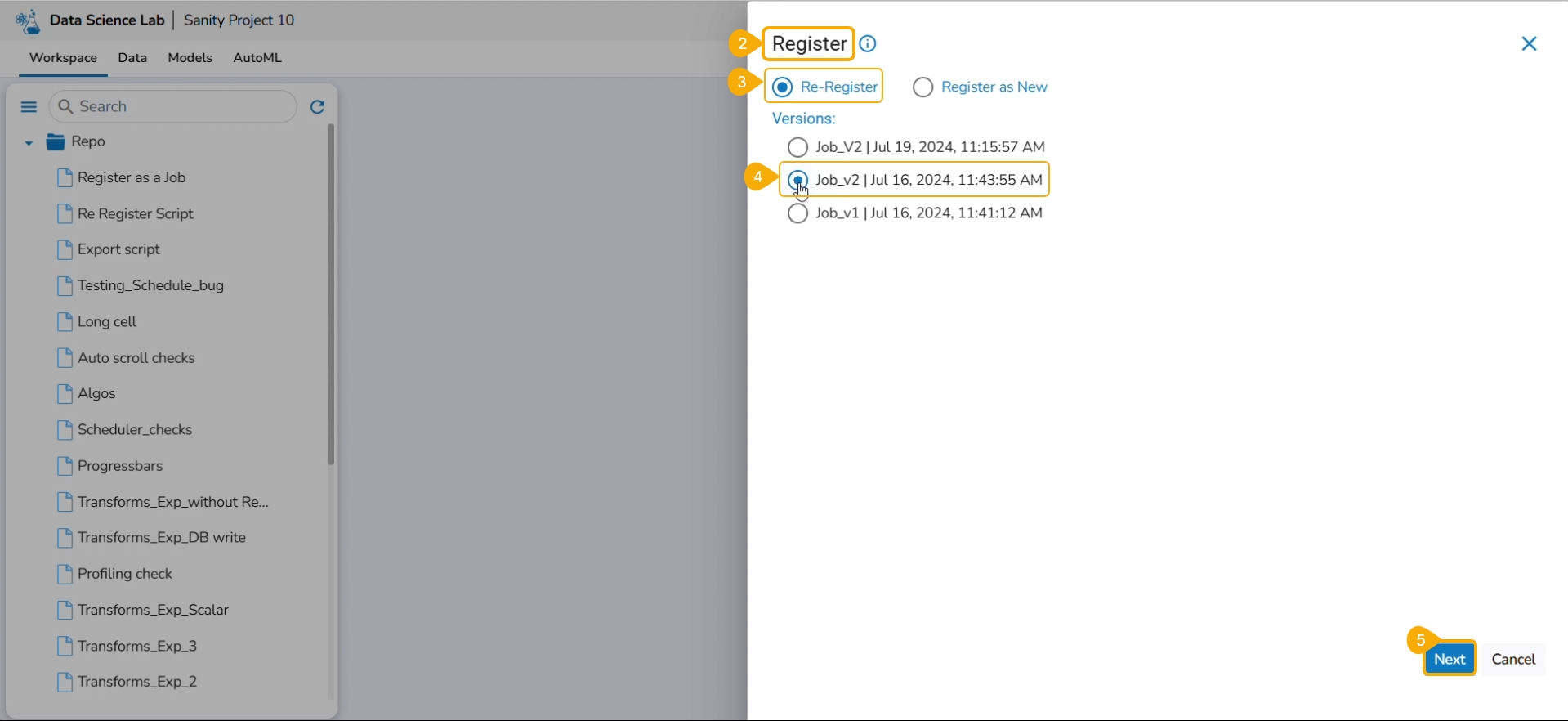

The Register page opens displaying the Re-Register and Register as New options.

Select the Re-Register option by using the checkbox.

Select a version by using a checkbox.

Click the Next option.

Select the script using the checkbox (the previously selected script appears pre-selected). The user can also use the Select All option.

Click the Next option.

A notification message appears confirming that the script is valid.

Click the Next option.

Start function: Select a function from the drop-down menu.

Job basinfo: Select an option from the drop-down menu.

Docker Config: Choose an option for Limit out of Low, Medium, and High.

Request: The CPU and Memory limits are displayed.

On demand: Check this option if a Python Job (On demand) must be created. In this scenario, the Job will not be scheduled.

Please Note: The Concurrency Policy option does not appear for On-demand jobs; it is displayed only for jobs for which a scheduler is configured.

The concurrency policy has three options: Allow, Forbid, and Replace.

Allow: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will run in parallel with the previous task.

Forbid: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will wait until all the previous tasks are completed.

Replace: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the previous task will be terminated and the new task will start processing.

Click the Finish option to register the Notebook as a Job.

A notification message appears.

To re-register a script using the Register as New option, follow all the steps described in the Register a Data Science Script as a Job section.

Check out the illustration on Registering a DS Script as New.

Payload: This option appears if the On-demand option is checked. Enter the payload as a list of dictionaries. For more details about the Python Job (On demand), refer to this link:

Concurrency Policy: Select the desired concurrency policy. For more details about the Concurrency Policy, check this link:

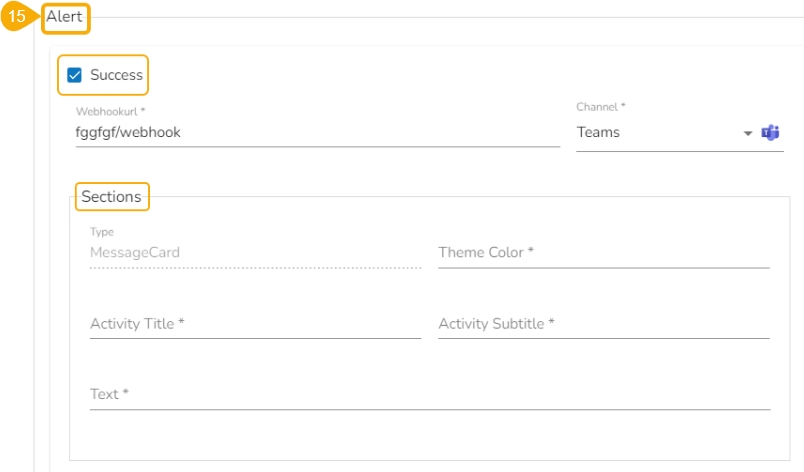

Alert: This feature allows users to send an alert message to a specified channel (Teams or Slack) when the configured Job succeeds or fails. Users can choose the success and failure options to send alerts for the configured Job. Check the following link to configure the Alert:
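The Payload field for an On-demand job expects a list of dictionaries. The sketch below shows one such payload and a quick shape check before pasting it in. The keys (`file_name`, `limit`) and the `validate_payload` helper are hypothetical, and serializing with `json.dumps` assumes the field accepts JSON-style text; the keys your job needs depend on your own start function.

```python
import json


def validate_payload(payload):
    """Check that the payload is a list of dictionaries and return it."""
    if not isinstance(payload, list):
        raise ValueError("Payload must be a list")
    for i, item in enumerate(payload):
        if not isinstance(item, dict):
            raise ValueError(f"Item {i} is not a dictionary")
    return payload


# Hypothetical keys; use whatever your start function expects
payload = [
    {"file_name": "sales_2023.csv", "limit": 100},
    {"file_name": "sales_2024.csv", "limit": 50},
]
print(json.dumps(validate_payload(payload)))
```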
