MongoDB Reader

This page covers configuration details for the MongoDB Reader component.

A MongoDB reader is designed to read and access data stored in a MongoDB database. Mongo readers typically authenticate with MongoDB using a username and password or other authentication mechanisms supported by MongoDB.

All component configurations are classified broadly into the following sections:

Meta Information

Please follow the demonstration to configure the component.

The MongoDB Reader reads data from the specified collection of a MongoDB database. It can optionally filter the data using a Spark SQL query.

Drag & Drop the MongoDB Reader on the Workflow Editor.

Click on the dragged reader component to open the component properties tabs below.

Basic Information

This is the default tab that opens when you configure the MongoDB Reader.

Invocation Type: Select Real-Time or Batch from the drop-down menu to set the running mode of the reader component.

Deployment Type: It displays the deployment type for the component. This field comes pre-selected.

Container Image Version: It displays the image version for the docker container. This field comes pre-selected.

Failover Event: Select a failover event from the drop-down menu.

Batch Size: Provide the maximum number of records to be processed in one execution cycle.

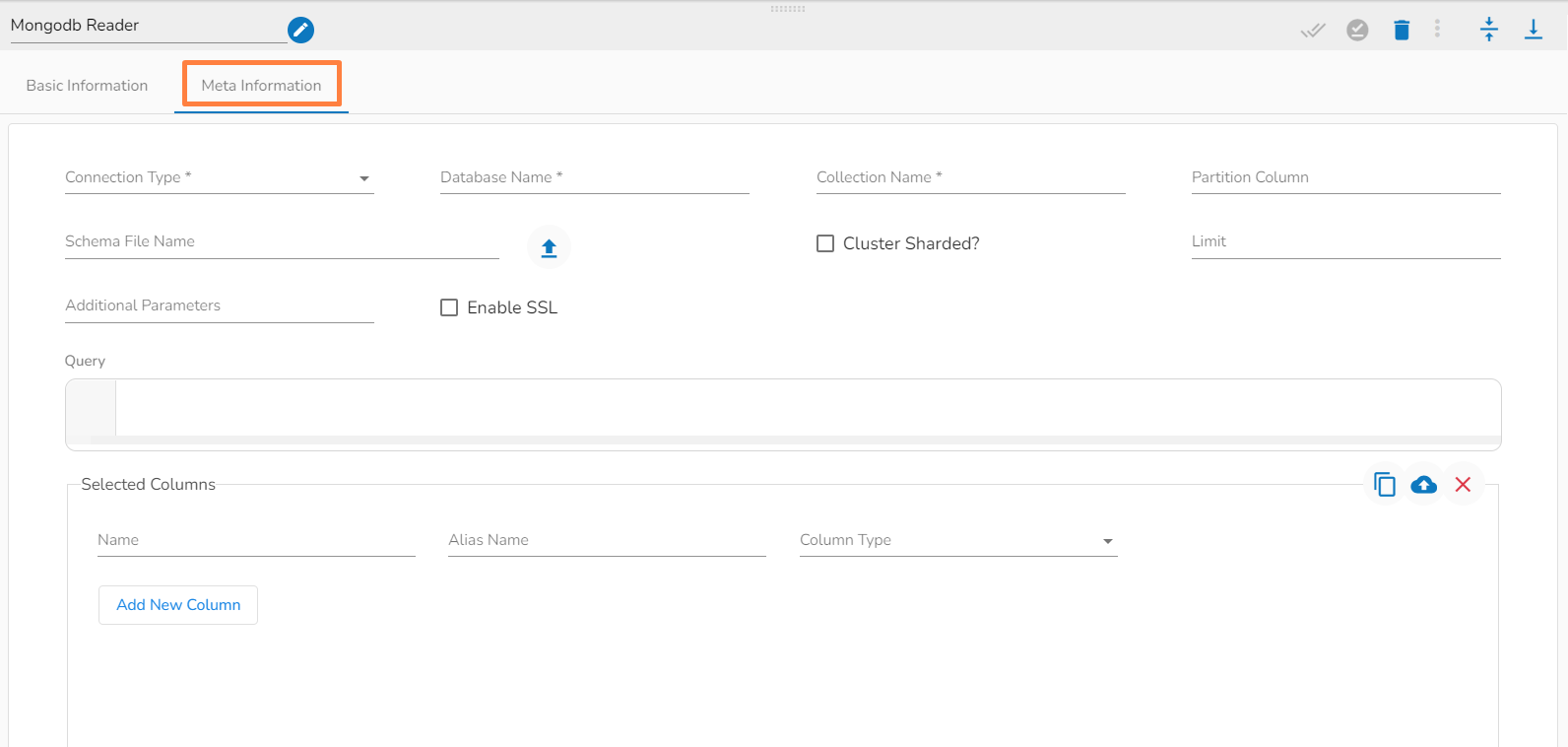

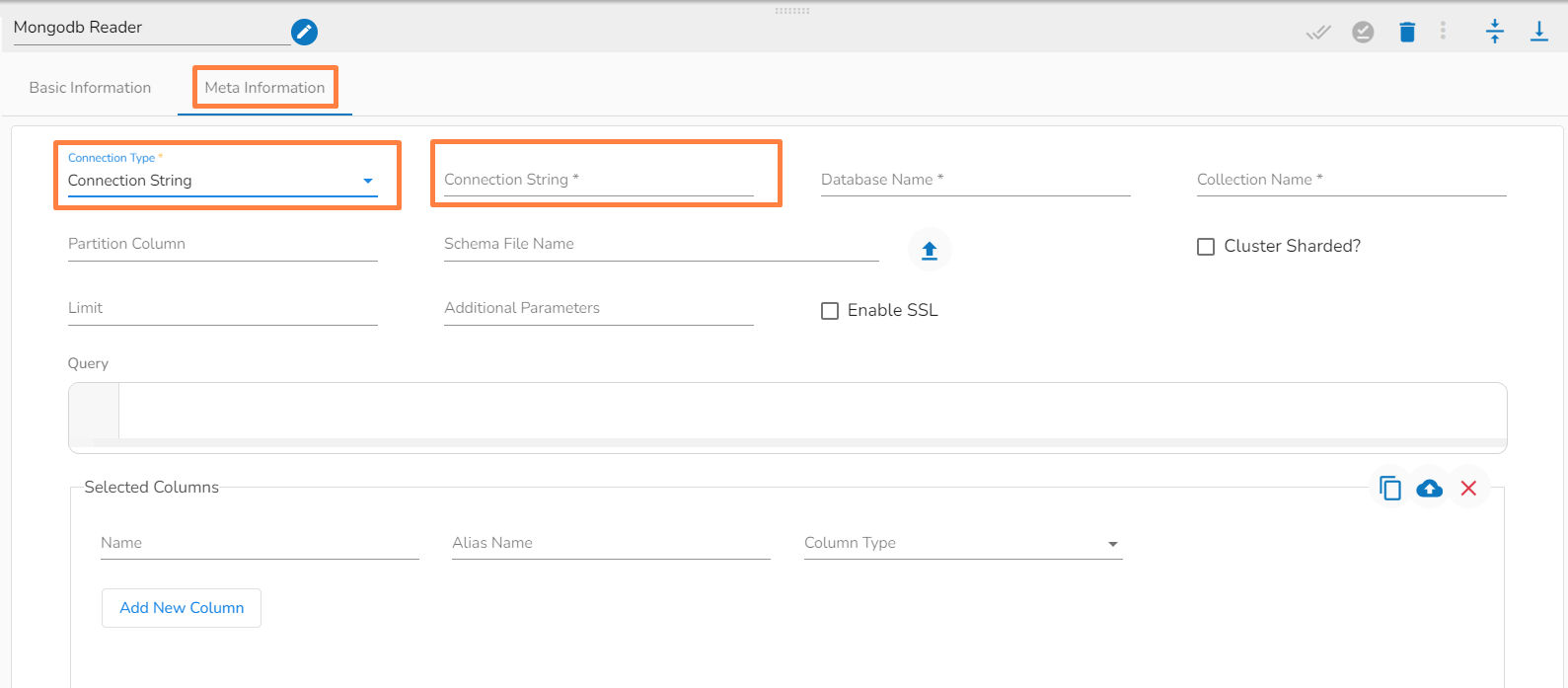

Configuring the Meta Information tab of the MongoDB Reader

Please Note: The fields marked as (*) are mandatory fields.

Connection Type: Select the connection type from the drop-down:

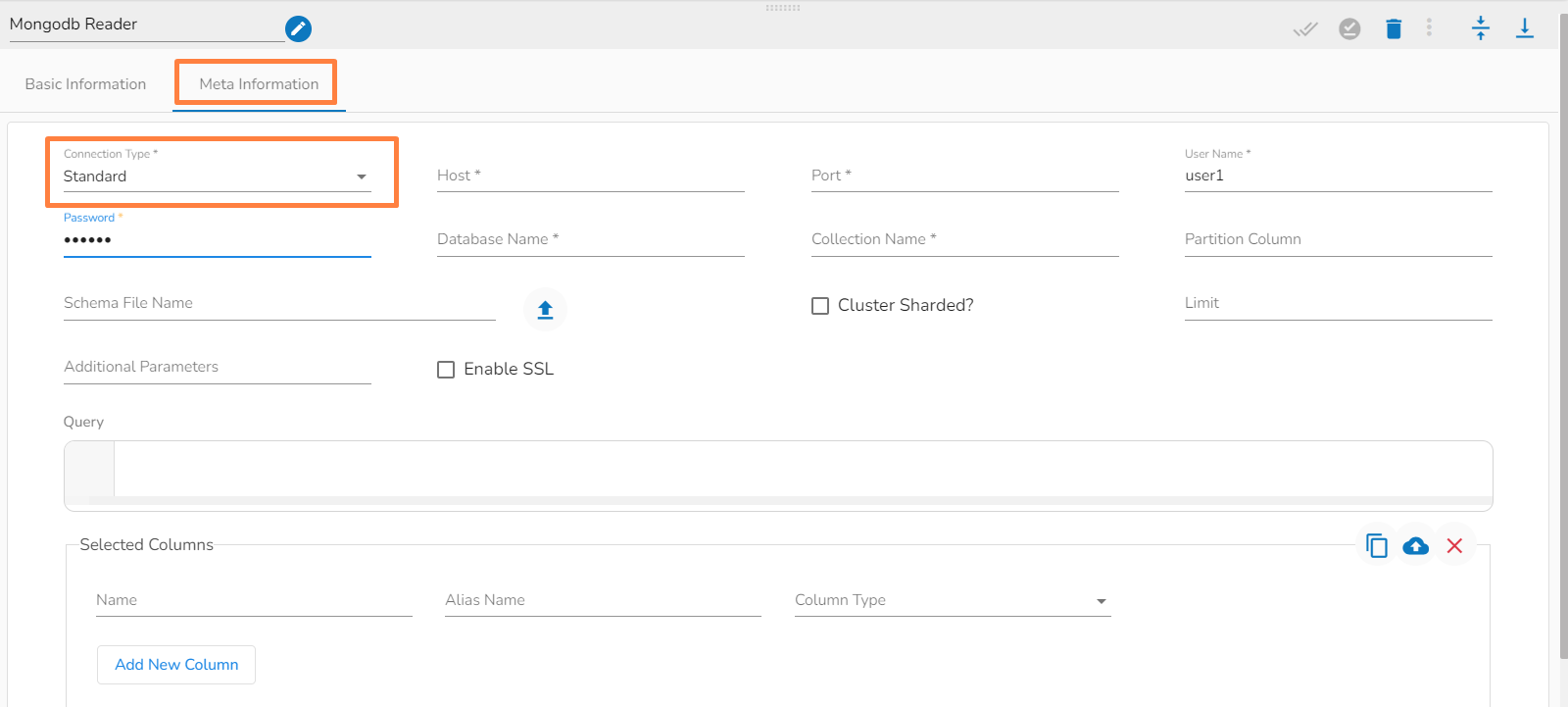

Standard

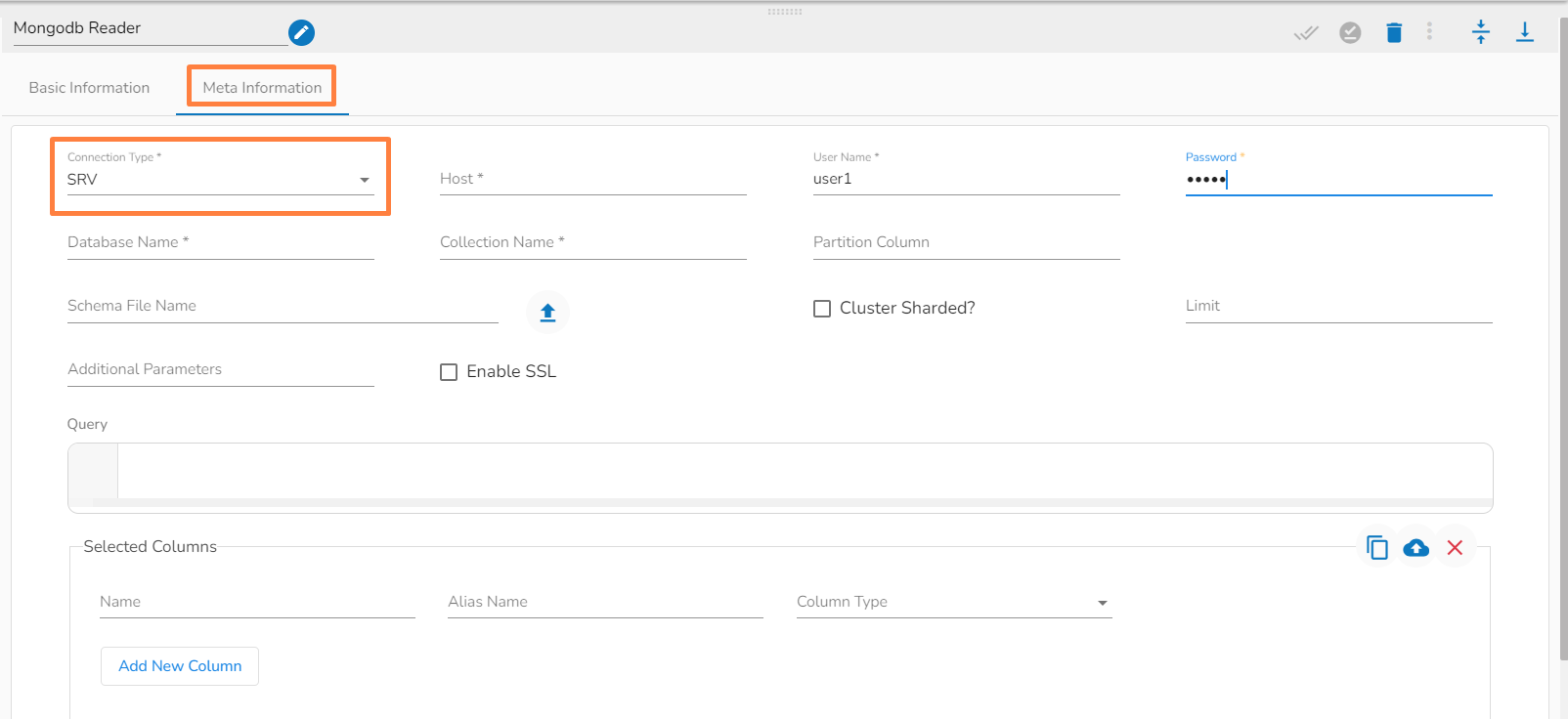

SRV

Connection String

Host IP Address(*): Provide the IP address of the MongoDB host.

Port(*): Provide the port number (this field appears only with the Standard connection type).

Username(*): Provide username.

Password(*): Provide a valid password to access the MongoDB.

Database Name(*): Provide the name of the database from where you wish to read data.

Collection Name(*): Provide the name of the collection.

Partition Column: Specify the name of a column that holds unique numeric values.

Query: Insert a Spark SQL query (a query containing a Join statement is also accepted).

Limit: Set a limit for the number of records to be read from the MongoDB collection.

Schema File Name: Upload a Spark schema file in JSON format.

Cluster Sharded: Enable this option if the data has to be read from a sharded cluster. A sharded cluster in MongoDB is a distributed database architecture that allows horizontal scaling by partitioning data across multiple nodes or servers. The data is split into smaller chunks, which are distributed across the shards of the cluster.

Additional Parameters: Provide the additional parameters to connect with MongoDB. This field is optional.

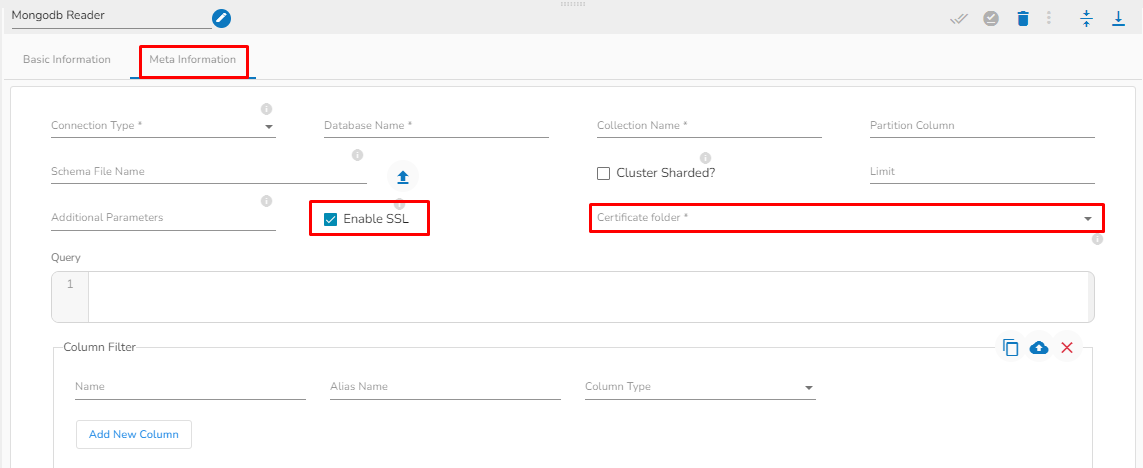

Enable SSL: Check this box to enable SSL for this component. The MongoDB connection credentials will be different if this option is enabled.

Certificate Folder: This option appears when the Enable SSL field is checked. Select the certificate folder from the drop-down menu. The folder contains the files that have been uploaded to the admin settings for connecting to MongoDB with SSL. See the images below for reference.
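The Schema File Name field above expects a Spark schema in JSON format. The following is a minimal sketch of how such a file could be produced, assuming the component accepts the JSON layout that Spark itself uses for a struct schema. The field names (`order_id`, `customer`, `amount`) are hypothetical and only illustrate the structure.

```python
import json

# Hypothetical collection fields; replace them with the fields of your own collection.
# The layout follows the JSON form Spark uses for a struct schema (assumption:
# the component accepts this layout as its schema file).
schema = {
    "type": "struct",
    "fields": [
        {"name": "order_id", "type": "integer", "nullable": False, "metadata": {}},
        {"name": "customer", "type": "string", "nullable": True, "metadata": {}},
        {"name": "amount", "type": "double", "nullable": True, "metadata": {}},
    ],
}

# Serialize the schema; save this text as a .json file before uploading it.
schema_json = json.dumps(schema, indent=2)
print(schema_json)
```

Each entry in `fields` describes one column: its name, its Spark data type, and whether null values are allowed.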

Please Note: The Meta Information fields vary based on the selected Connection Type option.

The following images display the various possibilities of the Meta Information for the MongoDB Reader:

i. Meta Information Tab with Standard as Connection Type.

ii. Meta Information Tab with SRV as Connection Type.

iii. Meta Information Tab with Connection String as Connection Type.
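The difference between the Standard and SRV connection types can be illustrated with the MongoDB connection URI formats. The sketch below is not the component's internal code; `build_mongo_uri` and all host names and credentials are hypothetical. It shows why the Port field applies only to the Standard type: an SRV URI resolves hosts and ports through DNS. With the Connection String type, a complete URI of this kind is presumably entered directly.

```python
from urllib.parse import quote_plus


def build_mongo_uri(connection_type, host, username, password, database, port=None):
    """Build a MongoDB URI for the Standard or SRV connection type (illustrative helper)."""
    # Percent-encode credentials so characters such as '@' or ':' stay valid in the URI.
    credentials = f"{quote_plus(username)}:{quote_plus(password)}"
    if connection_type == "Standard":
        if port is None:
            raise ValueError("Standard connections need a port")
        return f"mongodb://{credentials}@{host}:{port}/{database}"
    if connection_type == "SRV":
        # SRV URIs look up hosts and ports through DNS, so no port is given.
        return f"mongodb+srv://{credentials}@{host}/{database}"
    raise ValueError(f"Unsupported connection type: {connection_type}")


# Hypothetical values for illustration only.
standard_uri = build_mongo_uri("Standard", "10.0.0.5", "reader", "p@ss", "sales", port=27017)
srv_uri = build_mongo_uri("SRV", "cluster0.example.net", "reader", "p@ss", "sales")
print(standard_uri)  # mongodb://reader:p%40ss@10.0.0.5:27017/sales
print(srv_uri)       # mongodb+srv://reader:p%40ss@cluster0.example.net/sales
```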

Column Filter: Use the Column Filter section to read only specific columns from the collection instead of the complete data. Select the columns you want to read. To rename a column, enter the new name in the alias name field; otherwise, keep the alias name the same as the column name. Then select a Column Type from the drop-down menu.

or

Use the Download Data and Upload File options to select the desired columns.

1. Upload File: Users can upload an existing file (CSV or JSON) from their system by using the Upload File icon. The file size must be less than 2 MB.

2. Download Data (Schema): Users can download the schema structure in JSON format by using the Download Data icon.
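The effect of a column filter (select a column, optionally rename it through an alias, and assign a type) can be sketched in plain Python. This is only an illustration of the idea, not the component's implementation. The function `apply_column_filter`, the type names (`string`, `int`, `double`), and the sample document are all assumptions.

```python
def apply_column_filter(documents, column_filter):
    """Keep only the selected columns, rename them to their alias, and cast the values."""
    # Hypothetical type names; the component's real Column Type options may differ.
    casts = {"string": str, "int": int, "double": float}
    result = []
    for doc in documents:
        row = {}
        for col in column_filter:
            value = doc.get(col["name"])
            # Fall back to the original column name when no alias is given.
            alias = col.get("alias") or col["name"]
            row[alias] = None if value is None else casts[col["type"]](value)
        result.append(row)
    return result


# Hypothetical sample data and filter definition.
documents = [{"_id": 1, "name": "Asha", "qty": "3", "note": "gift"}]
column_filter = [
    {"name": "name", "alias": "customer", "type": "string"},
    {"name": "qty", "alias": "qty", "type": "int"},
]
filtered = apply_column_filter(documents, column_filter)
print(filtered)  # [{'customer': 'Asha', 'qty': 3}]
```

Columns that are not listed in the filter (`_id` and `note` here) are dropped from the output.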

After completing the configuration, click the Save Component in Storage icon in the reader configuration panel to save the component.

A notification message appears to confirm that the component configuration was saved successfully.
