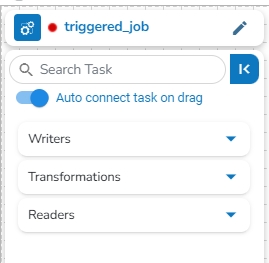

This section provides details about the various categories of the task components which can be used in the Spark Job.

There are three categories of task components available:

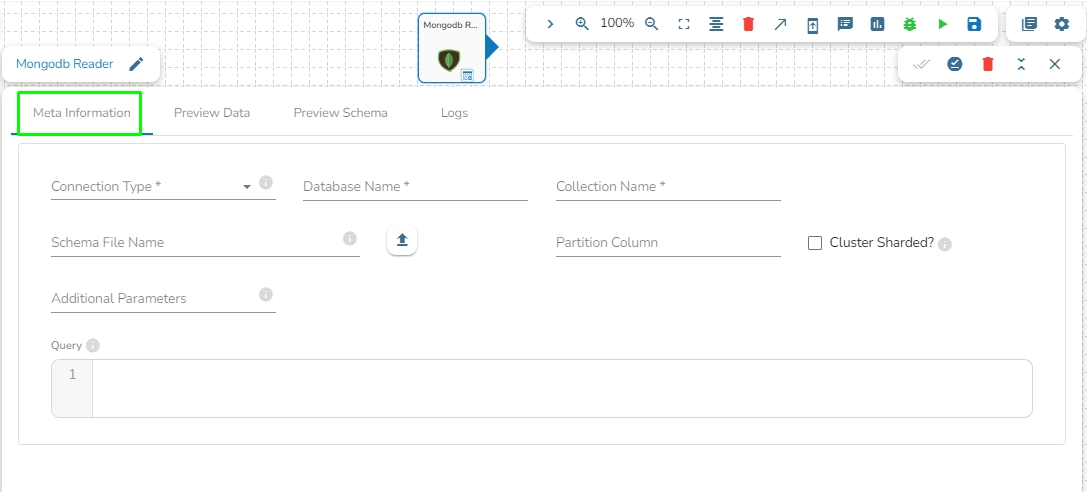

This task reads data from a MongoDB collection.

Drag the MongoDB reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Connection Type: Select the connection type from the drop-down:

Standard

SRV

Connection String

Port (*): Provide the Port number (It appears only with the Standard connection type).

Host IP Address (*): The IP address of the host.

Username (*): Provide a username.

Password (*): Provide a valid password to access the MongoDB.

Database Name (*): Provide the name of the database from which you wish to read data.

Additional Parameters: Provide details of the additional parameters.

Cluster Shared: Enable this option to horizontally partition data across multiple servers.

Schema File Name: Upload Spark Schema file in JSON format.

Query: Provide a MongoDB aggregation query in this field.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged reader task.
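The connection fields described above map naturally onto a MongoDB connection URI. The following is a minimal sketch of how the Standard and SRV connection types differ, not the platform's own logic; the function name `build_mongo_uri`, the sample host names, and the credentials are hypothetical.

```python
from urllib.parse import quote_plus, urlencode


def build_mongo_uri(connection_type, host, username, password, database,
                    port=None, additional_params=None):
    """Assemble a MongoDB URI from the Meta Information fields (illustrative only)."""
    # Percent-encode credentials so characters such as '@' or ':' stay unambiguous.
    credentials = f"{quote_plus(username)}:{quote_plus(password)}"
    if connection_type == "Standard":
        if port is None:
            raise ValueError("Port is required for the Standard connection type")
        uri = f"mongodb://{credentials}@{host}:{port}/{database}"
    elif connection_type == "SRV":
        # SRV URIs resolve hosts and ports through DNS, so no port is supplied.
        uri = f"mongodb+srv://{credentials}@{host}/{database}"
    else:
        raise ValueError(f"Unsupported connection type: {connection_type}")
    if additional_params:
        uri += "?" + urlencode(additional_params)
    return uri


# Hypothetical sample values.
standard_uri = build_mongo_uri("Standard", "10.0.0.5", "reader", "p@ss", "sales",
                               port=27017, additional_params={"authSource": "admin"})
srv_uri = build_mongo_uri("SRV", "cluster0.example.net", "reader", "p@ss", "sales")
```

This also shows why the Port field is only relevant to the Standard connection type: an SRV URI carries no port.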

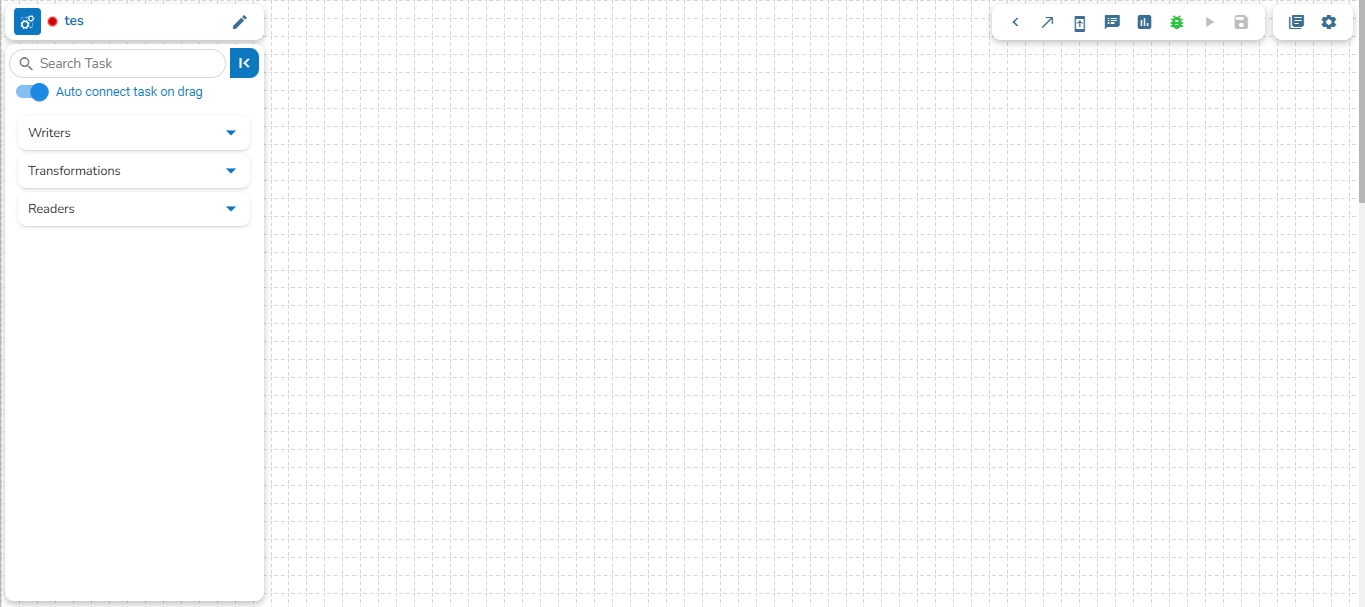

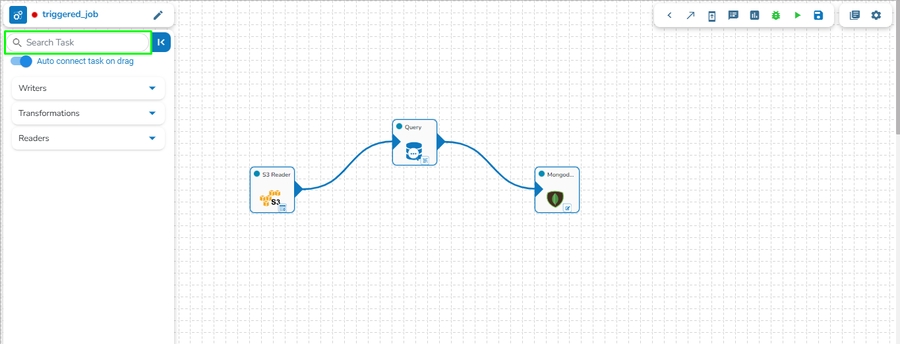

The Job Editor Page provides the user with all the necessary options and components to add a task and eventually create a Job workflow.

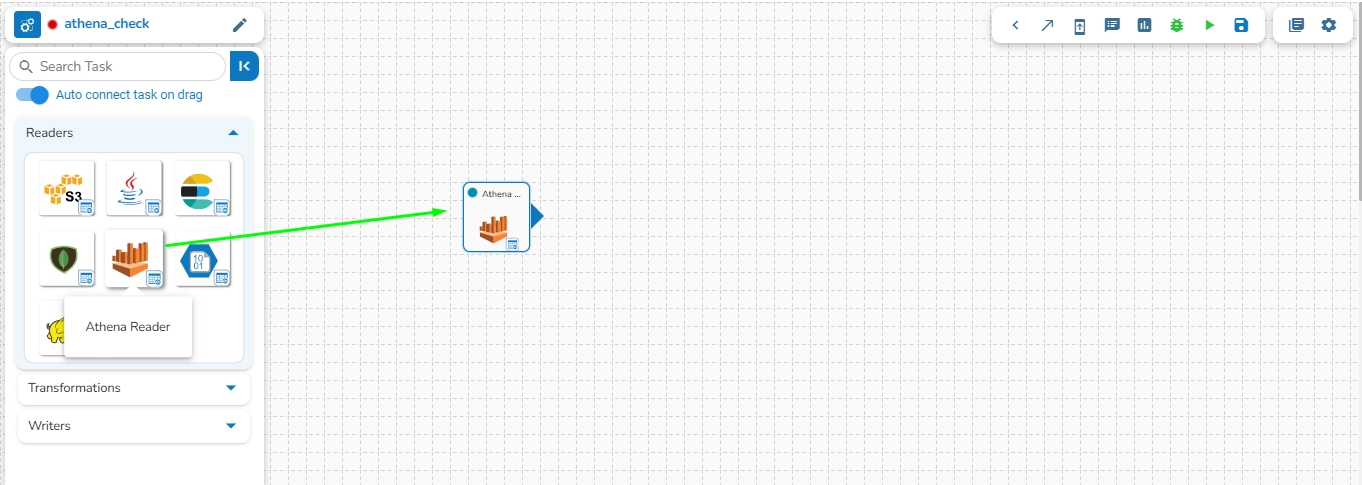

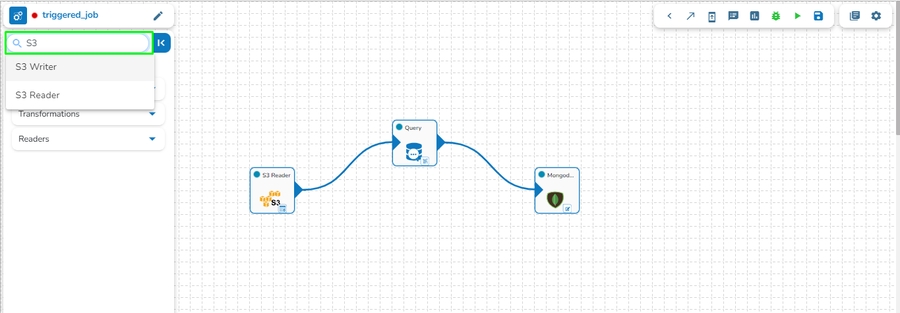

Once the Job is saved in the Job list, the user can add tasks to the canvas. The user can drag the required tasks to the canvas and configure them to create a Job workflow or dataflow.

The Job Editor appears, displaying the Task Palette that contains the various components available as Tasks.

Navigate to the Job List page.

It lists all the jobs and shows in the Type column whether each one is a Spark Job or a PySpark Job.

Select a Job from the displayed list.

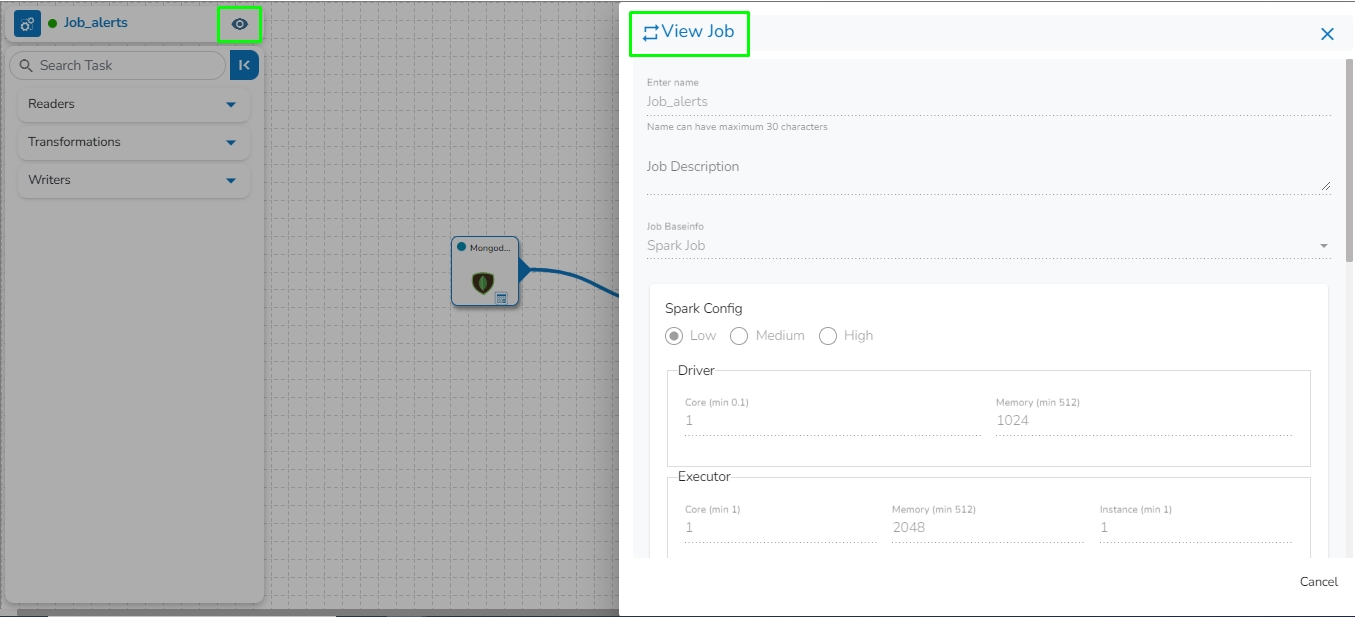

Click the View icon for the Job.

Please Note: Generally, the user performs this step in continuation of the Job creation. If the user has left the Job Editor, the above steps help to access it again.

The Job Editor opens for the selected Job.

Drag and drop the required new task, change an existing task's meta information, or change the task configuration as required. (E.g., the DB Reader is dragged to the workspace in the image given below):

Click on the dragged task icon.

The task-specific fields open, asking for the meta information of the dragged component.

Open the Meta Information tab and configure the required information for the dragged component.

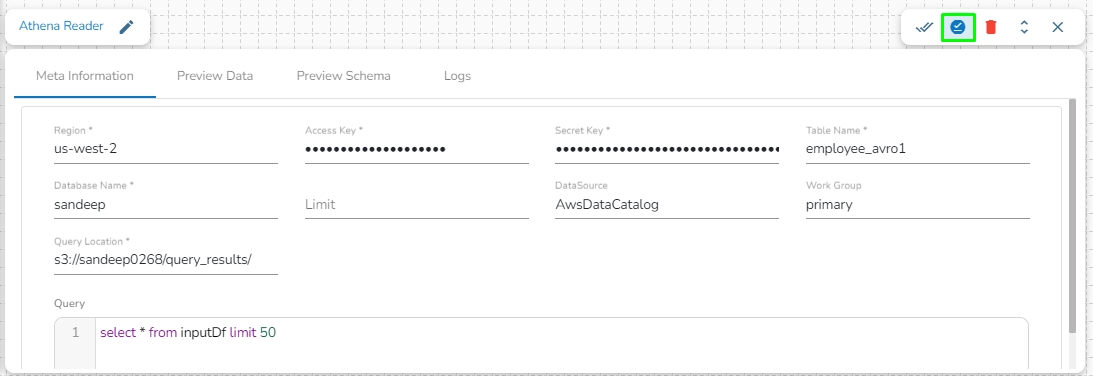

Click the given icon to validate the connection.

Click the Save Task in Storage icon.

A notification message appears.

A dialog window opens to confirm the action of job activation.

Click the YES option to activate the job.

A success message appears confirming the activation of the job.

Once the job is activated, the View icon replaces the Edit option for the job. Click the View icon to see the job details while the job is running.

Please Note:

If the job is not running in Development mode, there will be no data in the preview tab of tasks.

The Status of a Job changes on the Job List page when the Job is running in Development mode or is activated.

Users can get a sample of the task data under the Preview Data tab provided for the tasks in the Job Workflows.

Navigate to the Job Editor page for a selected job.

Open a task from where you want to preview records.

Click the Preview Data tab to view the content.

Please Note:

Users can preview, download, and copy up to 10 data entries.

Click the Download icon to download the data in CSV, JSON, or Excel format.

Click on the Copy option to copy the data as a list of dictionaries.

Users can drag and drop the column separators to adjust column widths for a personalized data view.

This feature helps accommodate various data lengths and user preferences.

The event data is displayed in a structured table format.

The table supports sorting and filtering to enhance data usability. The previewed data can be filtered based on the Latest, Beginning, and Timestamp options.

The Timestamp filter option redirects the user to select a timestamp from the Time Range window. The user can select a start and end date or choose from the available time ranges to apply and get a data preview.
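The Copy option described above yields the previewed records as a list of dictionaries. As a minimal sketch of what can be done with such copied data, the snippet below serializes it to CSV text; the sample rows and the helper name `rows_to_csv` are hypothetical and not part of the product.

```python
import csv
import io

# Hypothetical sample of previewed records copied as a list of dictionaries.
copied_rows = [
    {"id": 1, "city": "Pune", "amount": 120.5},
    {"id": 2, "city": "Delhi", "amount": 80.0},
]


def rows_to_csv(rows):
    """Serialize a list of dictionaries to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = rows_to_csv(copied_rows)
```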

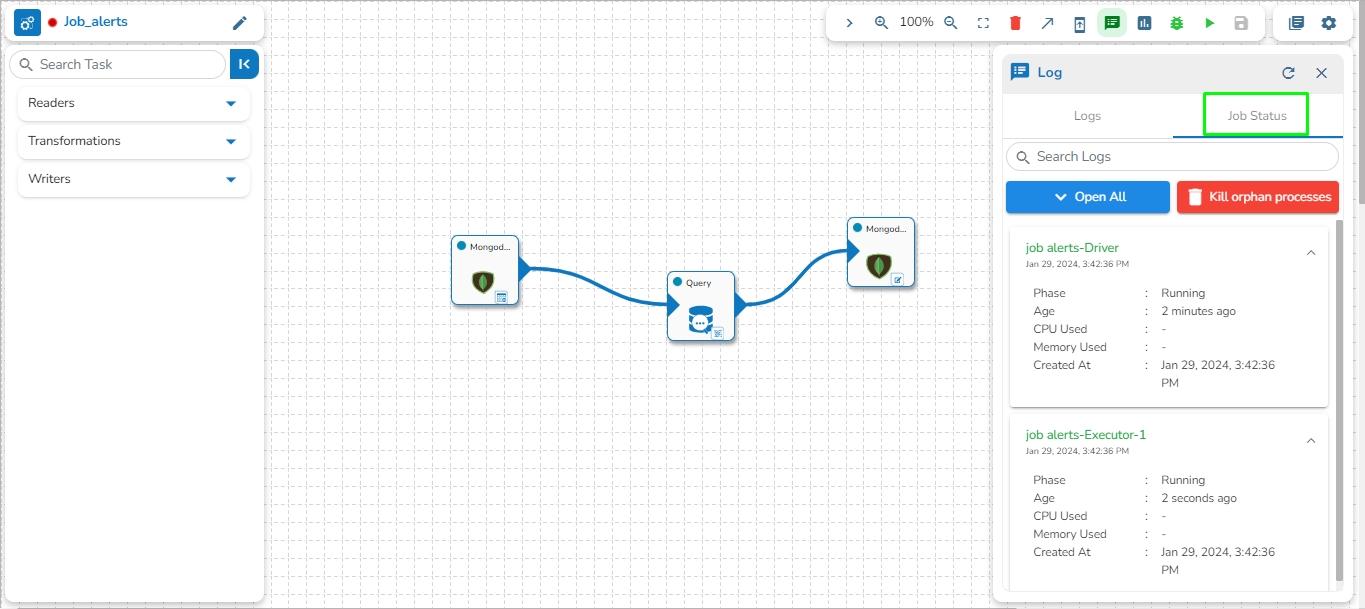

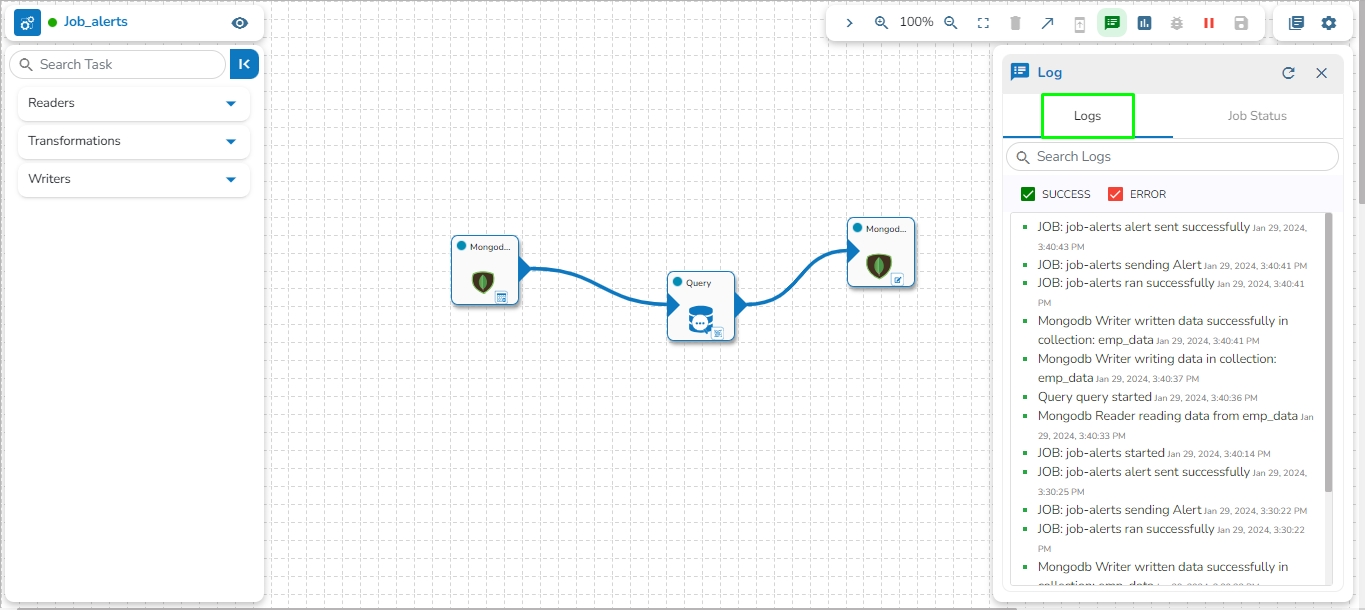

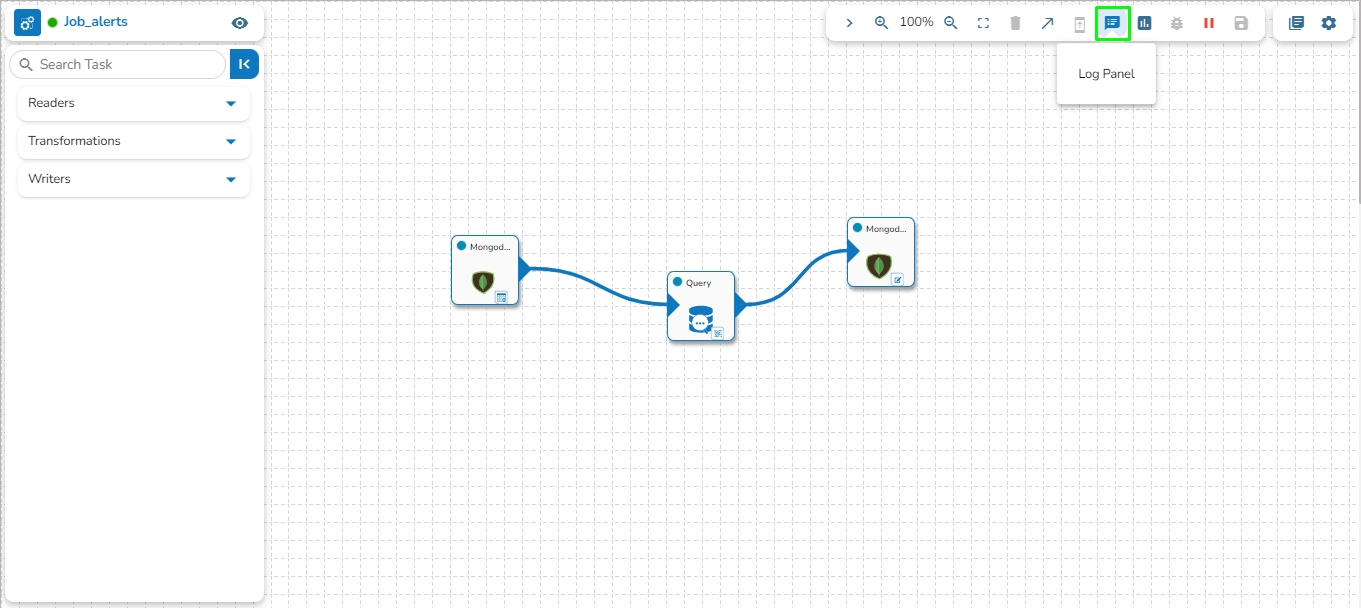

The Toggle Log Panel displays the Logs and Advanced Logs tabs for the Job Workflows.

Navigate to the Job Editor page.

Click the Toggle Log Panel icon on the header.

A panel toggles open, displaying the collective logs of the job under the Logs tab.

Select the Job Status tab to display the pod status of the complete Job.

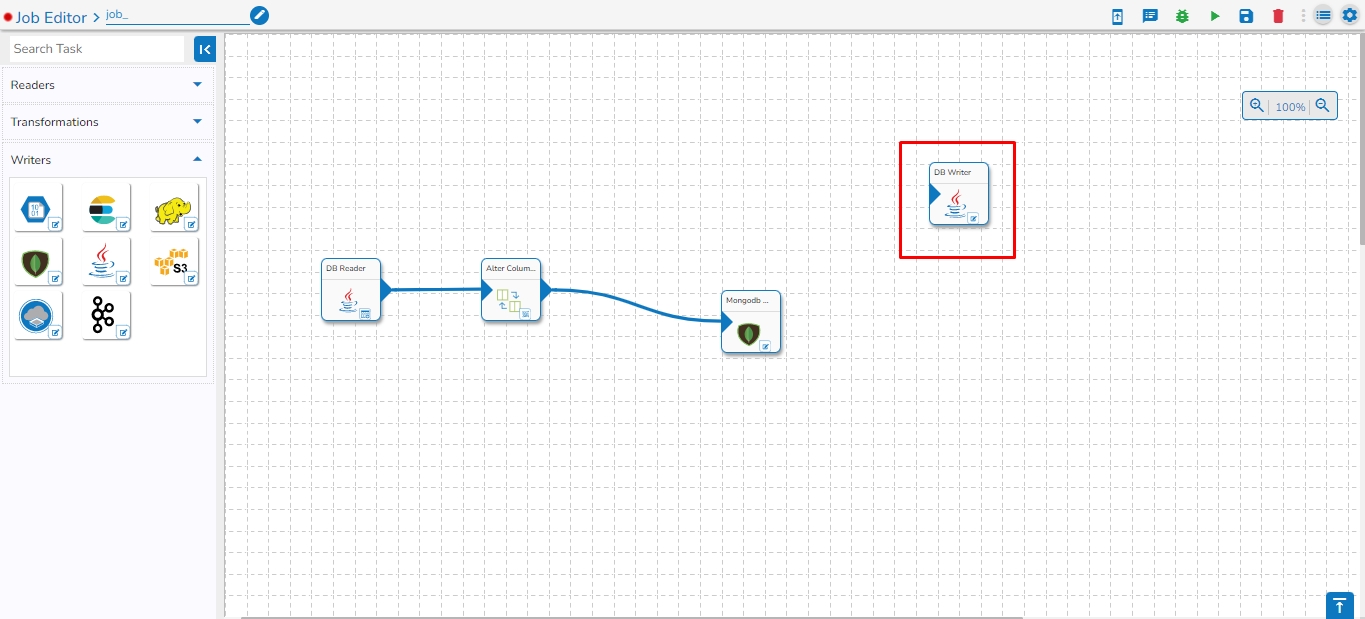

Please Note: An orphan task, that is, a task placed in the Job Editor workspace that is not in use, causes the entire Job to fail. In the image below, the highlighted DB writer task is an orphan task; if the Job is activated, it will fail because the orphan DB writer task is not getting any input. Avoid leaving orphan tasks in the Job Editor workspace.
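The orphan-task rule above can be pictured as a simple graph check. The sketch below is only an illustration under stated assumptions: the workflow is modeled as a dictionary of task names and a list of connections, and the task kinds (`reader`, `writer`) and the helper `find_orphan_tasks` are hypothetical, not the platform's internal representation.

```python
def find_orphan_tasks(tasks, connections):
    """Return names of non-reader tasks that receive no input from any other task.

    tasks: dict mapping task name -> kind (hypothetical kinds: "reader", "writer").
    connections: list of (source_task, target_task) tuples.
    """
    tasks_with_input = {target for _, target in connections}
    return sorted(
        name for name, kind in tasks.items()
        if kind != "reader" and name not in tasks_with_input
    )


# Hypothetical workflow: one reader feeding one writer, plus an unconnected writer.
tasks = {"db_reader": "reader", "db_writer_1": "writer", "db_writer_2": "writer"}
connections = [("db_reader", "db_writer_1")]
orphans = find_orphan_tasks(tasks, connections)
```

Here `db_writer_2` is flagged because nothing feeds it, which mirrors the highlighted DB writer in the note.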

Navigate to the Job Editor page.

The Job Version update button will display a red dot indicating that new updates are available for the selected job.

The Confirm dialog box appears.

Click the YES option.

After the job is upgraded, the Upgrade Job Version button gets disabled. It will display that the job is up to date and no updates are available.

Job Version details

Displays the latest versions for the Jobs upgrade.

Displays Jobs logs and Job Status tab under Log panel.

Redirects to the Job Monitoring page

Development Mode

Runs the job in development mode.

Activate Job

Activates the current Job.

Update Job

Updates the current Job.

Edit Job

Edits the job name or configurations.

Delete Job

Deletes the current Job.

Push Job

Push the selected job to GIT.

Redirects to the List Job page.

Redirects to the Settings page.

Opens the Job in Full screen

Arranges the Job tasks in a formatted layout.

Zoom in the Job workspace.

Zoom out the Job workspace.

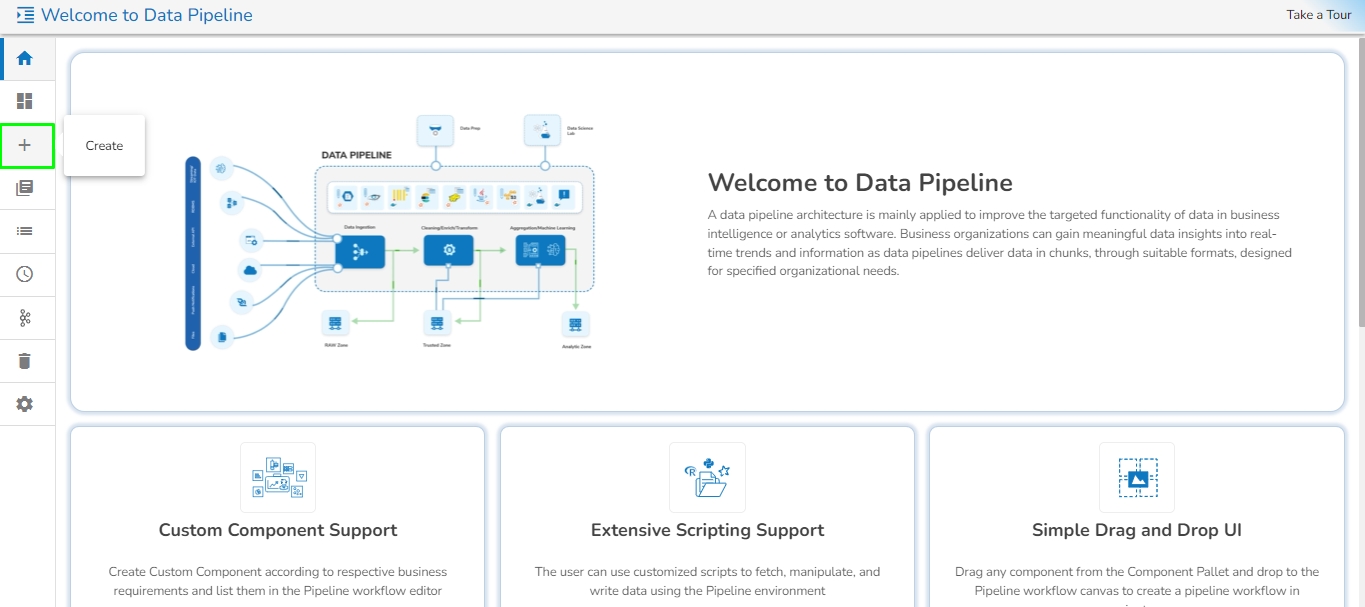

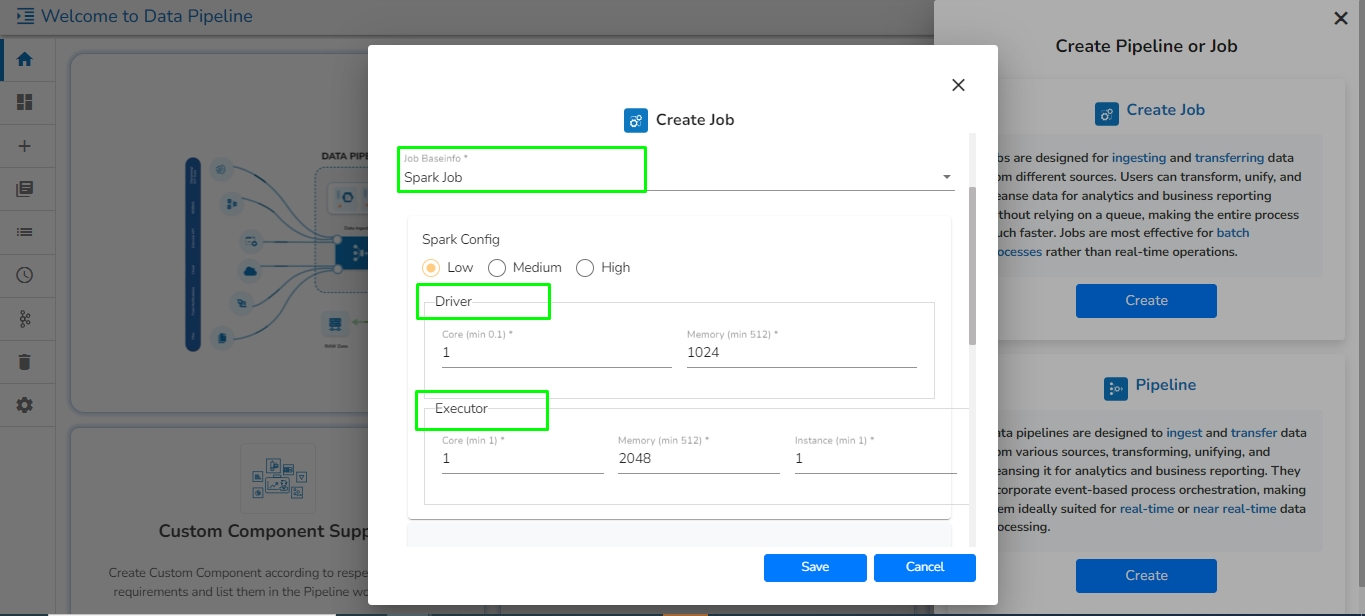

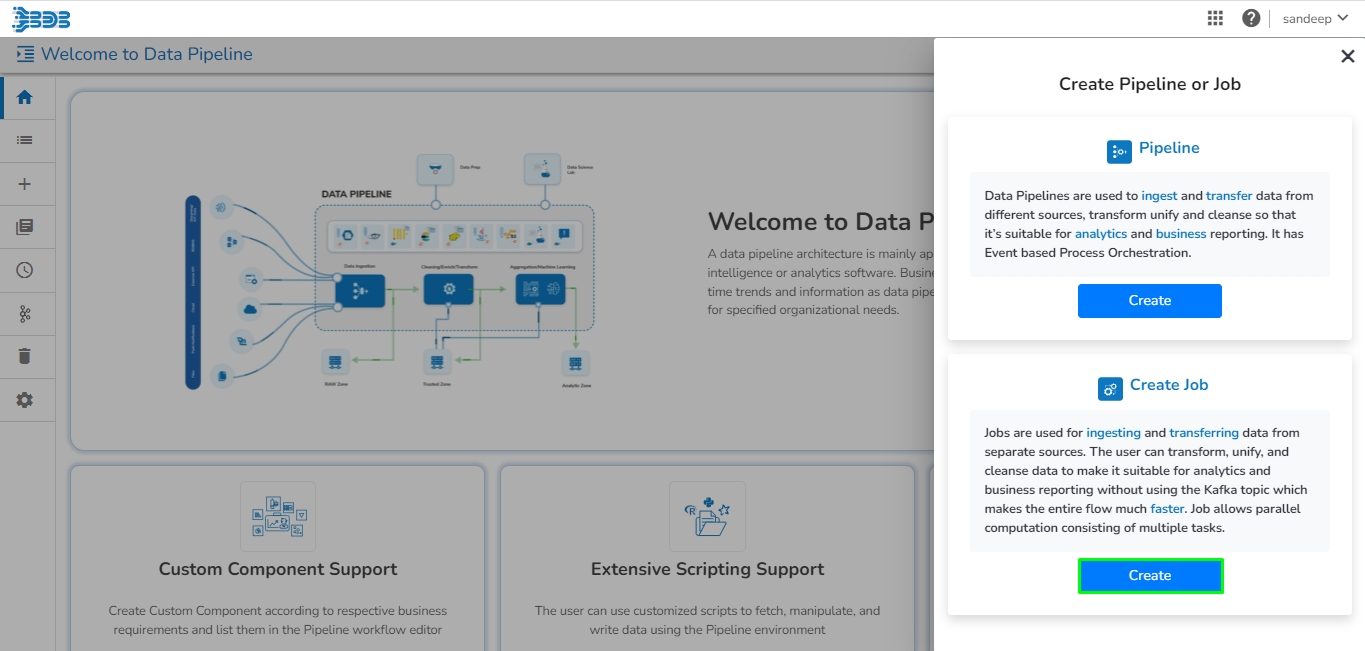

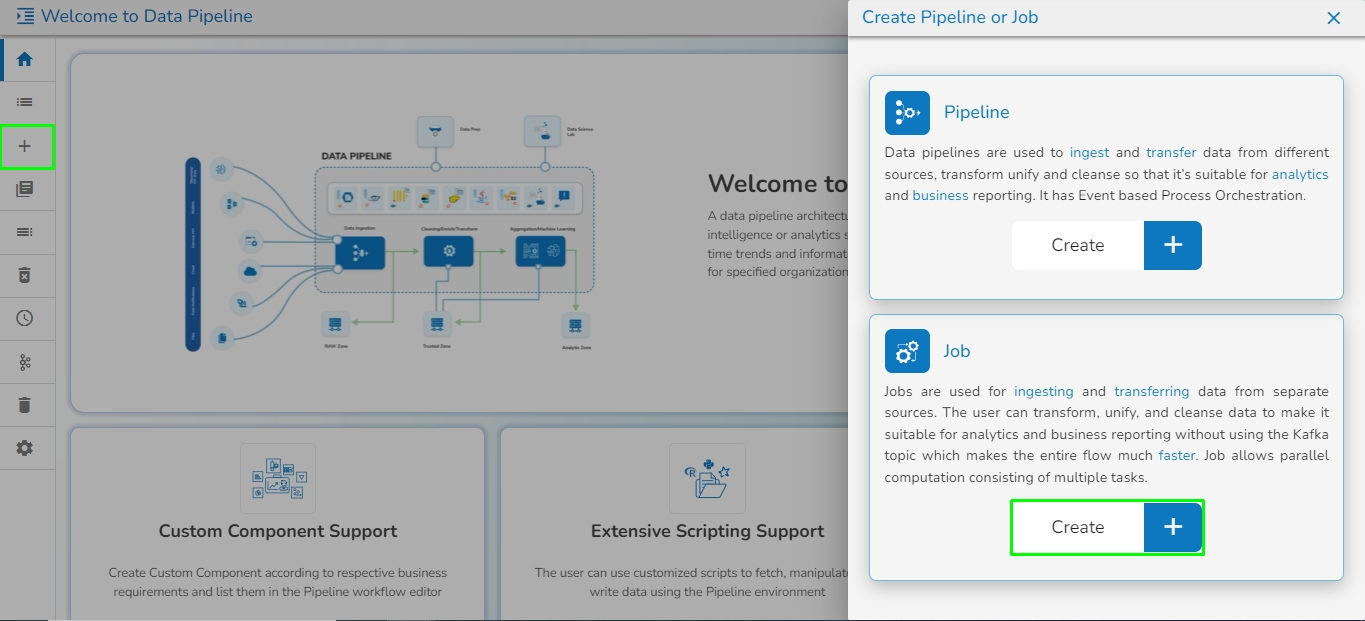

This section provides detailed information on the Jobs to make your data process faster.

Jobs are used for ingesting and transferring data from separate sources. The user can transform, unify, and cleanse data to make it suitable for analytics and business reporting without using a Kafka topic, which makes the entire flow much faster.

Check out the given demonstration to understand how to create and activate a job.

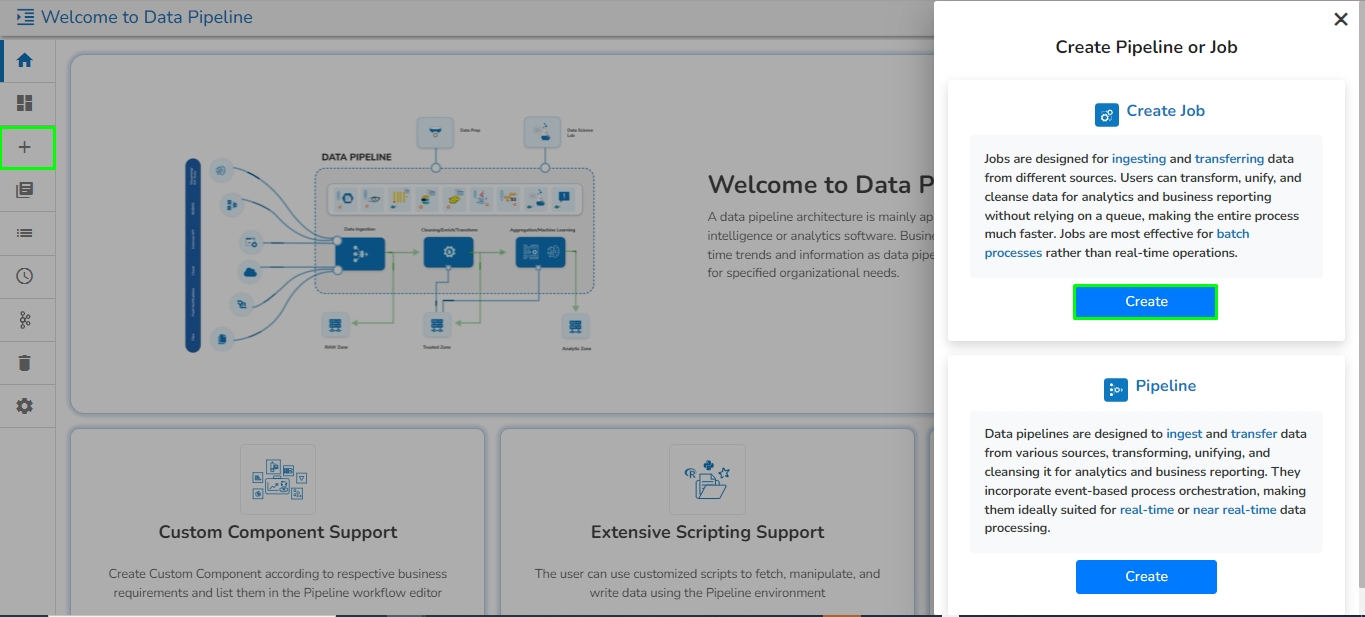

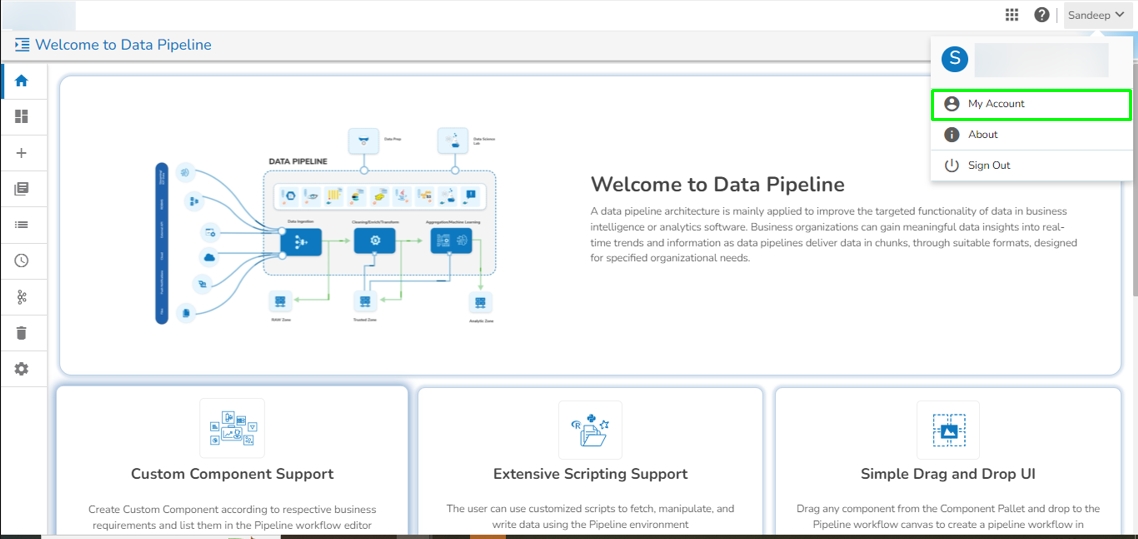

Navigate to the Data Pipeline homepage.

Click on the Create icon.

Navigate to the Create Pipeline or Job interface.

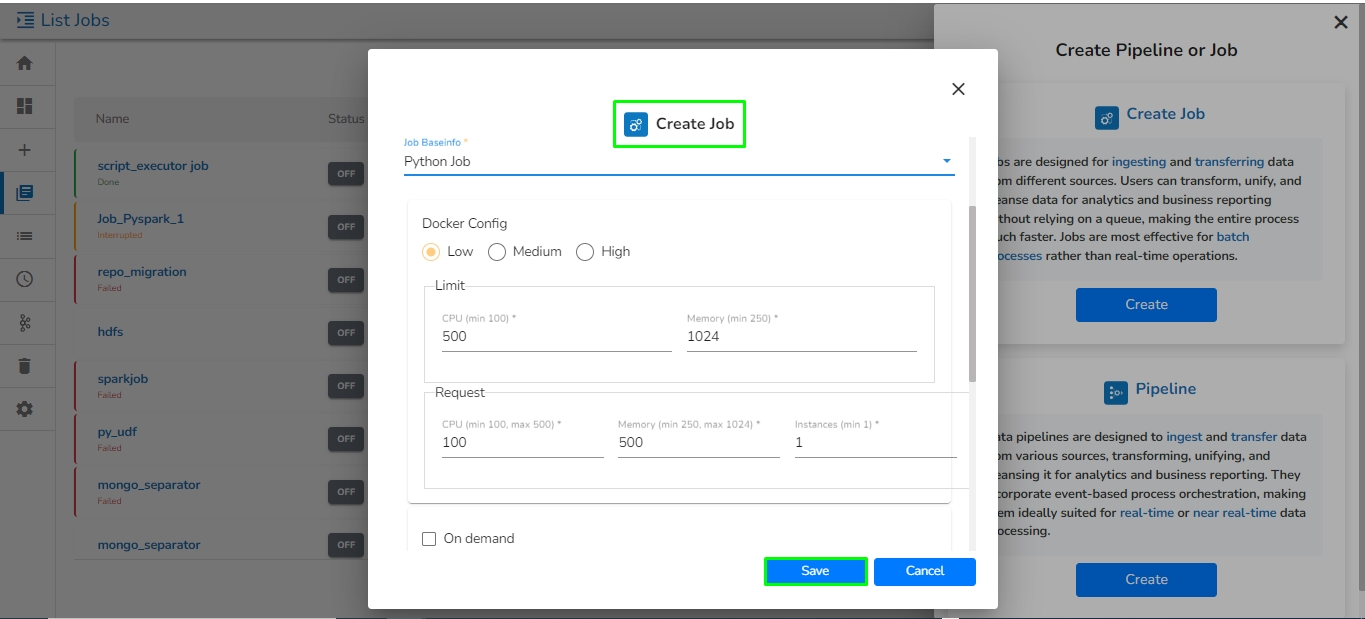

The New Job dialog box appears redirecting the user to create a new Job.

Enter a name for the new Job.

Describe the Job (optional).

Job Baseinfo: In this field, there are three options:

Trigger By: There are 2 options for triggering a job on success or failure of a job:

Success Job: On successful execution of the selected job the current job will be triggered.

Failure Job: On failure of the selected job the current job will be triggered.

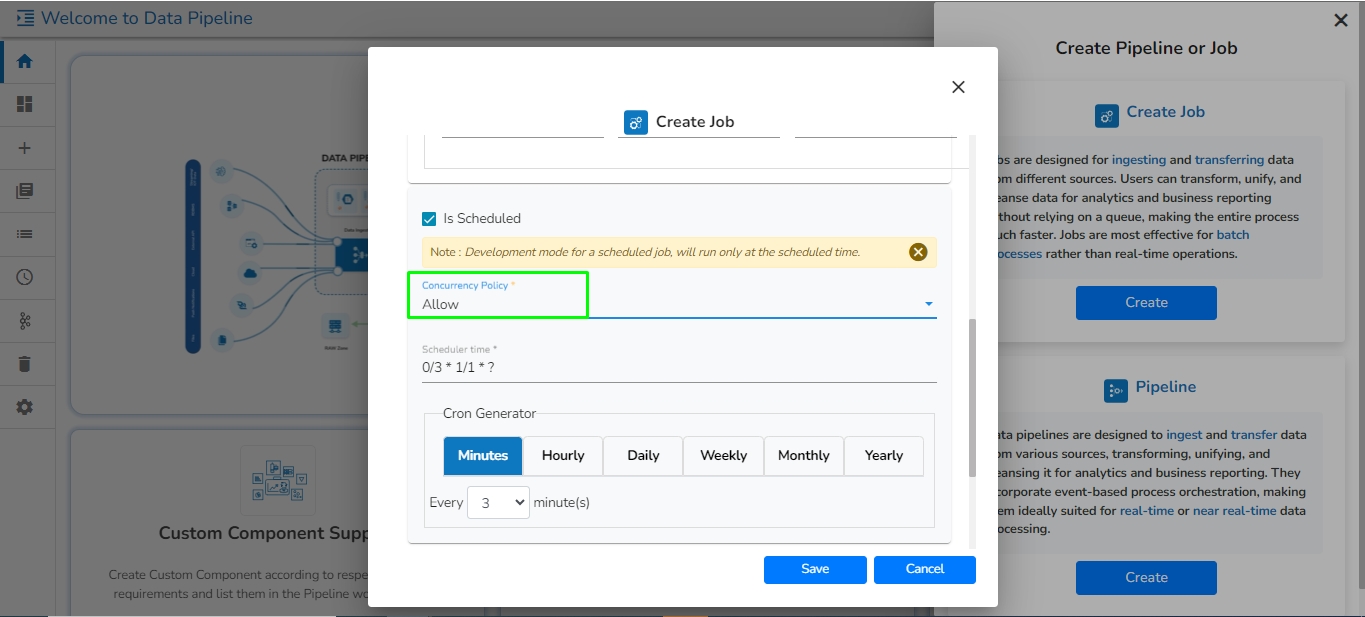

Is Scheduled?

A job can be scheduled for a particular time. The job is then triggered every time that scheduled time is reached.

Jobs must be scheduled in UTC.
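Because schedules are expressed in UTC, a local time has to be converted before it is entered. The following is a minimal sketch of that conversion; the local offset of UTC+05:30 and the helper name `local_to_utc` are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumption for illustration: the user's local zone is UTC+05:30.
LOCAL_ZONE = timezone(timedelta(hours=5, minutes=30))


def local_to_utc(year, month, day, hour, minute, local_zone=LOCAL_ZONE):
    """Convert a local wall-clock time to the equivalent UTC time."""
    local_time = datetime(year, month, day, hour, minute, tzinfo=local_zone)
    return local_time.astimezone(timezone.utc)


# A job intended to run at 09:00 local time.
utc_time = local_to_utc(2024, 1, 15, 9, 0)
schedule_hh_mm = utc_time.strftime("%H:%M")
```

With these sample values, a job meant to run at 09:00 local time would be scheduled for 03:30 UTC.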

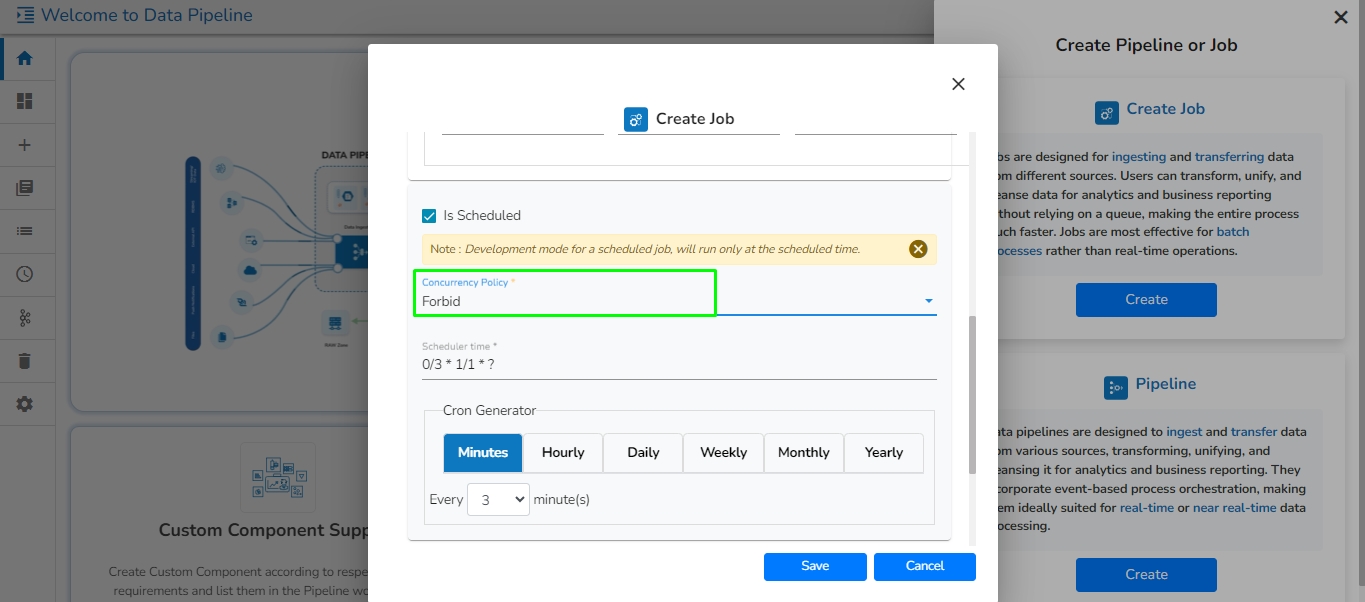

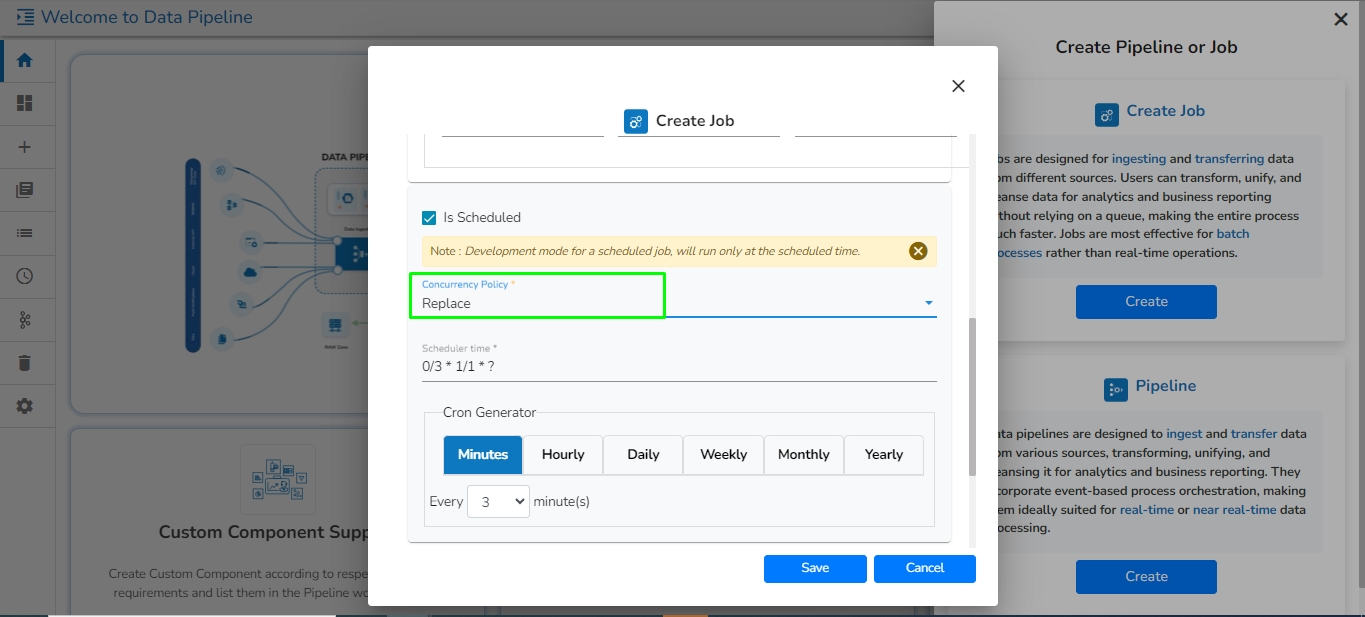

Concurrency Policy: Concurrency policy schedulers are responsible for managing the execution and scheduling of concurrent tasks or threads in a system. They determine how resources are allocated and utilized among the competing tasks. Different scheduling policies exist to control the order, priority, and allocation of resources for concurrent tasks.

Please Note:

Concurrency Policy will appear only when "Is Scheduled" is enabled.

If the job is scheduled, then the user has to activate it for the first time. Afterward, the job will automatically be activated each day at the scheduled time.

There are three Concurrency Policies available:

Allow: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will run in parallel with the previous tasks.

Forbid: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the next task will wait until all the previous tasks are completed.

Replace: If a job is scheduled for a specific time and the first process is not completed before the next scheduled time, the previous task will be terminated and the new task will start processing.
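The three policies above differ only in what happens when a scheduled time arrives while the previous run is still active. The sketch below restates that behavior as a small lookup; it is a simplified model of the descriptions above, and the function name `resolve_overlap` and its return format are hypothetical.

```python
def resolve_overlap(policy, previous_run_active):
    """Return what happens to the previous and the next run at a scheduled time."""
    if not previous_run_active:
        # No overlap: the policy does not matter and the next run simply starts.
        return {"previous": "finished", "next": "start"}
    outcomes = {
        "Allow": {"previous": "keep running", "next": "start in parallel"},
        "Forbid": {"previous": "keep running", "next": "wait"},
        "Replace": {"previous": "terminate", "next": "start"},
    }
    if policy not in outcomes:
        raise ValueError(f"Unknown concurrency policy: {policy}")
    return outcomes[policy]


allow = resolve_overlap("Allow", previous_run_active=True)
forbid = resolve_overlap("Forbid", previous_run_active=True)
replace = resolve_overlap("Replace", previous_run_active=True)
```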

Spark Configuration

Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

This feature is used to deploy the Job with high, medium, or low-end configurations according to the velocity and volume of data that the Job must handle.

Also, provide the resources for the Driver and Executor according to the requirement.

Alert: There are 2 options for sending an alert:

Success: On successful execution of the configured job, the alert will be sent to the selected channel.

Failure: On failure of the configured job, the alert will be sent to the selected channel.

Please go through the given link to configure the Alerts in Job: Job Alert

Click the Save option to create the job.

A success message appears to confirm the creation of a new job.

The Job Editor page opens for the newly created job.

Please Note:

The Trigger by feature will not work if the selected Trigger by job is running in the Development mode. Trigger by feature will only work when the selected Trigger by Job is activated.

By clicking the Save option, the user gets redirected to the job workflow editor.

Click the Activate Job icon to activate the job (it appears only after the newly created job gets successfully updated).

Jobs can also be run in Development mode. In Development mode, the user can preview only 10 records in the Preview tab of a task, and any writer task used in the job writes only 10 records to the table of the given database.

Please Note: Click the Delete icon from the Job Editor page to delete the selected job. The deleted job gets removed from the Job list.

A notification message appears stating that the version is upgraded.

All the available Reader Task components are included in this section.

Readers are a group of tasks that can read data from different databases and cloud storage services. In Jobs, all the tasks run in real-time.

There are eight (8) Reader tasks in Jobs. All the Reader tasks contain the following tabs:

Meta Information: Configure the meta information in the same way as for pipeline components.

Preview Data: Up to ten (10) random records can be previewed in this tab, and only when the task is running in Development mode.

Preview schema: Spark schema of the reading data will be shown in this tab.

Logs: Logs of the tasks will display here.

All the available Writer Task components for a Job are explained in this section.

Writers are a group of components that can write data to different databases and cloud storage services.

There are eight (8) Writer tasks in Jobs. All the Writer tasks have the following tabs:

Meta Information: Configure the meta information in the same way as for pipeline components.

Preview Data: Up to ten (10) random records can be previewed in this tab, and only when the task is running in Development mode.

Preview schema: Spark schema of the data will be shown in this tab.

Logs: Logs of the tasks will display here.

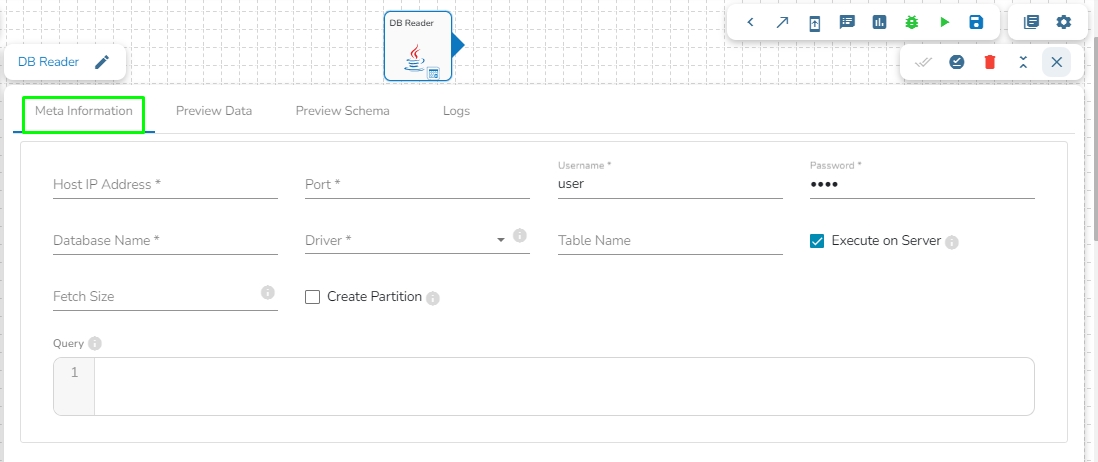

This task is used to read the data from the following databases: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Drag the DB reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the Host IP Address for the selected driver.

Port: Enter the port for the given IP Address.

Database name: Enter the Database name.

Table name: Provide a single table name or multiple table names. If multiple table names are given, separate them with commas (,).

User name: Enter the user name for the provided database.

Password: Enter the password for the provided database.

Driver: Select the driver from the drop down. There are 7 drivers supported here: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Fetch Size: Provide the maximum number of records to be processed in one execution cycle.

Create Partition: Enable this option to enhance performance. It creates a sequence of indexing. Once this option is selected, the operation will not execute on the server.

Partition By: This option will appear once create partition option is enabled. There are two options under it:

Auto Increment: The number of partitions will be incremented automatically.

Index: The number of partitions will be incremented based on the specified Partition column.

Query: Enter the Spark SQL query in this field for the given table or tables. Refer to the image below for querying multiple tables.

Please Note:

The ClickHouse driver in the Spark components will use HTTP Port and not the TCP port.

When reading data from multiple tables (join queries), you can write the join query directly without specifying the table names, because only one of the Table name and Query fields is required.

Please click the Save Task In Storage icon to save the configuration for the dragged reader task.
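To give a feel for how partitioning on an indexed numeric column can split one database read into several smaller ones, here is a minimal sketch that turns a value range into per-partition filter predicates. It is an assumption that the Create Partition option behaves along these lines; the function `partition_predicates`, the column name `id`, and the bounds are hypothetical and do not describe the platform's implementation.

```python
def partition_predicates(column, lower, upper, num_partitions):
    """Split the range [lower, upper) of a numeric column into filter predicates."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    if num_partitions == 1:
        return ["1 = 1"]  # A single partition reads everything.
    stride = (upper - lower) // num_partitions
    if stride < 1:
        raise ValueError("Range is too small for the requested number of partitions")
    # Interior boundaries between consecutive partitions.
    bounds = [lower + stride * i for i in range(1, num_partitions)]
    predicates = []
    for i in range(num_partitions):
        if i == 0:
            # The first partition also picks up NULLs so that no row is lost.
            predicates.append(f"{column} < {bounds[0]} OR {column} IS NULL")
        elif i == num_partitions - 1:
            predicates.append(f"{column} >= {bounds[-1]}")
        else:
            predicates.append(f"{column} >= {bounds[i - 1]} AND {column} < {bounds[i]}")
    return predicates


# Hypothetical example: split ids 0..100 across four partitions.
predicates = partition_predicates("id", 0, 100, 4)
```

Each predicate could then be read by a separate worker, which is where the performance gain comes from.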

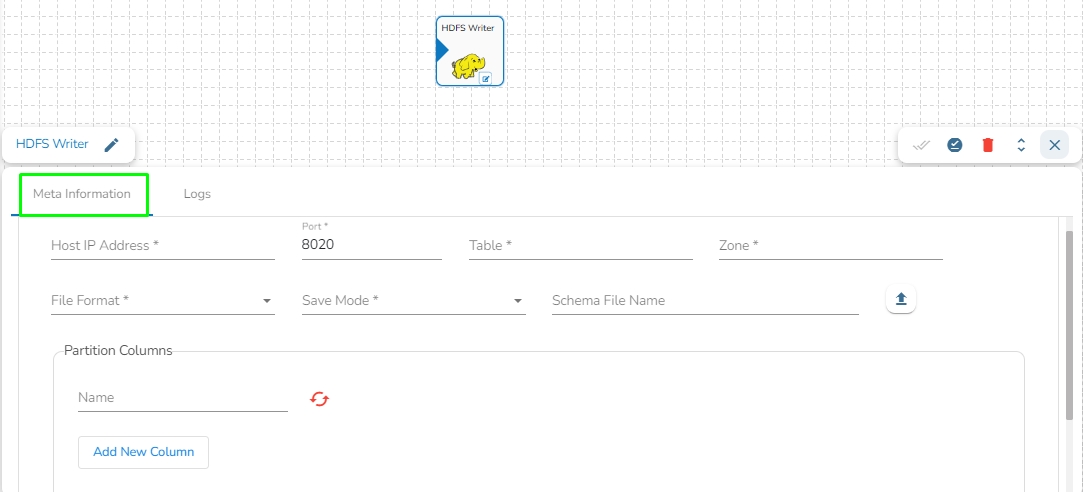

HDFS stands for Hadoop Distributed File System. It is a distributed file system designed to store and manage large data sets in a reliable, fault-tolerant, and scalable way. HDFS is a core component of the Apache Hadoop ecosystem and is used by many big data applications.

This task writes data to HDFS (Hadoop Distributed File System).

Drag the HDFS writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the host IP address for HDFS.

Port: Enter the Port.

Table: Enter the table name where the data has to be written.

Zone: Enter the Zone for HDFS in which the data has to be written. Zone is a special directory whose contents will be transparently encrypted upon write and transparently decrypted upon read.

File Format: Select the file format in which the data has to be written:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the save mode.

Schema file name: Upload spark schema file in JSON format.

Partition Columns: Provide a unique Key column name to partition data in Spark.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.
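The Schema file name field above expects a Spark schema in JSON format. The sketch below builds such a document in the JSON layout Spark uses for a struct type; the column names and types are hypothetical and should be replaced with those of your own dataset.

```python
import json

# Hypothetical columns; the layout follows Spark's JSON representation of a struct type.
schema = {
    "type": "struct",
    "fields": [
        {"name": "order_id", "type": "integer", "nullable": False, "metadata": {}},
        {"name": "customer", "type": "string", "nullable": True, "metadata": {}},
        {"name": "amount", "type": "double", "nullable": True, "metadata": {}},
    ],
}

# Text that could be saved to a .json file and uploaded as the schema file.
schema_json = json.dumps(schema, indent=2)
```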

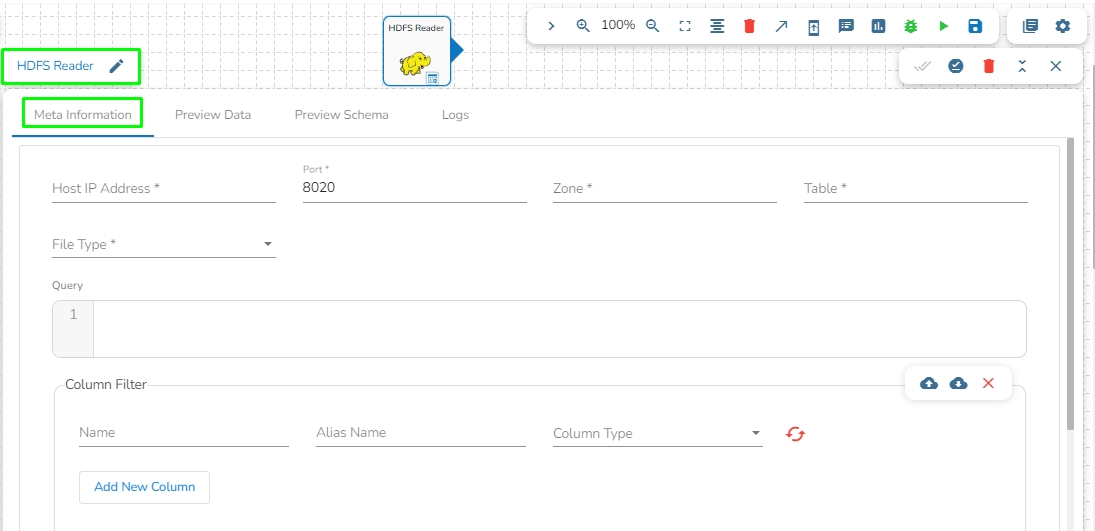

HDFS stands for Hadoop Distributed File System. It is a distributed file system designed to store and manage large data sets in a reliable, fault-tolerant, and scalable way. HDFS is a core component of the Apache Hadoop ecosystem and is used by many big data applications.

This task reads the file located in HDFS (Hadoop Distributed File System).

Drag the HDFS reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the host IP address for HDFS.

Port: Enter the Port.

Zone: Enter the Zone for HDFS. Zone is a special directory whose contents will be transparently encrypted upon write and transparently decrypted upon read.

File Type: Select the File Type from the drop down. The supported file types are:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Infer schema: Enable this option to detect the actual data type of each column.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.

Path: Provide the path of the file.

Partition Columns: Provide a unique Key column name to partition data in Spark.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged reader task.
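The file-type fields above correspond closely to reader options that Spark itself exposes (`header` and `inferSchema` for CSV, `multiLine` and `encoding` for JSON, `rowTag` for XML). The sketch below shows one possible mapping from the form fields to those option names; that the platform applies exactly this mapping is an assumption, and the helper `spark_reader_options` is hypothetical.

```python
def spark_reader_options(file_type, header=False, infer_schema=False,
                         multiline=False, charset=None, row_tag=None):
    """Translate the File Type form fields into Spark reader option names (illustrative)."""
    file_type = file_type.upper()
    if file_type == "CSV":
        return {"header": str(header).lower(), "inferSchema": str(infer_schema).lower()}
    if file_type == "JSON":
        options = {"multiLine": str(multiline).lower()}
        if charset:
            options["encoding"] = charset
        return options
    if file_type == "XML":
        return {"rowTag": row_tag} if row_tag else {}
    if file_type in ("PARQUET", "AVRO"):
        # PARQUET shows no extra fields; AVRO compression settings are not modeled here.
        return {}
    raise ValueError(f"Unsupported file type: {file_type}")


csv_options = spark_reader_options("CSV", header=True, infer_schema=True)
json_options = spark_reader_options("JSON", multiline=True, charset="UTF-8")
xml_options = spark_reader_options("XML", row_tag="record")
```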

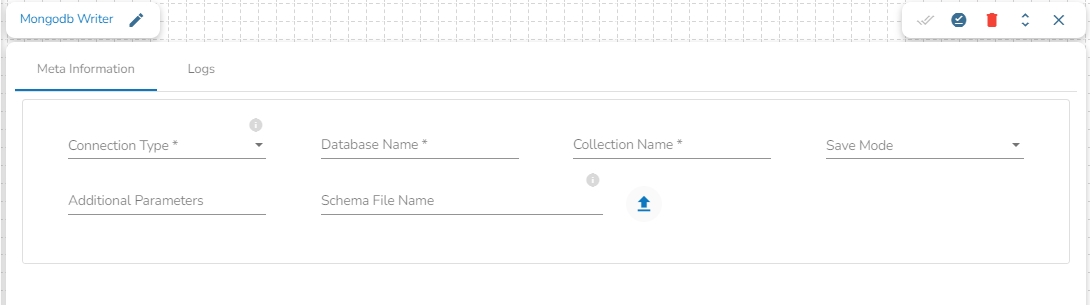

This task writes the data to MongoDB collection.

Drag the MongoDB writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Connection Type: Select the connection type from the drop-down:

Standard

SRV

Connection String

Port (*): Provide the Port number (It appears only with the Standard connection type).

Host IP Address (*): The IP address of the host.

Username (*): Provide a username.

Password (*): Provide a valid password to access the MongoDB.

Database Name (*): Provide the name of the database where you wish to write data.

Additional Parameters: Provide details of the additional parameters.

Schema File Name: Upload Spark Schema file in JSON format.

Save Mode: Select the Save mode from the drop down.

Append: This operation adds the data to the collection.

Ignore: "Ignore" is an operation that skips the insertion of a record if a duplicate record already exists in the database. This means that the new record will not be added, and the database will remain unchanged. "Ignore" is useful when you want to prevent duplicate entries in a database.

Upsert: It is a combination of "update" and "insert". It is an operation that updates a record if it already exists in the database or inserts a new record if it does not exist. This means that "upsert" updates an existing record with new data or creates a new record if the record does not exist in the database.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.
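The difference between the Append, Ignore, and Upsert save modes described above is easiest to see on a small in-memory example. The sketch below simulates the three modes on a list of dictionaries; it is a simplified model rather than the writer's implementation, and the key field `order_id` and the helper `apply_save_mode` are hypothetical.

```python
def apply_save_mode(collection, incoming, mode, key="order_id"):
    """Return the documents that result from writing `incoming` with the given save mode."""
    result = [dict(doc) for doc in collection]  # Work on a copy of the collection.
    position_by_key = {doc[key]: i for i, doc in enumerate(result)}
    for doc in incoming:
        exists = doc[key] in position_by_key
        if mode == "Append":
            result.append(dict(doc))            # Always add the record.
        elif mode == "Ignore":
            if not exists:                      # Skip records that already exist.
                position_by_key[doc[key]] = len(result)
                result.append(dict(doc))
        elif mode == "Upsert":
            if exists:                          # Update the existing record...
                result[position_by_key[doc[key]]].update(doc)
            else:                               # ...or insert a new one.
                position_by_key[doc[key]] = len(result)
                result.append(dict(doc))
        else:
            raise ValueError(f"Unknown save mode: {mode}")
    return result


existing = [{"order_id": 1, "status": "new"}]
incoming = [{"order_id": 1, "status": "paid"}, {"order_id": 2, "status": "new"}]
appended = apply_save_mode(existing, incoming, "Append")
ignored = apply_save_mode(existing, incoming, "Ignore")
upserted = apply_save_mode(existing, incoming, "Upsert")
```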

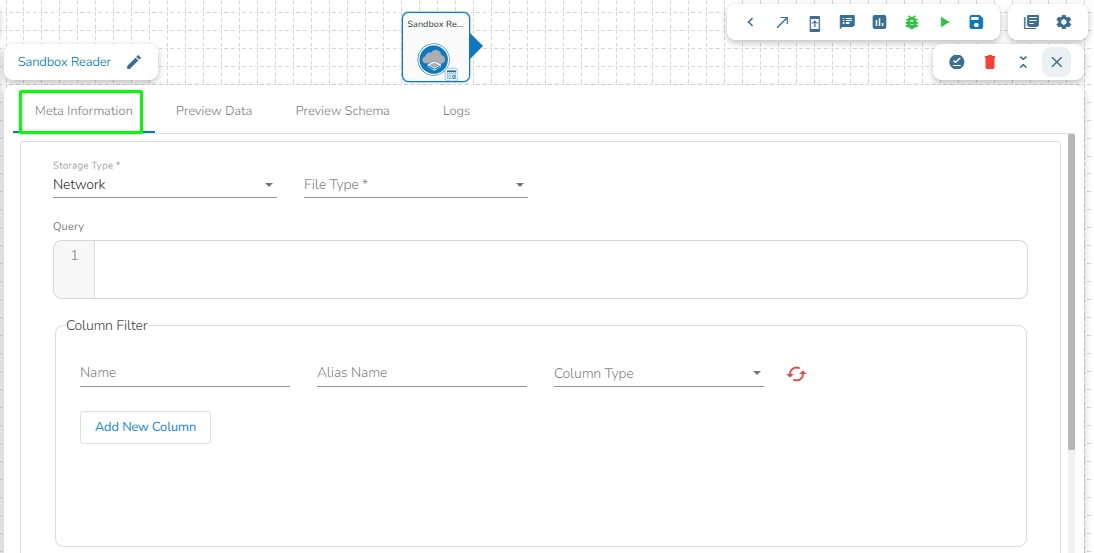

This task can read the data from the Network pool of Sandbox.

Drag the Sandbox reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Storage Type: This field is pre-defined.

Sandbox File: Select the file name from the drop-down.

File Type: Select the file type from the drop down.

Five (5) file types are available:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Query: Provide Spark SQL query in this field.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged reader task.

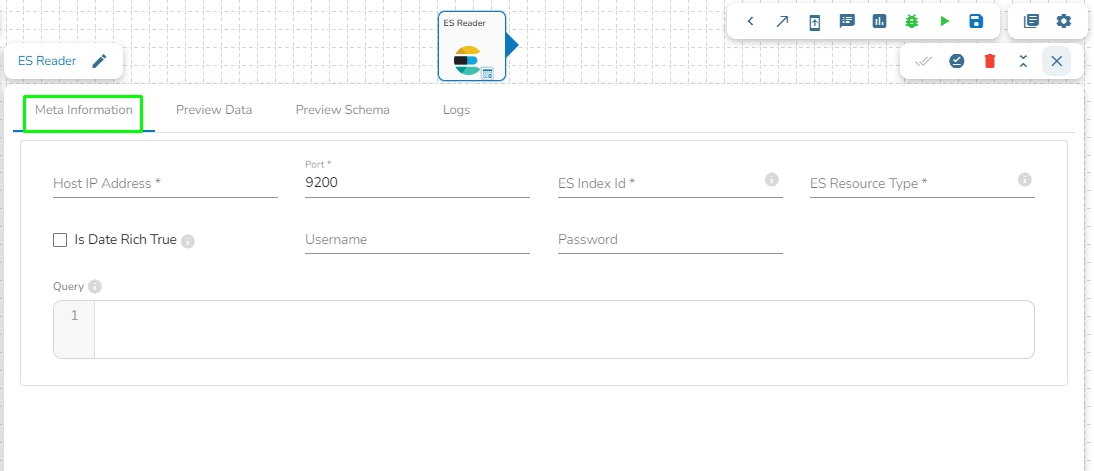

Elasticsearch is an open-source search and analytics engine built on top of the Apache Lucene library. It is designed to help users store, search, and analyze large volumes of data in real-time. Elasticsearch is a distributed, scalable system that can be used to index and search structured, semi-structured, and unstructured data.

This task is used to read the data located in Elastic Search engine.

Drag the ES reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the host IP Address for Elastic Search.

Port: Enter the port to connect with Elastic Search.

Index ID: Enter the Index ID to read a document in elastic search. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier known as the index ID. The index ID is a unique string that is automatically generated by Elasticsearch and is used to identify and retrieve a specific document from the index.

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Is Date Rich True: Enable this option if any fields in the reading file contain date or time information. The "date rich" feature in Elasticsearch allows for advanced querying and filtering of documents based on date or time ranges, as well as date arithmetic operations.

Username: Enter the username for elastic search.

Password: Enter the password for elastic search.

Query: Provide a spark SQL query.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged reader task.

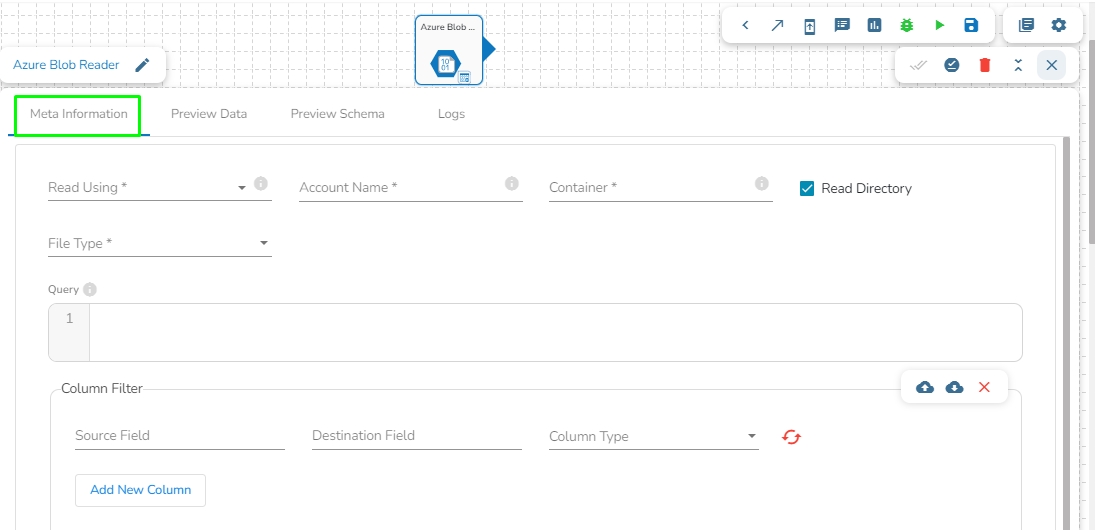

This task is used to read data from Azure blob container.

Drag the Azure Blob reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

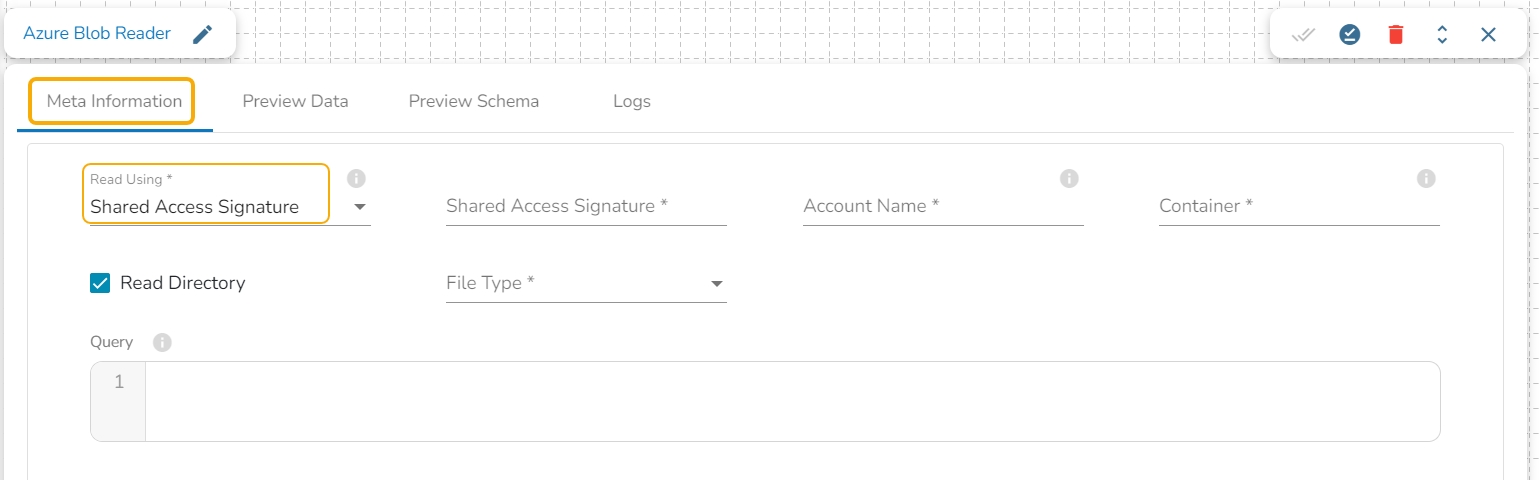

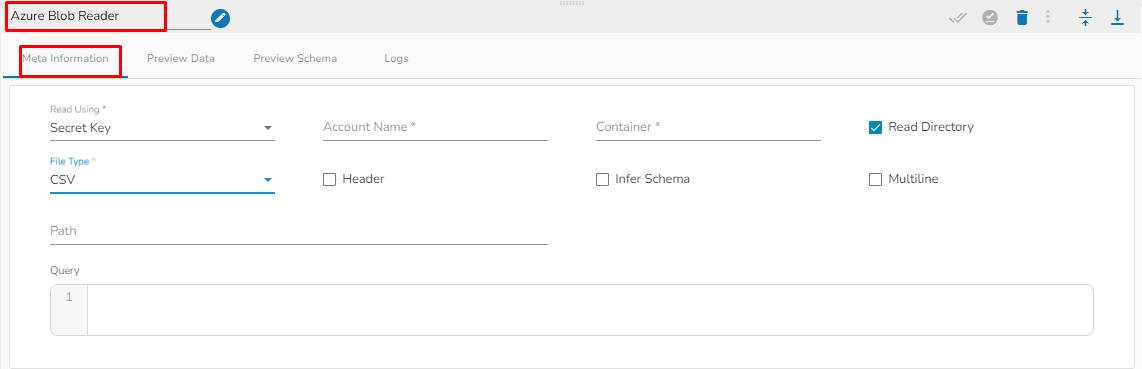

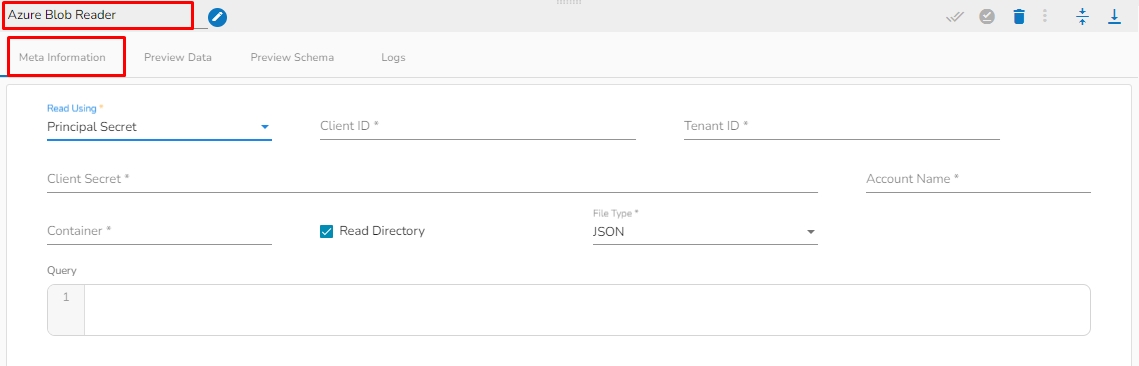

Read using: There are three (3) options available under this tab:

Provide the following details:

Shared Access Signature: This is a URI that grants restricted access rights to Azure Storage resources.

Account Name: Provide the Azure account name.

Container: Provide the name of the container in which the file to be read is located.

File type: Five (5) file types are available:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Infer schema: Enable this option to detect the actual data type of each column.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.

Path: This option will appear once the file type is selected. Enter the path where the selected file type is located.

Read Directory: Select this checkbox to read the specified directory.

Query: Provide Spark SQL query in this field.

Provide the following details:

Account Key: Enter the Azure account key. In Azure, an account key is a security credential that is used to authenticate access to storage resources, such as blobs, files, queues, or tables, in an Azure storage account.

Account Name: Provide the Azure account name.

Container: Provide the container name from where the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

File type: Five (5) file types are available:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Infer schema: Enable this option to detect the actual data type of each column.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.

Path: This option will appear once the file type is selected. Enter the path where the selected file type is located.

Read Directory: Select this checkbox to read the specified directory.

Query: Provide Spark SQL query in this field.

Provide the following details:

Client ID: Provide Azure Client ID. The client ID is the unique Application (client) ID assigned to your app by Azure AD when the app was registered.

Tenant ID: Provide the Azure Tenant ID. Tenant ID (also known as Directory ID) is a unique identifier that is assigned to an Azure AD tenant, which represents an organization or a developer account. It is used to identify the organization or developer account that the application is associated with.

Client Secret: Enter the Azure Client Secret. Client Secret (also known as Application Secret or App Secret) is a secure password or key that is used to authenticate an application to Azure AD.

Account Name: Provide the Azure account name.

Container: Provide the container name from where the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Query: Provide Spark SQL query in this field.

File type: Five (5) file types are available:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Infer schema: Enable this option to detect the actual data type of each column.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged reader task.
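For orientation, the Account Name, Container, and Path fields above combine into the `wasbs://` address that Hadoop-compatible readers use for Azure Blob Storage, and the account key or Shared Access Signature is supplied through a matching Hadoop configuration entry. The sketch below assembles both; whether the platform uses these exact settings internally is an assumption, and the helper names and sample values are hypothetical.

```python
def azure_blob_url(account_name, container, path):
    """Build a wasbs:// URL for a blob path inside a container."""
    return f"wasbs://{container}@{account_name}.blob.core.windows.net/{path.lstrip('/')}"


def azure_auth_conf(account_name, container, account_key=None, sas_token=None):
    """Return the Hadoop configuration entry for account-key or SAS authentication."""
    host = f"{account_name}.blob.core.windows.net"
    if account_key:
        return {f"fs.azure.account.key.{host}": account_key}
    if sas_token:
        return {f"fs.azure.sas.{container}.{host}": sas_token}
    raise ValueError("Provide either account_key or sas_token")


# Hypothetical sample values.
blob_url = azure_blob_url("mystorage", "landing", "/sales/2024/orders.csv")
key_conf = azure_auth_conf("mystorage", "landing", account_key="<account-key>")
sas_conf = azure_auth_conf("mystorage", "landing", sas_token="<sas-token>")
```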

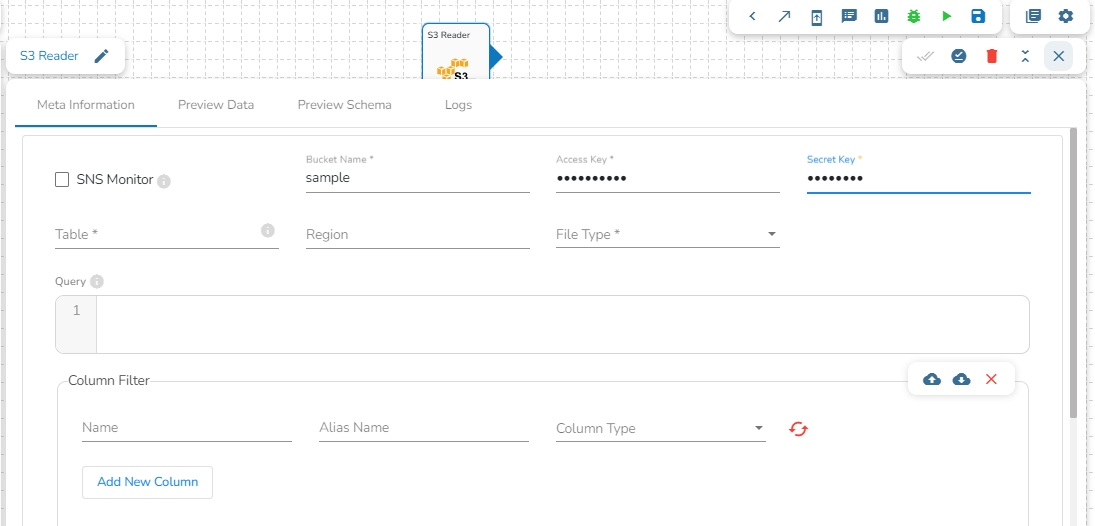

This task reads a file from an Amazon S3 bucket.

Follow the steps below to configure the meta information of the S3 reader task:

Drag the S3 reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Bucket Name (*): Enter S3 bucket name.

Region (*): Provide the S3 region.

Access Key (*): The access key shared by AWS for login.

Secret Key (*): The secret key shared by AWS for login.

Table (*): Enter the name of the table or object to be read.

File Type (*): Select a file type from the drop-down menu (CSV, JSON, PARQUET, AVRO, XML are the supported file types)

Limit: Set a limit for the number of records.

Query: Insert an SQL query (it supports query containing a join statement as well).

Partition Columns: Provide a unique Key column name to partition data in Spark.

Please Note:

Please click the Save Task In Storage icon to save the configuration for the dragged reader task.

Once a file type is selected, additional fields appear. Configure them as described below for each file type.

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to detect the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Select the Multiline option if the file contains any multiline strings.

PARQUET: No extra field gets displayed with PARQUET as the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. When this option is selected, the following fields are displayed:

Infer schema: Enable this option to detect the actual data type of each column.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.
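The Bucket Name, Region, Access Key, Secret Key, and Table fields of the S3 reader can be pictured as an `s3a://` path plus the Hadoop S3A credentials settings. The following is a minimal sketch under that assumption; the helper `s3_read_settings` and the sample values are hypothetical, and the platform may wire these values differently.

```python
def s3_read_settings(bucket, table, access_key, secret_key, region):
    """Return the s3a:// path and Hadoop S3A settings for reading an object."""
    path = f"s3a://{bucket}/{table.lstrip('/')}"
    hadoop_conf = {
        "fs.s3a.access.key": access_key,
        "fs.s3a.secret.key": secret_key,
        # Regional endpoint derived from the Region field.
        "fs.s3a.endpoint": f"s3.{region}.amazonaws.com",
    }
    return path, hadoop_conf


# Hypothetical sample values.
s3_path, s3_conf = s3_read_settings(
    bucket="analytics-raw",
    table="orders/2024/orders.parquet",
    access_key="<access-key>",
    secret_key="<secret-key>",
    region="ap-south-1",
)
```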

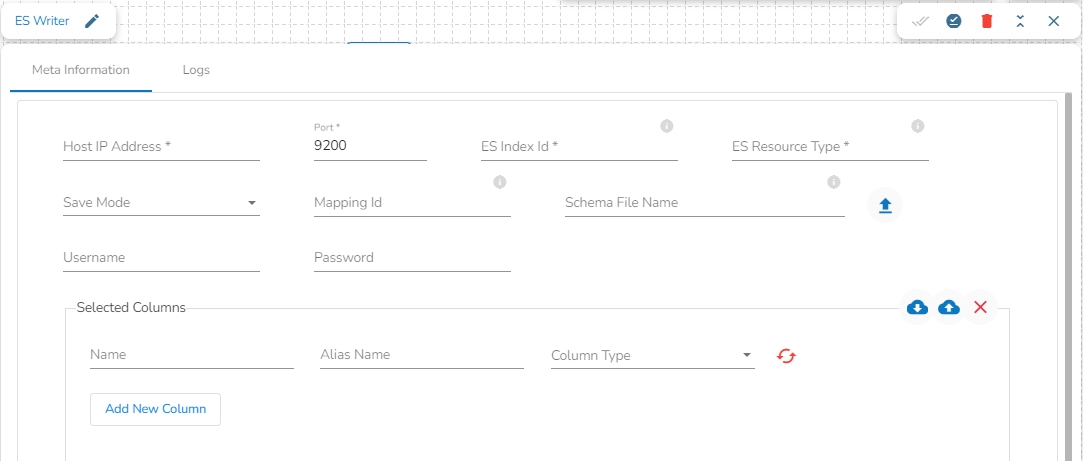

Elasticsearch is an open-source search and analytics engine built on top of the Apache Lucene library. It is designed to help users store, search, and analyze large volumes of data in real-time. Elasticsearch is a distributed, scalable system that can be used to index and search structured, semi-structured, and unstructured data.

This task is used to write data to the Elasticsearch engine.

Drag the ES writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the host IP address for Elasticsearch.

Port: Enter the port to connect with Elasticsearch.

Index ID: Enter the Index ID to read a document in elastic search. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier known as the index ID. The index ID is a unique string that is automatically generated by Elasticsearch and is used to identify and retrieve a specific document from the index.

Mapping ID: Provide the Mapping ID. In Elasticsearch, a mapping ID is a unique identifier for a mapping definition that defines the schema of the documents in an index. It is used to differentiate between different types of data within an index and to control how Elasticsearch indexes and searches data.

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Username: Enter the username for Elasticsearch.

Password: Enter the password for Elasticsearch.

Schema File Name: Upload spark schema file in JSON format.

Save Mode: Select the Save mode from the drop down.

Append

Selected columns: The user can select the specific column, provide some alias name and select the desired data type of that column.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

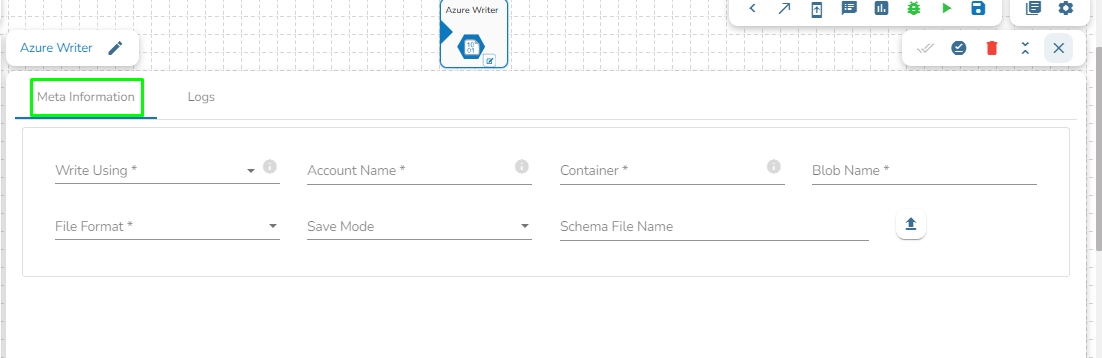

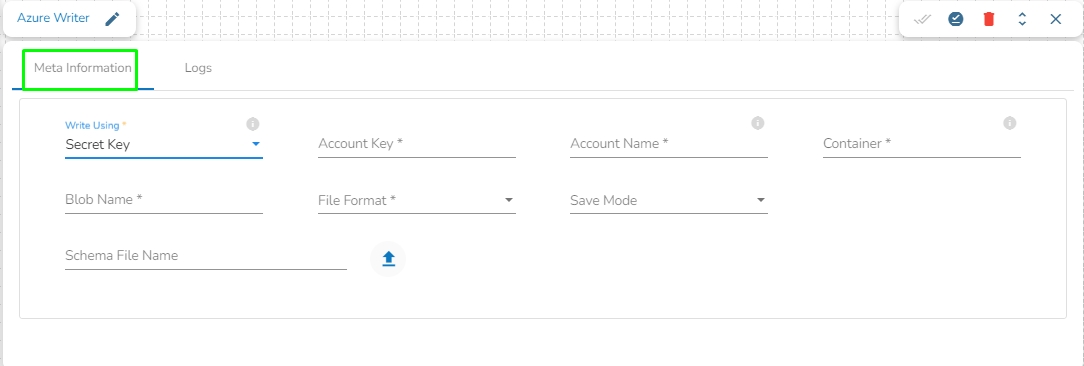

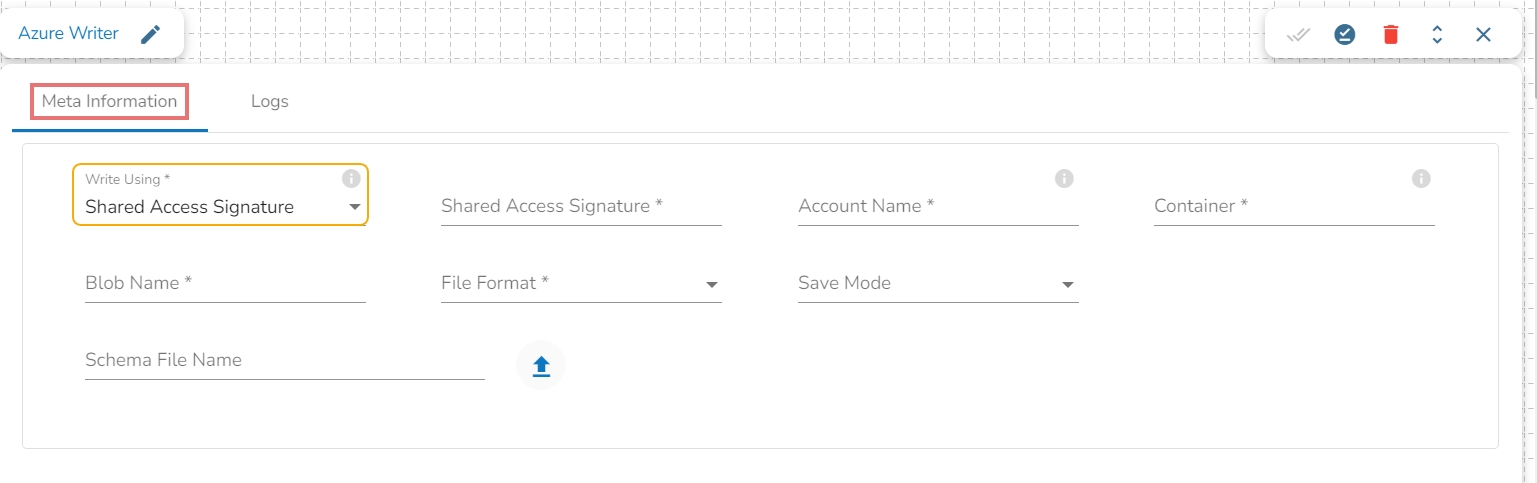

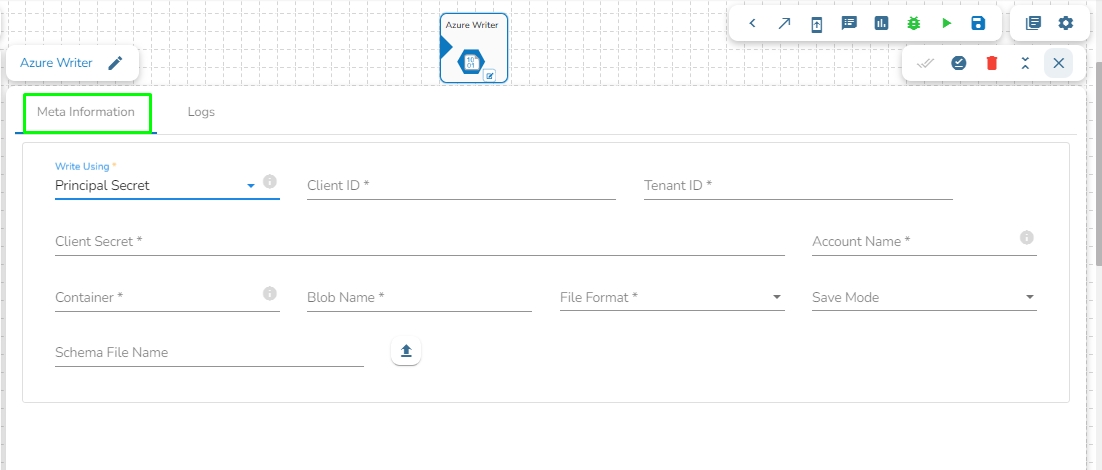

Azure is a cloud computing platform and service. It provides a range of cloud services, including infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS) offerings, as well as tools for building, deploying, and managing applications in the cloud.

Azure Writer task is used to write the data in the Azure Blob Container.

Drag the Azure writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Write using: There are three (3) options available under this tab:

Shared Access Signature

Secret Key

Principal Secret

Provide the following details:

Shared Access Signature: This is a URI that grants restricted access rights to Azure Storage resources.

Account Name: Provide the Azure account name.

Container: Provide the name of the container where the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the Blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File Format: Four (4) file formats are available. Select the format in which the data has to be written:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the Save mode from the drop down.

Append

Overwrite

Schema File Name: Upload spark schema file in JSON format.

Account Key: Enter the azure account key. In Azure, an account key is a security credential that is used to authenticate access to storage resources, such as blobs, files, queues, or tables, in an Azure storage account.

Account Name: Provide the Azure account name.

Container: Provide the name of the container where the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the Blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File type: Four (4) file types are available:

CSV

JSON

PARQUET

AVRO

Schema File Name: Upload spark schema file in JSON format.

Save Mode: Select the Save mode from the drop down.

Append

Overwrite

Provide the following details:

Client ID: Provide Azure Client ID. The client ID is the unique Application (client) ID assigned to your app by Azure AD when the app was registered.

Tenant ID: Provide the Azure Tenant ID. Tenant ID (also known as Directory ID) is a unique identifier that is assigned to an Azure AD tenant, which represents an organization or a developer account. It is used to identify the organization or developer account that the application is associated with.

Client Secret: Enter the Azure Client Secret. Client Secret (also known as Application Secret or App Secret) is a secure password or key that is used to authenticate an application to Azure AD.

Account Name: Provide the Azure account name.

Container: Provide the name of the container where the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the Blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File type: Four (4) file types are available:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the Save mode from the drop down.

Append

Overwrite

Schema File Name: Upload spark schema file in JSON format.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

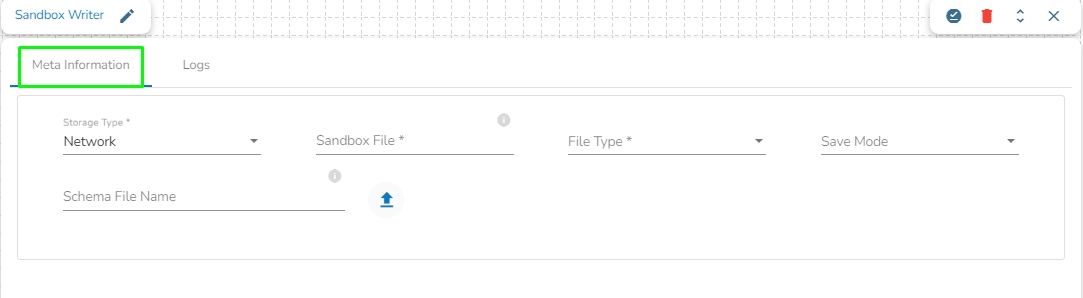

This task writes data to the network pool of the Sandbox.

Drag the Sandbox writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Storage Type: This field is pre-defined.

Sandbox File: Enter the file name.

File Type: Select the file type in which the data has to be written. The supported file types are:

CSV

JSON

Save Mode: Select the Save mode from the drop down.

Append

Overwrite

Schema File Name: Upload spark schema file in JSON format.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

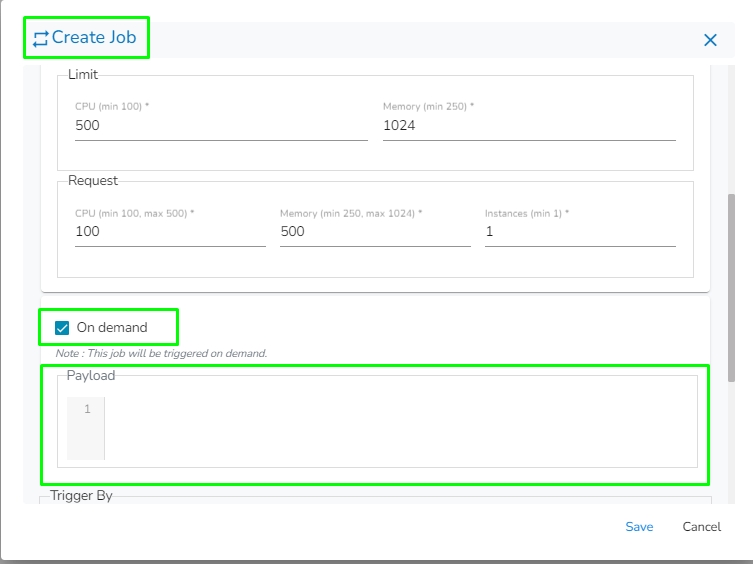

The on-demand Python Job functionality allows you to initiate a Python Job, based on a payload, through an API call at any desired time.

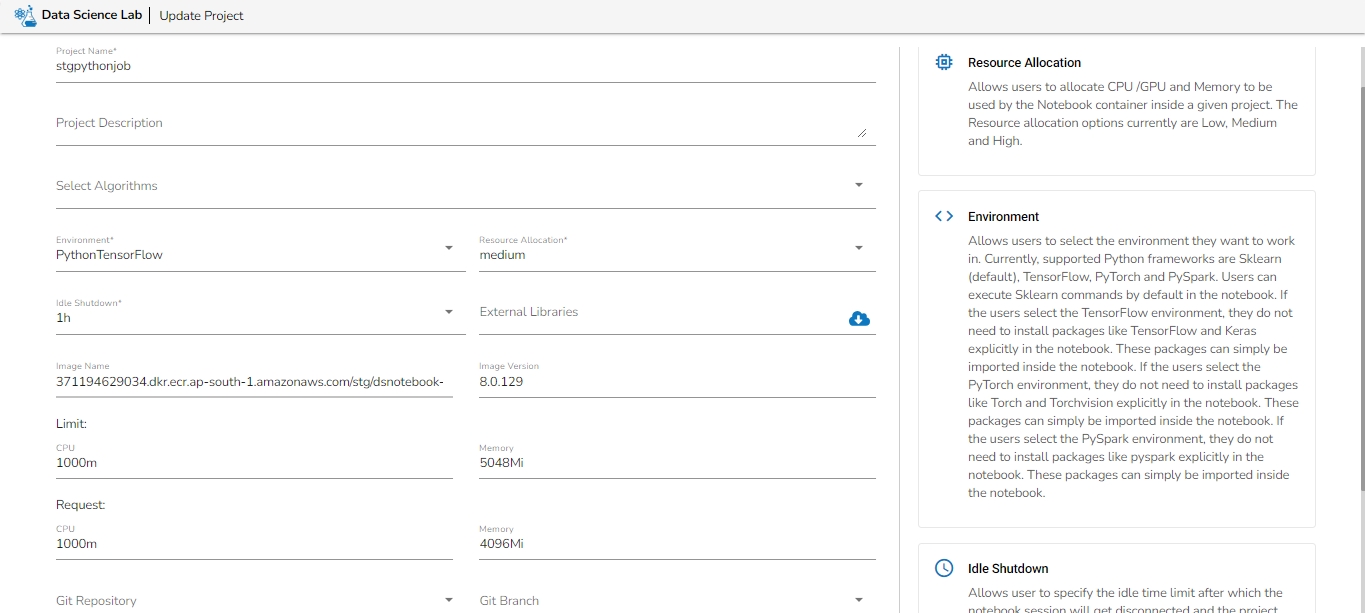

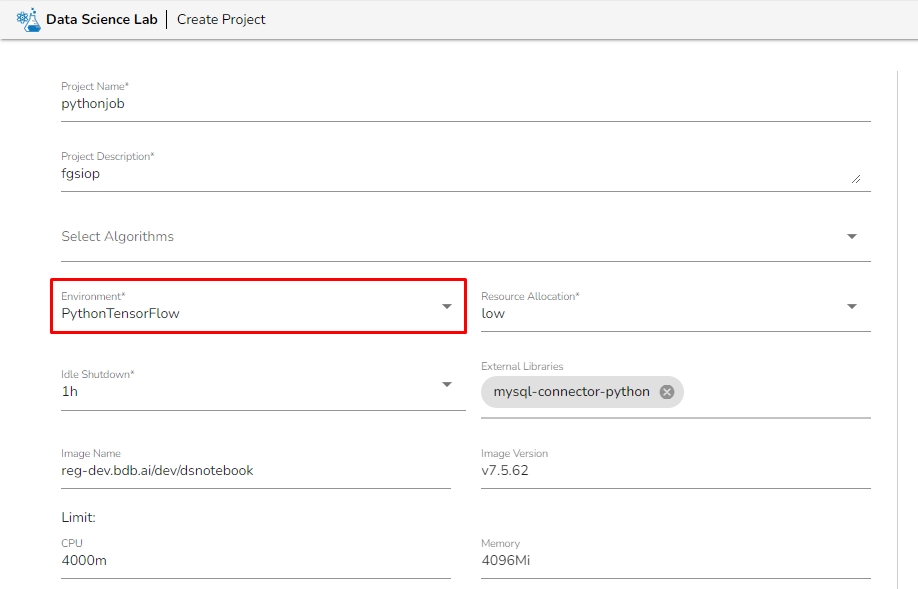

Before creating the Python (On demand) Job, the user has to create a project in the Data Science Lab module under the Python Environment. Please refer to the image below:

Please follow the steps given below to configure the Python Job (On demand):

Navigate to the Data Pipeline homepage.

Click on the Create Job icon.

The New Job dialog box appears redirecting the user to create a new Job.

Enter a name for the new Job.

Describe the Job(Optional).

Job Baseinfo: In this field, there are three options:

Spark Job

PySpark Job

Python Job

Select the Python Job option from Job Baseinfo.

Check the On demand option as shown in the image below.

Docker Configuration: Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

Provide the resources required to run the Python Job in the Limit and Request sections.

Limit: Enter max CPU and Memory required for the Python Job.

Request: Enter the CPU and Memory required for the job at the start.

Instances: Enter the number of instances for the Python Job.

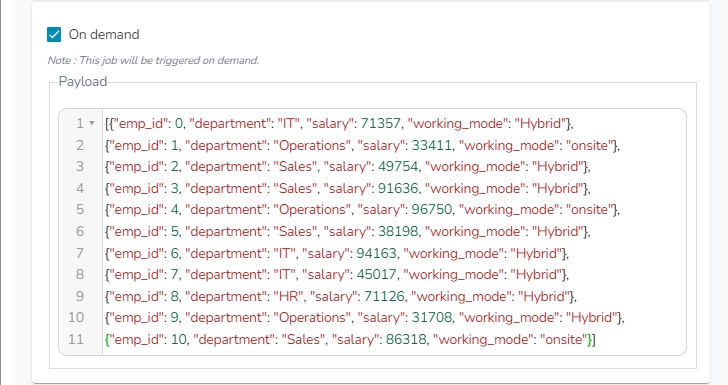

The payload field will appear once the "On Demand" option is checked. Enter the payload in the form of a JSON array containing JSON objects.

Trigger By: There are 2 options for triggering a job on success or failure of a job:

Success Job: On successful execution of the selected job the current job will be triggered.

Failure Job: On failure of the selected job the current job will be triggered.

Click the Save option to create the job.

A success message appears to confirm the creation of a new job.

The Job Editor page opens for the newly created job.

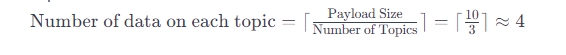
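The payload shape described above (a JSON array containing JSON objects) can be checked before it is submitted. The following is a minimal sketch; the function name `parse_payload` and the `file` key in the sample are hypothetical and are not part of the platform.

```python
import json


def parse_payload(raw: str) -> list:
    """Parse a payload string and verify it is a JSON array of JSON objects."""
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError("payload must be a JSON array")
    for position, item in enumerate(data):
        if not isinstance(item, dict):
            raise ValueError(f"payload item {position} must be a JSON object")
    return data


# Hypothetical payload; the key name "file" is illustrative only.
sample = '[{"file": "a.csv"}, {"file": "b.csv"}]'
records = parse_payload(sample)
print(len(records))
```

A single JSON object such as `{"file": "a.csv"}` is rejected by this check because it is not wrapped in an array.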

Based on the given number of instances, topics will be created, and the payload will be distributed across these topics. The logic for distributing the payload among the topics is as follows:

The number of records on each topic is the ceiling of the ratio between the payload size and the number of instances.

For example:

Payload Size: 10

Number of Topics = Number of Instances: 3

The number of records on each topic is calculated as the ceiling of Payload Size divided by Number of Topics: ceil(10 / 3) = 4.

In this case, the topics will hold the following number of records:

Topic 1: 4

Topic 2: 4

Topic 3: 2 (As there are only 2 records left)
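The distribution rule can be sketched in a few lines of Python. This is an illustration of the arithmetic only, assuming records are assigned to topics in consecutive chunks; the function name `distribute` is hypothetical and the platform's internal implementation may differ.

```python
import math


def distribute(payload: list, instances: int) -> list:
    """Split the payload into one chunk per instance, each holding at most
    ceil(len(payload) / instances) records."""
    if instances < 1:
        raise ValueError("instances must be at least 1")
    size = math.ceil(len(payload) / instances)
    return [payload[i * size:(i + 1) * size] for i in range(instances)]


chunks = distribute(list(range(10)), 3)
print([len(chunk) for chunk in chunks])  # [4, 4, 2]
```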

In the On-Demand Job system, the naming convention for topics is based on the Job_Id, followed by an underscore (_) symbol, and successive numbers starting from 0. The numbers in the topic names will start from 0 and go up to n-1, where n is the number of instances. For clarity, consider the following example:

Job_ID: job_13464363406493

Number of instances: 3

In this scenario, three topics will be created, named job_13464363406493_0, job_13464363406493_1, and job_13464363406493_2.
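The naming rule (Job_Id, an underscore, then a number from 0 to n-1) can be expressed as a short helper. The function name `topic_names` is hypothetical and used here only to illustrate the convention.

```python
def topic_names(job_id: str, instances: int) -> list:
    """Return one topic name per instance: <job_id>_0 ... <job_id>_(n-1)."""
    return [f"{job_id}_{index}" for index in range(instances)]


names = topic_names("job_13464363406493", 3)
print(names)
```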

Please Note:

When writing a script in DsLab Notebook for an On-Demand Job, the first argument of the function in the script is expected to represent the payload when running the Python On-Demand Job. Please refer to the provided sample code.

job_payload is the payload provided when creating the job or sent from an API call or ingested from the Job trigger component from the pipeline.
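A minimal sketch of such a script is given below. It assumes that the payload reaches the function as a list of dictionaries (one per JSON object); the function name `start_job`, the `prefix` parameter, and the `output` key are hypothetical and only illustrate that `job_payload` comes first.

```python
def start_job(job_payload, prefix="processed"):
    """Hypothetical start function: the first argument receives the payload."""
    results = []
    for record in job_payload:
        # Each record is assumed to be one JSON object from the payload array.
        path = record.get("output", "")
        results.append(f"{prefix}:{path}")
    return results


# Local check with a one-record payload.
print(start_job([{"output": "jobs/census_2011.csv"}]))
```

Any additional parameters follow `job_payload` in the function signature.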

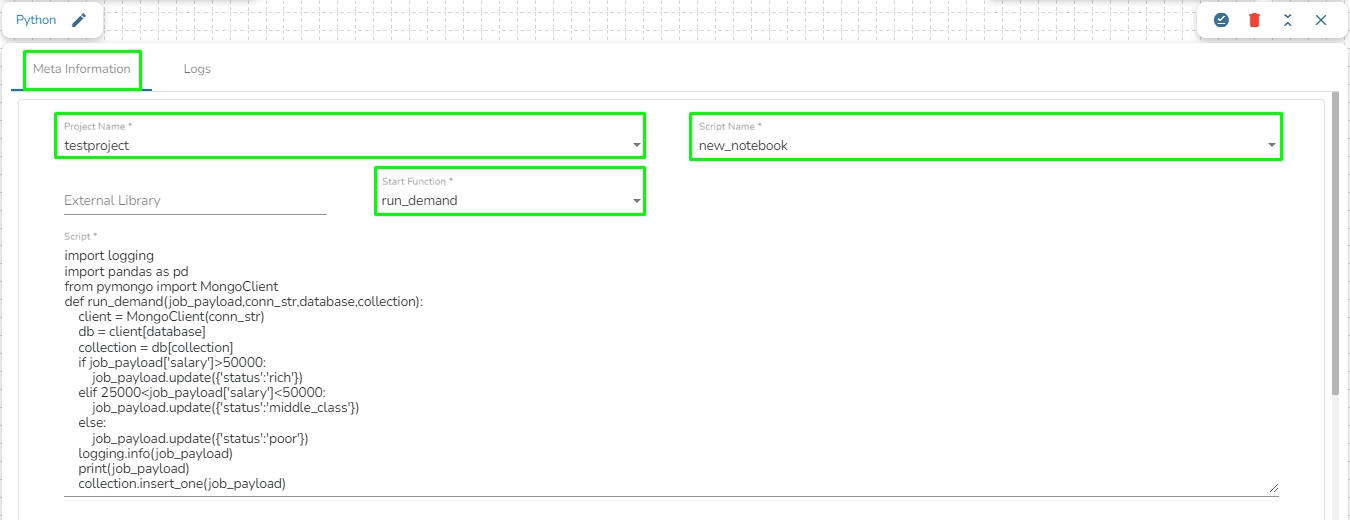

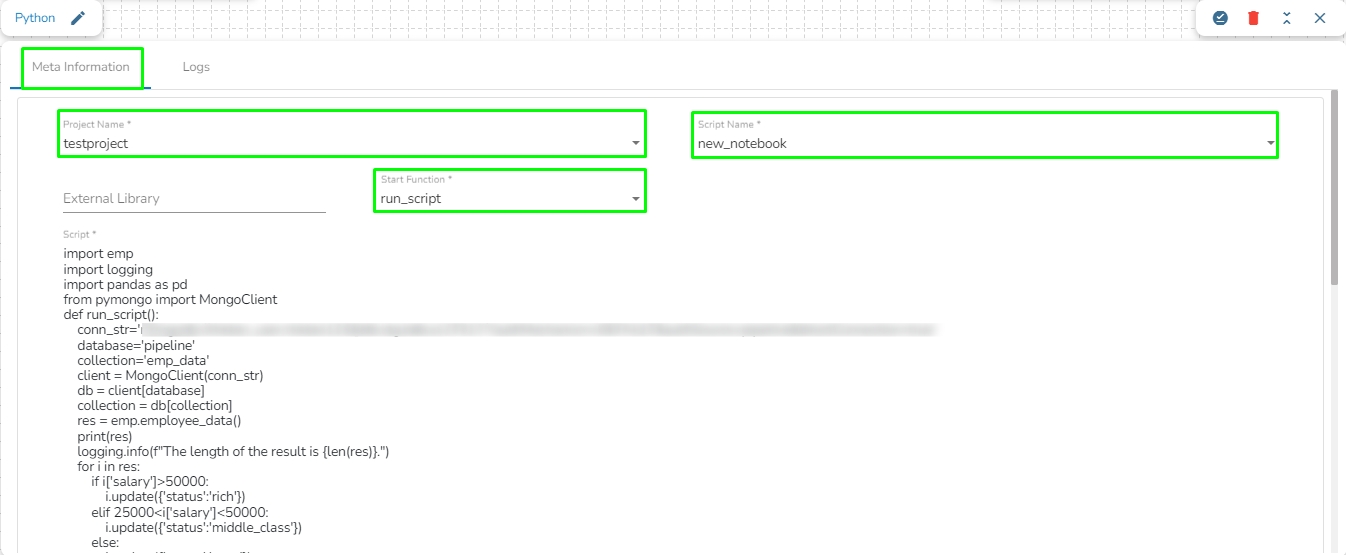

Once the Python (On demand) Job is created, follow the below given steps to configure the Meta Information tab of the Python Job:

Project Name: Select the same Project using the drop-down menu where the Notebook has been created.

Script Name: This field will list the exported Notebook names which are exported from the Data Science Lab module to Data Pipeline.

External Library: If any external libraries are used in the script, list them here. Separate multiple library names with commas (,).

Start Function: All the function names used in the script are listed here. Select the start function name to execute the Python script.

Script: The exported script appears in this space.

Input Data: If the start function takes parameters, provide each parameter name as the Key and its value as the Value in this field.

Python (On demand) Job can be activated in the following ways:

Activating from UI

Activating from Job trigger component

To activate the Python (On demand) job from the UI, the user must enter the payload in the Payload section. The payload has to be given as a JSON array containing JSON objects, as shown in the image below.

Once the user configures the Python (On demand) job, the job can be activated using the Activate icon on the Job Editor page.

Please go through the walk-through given below, which provides a step-by-step guide to activating the Python (On demand) Job as per user preferences.

Sample payload for Python Job (On demand):

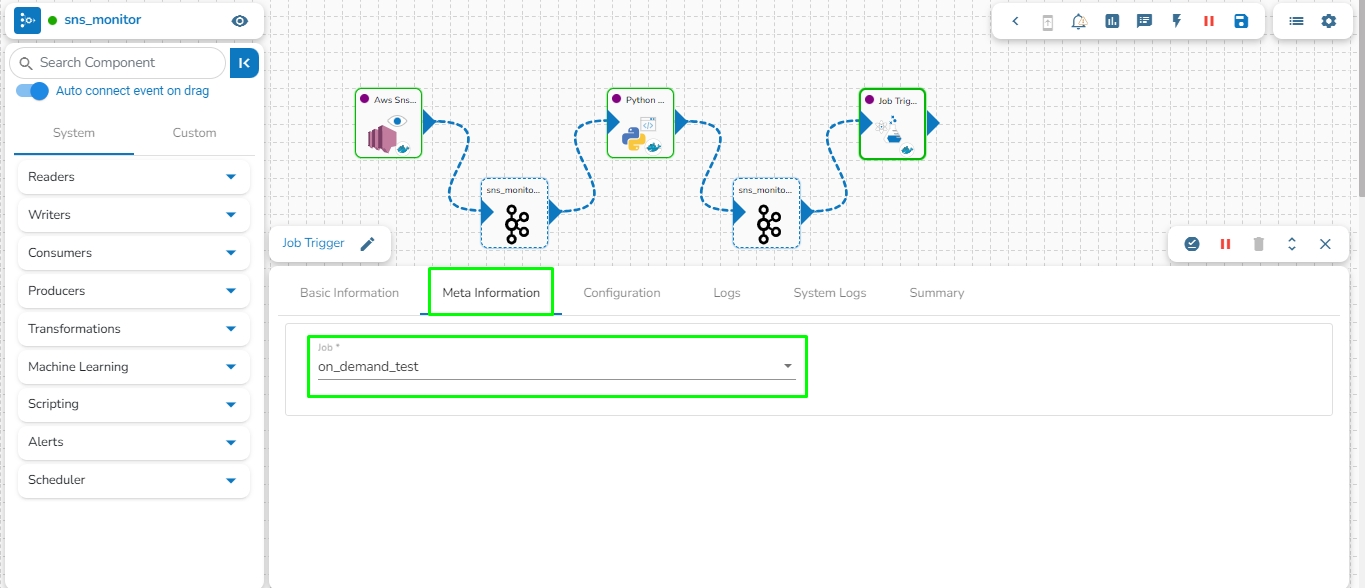

The Python (On demand) job can be activated using the Job Trigger component in the pipeline. To configure this, the user has to set up their Python (On demand) job in the meta-information component within the pipeline. The in-event data of the Job Trigger component will then be utilized as a payload in the Python (On demand) Job.

Please go through the walk-through given below, which provides a step-by-step guide to activating the Python (On demand) Job through the Job Trigger component.

Please follow the steps given below to configure the Job Trigger component to activate the Python (On demand) job:

Create a pipeline that generates meaningful data to be sent to the out event, which will serve as the payload for the Python (On demand) job.

Connect the Job Trigger component to the event that holds the data to be used as payload in the Python (On demand) job.

Open the meta-information of the Job Trigger component and select the job from the drop-down menu that needs to be activated by the Job Trigger component.

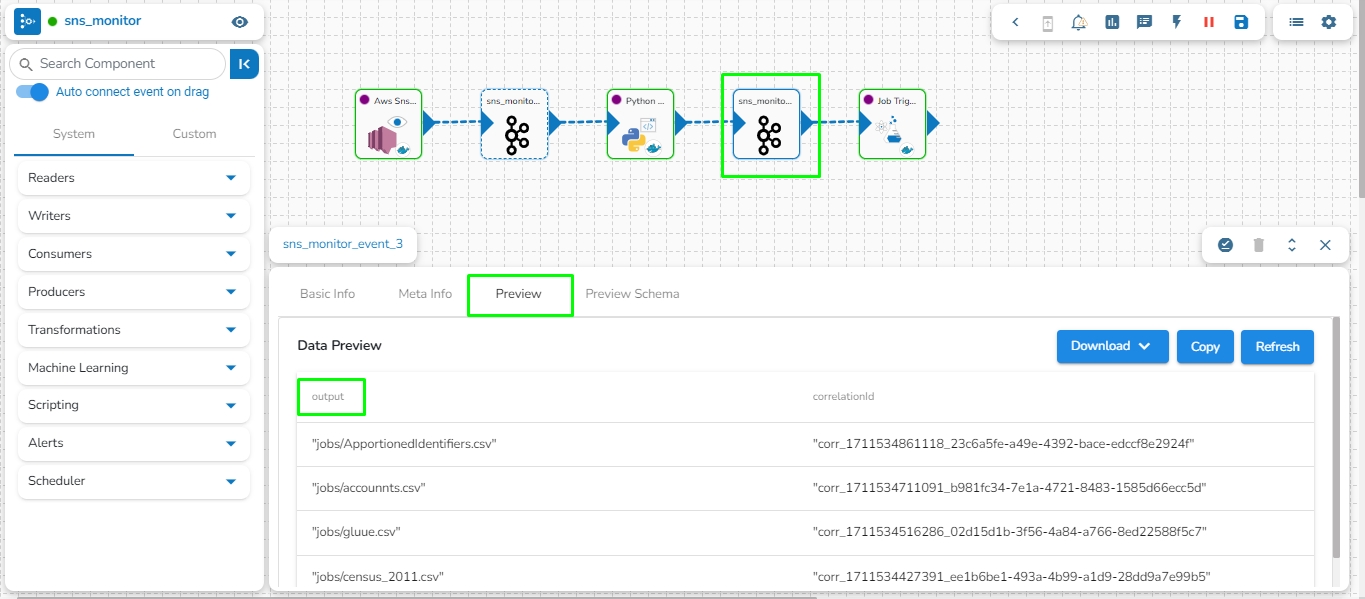

The data from the previously connected event will be passed as JSON objects within a JSON Array and used as the payload for the Python (On demand) job. Please refer to the image below for reference:

In the provided image, the event contains a column named "output" with four different values: "jobs/ApportionedIdentifiers.csv", "jobs/accounnts.csv", "jobs/gluue.csv", and "jobs/census_2011.csv". The payload will be passed to the Python (On demand) job in the JSON format given below.

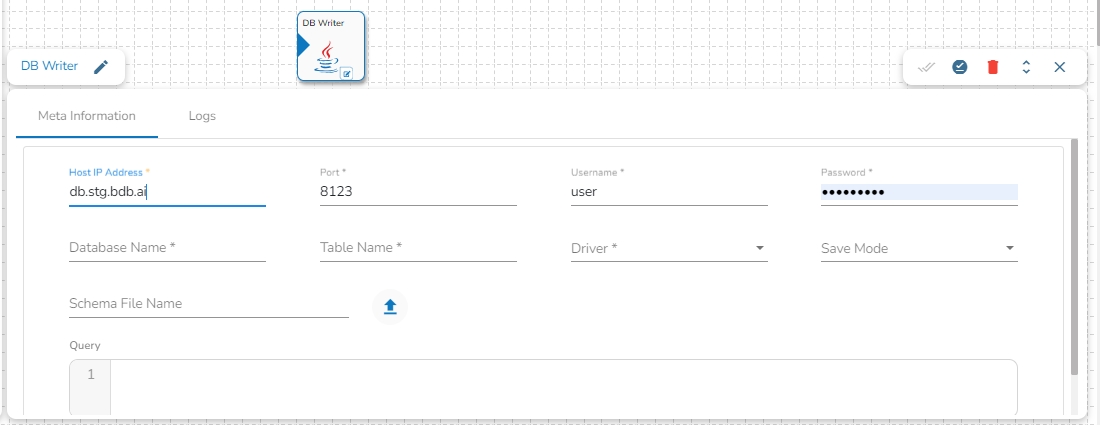
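The conversion from event rows to payload can be sketched as follows, using the four values of the "output" column mentioned above. This assumes each event row becomes one JSON object keyed by the column name; the exact payload produced by the platform is shown in the image and may differ in detail.

```python
import json

# Values of the "output" column, as listed in the example above.
output_values = [
    "jobs/ApportionedIdentifiers.csv",
    "jobs/accounnts.csv",
    "jobs/gluue.csv",
    "jobs/census_2011.csv",
]

# One JSON object per event row, collected in a JSON array.
payload = [{"output": value} for value in output_values]
print(json.dumps(payload, indent=2))
```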

This task is used to write data in the following databases: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Drag the DB writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Host IP Address: Enter the Host IP Address for the selected driver.

Port: Enter the port for the given IP Address.

Database name: Enter the Database name.

Table name: Provide one or more table names. If multiple table names are given, separate them with commas (,).

User name: Enter the user name for the provided database.

Password: Enter the password for the provided database.

Driver: Select the driver from the drop-down. There are 7 drivers supported here: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Schema File Name: Upload spark schema file in JSON format.

Save Mode: Select the Save mode from the drop down.

Append

Overwrite

Query: Write the CREATE TABLE (DDL) query.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

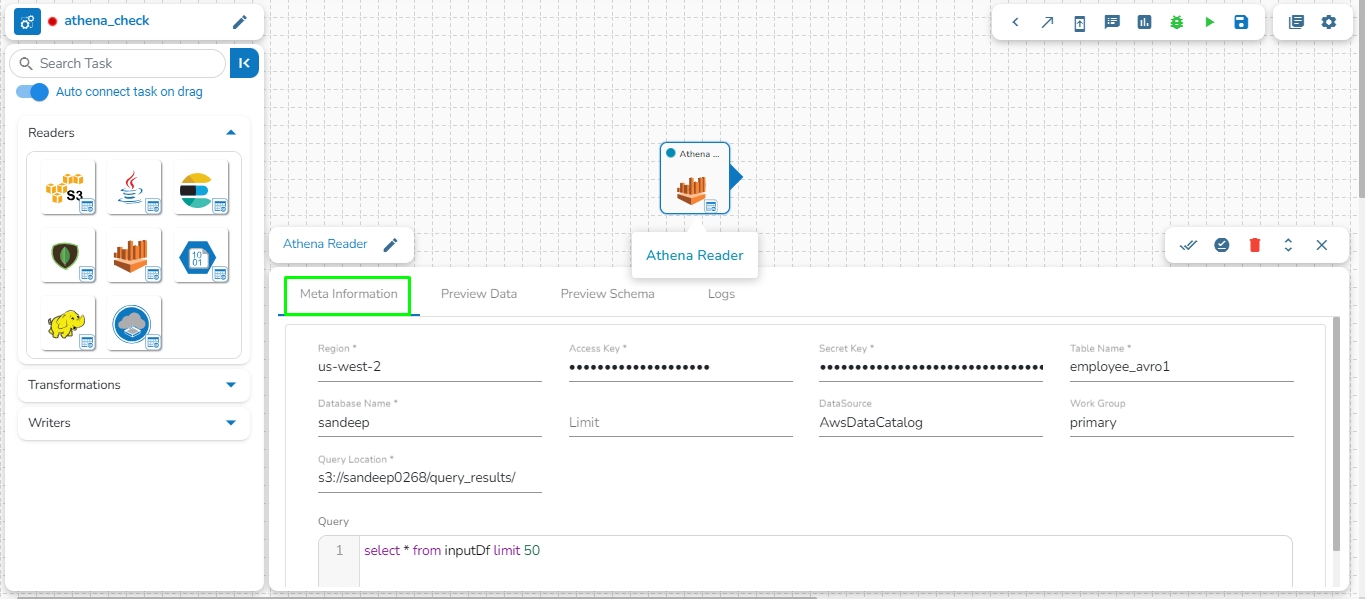

Amazon Athena is an interactive query service that easily analyzes data directly in Amazon Simple Storage Service (Amazon S3) using standard SQL. With a few actions in the AWS Management Console, you can point Athena at your data stored in Amazon S3 and begin using standard SQL to run ad-hoc queries and get results in seconds.

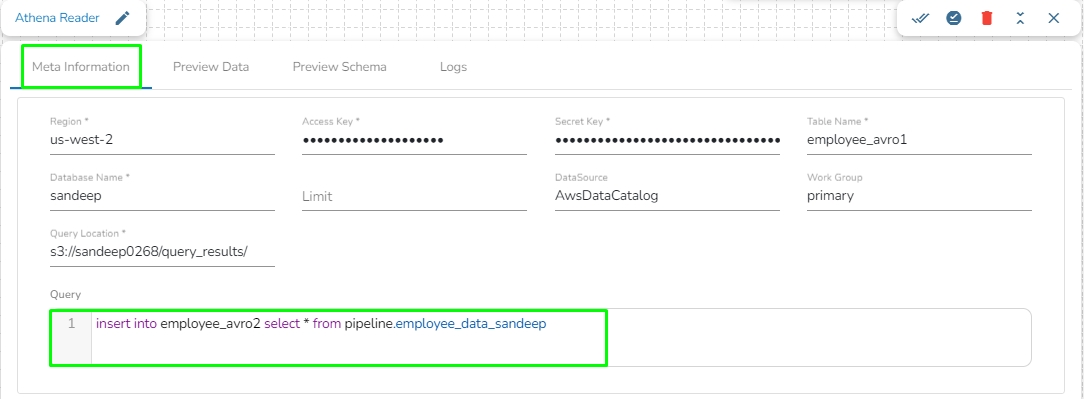

The Athena Query Executer task enables users to read data directly from an external table created in AWS Athena.

Please Note: Go through the demonstration given below to configure the Athena Query Executer in Jobs.

Region: Enter the region name where the bucket is located.

Access Key: Enter the AWS Access Key of the account that must be used.

Secret Key: Enter the AWS Secret Key of the account that must be used.

Table Name: Enter the name of the external table created in Athena.

Database Name: Name of the database in Athena in which the table has been created.

Limit: Enter the number of records to be read from the table.

Data Source: Enter the Data Source name configured in Athena. Data Source in Athena refers to your data's location, typically an S3 bucket.

Workgroup: Enter the Workgroup name configured in Athena. The Workgroup in Athena is a resource type to separate query execution and query history between Users, Teams, or Applications running under the same AWS account.

Query location: Enter the path where the results of the queries done in the Athena query editor are saved in the CSV format. Users can find this path under the Settings tab in the Athena query editor as Query Result Location.

Query: Enter the Spark SQL query.

Sample Spark SQL query that can be used in Athena Reader:

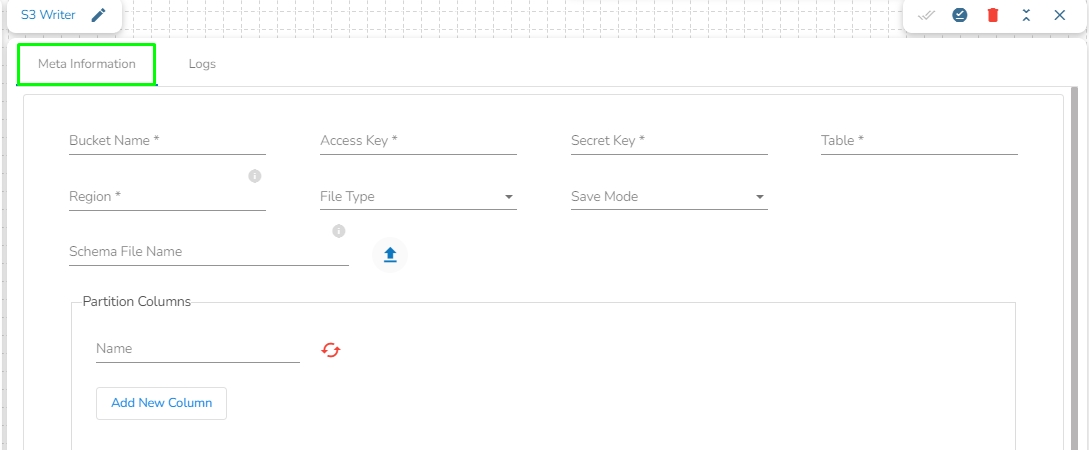

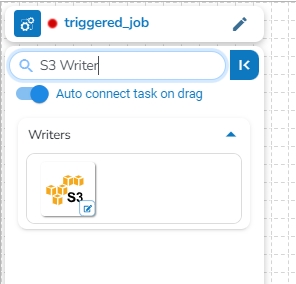

This task is used to write the data in Amazon S3 bucket.

Drag the S3 writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Bucket Name (*): Enter S3 Bucket name.

Region (*): Provide S3 region.

Access Key (*): Provide the access key shared by AWS for login.

Secret Key (*): Provide the secret key shared by AWS for login.

Table (*): Mention the table or object name to which the data is to be written.

File Type (*): Select a file type from the drop-down menu (CSV, JSON, PARQUET, AVRO are the supported file types).

Save Mode: Select the Save mode from the drop down.

Append

Schema File Name: Upload spark schema file in JSON format.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

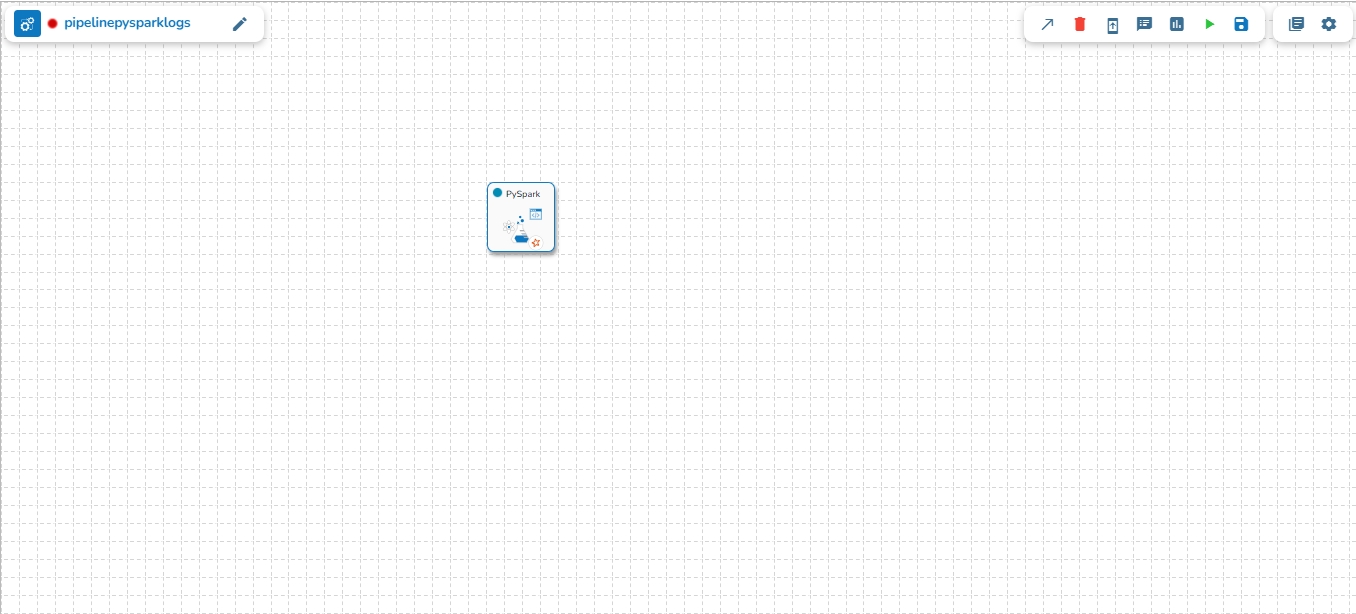

Write PySpark scripts and run them flawlessly in the Jobs.

This feature allows users to write their own PySpark script and run it in the Jobs section of the Data Pipeline module.

Before creating the PySpark Job, the user has to create a project in the Data Science Lab module under the PySpark Environment. Please refer to the image below:

Please go through the demonstration given below to create and configure a PySpark Job.

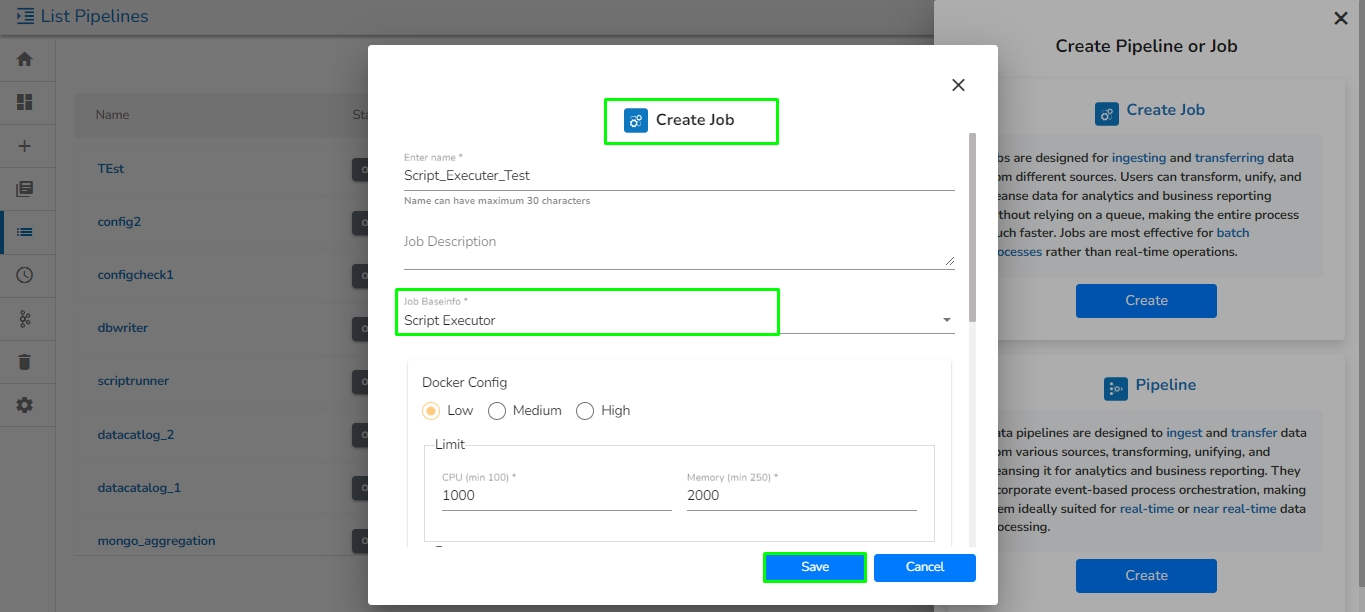

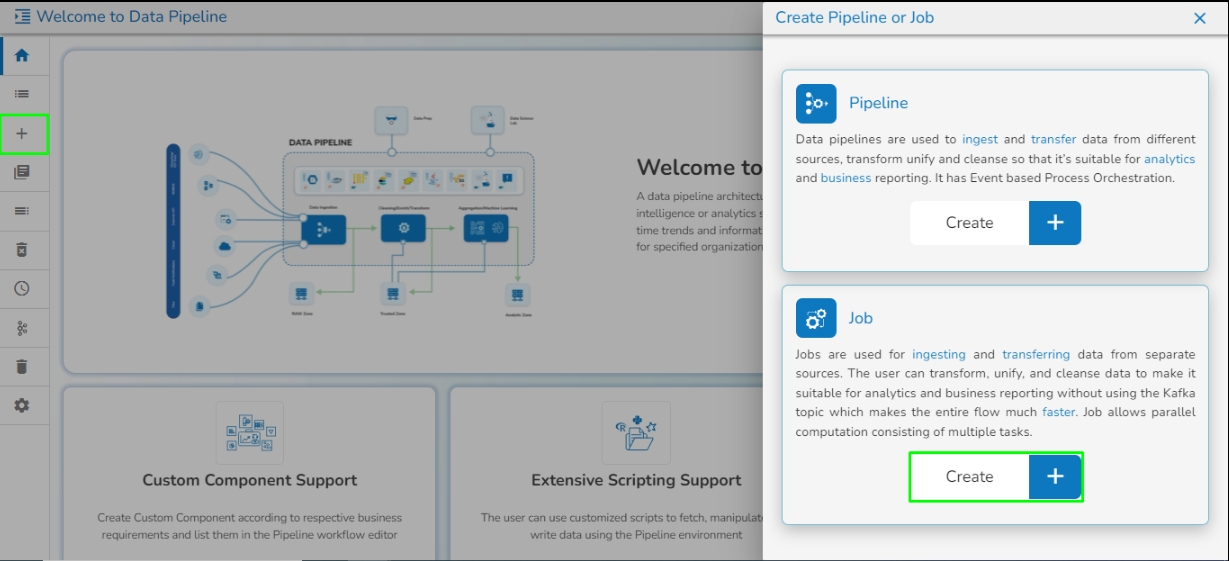

Open the pipeline homepage and click on the Create option.

A new panel opens from the right-hand side. Click the Create button in the Job option.

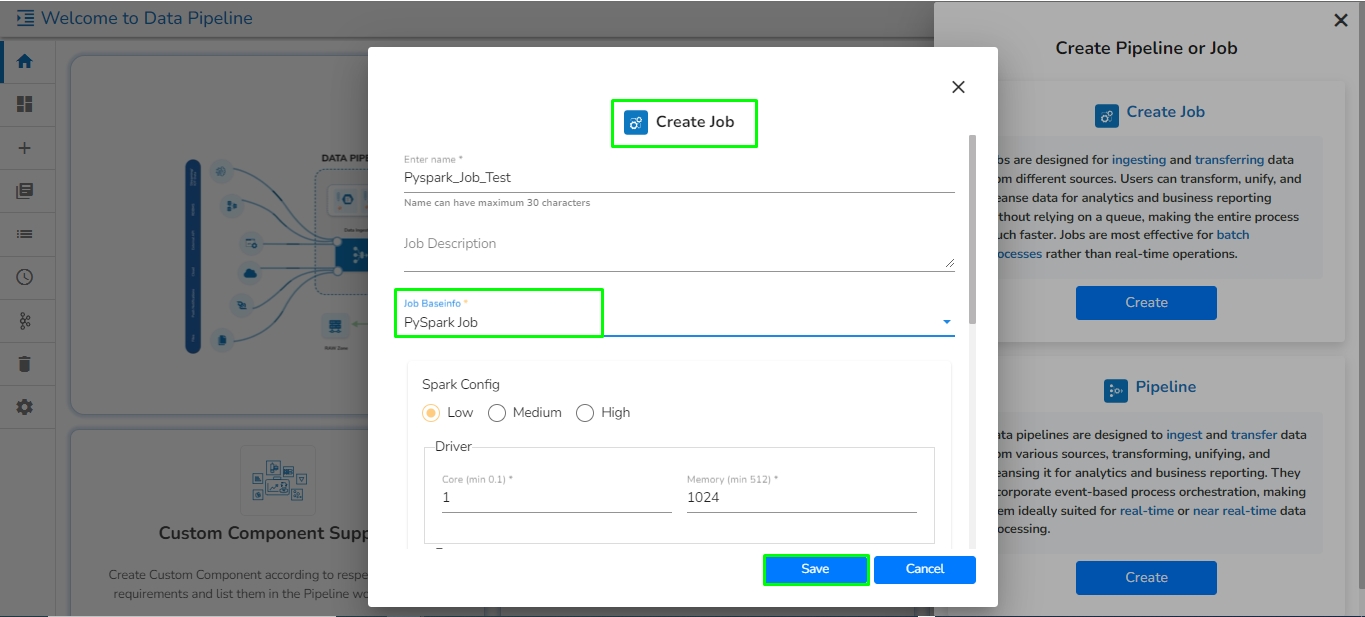

Provide the following information:

Enter name: Enter the name for the job.

Job Description: Add a description of the Job (optional).

Job Baseinfo: Select the PySpark Job option from the Job Baseinfo drop-down.

Trigger By: The PySpark Job can be triggered by another Job or PySpark Job in two scenarios:

On Success: Select a job from the drop-down. Once the selected job runs successfully, it triggers the PySpark Job.

On Failure: Select a job from the drop-down. Once the selected job fails, it triggers the PySpark Job.

Is Schedule: Put a checkmark in the given box to schedule the new Job.

Spark config: Select resource for the job.

Click the Save option to save the Job.

The PySpark Job is saved, and the user is redirected to the Job workspace.

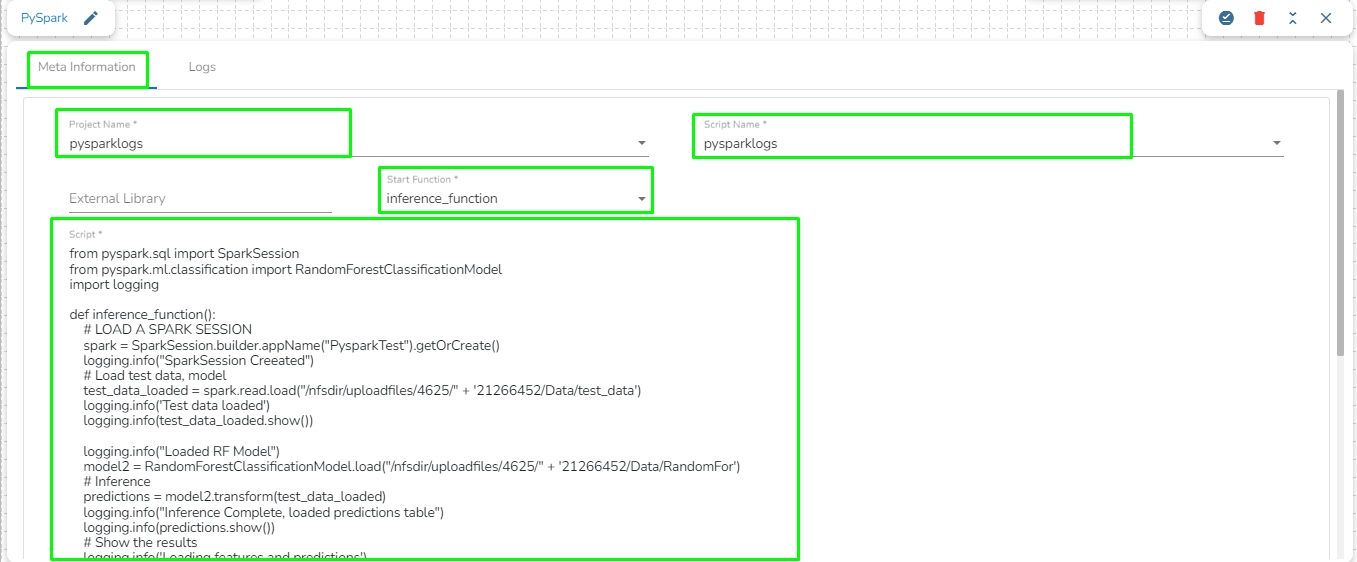

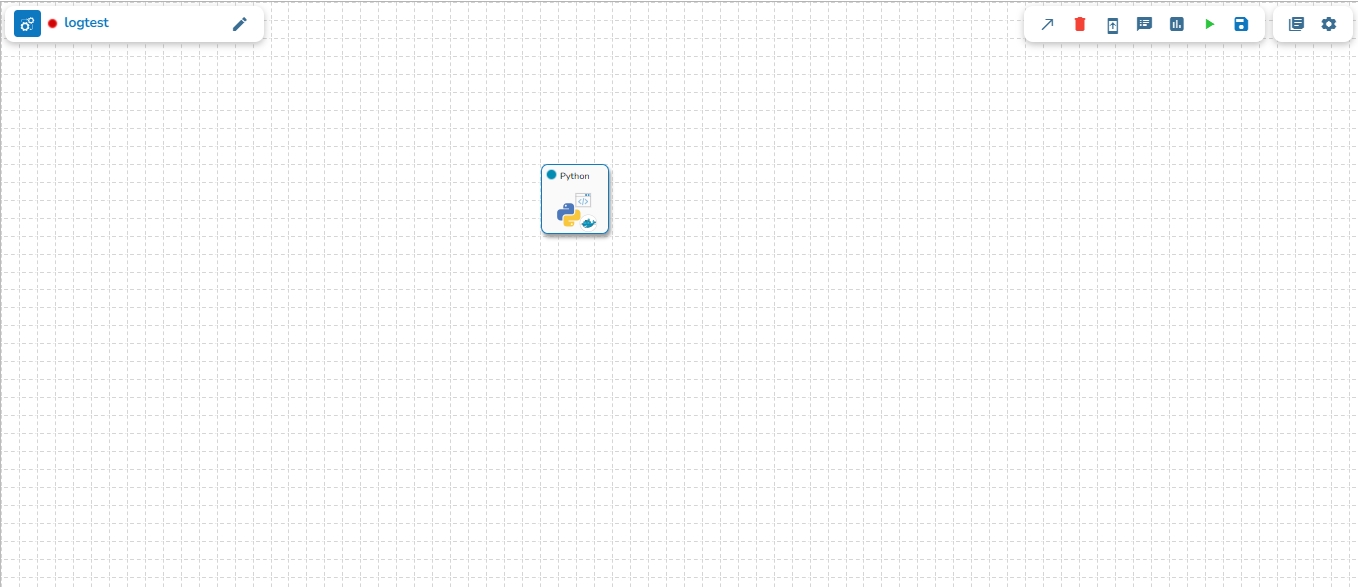

Once the PySpark Job is created, follow the below given steps to configure the Meta Information tab of the PySpark Job.

Project Name: Select the same Project using the drop-down menu where the concerned Notebook has been created.

Script Name: This field will list the exported Notebook names which are exported from the Data Science Lab module to Data Pipeline.

Please Note: The script written in the DS Lab module should be inside a function. Refer to the Export to Pipeline page for more details on how to export a PySpark script to the Data Pipeline module.

External Library: If any external libraries are used in the script, list them here. Separate multiple library names with commas (,).

Start Function: All the function names used in the script are listed here. Select the start function name to execute the Python script.

Script: The exported script appears in this space.

Input Data: If the start function takes parameters, provide each parameter name as the Key and its value as the Value in this field.

Please note: The following JDBC connectors are currently supported:

MySQL

MSSQL

Oracle

MongoDB

PostgreSQL

ClickHouse

The Script Executor job is designed to execute code snippets or scripts written in various programming languages such as Go, Julia, and Python. This job allows users to fetch code from their Git repository and execute it seamlessly.

Navigate to the Data Pipeline module homepage.

Open the pipeline homepage and click on the Create option.

A new panel opens from the right-hand side. Click the Create button in the Job option.

Enter a name for the new Job.

Describe the Job (Optional).

Job Baseinfo: Select Script Executer from the drop-down.

Trigger By: There are 2 options for triggering a job on success or failure of a job:

Success Job: On successful execution of the selected job the current job will be triggered.

Failure Job: On failure of the selected job the current job will be triggered.

Is Scheduled?

A job can be scheduled for a particular timestamp. Every time at the same timestamp the job will be triggered.

Job must be scheduled according to UTC.

Docker Configuration: Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

Provide the resources required to run the job in the Limit and Request sections.

Limit: Enter the maximum CPU and Memory required for the job.

Request: Enter the CPU and Memory required for the job at the start.

Instances: Enter the number of instances for the job.

Alert: Please refer to the Job Alerts page to configure alerts in the job.

Click the Save option to save the Script Executer Job.

The Script Executer Job gets saved, and it will redirect the users to the Job Editor workspace.

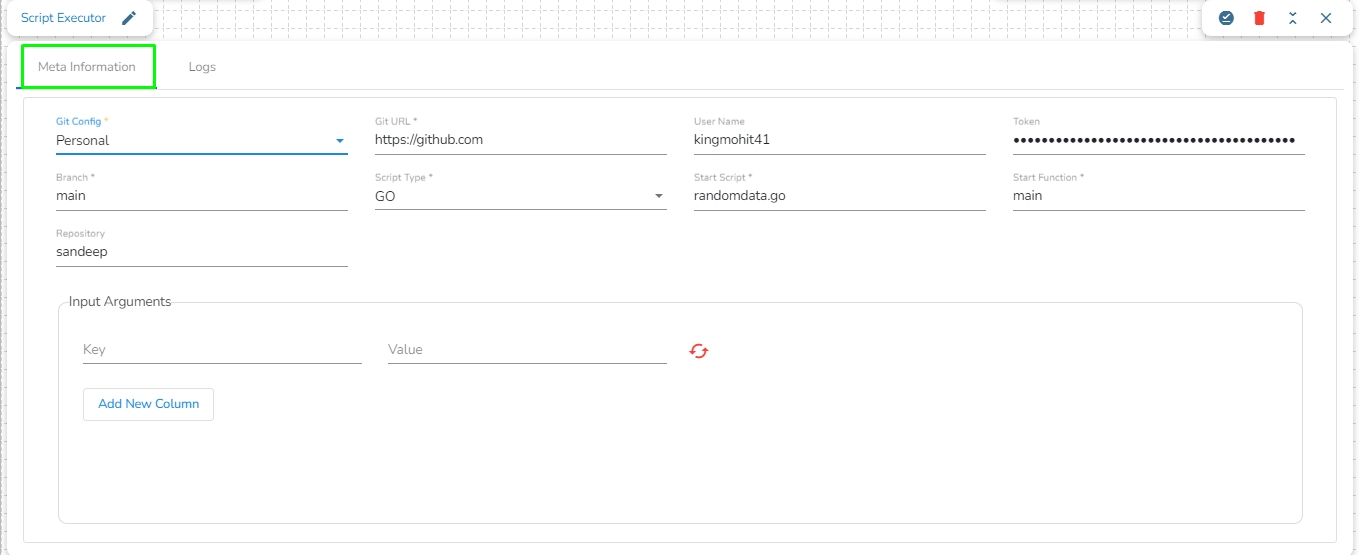

Please go through the demonstration given below to configure the Script Executor.

Git Config: Select an option from the drop-down.

Personal: If this option is selected, provide the following information:

Git URL: Enter the Git URL.

URL for Github: https://github.com

URL for Gitlab: https://gitlab.com

User Name: Enter the GIT username.

Token: Enter the Access token or API token for authentication and authorization when accessing the Git repository, commonly used for secure automated processes like code fetching and execution.

Branch: Specify the Git branch for code fetching.

Script Type: Select the script's language for execution from the drop down:

GO

Julia

Python

Start Script: Enter the script name (with extension) which has to be executed.

For example, if Python is selected as the Script type, then the script name will be in the following format: script_name.py.

If Golang is selected as the Script type, then the script name will be in the following format: script_name.go.

Start Function: Specify the initial function or method within the Start Script for execution, particularly relevant for languages like Python with reusable functions.

Repository: Provide the Git repository name.

Input Arguments: Allows users to provide input parameters or arguments needed for code execution, such as dynamic values, configuration settings, or user inputs affecting script behavior.

Admin: If this option is selected, then Git URL, User Name, Token & Branch fields have to be configured in the platform in order to use Script Executor.

In this option, the user has to provide the following fields:

Script Type

Start Script

Start Function

Repository

Input Arguments

Please Note: Follow the below given steps to configure GitLab/GitHub credentials in the Admin Settings in the platform:

Navigate to Admin >> Configurations >> Version Control.

From the first drop-down menu, select the Version.

Choose 'DsLabs' as the module from the drop-down.

Select either GitHub or GitLab based on the requirement for Git type.

Enter the host for the selected Git type.

Provide the token key associated with the Git account.

Select a Git project.

Choose the branch where the files are located.

After providing all the details correctly, click on 'Test,' and if the authentication is successful, an appropriate message will appear. Subsequently, click on the 'Save' option.

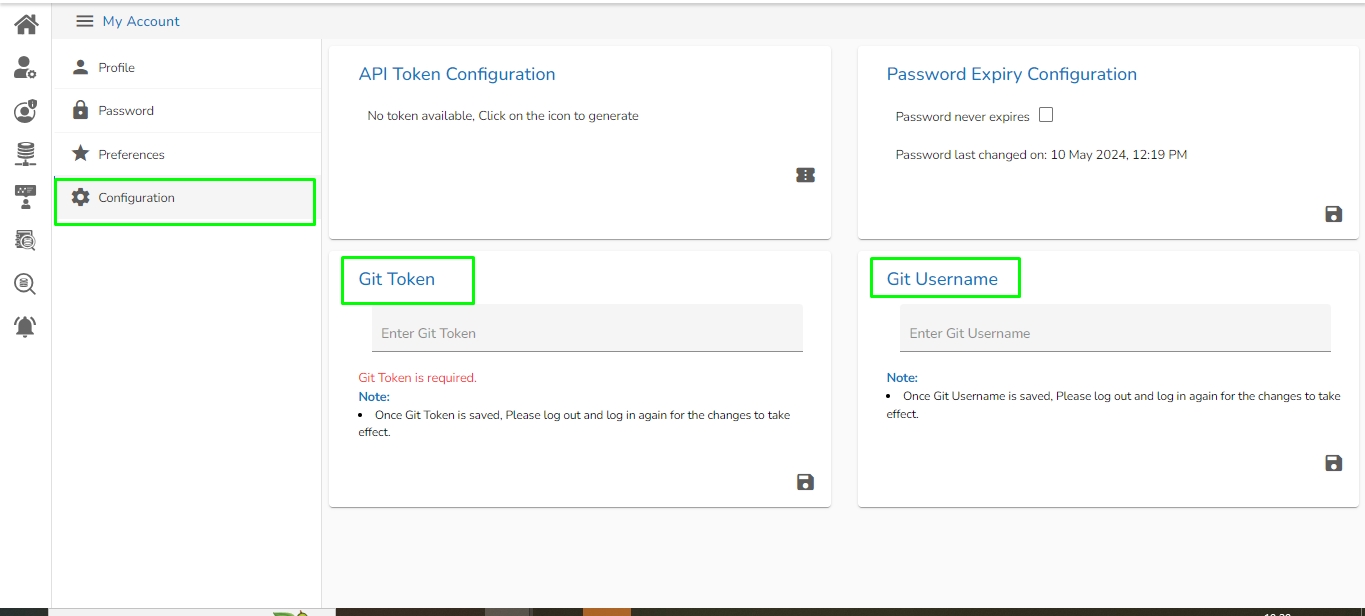

To complete the configuration, navigate to My Account >> Configuration. Enter the Git Token and Git Username, then save the changes.

Write Python scripts and run them flawlessly in the Jobs.

This feature allows users to write their own Python script and run it in the Jobs section of the Data Pipeline module.

Before creating the Python Job, the user has to create a project in the Data Science Lab module under the Python Environment. Please refer to the image below:

After creating the Data Science project, the users need to activate it and create a Notebook where they can write their own Python script. Once the script is written, the user must save it and export it to be able to use it in the Python Jobs.

Navigate to the Data Pipeline module homepage.

Open the pipeline homepage and click on the Create option.

A new panel opens from the right-hand side. Click the Create button in the Job option.

Enter a name for the new Job.

Describe the Job (Optional).

Job Baseinfo: Select Python Job from the drop-down.

Trigger By: There are 2 options for triggering a job on success or failure of a job:

Success Job: On successful execution of the selected job the current job will be triggered.

Failure Job: On failure of the selected job the current job will be triggered.

Is Scheduled?

A job can be scheduled for a particular timestamp. Every time at the same timestamp the job will be triggered.

Job must be scheduled according to UTC.

On demand: Check the "On demand" option to create an on-demand job. For more information, refer to the Python Job (On demand) section.

Docker Configuration: Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

Provide the resources required to run the Python Job in the Limit and Request sections.

Limit: Enter max CPU and Memory required for the Python Job.

Request: Enter the CPU and Memory required for the job at the start.

Instances: Enter the number of instances for the Python Job.

Alert: Please refer to the Job Alerts page to configure alerts in job.

Click the Save option to save the Python Job.

The Python Job gets saved, and it will redirect the users to the Job Editor workspace.

Check out the demonstration given below to configure a Python Job.

Once the Python Job is created, follow the below given steps to configure the Meta Information tab of the Python Job.

Project Name: Select the same Project using the drop-down menu where the Notebook has been created.

Script Name: This field will list the exported Notebook names which are exported from the Data Science Lab module to Data Pipeline.

External Library: If any external libraries are used in the script, list them here. Separate multiple library names with commas (,).

Start Function: All the function names used in the script are listed here. Select the start function name to execute the Python script.

Script: The exported script appears in this space.

Input Data: If the start function takes parameters, provide each parameter name as the Key and its value as the Value in this field.
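The Key and Value pairs of the Input Data field correspond to keyword arguments of the start function. The sketch below illustrates that mapping under the assumption that each Key must match a parameter name; the helper `call_start_function` and the start function `load_and_count` are hypothetical, and how the platform actually passes the values is not documented here.

```python
import inspect


def call_start_function(func, input_data):
    """Call func with input_data, where every Key must be a parameter name."""
    parameters = inspect.signature(func).parameters
    unknown = sorted(set(input_data) - set(parameters))
    if unknown:
        raise KeyError(f"no such parameter(s): {unknown}")
    return func(**input_data)


def load_and_count(file_path, delimiter):
    """Hypothetical start function with two parameters."""
    return f"would read {file_path} using delimiter {delimiter!r}"


# Key = parameter name, Value = parameter value.
input_data = {"file_path": "data/input.csv", "delimiter": ","}
message = call_start_function(load_and_count, input_data)
print(message)
```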

In Apache Kafka, a "producer" is a client application or program that is responsible for publishing (or writing) messages to a Kafka topic.

A Kafka producer sends messages to Kafka brokers, which are then distributed to the appropriate consumers based on the topic, partitioning, and other configurable parameters.

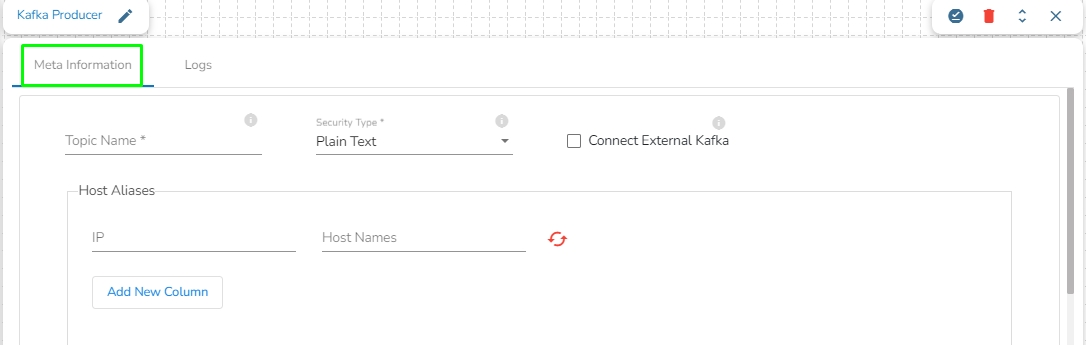

Drag the Kafka Producer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Topic Name: Specify the name of the topic to which the data should be produced.

Security Type: Select the security type from drop down:

Plain Text

SSL

Is External: Enable the 'Is External' option to produce data to an external Kafka topic. The 'Bootstrap Server' and 'Config' fields are displayed once this option is enabled.

Bootstrap Server: Enter external bootstrap details.

Config: Enter configuration details.

Host Aliases: In Apache Kafka, a host alias (also known as a hostname alias) is an alternative name that can be used to refer to a Kafka broker in a cluster. Host aliases are useful when you need to refer to a broker using a name other than its actual hostname.

IP: Enter the IP.

Host Names: Enter the host names.

Please Note: Please click the Save Task In Storage icon to save the configuration for the dragged writer task.

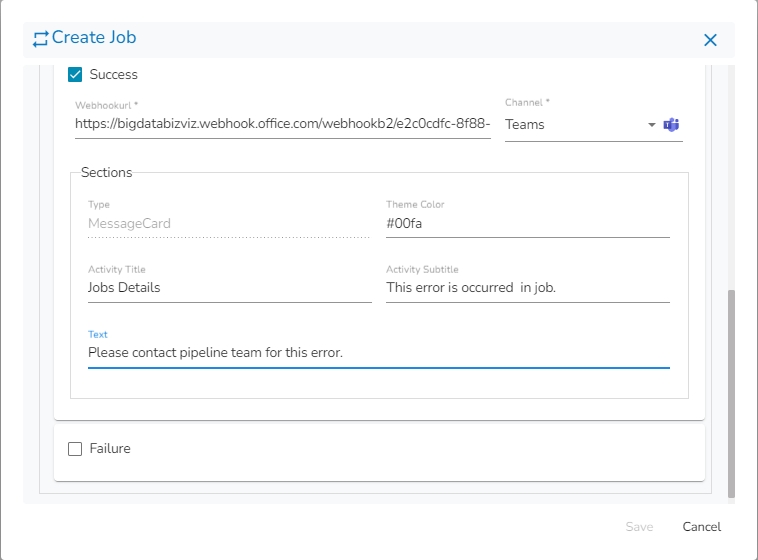

The Alert feature in the job allows users to send an alert message to the specified channel (Teams or Slack) in the event of either the success or failure of the configured job. Users can also choose both success and failure options to send an alert for the configured job.

Webhook URL: Provide the Webhook URL of the selected channel group where the Alert message needs to be sent.

Type: Message Card. (This field will be Pre-filled)

Theme Color: Enter the hexadecimal color code for the ribbon color in the selected channel. Please refer to the image at the bottom of this page.

Sections: This tab contains the following fields:

Activity Title: This is the title of the alert to be sent to the Teams channel. Enter the Activity Title as per the requirement.

Activity Subtitle: Enter the Activity Subtitle. Please refer to the image at the bottom of this page.

Text: Enter the text message which should be sent along with Alert.
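The Teams fields above correspond to keys of the Microsoft Teams message card format. The following sketch builds such a card as a Python dictionary; it assumes the platform sends a standard message card to the webhook, and the function name and sample values are hypothetical.

```python
import json


def build_teams_card(theme_color, activity_title, activity_subtitle, text):
    """Build a Teams message card from the alert fields."""
    return {
        "@type": "MessageCard",
        "@context": "http://schema.org/extensions",
        "themeColor": theme_color,          # hexadecimal color without '#'
        "summary": activity_title,
        "sections": [
            {
                "activityTitle": activity_title,
                "activitySubtitle": activity_subtitle,
                "text": text,
            }
        ],
    }


# Hypothetical sample values.
card = build_teams_card("0076D7", "Job Alert", "Nightly load", "The job succeeded.")
print(json.dumps(card, indent=2))
```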

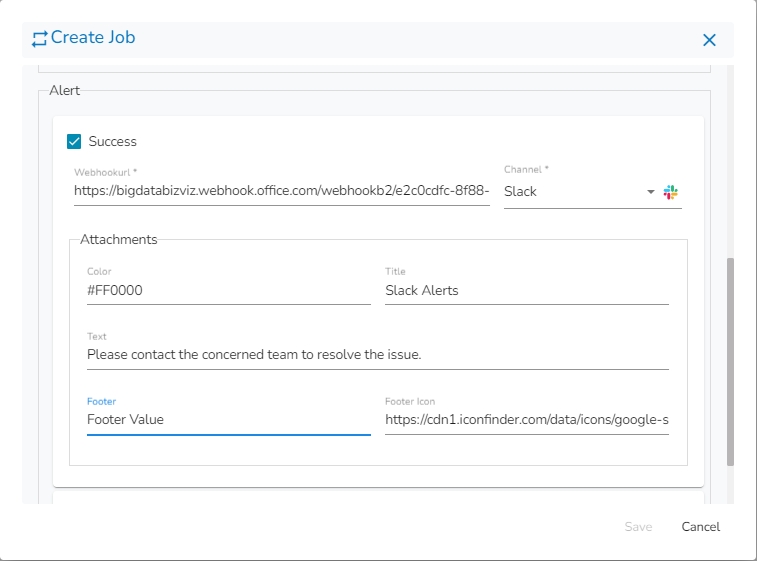

Webhook URL: Provide the Webhook URL of the selected channel group where the Alert message needs to be sent.

Attachments: This tab contains the following fields:

Title: This is the title of the alert to be sent to the selected channel. Enter the Title as per the requirement.

Color: Enter the hexadecimal color code for the ribbon color in the Slack channel. Please refer to the image at the bottom of this page.

Text: Enter the text message to be sent along with the alert.

Footer: The footer is additional content appended at the end of a message in a Slack channel, such as a signature, contact information, or other supplementary details that give context to the message.

Footer Icon: The footer icon is an image displayed at the bottom of a message or attachment, such as a company logo or an application icon that represents the sender. Enter the image URL as the value of the Footer Icon.

Follow these steps to set the Footer icon in Slack:

Go to the desired image that has to be used as the footer icon.

Right-click on the image.

Select the 'Copy image address' to get the image URL.

Now, the obtained image URL can be used as the value for the footer icon in Slack.
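The Slack fields described above correspond to keys of a Slack message attachment. The sketch below assembles them into a dictionary; it assumes the platform posts a standard attachment to the webhook, and the function name, sample values, and the example.com icon URL are hypothetical.

```python
import json


def build_slack_alert(title, color, text, footer, footer_icon):
    """Build a Slack webhook body with a single attachment."""
    return {
        "attachments": [
            {
                "title": title,
                "color": color,              # hexadecimal color, e.g. "#36a64f"
                "text": text,
                "footer": footer,
                "footer_icon": footer_icon,  # URL of the footer image
            }
        ]
    }


# Hypothetical sample values.
alert = build_slack_alert(
    "Job Alert",
    "#36a64f",
    "The job succeeded.",
    "Data Pipeline",
    "https://example.com/icon.png",
)
print(json.dumps(alert, indent=2))
```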

Sample image URL for Footer icon:

Sample Hexadecimal Color code which can be used in Job Alert.

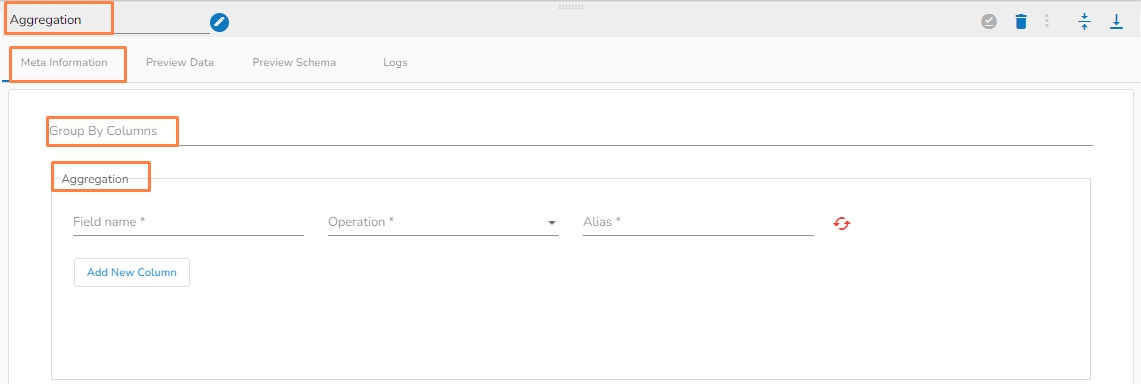

This page aims to explain the various transformation options provided on the Jobs Editor page.

The following transformations are provided under the Transformations section.

Alter Columns

Select Columns

Date Formatter

Query

Filter

Formula

Join

Aggregation

Sort Task

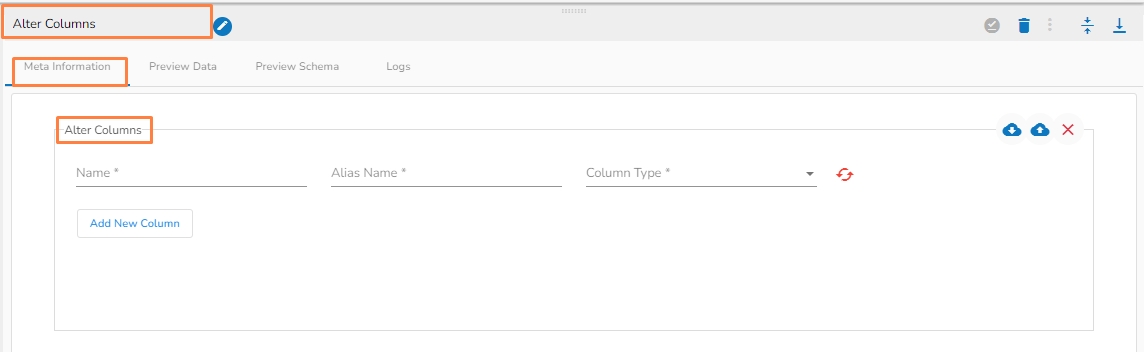

The Alter Columns command is used to change the data type of a column in a table.

In the Alter Columns tab, add the name of each column whose data type needs to be changed.

Name (*): Column name.

Alias Name(*) : New column name.

Column Type(*): Specify datatype from dropdown.

Add New Column: Multiple columns can be added for desired modification in the datatype.

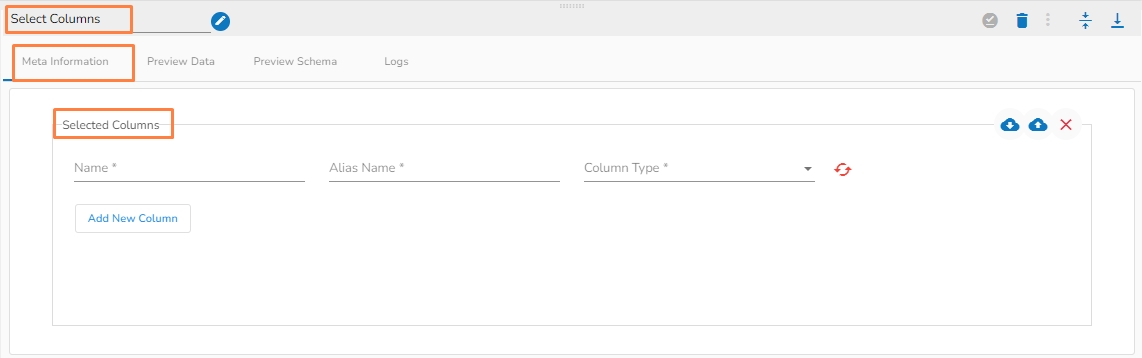

The Select Columns transformation selects particular columns from a table definition.

Name (*): Column name.

Alias Name(*) : New column name.

Column Type(*) : Specify datatype from dropdown.

Add New Column: Multiple columns can be added for the desired result

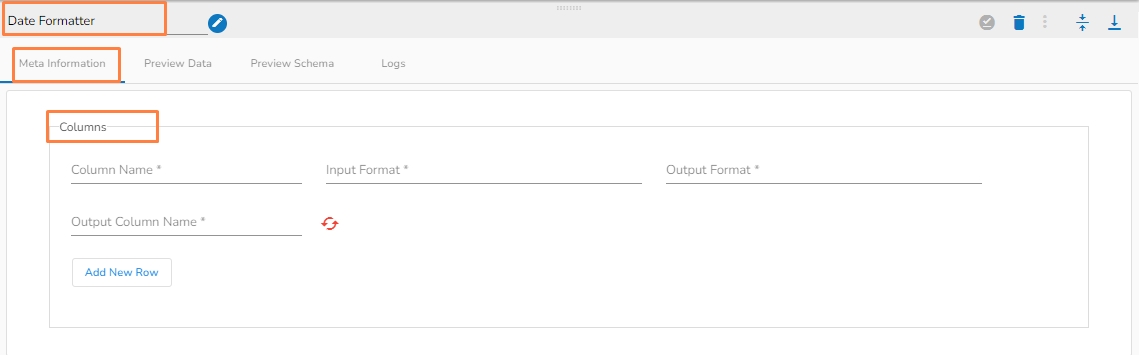

It helps in converting Date and Datetime columns to a desired format.

Name (*): Column name.

Input Format (*): Select the format of the input column. A total of 61 formats are available.

Output Format(*): The format in which the output will be given.

Output Column Name(*): Name of the output column.

Add New Row: To insert a new row.
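
The conversion can be pictured as "parse with the input format, then print with the output format". The sketch below shows that idea with Python's `datetime`; the format strings use Python's `strptime`/`strftime` notation, which may differ from the pattern names offered in the platform's drop-down, and the `format_dates` helper and column names are hypothetical.

```python
from datetime import datetime


def format_dates(rows, name, input_format, output_format, output_column_name):
    """Parse column `name` with `input_format` and write it, re-rendered in
    `output_format`, to `output_column_name`."""
    formatted_rows = []
    for row in rows:
        parsed = datetime.strptime(row[name], input_format)
        new_row = dict(row)
        new_row[output_column_name] = parsed.strftime(output_format)
        formatted_rows.append(new_row)
    return formatted_rows


rows = [{"order_date": "25/12/2023"}, {"order_date": "01/02/2024"}]
formatted = format_dates(
    rows,
    name="order_date",
    input_format="%d/%m/%Y",
    output_format="%Y-%m-%d",
    output_column_name="order_date_iso",
)
print(formatted)
```

A value that does not match the input format raises `ValueError` in this sketch, which is why the Input Format must describe the data as it is actually stored.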

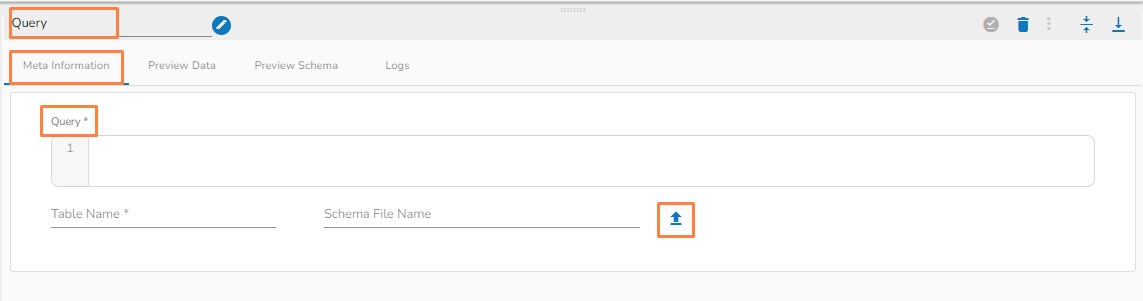

The Query transformation allows you to write SQL (DML) queries such as Select queries and data view queries.

Query(*): Provide a valid query to transform data.

Table Name(*): Provide the table name.

Schema File Name: Upload Spark Schema file in JSON format.

Choose File: Upload a file from the system.

Please Note: ALTER queries are not supported in this transformation.
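
The kind of query this transformation accepts can be tried out locally. The sketch below uses Python's built-in `sqlite3` module as a stand-in engine, so the SQL dialect is SQLite's rather than Spark SQL's; the table name `orders` plays the role of the Table Name field, and the table, columns, and data are hypothetical.

```python
import sqlite3

# In-memory database standing in for the incoming data of the job.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "east", 120.0), (2, "west", 80.0), (3, "east", 45.5)],
)

# A SELECT query of the kind entered in the Query field; it refers to the
# data by the name given in the Table Name field.
query = "SELECT id, amount FROM orders WHERE region = 'east' ORDER BY id"
result = conn.execute(query).fetchall()
conn.close()

print(result)
```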

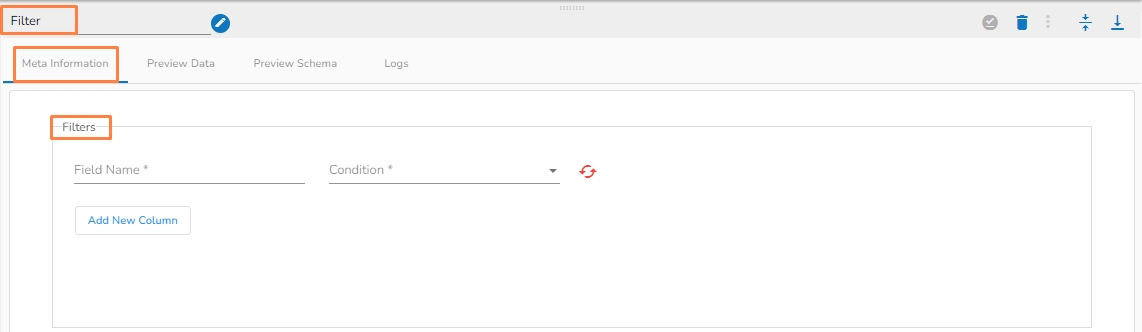

The Filter transformation allows the user to filter table data based on defined conditions.

Field Name(*): Provide field name.

Condition (*): Select a condition. A total of 8 condition operations are available.

Logical Condition (*): Select AND or OR to combine multiple conditions.

Add New Column: Adds a new column.
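
A filter configuration is essentially a list of field conditions joined by AND or OR. The plain-Python sketch below illustrates this under stated assumptions: the document does not list the 8 available conditions, so the six comparison operators here are stand-ins, and the `filter_rows` helper and sample data are hypothetical.

```python
import operator

# Stand-in condition operations (the platform's actual list is not shown here).
CONDITIONS = {
    "==": operator.eq,
    "!=": operator.ne,
    ">": operator.gt,
    "<": operator.lt,
    ">=": operator.ge,
    "<=": operator.le,
}


def filter_rows(rows, conditions, logical_condition="AND"):
    """Keep rows matching the (field_name, condition, value) triples.

    With "AND" every condition must hold; with "OR" at least one must hold.
    """
    combine = all if logical_condition == "AND" else any
    return [
        row
        for row in rows
        if combine(
            CONDITIONS[condition](row[field_name], value)
            for field_name, condition, value in conditions
        )
    ]


rows = [
    {"name": "Asha", "age": 30, "city": "Pune"},
    {"name": "Ravi", "age": 17, "city": "Pune"},
    {"name": "Meera", "age": 45, "city": "Delhi"},
]
conditions = [("age", ">=", 18), ("city", "==", "Pune")]

and_result = filter_rows(rows, conditions, "AND")
or_result = filter_rows(rows, conditions, "OR")
print([r["name"] for r in and_result], [r["name"] for r in or_result])
```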

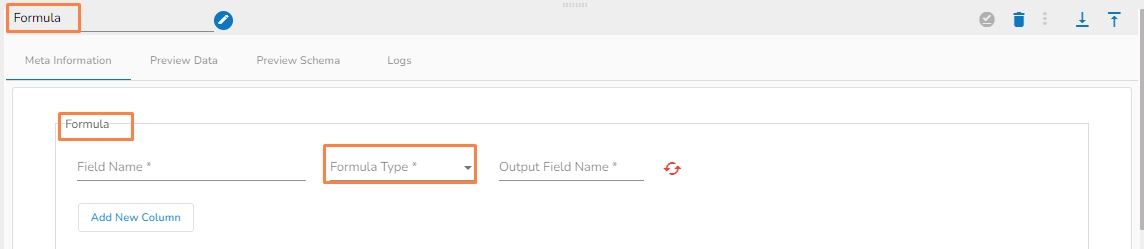

The Formula transformation computes a result for each row based on the selected formula type.

Field Name(*): Provide field name.

Formula Type(*): Select a Formula Type from the drop-down option.

Math (22 Math Operations)

String (16 String Operations)

Bitwise (3 Bitwise Operations)

Output Field Name(*): Provide the output field name.

Add New Column: Adds a new column.
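
The three formula types can be pictured as families of per-value functions whose result is written to the output field. The sketch below shows a few representative operations per family in plain Python; the operation names are hypothetical examples and not the platform's actual list of Math, String, and Bitwise operations.

```python
import math

# A few illustrative operations per Formula Type (hypothetical names).
FORMULAS = {
    "math": {"sqrt": math.sqrt, "abs": abs, "ceil": math.ceil},
    "string": {"upper": str.upper, "trim": str.strip, "length": len},
    "bitwise": {"not": lambda value: ~value},
}


def apply_formula(rows, field_name, formula_type, operation, output_field_name):
    """Compute `operation` on `field_name` and store it in `output_field_name`."""
    func = FORMULAS[formula_type][operation]
    return [{**row, output_field_name: func(row[field_name])} for row in rows]


rows = [{"qty": 16, "label": "  ab  "}]
rows = apply_formula(rows, "qty", "math", "sqrt", "qty_root")
rows = apply_formula(rows, "label", "string", "trim", "label_clean")
rows = apply_formula(rows, "qty", "bitwise", "not", "qty_inverted")
print(rows)
```

Chaining the calls mirrors adding several rows with Add New Column, each producing its own output field.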

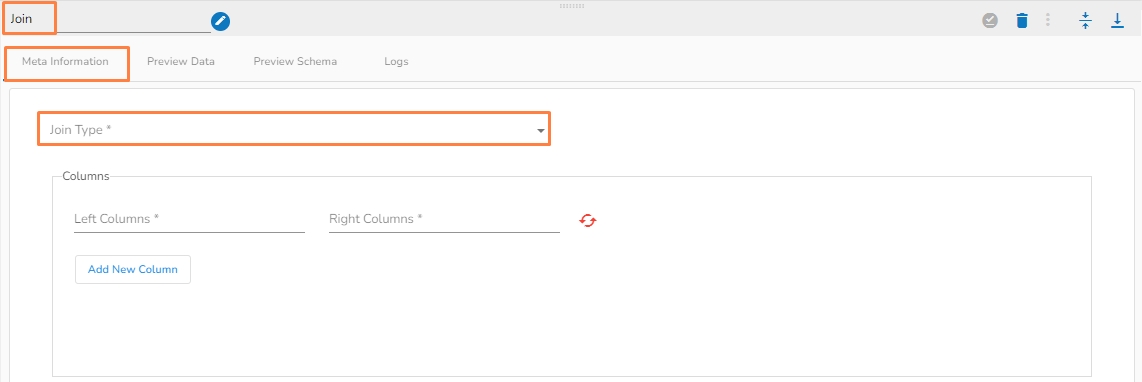

The Join transformation joins two tables based on the specified column conditions.

Join Type (*): Select a Join type from the drop-down menu.

The supported Join Types are:

Inner

Outer

Full

Full outer

Left outer

Left

Right outer

Right

Left semi

Left anti

Left Column(*): Conditional column from the left table.

Right Column (*): Conditional column from the right table.

Add New Column: Adds a new column.
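
The less familiar join types are easier to understand on a small example. The plain-Python sketch below implements inner, left, left semi, and left anti joins on lists of rows; it illustrates the general semantics of these join types rather than the platform's Spark implementation, and the `join` helper, type labels, and sample tables are hypothetical. In Spark's own join API, "outer", "full", and "full outer" name the same join, as do "left" and "left outer", and "right" and "right outer"; the drop-down presumably follows the same convention.

```python
def join(left, right, left_column, right_column, join_type="inner"):
    """Join two lists of row dicts on left_column == right_column."""
    if join_type not in {"inner", "left", "left_semi", "left_anti"}:
        raise ValueError(f"unsupported join type in this sketch: {join_type}")

    def matches_for(left_row):
        return [r for r in right if r[right_column] == left_row[left_column]]

    # Semi and anti joins return left rows only, at most once each.
    if join_type == "left_semi":
        return [l for l in left if matches_for(l)]
    if join_type == "left_anti":
        return [l for l in left if not matches_for(l)]

    right_keys = list(right[0]) if right else []
    joined = []
    for left_row in left:
        matches = matches_for(left_row)
        for right_row in matches:
            joined.append({**left_row, **right_row})
        if not matches and join_type == "left":
            # Unmatched left rows are kept, with empty right-hand columns.
            joined.append({**left_row, **{key: None for key in right_keys}})
    return joined


customers = [
    {"cust_id": 1, "name": "Ada"},
    {"cust_id": 2, "name": "Bo"},
    {"cust_id": 3, "name": "Cy"},
]
orders = [
    {"order_cust": 1, "total": 50},
    {"order_cust": 1, "total": 20},
    {"order_cust": 3, "total": 5},
]

inner_rows = join(customers, orders, "cust_id", "order_cust", "inner")
left_rows = join(customers, orders, "cust_id", "order_cust", "left")
semi_rows = join(customers, orders, "cust_id", "order_cust", "left_semi")
anti_rows = join(customers, orders, "cust_id", "order_cust", "left_anti")
print(len(inner_rows), len(left_rows), semi_rows, anti_rows)
```

Left semi returns the customers that have at least one order, and left anti returns the customers that have none; neither adds columns from the right table.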

The Aggregation transformation performs a calculation on a set of values and returns a single value for each group defined by the Group By columns.

Group By Columns (*): Provide the names of the columns to group by.

Field Name(*): Provide the field name.

Operation (*): Select the aggregate operation. A total of 30 operations are available.

Alias(*): Provide an alias name.

Add New Column: Adds a new column.
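
Grouping and aggregating can be sketched in a few lines of plain Python. The example below collects the values of one field per group and reduces them with the chosen operation; the five operations shown are common stand-ins for the platform's list, and the `aggregate` helper and sample data are hypothetical.

```python
from collections import defaultdict

# A few common aggregate operations (stand-ins for the platform's list).
OPERATIONS = {
    "sum": sum,
    "min": min,
    "max": max,
    "count": len,
    "avg": lambda values: sum(values) / len(values),
}


def aggregate(rows, group_by_columns, field_name, operation, alias):
    """Group rows by `group_by_columns` and reduce `field_name` per group."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[column] for column in group_by_columns)
        groups[key].append(row[field_name])

    func = OPERATIONS[operation]
    return [
        {**dict(zip(group_by_columns, key)), alias: func(values)}
        for key, values in groups.items()
    ]


rows = [
    {"region": "east", "amount": 10},
    {"region": "west", "amount": 5},
    {"region": "east", "amount": 30},
]
totals = aggregate(rows, ["region"], "amount", "sum", "total_amount")
averages = aggregate(rows, ["region"], "amount", "avg", "avg_amount")
print(totals, averages)
```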

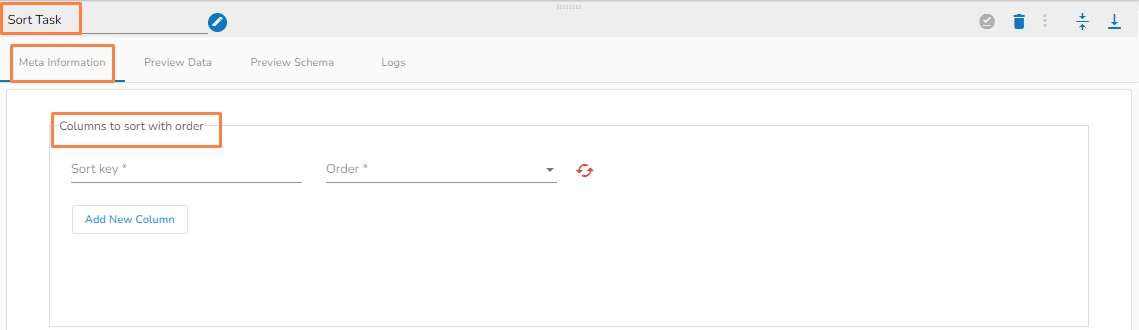

This transformation sorts all the data from a table based on the selected column and order.

Sort Key(*): Provide a sort key.

Order (*): Select either Ascending or Descending.

Add New Column: Adds a new column.
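
Sorting on more than one key can be sketched as follows, assuming that each row added with Add New Column contributes a further, lower-priority sort key (this reading of the form is an assumption). The `sort_rows` helper and sample data are hypothetical.

```python
from operator import itemgetter


def sort_rows(rows, sort_keys):
    """Sort rows by a list of (sort_key, order) pairs.

    The first pair is the primary key. `order` is "Ascending" or "Descending".
    """
    result = list(rows)
    # Python's sort is stable, so sorting by the least significant key first
    # and the primary key last yields the combined multi-key ordering.
    for sort_key, order in reversed(sort_keys):
        result.sort(key=itemgetter(sort_key), reverse=(order == "Descending"))
    return result


rows = [
    {"dept": "B", "salary": 10},
    {"dept": "A", "salary": 20},
    {"dept": "A", "salary": 30},
]
ordered = sort_rows(rows, [("dept", "Ascending"), ("salary", "Descending")])
print(ordered)
```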