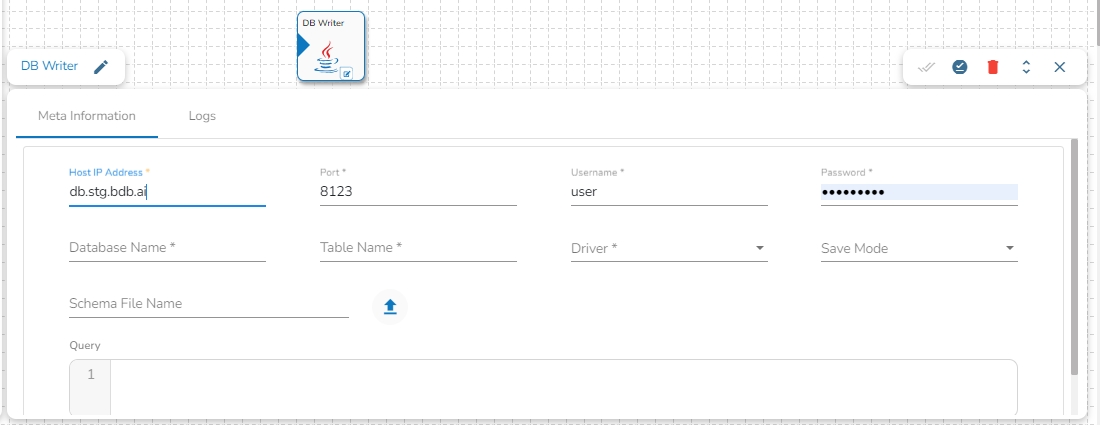

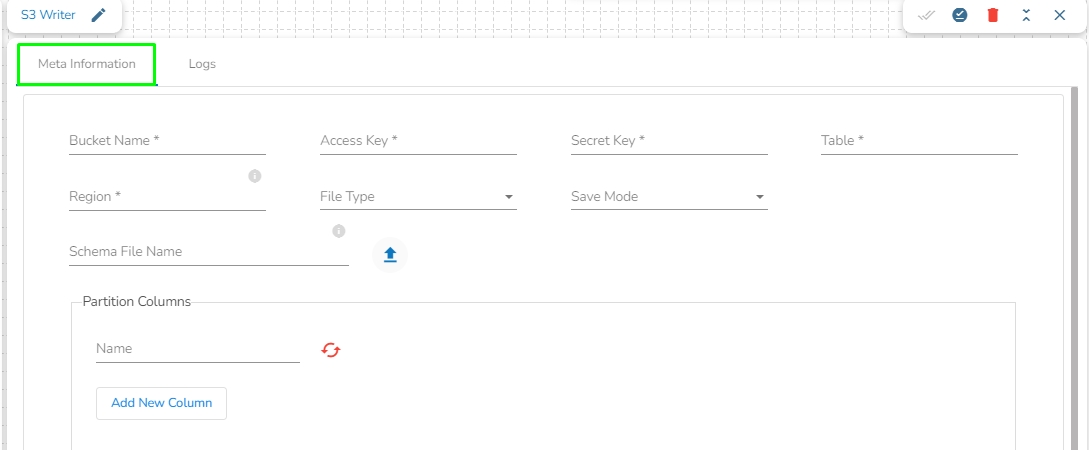

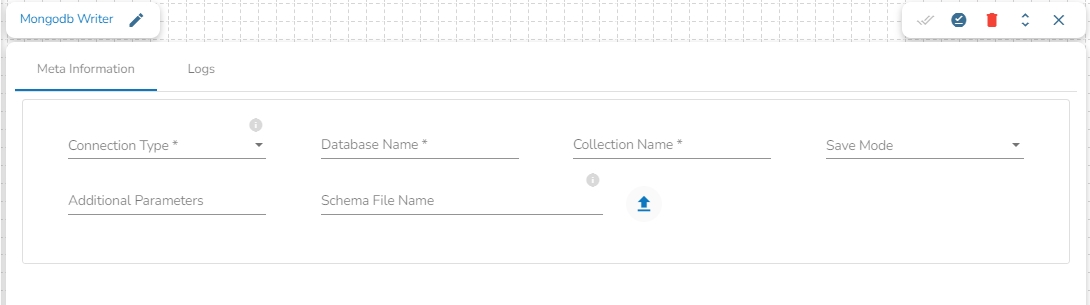

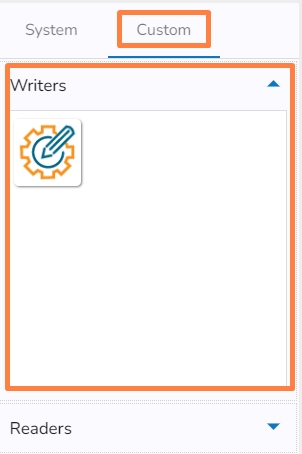

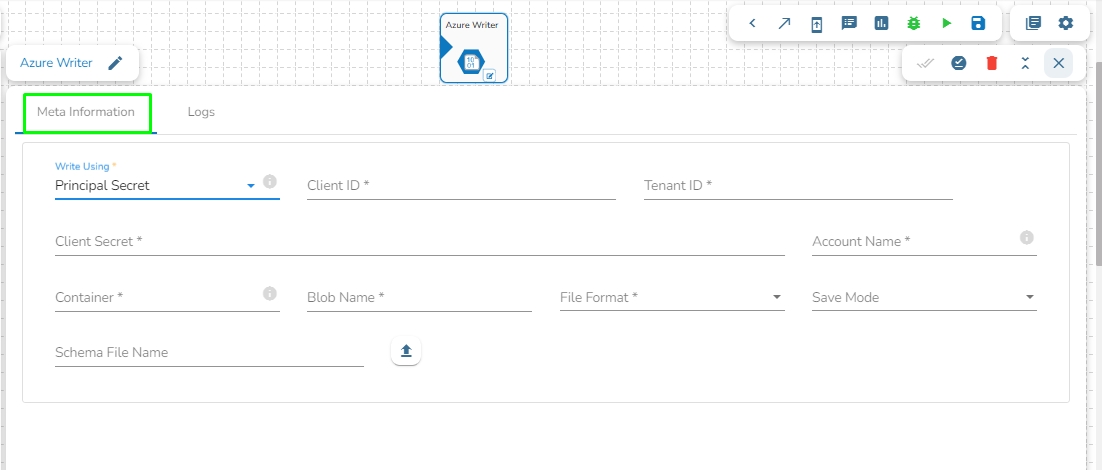

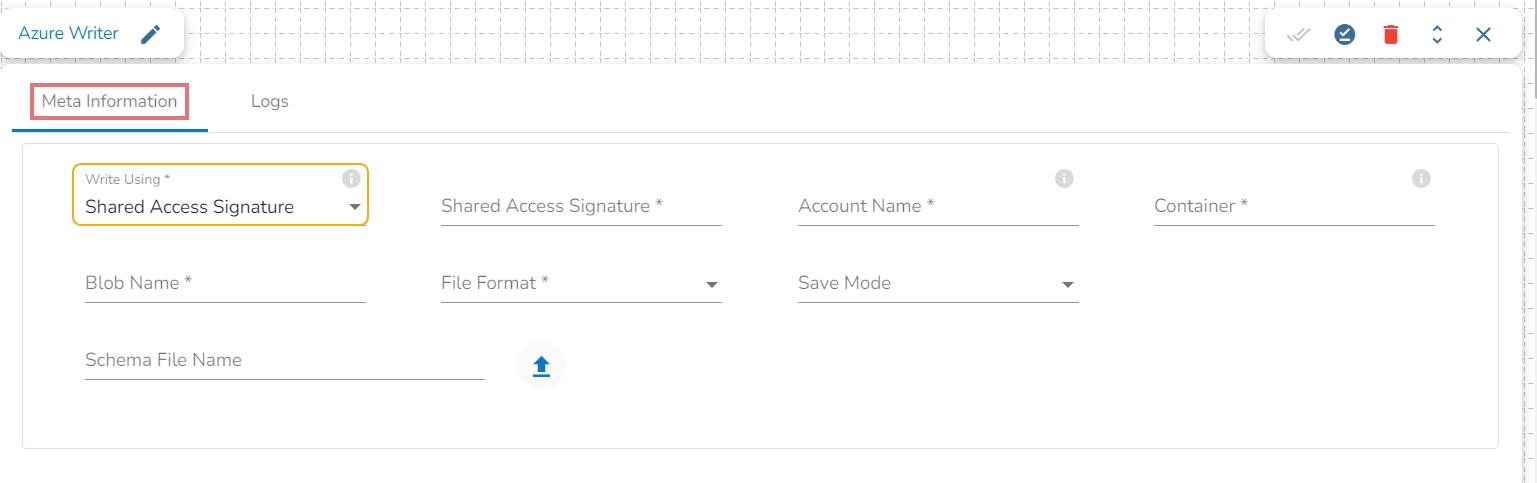

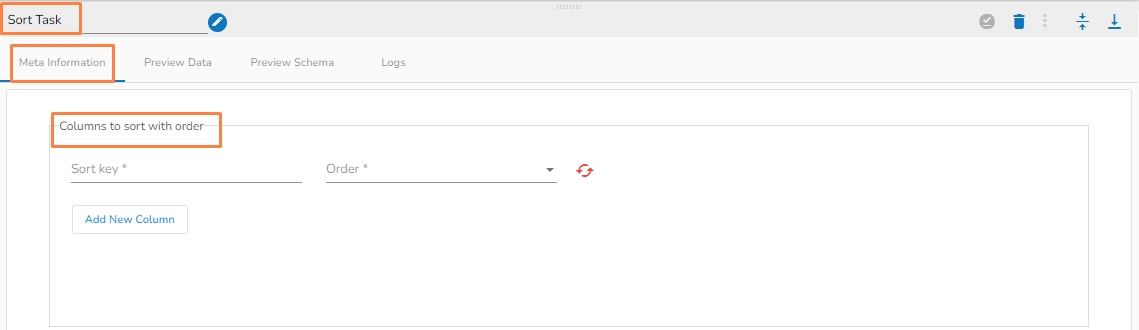

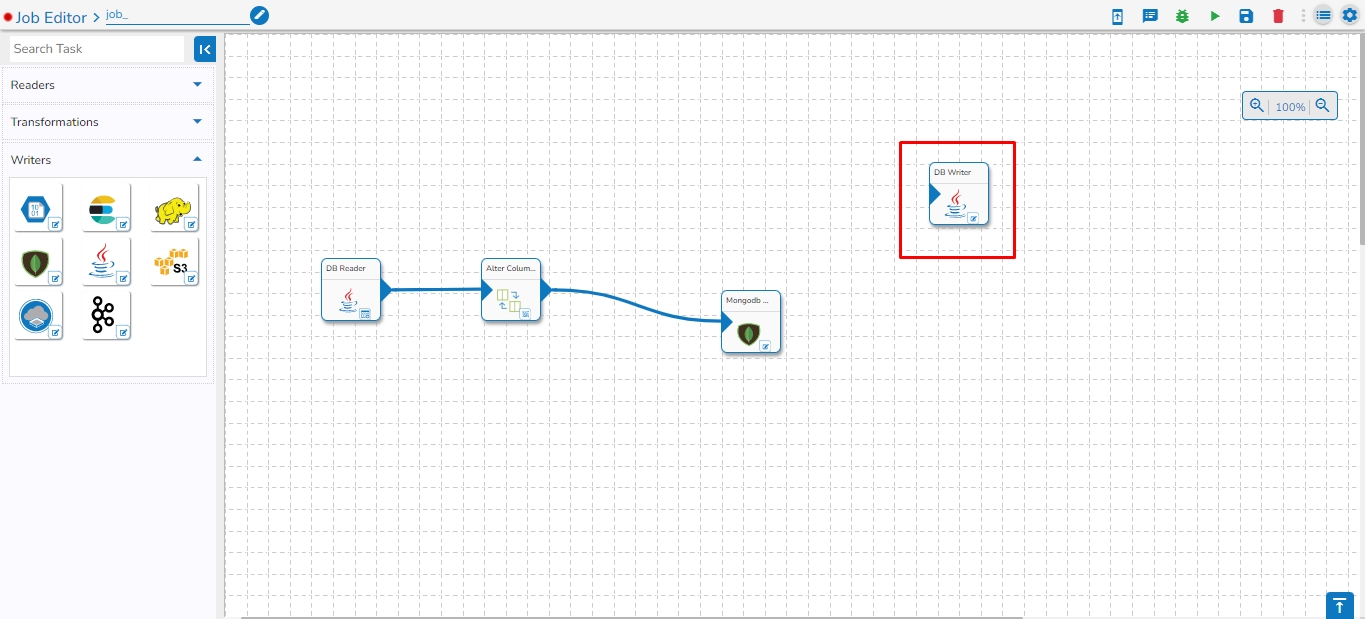

All the available Writer Task components for a Job are explained in this section.

Writers are a group of components that can write data to different databases and cloud storage services.

There are eight (8) Writer tasks in Jobs. All the Writer tasks have the following tabs:

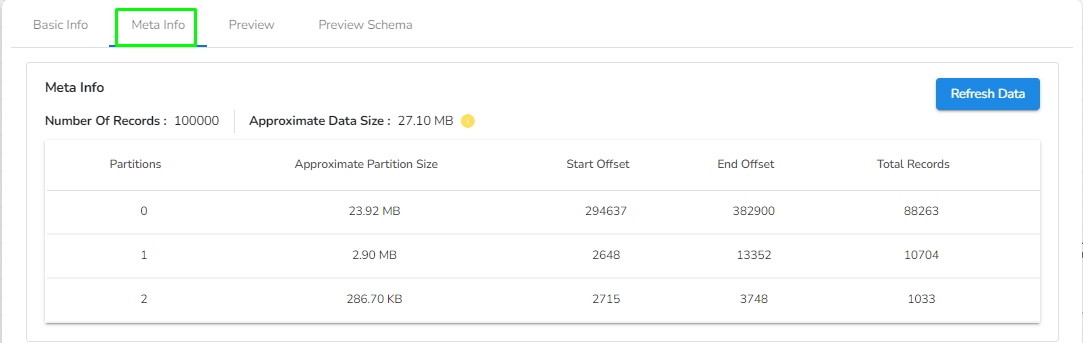

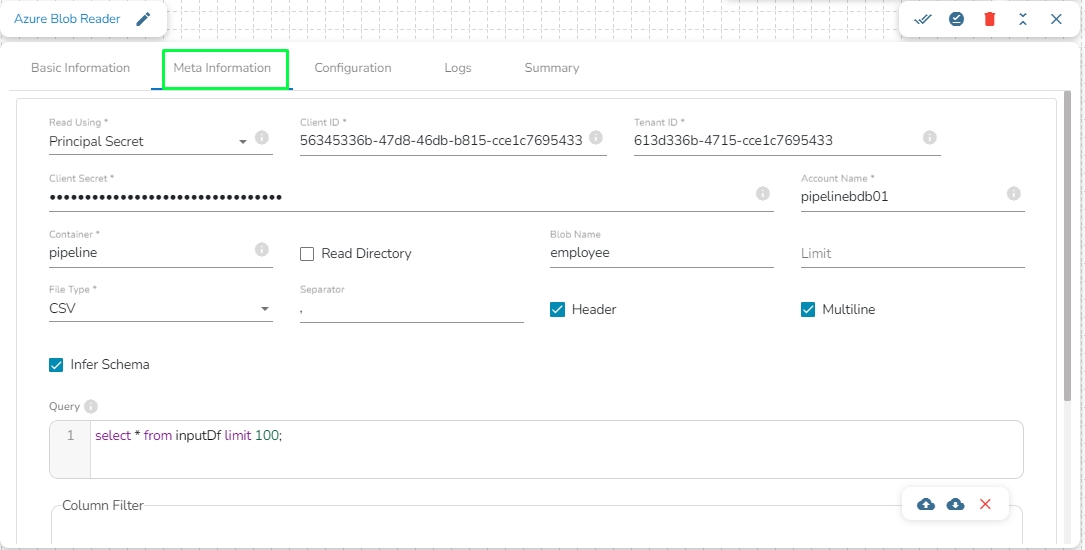

Meta Information: Configure the meta information in the same way as for pipeline components.

Preview Data: Only ten (10) random records can be previewed in this tab, and only when the task is running in Development mode.

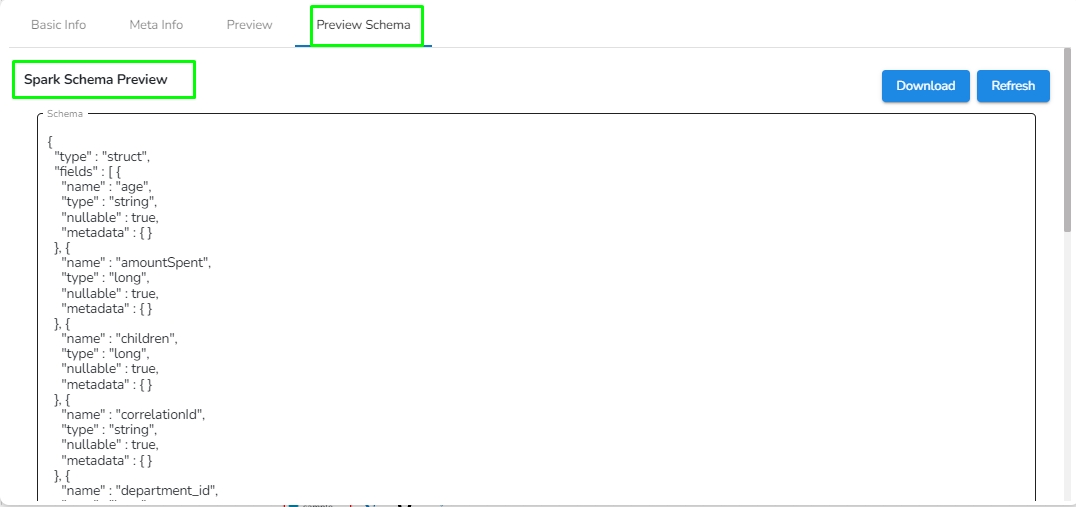

Preview schema: Spark schema of the data will be shown in this tab.

Logs: The task logs are displayed here.

This section provides details about the various categories of the task components which can be used in the Spark Job.

There are three categories of task components available:

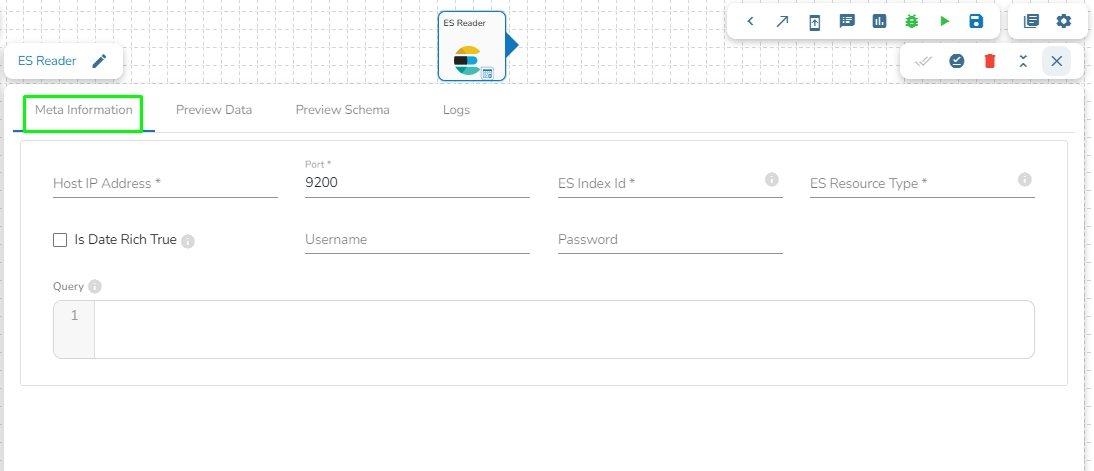

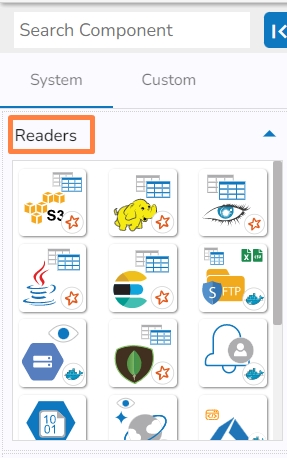

All the available Reader Task components are included in this section.

Readers are a group of tasks that can read data from different databases and cloud storage services. In Jobs, all the tasks run in real time.

There are eight (8) Reader tasks in Jobs. All the Reader tasks contain the following tabs:

Meta Information: Configure the meta information in the same way as for pipeline components.

Preview Data: Only ten (10) random records can be previewed in this tab, and only when the task is running in Development mode.

Preview Schema: The Spark schema of the data being read is shown in this tab.

Logs: The task logs are displayed here.

Readers are a group of components that can read data from different databases and cloud storage services in both invocation types, i.e., Real-Time and Batch.

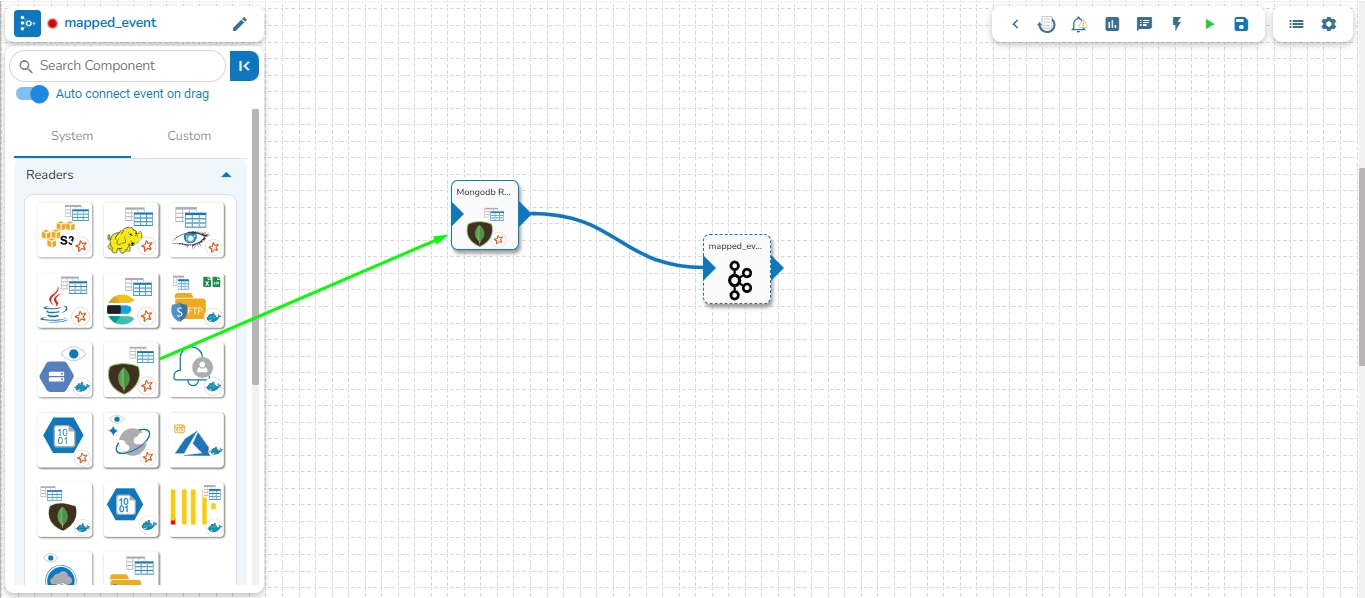

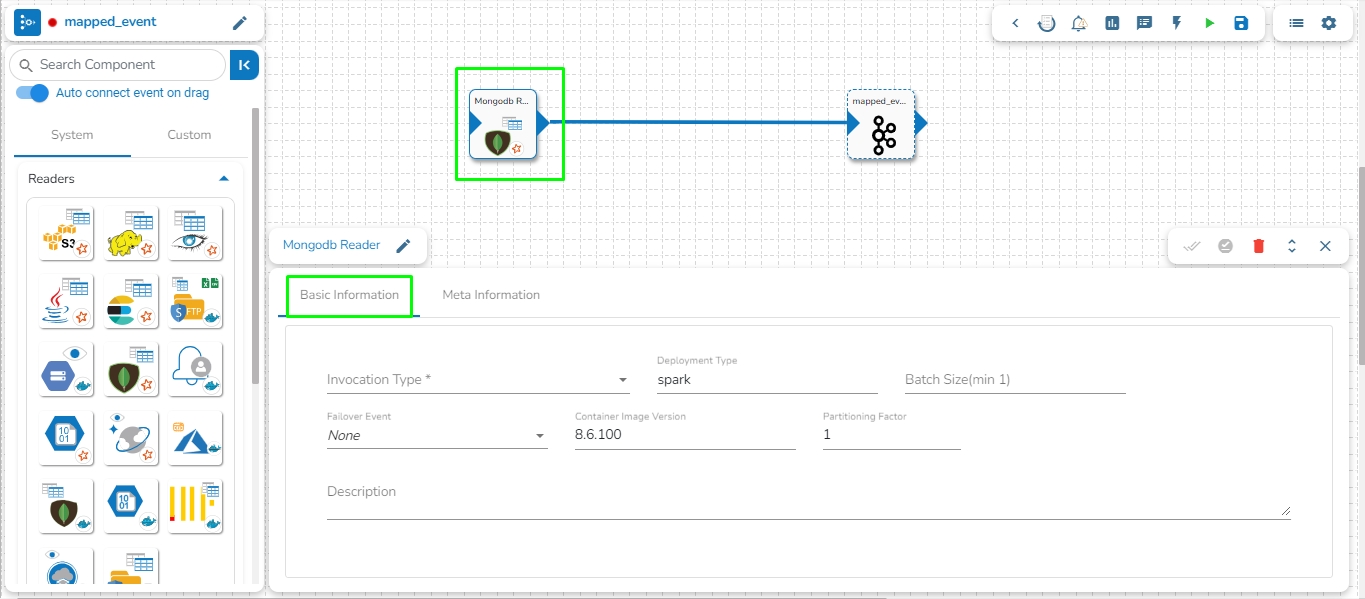

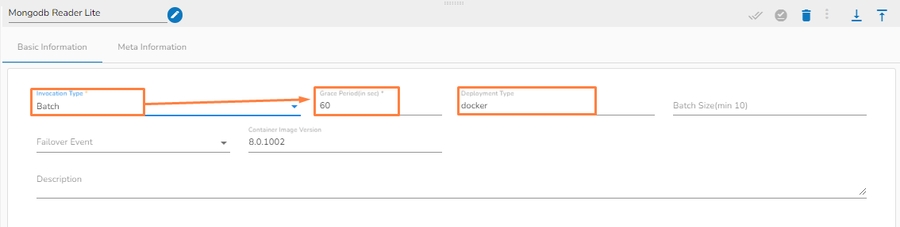

The MongoDB Reader component supports both deployment types: Spark and Docker.

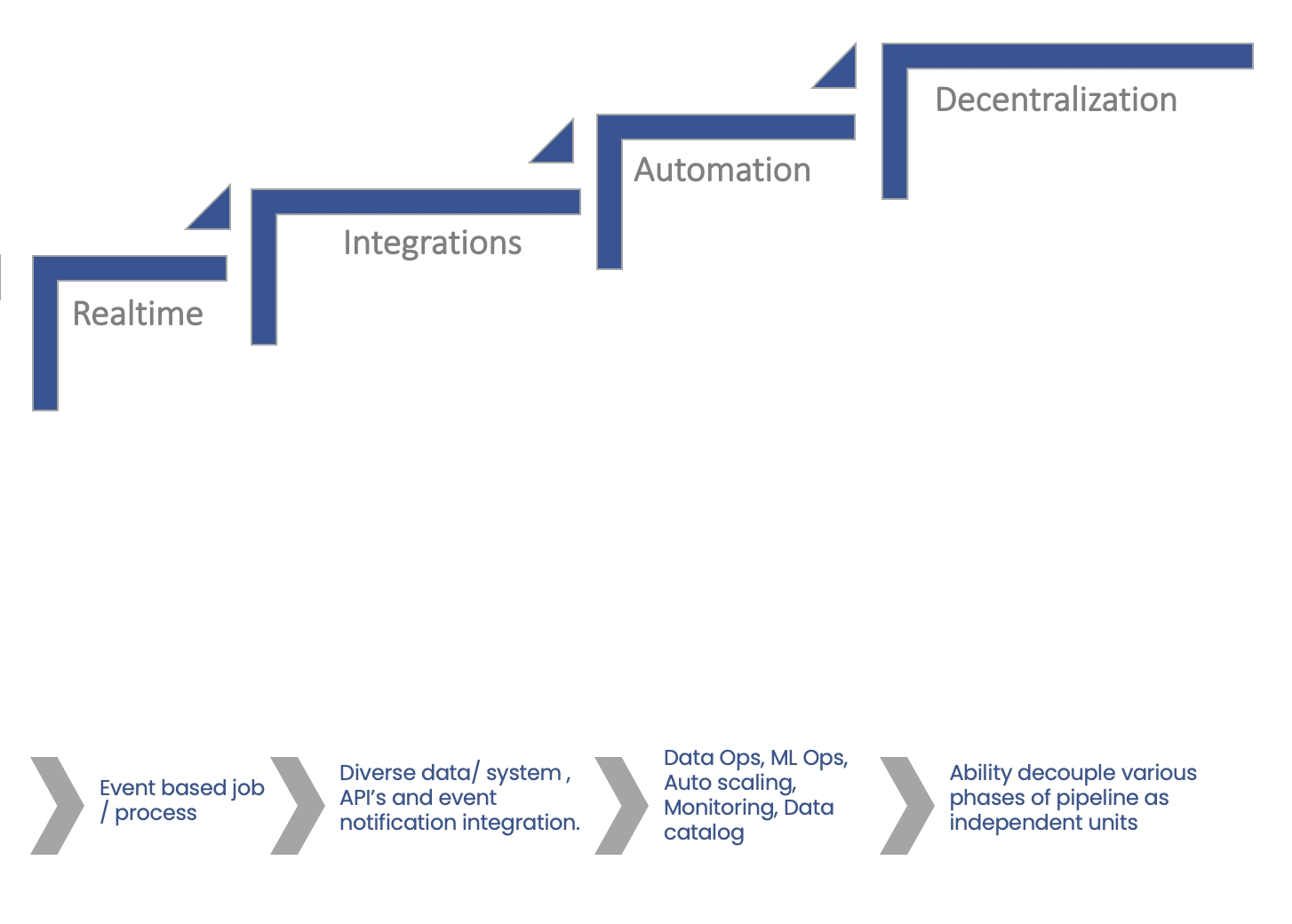

Real-time processing deals with streams of data that are captured in real time and processed with minimal latency. These processes run continuously and stay live even when the data inflow has stopped.

Batch job orchestration runs the process based on a trigger. In the BDB Data Pipeline, this trigger is the input event: any time data is pushed to the input trigger, the job starts, and once the job is complete, the process is gracefully terminated. This process can be near real-time, and it allows you to use compute resources efficiently.

Each component is a fully decoupled microservice that interacts with other components through events.

Every component in a pipeline has built-in consumer and producer functionality. This allows the component to consume data from an event and send its output to another event/topic.

Each component has an in-event and an out-event. The component consumes data from its in-event/topic, processes it, and pushes the result to another event/topic.

Assembling a data pipeline is simple: click and drag the component you want to use into the editor canvas, then connect the component output to an event/topic.

A wide variety of out-of-the-box components are available to read, write, transform, and ingest data into the BDB Data Pipeline from many different data sources.

Components can be easily configured just by specifying the required metadata.

For extensibility, we have provided Python-based scripting support that allows the pipeline developer to build complex business requirements which cannot be met by out-of-the-box components.

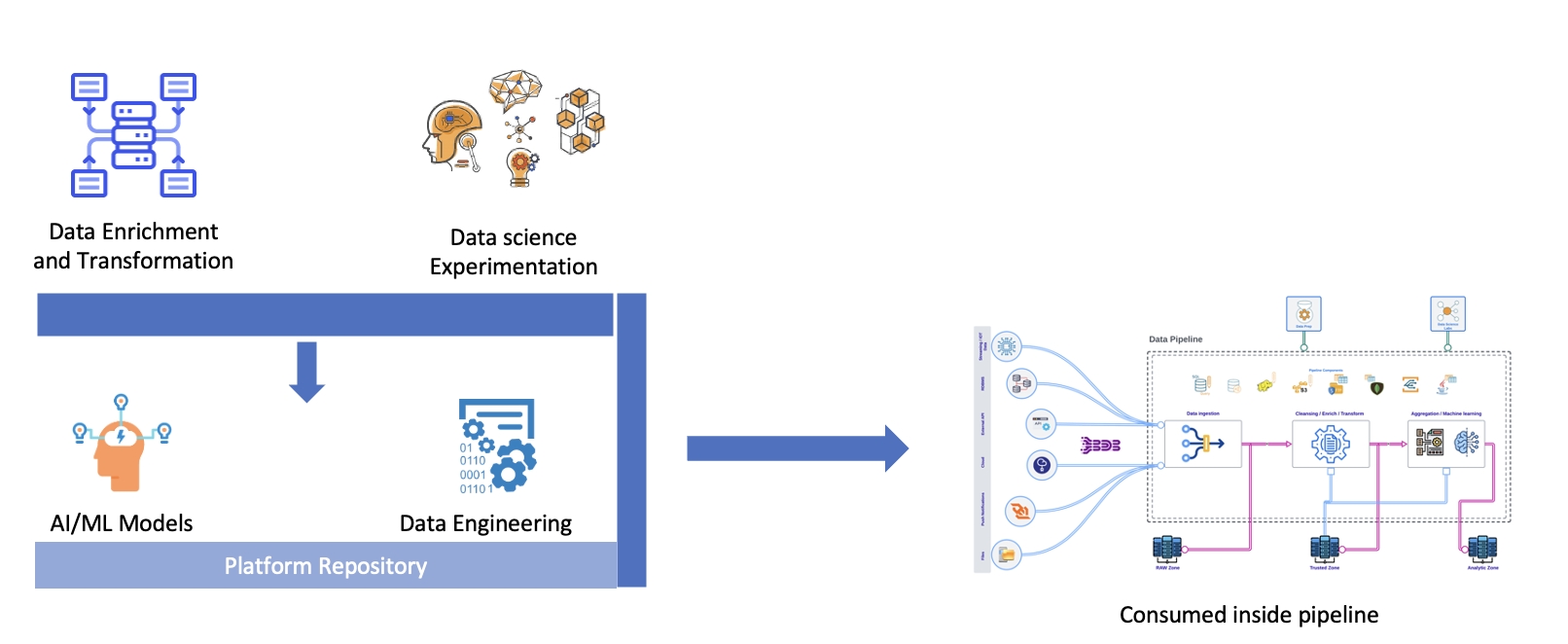

Data Pipeline provides extensibility to create your own transformation logic via the DS Lab module, which acts as an innovation lab where data engineers and data scientists can conduct modeling experiments before productionizing the component in the Data Pipeline.

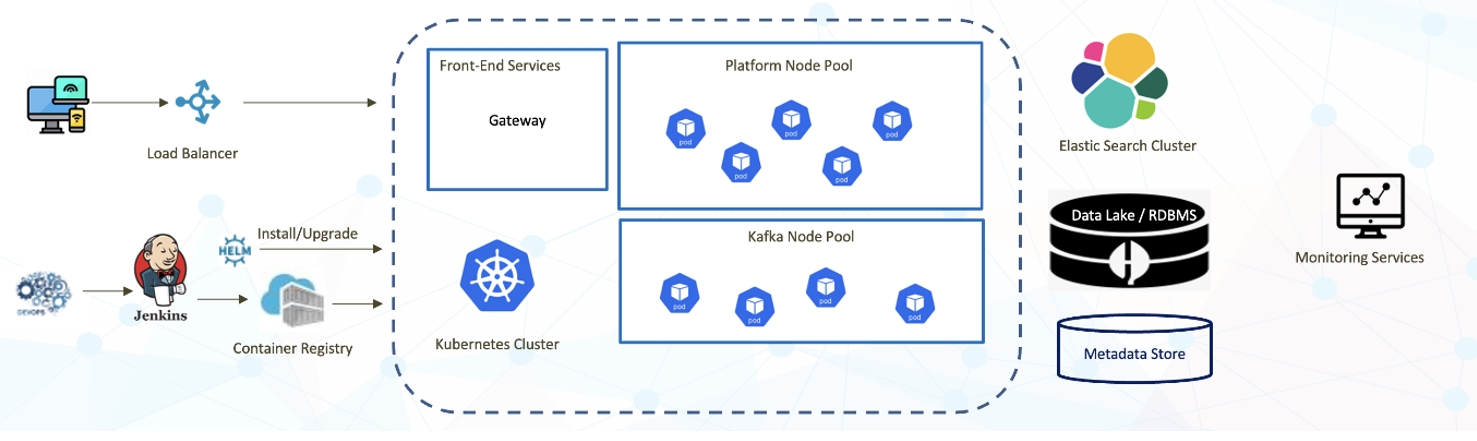

Distributed computing is the process of connecting multiple computers via a local network or wide area network so that they can act together as a single ultra-powerful computer capable of performing computations that no single computer within the network would be able to perform on its own. Distributed computers offer two key advantages:

Easy scalability: Just add more computers to expand the system.

Redundancy: Since many different machines are providing the same service, that service can keep running even if one (or more) of the computers goes down.

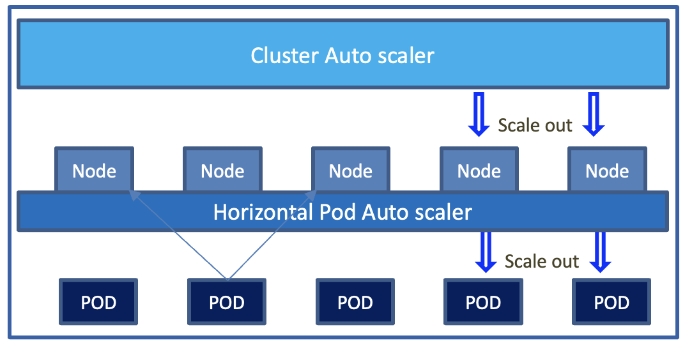

The user can run multiple instances of the same process to increase the process throughput. This can be done using the auto scaling feature.

This page provides an overview and summary of the pipeline module, including details such as running status, types, number of pipelines and jobs, and resources used.

An event-driven architecture uses events to trigger and communicate between decoupled services and is common in modern applications built with microservices.

The connecting components help to assemble various pipeline components and create a Pipeline Workflow. Just click and drag the component you want to use into the editor canvas. Connect the component output to a Kafka Event.

Once a Pipeline is created, the Data Pipeline user interface provides a canvas for the user to build the data flow (Pipeline Workflow). The Pipeline assembling process can be divided into two parts, as mentioned below:

Adding Components to the Canvas

Adding Connecting Components (Events) to create the Data flow/ Pipeline workflow

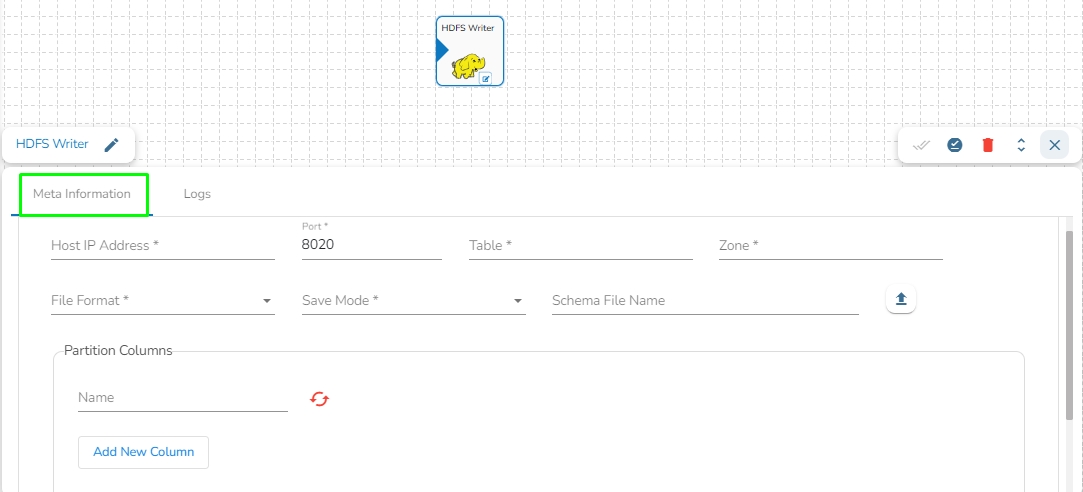

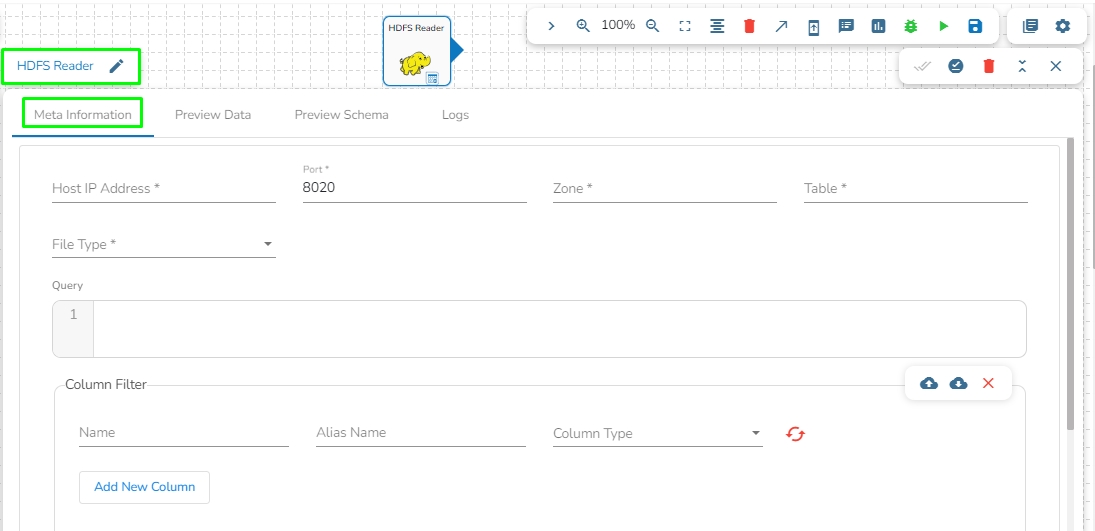

HDFS stands for Hadoop Distributed File System. It is a distributed file system designed to store and manage large data sets in a reliable, fault-tolerant, and scalable way. HDFS is a core component of the Apache Hadoop ecosystem and is used by many big data applications.

This task writes data to HDFS (Hadoop Distributed File System).

Drag the HDFS writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

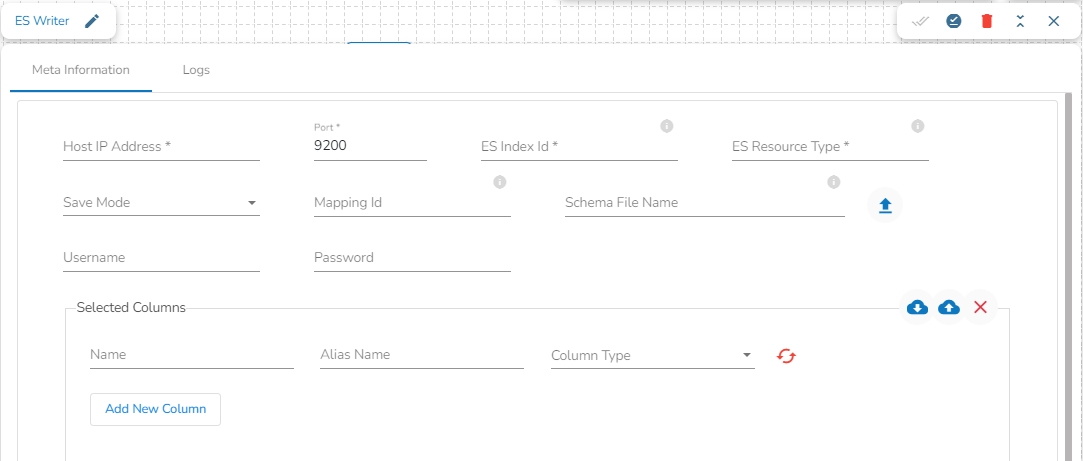

Elasticsearch is an open-source search and analytics engine built on top of the Apache Lucene library. It is designed to help users store, search, and analyze large volumes of data in real-time. Elasticsearch is a distributed, scalable system that can be used to index and search structured, semi-structured, and unstructured data.

This task is used to read data stored in the Elasticsearch engine.

Drag the ES reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

The Kubernetes Cluster Autoscaler scales the nodes in and out based on CPU and memory load.

Nodes are virtual or physical machines.

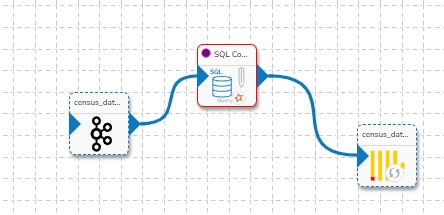

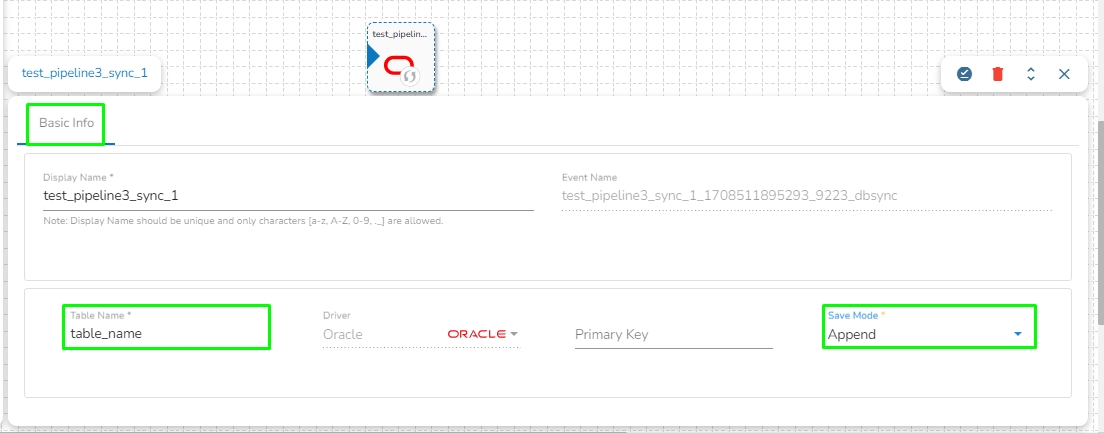

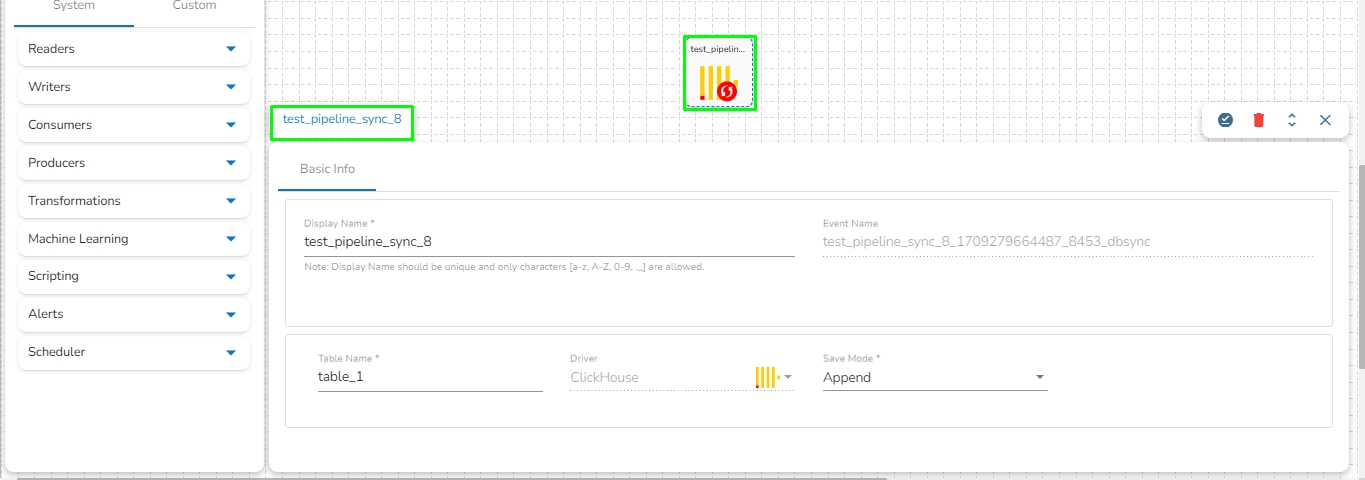

This task is used to write data to the following databases: MySQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, and Redshift.

Drag the DB writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

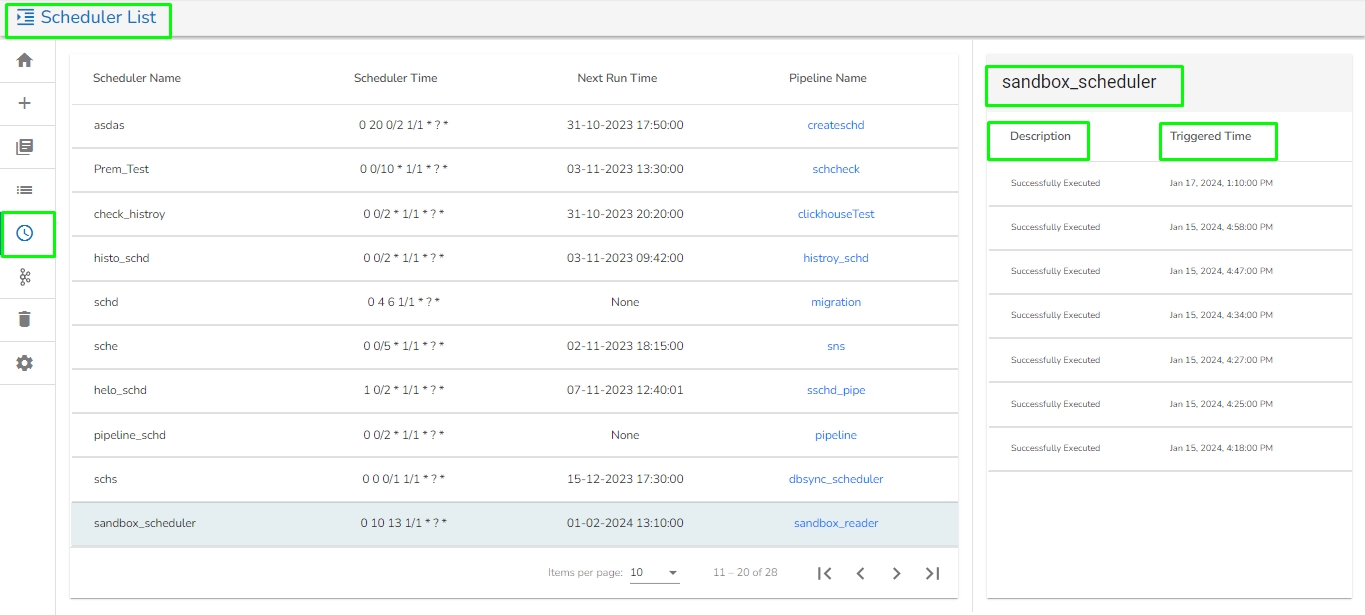

Check out the given walk-through on how the Scheduler option works in the Data Pipeline.

The Scheduler List page opens displaying all the registered schedulers in a pipeline.

Elasticsearch is an open-source search and analytics engine built on top of the Apache Lucene library. It is designed to help users store, search, and analyze large volumes of data in real-time. Elasticsearch is a distributed, scalable system that can be used to index and search structured, semi-structured, and unstructured data.

This task is used to write data to the Elasticsearch engine.

Drag the ES writer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

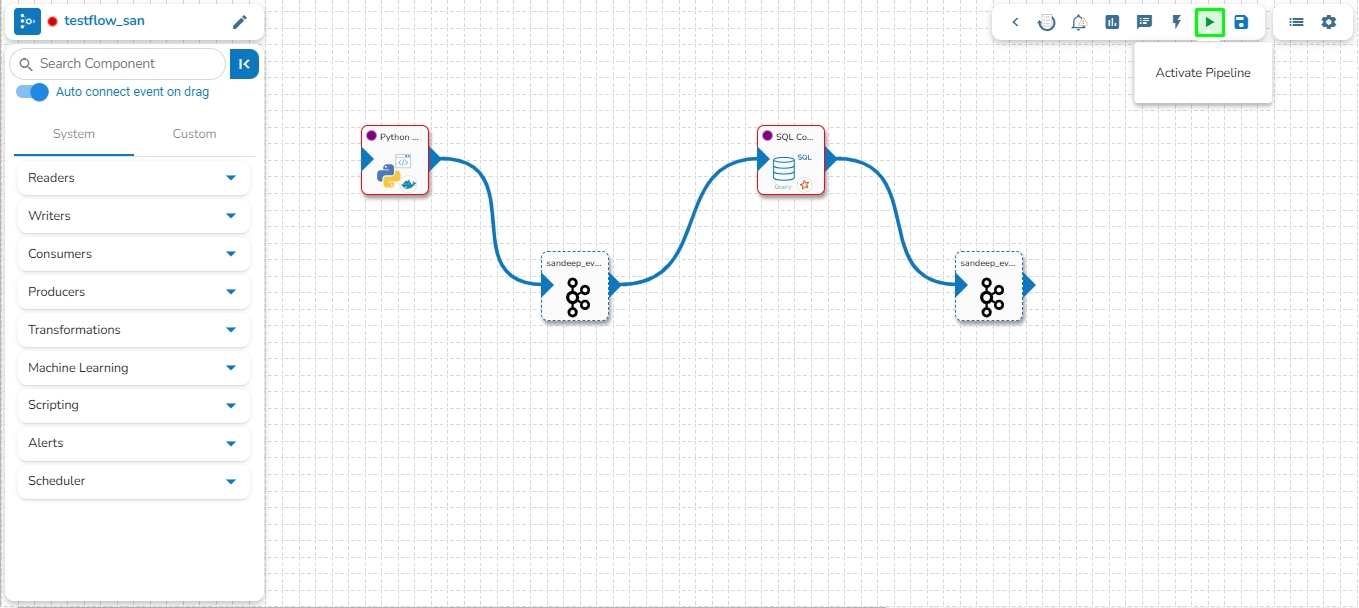

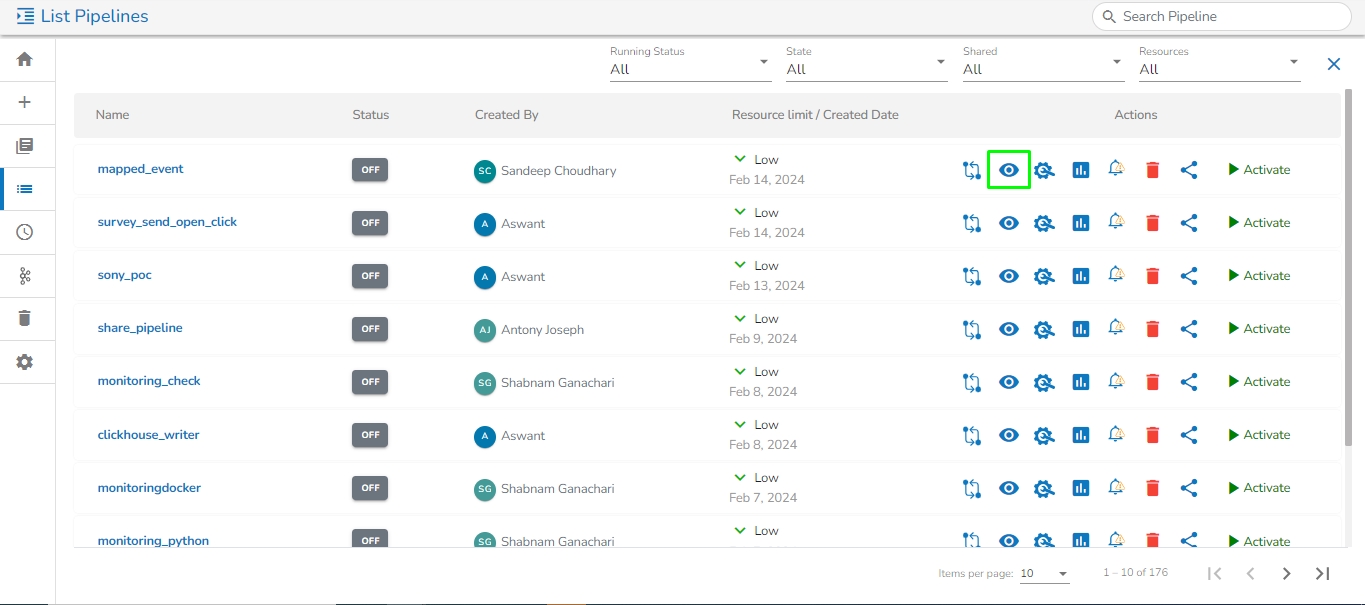

Activate a pipeline to deploy it.

Pipelines can be activated from two places by clicking the icon: from the page or from the Workflow Editor tool-tip.

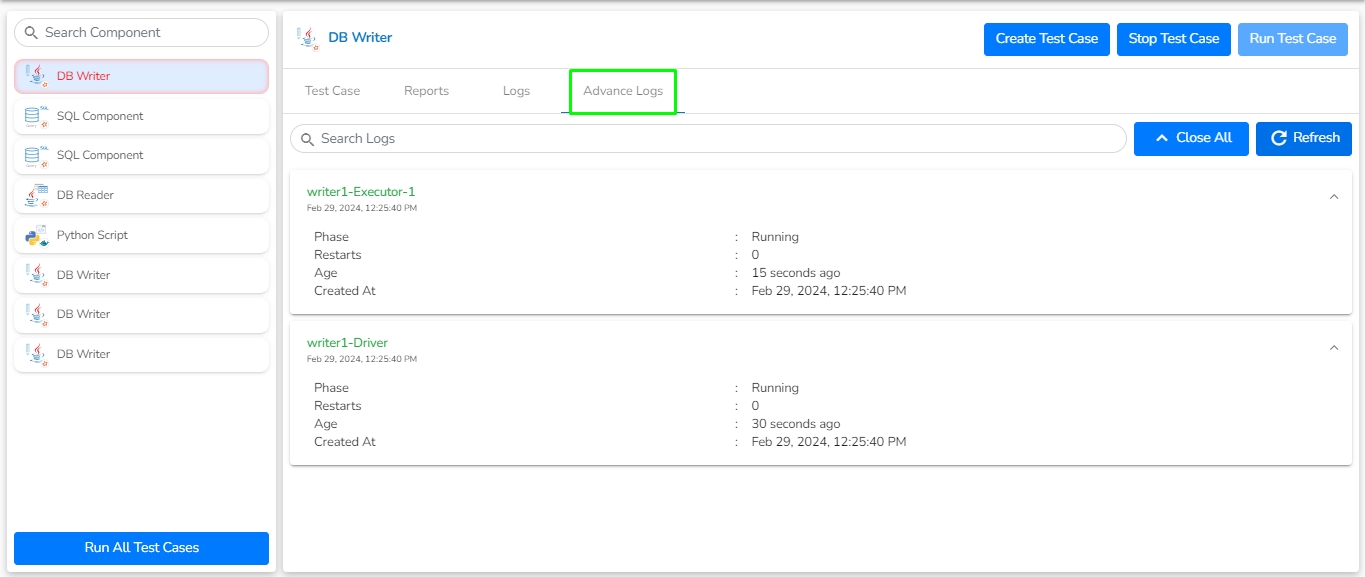

Once the pipeline is activated, its components get deployed, and all the deployed components (pods) are listed in the Advanced Logs panel.

The Zoom In/Zoom Out feature enables users to adjust the pipeline workflow editor according to their comfort, providing the flexibility to zoom in or zoom out as needed.

The Connection Validation option helps users validate the connection details of databases and cloud storage.

This option is available for all components, so that the connection can be validated before the components are deployed, avoiding connection-related errors. It also works with environment variables.

Check out a sample illustration of connection validation.

The right-side panel on the Pipeline Editor page gets displayed for some of the Pipeline Toolbar options.

The options for which a panel appears on the right side of the Pipeline Workflow Editor page are listed below:

Each component inside a pipeline is fully decoupled and acts as both a producer and a consumer of data. The design is based on event-driven process orchestration: to pass the output of one component to another, an intermediary event is required. An event-driven architecture contains three items:

Event Producer [Components]

Event Stream [Event (Kafka topic/DB Sync)]

Event Consumer [Components]
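To make the producer / event stream / consumer split concrete, here is a minimal Python sketch. It is not how the platform is implemented: in-memory `queue.Queue` objects stand in for Kafka topics, and the component functions (`reader_component`, `transform_component`, `writer_component`) and the `END` sentinel are hypothetical names used only for illustration.

```python
from queue import Queue

END = None  # sentinel marking the end of the stream in this sketch


def reader_component(out_event: Queue, rows: list) -> None:
    """Event producer: publishes every record it reads to its out-event."""
    for row in rows:
        out_event.put(row)
    out_event.put(END)


def transform_component(in_event: Queue, out_event: Queue) -> None:
    """Consumer and producer: reads from one event and writes to the next."""
    while (row := in_event.get()) is not END:
        out_event.put({**row, "amount": row["amount"] * 2})
    out_event.put(END)


def writer_component(in_event: Queue, sink: list) -> None:
    """Event consumer: drains its in-event into a destination."""
    while (row := in_event.get()) is not END:
        sink.append(row)


# Two in-memory queues stand in for the Kafka topics between components.
event_1, event_2 = Queue(), Queue()
destination = []
reader_component(event_1, [{"id": 1, "amount": 10}, {"id": 2, "amount": 5}])
transform_component(event_1, event_2)
writer_component(event_2, destination)
print(destination)  # [{'id': 1, 'amount': 20}, {'id': 2, 'amount': 10}]
```

Because each function only touches the queues it is handed, any component can be replaced or scaled without changing its neighbours, which is the decoupling the event-driven design aims for.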

Host IP Address: Enter the host IP address for HDFS.

Port: Enter the Port.

Table: Enter the table name where the data has to be written.

Zone: Enter the HDFS zone in which the data has to be written. A zone is a special directory whose contents are transparently encrypted on write and transparently decrypted on read.

File Format: Select the file format in which the data has to be written:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the save mode.

Schema File Name: Upload a Spark schema file in JSON format.

Partition Columns: Provide a unique Key column name to partition data in Spark.
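Spark's `DataFrameWriter.partitionBy` lays file output out in Hive-style `column=value` subdirectories. Assuming the HDFS Writer's Partition Columns field follows the same convention (an assumption, not confirmed by this page), the pure-Python sketch below shows the resulting layout; `partition_paths`, the `/data/orders` path, and the sample rows are hypothetical.

```python
from collections import defaultdict


def partition_paths(rows, base_path, partition_columns):
    """Group rows into Hive-style partition directories (col=value/...)."""
    partitions = defaultdict(list)
    for row in rows:
        segments = [f"{col}={row[col]}" for col in partition_columns]
        path = "/".join([base_path.rstrip("/"), *segments])
        # The partition value is encoded in the path, so it is not repeated in the stored record.
        record = {k: v for k, v in row.items() if k not in partition_columns}
        partitions[path].append(record)
    return dict(partitions)


rows = [
    {"order_id": 1, "country": "IN", "amount": 120},
    {"order_id": 2, "country": "US", "amount": 80},
    {"order_id": 3, "country": "IN", "amount": 45},
]
for path, records in partition_paths(rows, "/data/orders", ["country"]).items():
    print(path, records)
# /data/orders/country=IN [{'order_id': 1, 'amount': 120}, {'order_id': 3, 'amount': 45}]
# /data/orders/country=US [{'order_id': 2, 'amount': 80}]
```

Every distinct value of a partition column produces its own directory, so a column with very many distinct values results in many small directories.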

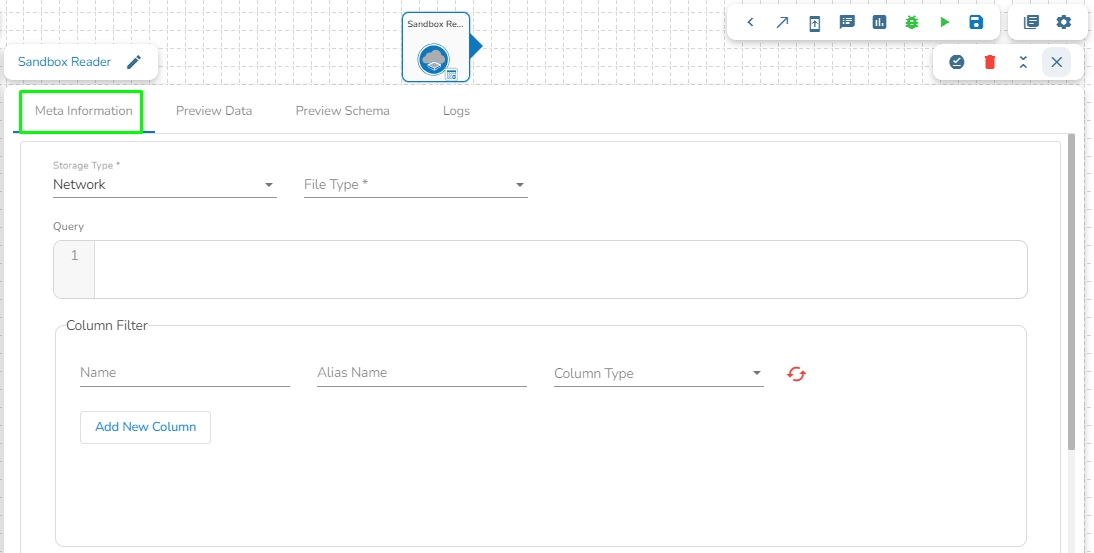

Sandbox File: Select the file name from the drop-down.

File Type: Select the file type from the drop-down.

Five (5) file types are available:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header of the file, and enable the Infer Schema option to infer the actual data type of each column in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Enable the Multiline option if the file contains multiline strings.

PARQUET: No extra field is displayed when PARQUET is the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select an option out of the Deflate and Snappy options.

Compression Level: This field appears for the Deflate compression option. It provides 0 to 9 levels via a drop-down menu.

XML: Select this option to read an XML file. If this option is selected, the following fields are displayed:

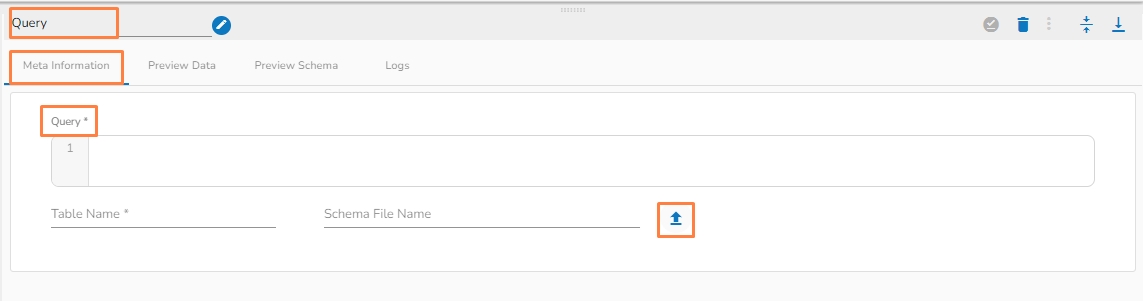

Query: Provide Spark SQL query in this field.
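The Header and Infer Schema options of the CSV file type can be pictured with the simplified Python sketch below. It only mimics the idea: Spark's own inference recognises more types (for example booleans and timestamps), and `read_csv` and `infer_type` are hypothetical helpers, not part of the platform.

```python
import csv
import io


def infer_type(values):
    """Return the narrowest of integer, double or string that fits every value."""
    for type_name, cast in (("integer", int), ("double", float)):
        try:
            for value in values:
                cast(value)
        except ValueError:
            continue
        return type_name
    return "string"


def read_csv(text, header=True, infer_schema=True):
    rows = list(csv.reader(io.StringIO(text)))
    if header:
        columns, data = rows[0], rows[1:]
    else:
        # Without a header, columns get positional names, as Spark does (_c0, _c1, ...).
        columns, data = [f"_c{i}" for i in range(len(rows[0]))], rows
    # Without Infer Schema, every column is treated as a string.
    schema = {
        col: infer_type([row[i] for row in data]) if infer_schema else "string"
        for i, col in enumerate(columns)
    }
    return columns, data, schema


sample = "id,price,name\n1,9.5,pen\n2,12,book\n"
print(read_csv(sample)[2])                      # {'id': 'integer', 'price': 'double', 'name': 'string'}
print(read_csv(sample, infer_schema=False)[2])  # {'id': 'string', 'price': 'string', 'name': 'string'}
```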

Host IP Address: Enter the host IP address for Elasticsearch.

Port: Enter the port to connect to Elasticsearch.

Index ID: Enter the Index ID of the Elasticsearch index to read documents from. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier that is used to identify and retrieve that specific document from the index. If no identifier is supplied when a document is indexed, Elasticsearch generates one automatically.

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Is Date Rich True: Enable this option if any fields in the data being read contain date or time information, so that those fields are returned as rich date values rather than as plain strings or numbers.

Username: Enter the username for Elasticsearch.

Password: Enter the password for Elasticsearch.

Query: Provide a Spark SQL query.
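Field names such as Is Date Rich suggest that the reader is built on the elasticsearch-hadoop (es-hadoop) connector, whose settings include `es.nodes`, `es.port`, `es.resource`, `es.net.http.auth.user`/`es.net.http.auth.pass`, and `es.mapping.date.rich`. The sketch below assumes that mapping from UI fields to connector settings; `es_read_options` and the sample values are hypothetical.

```python
def es_read_options(host, port, index, resource_type=None, date_rich=True,
                    username=None, password=None):
    """Map the ES reader fields onto elasticsearch-hadoop settings (assumed mapping)."""
    # es.resource takes "index" or, for older clusters with mapping types, "index/type".
    resource = f"{index}/{resource_type}" if resource_type else index
    options = {
        "es.nodes": host,
        "es.port": str(port),
        "es.resource": resource,
        # true: date fields are returned as rich date objects; false: as strings/longs.
        "es.mapping.date.rich": str(date_rich).lower(),
    }
    if username:
        options["es.net.http.auth.user"] = username
        options["es.net.http.auth.pass"] = password or ""
    return options


opts = es_read_options("10.0.0.5", 9200, "sales", username="reader", password="secret")
print(opts["es.resource"], opts["es.mapping.date.rich"])  # sales true
# With PySpark and the es-hadoop connector on the classpath, these options could be passed as
# spark.read.format("org.elasticsearch.spark.sql").options(**opts).load(), and the resulting
# DataFrame registered as a temporary view for the Spark SQL query.
```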

Pods are enabled with autoscaling based on CPU and memory parameters.

All pods are configured to have two instances, each deployed on a different node, using the node affinity parameter.

Pods are configured with self-healing, which means that when a pod fails, a new pod is spun up.
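If the CPU-based pod autoscaling described above uses the Kubernetes Horizontal Pod Autoscaler (an assumption), the replica count follows the HPA rule `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, clamped to the configured minimum and maximum and skipped when the ratio is within a tolerance (0.1 by default). The sketch uses a minimum of two replicas to match the two-instance setup; the function name and sample numbers are illustrative.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=2, max_replicas=10, tolerance=0.1):
    """Kubernetes HPA rule: ceil(current * currentMetric / targetMetric), within bounds."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance of the target: no scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 90, 50))  # 4: CPU at 90% against a 50% target
print(desired_replicas(4, 20, 50))  # 2: scaled in, but never below min_replicas
print(desired_replicas(2, 52, 50))  # 2: within the 10% tolerance
```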

Port: Enter the port for the given IP Address.

Database name: Enter the Database name.

Table name: Provide one or more table names. To provide multiple tables, separate the table names with commas (,).

User name: Enter the user name for the provided database.

Password: Enter the password for the provided database.

Driver: Select the driver from the drop-down. Seven drivers are supported here: MySQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, and Redshift.

Schema File Name: Upload a Spark schema file in JSON format.

Save Mode: Select the Save mode from the drop-down.

Append

Overwrite

Query: Write the CREATE TABLE (DDL) query.
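The difference between the Append and Overwrite save modes, together with the DDL query, can be sketched with Python's built-in `sqlite3` module standing in for the supported databases. The `orders` table, its DDL, and the `write` helper are hypothetical; the overwrite branch drops and recreates the table, which is what Spark's JDBC writer does by default unless its `truncate` option is set.

```python
import sqlite3

# The DDL a user might enter in the Query field (hypothetical table).
DDL = "CREATE TABLE IF NOT EXISTS orders (id INTEGER, amount REAL)"


def write(conn, rows, save_mode="append"):
    """Emulate Append and Overwrite save modes; sqlite3 stands in for the target database."""
    if save_mode == "overwrite":
        # Like Spark's JDBC writer by default: drop the table, then recreate it.
        conn.execute("DROP TABLE IF EXISTS orders")
    elif save_mode != "append":
        raise ValueError(f"unsupported save mode: {save_mode}")
    conn.execute(DDL)
    conn.executemany("INSERT INTO orders (id, amount) VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
write(conn, [(1, 10.0), (2, 20.0)])
write(conn, [(3, 30.0)], save_mode="append")
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 3
write(conn, [(4, 40.0)], save_mode="overwrite")
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
```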

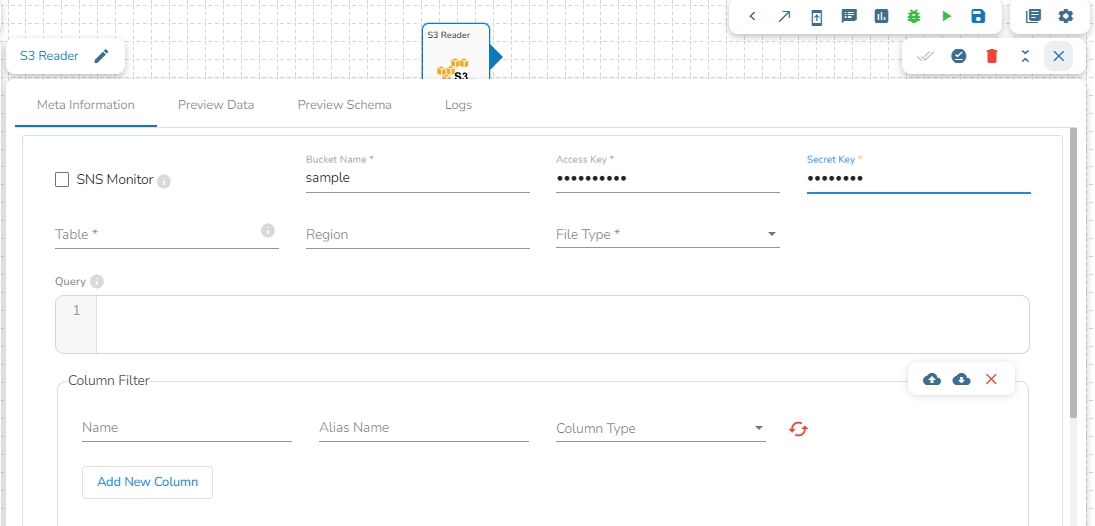

Region (*): Provide the S3 region.

Access Key (*): The access key issued by AWS for authentication.

Secret Key (*): The secret key issued by AWS for authentication.

Table (*): Provide the table or object name to be written.

File Type (*): Select a file type from the drop-down menu (CSV, JSON, PARQUET, and AVRO are the supported file types).

Save Mode: Select the Save mode from the drop-down.

Append

Schema File Name: Upload a Spark schema file in JSON format.
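Several writers ask for a Spark schema file in JSON format. Spark serializes a `StructType` as an object with `"type": "struct"` and a `fields` list in which each field has `name`, `type`, `nullable`, and `metadata`. The sketch below generates such a file's contents with the standard library; `spark_schema` and the column names are hypothetical.

```python
import json


def spark_schema(columns):
    """Build the JSON form of a Spark StructType from (name, type, nullable) tuples."""
    return {
        "type": "struct",
        "fields": [
            {"name": name, "type": data_type, "nullable": nullable, "metadata": {}}
            for name, data_type, nullable in columns
        ],
    }


schema_json = json.dumps(
    spark_schema([
        ("order_id", "integer", False),
        ("customer", "string", True),
        ("amount", "double", True),
        ("created_at", "timestamp", True),
    ]),
    indent=2,
)
print(schema_json)  # save this text as, e.g., orders_schema.json and upload it
# In PySpark, StructType.fromJson(json.loads(schema_json)) rebuilds the same schema.
```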

Traditional data transformation is a sequential process in which developers design and develop the logic, then test and deploy it. The BDB Data Pipeline allows users to adopt an agile, non-linear approach, which reduces time to market by 50 to 60%.

On the Scheduler List page, users will find the following details:

Scheduler Name: The name of the scheduler component as given in the pipeline.

Scheduler Time: The time set in the scheduler component.

Next Run Time: The next scheduled run time of the scheduler.

Pipeline Name: The name of the pipeline where the scheduler is configured and used. Clicking on this option will directly redirect the user to the selected pipeline.
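The Next Run Time column can be understood with a small Python sketch that assumes a simple daily schedule at a fixed time of day; the actual scheduler may support richer schedules (such as cron expressions), and `next_run_time` and the sample timestamps are hypothetical.

```python
from datetime import datetime, time, timedelta


def next_run_time(scheduled: time, now: datetime) -> datetime:
    """Next occurrence of a daily schedule at `scheduled`, strictly after `now`."""
    candidate = datetime.combine(now.date(), scheduled)
    if candidate <= now:
        # Today's slot has already passed, so the next run is tomorrow.
        candidate += timedelta(days=1)
    return candidate


now = datetime(2024, 5, 10, 14, 30)
print(next_run_time(time(9, 0), now))   # 2024-05-11 09:00:00
print(next_run_time(time(18, 0), now))  # 2024-05-10 18:00:00
```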

Host IP Address: Enter the host IP address for Elasticsearch.

Port: Enter the port to connect to Elasticsearch.

Index ID: Enter the Index ID of the Elasticsearch index to which the documents are written. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier that is used to identify and retrieve that specific document from the index. If no identifier is supplied when a document is indexed, Elasticsearch generates one automatically.

Mapping ID: Provide the Mapping ID. In Elasticsearch, a mapping ID is a unique identifier for a mapping definition that defines the schema of the documents in an index. It is used to differentiate between different types of data within an index and to control how Elasticsearch indexes and searches data.

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Username: Enter the username for Elasticsearch.

Password: Enter the password for Elasticsearch.

Schema File Name: Upload a Spark schema file in JSON format.

Save Mode: Select the Save mode from the drop-down.

Append
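Writing to Elasticsearch ultimately comes down to indexing documents, and the `_bulk` API accepts newline-delimited JSON in which an action line such as `{"index": {...}}` precedes each document. The sketch below builds such a body. Using a document field as `_id` mirrors elasticsearch-hadoop's `es.mapping.id` setting; whether this writer's Mapping ID field maps to it is an assumption, as are `bulk_body` and the index and field names.

```python
import json


def bulk_body(index, docs, id_field=None):
    """Build an Elasticsearch _bulk request body (newline-delimited JSON)."""
    lines = []
    for doc in docs:
        action = {"index": {"_index": index}}
        if id_field is not None:
            # Use one of the document's own fields as its _id.
            action["index"]["_id"] = str(doc[id_field])
        lines.append(json.dumps(action))
        lines.append(json.dumps(doc))
    # The _bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


print(bulk_body("sales", [{"id": 7, "total": 99.5}], id_field="id"), end="")
# {"index": {"_index": "sales", "_id": "7"}}
# {"id": 7, "total": 99.5}
```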

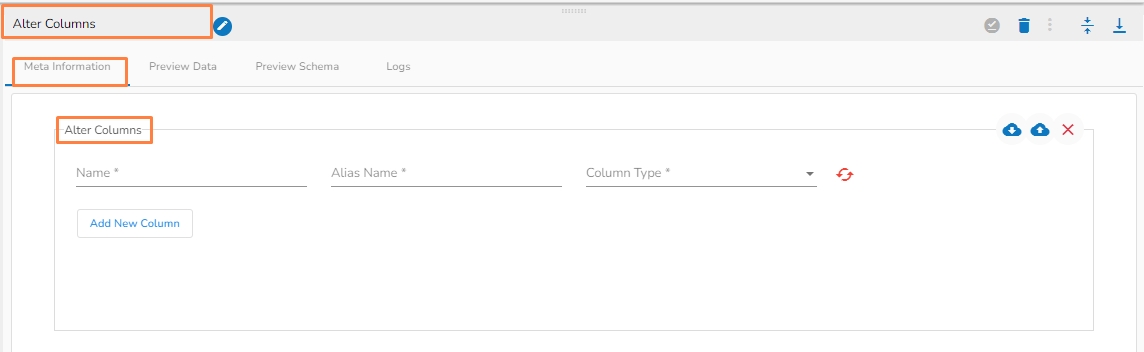

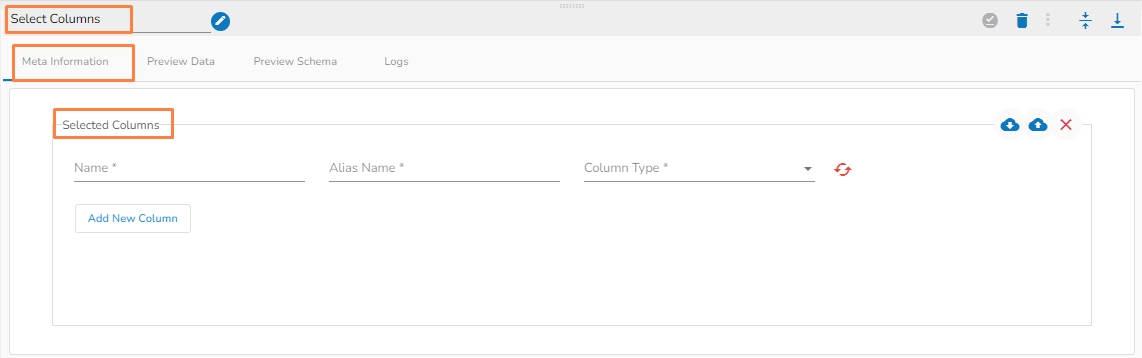

Selected Columns: The user can select specific columns, provide an alias name, and select the desired data type for each column.

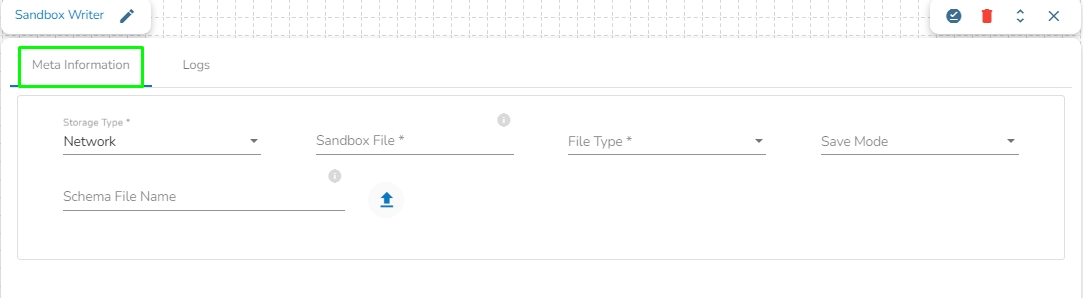

Sandbox File: Enter the file name.

File Type: Select the file type in which the data has to be written. There are four file types supported here:

CSV

JSON

Save Mode: Select the Save mode from the drop-down.

Append

Overwrite

Schema File Name: Upload a Spark schema file in JSON format.
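As a rough illustration of what the CSV and JSON file types produce, the sketch below serializes the same records both ways with the standard library. It assumes JSON output uses one compact object per line, which is how Spark's JSON writer lays out files; `serialize` and the sample rows are hypothetical.

```python
import csv
import io
import json


def serialize(rows, file_type):
    """Serialize a list of dicts as CSV (with a header row) or JSON Lines."""
    if file_type == "CSV":
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=list(rows[0]), lineterminator="\n")
        writer.writeheader()
        writer.writerows(rows)
        return buf.getvalue()
    if file_type == "JSON":
        # One compact JSON object per line, the layout Spark's JSON writer produces.
        return "".join(json.dumps(row, separators=(",", ":")) + "\n" for row in rows)
    raise ValueError(f"file type not covered by this sketch: {file_type}")


rows = [{"id": 1, "name": "pen"}, {"id": 2, "name": "book"}]
print(serialize(rows, "CSV"), end="")
print(serialize(rows, "JSON"), end="")
```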

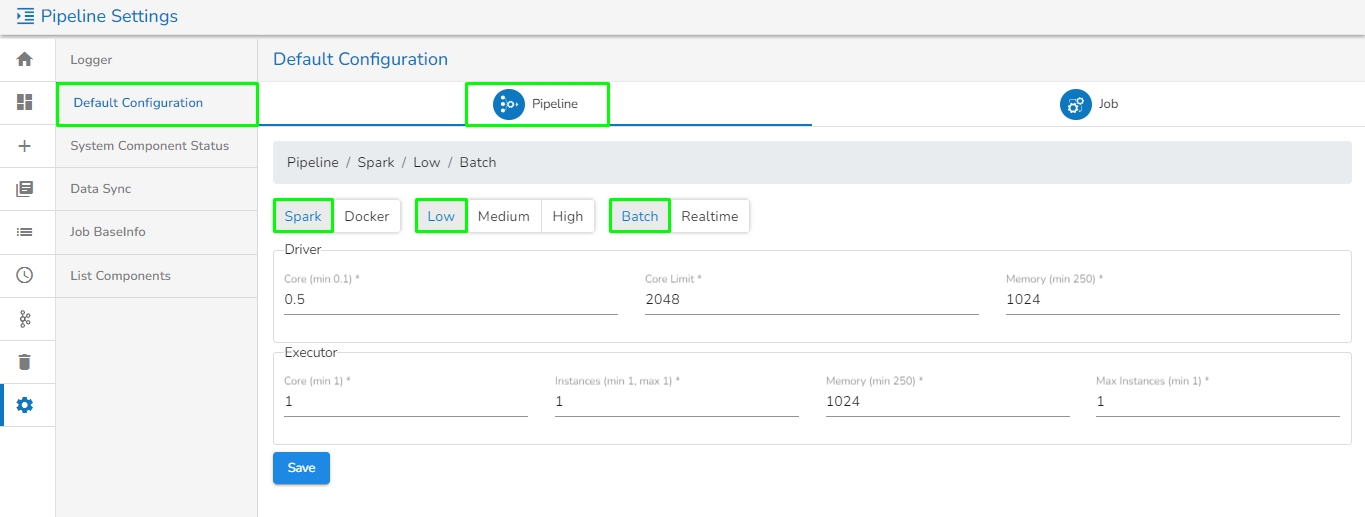

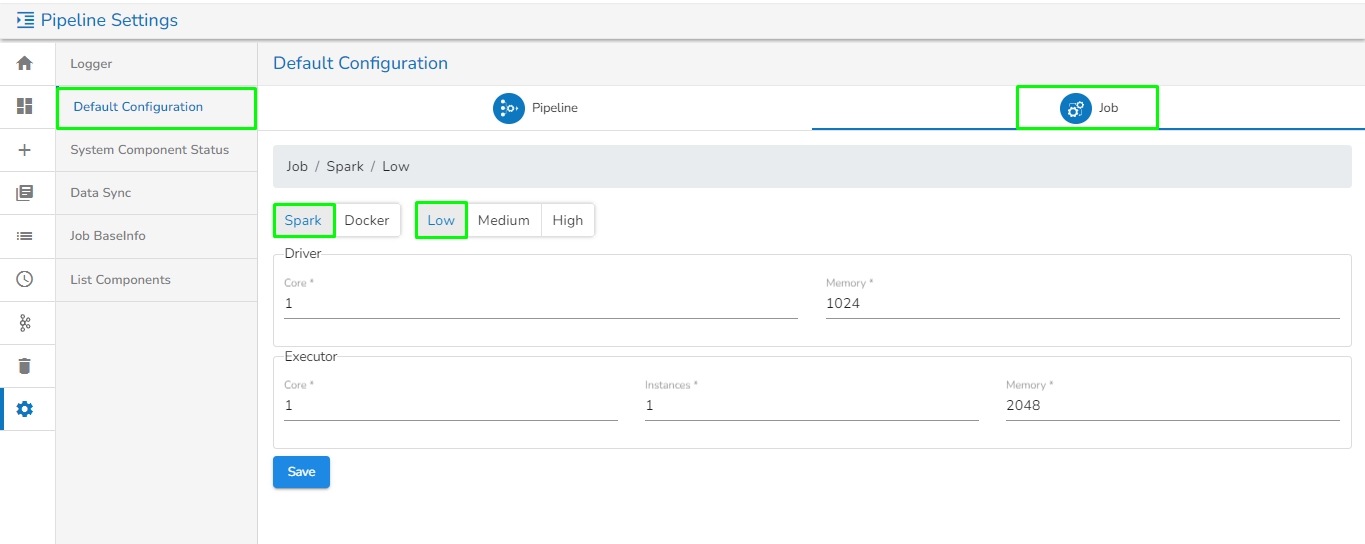

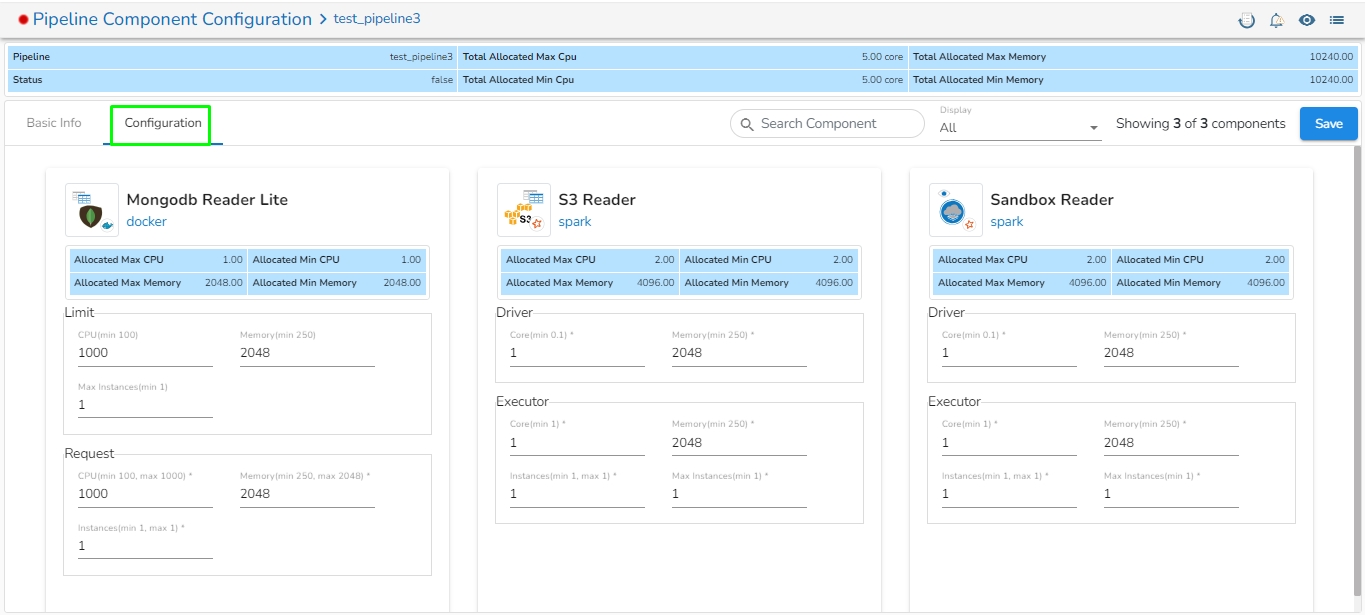

This section focuses on the Configuration tab provided for any Pipeline component.

For each component that gets deployed, there is an option to configure its resources, i.e., Memory and CPU.

We have two deployment types:

Docker

Spark

Go through the given illustration to understand how to configure a component using the Docker deployment type.

After the component and the pipeline are saved, the component gets saved with the default configuration of the pipeline, i.e., Low, Medium, or High. Once the pipeline is saved, the Configuration tab becomes available in the component, containing the settings described below.

For the Docker components, we have the Request and Limit configurations.

We can see the CPU and Memory options to be configured.

CPU: This is the CPU configuration where we can specify the number of cores that we need to assign to the component.

Memory: This option is to specify how much memory you want to dedicate to that specific component.

Instances: The number of instances is used for parallel processing. If N instances are specified, N pods get deployed.
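Request, Limit, and Instances correspond closely to Kubernetes concepts: a container's `resources.requests` and `resources.limits` for CPU and memory, and a replica count for the number of pods. Assuming the Docker deployment type maps onto them this way, the sketch below builds such a specification; `container_spec` and the values are hypothetical.

```python
def container_spec(cpu_request, cpu_limit, memory_request_mi, memory_limit_mi, instances):
    """Build a Kubernetes-style replica count and resources block for a Docker component."""
    if cpu_request > cpu_limit or memory_request_mi > memory_limit_mi:
        # Kubernetes rejects a container whose request exceeds its limit.
        raise ValueError("a request must not exceed its limit")
    return {
        "replicas": instances,  # N instances -> N pods
        "resources": {
            "requests": {"cpu": str(cpu_request), "memory": f"{memory_request_mi}Mi"},
            "limits": {"cpu": str(cpu_limit), "memory": f"{memory_limit_mi}Mi"},
        },
    }


spec = container_spec(cpu_request=0.5, cpu_limit=1, memory_request_mi=512,
                      memory_limit_mi=1024, instances=2)
print(spec["resources"]["limits"])  # {'cpu': '1', 'memory': '1024Mi'}
```

The request is what the scheduler reserves for the pod when placing it on a node, while the limit caps what the container may actually consume.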

Go through the below given walk-through to understand the steps to configure a component with Spark configuration type.

The Spark Components configuration is slightly different from the Docker components. When the spark components are deployed, there are two pods that come up:

Driver

Executor

Provide the Driver and executor configurations separately.

Instances: The number of instances is used for parallel processing. If N instances are specified in the Executor configuration, N executor pods get deployed.
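For the Spark deployment type, the Driver and Executor settings correspond to standard Spark properties (`spark.driver.cores`, `spark.driver.memory`, `spark.executor.cores`, `spark.executor.memory`, `spark.executor.instances`). The sketch below renders them as `spark-submit --conf` arguments, assuming the UI values map onto these properties; `spark_submit_conf` and the values are hypothetical.

```python
def spark_submit_conf(driver_cores, driver_memory, executor_cores,
                      executor_memory, executor_instances):
    """Translate Driver/Executor settings into spark-submit --conf arguments."""
    conf = {
        "spark.driver.cores": driver_cores,
        "spark.driver.memory": driver_memory,
        "spark.executor.cores": executor_cores,
        "spark.executor.memory": executor_memory,
        # N executor instances -> N executor pods when running on Kubernetes.
        "spark.executor.instances": executor_instances,
    }
    return [arg for key, value in conf.items() for arg in ("--conf", f"{key}={value}")]


print(" ".join(spark_submit_conf(1, "1g", 2, "2g", 3)))
```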

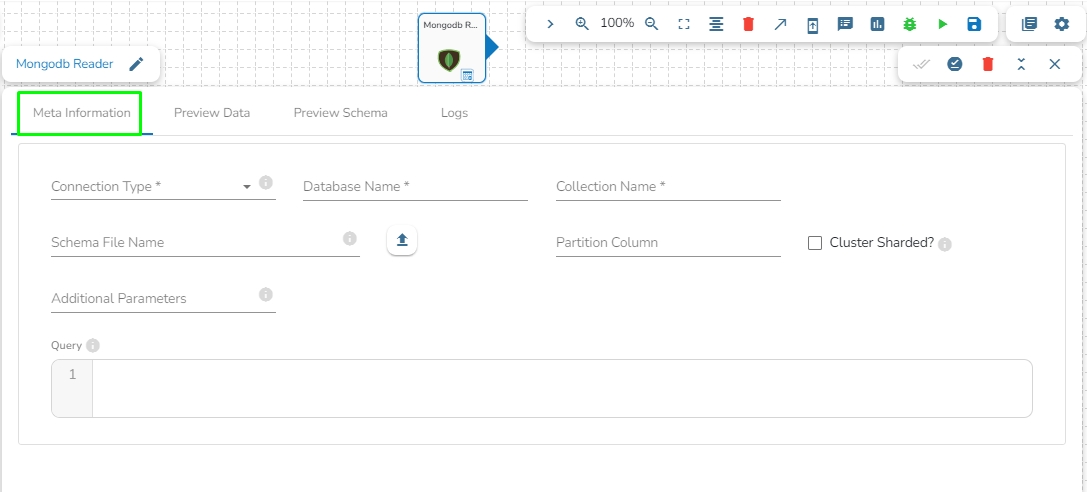

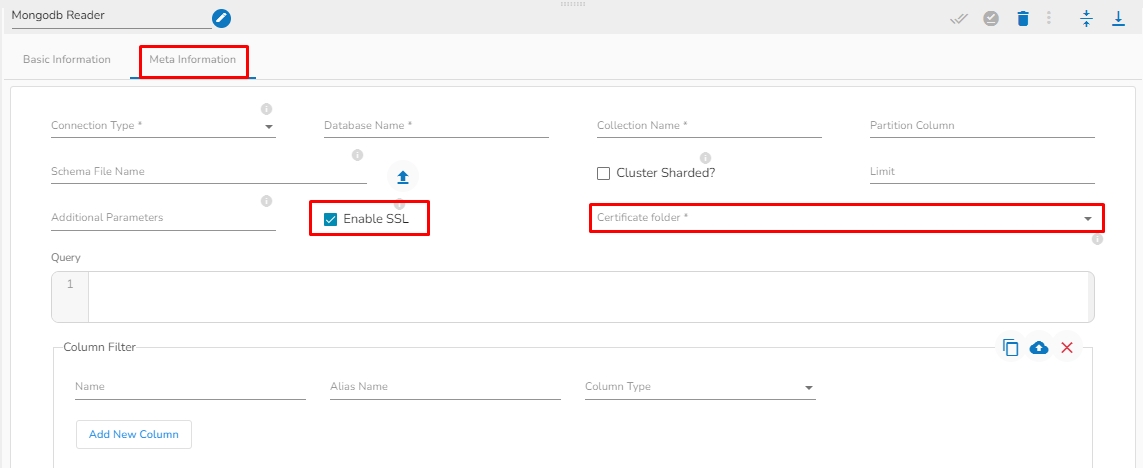

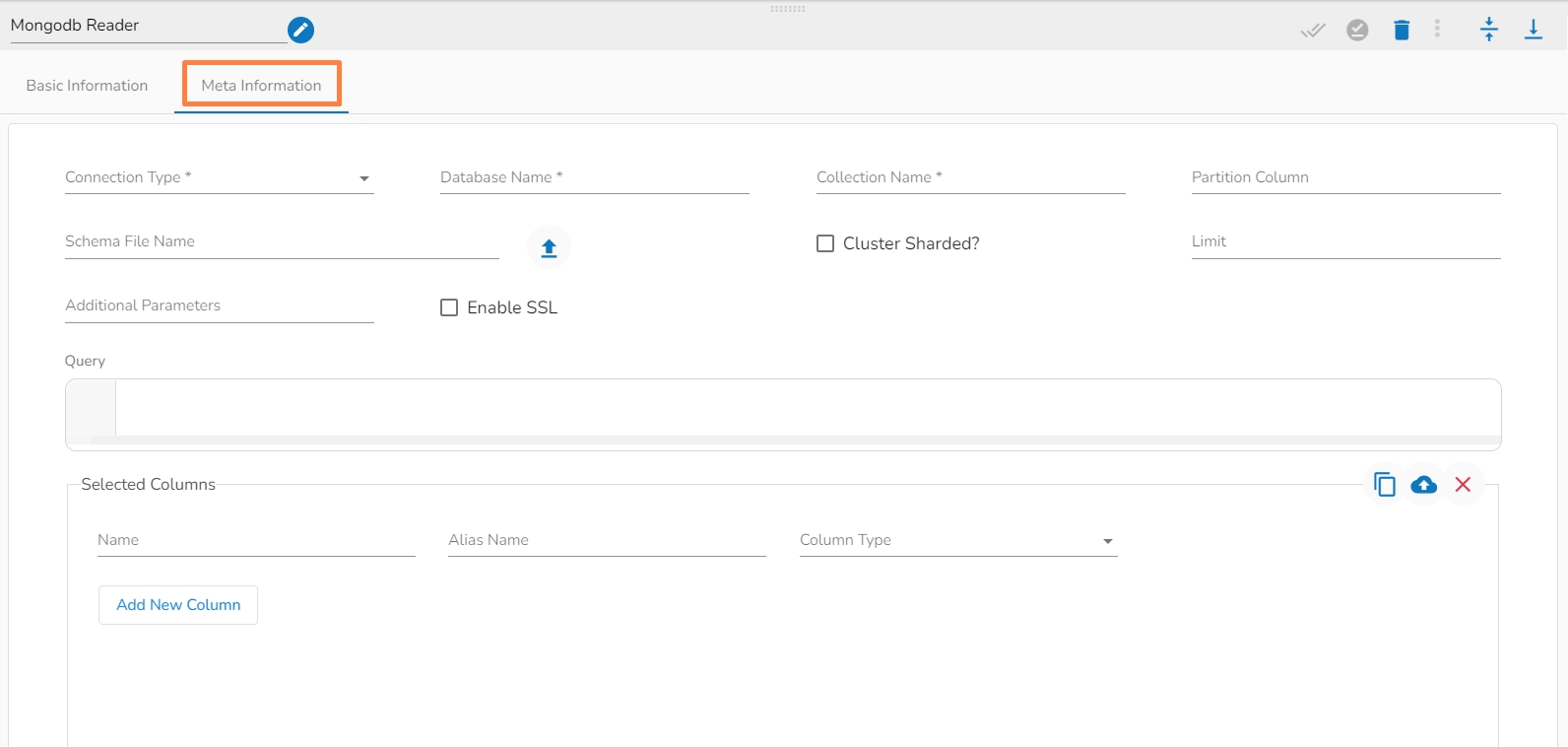

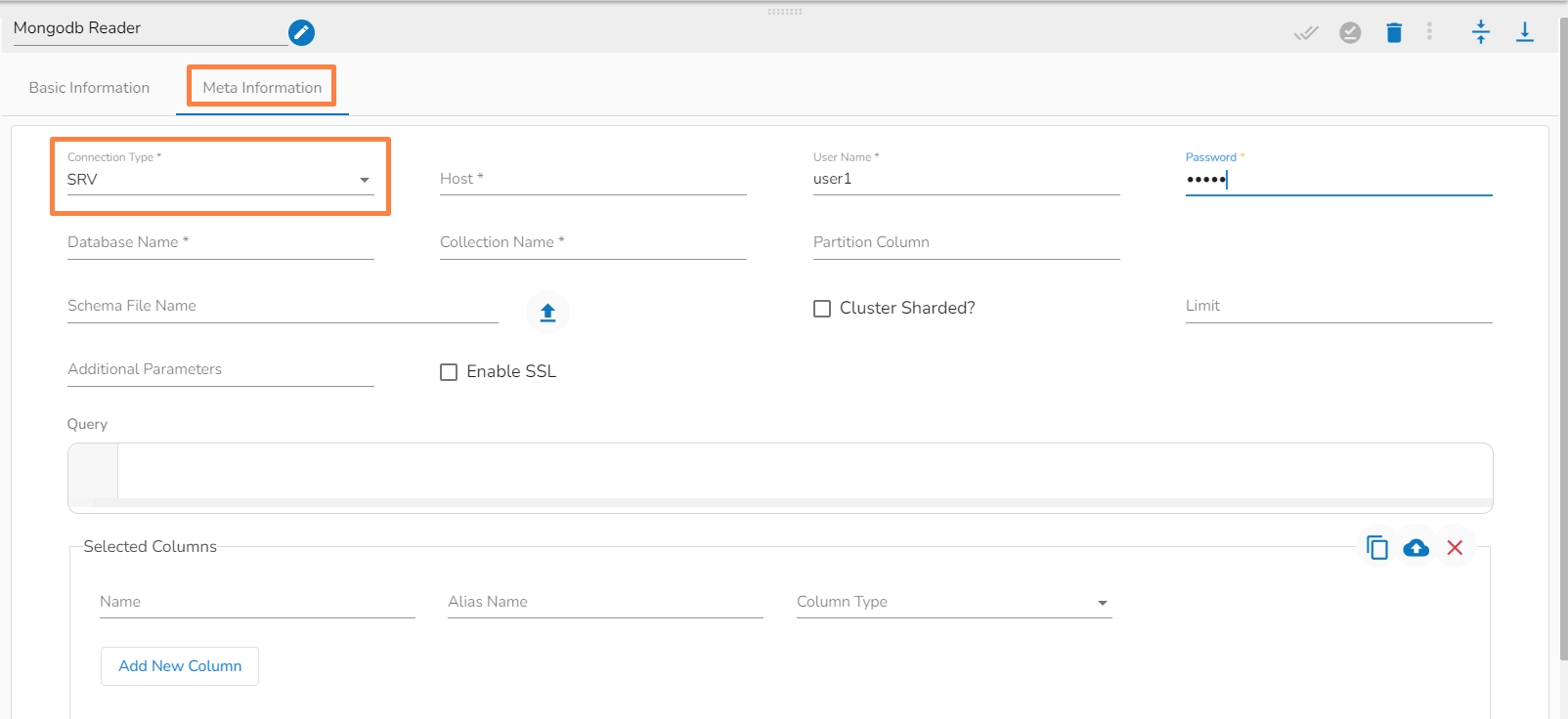

This task is used to read data from a MongoDB collection.

Drag the MongoDB reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

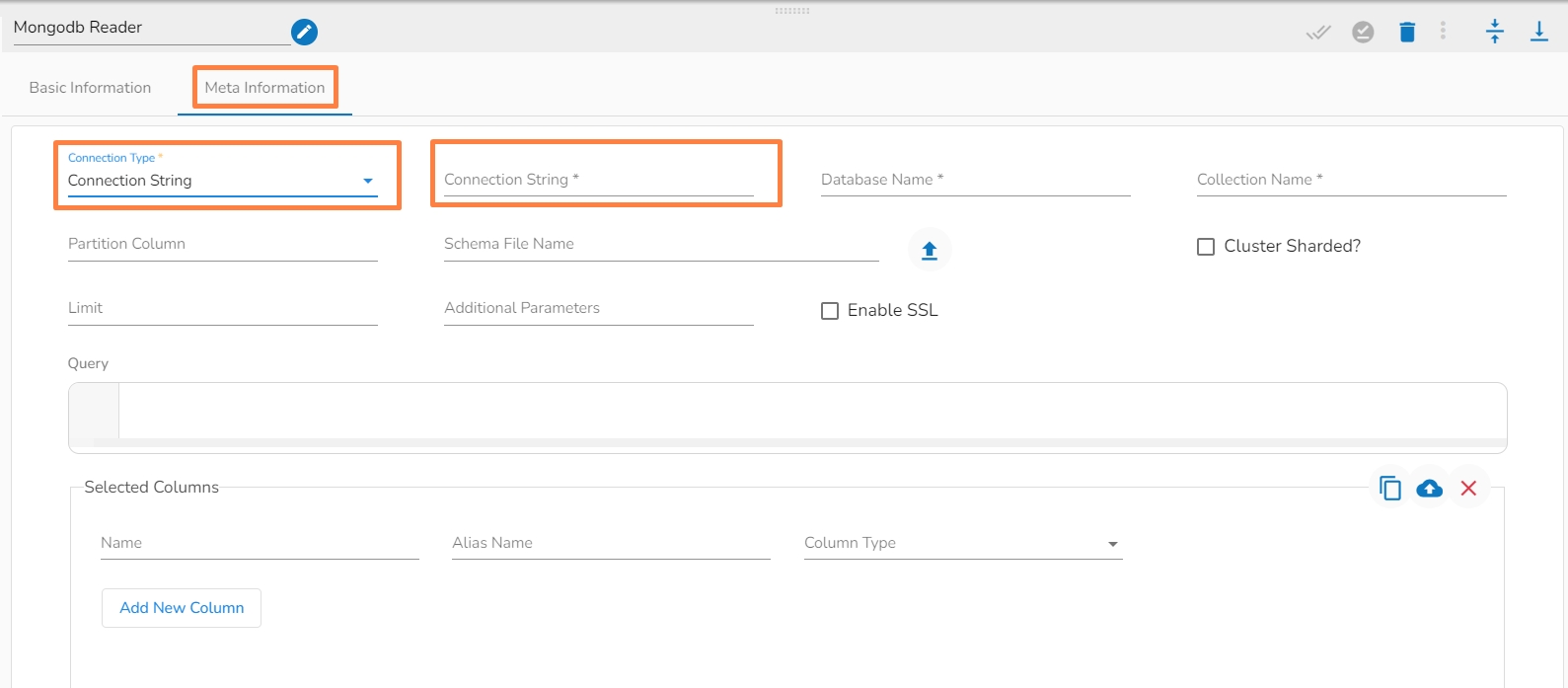

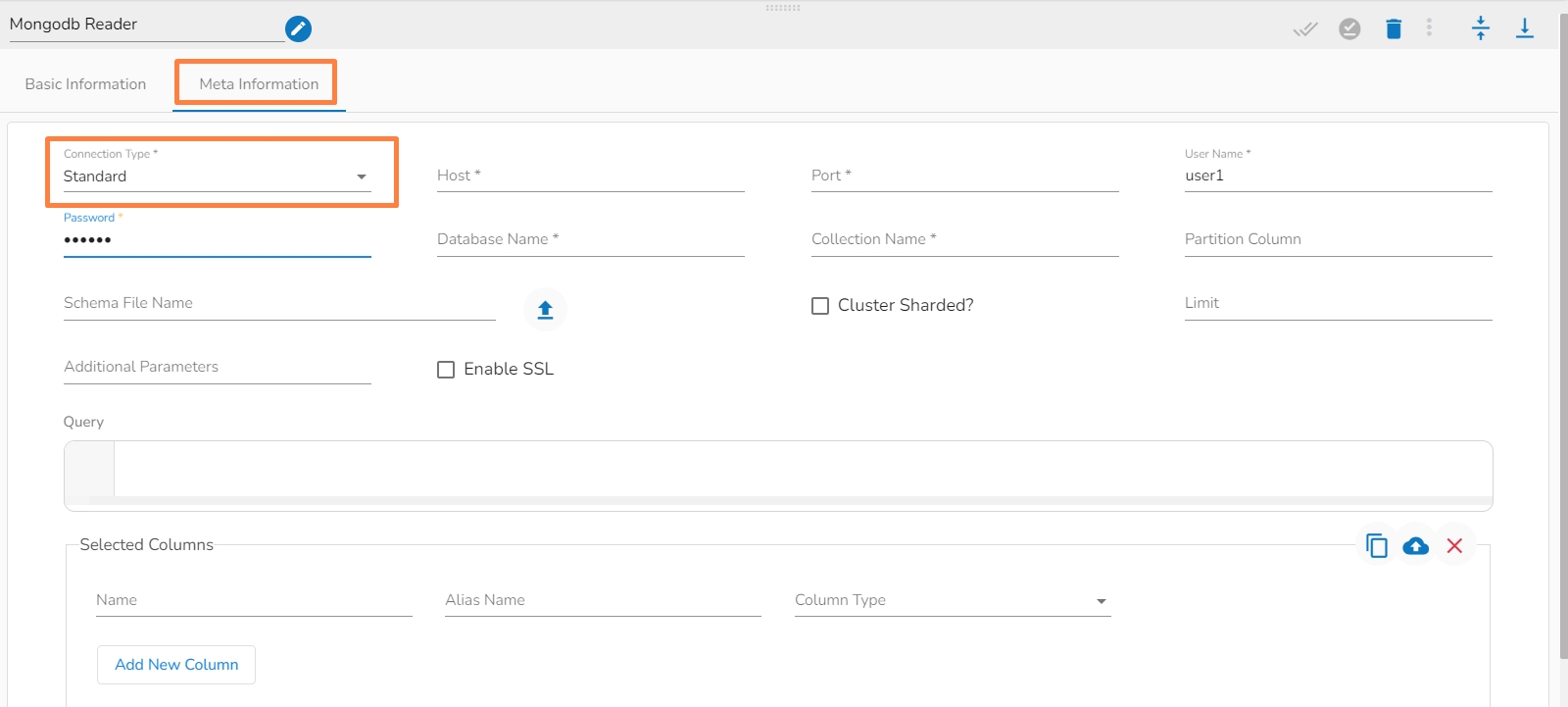

Connection Type: Select the connection type from the drop-down:

Standard

SRV

Connection String

Port (*): Provide the Port number (It appears only with the Standard connection type).

Host IP Address (*): The IP address of the host.

Username (*): Provide a username.

Password (*): Provide a valid password to access the MongoDB.

Database Name (*): Provide the name of the database from which you wish to read data.

Additional Parameters: Provide details of the additional parameters.

Cluster Shared: Enable this option to horizontally partition data across multiple servers.

Schema File Name: Upload Spark Schema file in JSON format.

Query: Provide a MongoDB aggregation query in this field.
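The Standard and SRV connection types correspond to MongoDB's `mongodb://` and `mongodb+srv://` connection-string formats, and the Query field takes an aggregation pipeline, i.e. a list of stages such as `$match`, `$group`, and `$sort`. The sketch below builds both pieces; how the component assembles them internally is an assumption, and `mongo_uri`, the host, the credentials, and the field names are hypothetical.

```python
import json
from urllib.parse import quote_plus


def mongo_uri(connection_type, host, username, password, database,
              port=27017, params=None):
    """Build a MongoDB connection string for the Standard or SRV connection type."""
    # Credentials must be percent-encoded if they contain characters such as @ or :.
    creds = f"{quote_plus(username)}:{quote_plus(password)}@"
    if connection_type == "Standard":
        uri = f"mongodb://{creds}{host}:{port}/{database}"
    elif connection_type == "SRV":
        # The DNS SRV record supplies hosts and ports, so no port is given here.
        uri = f"mongodb+srv://{creds}{host}/{database}"
    else:
        raise ValueError("for 'Connection String', supply the full URI directly")
    if params:  # e.g. values from the Additional Parameters field
        uri += "?" + "&".join(f"{key}={value}" for key, value in params.items())
    return uri


# An aggregation pipeline of the kind the Query field expects: total amount per customer.
pipeline = [
    {"$match": {"status": "shipped"}},
    {"$group": {"_id": "$customer_id", "total": {"$sum": "$amount"}}},
    {"$sort": {"total": -1}},
]

print(mongo_uri("Standard", "10.0.0.7", "app", "p@ss", "sales"))
# mongodb://app:p%40ss@10.0.0.7:27017/sales
print(json.dumps(pipeline))
```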

This section provides the steps involved in creating a new Pipeline flow.

Check out the below-given illustration on how to create a new pipeline.

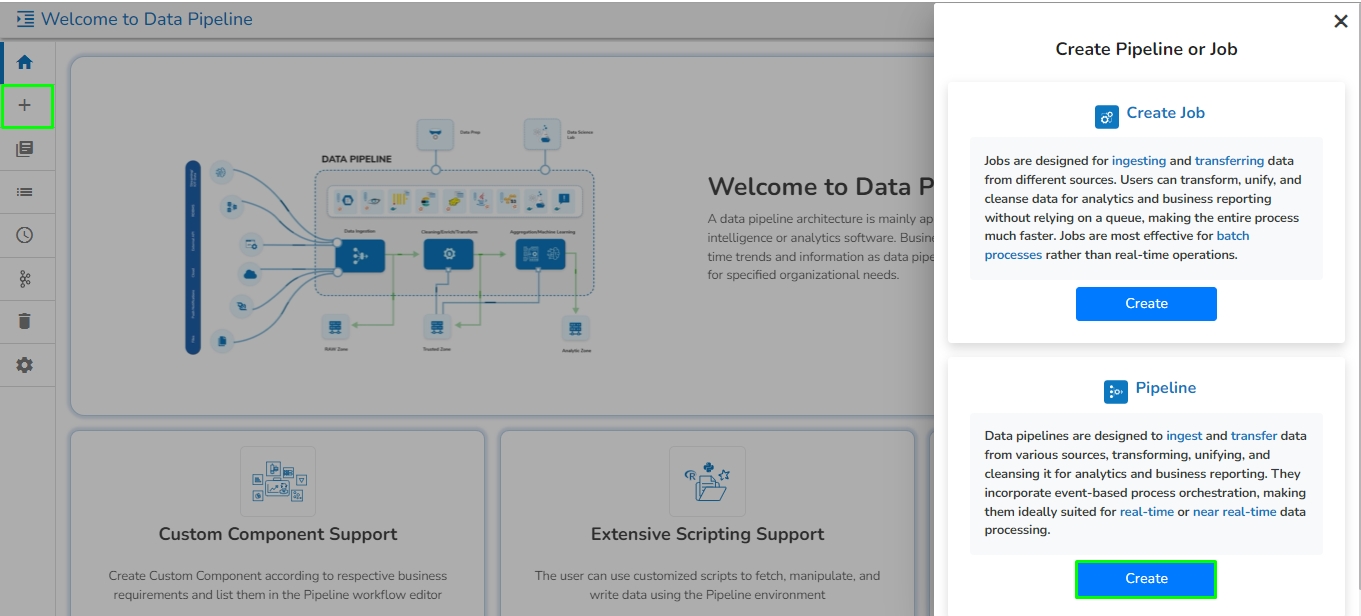

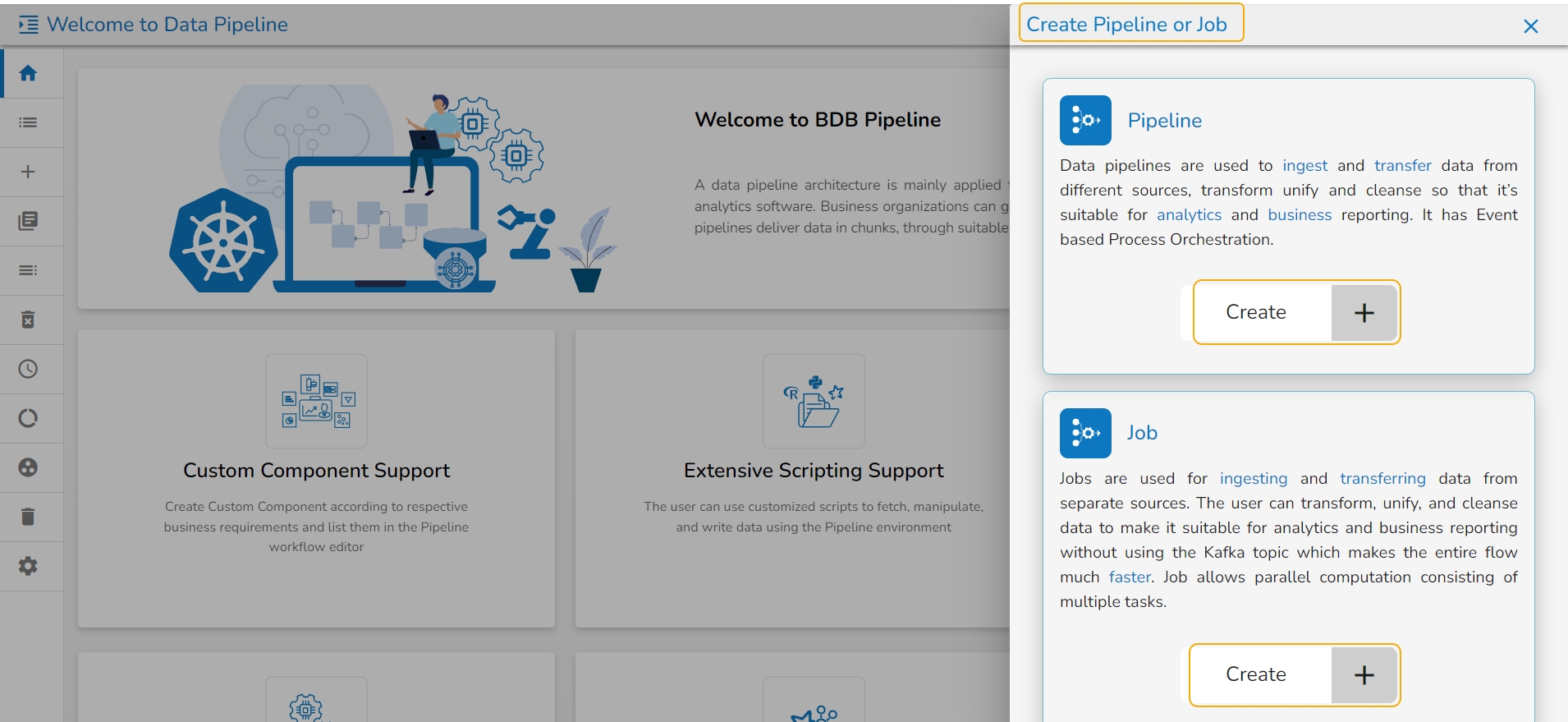

Navigate to the Create Pipeline or Job interface.

Click the Create option provided for Pipeline.

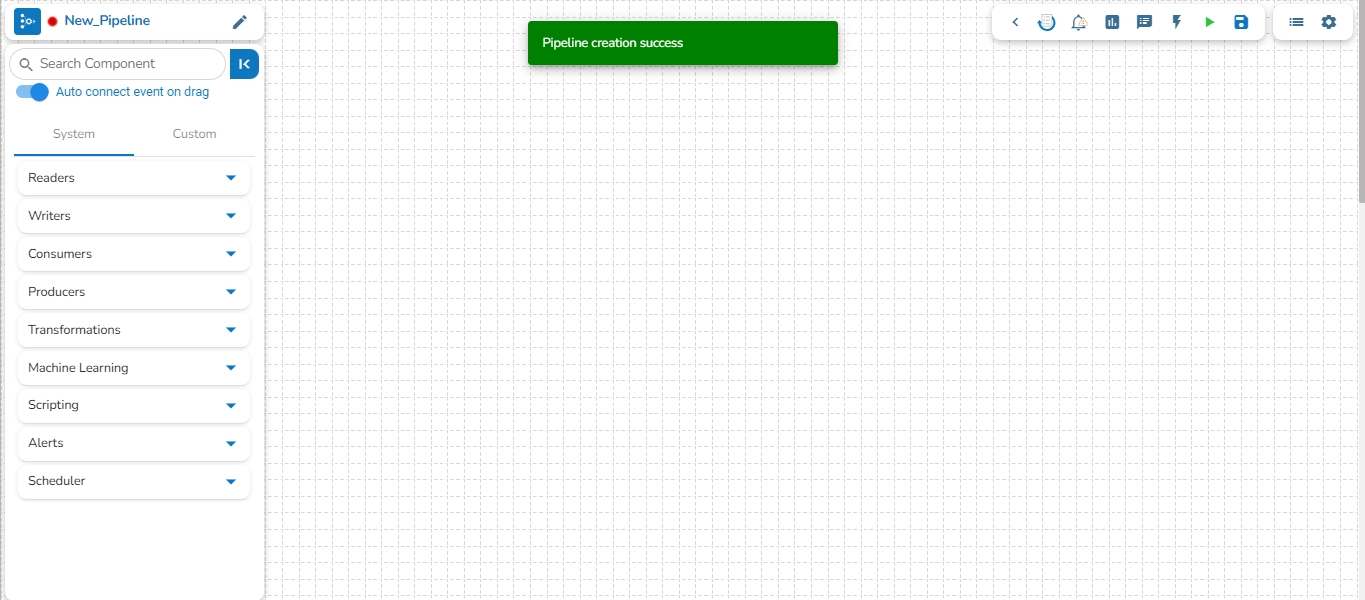

The New Pipeline window opens asking for the basic information.

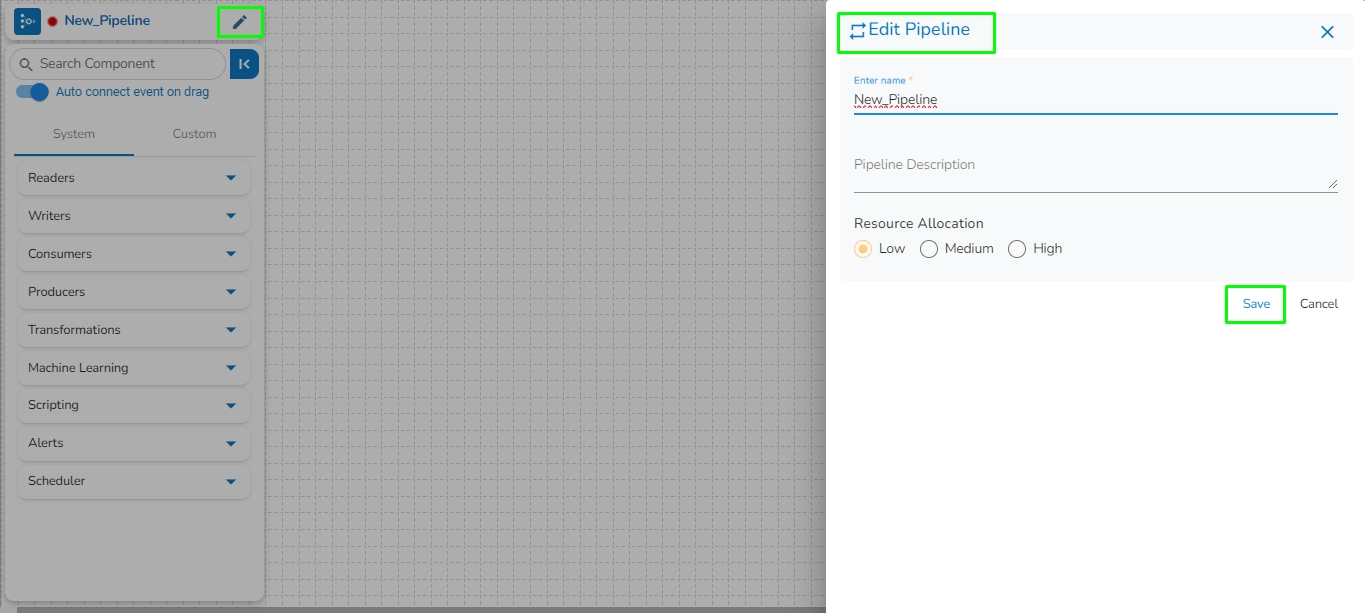

Enter a name for the new Pipeline.

Describe the Pipeline (Optional).

Select a resource allocation option using the radio buttons. The given choices are:

Click the Save option to create the pipeline. By clicking the Save option, the user gets redirected to the pipeline workflow editor.

A success message appears to confirm the creation of a new pipeline.

The Pipeline Editor page opens for the newly created pipeline.

Resource allocation can be changed at any time by clicking the edit icon near the pipeline name at the top left.

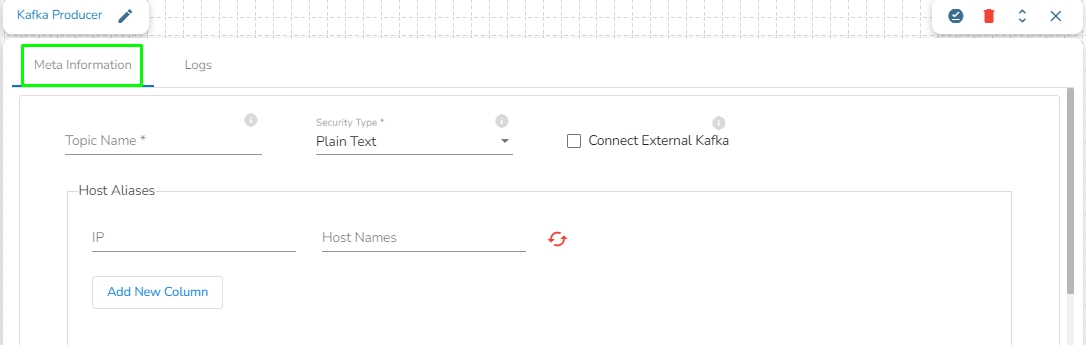

In Apache Kafka, a "producer" is a client application or program that is responsible for publishing (or writing) messages to a Kafka topic.

A Kafka producer sends messages to Kafka brokers, which are then distributed to the appropriate consumers based on the topic, partitioning, and other configurable parameters.

Drag the Kafka Producer task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

Topic Name: Specify the name of the topic to which the data should be produced.

Security Type: Select the security type from the drop-down:

Plain Text

SSL

Is External: Enable the 'Is External' option to produce data to an external Kafka topic. The 'Bootstrap Server' and 'Config' fields are displayed once the 'Is External' option is enabled.

Bootstrap Server: Enter external bootstrap details.

Config: Enter configuration details.

Host Aliases: In Apache Kafka, a host alias (also known as a hostname alias) is an alternative name that can be used to refer to a Kafka broker in a cluster. Host aliases are useful when you need to refer to a broker using a name other than its actual hostname.

IP: Enter the IP.

Host Names: Enter the host names.
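Under the hood, a Kafka producer is configured through client properties. Assuming the Bootstrap Server, Security Type, and Config fields translate to the standard `bootstrap.servers` and `security.protocol` properties plus any extra settings (an assumption about this component), the sketch below renders them in `.properties` format; the helper names and broker addresses are hypothetical.

```python
def producer_properties(bootstrap_servers, security_type="Plain Text", extra_config=None):
    """Translate the producer fields into standard Kafka client properties."""
    protocols = {"Plain Text": "PLAINTEXT", "SSL": "SSL"}
    props = {
        "bootstrap.servers": ",".join(bootstrap_servers),
        "security.protocol": protocols[security_type],
    }
    # Anything entered in the Config field is layered on top (assumed behaviour).
    props.update(extra_config or {})
    return props


def to_properties_text(props):
    """Render the properties in the key=value format of a Kafka client .properties file."""
    return "".join(f"{key}={value}\n" for key, value in props.items())


props = producer_properties(["broker-1:9092", "broker-2:9092"], "SSL", {"acks": "all"})
print(to_properties_text(props), end="")
# bootstrap.servers=broker-1:9092,broker-2:9092
# security.protocol=SSL
# acks=all
```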

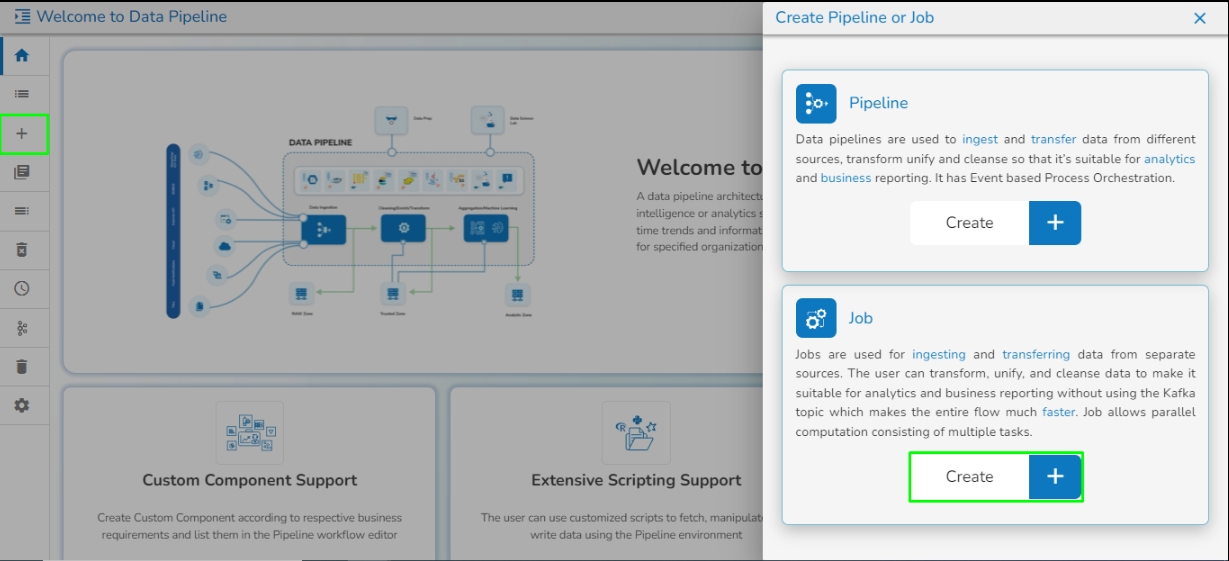

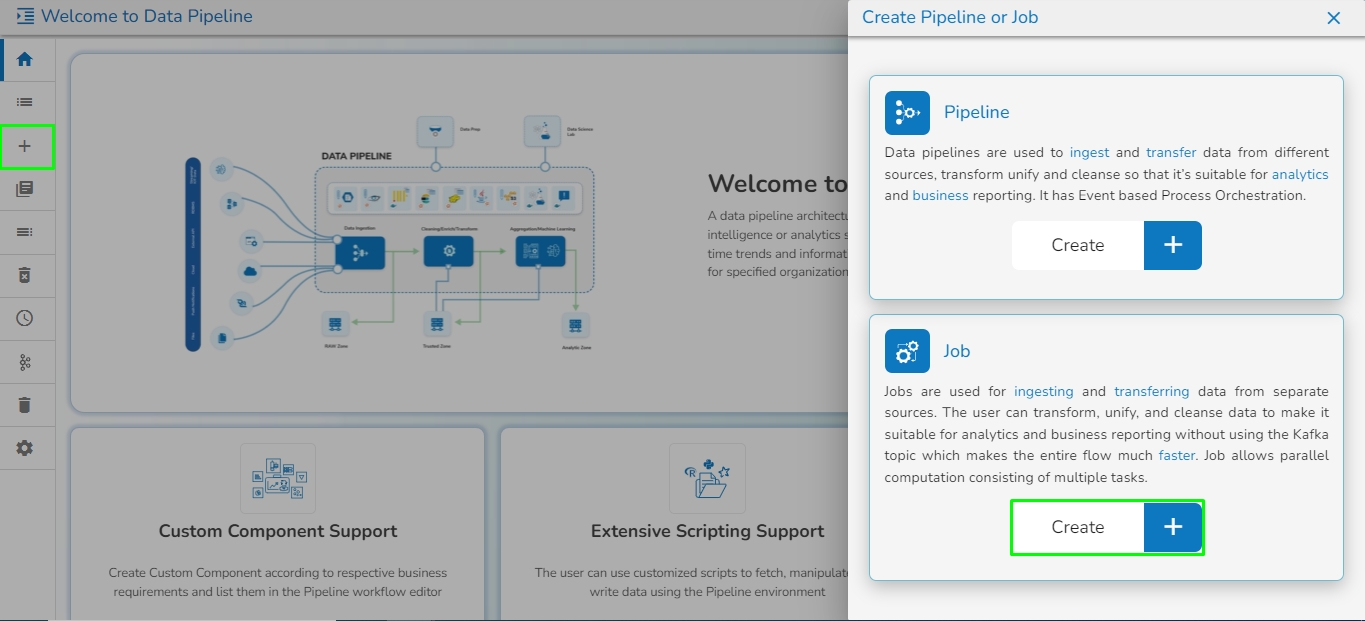

Create a new Pipeline or Job based on the selected create option.

The Pipeline Homepage offers the Create option to start creating a Pipeline or a Job, based on the user's needs.

Navigate to the Pipeline List page.

Click the Create icon.

The Create Pipeline or Job user interface opens, prompting the user to create a new Pipeline or Job.

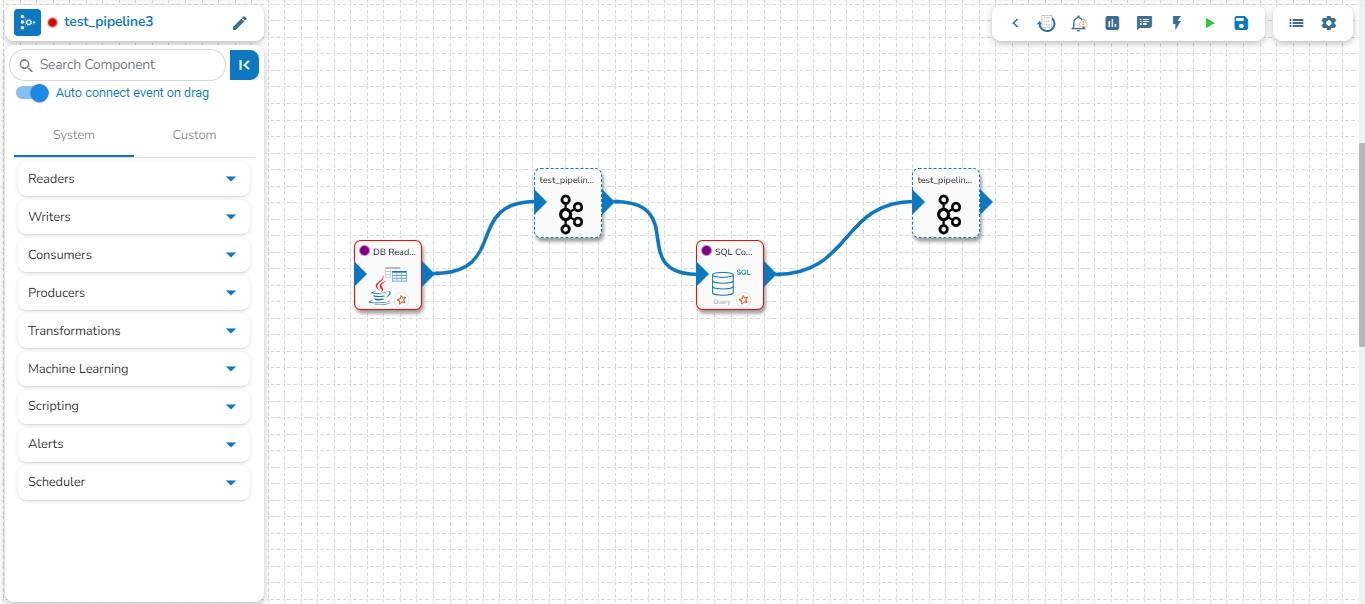

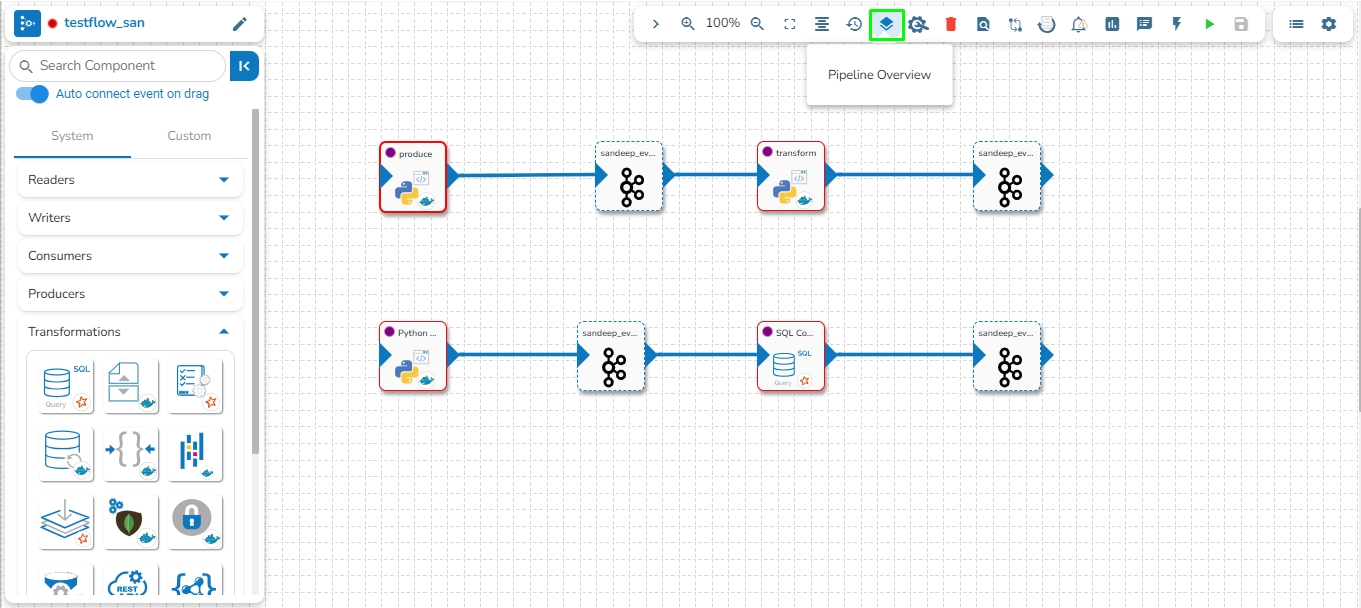

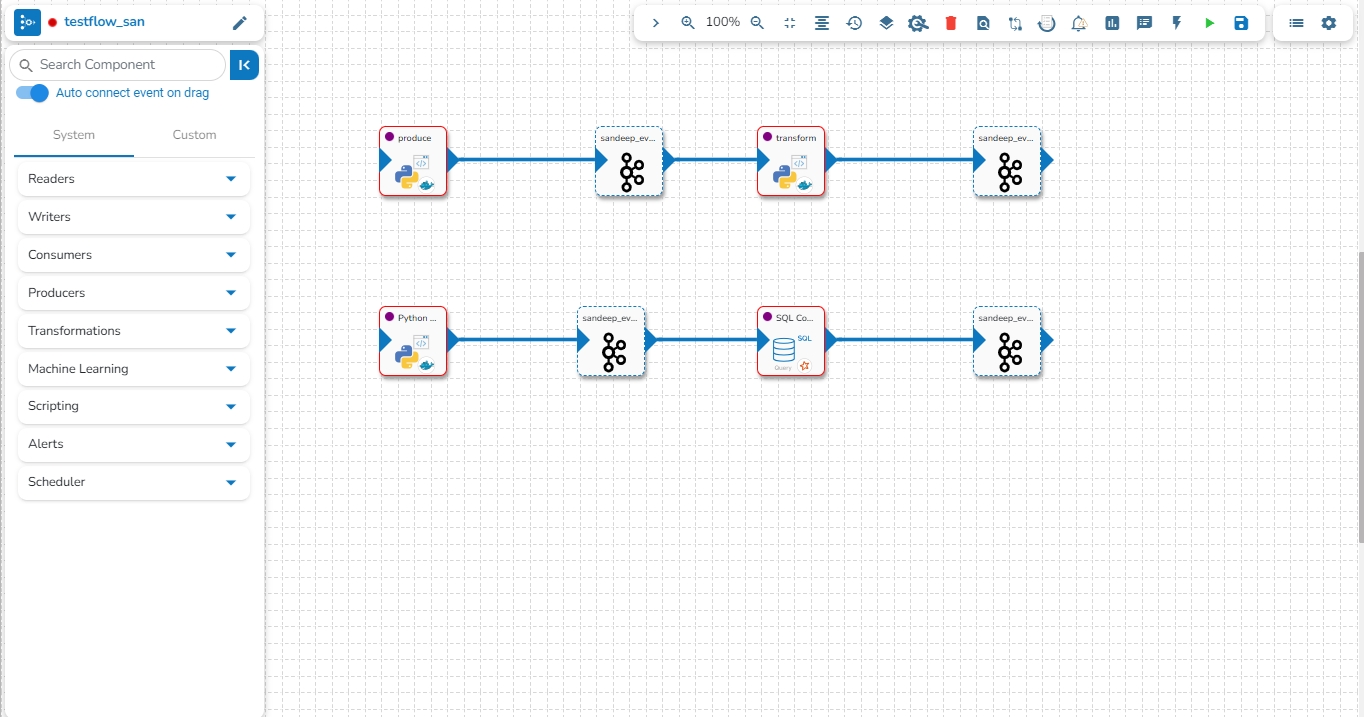

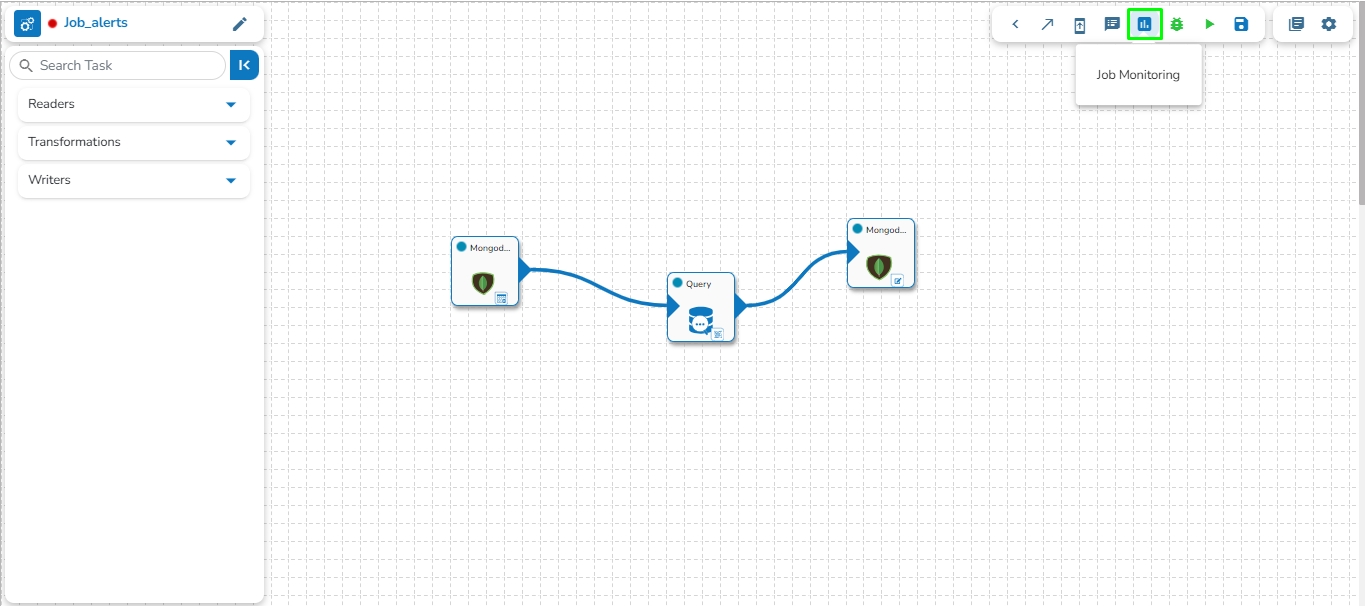

The Pipeline Workflow Editor contains Toolbar, Component Panel, and the Right-side Panel together with the Design Canvas for the user to create a pipeline flow.

The Pipeline Workflow consists of three main elements:

The above-given workflow shows the basic workflow to ingest data into a database using the Data Pipeline. It can be seen in the above workflow that data is read from a source using a reader component (DB Reader) and is then written to a destination location using a writer component (DB Writer).

In the successive slides, the user can find the detailed working of pipeline workflow design and the several pipeline components.

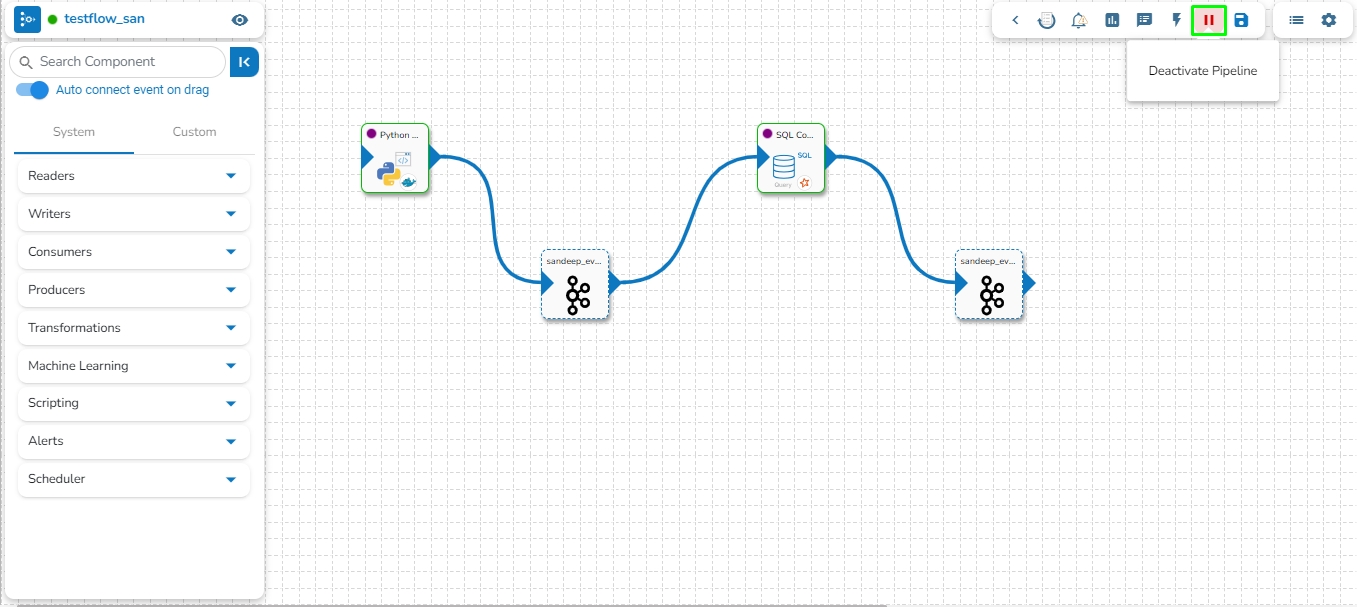

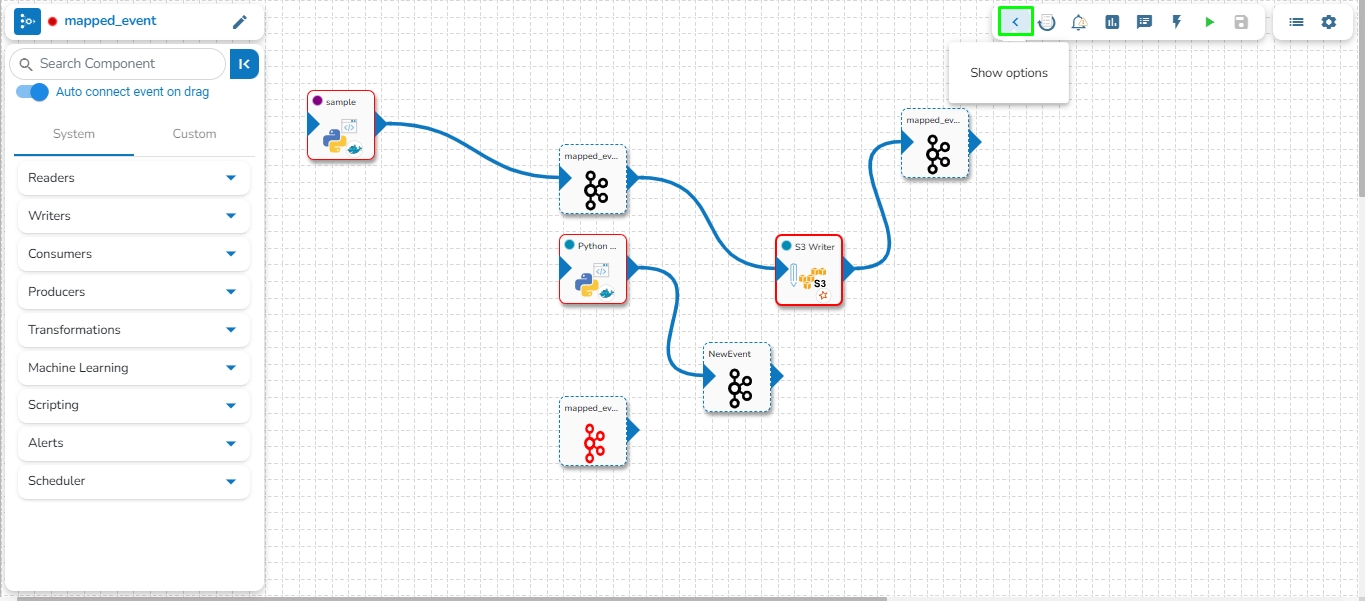

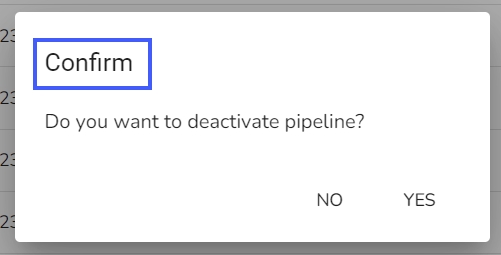

The user can activate or deactivate the pipeline by clicking the corresponding icon, as shown in the image below:

Activation deploys all the components based on their respective invocation types. When the pipeline is deactivated, all the components go down and the process halts.

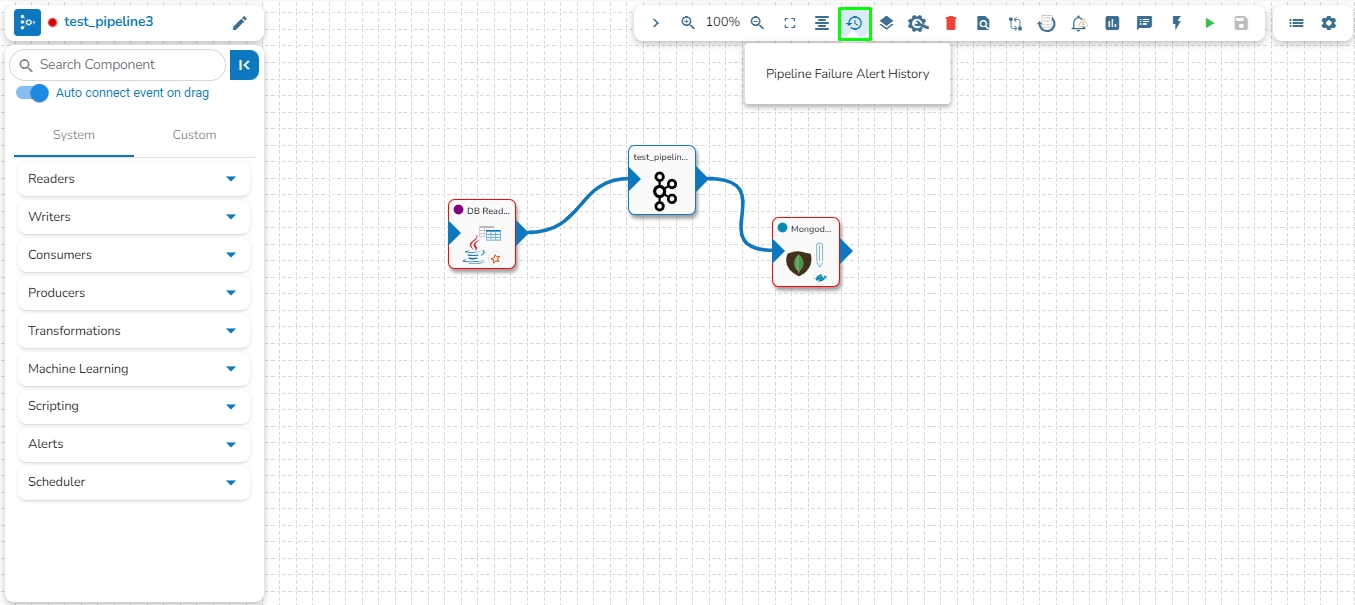

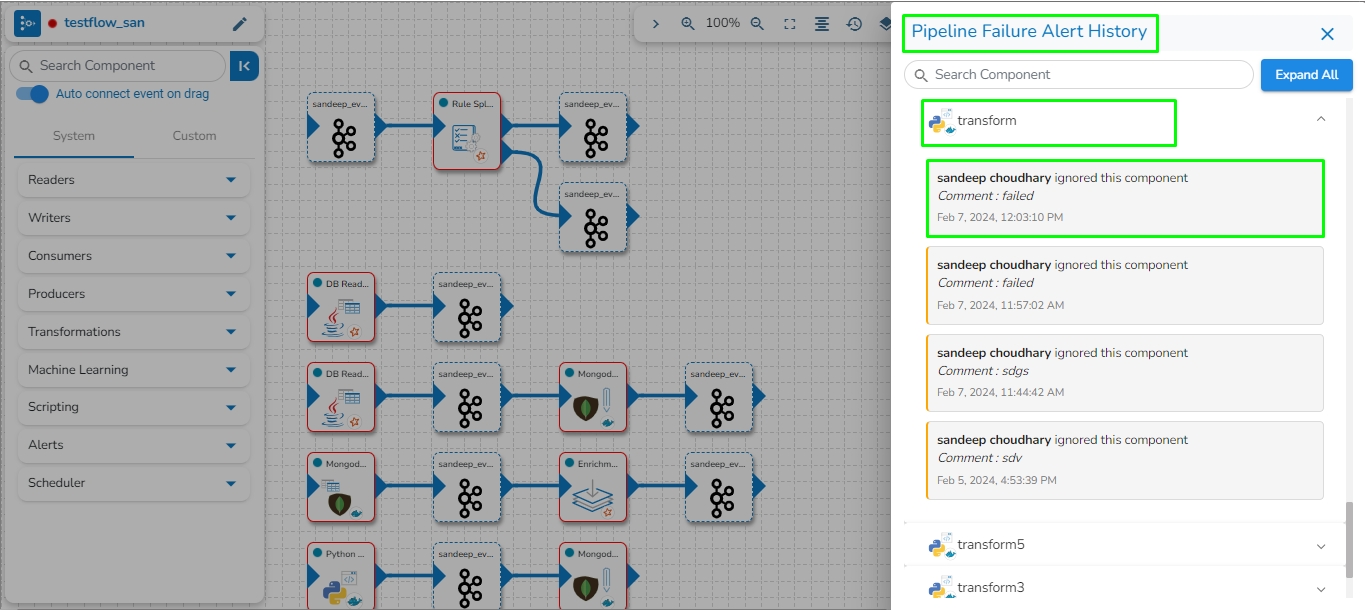

This feature will display the failure history of all the components used in the pipeline.

Click the Pipeline Failure Alert History icon from the header panel of the Pipeline Editor.

A panel window will open from the right side, displaying the failure history of the components used in the pipeline.

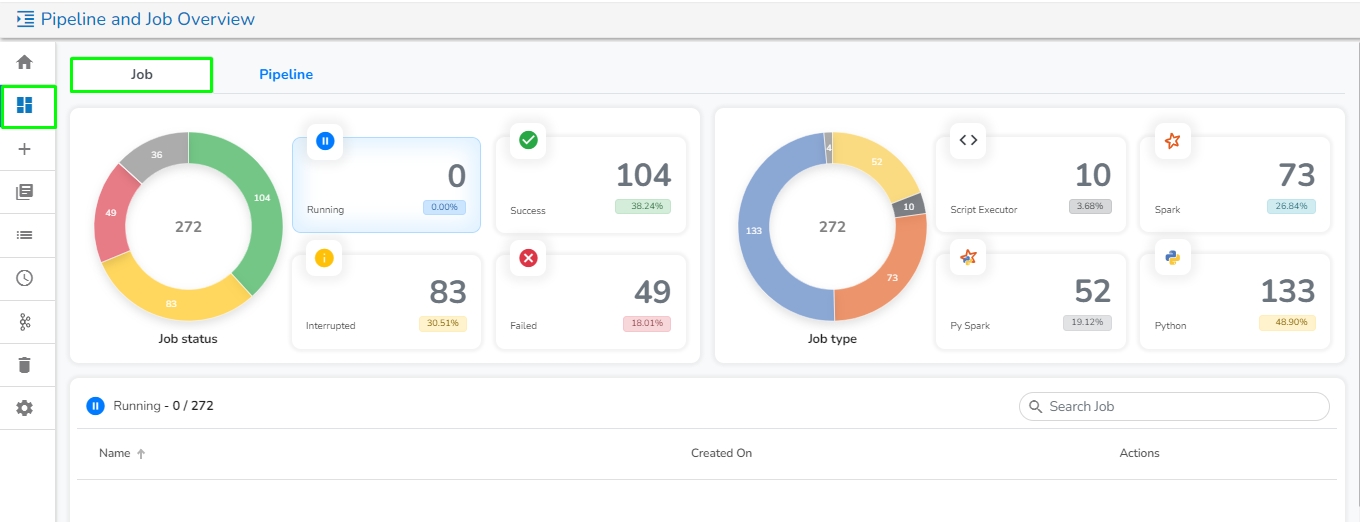

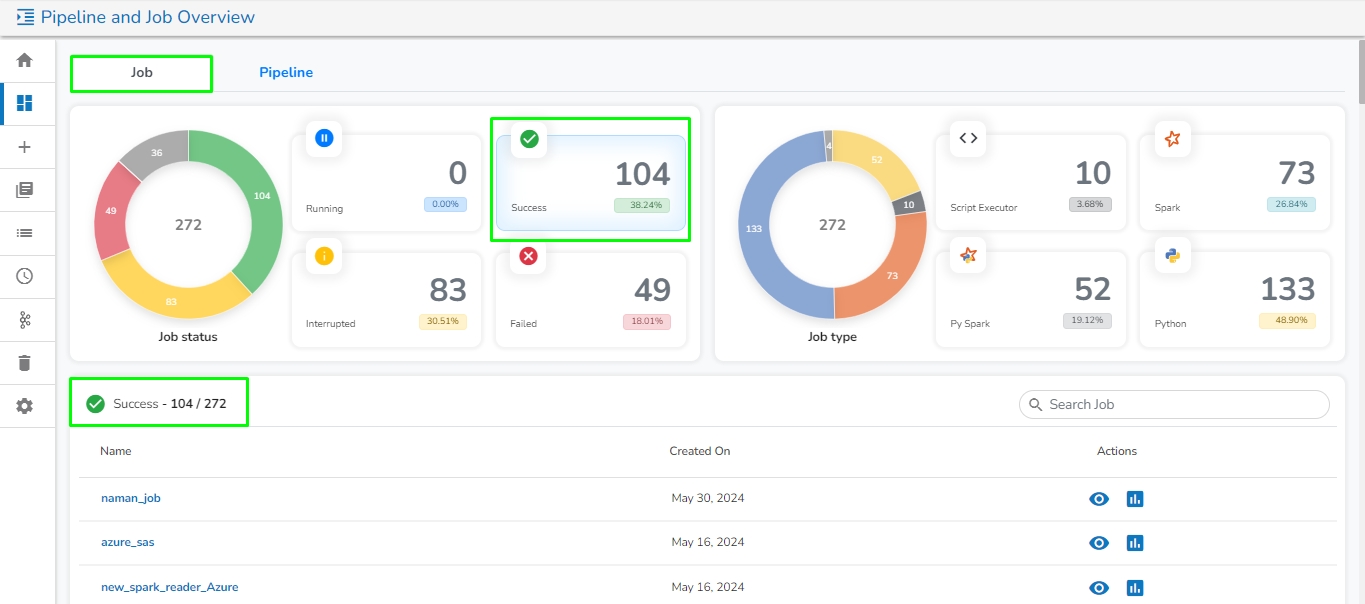

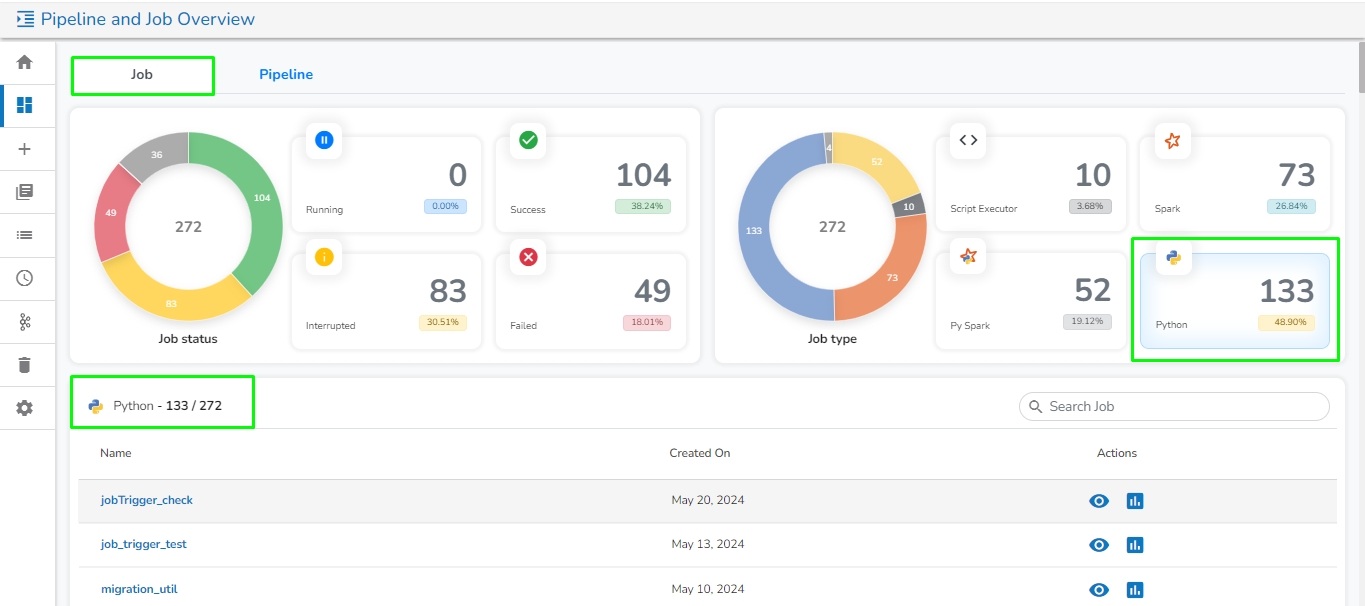

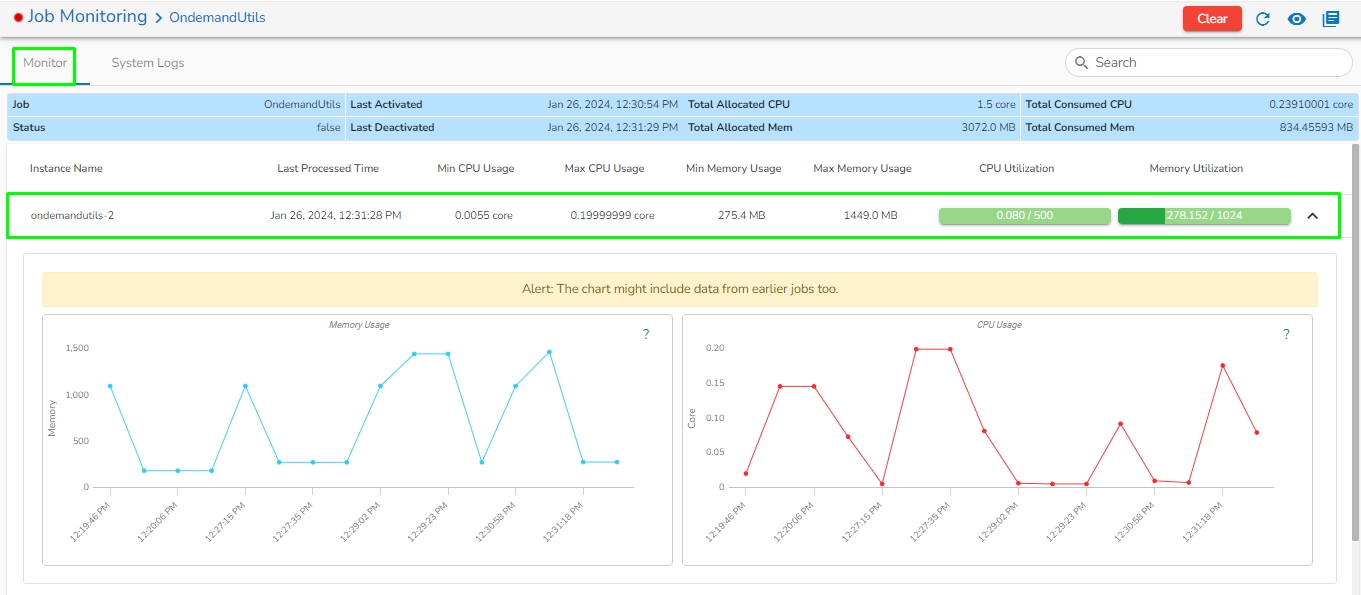

Please go through the below given demonstration for the Job Overview page.

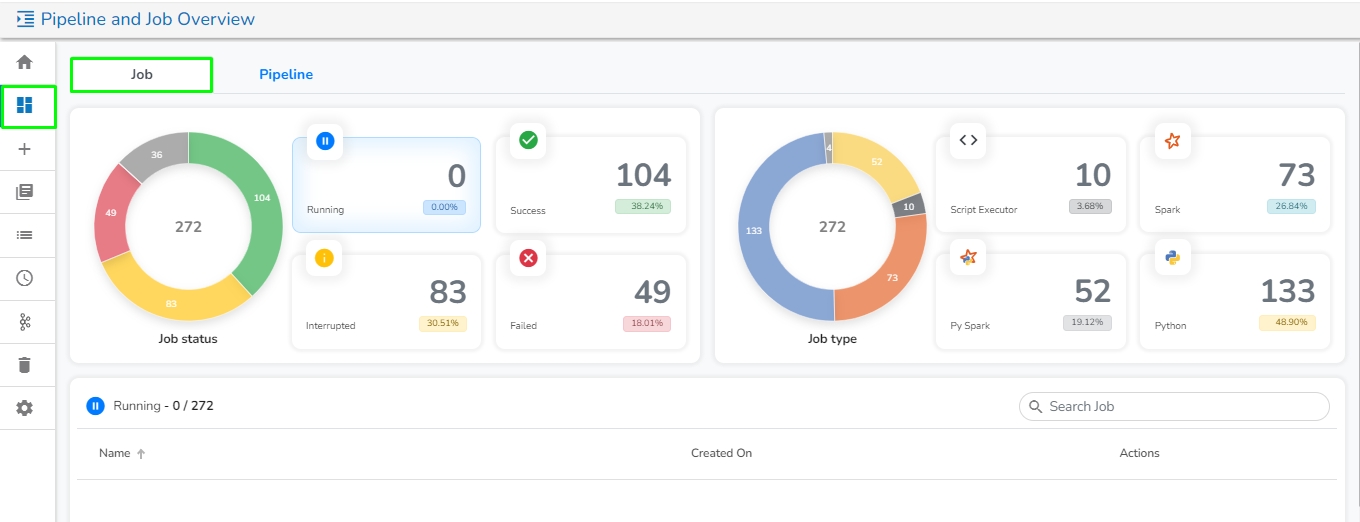

This tab will open by default once the user navigates to the Pipeline and Job Overview page. It contains the following information of the Jobs in graphical format:

Job Status:

This section displays the total number of Jobs created, along with their count and percentage, as tiles for the following running statuses:

Running: This section displays the number and total percentage of running jobs.

Success: This section displays the number and total percentage of successfully completed jobs.

Interrupted: This section displays the number and total percentage of interrupted jobs.

Failed: This section displays the number and total percentage of failed jobs.

Once the user clicks on any of the statuses, all the jobs in that particular category are listed, along with the following options:

View: Redirects the user to the selected job workspace.

Monitor: Redirects the user to the monitoring page for the selected job.
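The count and percentage shown on each status tile amount to simple arithmetic. The sketch below shows one way to compute them from a list of job statuses; `status_summary` and the sample statuses are hypothetical and not taken from the platform.

```python
from collections import Counter

STATUSES = ("Running", "Success", "Interrupted", "Failed")


def status_summary(statuses):
    """Count jobs per status and express each count as a percentage of all jobs."""
    counts = Counter(statuses)
    total = sum(counts.values())
    return {
        status: (counts[status], round(100 * counts[status] / total, 1) if total else 0.0)
        for status in STATUSES
    }


jobs = ["Success", "Success", "Failed", "Running", "Success", "Interrupted", "Success", "Failed"]
print(status_summary(jobs))
# {'Running': (1, 12.5), 'Success': (4, 50.0), 'Interrupted': (1, 12.5), 'Failed': (2, 25.0)}
```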

Job Type:

It displays the total number and percentage of jobs created for the following categories in the graphical format:

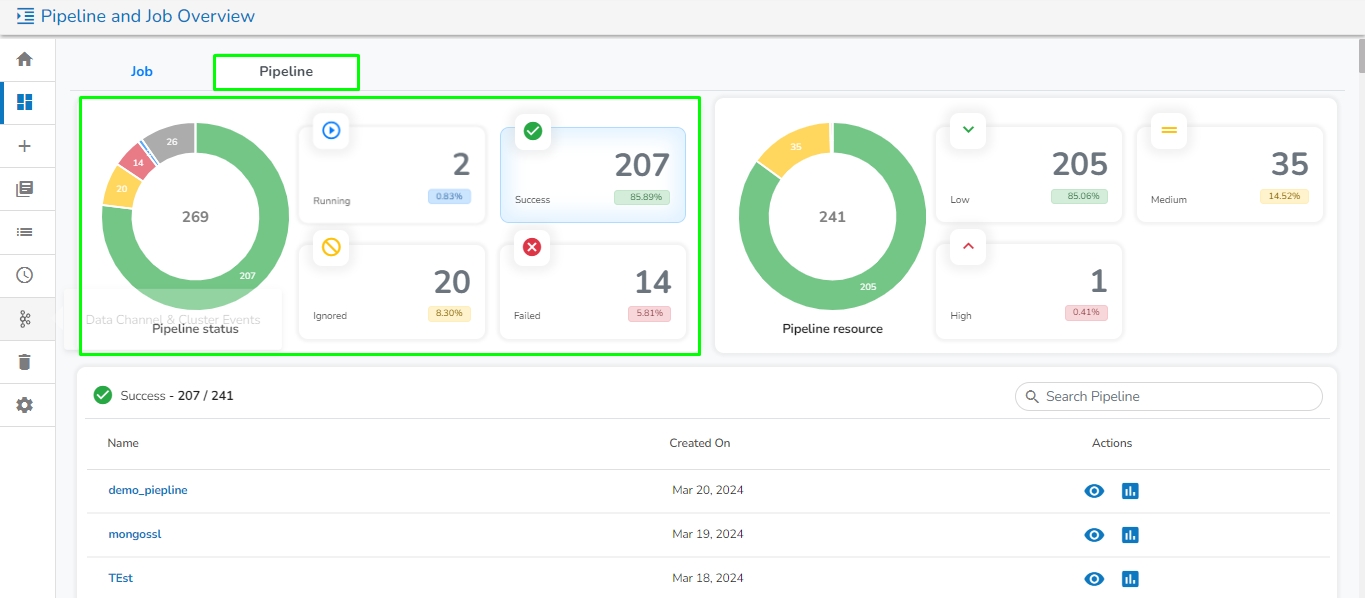

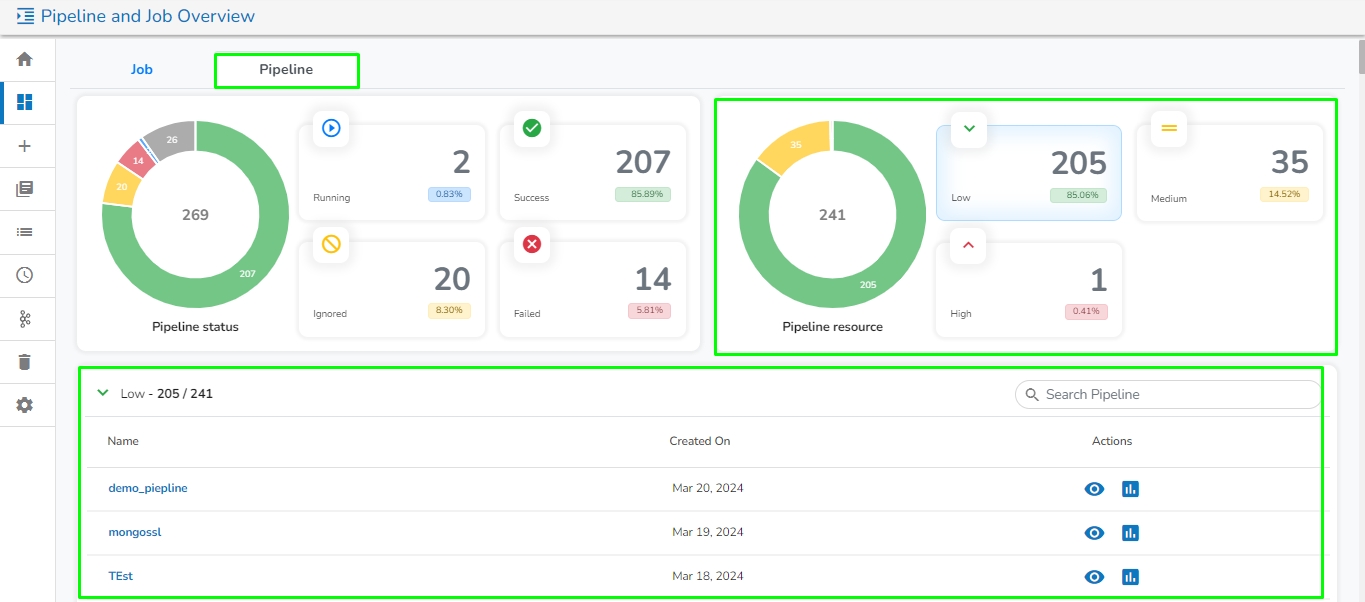

This page shows a summary of Pipelines in a graphical manner.

Please go through the below given demonstration for the Pipeline Overview page.

This page contains the following information about the Pipelines in a graphical format:

This section displays the total number of pipelines created, along with their count and percentage, as tiles for the following running statuses:

Running: Displays the number and total percentage of running pipelines.

Success: Displays the number and total percentage of successfully executed pipelines.

Ignored:

It displays the total number and percentage of pipelines created for each of the following resource types in a graphical format:

Low

Medium

High

Components are broadly classified into:

Component Panel

Expand component group and select

Search using the search bar in the component panel

Drag and Drop the components to the workflow editor.

Any Pipeline System component can easily be dragged to the workflow. Use the following steps to add a System component to the Pipeline Workflow editor:

Expand the component group and select the component.

Search for the component using the search bar in the component panel.

Drag and drop the component to the workflow editor.

Check out the illustration on how to use a system pipeline component.

Clicking on the Update icon allows users to save the pipeline. It is recommended to update the pipeline every time you make changes in the workflow editor.

On a successful update of the pipeline, you get a notification as given below:

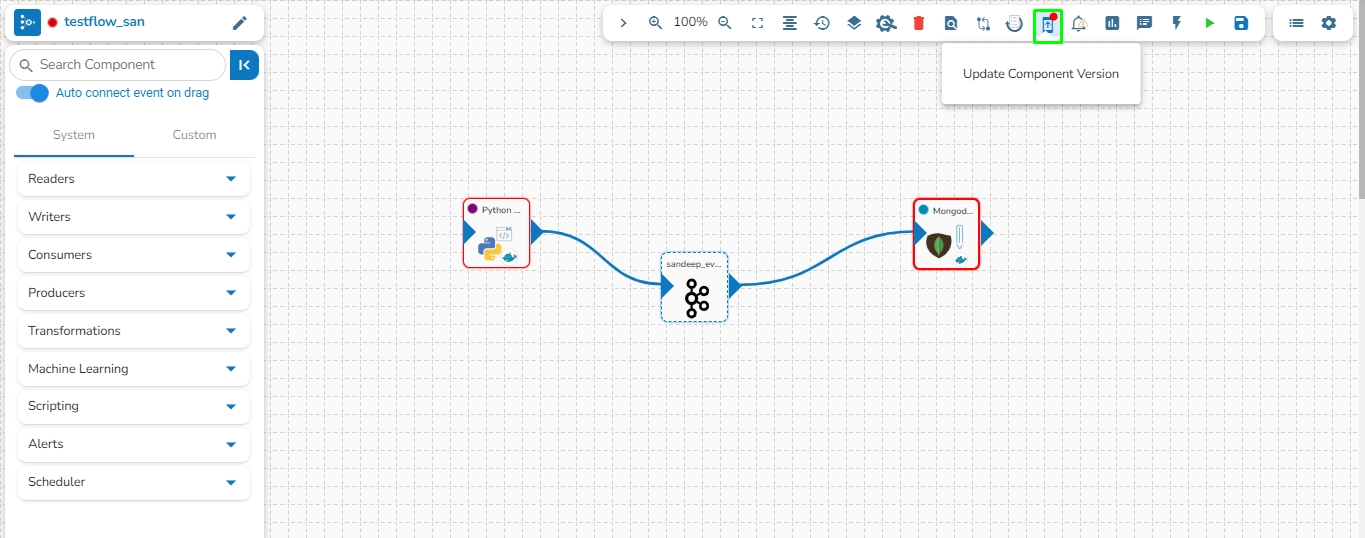

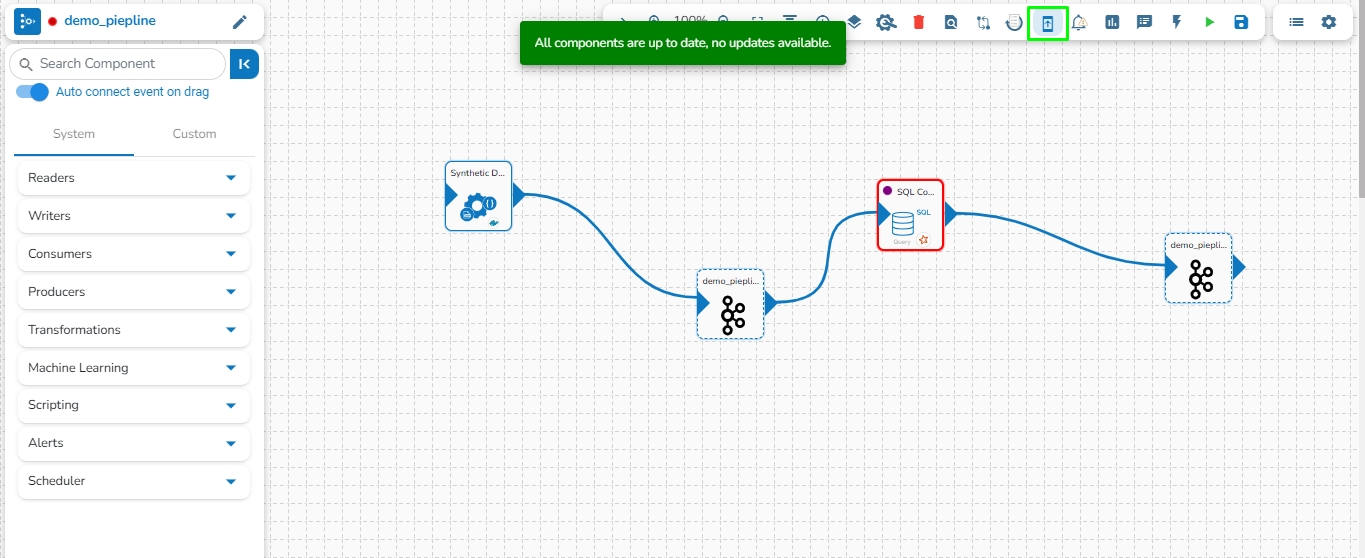

Users can update the version of the used pipeline components through this icon.

This option allows us to update the Pipeline components to their latest versions.

Navigate to the Pipeline Editor page.

Click the Update Component Version icon. The icon will display a red dot indicating that an updated component version is available for the selected pipeline workflow.

A confirmation dialog box appears.

Click the YES option.

A notification message appears to confirm that the component version is updated.

The Update Component Version gets disabled to indicate that all the pipeline components are up to date.

This feature allows users to update the older version of the component with the latest version in the pipeline.

Follow the below given steps to update the component version in the pipeline:

Navigate to the pipeline toolbar panel.

Click on the Update Component Version option.

After clicking on the option, all components with older versions in the pipeline will be updated to the latest version. A success message will appear stating, "Components Version Updated Successfully".

A message will appear stating, "All components are up to date, no updates available" if all components already have the latest version.

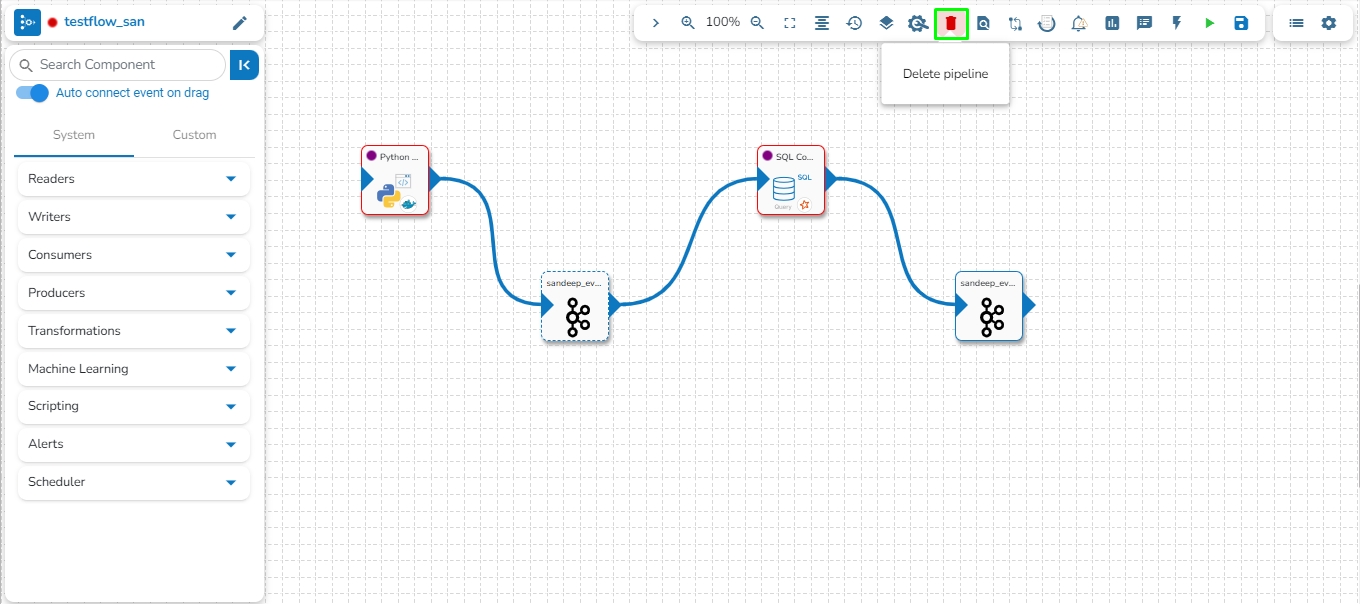

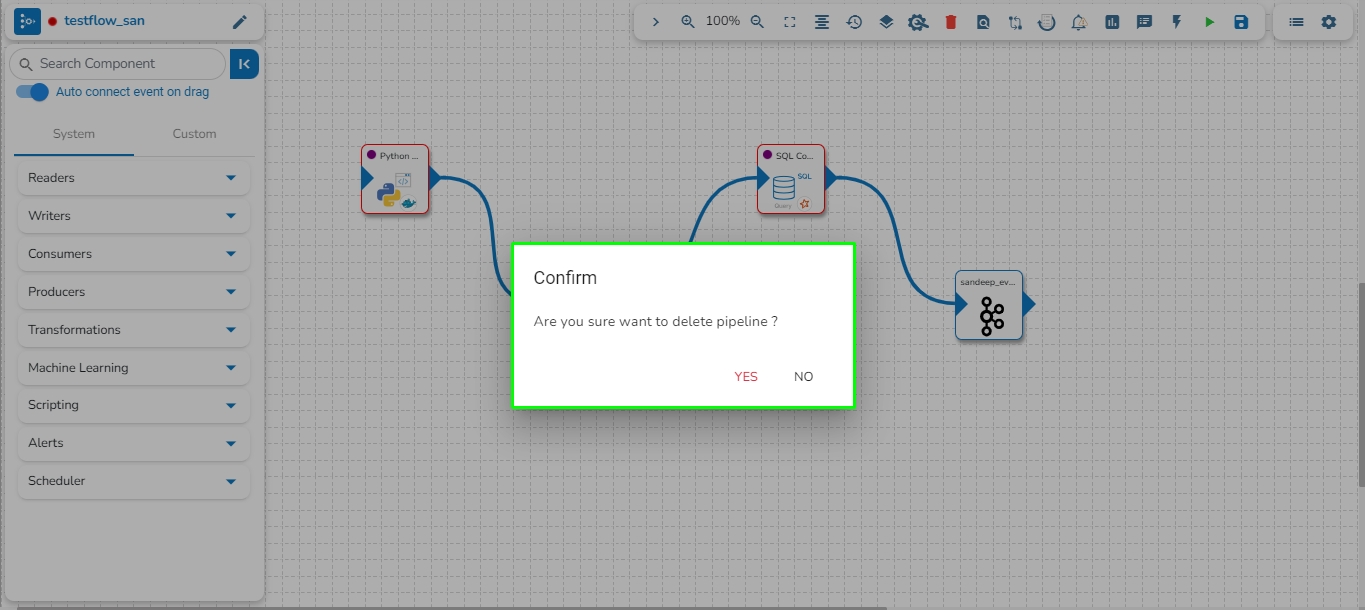

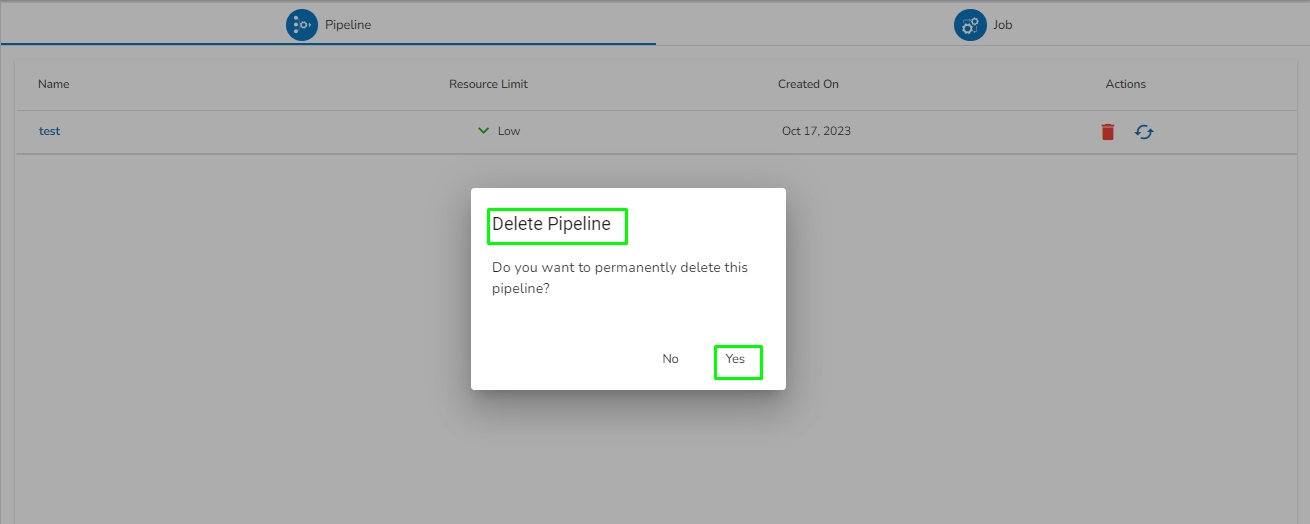

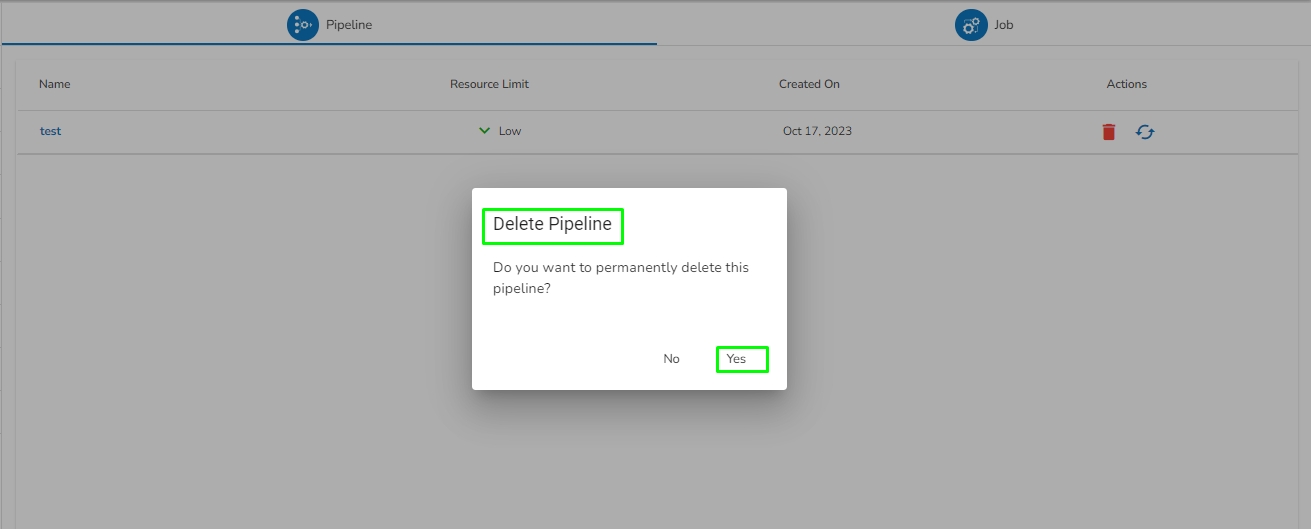

The user can delete their pipeline using this feature, accessible from either the List Pipelines page or the Pipeline Workflow Editor page.

Navigate to the Pipeline Workflow Editor page.

Click the Delete icon.

A dialog box opens to confirm the deletion.

Select the YES option.

A notification message appears.

The user gets redirected to the Pipeline List page and the selected Pipeline gets removed from the Pipeline List.

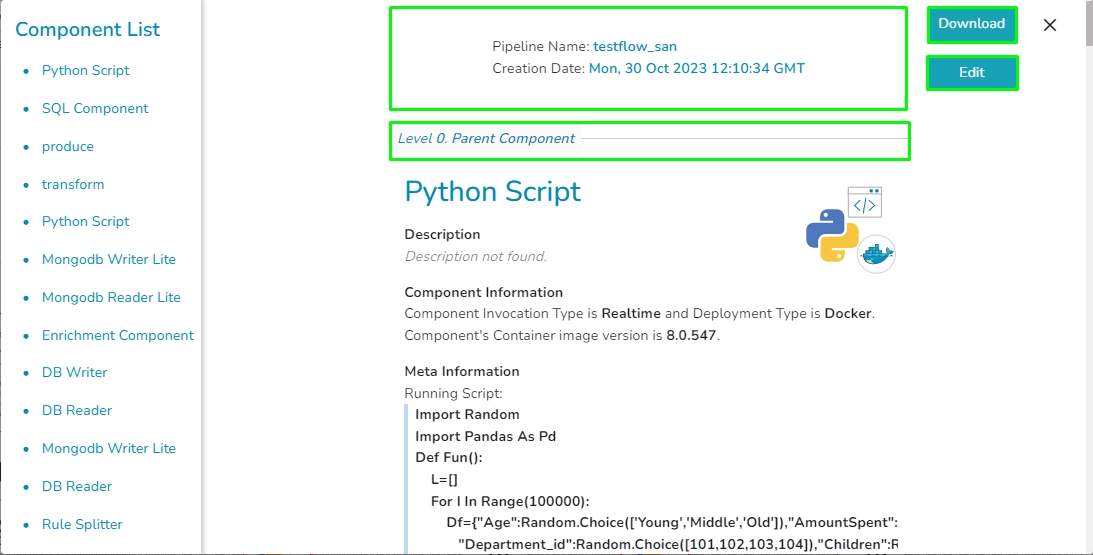

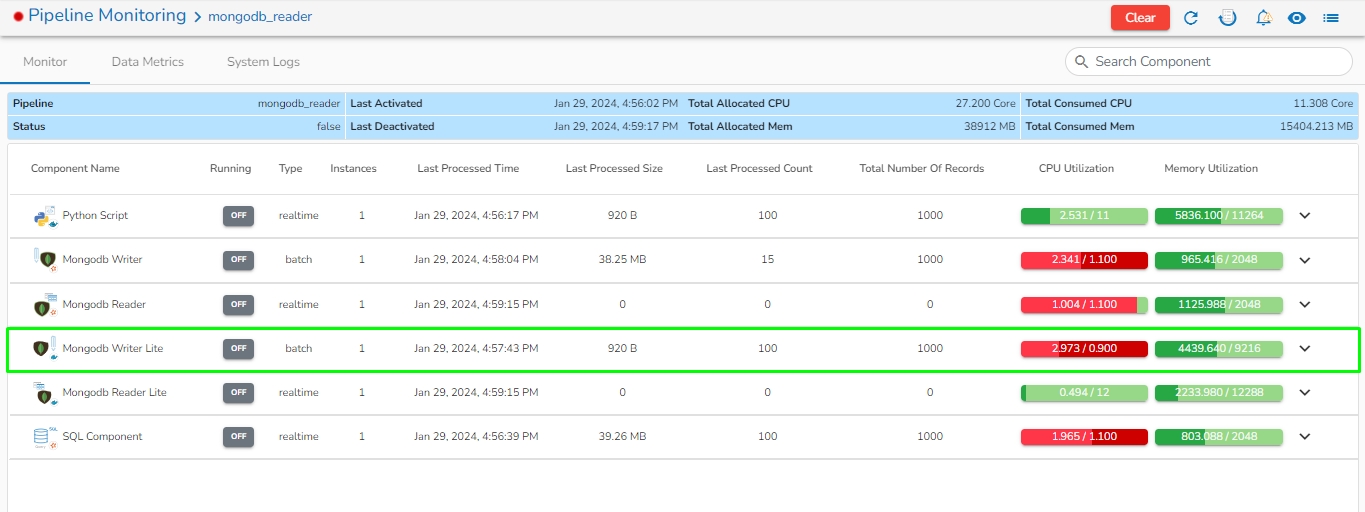

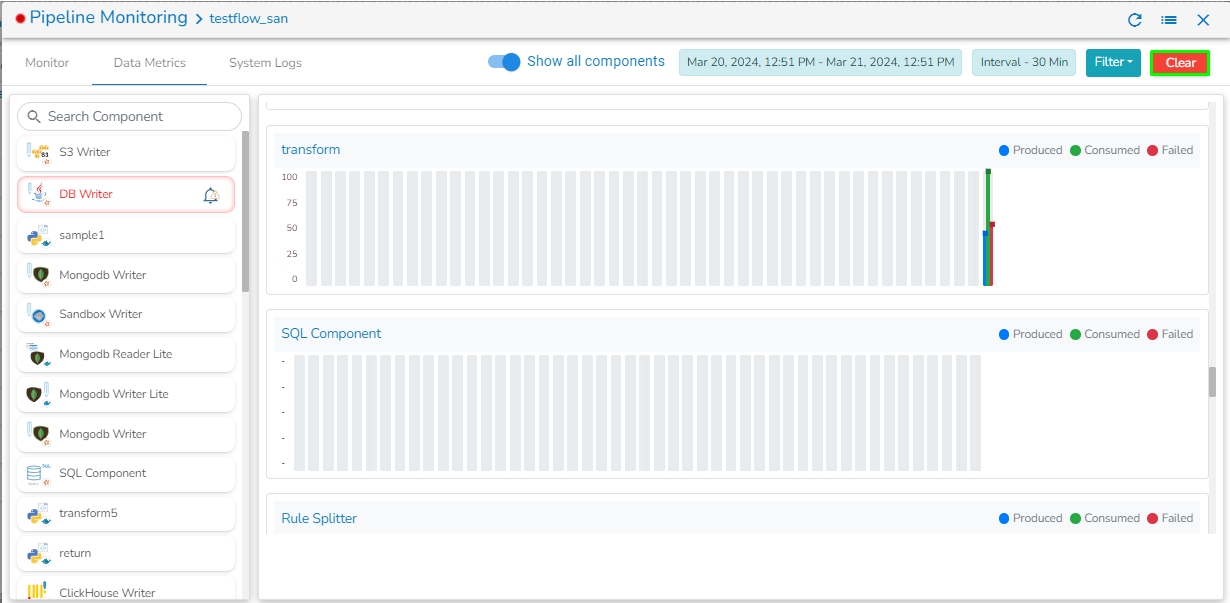

This page provides an overview of all the components used in the pipeline in a single place.

The Pipeline Overview feature enables users to view and download comprehensive information about all the components used in the selected pipeline on a single page. Users can access meta information, resource configuration, and other details directly from the pipeline overview page, streamlining the process of understanding and managing the components associated with the pipeline.

Check out the given demonstration to understand the Pipeline Overview page.

The user can access the Pipeline Overview page by clicking the Pipeline Overview icon as shown in the below given image:

Component List: All the components used in the pipeline will be listed here.

Creation Date: The date and time when the pipeline was created.

Download: The Download option enables users to download all the information related to the pipeline. This comprehensive download includes details such as all components used in the pipeline, meta information, resource configuration, component deployment type, and more.

Edit:

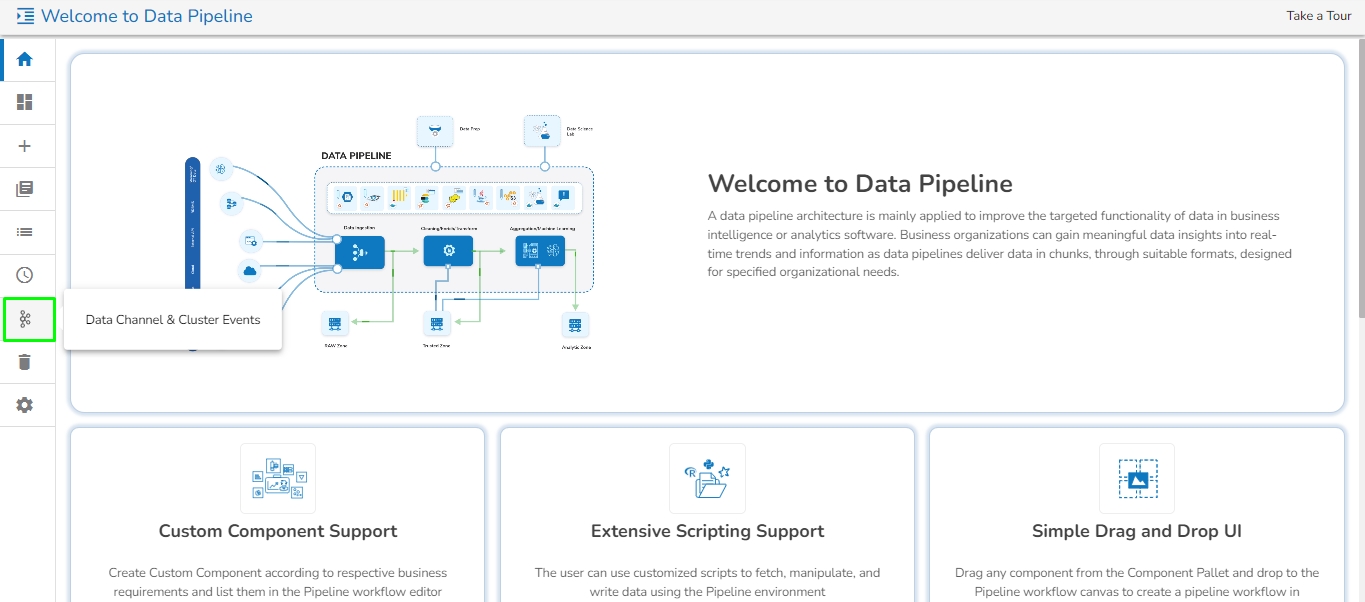

Data pipelines are used to ingest and transfer data from different sources, and to transform, unify, and cleanse it so that it is suitable for analytics and business reporting.

“It is a collection of procedures that are carried out either sequentially or concurrently when transporting data from one or more sources to a destination. Filtering, enriching, cleansing, aggregating, and even making inferences using AI/ML models may be part of these pipelines.”

Data pipelines are the backbone of the modern enterprise. Pipelines move, transform, and store data so that enterprises can make decisions without delay. Some of these decisions are automated via AI/ML models in real time.

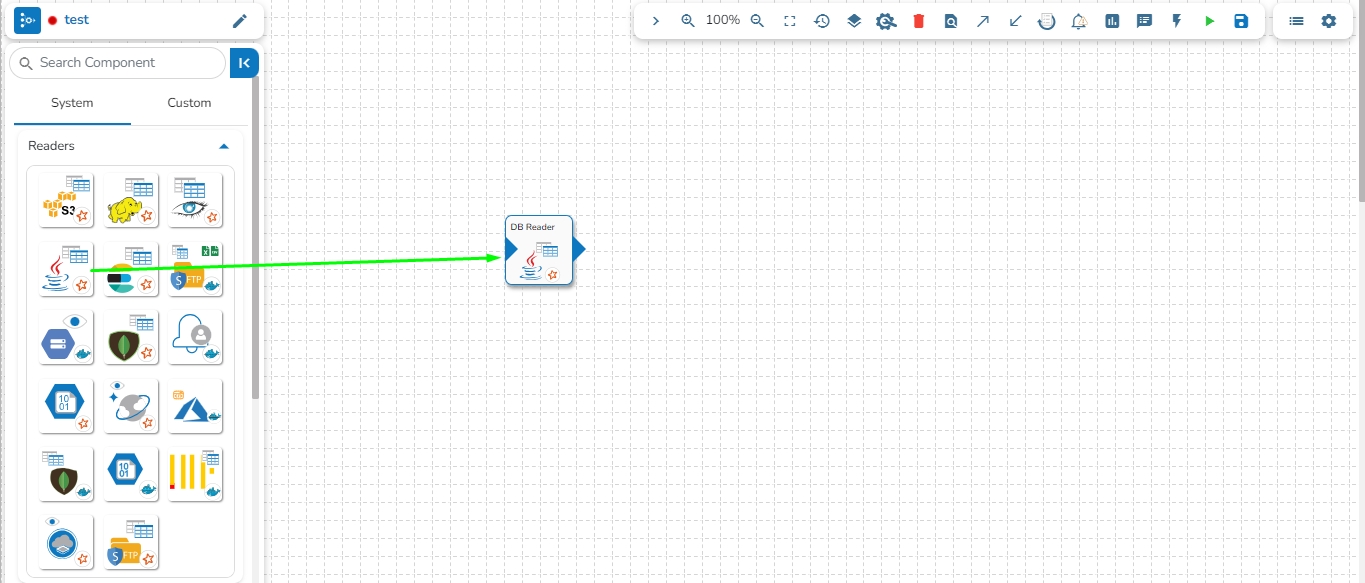

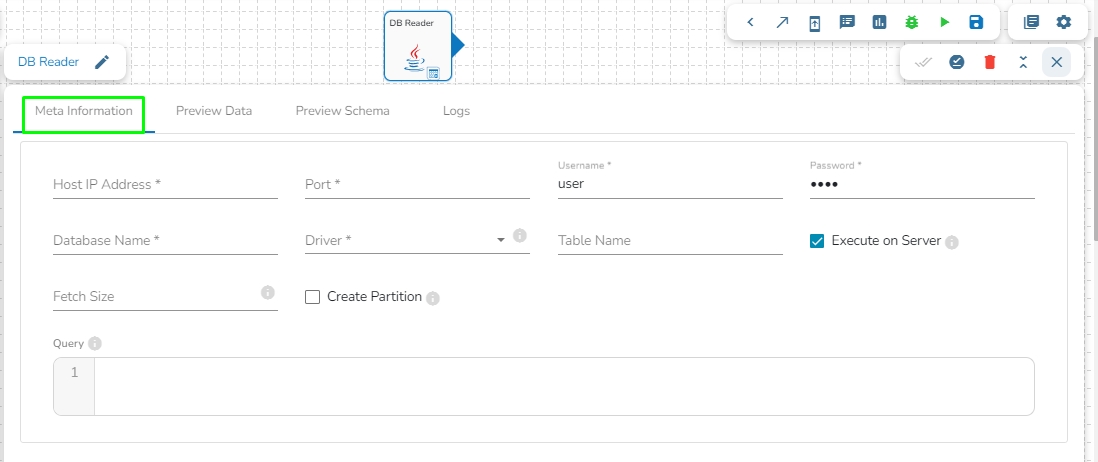

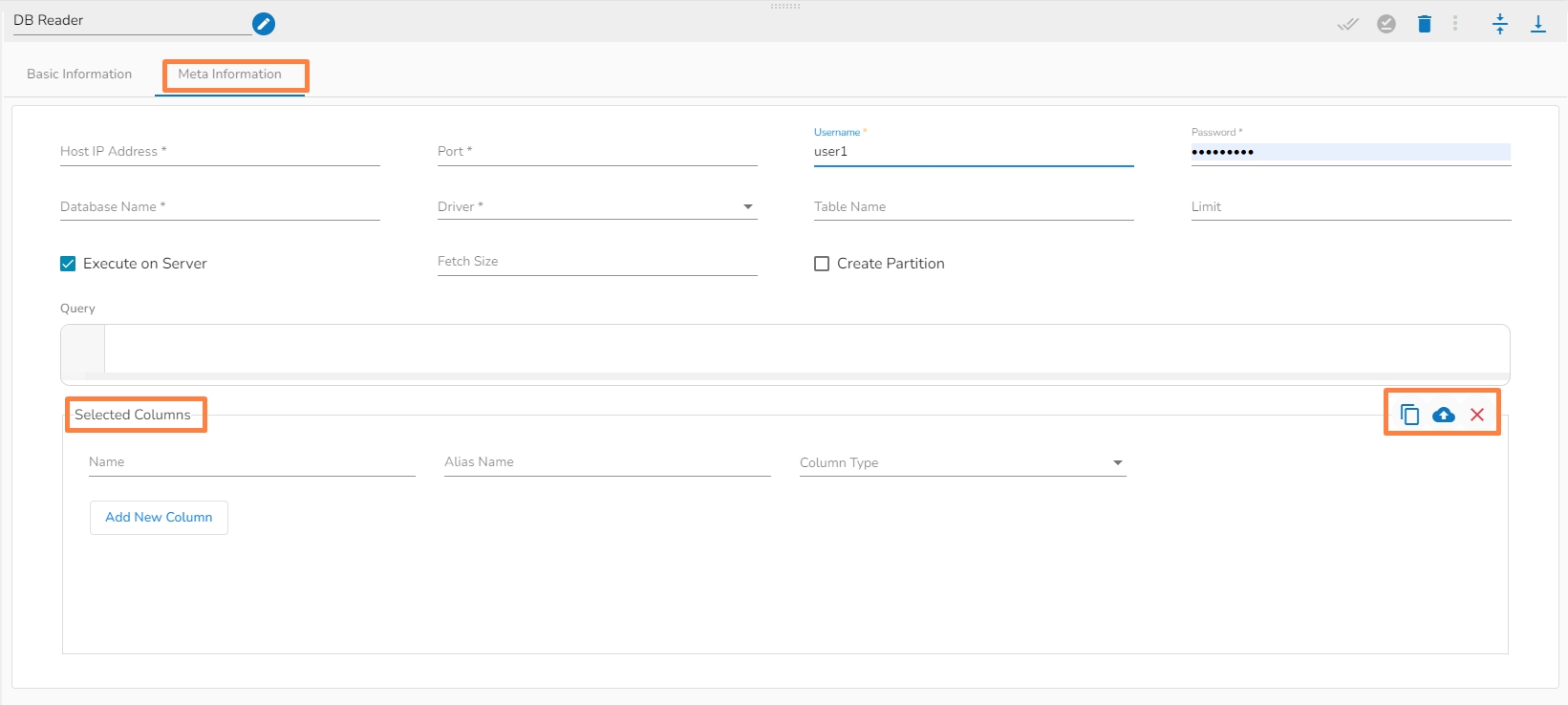

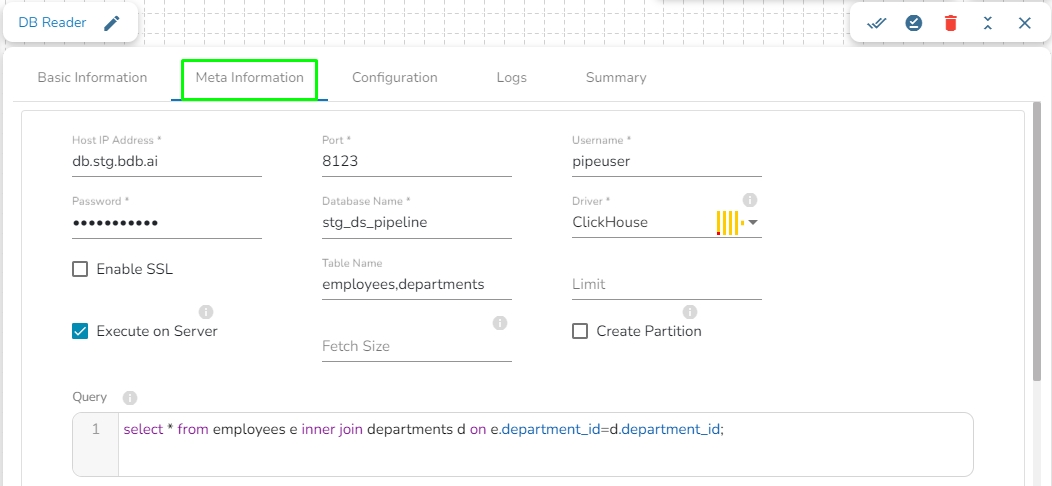

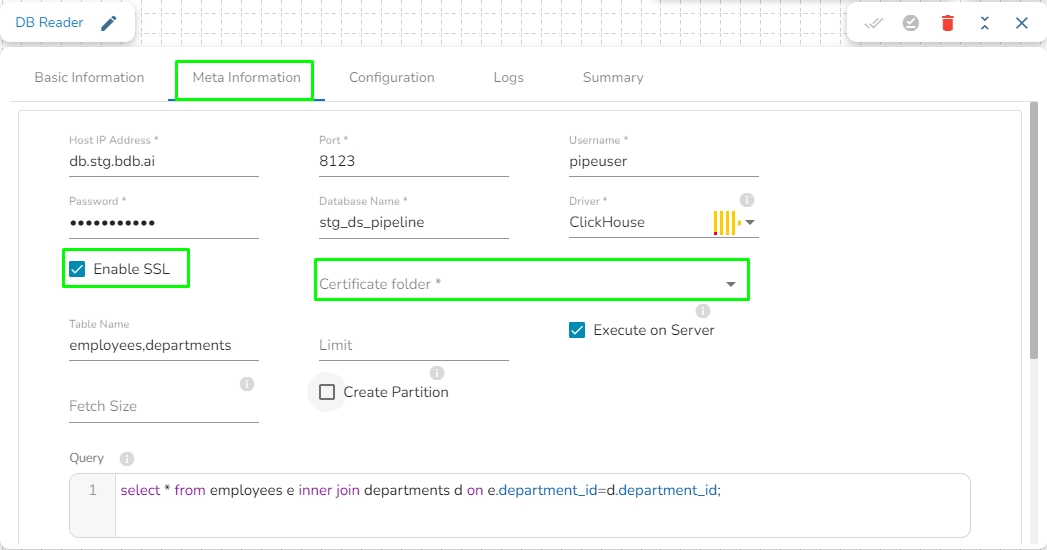

This task is used to read data from the following databases: MySQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, and Redshift.

Drag the DB reader task to the Workspace and click on it to open the related configuration tabs for the same. The Meta Information tab opens by default.

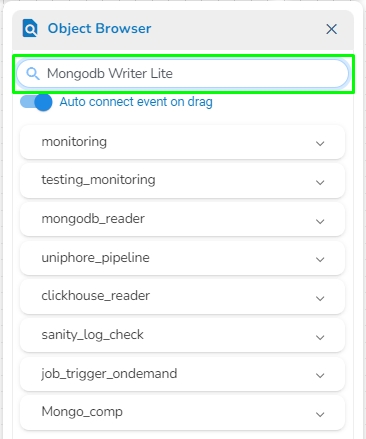

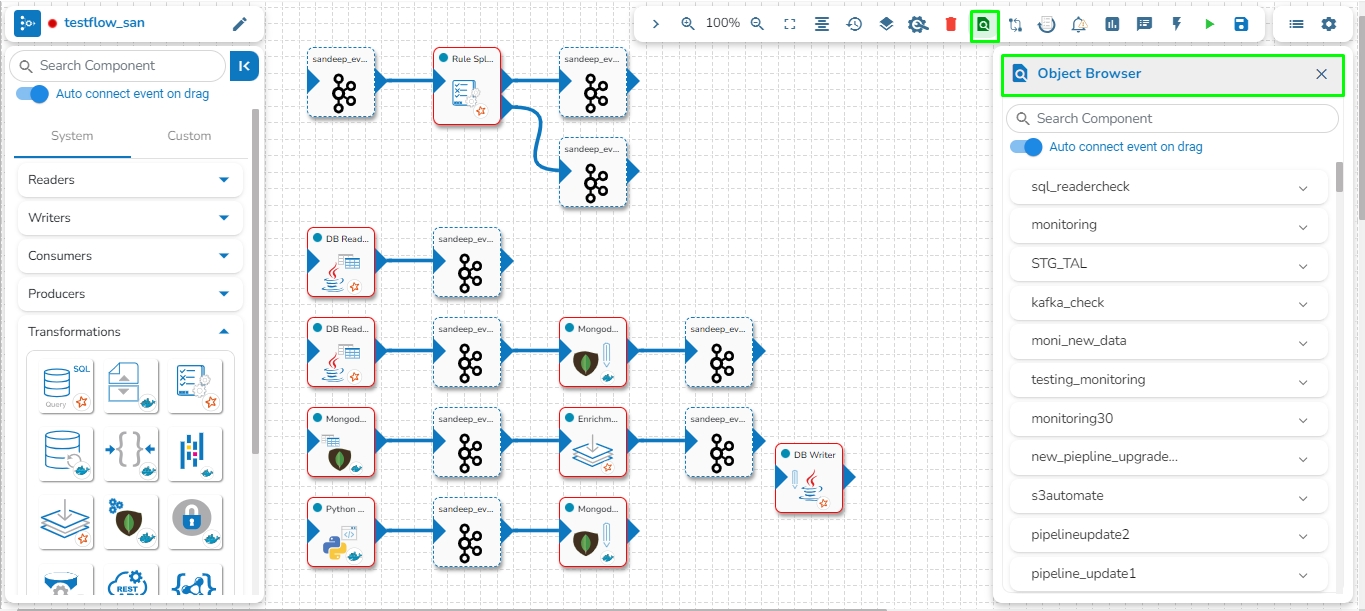

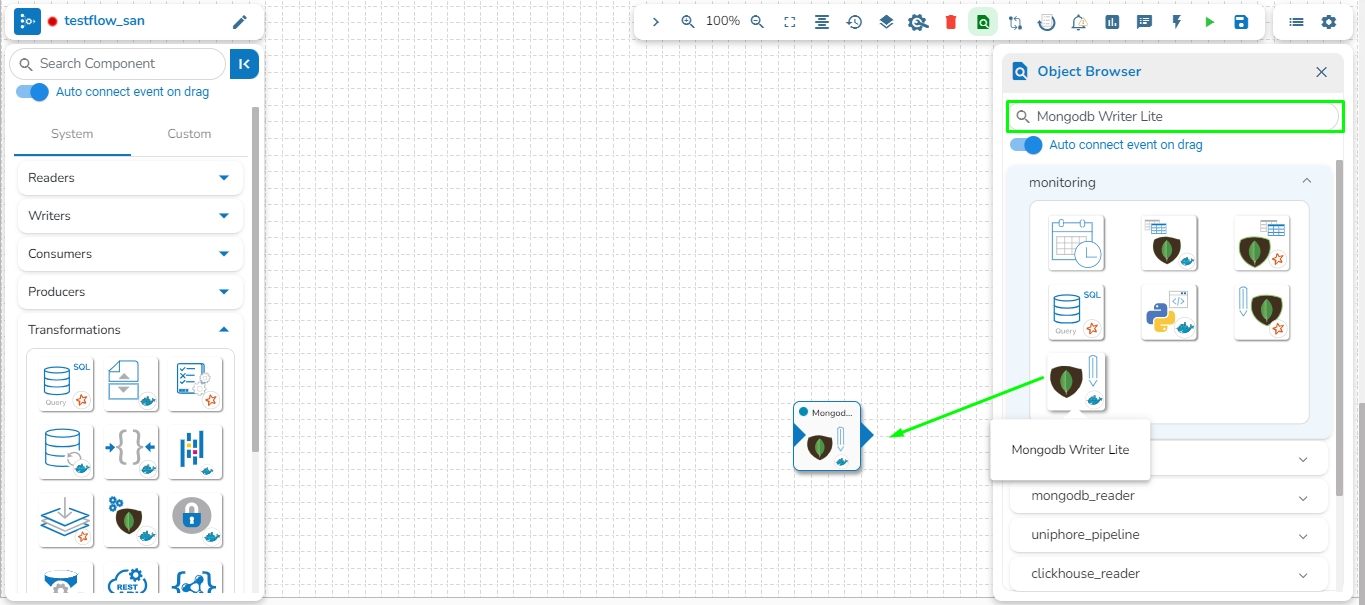

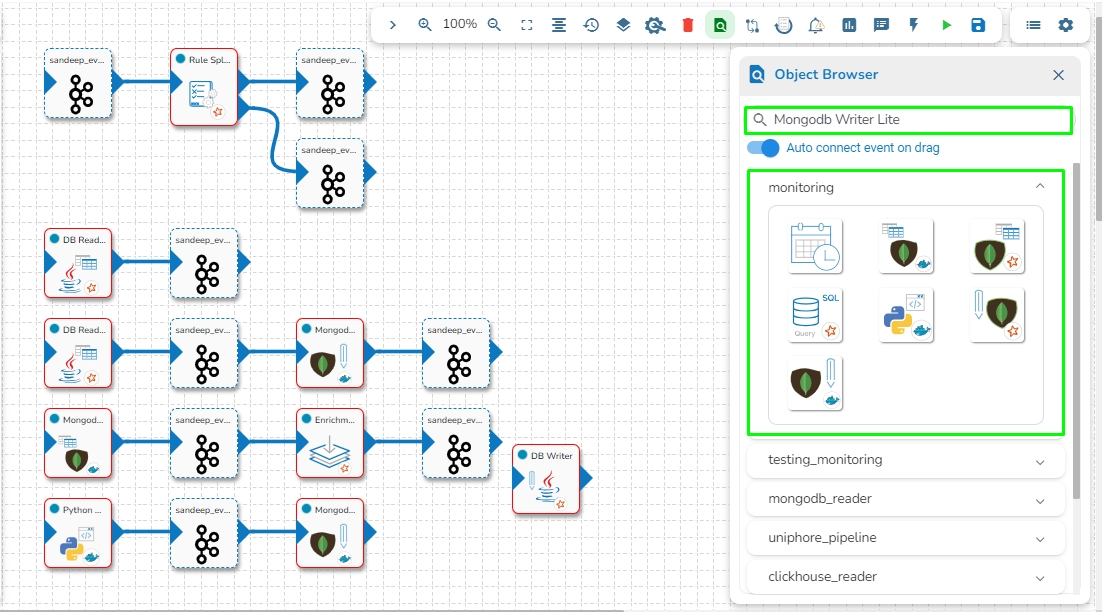

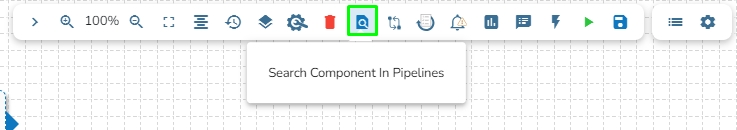

This feature helps the user to search a specific component across all the existing pipelines. The user can drag the required components to the pipeline editor to create a new pipeline workflow.

Click the Search Component in pipelines icon from the header panel of the Pipeline Editor.

The Object Browser window opens displaying all the existing pipeline workflows.

HDFS stands for Hadoop Distributed File System. It is a distributed file system designed to store and manage large data sets in a reliable, fault-tolerant, and scalable way. HDFS is a core component of the Apache Hadoop ecosystem and is used by many big data applications.

This task reads the file located in HDFS (Hadoop Distributed File System).

Drag the HDFS Reader task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

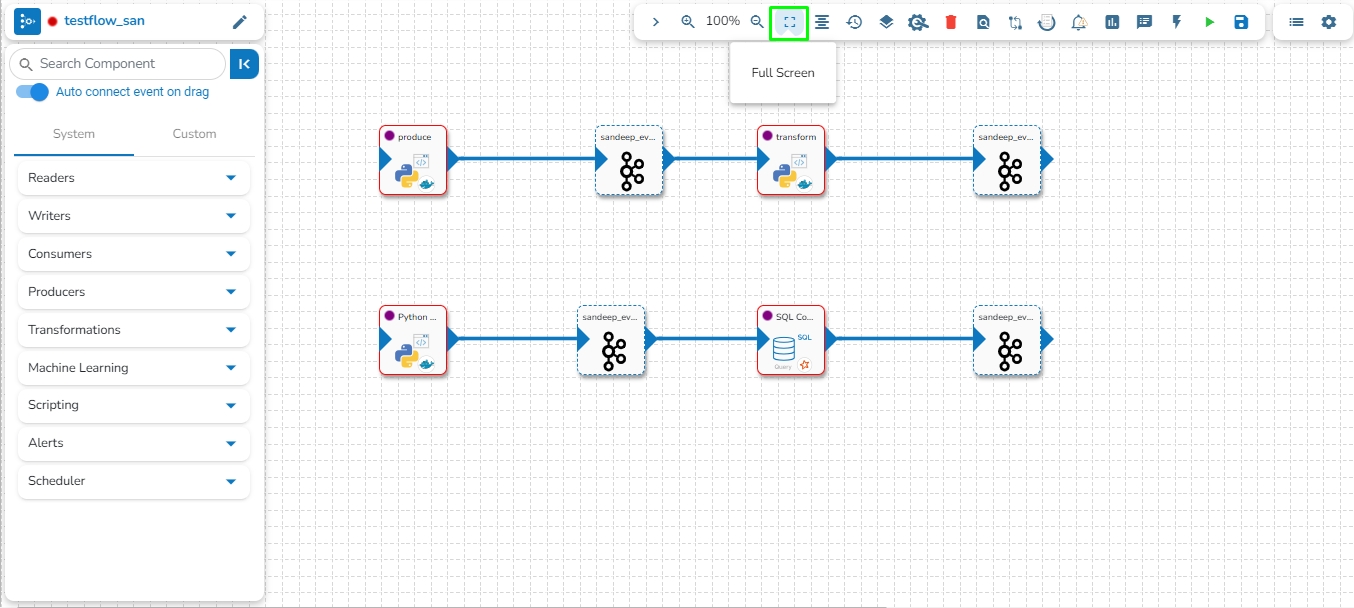

The Full Screen icon presents the Pipeline Editor page in the full screen.

Navigate to the Pipeline Workflow Editor page.

Click the Full Screen icon from the toolbar.

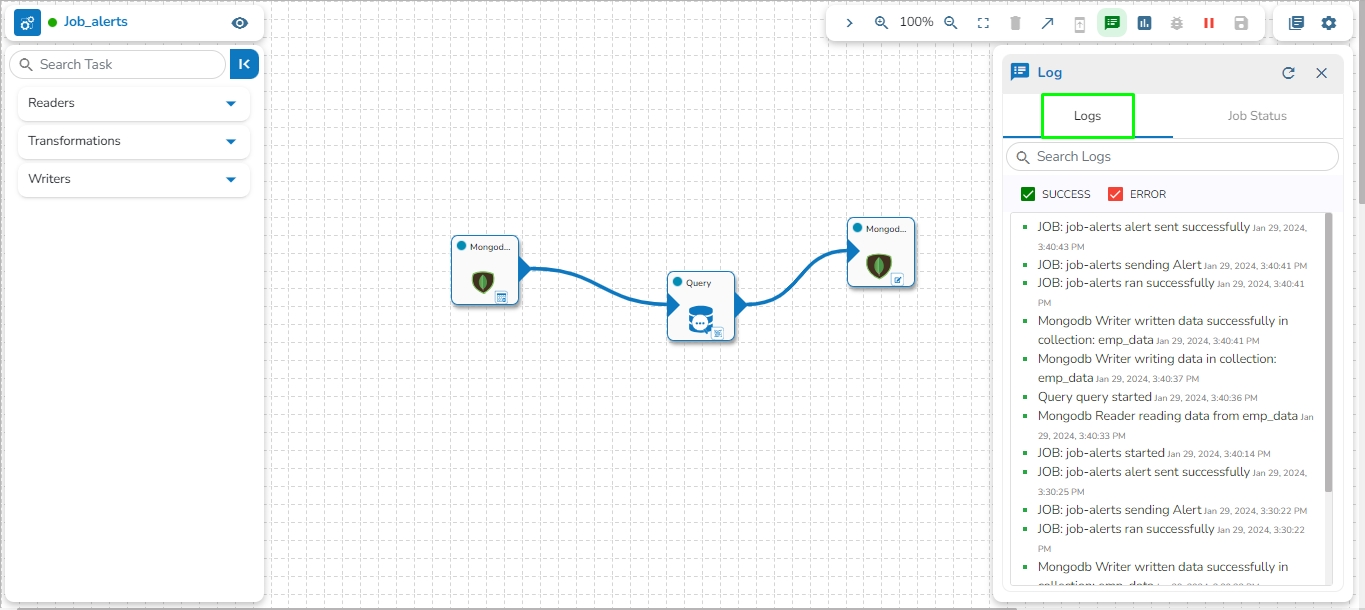

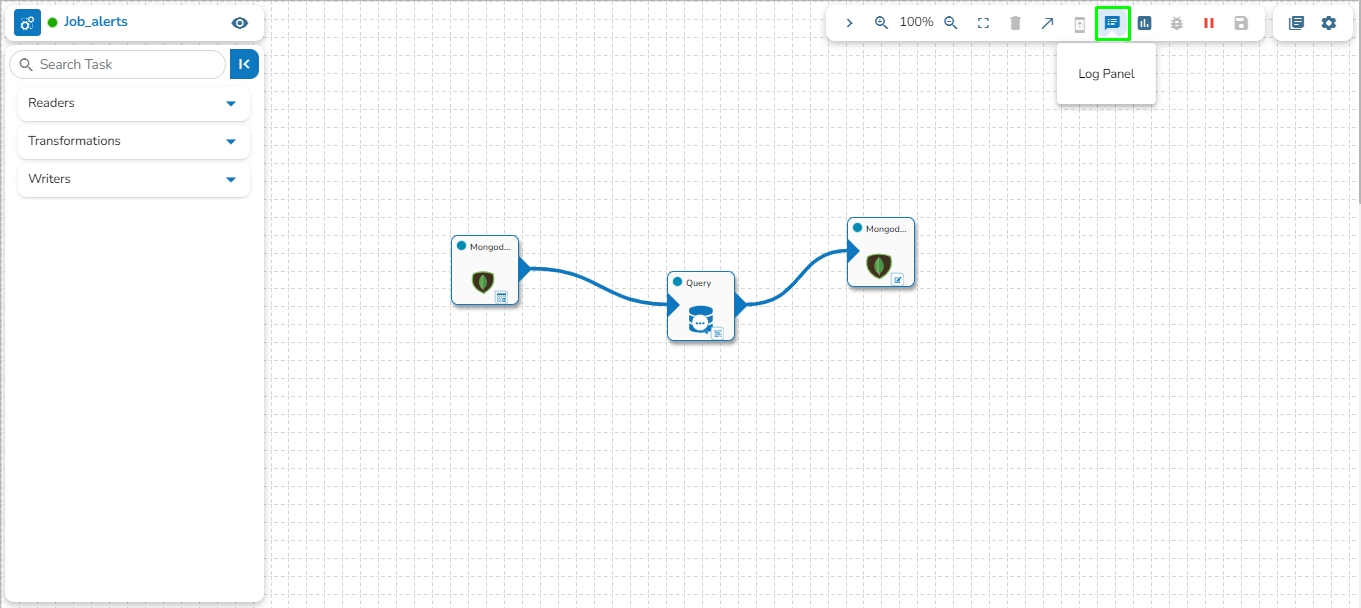

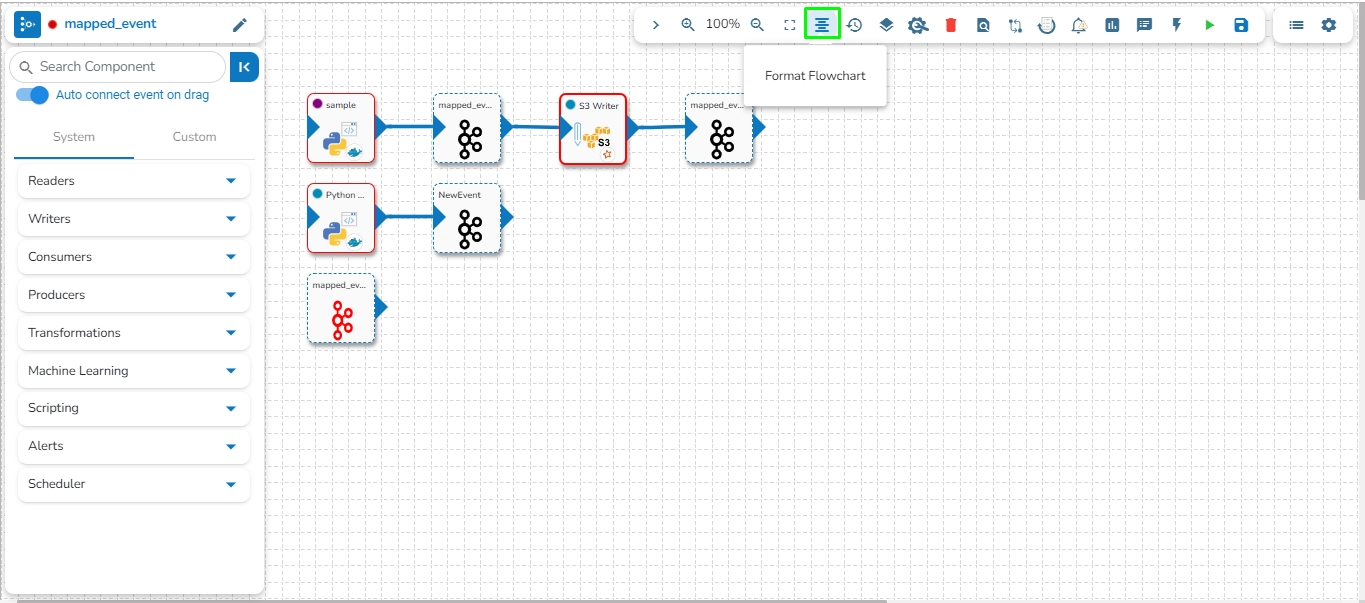

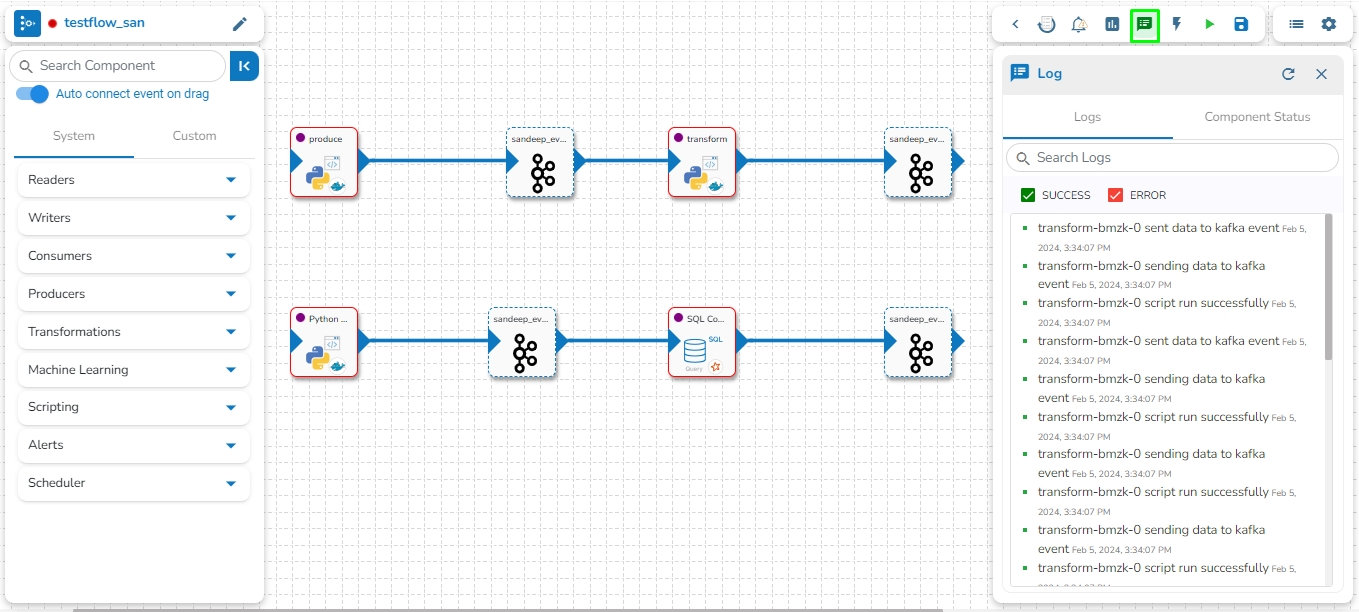

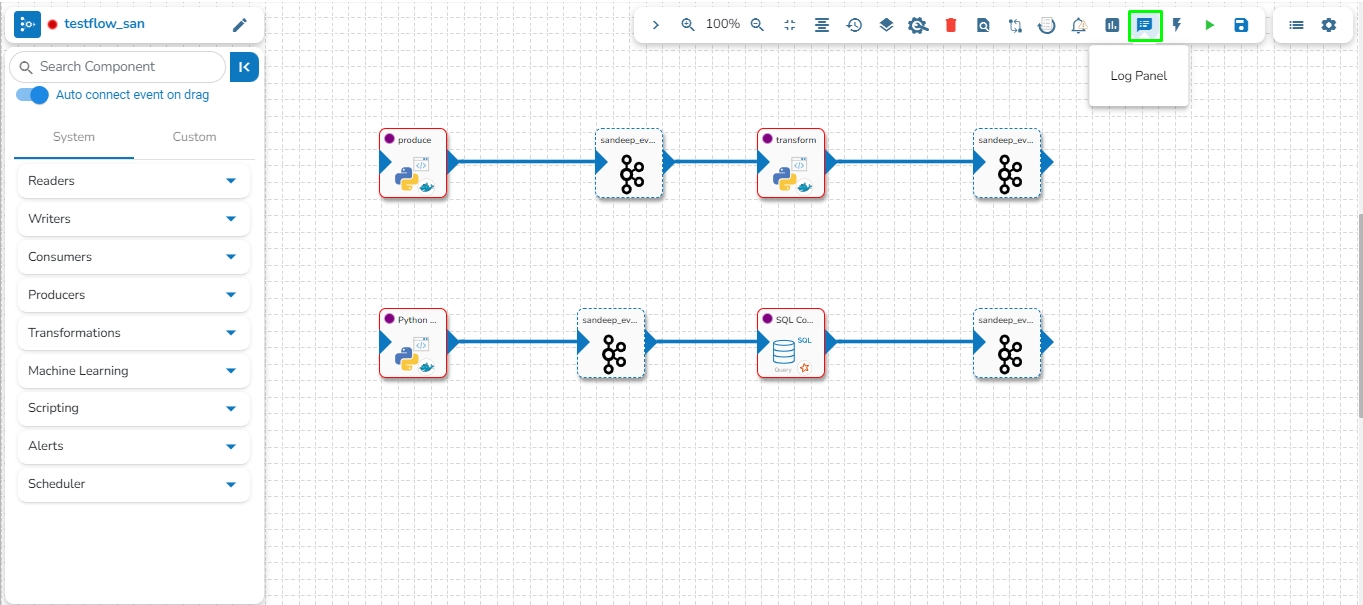

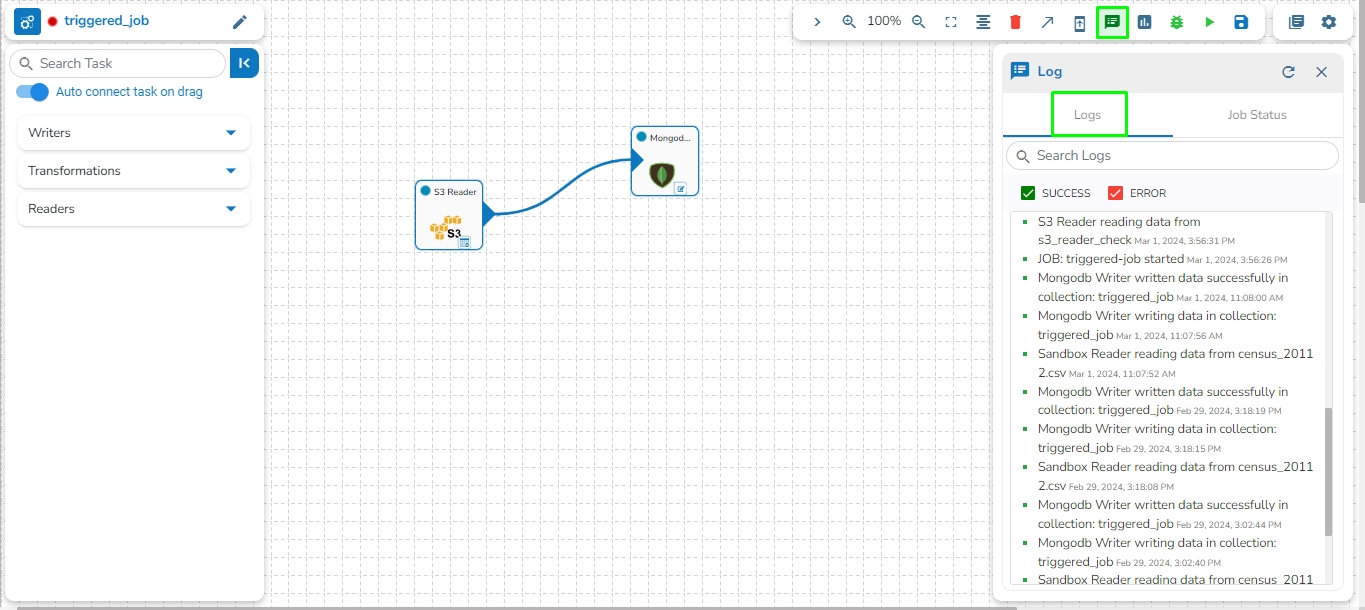

The Toggle Log Panel displays the Logs and Component Status tabs for the Pipeline/Job Workflows.

Navigate to the Pipeline Editor page.

Make sure the Pipeline is in the active state (Activate the Pipeline).

Arrange the components used in the Pipeline/Job Workflow.

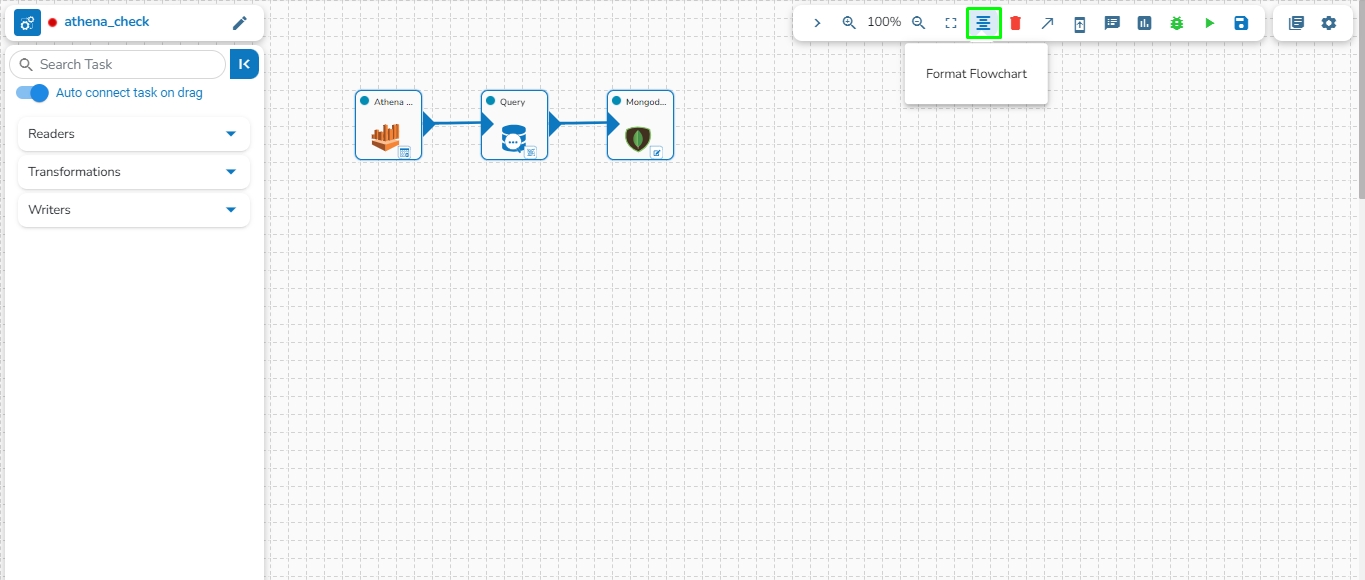

This feature enables users to arrange the components/tasks used in the Pipeline/Job in a formatted manner.

Please see the given video to understand the Format Flowchart option.

Follow these steps to format the flowchart in the pipeline:

Go to the pipeline toolbar and click on Show Options to expand the toolbar.

A way to scale up the processing speed of components.

A feature that automatically scales your component up to the maximum number of instances to reduce data processing lag. It detects when a component needs to scale up because of higher data traffic.

All components have the Intelligent Scaling option: the ability of the system to dynamically adjust the scale or capacity of the component based on current demand and available resources. It automatically optimizes the resources allocated to the component to ensure efficient and effective processing of tasks.

Low

Medium

High

Clicking a job type lists all the jobs of that type, along with the following options:

View: Redirects the user to the selected job workspace.

Monitor: Redirects the user to the monitoring page for the selected job.

Failed: Displays the number and total percentage of pipelines where failure has occurred.

Clicking a status lists all the pipelines in that category, along with the following options:

View: Redirects the user to the selected pipeline workspace.

Monitor: Redirects the user to the monitoring page for the selected pipeline.

Clicking a resource type lists all the pipelines of that resource type, along with the following options:

View: Redirects the user to the selected job workspace.

Monitor: Redirects the user to the monitoring page for the selected job.

On the pipeline overview page, components are listed by hierarchical level, denoted Level 0 and Level 1. A component at Level 1 depends on the data from the preceding component, which serves as its Parent Component. For components without dependencies (Level 0), the Parent Component is set to None, signifying no connection to any previous component in the pipeline.

The Data Pipeline handles both streaming and batch data seamlessly. It offers an extensive list of data processing components that help you automate the entire data workflow: ingestion, transformations, and running AI/ML models.
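The level rule described above (no parent means Level 0; a dependent component sits one level below its Parent Component) can be sketched in Python. This is a minimal illustration, not the product's code: the `component_levels` function and the component names are hypothetical, and extending the rule beyond Level 1 is an assumption.

```python
from typing import Dict, FrozenSet, Optional


def component_levels(parents: Dict[str, Optional[str]]) -> Dict[str, int]:
    """Return the hierarchy level of each component.

    `parents` maps each component name to its Parent Component,
    or to None when the component has no dependency (Level 0).
    """
    levels: Dict[str, int] = {}

    def level_of(name: str, visiting: FrozenSet[str]) -> int:
        if name in levels:
            return levels[name]
        if name in visiting:
            raise ValueError(f"cycle detected at component {name!r}")
        parent = parents.get(name)
        # No parent -> Level 0; otherwise one level below the parent.
        level = 0 if parent is None else level_of(parent, visiting | {name}) + 1
        levels[name] = level
        return level

    for component in parents:
        level_of(component, frozenset())
    return levels


# Hypothetical pipeline: a reader feeding a transform.
print(component_levels({"reader_1": None, "transform_1": "reader_1"}))
```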

Readers: A reader connects to your data repository, which could be a database, a file, or a SaaS application. Read Readers.

Connecting Components: Events, also called connecting components, carry the data pulled or received from your source to the next component. These Kafka-based messaging channels help to create a data flow. Read Connecting Components.

Writers: The databases or data warehouses to which the Pipelines load the data. Read Writers.

Transforms: A series of transformation components that help cleanse, enrich, and prepare data for smooth analytics. Read Transforms.

Producers: Components that produce/generate streaming data to external sources. Read Producers.

Machine Learning: The Model Runner components allow users to consume, in a pipeline, models created in the Python workspace of the Data Science Workbench or models saved from the Data Science Lab. Read Machine Learning.

Consumers: Real-time (streaming) components that ingest data, or monitor changes in data objects, from different sources into the pipeline. Read Consumers.

Alerts: These components notify users on various channels, such as Teams, Slack, and email, based on their preferences. Notifications can be delivered for success, failure, or other relevant events, depending on the user's requirements. Read Alerts.

Scripting: The Scripting components allow users to write custom scripts and integrate them into the pipeline as needed. Read Scripting.

Scheduler: The Scheduler component enables users to schedule their pipeline at a specific time according to their requirements.

Standard

SRV

Connection String

Port (*): Provide the Port number (It appears only with the Standard connection type).

Host IP Address (*): The IP address of the host.

Username (*): Provide a username.

Password (*): Provide a valid password to access MongoDB.

Database Name (*): Provide the name of the database where you wish to write data.

Additional Parameters: Provide details of the additional parameters.

Schema File Name: Upload Spark Schema file in JSON format.

Save Mode: Select the Save mode from the drop down.

Append: This operation adds the data to the collection.

Ignore: This operation skips inserting a record if a duplicate record already exists in the database, so the new record is not added and the database remains unchanged. Use Ignore when you want to prevent duplicate entries in a database.

Upsert: A combination of "update" and "insert". It updates a record if it already exists in the database, or inserts it as a new record if it does not.
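To make the difference between the three save modes concrete, here is a minimal in-memory sketch in Python. It is not how the MongoDB Writer is implemented: a list stands in for a MongoDB collection, and matching duplicates on the `_id` field (MongoDB's primary key) is an assumption, since this section does not say which field identifies a duplicate. The `write_records` function is hypothetical.

```python
from typing import Dict, List

Record = Dict[str, object]


def write_records(collection: List[Record], records: List[Record],
                  mode: str, key: str = "_id") -> List[Record]:
    """Apply `records` to an in-memory collection using a save mode."""
    # Position of each existing record, looked up by its key.
    index = {doc[key]: i for i, doc in enumerate(collection) if key in doc}
    for rec in records:
        if mode == "append":
            # Always add the record, even if the key already exists.
            collection.append(dict(rec))
        elif mode == "ignore":
            # Skip the record if a record with the same key exists.
            if rec[key] not in index:
                index[rec[key]] = len(collection)
                collection.append(dict(rec))
        elif mode == "upsert":
            if rec[key] in index:
                # Update the existing record with the new field values.
                collection[index[rec[key]]].update(rec)
            else:
                # Insert it as a new record.
                index[rec[key]] = len(collection)
                collection.append(dict(rec))
        else:
            raise ValueError(f"unknown save mode: {mode!r}")
    return collection


existing = [{"_id": 1, "city": "Pune"}]
incoming = [{"_id": 1, "city": "Mumbai"}, {"_id": 2, "city": "Delhi"}]
for mode in ("append", "ignore", "upsert"):
    # Copy the existing records so each mode starts from the same state.
    print(mode, write_records([dict(d) for d in existing], incoming, mode))
```

Append keeps both versions of `_id` 1, Ignore keeps the original "Pune" record, and Upsert replaces it with "Mumbai"; all three add the new `_id` 2 record.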

Port: Enter the port for the given IP Address.

Database name: Enter the Database name.

Table name: Provide one or more table names. If you give multiple table names, separate them with commas (,).

User name: Enter the user name for the provided database.

Password: Enter the password for the provided database.

Driver: Select the driver from the drop-down. Seven drivers are supported: MySQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, and Redshift.

Fetch Size: Provide the maximum number of records to be processed in one execution cycle.

Create Partition: This option is used for performance enhancement; it creates a sequence of indexes. Once this option is selected, the operation is not executed on the server.

Partition By: This option appears once the Create Partition option is enabled. It has two options:

Auto Increment: The number of partitions will be incremented automatically.

Index: The number of partitions will be incremented based on the specified Partition column.

Query: Enter the Spark SQL query for the given table(s) in this field. Refer to the image below for querying multiple tables.

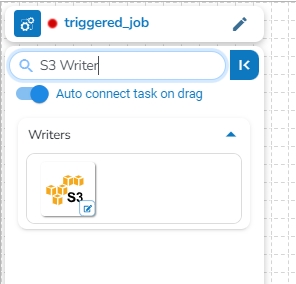

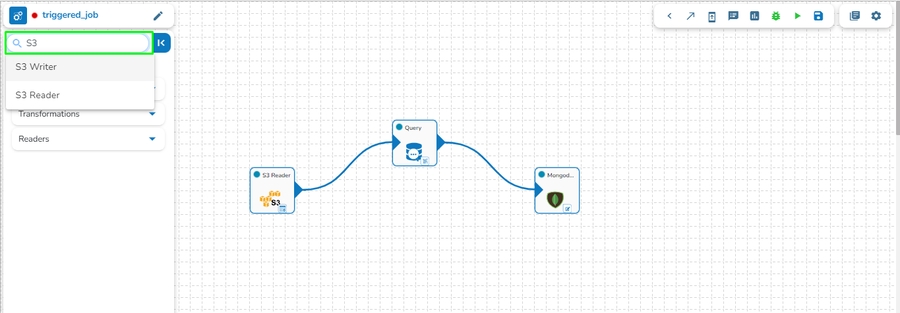

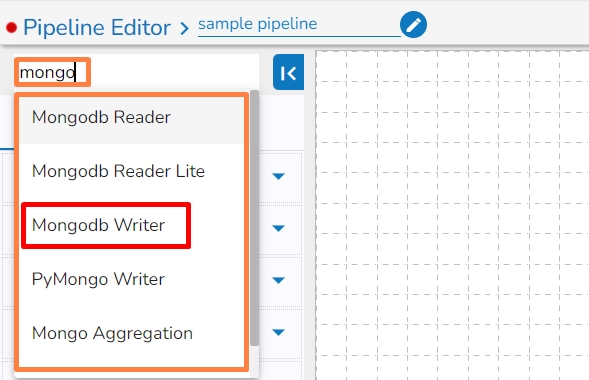

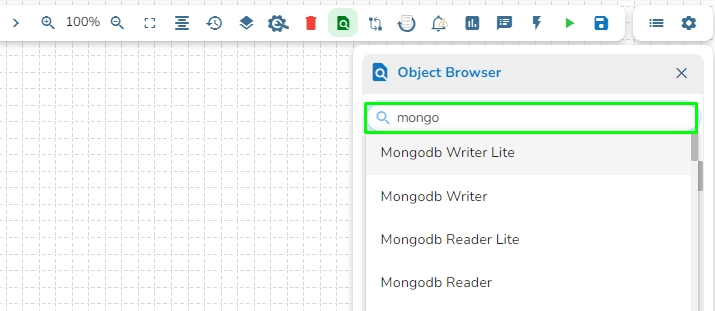
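The Index option of Partition By resembles how Spark's JDBC data source splits a read by a numeric partition column (its `partitionColumn`, `lowerBound`, `upperBound`, and `numPartitions` options). Whether the DB Reader uses that mechanism is an assumption. The Python sketch below is a simplified illustration of the idea, not Spark's exact algorithm: it turns a column range into one `WHERE` clause per partition so each partition can be read in parallel. The function name and arguments are hypothetical.

```python
from typing import List


def partition_predicates(column: str, lower: int, upper: int,
                         num_partitions: int) -> List[str]:
    """Split the range [lower, upper) of `column` into one WHERE clause per partition."""
    if num_partitions < 1 or upper <= lower:
        raise ValueError("need num_partitions >= 1 and upper > lower")
    # Never create more partitions than there are values in the range.
    num_partitions = min(num_partitions, upper - lower)
    stride = (upper - lower) // num_partitions
    # Inner boundaries between consecutive partitions.
    bounds = [lower + i * stride for i in range(1, num_partitions)]
    if not bounds:
        return ["1=1"]  # a single partition reads every row
    # The first and last partitions are open-ended, so rows outside
    # [lower, upper) and NULLs are still read, only less evenly spread.
    predicates = [f"{column} < {bounds[0]} OR {column} IS NULL"]
    predicates += [f"{column} >= {lo} AND {column} < {hi}"
                   for lo, hi in zip(bounds, bounds[1:])]
    predicates.append(f"{column} >= {bounds[-1]}")
    return predicates


for clause in partition_predicates("order_id", 0, 100, 4):
    print(clause)
```

The bounds only decide how the range is divided; they do not filter rows out of the read.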

The user can search a component using the Search Component space.

The user gets prompt suggestions while searching for a component.

Once the component name is entered, the pipeline workflows containing the searched component get listed below.

The user can click the expand/collapse icon to expand the component panel for the selected pipeline.

The user can drag a searched component from the Object Browser and drop it onto the Pipeline Editor canvas.

Host IP Address: Enter the host IP address for HDFS.

Port: Enter the Port.

Zone: Enter the Zone for HDFS. Zone is a special directory whose contents will be transparently encrypted upon write and transparently decrypted upon read.

File Type: Select the File Type from the drop down. The supported file types are:

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to infer the actual data types of the columns in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Enable the Multiline option if JSON records in the file span multiple lines.

PARQUET: No extra field is displayed when PARQUET is the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select either the Deflate or the Snappy option.

Compression Level: This field appears for the Deflate compression option. It provides levels 0 to 9 via a drop-down menu.

XML: Select this option to read an XML file. If this option is selected, the following fields are displayed:

Infer schema: Enable this option to infer the actual data types of the columns.

Path: Provide the path of the file.

Path: Provide the path of the file.

Partition Columns: Provide a unique Key column name to partition data in Spark.
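The JSON Multiline switch mirrors a distinction Spark's JSON reader makes: by default it expects JSON Lines (one complete object per line), and its `multiLine` option parses records that span several lines. The Python sketch below uses only the standard `json` module to show the two layouts; the `read_json_records` function is hypothetical and only illustrates why the switch matters.

```python
import json
from typing import Any, List


def read_json_records(text: str, multiline: bool) -> List[Any]:
    """Parse JSON records in either layout."""
    if multiline:
        # One JSON document (an object or an array of objects)
        # that may span many lines.
        doc = json.loads(text)
        return doc if isinstance(doc, list) else [doc]
    # JSON Lines: one complete JSON object per non-empty line.
    return [json.loads(line) for line in text.splitlines() if line.strip()]


json_lines = '{"id": 1, "city": "Pune"}\n{"id": 2, "city": "Delhi"}\n'
pretty_json = """[
  {"id": 1, "city": "Pune"},
  {"id": 2, "city": "Delhi"}
]"""

print(read_json_records(json_lines, multiline=False))
print(read_json_records(pretty_json, multiline=True))
```

Reading `pretty_json` with `multiline=False` fails on its first line (`[`), which is the kind of error the Multiline option avoids.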

The Pipeline Workflow Editor opens in full screen, and the icon changes to indicate full-screen mode.

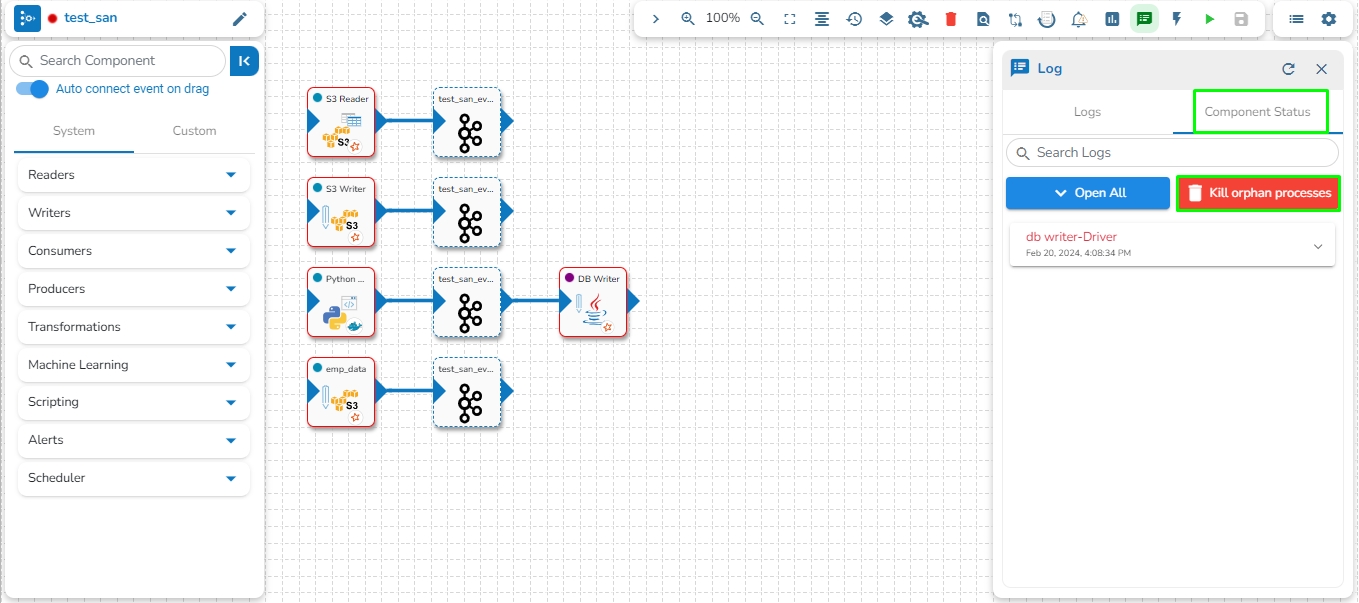

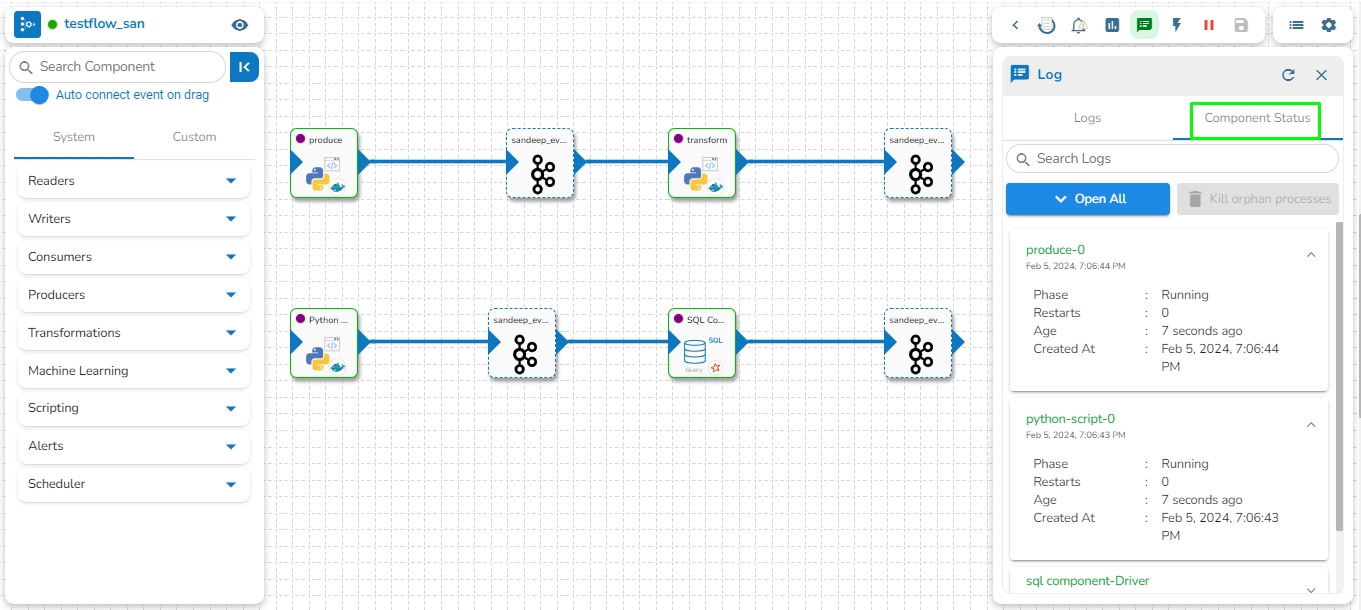

The Log panel opens, displaying the collective component logs of the pipeline/Job under the Logs tab.

Select the Component Status tab from the Log panel to display the status of the component containers. Select the Open All option to list all the components.

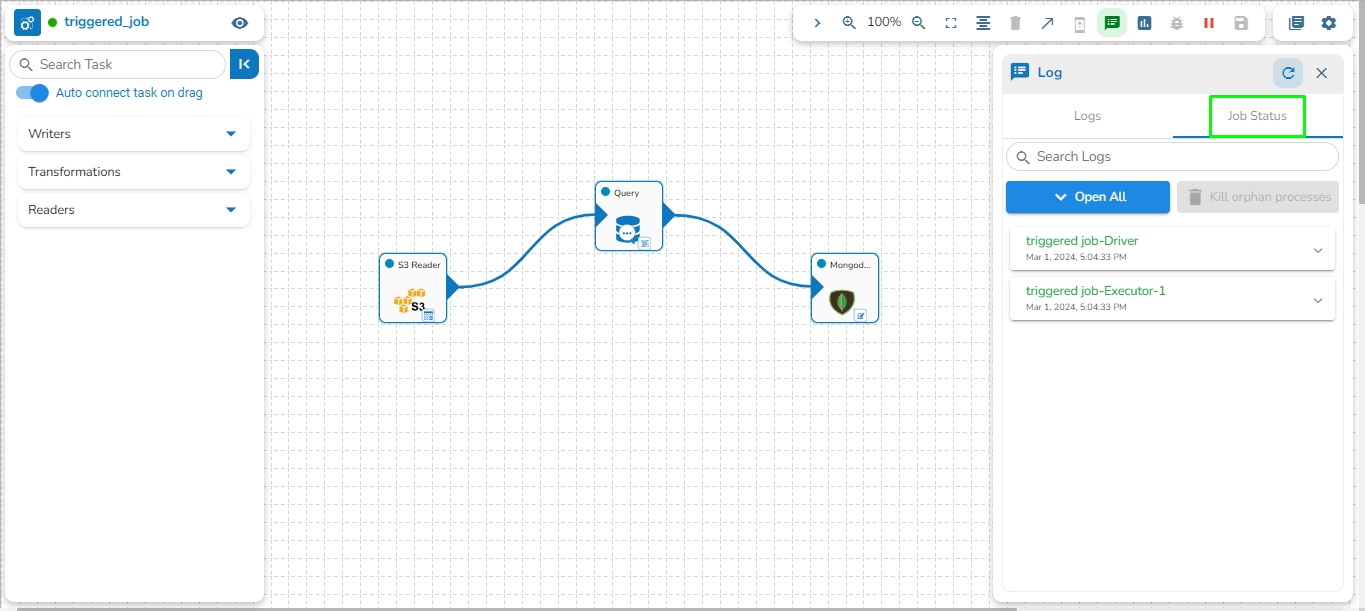

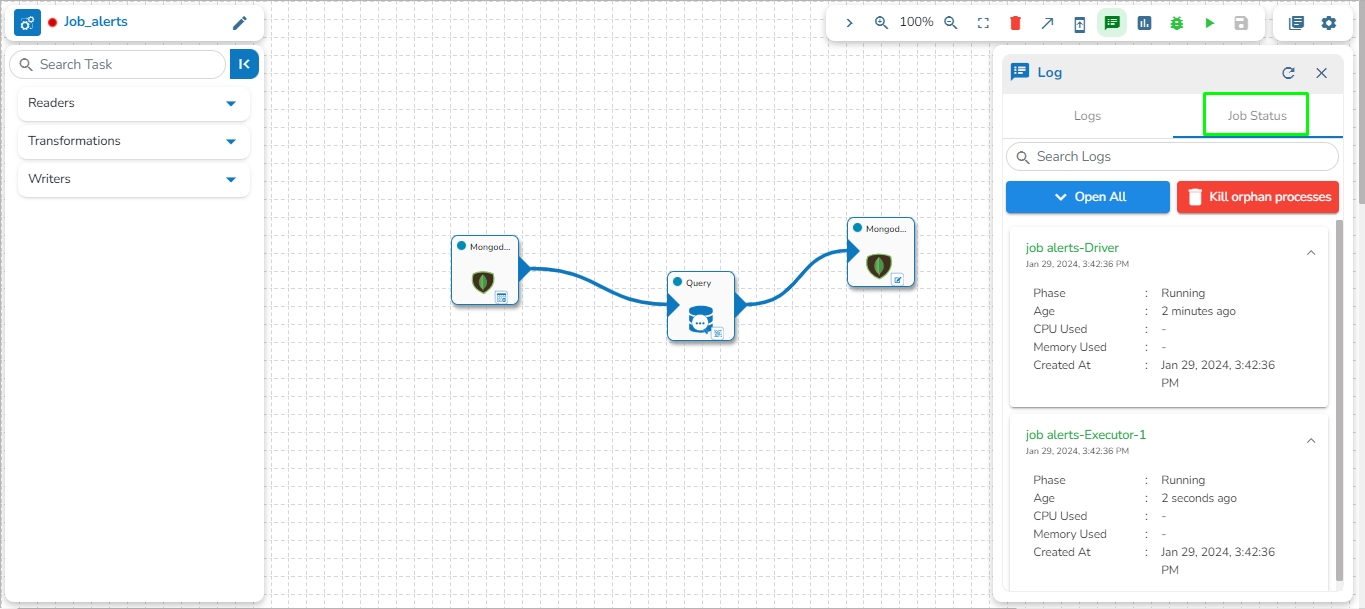

Select the Job Status tab from the Log panel to display the status of the pod of the selected Job.

This feature kills all Orphan Pods associated with any component in the pipeline/Job if they persist after deactivation. Orphan processes are processes that remain active in the backend even after the pipeline is deactivated.

The user can access the Kill Orphan Processes option under the Component Status tab of the Log Panel.

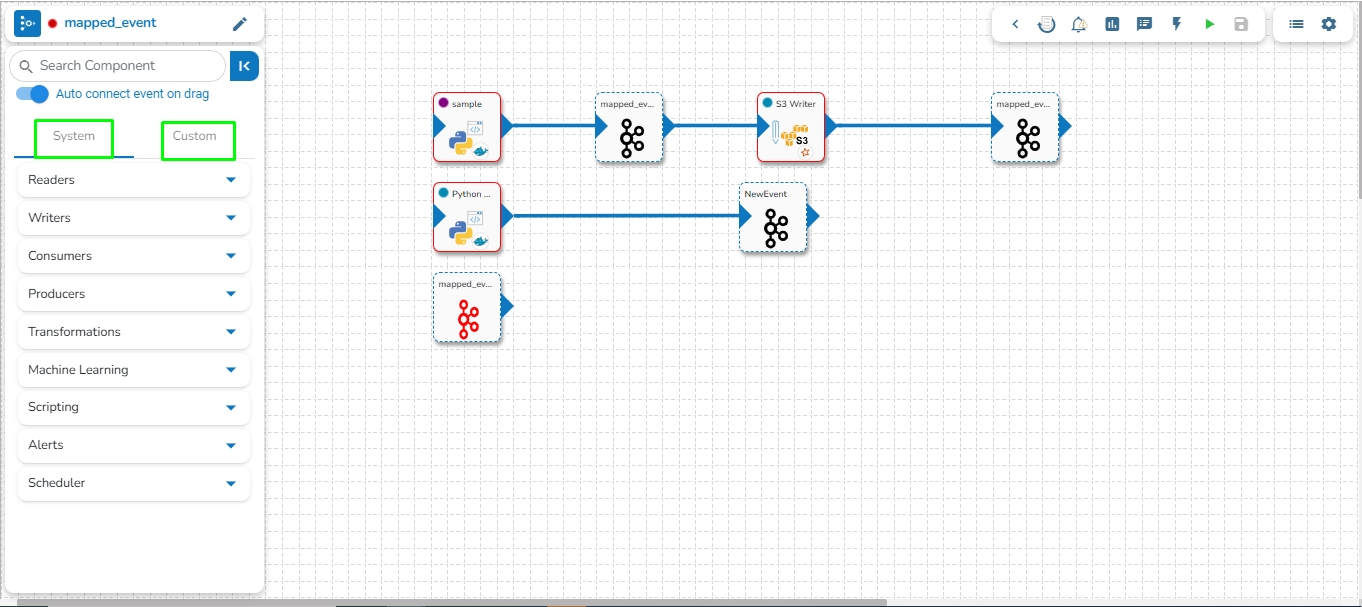

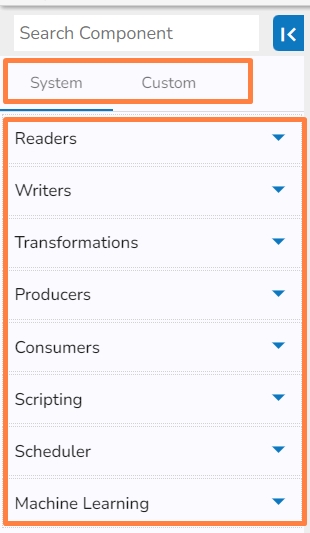

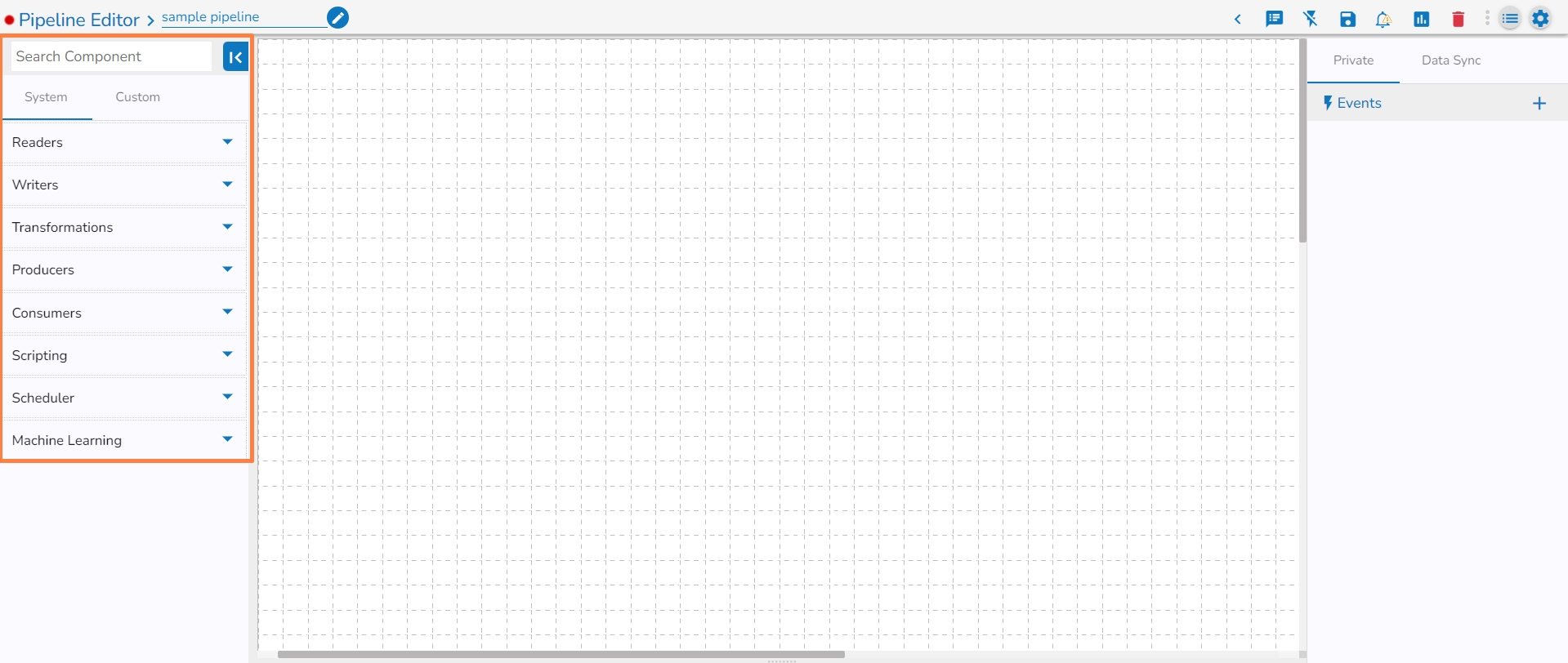

The Component Panel displays a categorized list of all the pipeline components. The components are mainly divided into two groups:

System

Custom

System Components- The pre-designed pipeline components are listed under the System tab.

Custom Components- The Custom tab lists all the customized pipeline components created by the user.

Components are grouped by component type. For example, all the reader components are provided under the Reader menu tab of the Component Pallet.

A search bar is provided to search across the 50+ components. It helps you find a specific component by entering its name.

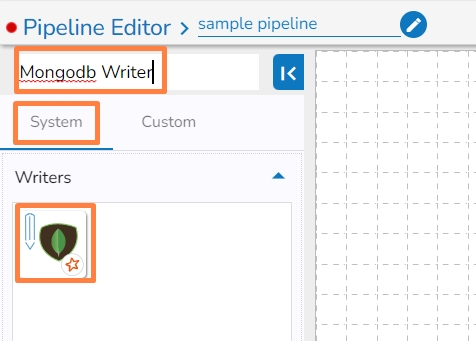

Navigate to the Search bar provided in the Component Pallet.

Type the component name in the search bar.

The user gets prompt suggestions for the searched components. For example, typing hd lists the HDFS Reader and HDFS Writer components.

Select an option from the prompted choices.

The searched component appears under the System tab (e.g., the HDFS Writer component, as displayed in the image below).

Click on the Format Flowchart option to arrange the pipeline in the formatted way.

Please Note:

If you have selected the Intelligent Scaling option, make sure to give the component enough resources so that it can auto-scale based on the load.

Components scale up if the lag exceeds 60%, and component pods automatically scale down if the lag drops below 10%. These lag percentages are configurable.
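The scale-up and scale-down thresholds form a band that keeps the component from flapping between instance counts. The Python sketch below captures that rule under stated assumptions: the function name is hypothetical, the lag is taken as a ready-made percentage, and adding or removing one instance per check is an assumption, since the section does not say how many instances are added at a time.

```python
def next_instance_count(current: int, lag_percent: float,
                        min_instances: int, max_instances: int,
                        scale_up_at: float = 60.0,
                        scale_down_at: float = 10.0) -> int:
    """Return the instance count to use after checking the current lag.

    The thresholds default to the 60% / 10% values described above and can
    be overridden, since the lag percentage is configurable.
    """
    if lag_percent > scale_up_at:
        # Heavy lag: add an instance, but never exceed the configured maximum.
        return min(current + 1, max_instances)
    if lag_percent < scale_down_at:
        # Lag has cleared: remove an instance, but keep the configured minimum.
        return max(current - 1, min_instances)
    # Between the thresholds: keep the current count.
    return current


print(next_instance_count(current=2, lag_percent=75.0, min_instances=1, max_instances=4))
```

The maximum instance count is also why the note above asks for enough resources: the component can only scale up to what its configuration allows.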

Adding components to a pipeline workflow.

Check out the walk-through below to add components to the Pipeline Workflow editor canvas.

The Component Pallet is situated on the left side of the User Interface on the Pipeline Workflow. It has the System and Custom components tabs listing the various components.

The System components are displayed in the below given image:

Once the Pipeline gets saved in the pipeline list, the user can add components to the canvas. The user can drag the required components to the canvas and configure it to create a Pipeline workflow or Data flow.

Navigate to the existing data pipeline from the List Pipelines page.

Click the View icon for the pipeline.

The Pipeline Editor opens for the selected pipeline.

Drag and drop the new required components or make changes in the existing component’s meta information or change the component configuration (E.g., the DB Reader is dragged to the workspace in the below-given image).

Once dragged and dropped to the pipeline workspace, components can be directly connected to the nearest Kafka Event. To enable the auto-connect feature, the user needs to ensure that the Auto connect event on drag option is enabled, which is the default setting.

Click on the dragged component and configure the Basic Information tab, which opens by default.

Open the Meta Information tab, which is next to the Basic Information tab, and configure it.

Make sure to click the Save Component in Storage icon to update the component details and the pipeline so that they reflect the recent changes. The user can drag and configure other required components to create the Pipeline Workflow.

Click the Update Pipeline icon to save the changes.

A success message appears to confirm that the pipeline has been successfully updated.

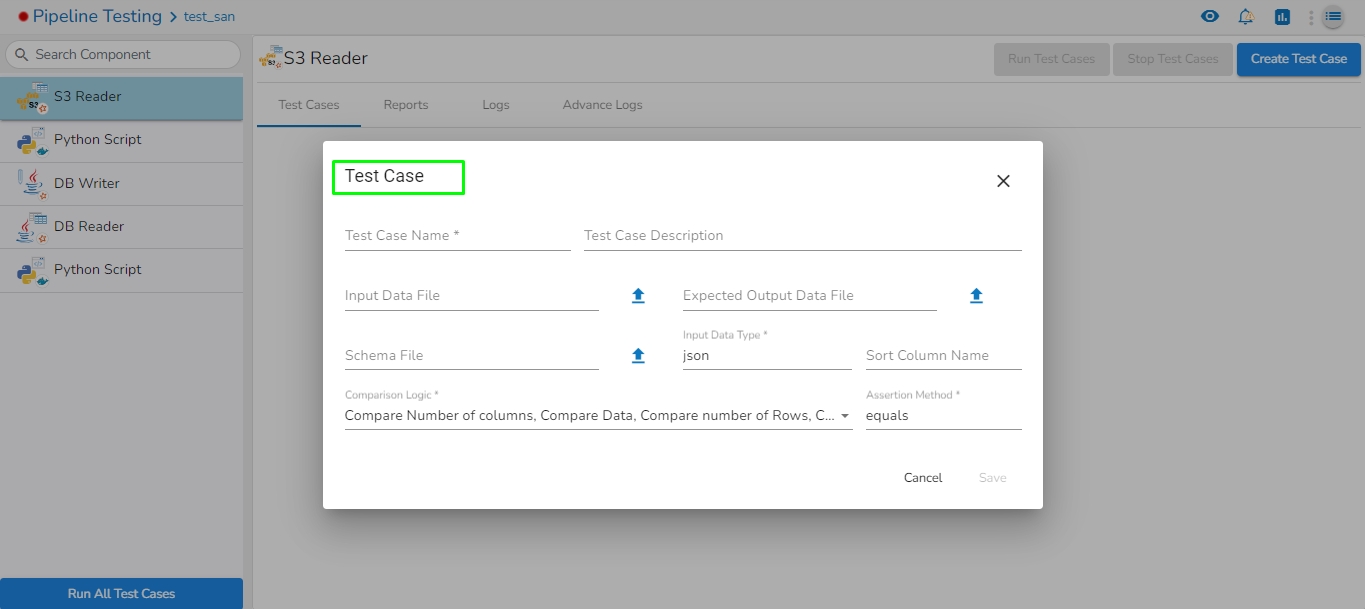

This task reads the file from Amazon S3 bucket.

Follow the steps below to configure the meta information of the S3 Reader task:

Drag the S3 Reader task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Bucket Name (*): Enter S3 bucket name.

Region (*): Provide the S3 region.

Access Key (*): Access key shared by AWS to log in.

Secret Key (*): Secret key shared by AWS to log in.

Table (*): Mention the table or object name to be read.

File Type (*): Select a file type from the drop-down menu (CSV, JSON, PARQUET, AVRO, and XML are the supported file types).

Limit: Set a limit for the number of records.

Query: Insert an SQL query (queries containing a join statement are supported as well).

Access Key (*): Access key shared by AWS to log in.

Secret Key (*): Secret key shared by AWS to log in.

Table (*): Mention the table or object name to be read.

File Type (*):

Partition Columns: Provide a unique Key column name to partition data in Spark.
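The S3 Reader's Query field accepts join statements. As a self-contained illustration, the Python sketch below runs a join over two hypothetical tables with the standard `sqlite3` module. SQLite is only a stand-in here; the reader's own SQL engine may differ in dialect, although this query uses only standard SQL.

```python
import sqlite3

# Hypothetical tables standing in for the objects the reader exposes.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 15.0), (12, 2, 40.0);
""")

# A join plus aggregation of the kind the Query field accepts.
query = """
    SELECT c.name, SUM(o.amount) AS total_amount
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
"""
rows = conn.execute(query).fetchall()
conn.close()
print(rows)
```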

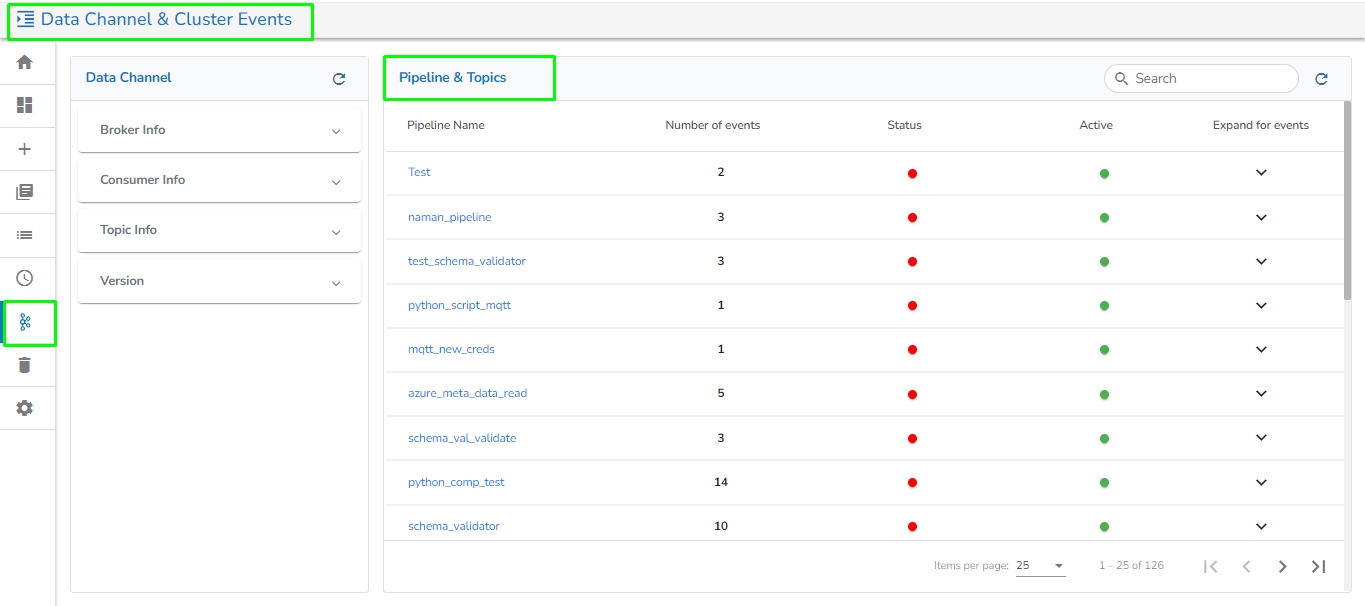

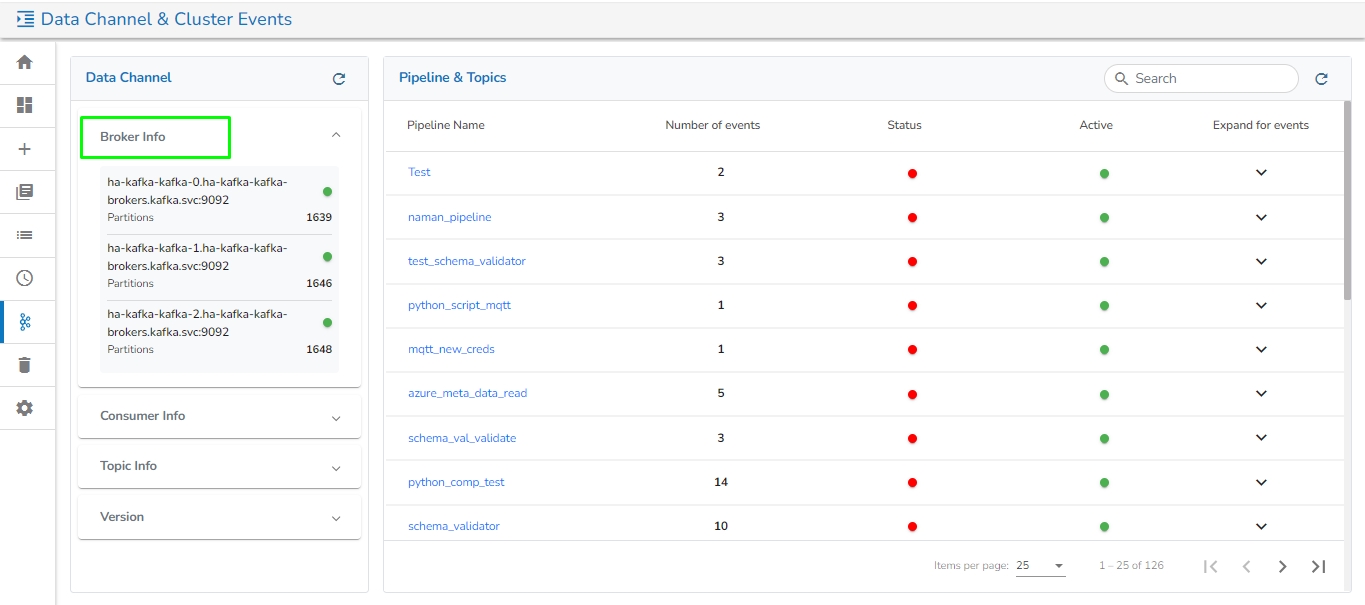

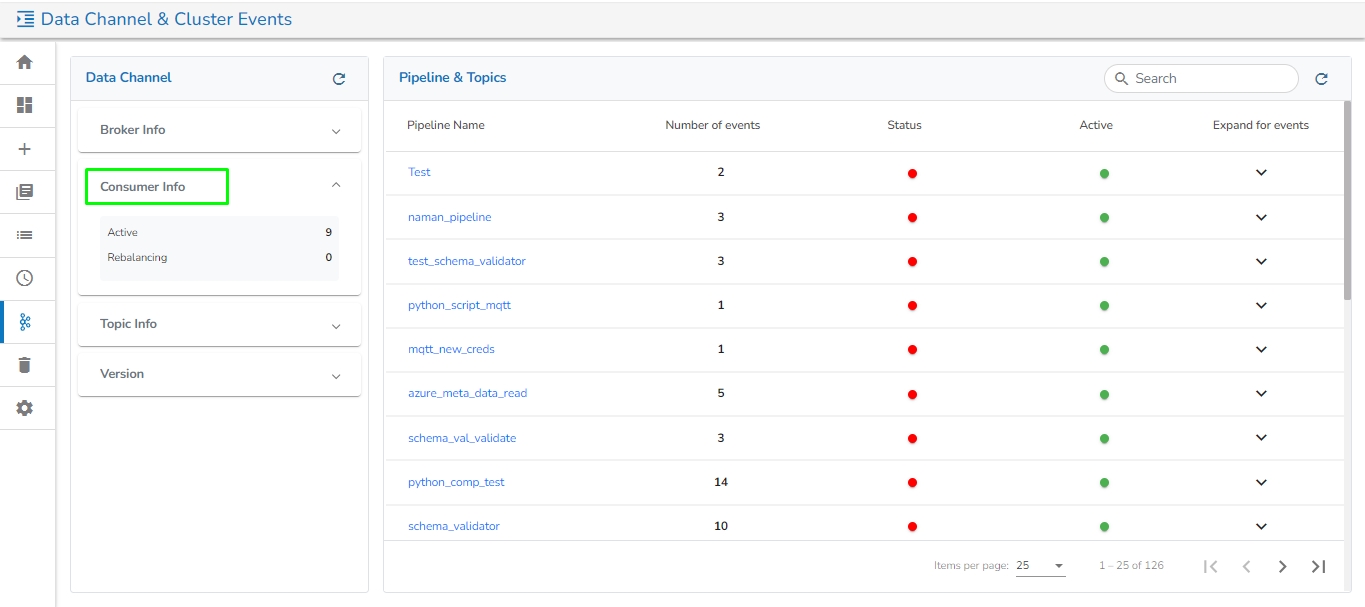

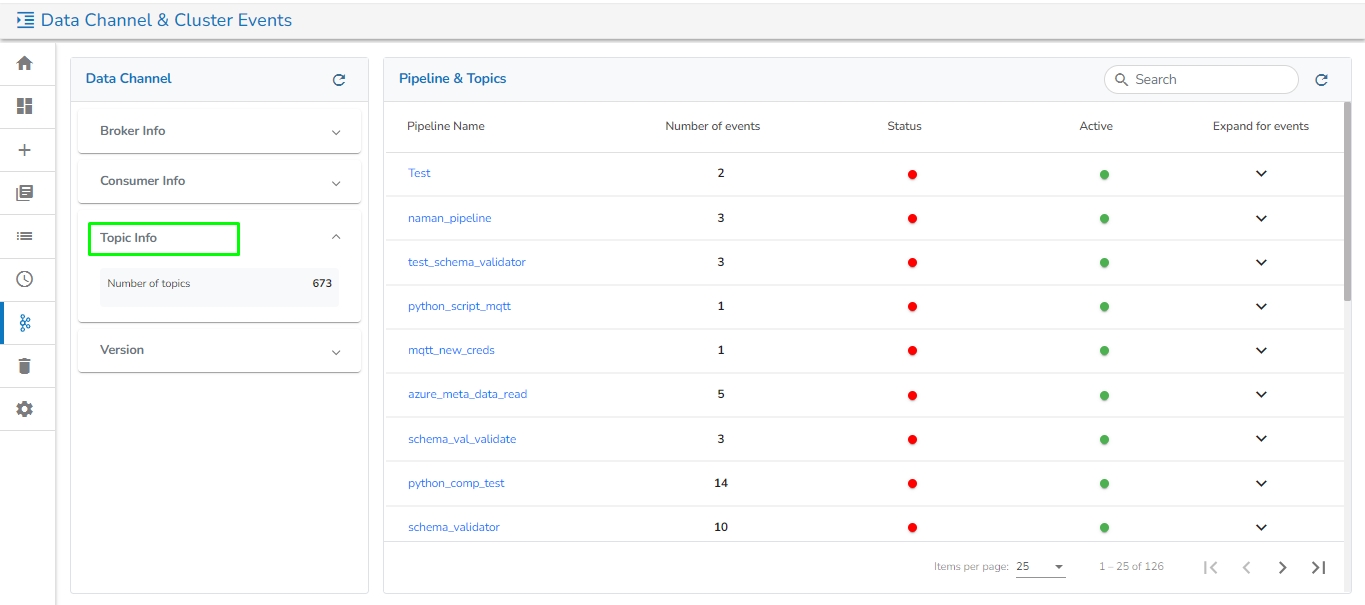

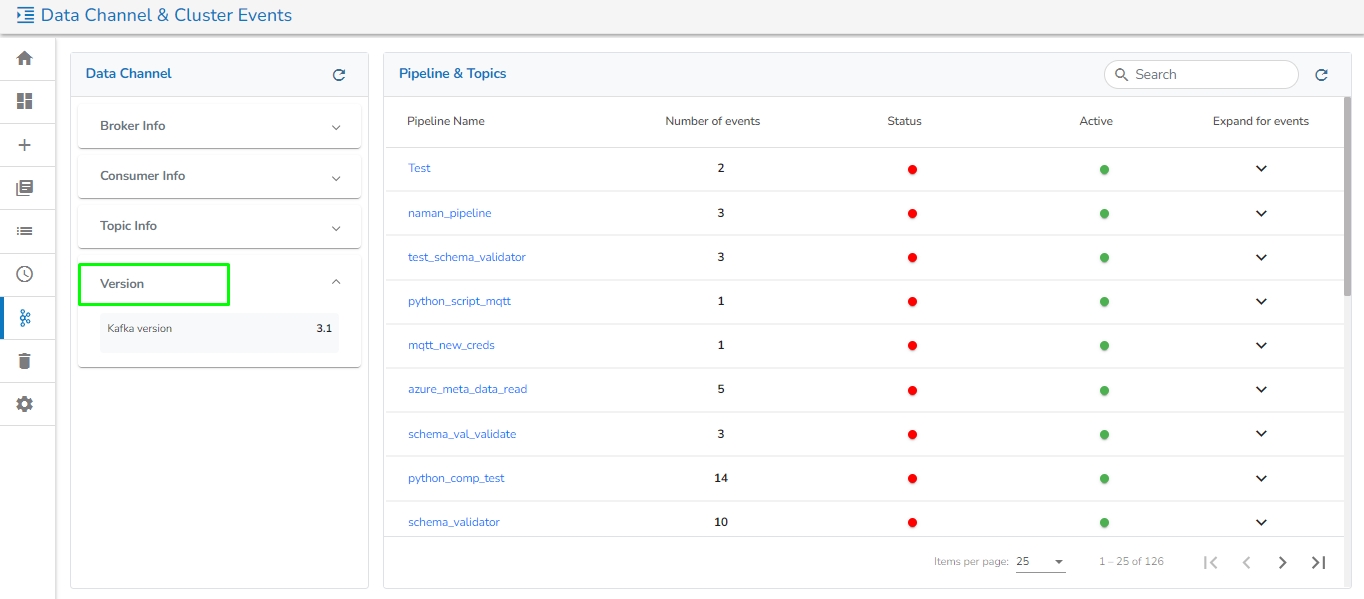

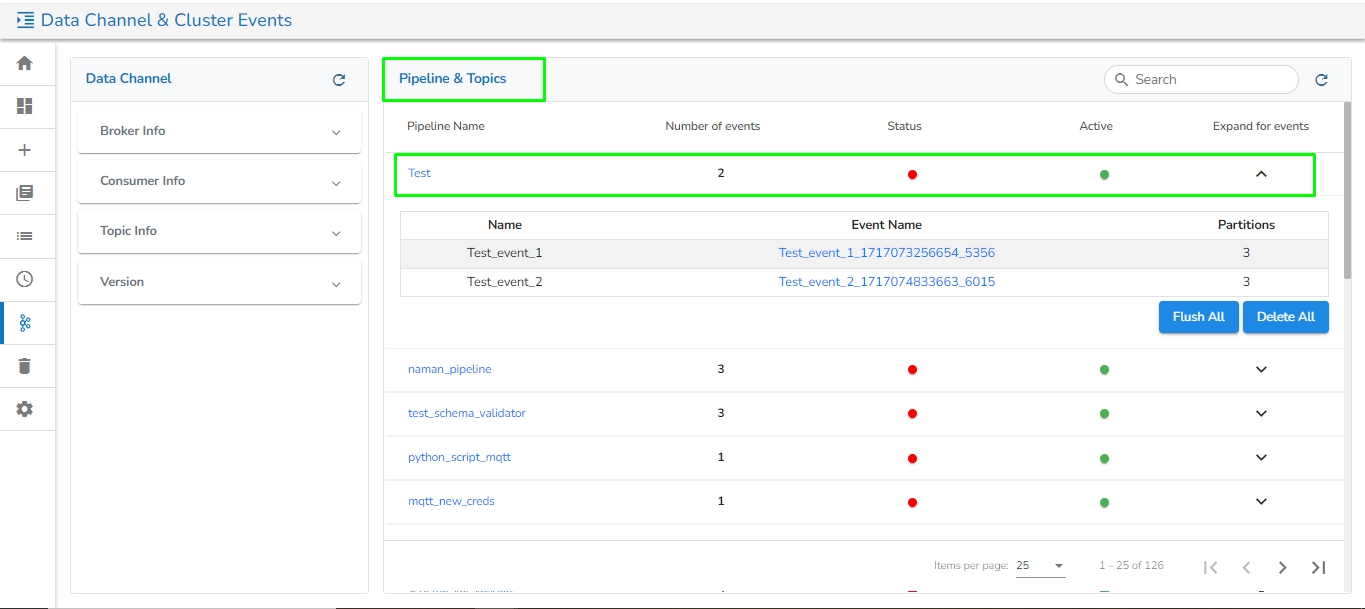

The Data Channel & Cluster Events page presents a comprehensive list of all Broker Info, Consumer Info, Topic Info, Kafka Version, and all the events used in the pipeline. It allows users to flush/delete the events.

Go through the demonstration below for the Data Channel & Cluster Events page.

Navigate to the Pipeline Homepage.

Click the Data Channel & Cluster Events icon.

The Data Channel & Cluster Events page opens.

The list opens displaying the Data Channel & Cluster Events information.

The Data Channel includes the following information:

Broker Info: It will list all Kafka brokers and display the number of partitions used for each broker.

Consumer Info: It will display the number of active and rebalancing consumers.

Topic Info: It will display the number of topics.

Version information: It will display the Kafka version.

The Cluster Events page includes the following information:

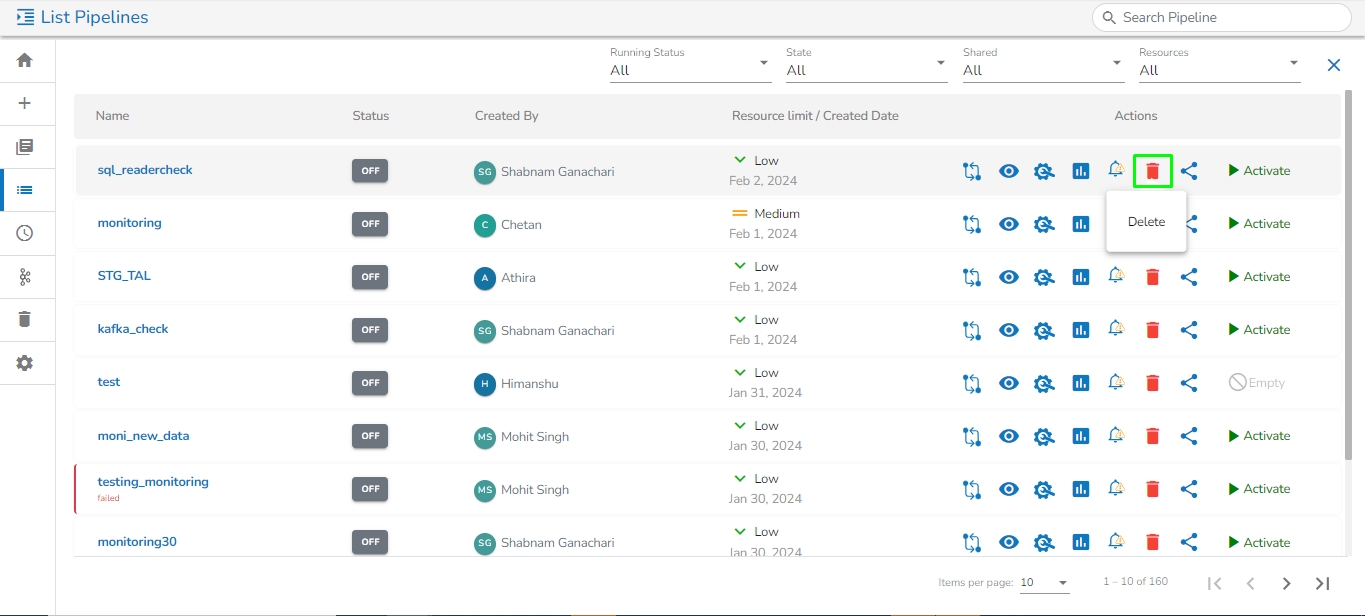

On this page, all the pipelines will be listed along with the following details:

Pipeline Name: The name of the pipeline.

Number of Events: The number of Kafka events created in the selected pipeline.

Status: The running status of the pipeline, indicated by Green if active and Red otherwise.

Expand for Events: Click here to expand the selected row for a particular pipeline. This will list all Kafka events associated with the chosen pipeline along with the following information:

The user gets two options to apply to the listed Kafka events for the pipeline:

Flush All: This will flush all topic data in the selected pipeline.

Delete All: This will delete all topics in the selected pipeline.

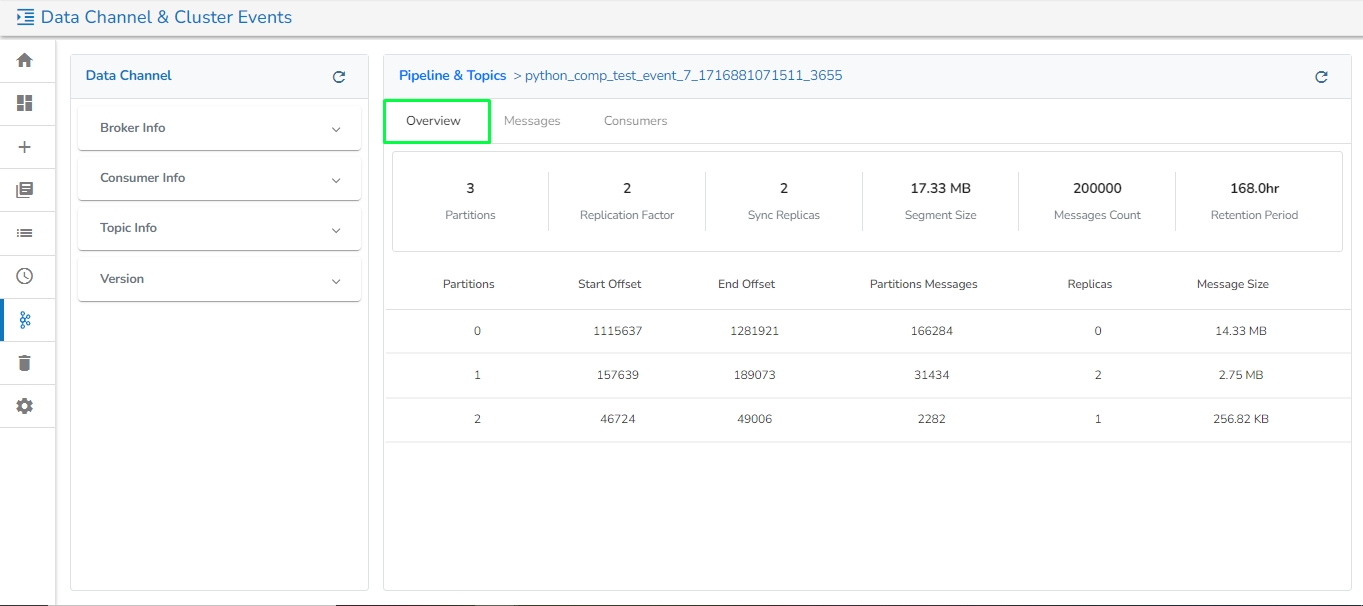

Once the user clicks on the Event Name after expanding the row for the selected pipeline, the following information will be displayed on the new page for the selected Kafka Event:

This tab contains the following information for the selected Kafka Event:

Partitions: The number of partitions in the Kafka Event.

Replication Factor: Displays the replication factor of the Kafka topic. This is the number of copies of a Kafka topic's data maintained across different broker nodes within the Kafka cluster, ensuring high availability and fault tolerance of the data.

Sync Replicas: Displays the number of in-sync replicas of the Kafka topic. In-sync replicas (ISRs) are a subset of replicas fully synchronized with the leader replica for a partition. These replicas have the latest data as the leader and are capable of taking over as the leader if the current leader fails.

Additionally, this tab lists all the partition details along with their start and end offset, the number of messages in each partition, the number of replicas for each partition, and the size of the messages held by each partition.
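The per-partition message count follows from the start and end offsets: Kafka's end offset is the offset the next message will receive, so a partition holds roughly `end - start` messages. The Python sketch below, with hypothetical offset values, does that arithmetic. Treat the result as an upper bound, since compacted topics and transactional control records can leave gaps in the offset range.

```python
from typing import Dict, Tuple


def messages_per_partition(offsets: Dict[int, Tuple[int, int]]) -> Dict[int, int]:
    """Map partition number -> message count from (start_offset, end_offset)."""
    # The end offset is the offset of the *next* message to be written,
    # so the retained range [start, end) contains end - start offsets.
    return {partition: end - start for partition, (start, end) in offsets.items()}


# Hypothetical offsets for a 3-partition Kafka Event.
offsets = {0: (0, 120), 1: (40, 100), 2: (15, 15)}
counts = messages_per_partition(offsets)
print(counts, "total:", sum(counts.values()))
```

A partition whose start and end offsets are equal, like partition 2 here, currently holds no messages, for example after its older messages passed the retention period.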

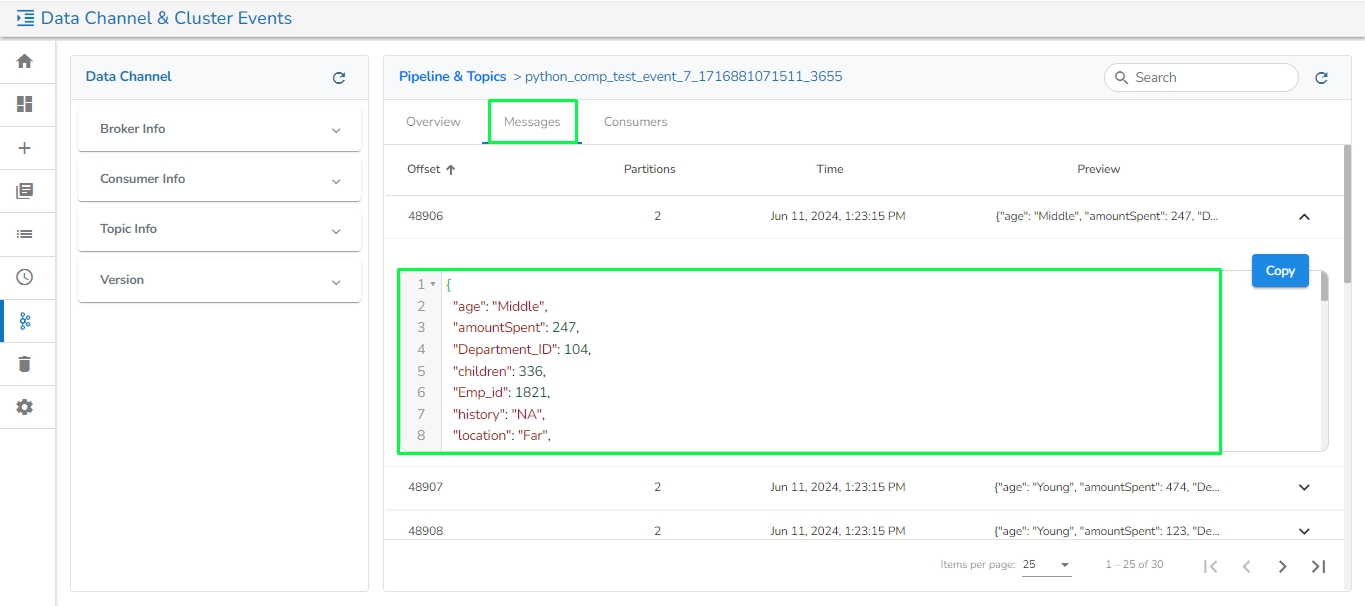

This tab contains the following information for the selected Kafka Event:

Offset: Shows the offset number of the partition. An offset is a unique identifier assigned to each message within a partition.

Partitions: Displays the partition number where the offset belongs.

Time: The date and time at which the message was stored at the offset.

Preview: This option helps the user to view and copy the message stored at the selected offset.

This tab shows details of the consumers connected to the Kafka topic.

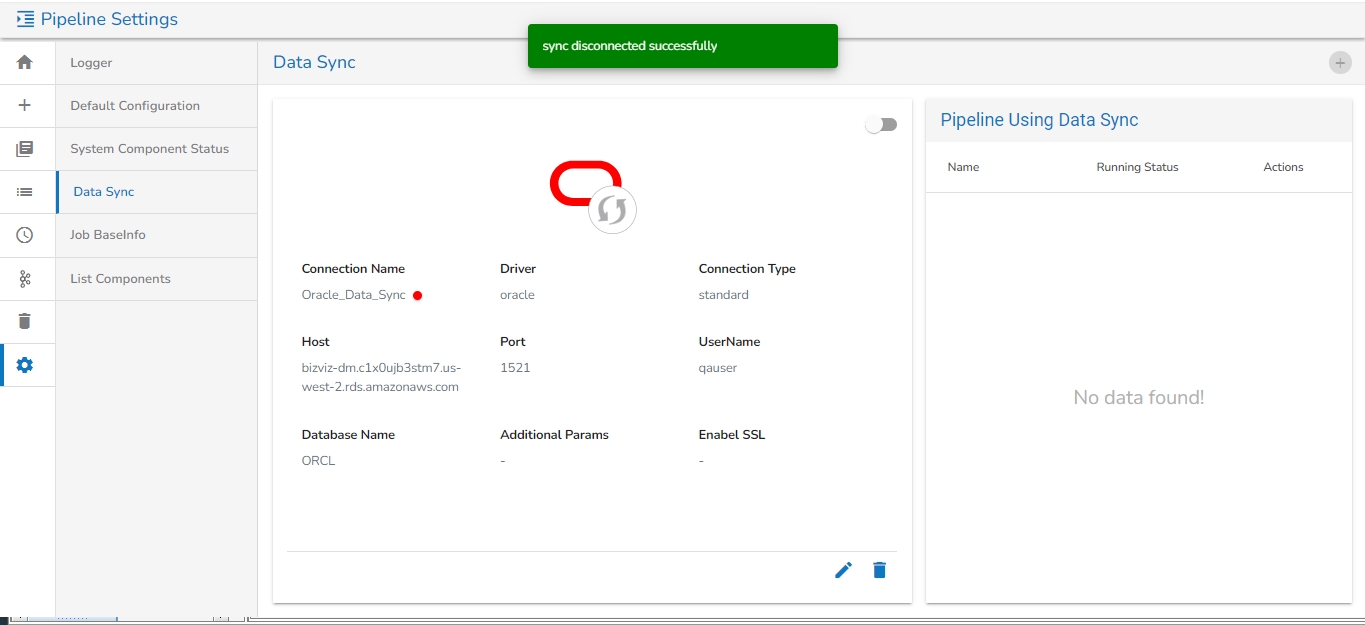

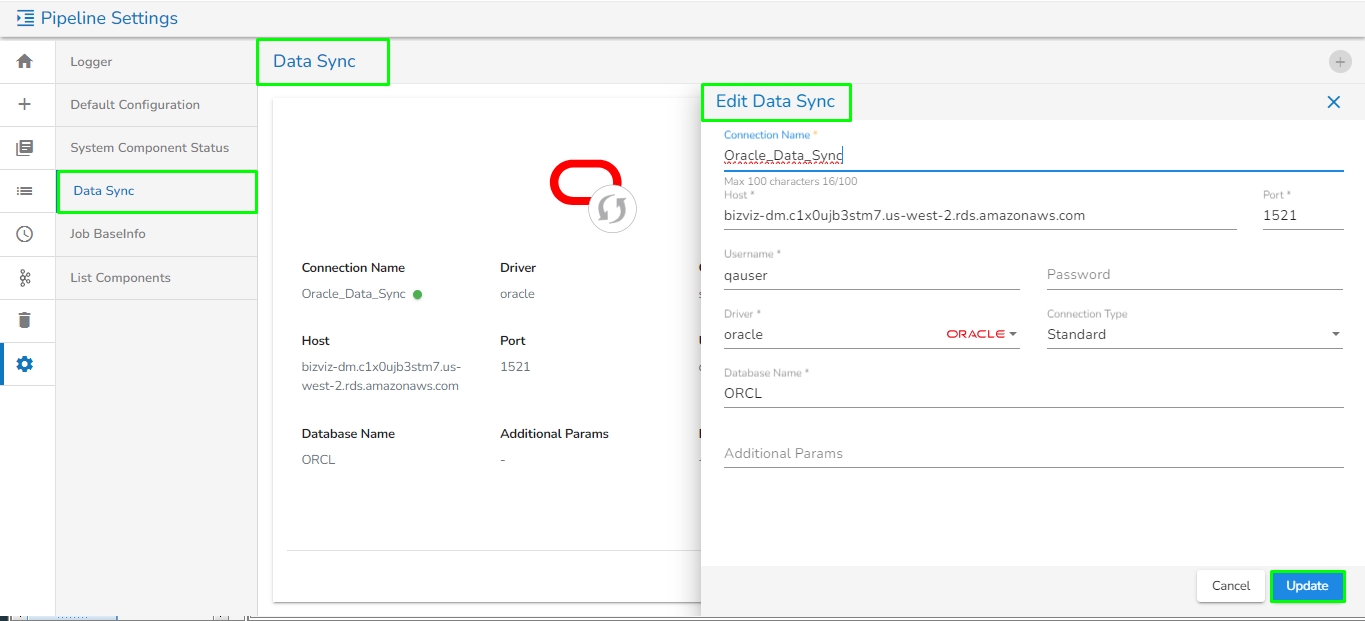

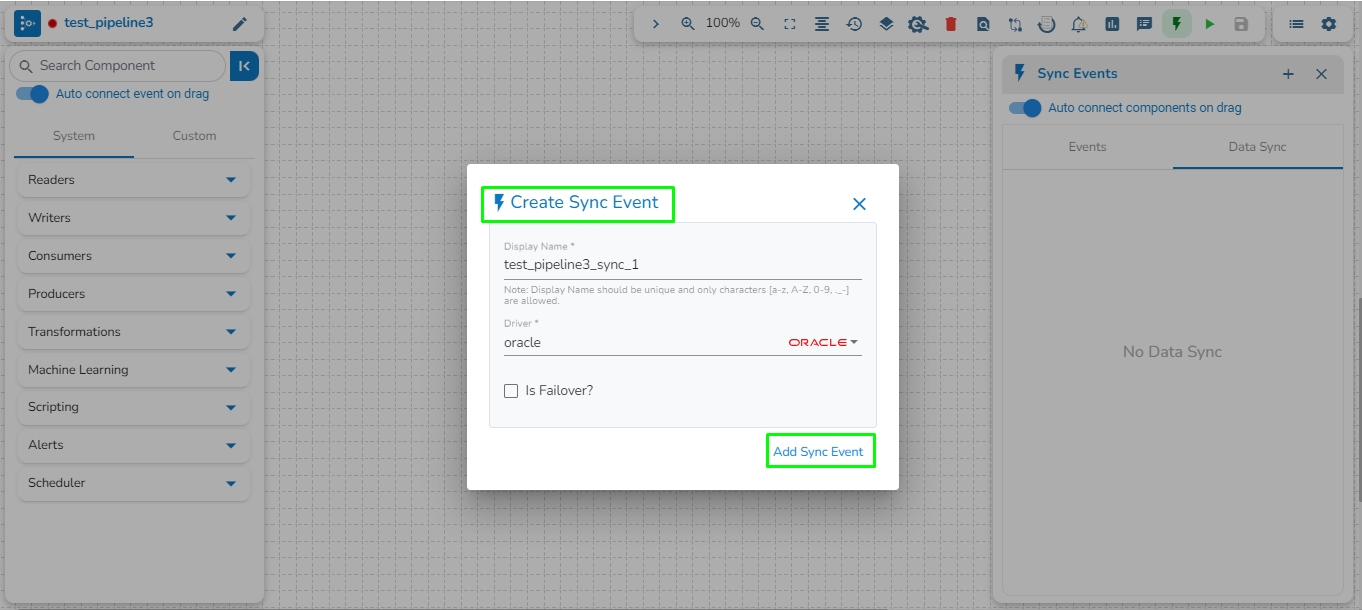

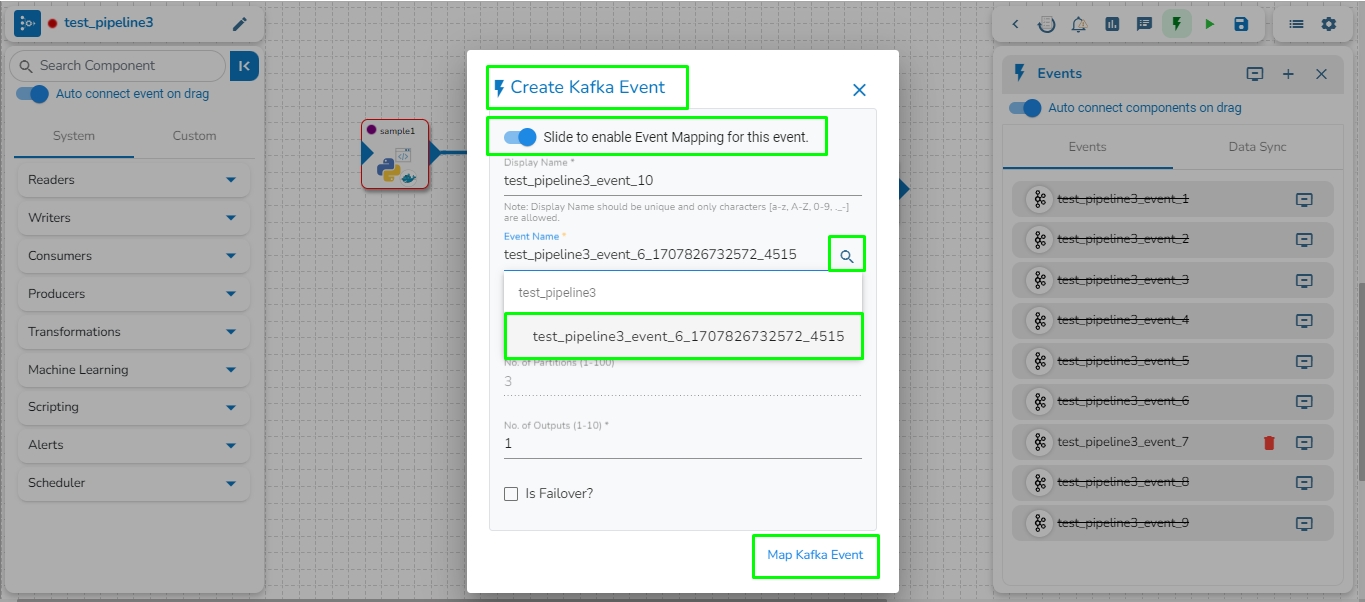

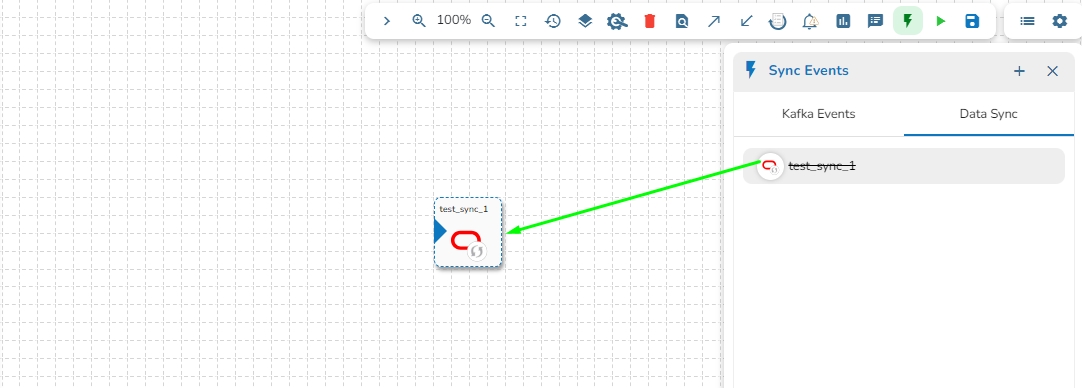

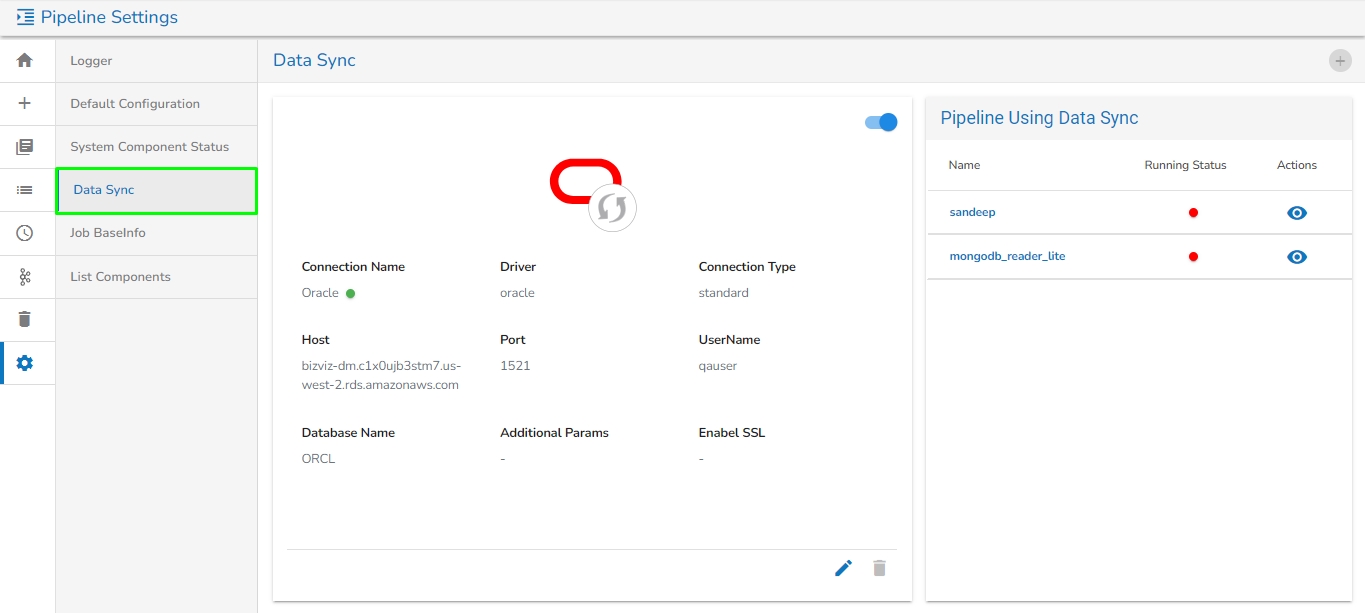

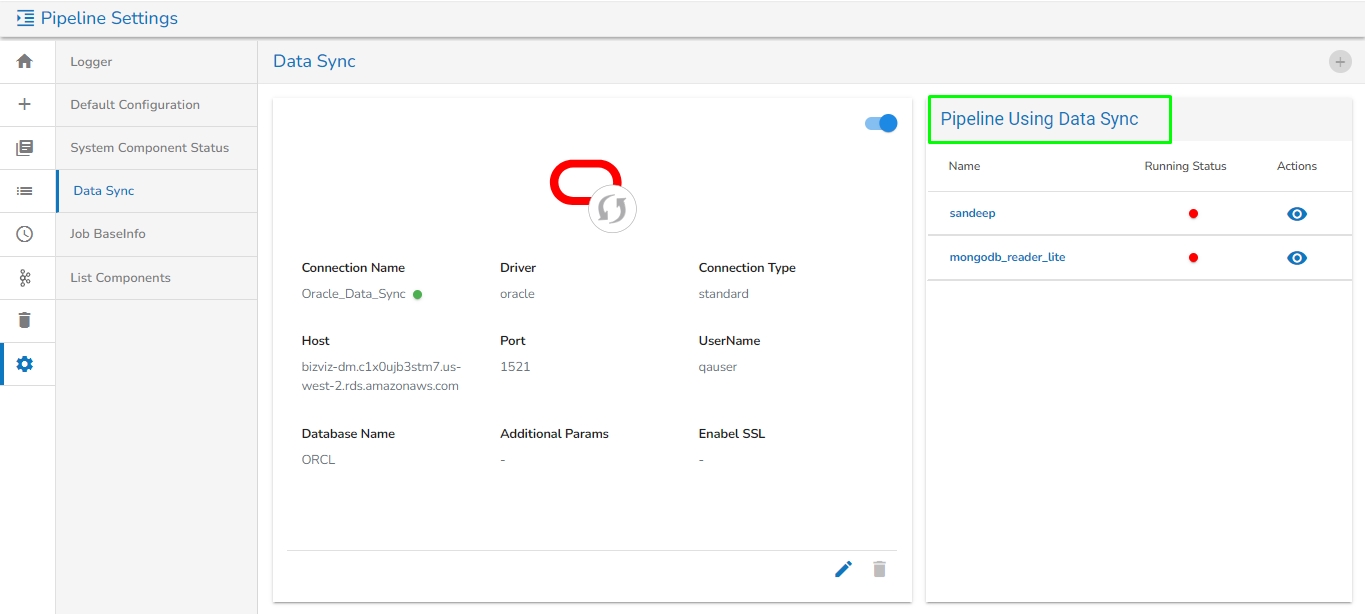

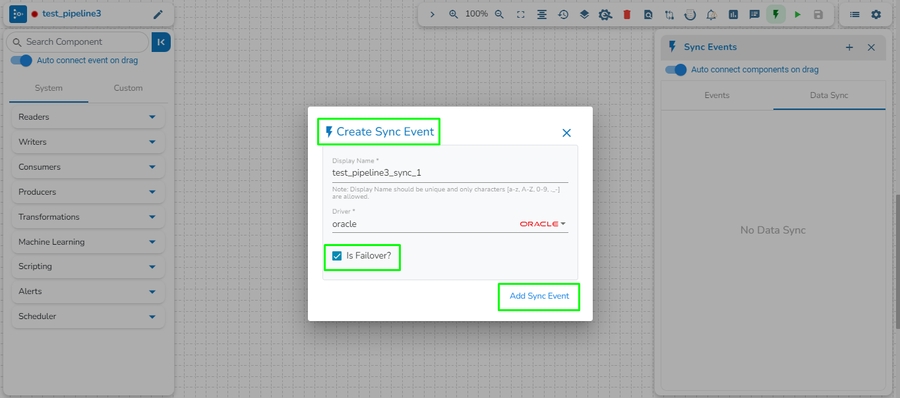

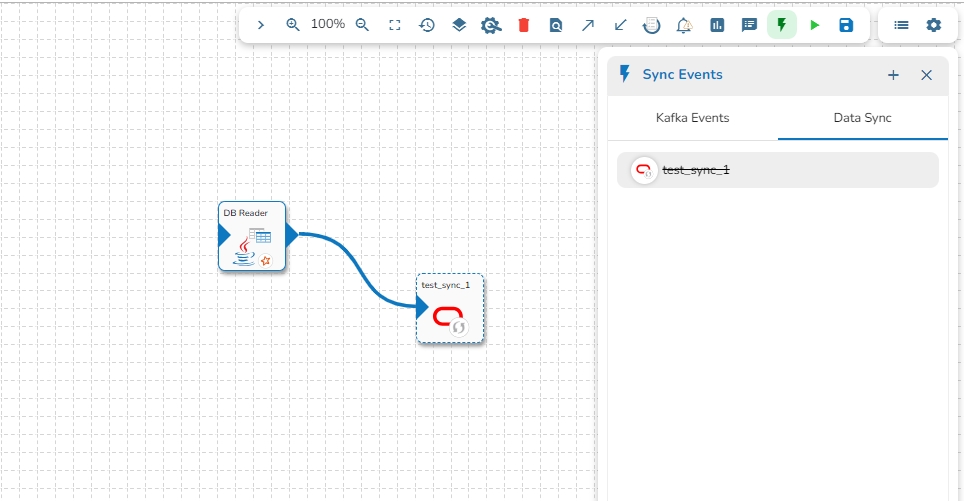

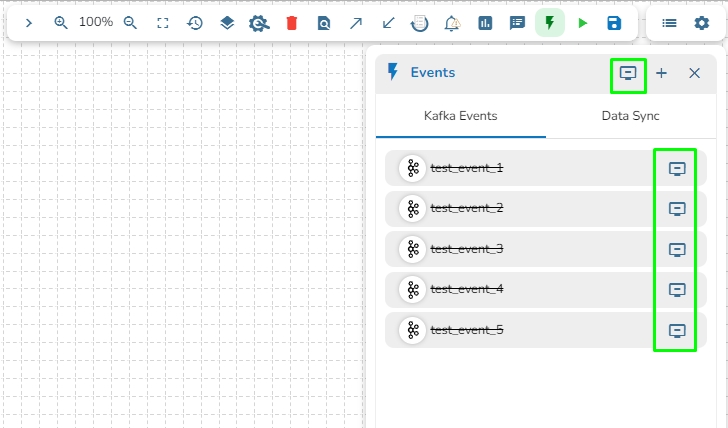

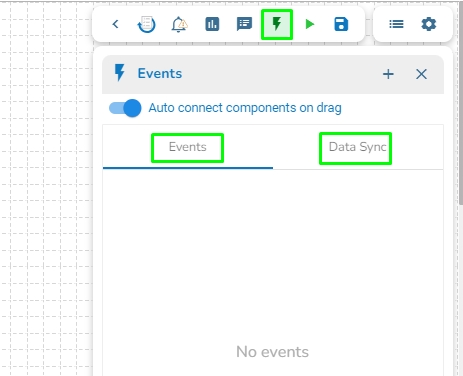

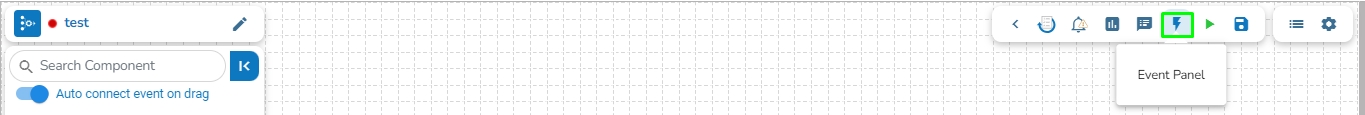

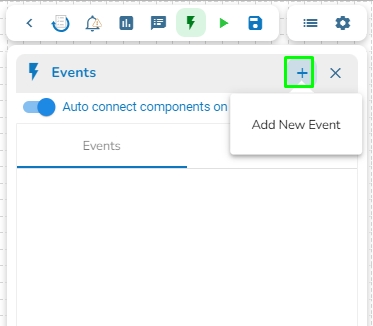

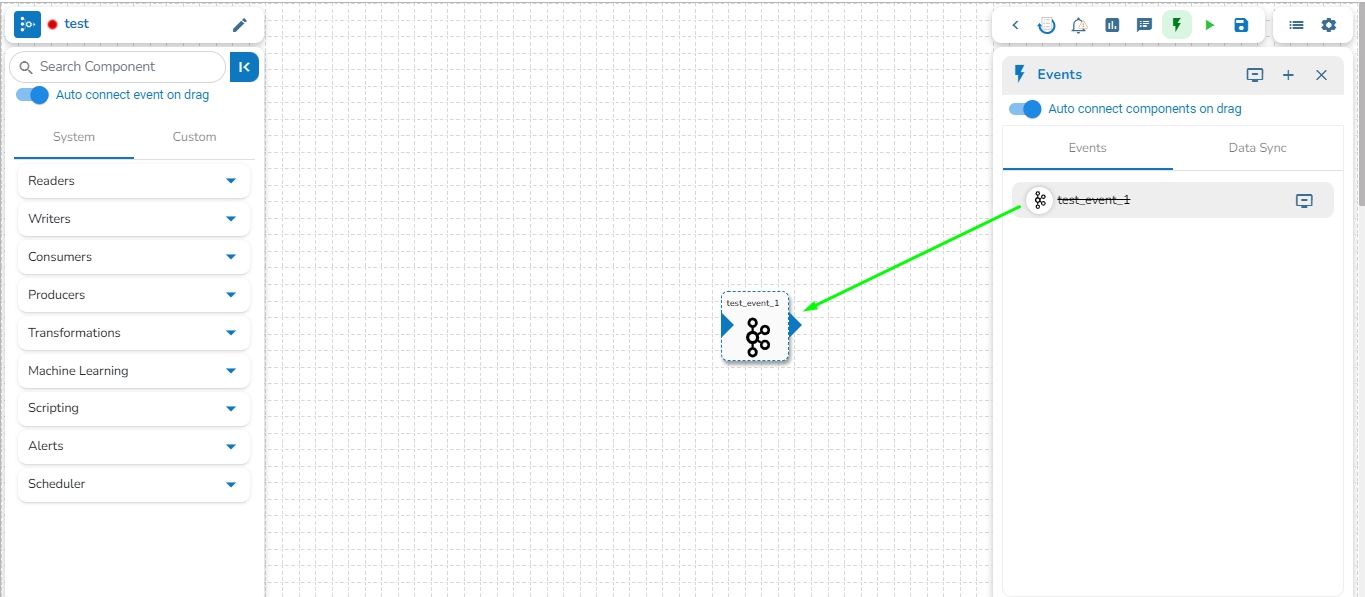

The user can access the Event Panel to create a new Event. The Toggle Event Panel offers two options:

Private (Event/ Kafka Topic)

Data Sync

The user can create an Event (Kafka Topic) that can be used to connect two pipeline components.

Navigate to the Pipeline Editor page.

Click the Event Panel icon.

The Event panel opens.

Click the Add New Event icon.

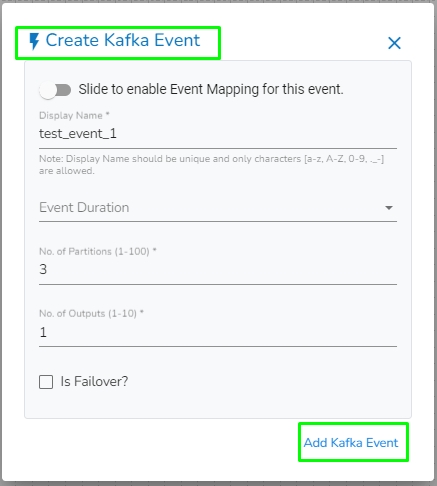

The New Event dialog box opens.

Enable the Event Mapping option to map the Event.

Provide the required information.

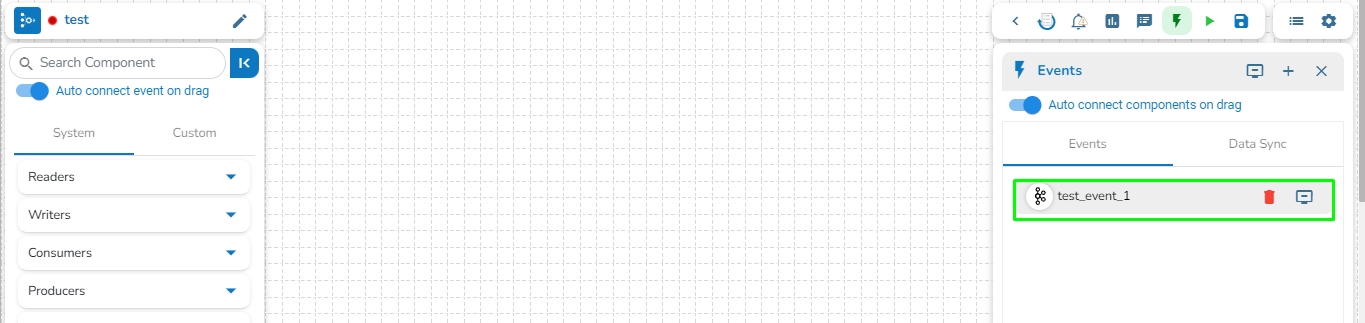

A confirmation message appears.

The new Event gets created and added to the Event Panel.

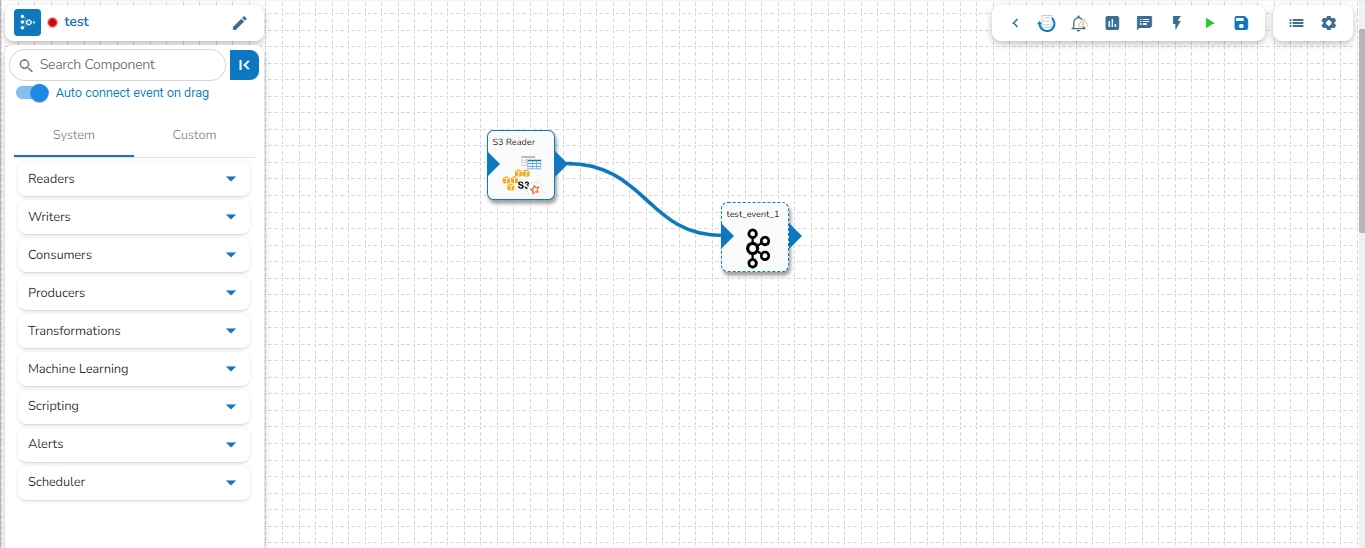

Drag and drop the Event from the Event Panel to the workflow editor.

You can drag a pipeline component from the

Connect the dragged component to the dragged Event to create a pipeline flow of data.

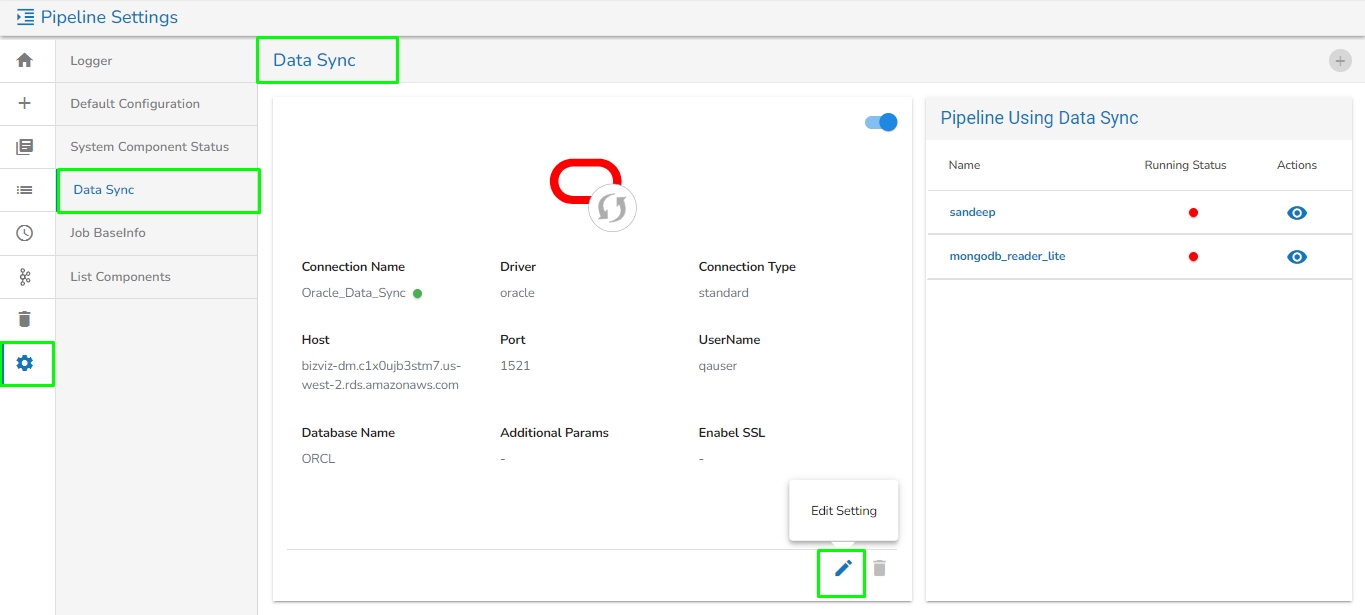

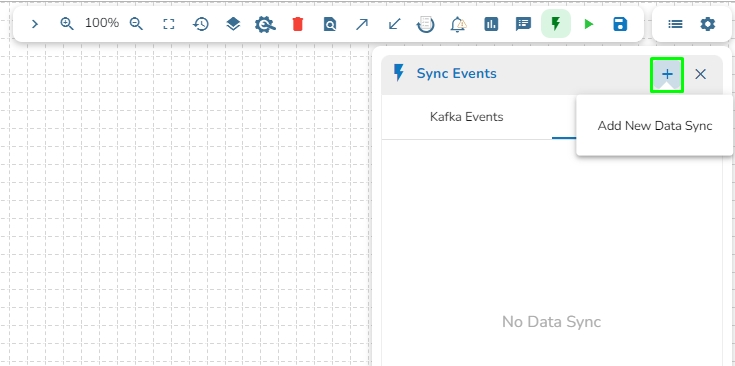

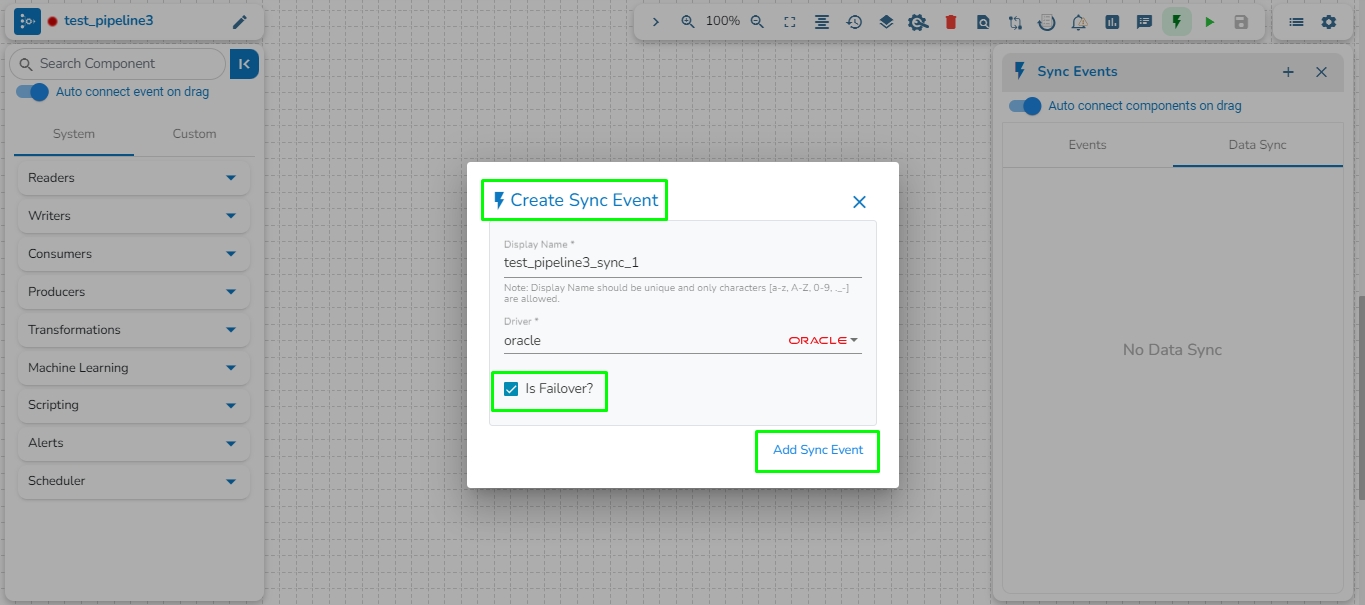

The user can directly read the data with the reader and write to a Data Sync.

The user can add a new Data Sync by clicking the ‘+’ icon in the Toggle Event Panel.

Specify the display name and connection ID, and click Save.

Drag and drop the Data Sync from event panel to workflow editor.

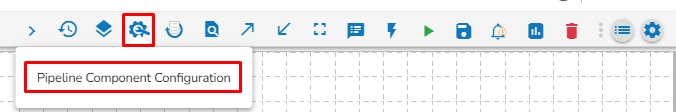

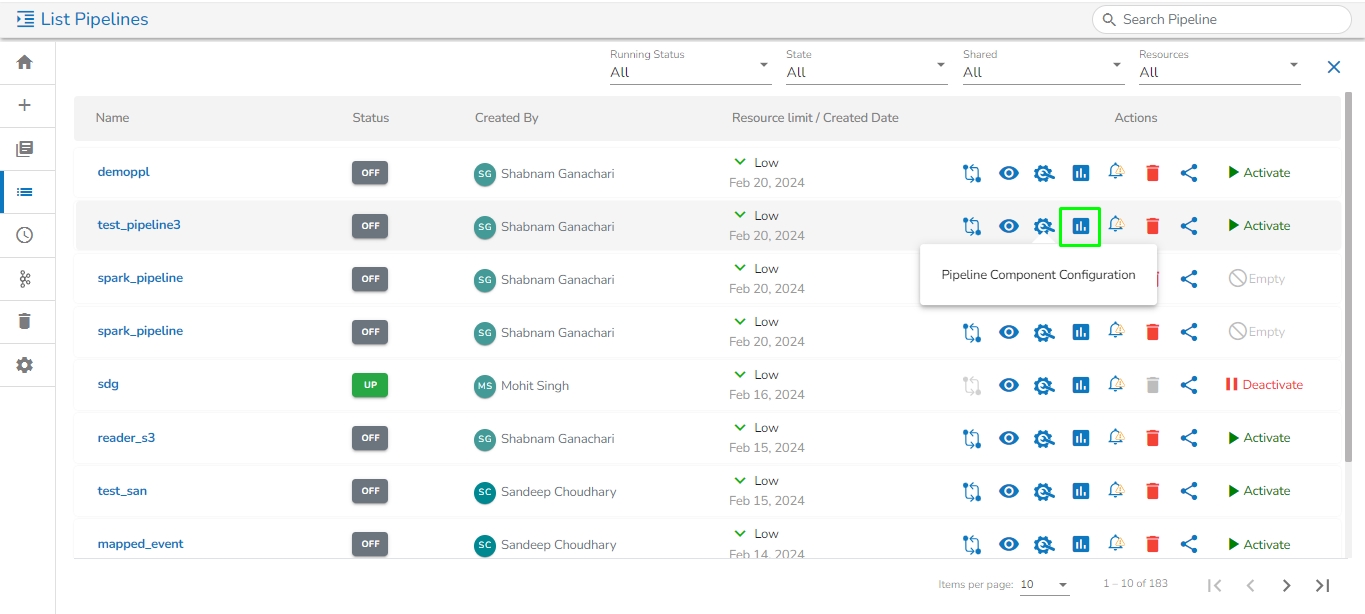

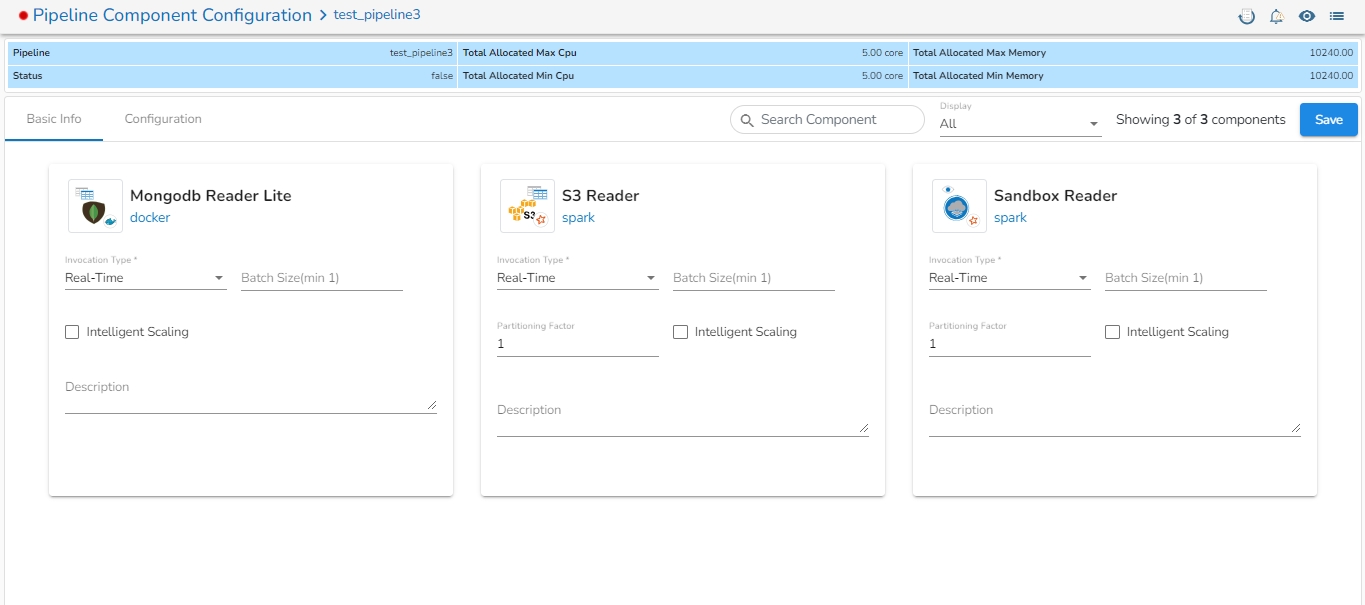

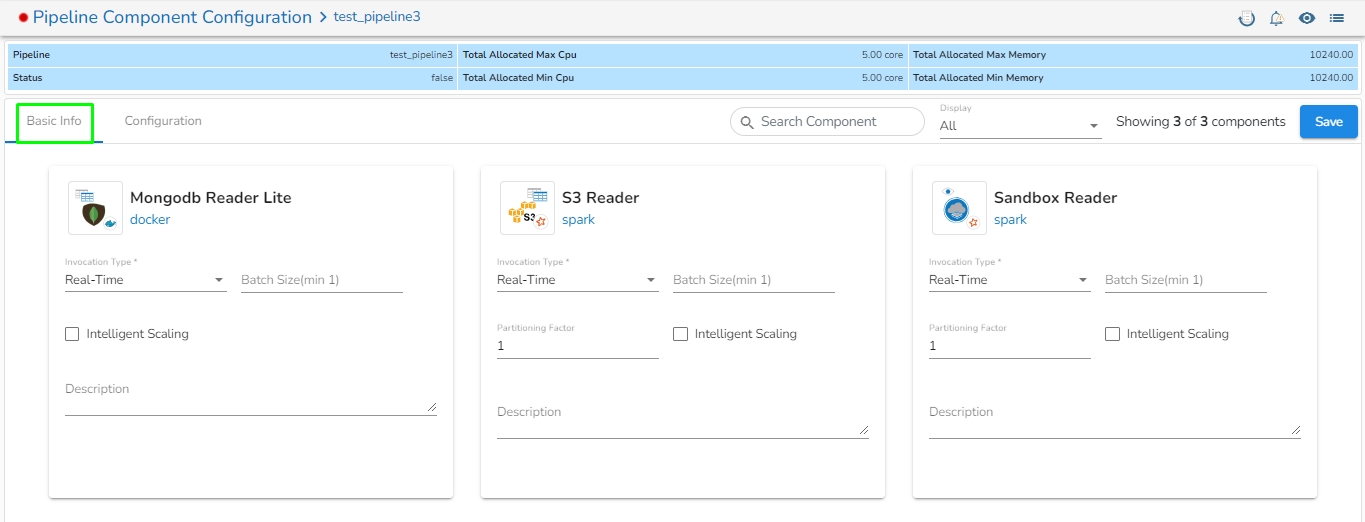

The Pipeline Component Configuration feature, available on the List Pipelines page, allows users to configure all the components of a pipeline on a single page. Users no longer have to click each component to configure it individually, which reduces the time and number of clicks required to configure the pipeline components.

Click the Pipeline Component Configuration icon from the header panel of the Pipeline Editor. The user can access this option either from the Pipeline Editor or from the List Pipelines page.

All the components used in the selected pipeline will be listed on the configuration page.

The following information is displayed at the top of the Pipeline Component Configuration page:

Pipeline: Name of the pipeline.

Status: It indicates the running status of the pipeline. 'True' indicates the Pipeline is active, while 'False' indicates inactivity.

The user will find two tabs on the Configuration Page:

Basic Info

Configuration

In the Basic Info tab, the user can configure basic information for the components such as invocation type, batch size, description, or intelligent scaling.

On the Configuration tab, the user can provide resources such as Memory and CPU to the components, as well as set the number of minimum and maximum instances for the components.

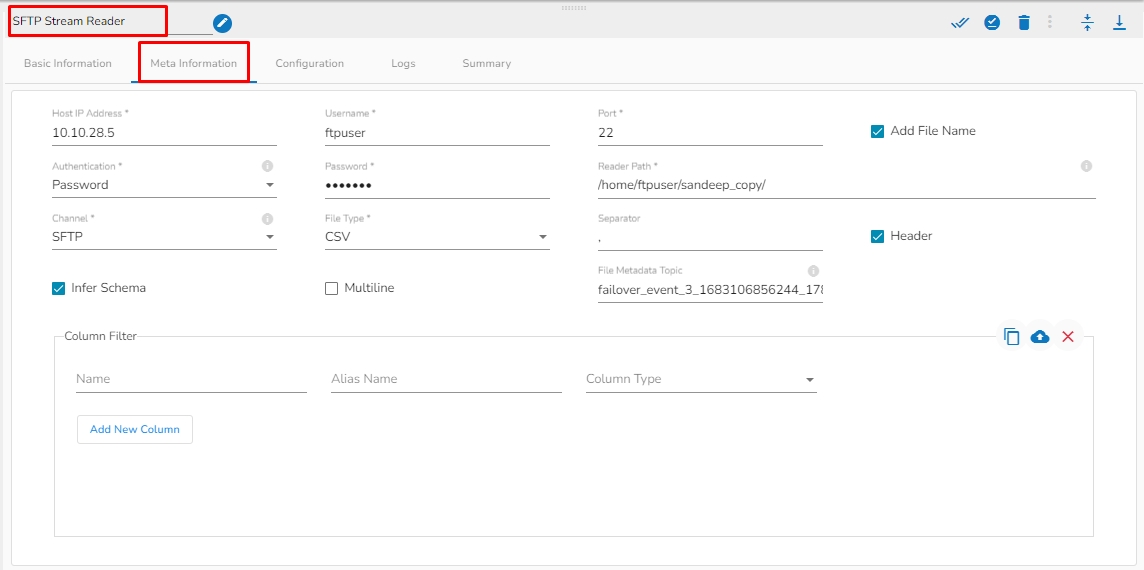

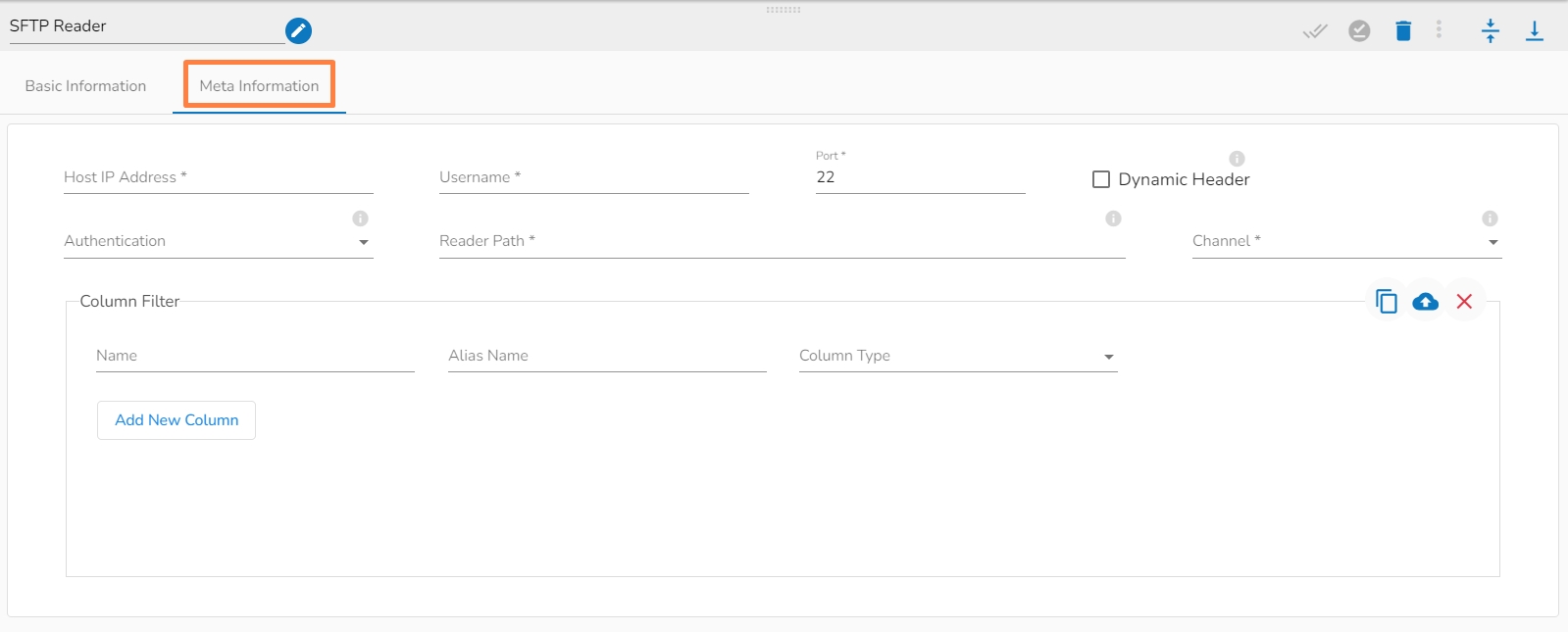

The SFTP Reader is designed to read and access files stored on an SFTP server. SFTP readers typically authenticate with the SFTP server using a username and password or an SSH key pair, which grants access to the files stored on the server.

All component configurations are classified broadly into the following sections:

Meta Information

Please follow the demonstration to configure the SFTP Reader and its meta information.

Follow the steps below to configure the SFTP Reader component:

Host: Enter the host.

Username: Enter username for SFTP reader.

Port: Provide the Port number.

Dynamic Header: Automatically detects the header row in a file and adjusts the column names and number of columns as necessary.
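One simple way to decide whether the first row of a file is a header is to compare it with the rows below it: if a column holds numbers in the data rows but not in the first row, the first row probably contains column names. The Python sketch below applies that heuristic with the standard `csv` module. It is an assumption for illustration, not the component's actual detection logic; the function name is hypothetical, and the `_c0`, `_c1` names for headerless files follow Spark's default column naming.

```python
import csv
import io
from typing import List, Tuple


def _is_number(value: str) -> bool:
    try:
        float(value)
        return True
    except ValueError:
        return False


def read_with_dynamic_header(text: str) -> Tuple[List[str], List[List[str]]]:
    """Return (column_names, data_rows), detecting whether row 1 is a header."""
    rows = list(csv.reader(io.StringIO(text)))
    if not rows:
        return [], []
    first, rest = rows[0], rows[1:]
    # Heuristic: a column that is numeric in the data rows but not in the
    # first row suggests the first row holds column names.
    looks_like_header = any(
        not _is_number(first[i])
        and any(i < len(row) and _is_number(row[i]) for row in rest)
        for i in range(len(first))
    )
    if looks_like_header:
        return first, rest
    # No header: generate positional names, Spark-style.
    return [f"_c{i}" for i in range(len(first))], rows


print(read_with_dynamic_header("name,age\nalice,30\nbob,25\n"))
print(read_with_dynamic_header("alice,30\nbob,25\n"))
```

A file whose columns are all text gives the heuristic nothing to compare, so it falls back to positional names; a real implementation would need additional signals.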

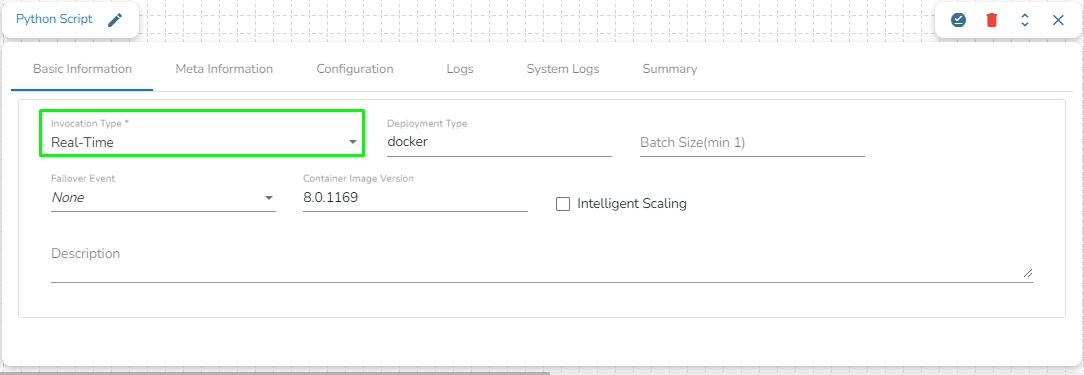

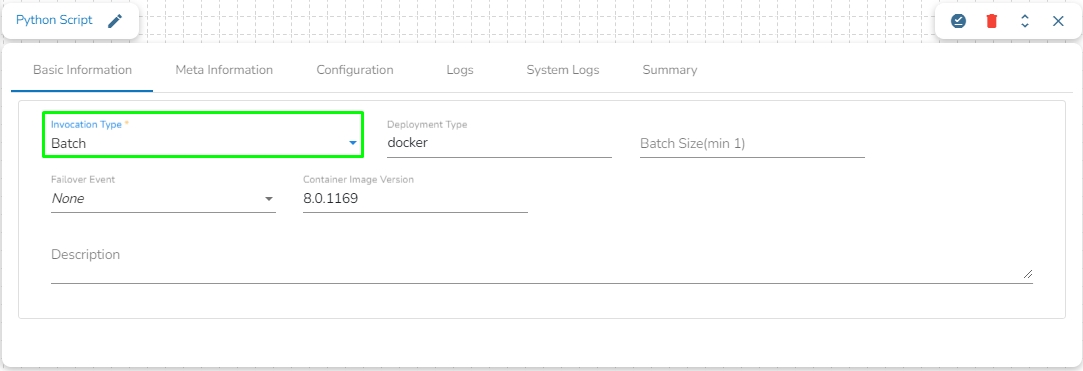

This page describes the Basic Info tab provided for the pipeline components. This tab must be configured for all the components.

The Invocation Type configuration decides the deployment type of the component. There are two types of invocation:

Real-Time

Batch

When a component has the Real-Time invocation type, it never goes down while the pipeline is active. Use it when you want to keep the component ready to consume data at all times.

When Real-Time is selected as the invocation type, an additional option to scale up the component, called Intelligent Scaling, becomes available.

Please refer to the following page to learn more about Intelligent Scaling.

When a component has the Batch invocation type, it needs a trigger from the previous event to initiate the process. Once the component finishes processing and there are no new events to process, the component goes down.

Batch components are helpful in batch or scheduled operations where the data is not streaming in real time.

The pipeline components process data in micro-batches. The batch size defines the maximum number of records to process in a single cycle of operation. This helps you control the number of records the component processes when the unit record size is large. You can configure it in the base configuration of the components.

The illustration below shows how to update the Batch Size configuration.

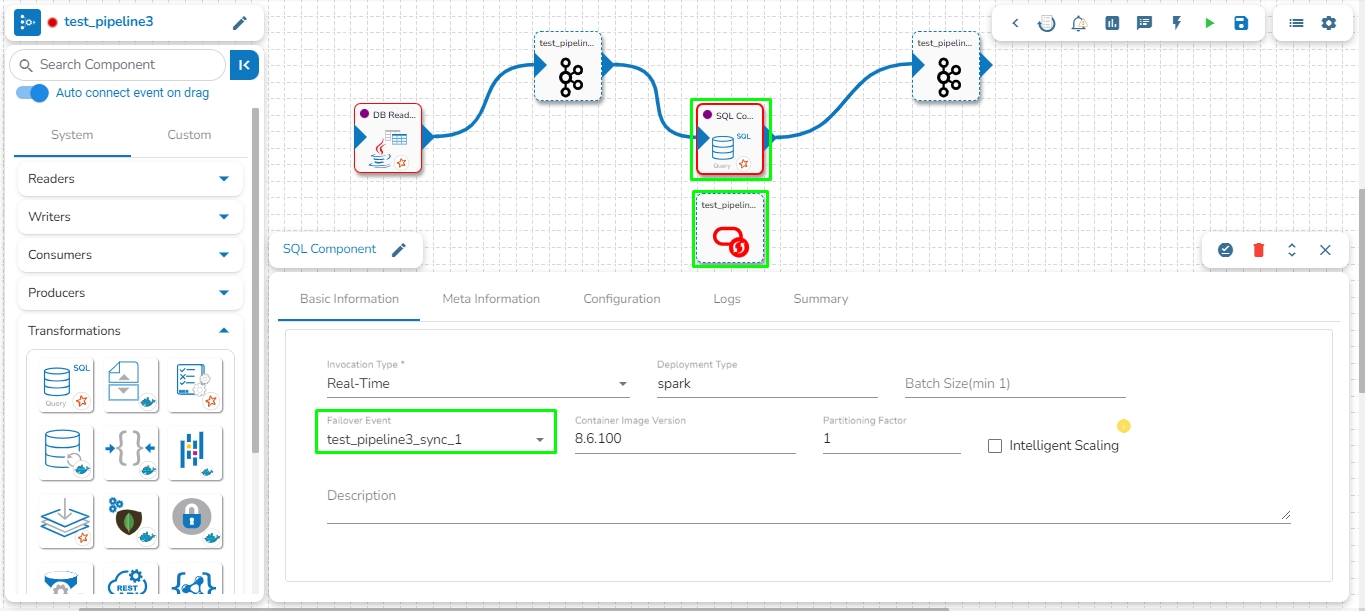
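The effect of the Batch Size setting can be sketched as splitting a stream of records into fixed-size micro-batches. The Python generator below is a hypothetical illustration of the concept, not the component's code: each yielded list corresponds to one processing cycle, and no cycle holds more than `batch_size` records.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield successive lists of at most `batch_size` records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(records)
    while True:
        # Take up to batch_size records for the next processing cycle.
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


records = ({"row": i} for i in range(25))
print([len(batch) for batch in micro_batches(records, batch_size=10)])  # [10, 10, 5]
```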

Users can create a failover event and map it in the component's base configuration, so that if the component fails, it audits all the failure messages along with the data (if available) and the timestamp of the error.

Go through the illustration below to understand Failover Events.
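The failover behavior, where a failed record is audited with its data and the error timestamp, can be sketched as a try/except around the component's processing step. In this Python sketch a plain list stands in for the failover Event (a Kafka topic in the product), and the function name and audit-record fields are hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import Callable, Iterable, List


def process_with_failover(records: Iterable[dict],
                          handler: Callable[[dict], object],
                          failover_event: List[str]) -> int:
    """Process each record; audit failures to `failover_event`. Returns the success count."""
    processed = 0
    for record in records:
        try:
            handler(record)
            processed += 1
        except Exception as exc:  # any failure is audited, not raised
            failover_event.append(json.dumps({
                "error": f"{type(exc).__name__}: {exc}",
                "data": record,  # the record that failed, if available
                "timestamp": datetime.now(timezone.utc).isoformat(),
            }))
    return processed


failover_event: List[str] = []
ok = process_with_failover([{"qty": 2}, {"qty": 0}], lambda r: 10 / r["qty"], failover_event)
print(ok, failover_event)
```

Processing continues past a failed record, so one bad record does not stop the rest of the batch.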

An Elasticsearch reader component is designed to read and access data stored in an Elasticsearch index. Elasticsearch readers typically authenticate with Elasticsearch using username and password credentials, which grant access to the Elasticsearch cluster and its indexes.

All component configurations are classified broadly into the following sections:

Meta Information

Please follow the given demonstration to configure the component.

Host IP Address: Enter the host IP address for Elasticsearch.

Port: Enter the port to connect with Elasticsearch.

Index ID: Enter the Index ID to read a document in Elasticsearch. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier known as the index ID. The index ID is a unique string that is automatically generated by Elasticsearch and is used to identify and retrieve a specific document from the index.

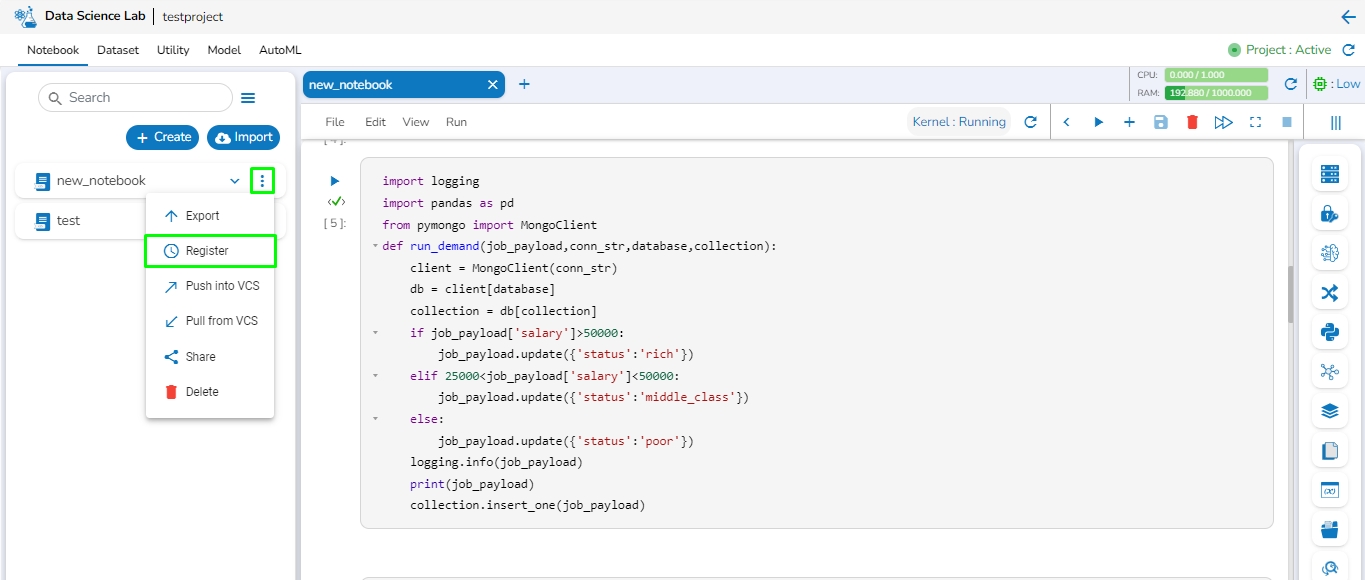

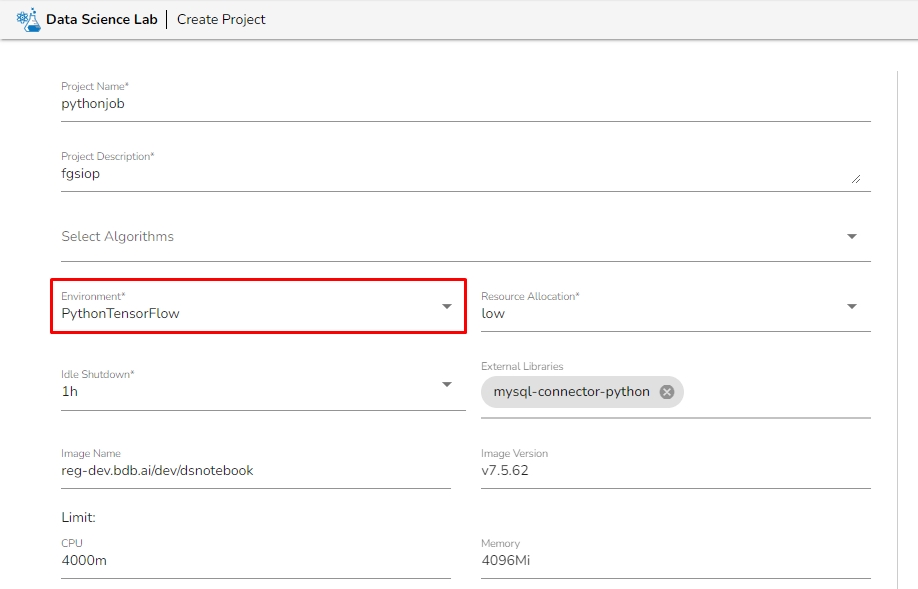

The Register feature enables users to create jobs directly from a DSLab notebook. Using this feature, users can create the following types of jobs:

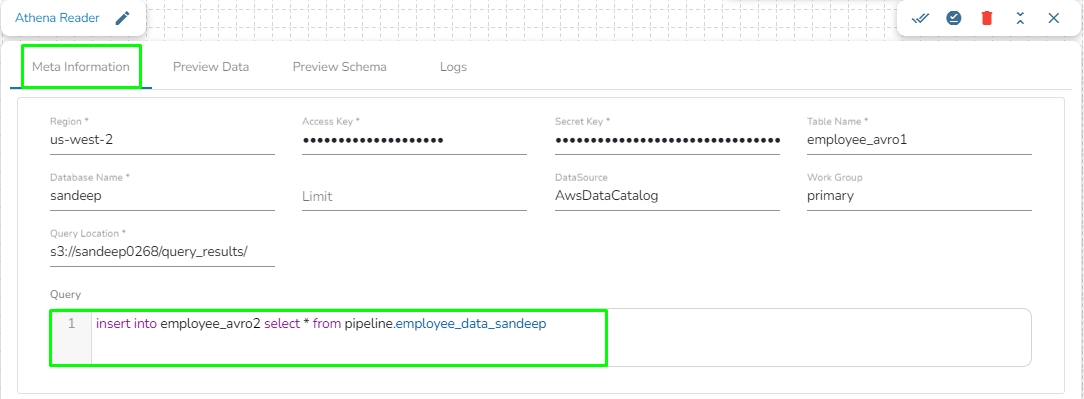

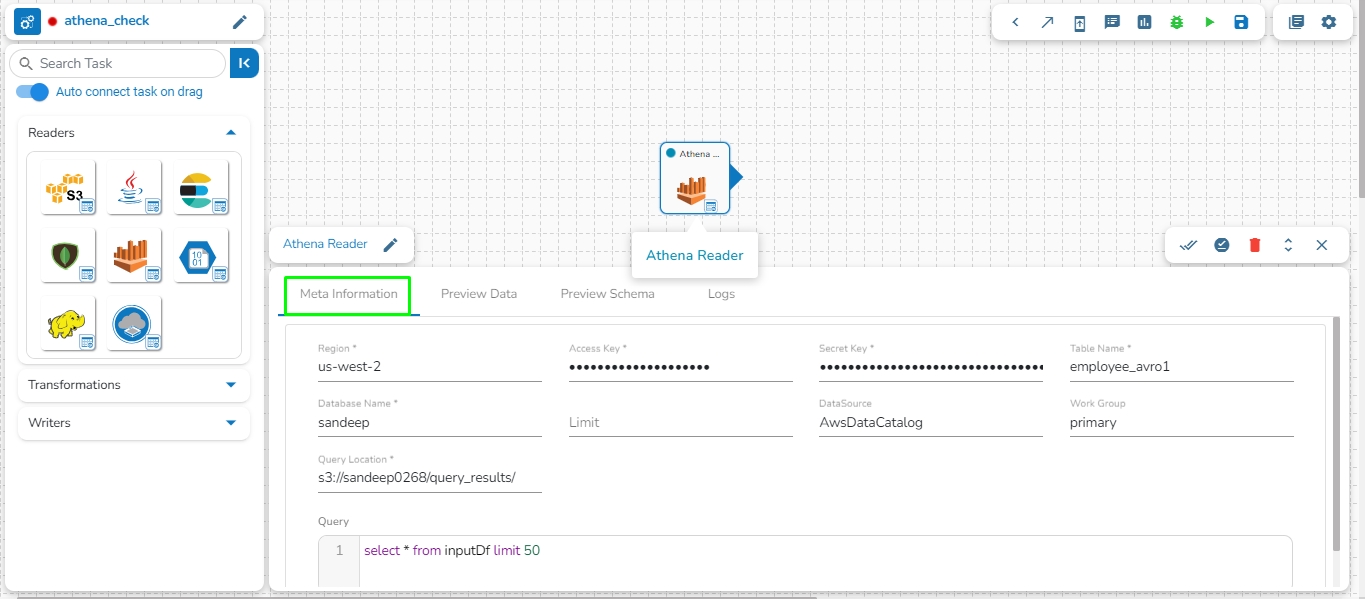

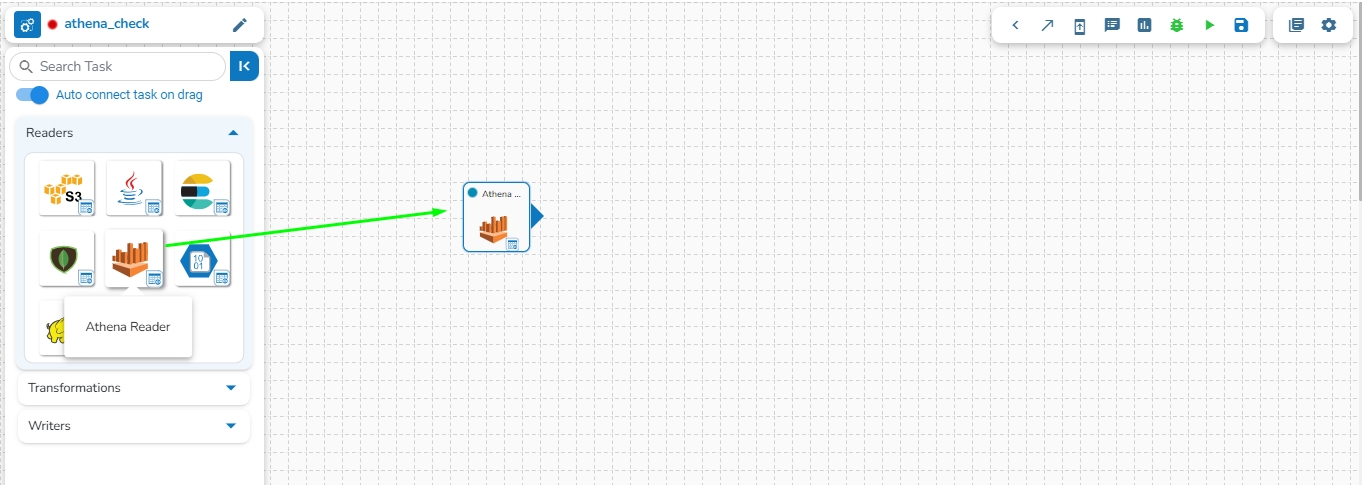

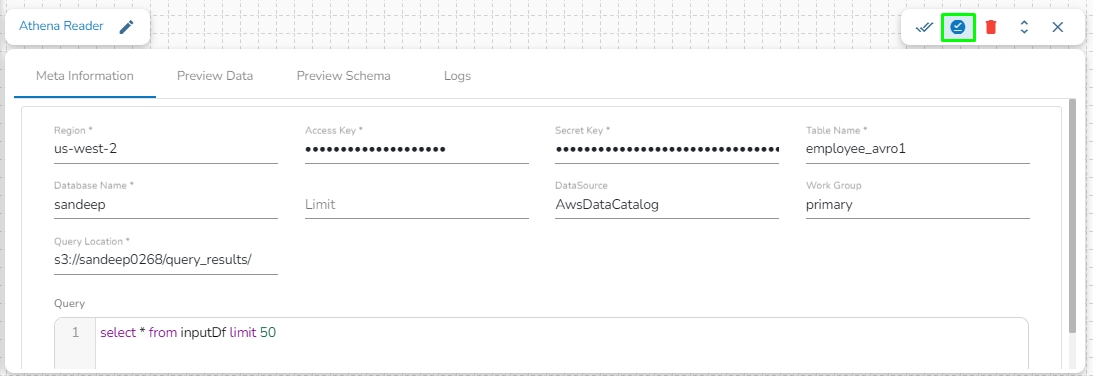

Amazon Athena is an interactive query service that makes it easy to analyze data directly in Amazon Simple Storage Service (Amazon S3) using standard SQL. With a few actions in the AWS Management Console, you can point Athena at your data stored in Amazon S3 and begin using standard SQL to run ad-hoc queries and get results in seconds.

The Athena Query Executer task enables users to read data directly from an external table created in AWS Athena.

Please Note: Go through the demonstration below to configure the Athena Query Executer in Jobs.

The Alert feature in the job allows users to send an alert message to the specified channel (Teams or Slack) in the event of either the success or failure of the configured job. Users can also choose both success and failure options to send an alert for the configured job.

Webhook URL: Provide the Webhook URL of the selected channel group where the Alert message needs to be sent.
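For reference, a webhook alert is typically an HTTP POST of a JSON body to the Webhook URL. The Python sketch below builds such a request with the standard `urllib.request` module without sending it. The `{"text": ...}` payload is the minimal message format Slack incoming webhooks accept; Teams webhooks may require a different card format, and the message the platform itself sends is not documented here. The function name, URL, and job name are placeholders.

```python
import json
import urllib.request


def build_alert_request(webhook_url: str, job_name: str,
                        succeeded: bool) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a job-status alert."""
    status = "succeeded" if succeeded else "failed"
    payload = {"text": f"Job '{job_name}' {status}."}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_alert_request("https://example.com/webhook/placeholder",
                              "daily_sales_job", succeeded=False)
# To actually send it: urllib.request.urlopen(request, timeout=10)
print(request.get_method(), request.full_url, request.data)
```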

Write Python scripts and run them flawlessly in the Jobs.

This feature allows users to write their own Python scripts and run them in the Jobs section of the Data Pipeline module.

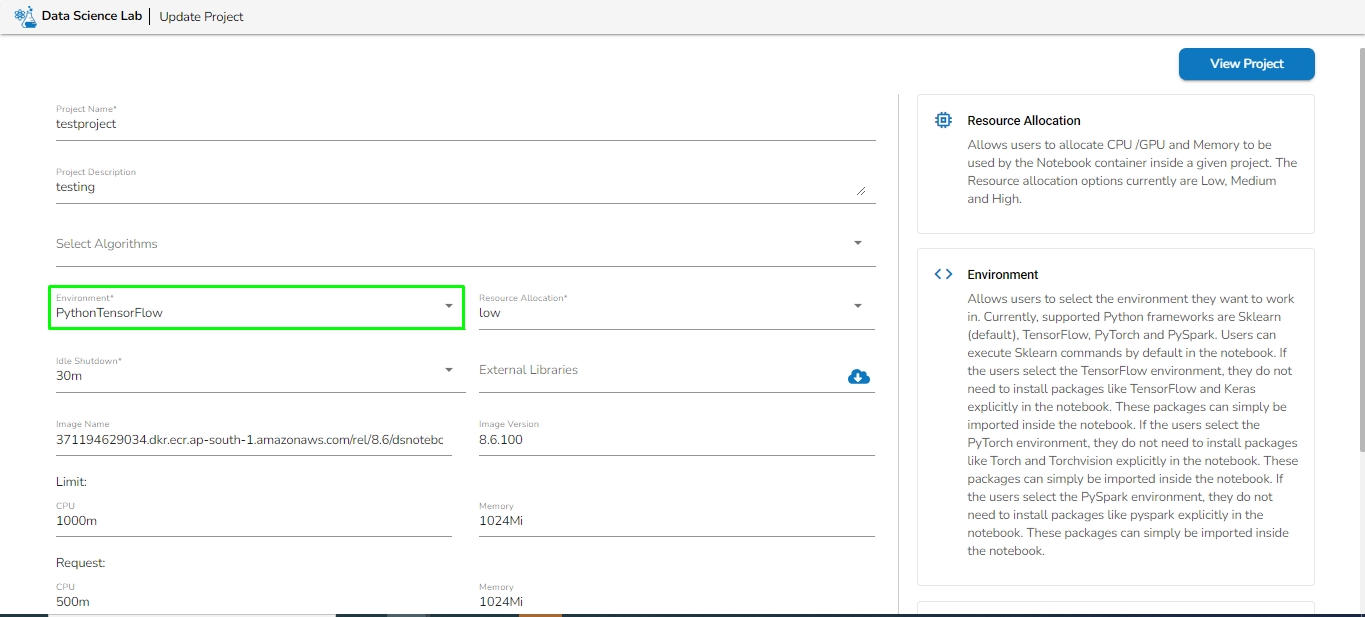

Before creating a Python Job, the user has to create a project in the Data Science Lab module under the Python environment. Refer to the image below:

After creating the Data Science project, the users need to activate it and create a Notebook where they can write their own Python script. Once the script is written, the user must save it and export it to be able to use it in the Python Jobs.

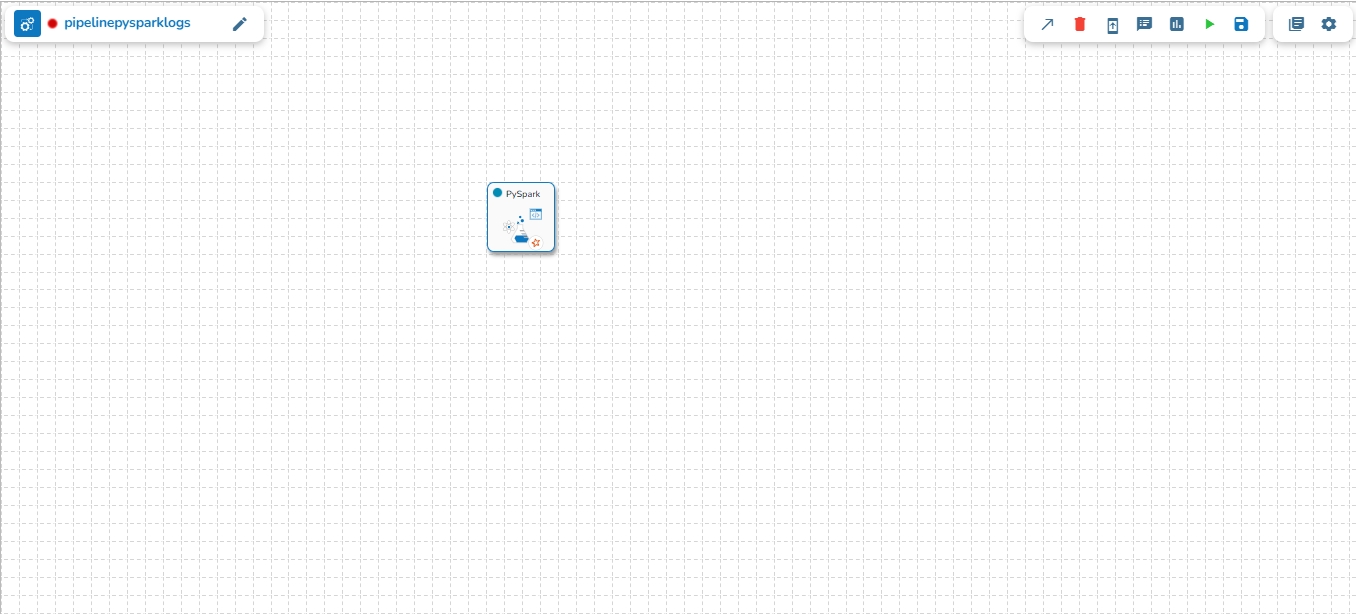

This section provides detailed information on the Jobs to make your data process faster.

Jobs are used to ingest and transfer data from separate sources. The user can transform, unify, and cleanse data to make it suitable for analytics and business reporting without using Kafka topics, which makes the entire flow much faster.

Check out the given demonstration to understand how to create and activate a job.

Navigate to the Data Pipeline homepage.

There is a resource configuration tab while configuring the components.

The Data Pipeline contains an option to configure the resources i.e., Memory & CPU for each component that gets deployed.

There are two component deployment types:

Docker

Spark

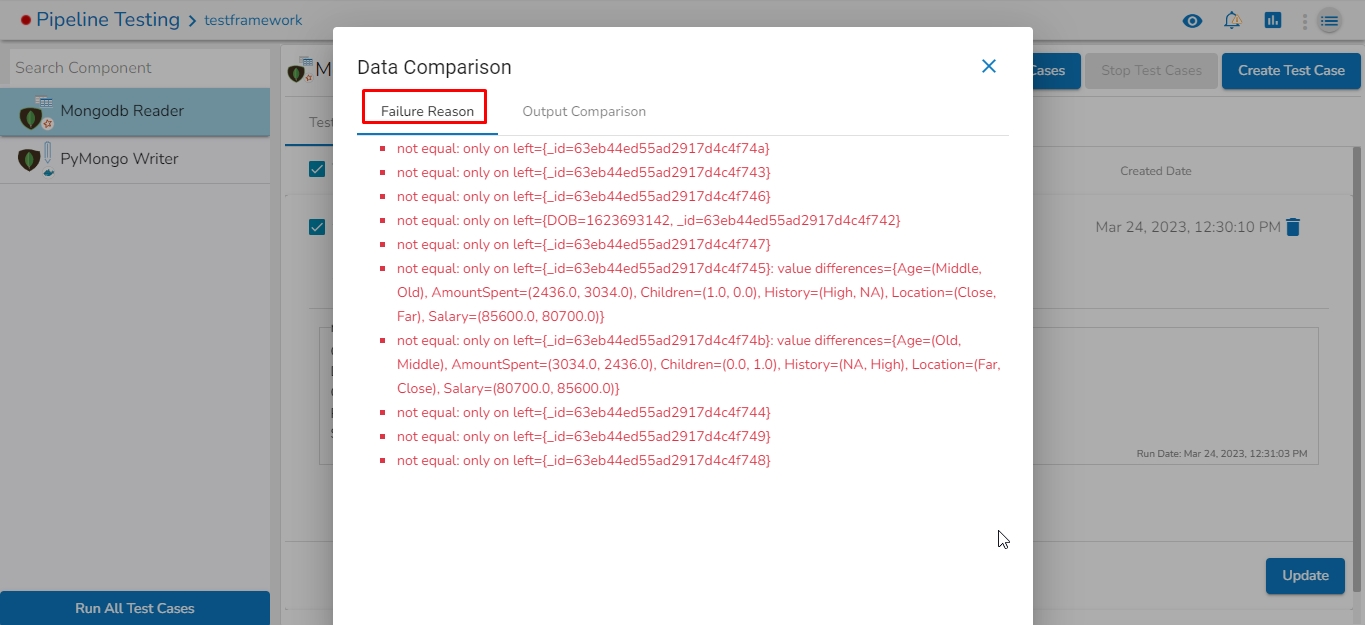

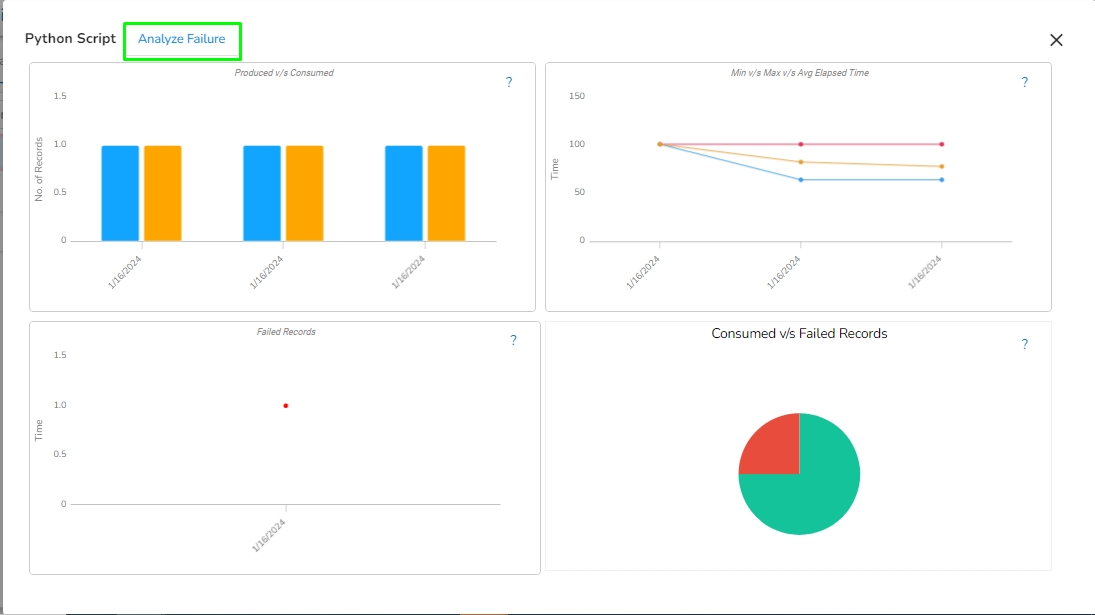

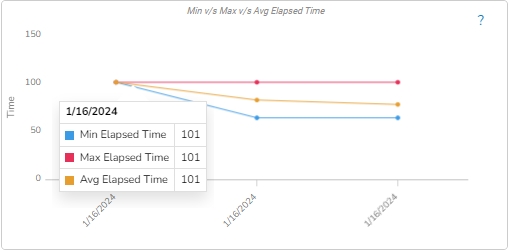

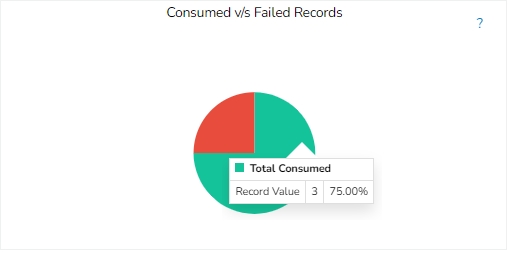

The Failure Analysis page allows users to analyze the reasons for the failure of components used in the pipeline.

Check out the below given walk-through for failure analysis in the Pipeline Workflow editor canvas.

Navigate to the Pipeline Editor page.

Row Tags: Provide the row tags from the XML files.

Join Row Tags: Enable this option to join multiple row tags.

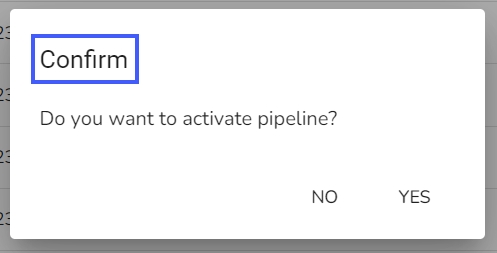

A dialog window opens to confirm the action of pipeline activation.

Click the YES option to activate the pipeline.

A success message appears confirming the activation of the pipeline.

Another success message appears to confirm that the pipeline has been updated.

The Status for the pipeline gets changed on the Pipeline List page.

Limit: Set a limit for the number of records.

Query: Insert an SQL query (queries containing a join statement are supported as well).

CSV: The Header and Infer Schema fields are displayed when CSV is the selected File Type. Enable the Header option to read the header row of the file, and enable the Infer Schema option to infer the actual data types of the columns in the CSV file.

JSON: The Multiline and Charset fields are displayed when JSON is the selected File Type. Enable the Multiline option if JSON records in the file span multiple lines.

PARQUET: No extra field is displayed when PARQUET is the selected File Type.

AVRO: This File Type provides two drop-down menus.

Compression: Select either the Deflate or the Snappy option.

Compression Level: This field appears for the Deflate compression option. It provides levels 0 to 9 via a drop-down menu.

XML: Select this option to read an XML file. If this option is selected, the following fields are displayed:

Infer schema: Enable this option to infer the actual data types of the columns.

Path: Provide the path of the file.

Root Tag: Provide the root tag from the XML files.
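The AVRO Compression Level trades file size for CPU time. Avro's `deflate` codec uses the raw DEFLATE format (RFC 1951), which Python's standard `zlib` module produces when `wbits` is negative, so the sketch below compares levels 0 to 9 on sample data. It only illustrates the level setting and does not write an Avro file; Snappy is not in the standard library, so it is left out. The function names and sample data are hypothetical.

```python
import zlib
from typing import Dict


def deflate(data: bytes, level: int) -> bytes:
    """Compress with raw DEFLATE (no zlib header), the format Avro's deflate codec stores."""
    compressor = zlib.compressobj(level=level, method=zlib.DEFLATED, wbits=-15)
    return compressor.compress(data) + compressor.flush()


def sizes_by_level(data: bytes) -> Dict[int, int]:
    """Compressed size in bytes for each level 0-9 (0 stores data uncompressed)."""
    return {level: len(deflate(data, level)) for level in range(10)}


sample = b'{"id": 1, "city": "Pune", "status": "active"}\n' * 500
for level, size in sizes_by_level(sample).items():
    print(f"level {level}: {size} bytes (original {len(sample)} bytes)")
```

Higher levels spend more CPU searching for matches; on repetitive data the gains above the middle levels are often small.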

Name: Displays the name of the Kafka event in the pipeline.

Event Name: Name of the Kafka event.

Partitions: Number of partitions in the Kafka event.

Messages Count: Displays the number of messages in the Kafka topic.

Retention Period: Displays the retention period of the Kafka topic in hours. The retention period of a Kafka topic determines how long Kafka retains the messages in a topic before deleting them.

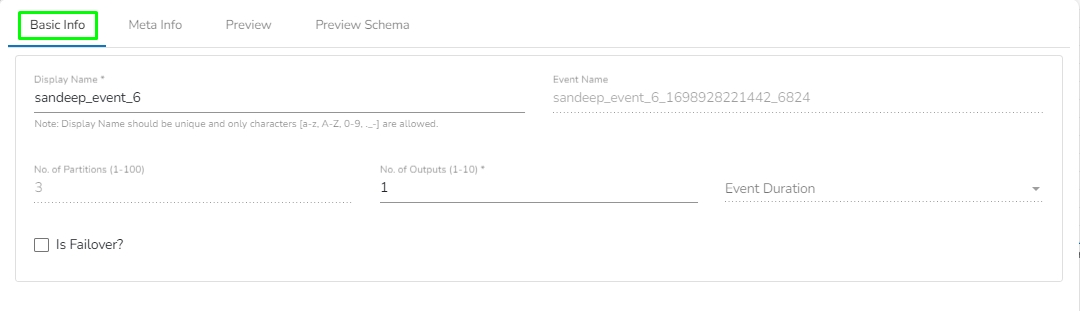

Provide a display name for the event (A default name based on the pipeline name appears for the Event).

Select the Event Duration from the drop-down menu (It can be set from 4 to 168 hours as per the given options).

Select the number of partitions (from 1 to 50).

Select the number of outputs (from 1 to 3). The number of outputs must not exceed the number of partitions.

Enable the Is Failover? option if you wish to create a failover Event.

Click the Add Event option to save the new Event.
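The limits in the New Event dialog can be summarized as a validation function. The Python sketch below is hypothetical (the class and function names are illustrative, not the platform's API), and it treats Event Duration as a range check, whereas the dialog offers fixed options between 4 and 168 hours.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NewEventConfig:
    display_name: str
    duration_hours: int  # Event Duration
    partitions: int      # Number of partitions
    outputs: int         # Number of outputs
    is_failover: bool = False


def validate_event(cfg: NewEventConfig) -> List[str]:
    """Return a list of problems; an empty list means the settings are valid."""
    errors: List[str] = []
    if not cfg.display_name.strip():
        errors.append("display name is required")
    if not 4 <= cfg.duration_hours <= 168:
        errors.append("event duration must be between 4 and 168 hours")
    if not 1 <= cfg.partitions <= 50:
        errors.append("partitions must be between 1 and 50")
    if not 1 <= cfg.outputs <= 3:
        errors.append("outputs must be between 1 and 3")
    elif cfg.outputs > cfg.partitions:
        # Each output needs at least one partition to read from.
        errors.append("outputs must not exceed partitions")
    return errors


print(validate_event(NewEventConfig("orders_event", duration_hours=24, partitions=1, outputs=2)))
```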

Total Allocated Min CPU: Minimum allocated CPU in cores.

Total Allocated Max Memory: Maximum allocated Memory in MB.

Total Allocated Min Memory: Minimum allocated Memory in MB.

Authentication: Select an authentication option using the drop-down list.

Password: Provide a password to authenticate the SFTP component.

PEM/PPK File: Choose a file to authenticate the SFTP component. The user must upload a file if this authentication option is selected.

Reader Path: Enter the path from where the file has to be read.

Channel: Select a channel option from the drop-down menu (the supported channel is SFTP).

Column filter: Select the columns that you want to read. To rename a column, enter the new name in the alias name section; otherwise, keep the alias name the same as the column name. Then select a Column Type from the drop-down menu.

Use the Download Data and Upload File options to select the desired columns.

Upload File: The user can upload the existing system files (CSV, JSON) using the Upload File icon.

Download Data (Schema): Users can download the schema structure in JSON format by using the Download Data icon.
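The Column filter settings (column name, alias name, column type) amount to a select, rename, and cast. The Python sketch below applies such a specification to rows read as strings. The function name, the specification tuple, and the type names in `CASTS` are hypothetical; the actual Column Type drop-down options are not listed here.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical mapping from a Column Type choice to a Python conversion.
CASTS: Dict[str, Callable[[str], object]] = {"string": str, "integer": int, "double": float}


def filter_columns(rows: List[Dict[str, str]],
                   spec: List[Tuple[str, str, str]]) -> List[Dict[str, object]]:
    """Keep only the columns in `spec`, renamed to their alias and cast to their type.

    Each spec entry is (column name, alias name, column type); an empty alias
    keeps the original column name.
    """
    return [
        {(alias or name): CASTS[col_type](row[name]) for name, alias, col_type in spec}
        for row in rows
    ]


rows = [{"emp_id": "7", "emp_name": "Ravi", "dept": "HR"}]
spec = [("emp_id", "emp_id", "integer"), ("emp_name", "name", "string")]
print(filter_columns(rows, spec))
```

Columns not listed in the specification (here `dept`) are dropped, which is what selecting only the desired columns achieves.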

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Is Date Rich True: Enable this option if any fields in the source data contain date or time information. The date rich feature in Elasticsearch allows for advanced querying and filtering of documents based on date or time ranges, as well as date arithmetic operations.

Username: Enter the username for Elasticsearch.

Password: Enter the password for Elasticsearch.

Query: Provide a Spark SQL query.

Follow these steps to create a Python Job using the Register feature:

Create a project in the DSLab module under the Python environment. Refer to the image below for guidance.

Once the project is created, create a Notebook and write the scripts. A script can span multiple code cells.

After writing the script, select the Register option from the Notebook options, as shown in the following image.

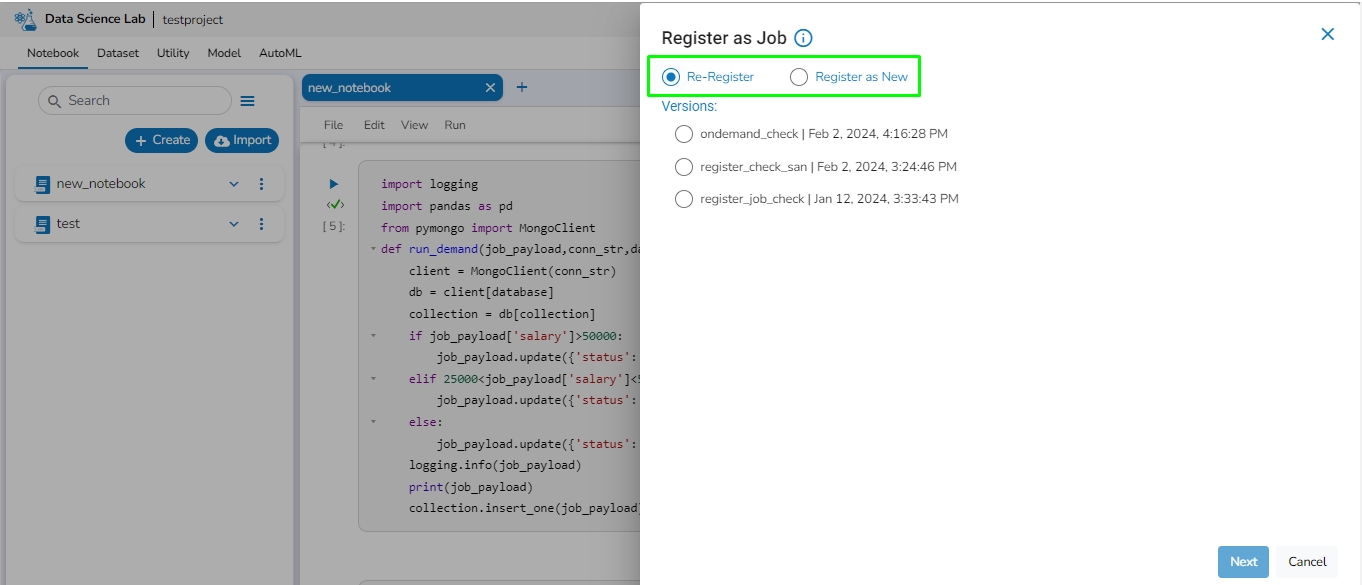

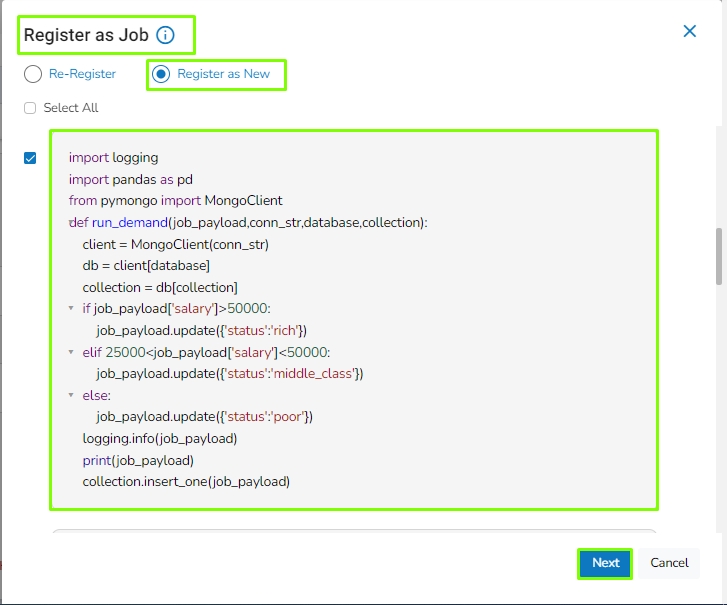

The Register as Job panel will open on the right side of the window with two options:

Register as New: This will create a new Job.

Re-Register: If the Job has already been created and the user wants to change the scripts, this option updates the existing Job with the latest changes.

Go through the walkthrough below to register a new Job.

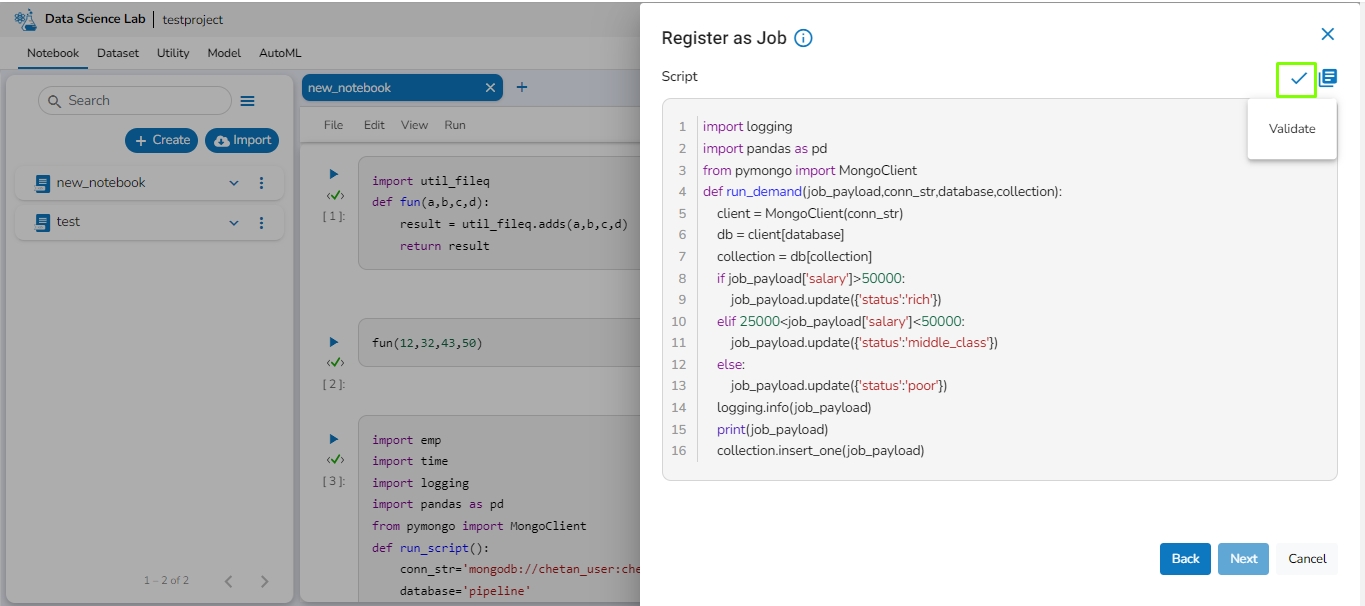

After clicking the Register option and selecting Register as New, choose the notebook cells that the Job should execute. Multiple cells can be selected as required. Then click Next.

Validate the script of the selected cells by clicking the Validate option. Additionally, select any External Libraries used in the project by clicking the External Libraries option next to Validate. The Next option stays disabled until the script is validated. After validating the script, click Next.
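What the Validate step checks internally is not described here. As an analogous check, assumed rather than confirmed to match the platform, the Python sketch below joins the selected cells, confirms they parse as Python with the standard `ast` module, and lists the top-level functions, which are the natural candidates for the Start function chosen on the next screen. `validate_cells` is a hypothetical name.

```python
import ast


def validate_cells(cells: list[str]) -> list[str]:
    """Join the selected notebook cells in order, check that the combined
    script parses, and return the names of its top-level functions.

    Raises SyntaxError if the combined script is not valid Python.
    """
    source = "\n\n".join(cells)
    tree = ast.parse(source)
    return [
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]


cells = [
    "import math\n\ndef area(r):\n    return math.pi * r * r",
    "def main(radius=1.0):\n    print(area(radius))",
]
print(validate_cells(cells))  # ['area', 'main']
```

Note that a script which parses can still fail at run time (for example, on a missing library), which is why the External Libraries selection matters.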

Enter scheduler name: Name of the Job. (When creating a Python Job, this creates a scheduled Job.)

Scheduler description: Description of the Job.

Start function: Select the function in the validated script from which the Job starts execution.

Job BaseInfo: Python (This field will be pre-selected if the DSLab project is created under the Python environment.)

Docker config: Select the desired configuration for the job and provide the resources (CPU & Memory) for executing the job.

Provide the resources required to run the Python Job in the Limit and Request section.

Limit: Enter the maximum CPU and Memory required for the Python Job.

Request: Enter the CPU and Memory required for the job at the start.

Instances: Enter the number of instances for the Python Job.
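One way to sanity-check these values before saving is sketched below in Python. It assumes Kubernetes-style semantics, where a request must not exceed its limit; this document describes Request and Limit but does not state that rule, and the class and function names are hypothetical. Units follow the document's convention of CPU in cores and Memory in MB.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu_cores: float
    memory_mb: int


@dataclass
class PythonJobResources:
    """Hypothetical model of the Limit, Request and Instances fields."""
    limit: Resources
    request: Resources
    instances: int = 1


def check_resources(cfg: PythonJobResources) -> list[str]:
    """Return a list of problems; an empty list means the values look consistent."""
    errors = []
    # Assumption: as in Kubernetes, a request may not exceed its limit.
    if cfg.request.cpu_cores > cfg.limit.cpu_cores:
        errors.append("requested CPU exceeds the CPU limit")
    if cfg.request.memory_mb > cfg.limit.memory_mb:
        errors.append("requested memory exceeds the memory limit")
    if cfg.instances < 1:
        errors.append("at least one instance is required")
    return errors


cfg = PythonJobResources(
    limit=Resources(cpu_cores=2, memory_mb=2048),
    request=Resources(cpu_cores=0.5, memory_mb=512),
    instances=1,
)
print(check_resources(cfg))  # []
```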

On demand: Check this option if a Python Job (On demand) needs to be created. In this scenario, the Job will not be scheduled.

Payload: This option appears only if the On demand option is checked. Enter the payload as a JSON array containing JSON objects. For more details about the Python Job (On demand), refer to this link:
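The Payload field expects a JSON array whose elements are JSON objects. The Python sketch below checks that shape with the standard `json` module before the value is pasted into the field. The keys `customer_id` and `region` are made-up examples; how the Job consumes the payload is covered by the On demand documentation, not by this sketch.

```python
import json


def parse_payload(text: str) -> list[dict]:
    """Parse an On demand payload and confirm it is a JSON array of JSON objects."""
    data = json.loads(text)
    if not isinstance(data, list) or not all(isinstance(item, dict) for item in data):
        raise ValueError("payload must be a JSON array of JSON objects")
    return data


payload = '[{"customer_id": 101, "region": "EU"}, {"customer_id": 102, "region": "US"}]'
print(len(parse_payload(payload)))  # 2
```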

Concurrency Policy: Select the desired concurrency policy. For more details about the Concurrency Policy, check this link:

Alert: This feature sends an alert message to the specified channel (Teams or Slack) when the configured Job succeeds or fails. Users can select both the success and failure options to receive alerts in either case. Check the following link to configure the Alert:

Click the Save option to create the Job.

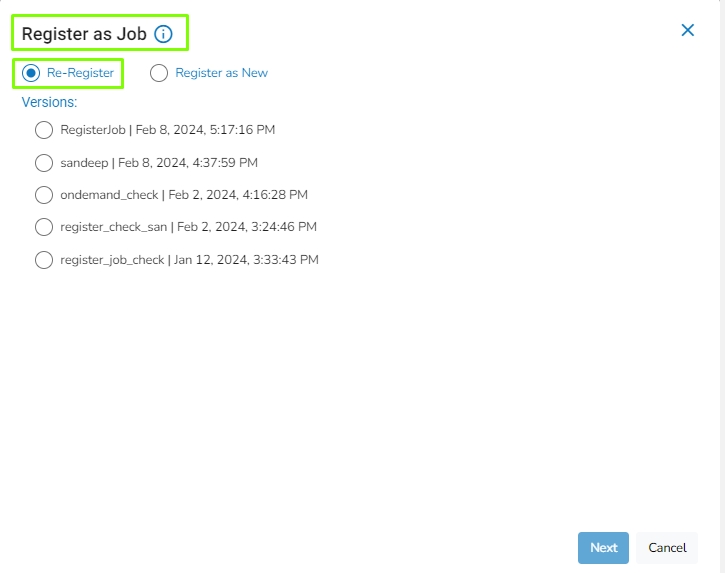

This feature updates an existing Job with the latest changes. Select the Re-Register option if the Job has already been created and the user wants to change the scripts.

After selecting the Re-Register option, the system displays all Jobs previously registered from the chosen notebook.

The user must choose the Job to be Re-Registered with the latest changes and proceed by clicking Next.

Validate the script of the selected cell by clicking on the Validate option. Additionally, the user can choose External Libraries by clicking on the option next to Validate. The Next option remains disabled until the script is validated.

After script validation, proceed by clicking Next.

Now, the user needs to provide all the required information for Re-registering the job, following the same steps outlined in the Register as New feature.

Finally, click on the Save option to complete the Re-Registration of the job.

Region: Enter the region name where the bucket is located.

Access Key: Enter the AWS Access Key of the account that must be used.

Secret Key: Enter the AWS Secret Key of the account that must be used.

Table Name: Enter the name of the external table created in Athena.

Database Name: Name of the database in Athena in which the table has been created.

Limit: Enter the number of records to be read from the table.

Data Source: Enter the Data Source name configured in Athena. Data Source in Athena refers to your data's location, typically an S3 bucket.

Workgroup: Enter the Workgroup name configured in Athena. Workgroups in Athena separate query execution and query history between users, teams, or applications running under the same AWS account.

Query location: Enter the path where Athena saves the results of queries run in the Athena query editor, in CSV format. This path is shown under the Settings tab of the Athena query editor as Query Result Location.

Query: Enter the Spark SQL query.

Sample Spark SQL query that can be used in Athena Reader:

Type: Message Card. (This field is pre-filled.)

Theme Color: Enter the hexadecimal color code for the ribbon color in the selected channel. Refer to the image at the bottom of this page.

Sections: This tab contains the following fields:

Activity Title: The title of the alert to be sent to the Teams channel. Enter the Activity Title as required.

Activity Subtitle: Enter the Activity Subtitle. Refer to the image at the bottom of this page.

Text: Enter the text message to be sent with the alert.

Webhook URL: Provide the Webhook URL of the selected channel group where the alert message needs to be sent.
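The Type, Theme Color, Sections and Webhook URL fields correspond closely to the legacy Office 365 connector "MessageCard" format used by Teams incoming webhooks. The Python sketch below builds such a card and posts it with the standard library; it illustrates the payload shape and is not necessarily how the platform sends its alerts. The function names and sample values are hypothetical, and stripping a leading `#` from the color is a precaution, since MessageCard examples give `themeColor` as bare hex digits.

```python
import json
import urllib.request


def build_teams_card(theme_color: str, title: str, subtitle: str, text: str) -> dict:
    """Build a legacy MessageCard payload from the Sections fields."""
    return {
        "@type": "MessageCard",
        "@context": "http://schema.org/extensions",
        "summary": title,
        "themeColor": theme_color.lstrip("#"),  # e.g. "FF5733"
        "sections": [
            {"activityTitle": title, "activitySubtitle": subtitle, "text": text}
        ],
    }


def post_json(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


card = build_teams_card("#FF5733", "Job failed", "Nightly load", "Check the Job logs.")
# post_json("<your Teams webhook URL>", card)  # requires a real webhook URL
```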

Attachments: This tab contains the following fields:

Title: The title of the alert to be sent to the selected channel. Enter the Title as required.

Color: Enter the hexadecimal color code for the ribbon color in the Slack channel. Refer to the image at the bottom of this page.

Text: Enter the text message to be sent with the alert.

Footer: The footer is additional content appended at the end of a message in a Slack channel, such as a signature, contact information, or other supplementary details that give the message context.

Footer Icon: The footer icon is an icon or image displayed at the bottom of a Slack message or attachment, such as a company logo, an application icon, or any other image that represents the entity responsible for the message. Enter the image URL as the value of Footer Icon.

Follow these steps to set the Footer Icon in Slack:

Open the image to be used as the footer icon.

Sample image URL for Footer icon:

Sample hexadecimal color codes that can be used in Job Alerts:
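Similarly, the Attachments fields map onto Slack's legacy message attachment properties (`color`, `title`, `text`, `footer`, `footer_icon`) sent to an incoming webhook. The Python sketch below assembles such a message and validates the color as a `#RRGGBB` code; it is an illustration, not the platform's implementation, and the icon URL is a placeholder.

```python
import json
import re
import urllib.request

HEX_COLOR = re.compile(r"#[0-9A-Fa-f]{6}")


def build_slack_message(color: str, title: str, text: str,
                        footer: str, footer_icon: str) -> dict:
    """Build an incoming-webhook message with one legacy attachment."""
    if not HEX_COLOR.fullmatch(color):
        raise ValueError(f"expected a #RRGGBB hex color, got {color!r}")
    return {
        "attachments": [
            {
                "color": color,
                "title": title,
                "text": text,
                "footer": footer,
                "footer_icon": footer_icon,  # URL of the image shown in the footer
            }
        ]
    }


def post_json(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


message = build_slack_message(
    "#36A64F", "Job succeeded", "Nightly load finished.", "Data Pipeline",
    "https://example.com/logo.png",  # placeholder image URL
)
# post_json("<your Slack webhook URL>", message)  # requires a real webhook URL
```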

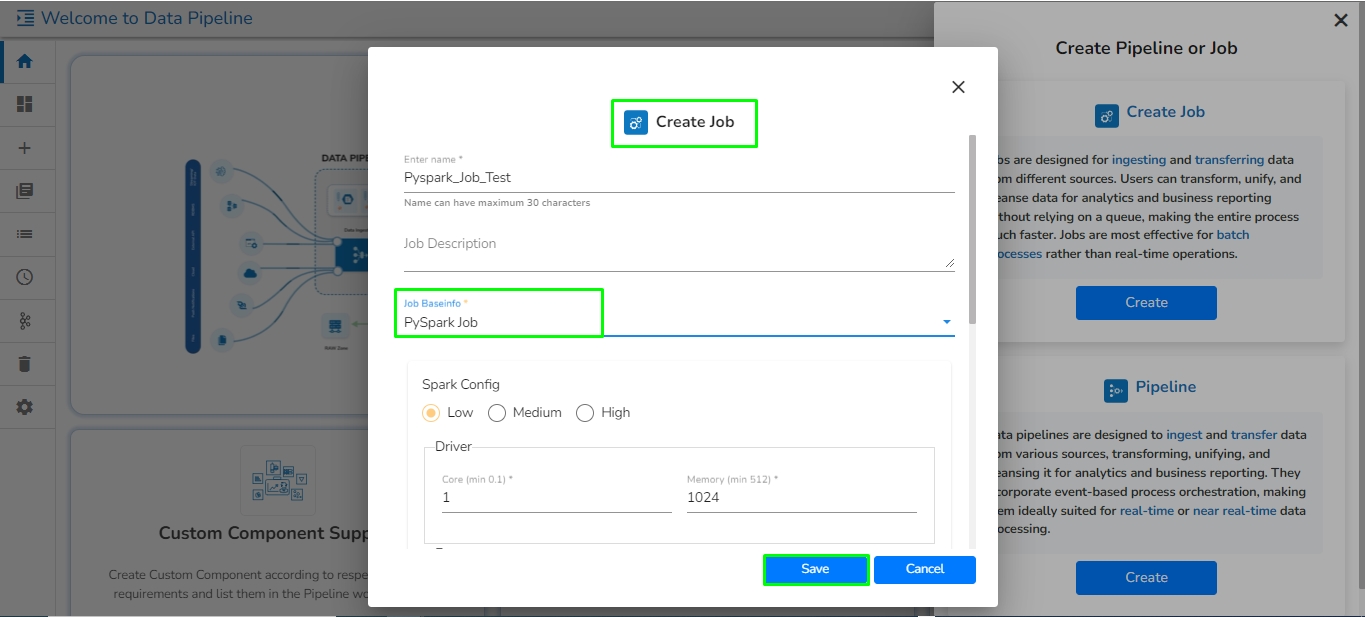

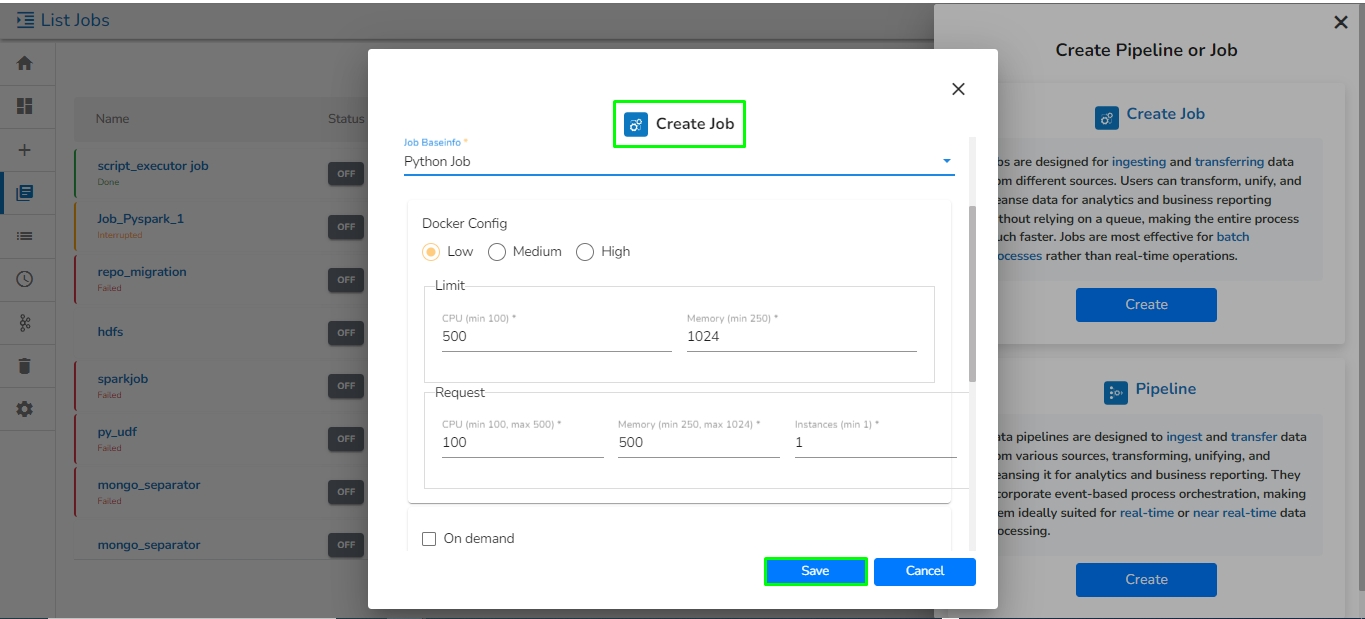

Navigate to the Data Pipeline module homepage.

Open the pipeline homepage and click on the Create option.

A new panel opens on the right-hand side. Click the Create button in the Job option.

Enter a name for the new Job.

Describe the Job (Optional).

Job Baseinfo: Select Python Job from the drop-down.

Trigger By: A Job can be triggered by the success or failure of another Job. There are two options:

Success Job: The current Job is triggered when the selected Job executes successfully.

Failure Job: The current Job is triggered when the selected Job fails.

Is Scheduled?

A Job can be scheduled for a particular time, and it is triggered every time that scheduled time recurs.

Jobs must be scheduled in UTC.
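Because the schedule is interpreted in UTC, a local run time has to be converted first. The Python sketch below does this with the standard `datetime` module. It uses a fixed UTC+05:30 offset as an example; for time zones with daylight saving time, `zoneinfo.ZoneInfo` (Python 3.9+) should be used instead, because a fixed offset ignores DST changes.

```python
from datetime import datetime, timedelta, timezone


def local_to_utc(local_dt: datetime) -> datetime:
    """Convert a timezone-aware local datetime to UTC."""
    if local_dt.tzinfo is None:
        raise ValueError("local_dt must include a timezone")
    return local_dt.astimezone(timezone.utc)


# Example offset: UTC+05:30 (no daylight saving time).
UTC_PLUS_0530 = timezone(timedelta(hours=5, minutes=30))

run_at = local_to_utc(datetime(2024, 1, 15, 9, 30, tzinfo=UTC_PLUS_0530))
print(run_at.strftime("%H:%M UTC"))  # 04:00 UTC
```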

On demand: Check the "On demand" option to create an on-demand Job. For more information on Python Job (On demand), refer to this link:

Docker Configuration: Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

Provide the resources required to run the Python Job in the Limit and Request section.

Limit: Enter the maximum CPU and Memory required for the Python Job.

Request: Enter the CPU and Memory required for the job at the start.

Alert: Refer to the alert configuration page to configure alerts for the Job.

Click the Save option to save the Python Job.

The Python Job is saved, and the user is redirected to the Job Editor workspace.

Check out the demonstration below to configure a Python Job.

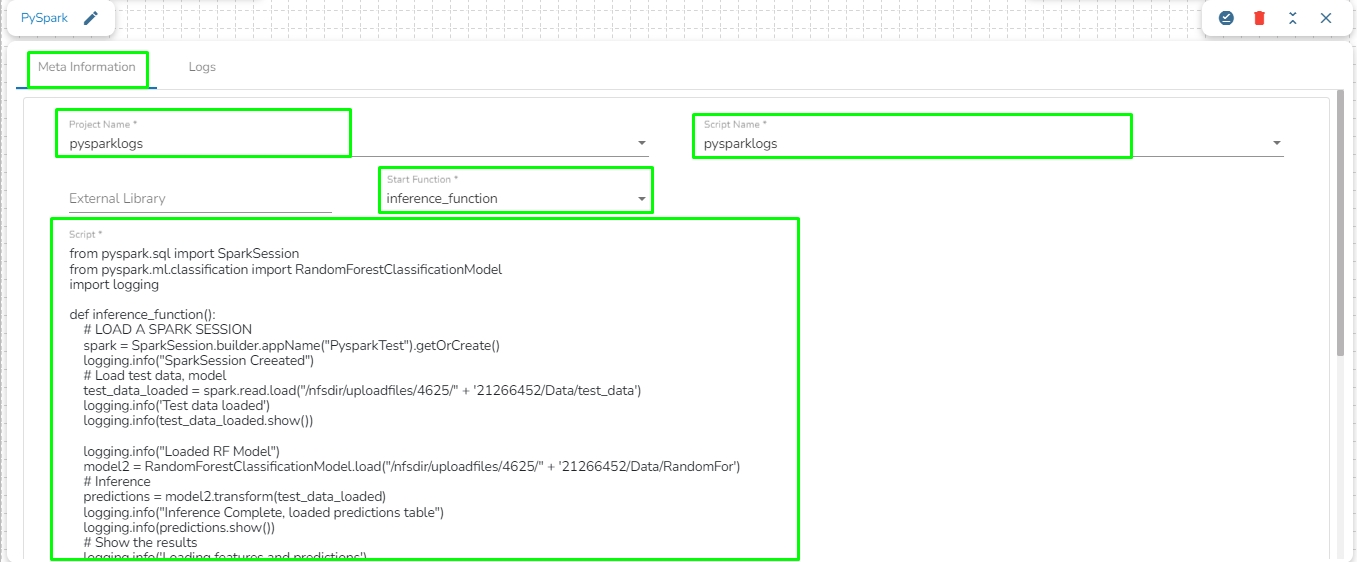

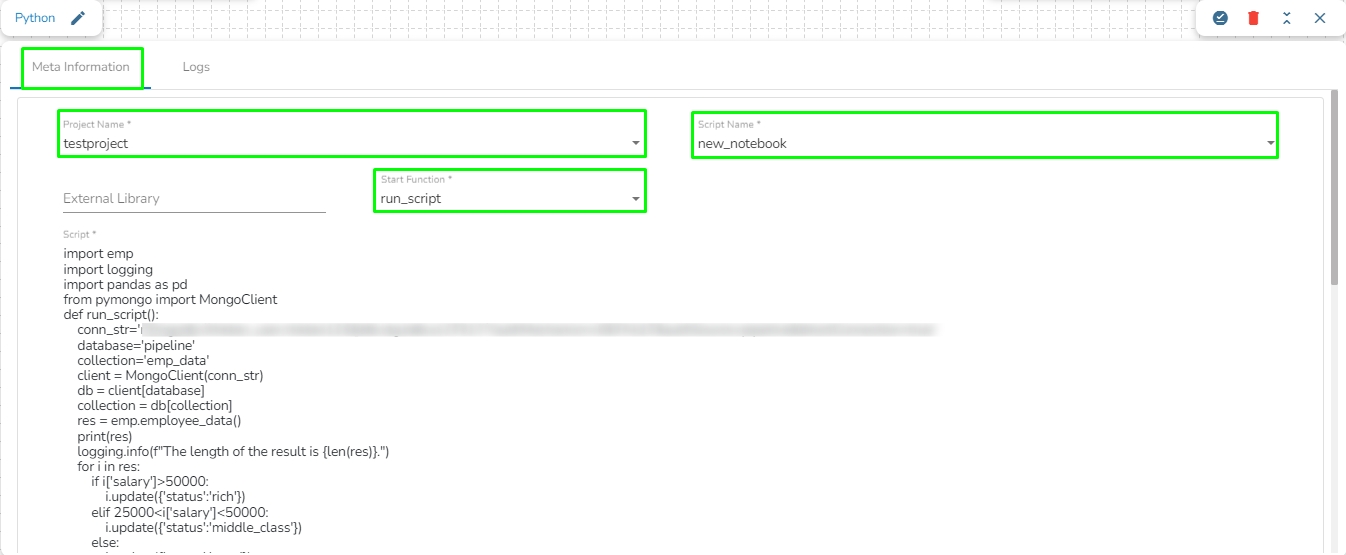

Once the Python Job is created, follow these steps to configure the Meta Information tab of the Python Job.

Project Name: Using the drop-down menu, select the Project in which the Notebook was created.

Script Name: This field lists the names of Notebooks exported from the Data Science Lab module to Data Pipeline.

External Library: If the script uses any external libraries, list them here. Separate multiple library names with commas (,).

Start Function: All function names used in the script are listed here. Select the start function to execute the Python script.

Script: The exported script appears in this space.

Input Data: If the function takes parameters, enter each parameter name as a Key and its value as the Value in this field.
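In effect, each Key/Value pair is passed to the start function as a keyword argument. The Python sketch below models that mapping; `main` stands in for a start function and `run_start_function` is a hypothetical helper, not the platform's runner. Values entered in a form may arrive as strings, so the sketch assumes the function converts them itself.

```python
import inspect


def main(table_name, row_limit="10"):
    """Stand-in start function; row_limit arrives as a string and is converted here."""
    return f"reading {int(row_limit)} rows from {table_name}"


def run_start_function(func, input_data: dict):
    """Call func with the Input Data Key/Value pairs as keyword arguments.

    Unknown keys are rejected up front (assumes func has no **kwargs parameter).
    """
    accepted = inspect.signature(func).parameters
    unknown = sorted(set(input_data) - set(accepted))
    if unknown:
        raise TypeError(f"unknown input keys: {unknown}")
    return func(**input_data)


print(run_start_function(main, {"table_name": "orders", "row_limit": "5"}))
# reading 5 rows from orders
```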

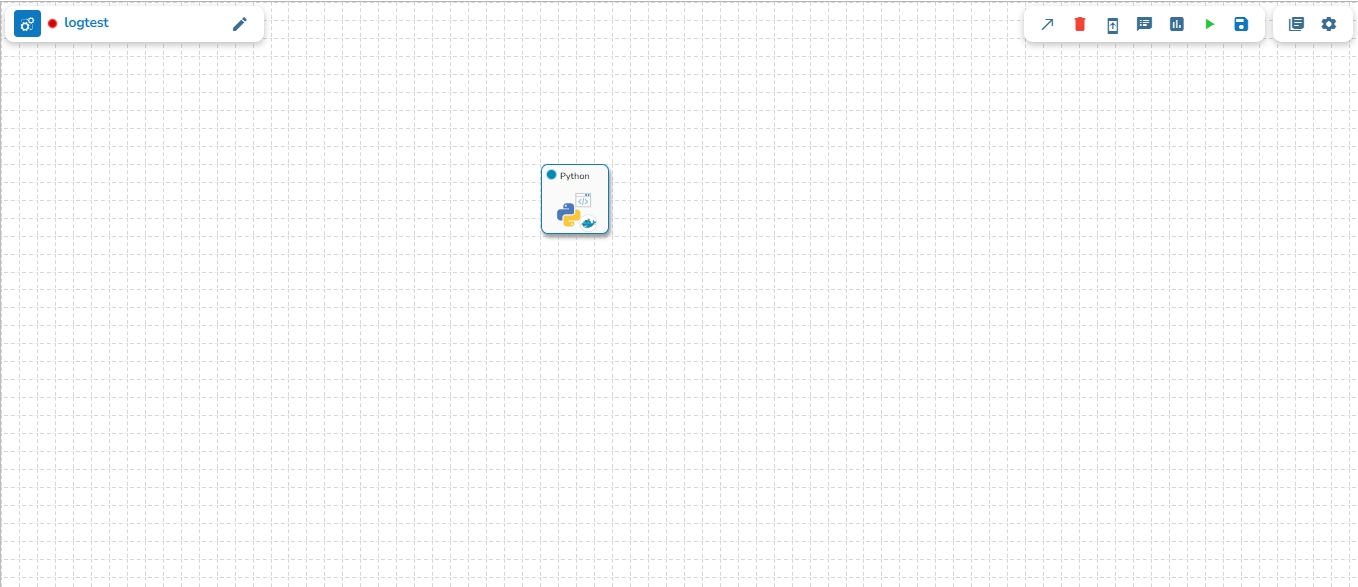

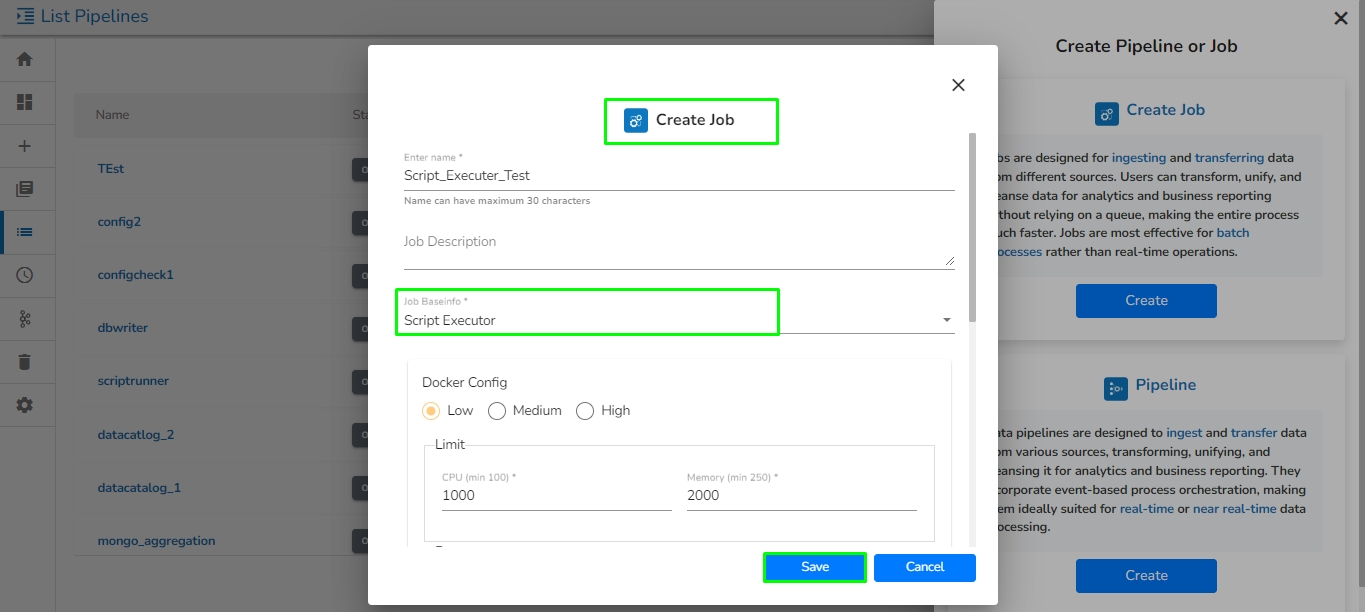

Click on the Create icon.

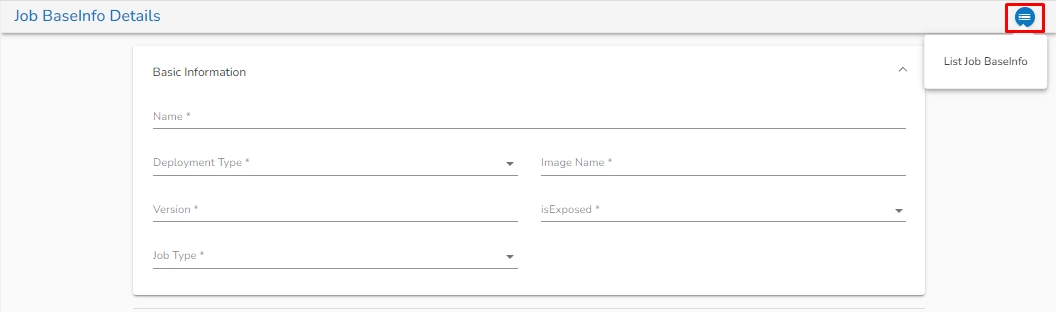

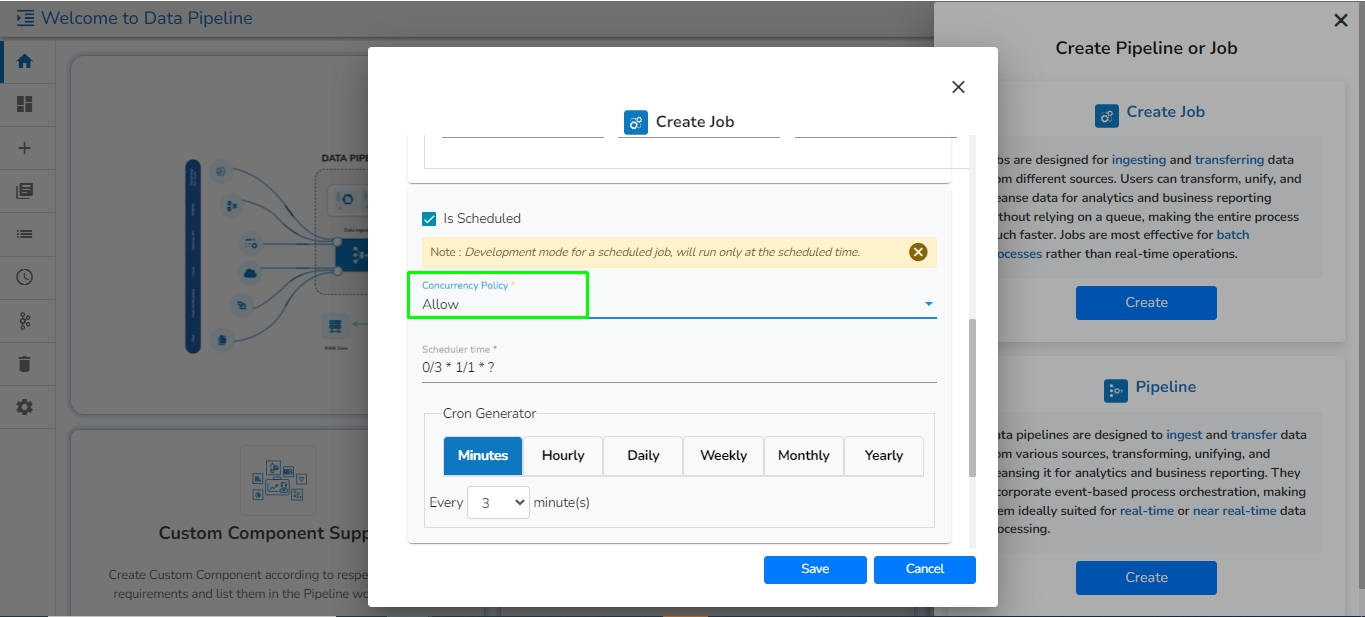

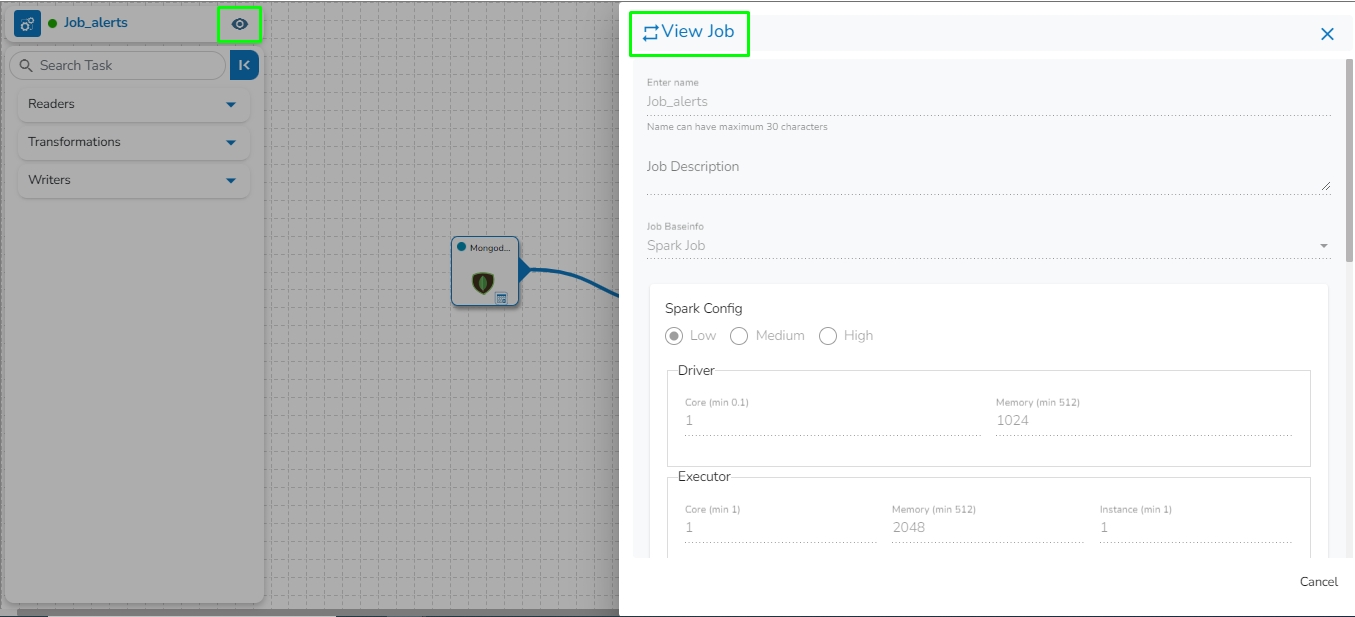

Navigate to the Create Pipeline or Job interface.

The New Job dialog box appears, redirecting the user to create a new Job.

Enter a name for the new Job.

Describe the Job (Optional).

Job Baseinfo: In this field, there are the following options:

Trigger By: A Job can be triggered by the success or failure of another Job. There are two options:

Success Job: The current Job is triggered when the selected Job executes successfully.

Failure Job: The current Job is triggered when the selected Job fails.

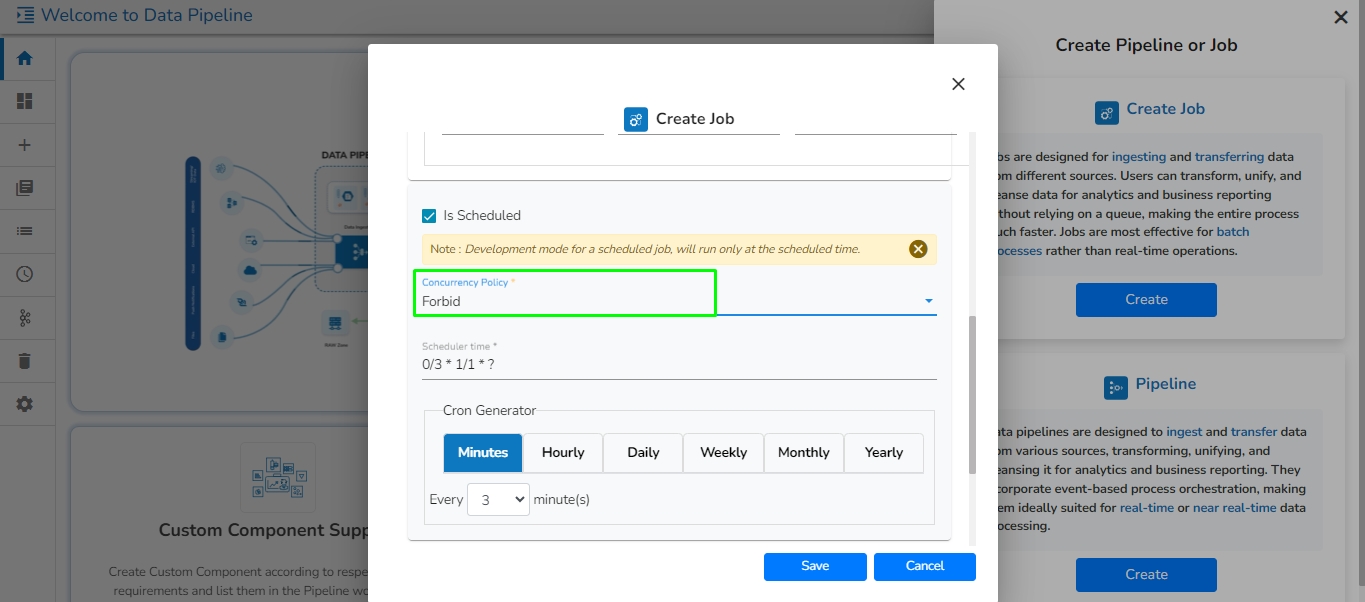

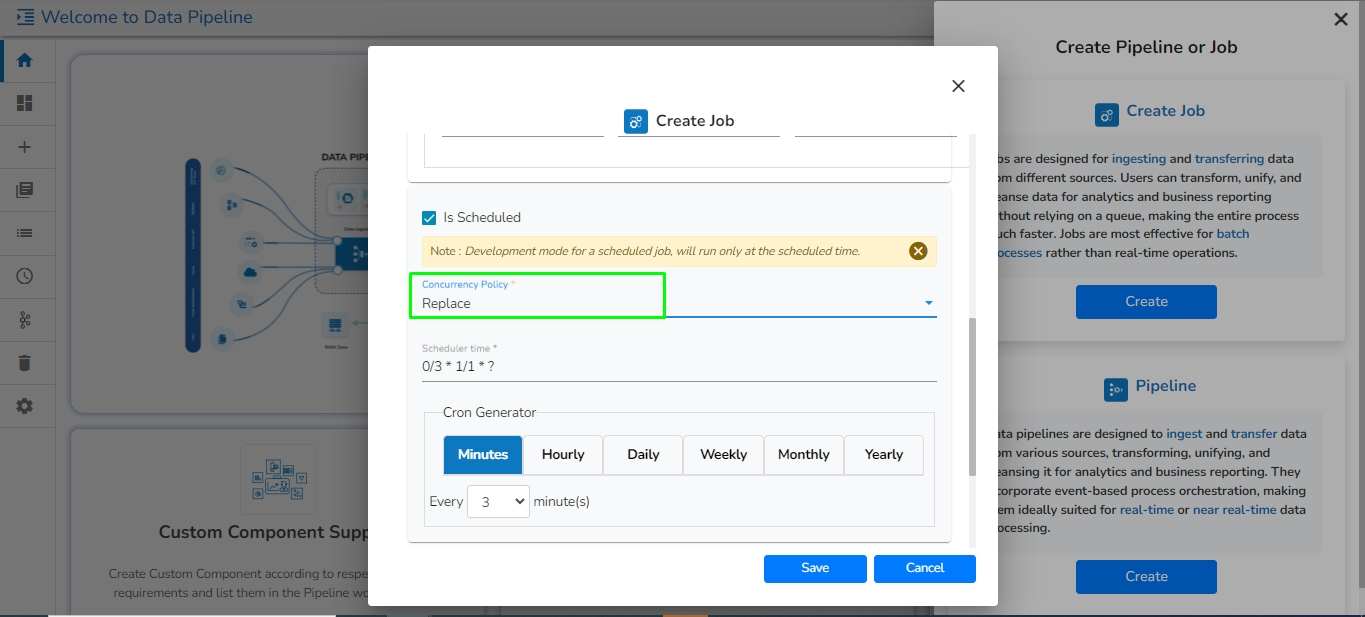

Is Scheduled?

A Job can be scheduled for a particular time, and it is triggered every time that scheduled time recurs.

Jobs must be scheduled in UTC.

Concurrency Policy: The concurrency policy determines what happens when a scheduled run of a Job becomes due while a previous run of the same Job is still in progress.

There are three concurrency policies available:

Allow: If the previous run is not completed before the next scheduled time, the next run starts in parallel with the previous runs.

Forbid: If the previous run is not completed before the next scheduled time, the next run waits until all previous runs are completed.

Replace: If the previous run is not completed before the next scheduled time, the previous run is terminated and the new run starts processing.
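The three policies can be summarised as a small decision function. The Python sketch below models the behavior exactly as described above at the moment a new run becomes due; it is a hypothetical model for illustration, not the scheduler's code, and run identifiers are plain strings.

```python
from enum import Enum


class ConcurrencyPolicy(Enum):
    ALLOW = "Allow"
    FORBID = "Forbid"
    REPLACE = "Replace"


def on_schedule_tick(policy: ConcurrencyPolicy, running: list[str],
                     new_run: str) -> tuple[list[str], list[str]]:
    """Return (running, waiting) after new_run becomes due.

    running: runs of this Job still in progress at the scheduled time.
    """
    if not running:
        return [new_run], []                # nothing to conflict with
    if policy is ConcurrencyPolicy.ALLOW:
        return running + [new_run], []      # runs in parallel with previous runs
    if policy is ConcurrencyPolicy.FORBID:
        return list(running), [new_run]     # waits until previous runs complete
    if policy is ConcurrencyPolicy.REPLACE:
        return [new_run], []                # previous runs are terminated
    raise ValueError(f"unknown policy: {policy}")


for policy in ConcurrencyPolicy:
    print(policy.value, on_schedule_tick(policy, ["run-1"], "run-2"))
# Allow (['run-1', 'run-2'], [])
# Forbid (['run-1'], ['run-2'])
# Replace (['run-2'], [])
```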

Spark Configuration

Select a resource allocation option using the radio button. The given choices are:

Low

Medium

High

Alert: There are two options for sending an alert:

Success: On successful execution of the configured Job, the alert is sent to the selected channel.

Failure: On failure of the configured Job, the alert is sent to the selected channel.

Go through the given link to configure the Alerts in a Job:

Click the Save option to create the job.

A success message appears to confirm the creation of a new job.

The Job Editor page opens for the newly created job.

When the component and pipeline are saved, the component is saved with the pipeline's default resource configuration (Low, Medium, or High). After the pipeline is saved, the Configuration tab becomes available in the component. It contains the following:

Docker components require Request and Limit configurations.

The users can configure the CPU and Memory options.

CPU: Specify the number of CPU cores to assign to the component.

Memory: Specify how much memory to dedicate to the component.

Instances: The number of instances used for parallel processing. If N instances are specified, N pods are deployed.

Spark components allow a partition factor to be set in the Basic Information tab. This setting is critical for running Spark jobs in parallel.

The following example illustrates this:

If the users need to run 10 parallel Spark processes to write data and the input Kafka topic has 5 partitions, they must set the partition factor to 2 (5 × 2 = 10 jobs). For this to work, the number of cores multiplied by the number of instances must also equal 10 (for example, 2 cores × 5 instances = 10 jobs).
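The arithmetic in this example can be checked with a few lines of Python. The sketch assumes the target parallelism is an exact multiple of the Kafka partition count, as in the example; the function names are hypothetical.

```python
def partition_factor(target_parallelism: int, kafka_partitions: int) -> int:
    """Partition factor needed so that kafka_partitions * factor == target_parallelism."""
    if kafka_partitions < 1 or target_parallelism % kafka_partitions != 0:
        raise ValueError("target parallelism must be a positive multiple of the partition count")
    return target_parallelism // kafka_partitions


def cores_match(cores: int, instances: int, target_parallelism: int) -> bool:
    """True when cores * instances provides exactly the target parallelism."""
    return cores * instances == target_parallelism


print(partition_factor(10, 5))  # 2
print(cores_match(2, 5, 10))    # True
```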

The configuration of Spark components differs slightly from that of Docker components. When a Spark component is deployed, two types of pods come up:

Driver

Executor

Provide the Driver and Executor configurations separately.

Instances: The number of instances used for parallel processing. If N instances are specified in the Executor configuration, N executor pods are deployed.
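To estimate the total resources a Spark component will occupy, the driver and executor figures can be combined as sketched below in Python. This assumes one driver pod plus N executor pods, each with the configured CPU and memory, and ignores any memory overhead Spark may add on top of the configured values; `PodConfig` and `spark_totals` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PodConfig:
    cpu_cores: float
    memory_mb: int


def spark_totals(driver: PodConfig, executor: PodConfig, executor_instances: int) -> PodConfig:
    """Total CPU and memory for one driver pod plus N executor pods."""
    return PodConfig(
        cpu_cores=driver.cpu_cores + executor_instances * executor.cpu_cores,
        memory_mb=driver.memory_mb + executor_instances * executor.memory_mb,
    )


print(spark_totals(PodConfig(1, 1024), PodConfig(2, 2048), executor_instances=3))
# PodConfig(cpu_cores=7, memory_mb=7168)
```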

Click the Failure Analysis icon.

The Failure Analysis page opens.

Search Component: Use the search bar to find a specific component associated with the pipeline by entering its name.

Component Panel: Displays all the components associated with the pipeline.

Filter: By default, the instance ID of the selected component is displayed in the filter field, and records are displayed based on that instance ID. The failure data is filtered according to the applied filter.

Note the filter format for the following field types:

| Field Value Type | Filter Format |
| --- | --- |
| String | `data.data_desc:"ignition"` |
| Integer | `data.data_id:35` |
| Float | `data.lng:95.83467601` |
| Boolean | `data.isActive:true` |

Project: By default, the pipeline_Id and _id fields are selected from the records. To include or exclude a field, set it to 1 (include) or 0 (exclude); only the selected columns are displayed.

Sort: By default, records are displayed in descending order of the "_id" field. Users can switch to ascending order by choosing the Ascending option.

Limit: By default, 10 records are displayed. Users can change the record limit as required, up to a maximum of 1000.

Find: Click the Find button to filter, sort, and limit the records and project the selected fields.

Reset: Click the Reset button to reset all fields to their default values.

Cause: Click any failed record to display the cause of the failure.
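The Filter, Project, Sort and Limit options behave much like a document-database query. The Python sketch below reproduces them over in-memory records to make the semantics concrete: it parses filters written in the formats shown in the table (using `json.loads` for the value, so strings must be double-quoted and booleans lowercase), keeps or drops top-level fields with 1/0 flags, sorts on `_id` (descending by default) and caps the limit at 1000. It is a hypothetical model, not the Failure Analysis implementation, and the sample records are invented.

```python
import json

_MISSING = object()


def parse_filter(expr: str):
    """Split 'data.field:value' into a key path and a typed value."""
    path, sep, raw = expr.partition(":")
    if not sep:
        raise ValueError(f"expected 'field:value', got {expr!r}")
    # json.loads turns "ignition" into str, 35 into int, 95.8 into float, true into bool.
    return path.strip().split("."), json.loads(raw.strip())


def get_path(record: dict, path: list):
    """Follow a dotted key path into nested dicts; return _MISSING if absent."""
    value = record
    for key in path:
        if not isinstance(value, dict) or key not in value:
            return _MISSING
        value = value[key]
    return value


def find(records, filter_expr=None, projection=None, ascending=False, limit=10):
    """Filter, sort by _id, limit, then project top-level fields (1 = include, 0 = exclude)."""
    if not 1 <= limit <= 1000:
        raise ValueError("limit must be between 1 and 1000")
    rows = list(records)
    if filter_expr:
        path, value = parse_filter(filter_expr)
        rows = [r for r in rows if get_path(r, path) == value]
    rows = sorted(rows, key=lambda r: r["_id"], reverse=not ascending)[:limit]
    if projection:
        include = {f for f, flag in projection.items() if flag == 1}
        exclude = {f for f, flag in projection.items() if flag == 0}
        if include:
            rows = [{k: v for k, v in r.items() if k in include} for r in rows]
        else:
            rows = [{k: v for k, v in r.items() if k not in exclude} for r in rows]
    return rows


records = [
    {"_id": 1, "pipeline_Id": "p1", "data": {"data_id": 35, "data_desc": "ignition", "isActive": True}},
    {"_id": 2, "pipeline_Id": "p1", "data": {"data_id": 36, "data_desc": "ignition", "isActive": False}},
    {"_id": 3, "pipeline_Id": "p1", "data": {"data_id": 35, "data_desc": "brake", "isActive": True}},
]
print(find(records, 'data.data_desc:"ignition"', {"pipeline_Id": 1, "_id": 1}))
# [{'_id': 2, 'pipeline_Id': 'p1'}, {'_id': 1, 'pipeline_Id': 'p1'}]
```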

A component failure is indicated by a red flag in the Pipeline Workflow. Clicking the red flag redirects the user to the Failure Analysis page.

Navigate to any Pipeline Editor page.

Create or access a Pipeline workflow and run it.

If any component fails while the Pipeline workflow is running, a red flag appears at the top right of the component.

Click the red flag to open the Failure Analysis page.