This section explains all the Writer task components available for a Job.

Writers are a group of components that write data to different databases and cloud storage services.

There are eight (8) Writer tasks in Jobs. Every Writer task has the following tabs:

Meta Information: Configure the meta information in the same way as for the pipeline components.

Preview Data: Only ten random records can be previewed in this tab, and only when the task is running in Development mode.

Preview Schema: The Spark schema of the data is shown in this tab.

Logs: The logs of the task are displayed here.

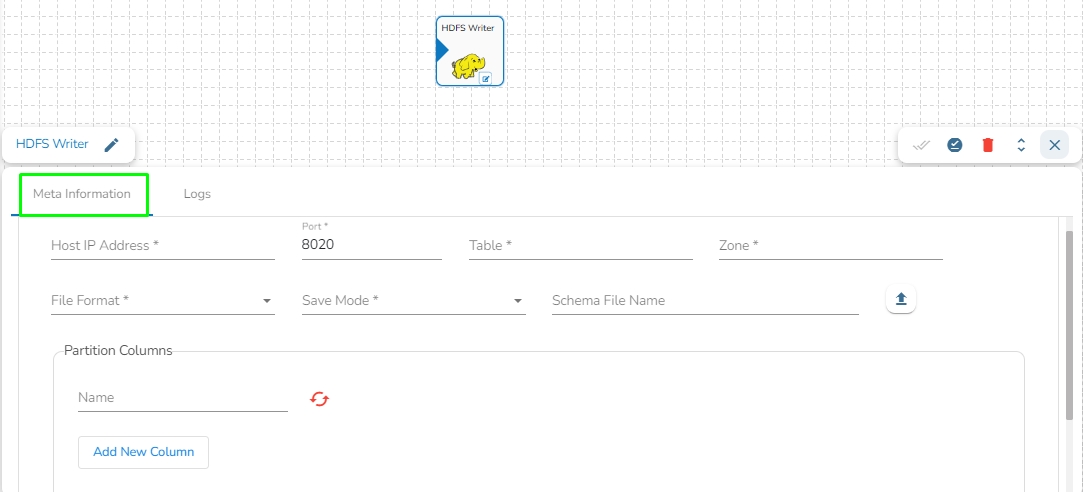

HDFS stands for Hadoop Distributed File System. It is a distributed file system designed to store and manage large data sets in a reliable, fault-tolerant, and scalable way. HDFS is a core component of the Apache Hadoop ecosystem and is used by many big data applications.

This task writes data to HDFS (Hadoop Distributed File System).

Drag the HDFS writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Host IP Address: Enter the host IP address for HDFS.

Port: Enter the Port.

Table: Enter the table name where the data has to be written.

Zone: Enter the Zone for HDFS in which the data has to be written. Zone is a special directory whose contents will be transparently encrypted upon write and transparently decrypted upon read.

File Format: Select the file format in which the data has to be written:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the save mode.

Schema File Name: Upload the Spark schema file in JSON format.

Partition Columns: Provide a unique key column name by which Spark partitions the data.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
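
To illustrate what the Partition Columns setting typically leads to, the following minimal sketch mimics the Hive-style directory layout that Spark uses for partitioned output (one `column=value` directory level per partition column). The host, port, zone, and table values are hypothetical, and the way the platform combines Zone and Table into a path is an assumption, not documented behavior.

```python
from collections import defaultdict


def partition_dir(base_path, row, partition_columns):
    """Return the Hive-style directory that a row would be written under."""
    parts = [f"{col}={row[col]}" for col in partition_columns]
    return "/".join([base_path.rstrip("/")] + parts)


def group_rows(base_path, rows, partition_columns):
    """Group rows by the partition directory they would land in."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[partition_dir(base_path, row, partition_columns)].append(row)
    return dict(grouped)


# Hypothetical values: host 10.0.0.5, port 8020, zone "secure_zone", table "sales".
base = "hdfs://10.0.0.5:8020/secure_zone/sales"
rows = [
    {"order_id": 1, "country": "IN", "amount": 120.0},
    {"order_id": 2, "country": "US", "amount": 75.5},
    {"order_id": 3, "country": "IN", "amount": 42.0},
]

layout = group_rows(base, rows, ["country"])
for directory, members in sorted(layout.items()):
    print(directory, len(members))
```

Each distinct value of a partition column gets its own directory, so a column with very many distinct values results in very many small directories.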

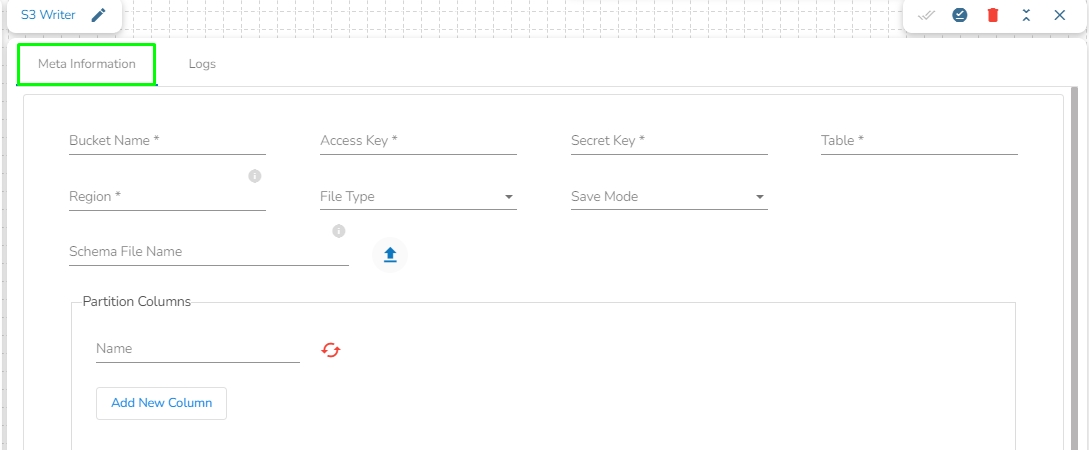

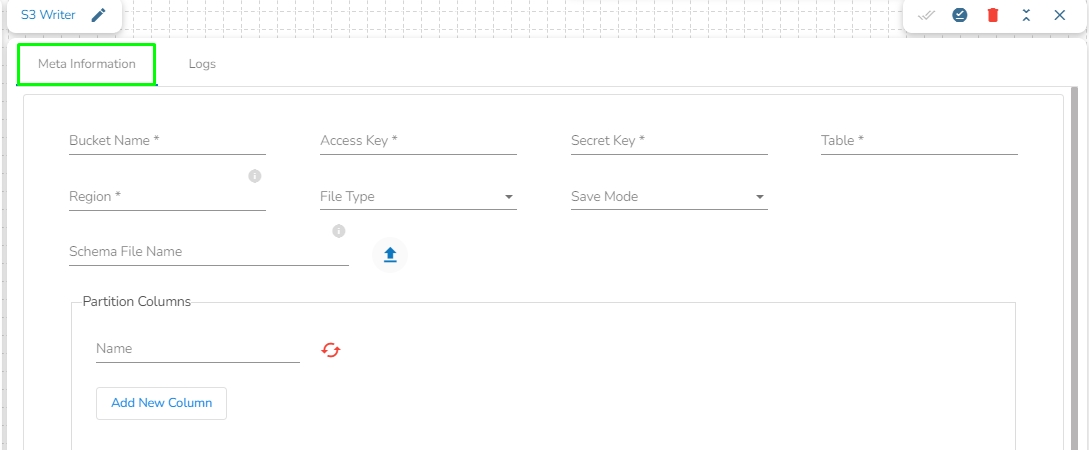

This task writes data to an Amazon S3 bucket.

Drag the S3 writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Bucket Name (*): Enter the S3 bucket name.

Region (*): Provide the S3 region.

Access Key (*): Enter the access key shared by AWS for login.

Secret Key (*): Enter the secret key shared by AWS for login.

Table (*): Enter the table or object name to which the data is to be written.

File Type (*): Select a file type from the drop-down menu (CSV, JSON, PARQUET, and AVRO are the supported file types).

Save Mode: Select the save mode from the drop-down menu.

Append

Schema File Name: Upload the Spark schema file in JSON format.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
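
The Schema File Name field expects a Spark schema serialized as JSON. As a rough sketch, the snippet below builds such a document with the Python standard library, following the layout that Spark's `StructType.json()` produces (a `struct` with a `fields` list, each field carrying `name`, `type`, `nullable`, and `metadata`). The column names are hypothetical.

```python
import json


def field(name, spark_type, nullable=True):
    """Describe one column in the layout Spark uses for a StructField."""
    return {"name": name, "type": spark_type, "nullable": nullable, "metadata": {}}


# Hypothetical columns for illustration only.
schema = {
    "type": "struct",
    "fields": [
        field("order_id", "integer", nullable=False),
        field("customer", "string"),
        field("amount", "double"),
        field("created_at", "timestamp"),
    ],
}

schema_json = json.dumps(schema, indent=2)
print(schema_json)
```

Saving the printed text to a `.json` file gives a schema file of the kind that can be uploaded in this field.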

Azure is a cloud computing platform and service. It provides a range of cloud services, including infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS) offerings, as well as tools for building, deploying, and managing applications in the cloud.

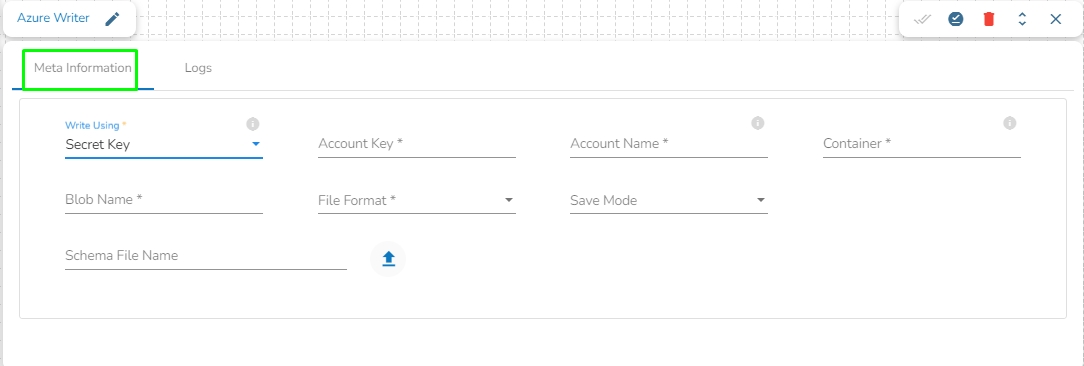

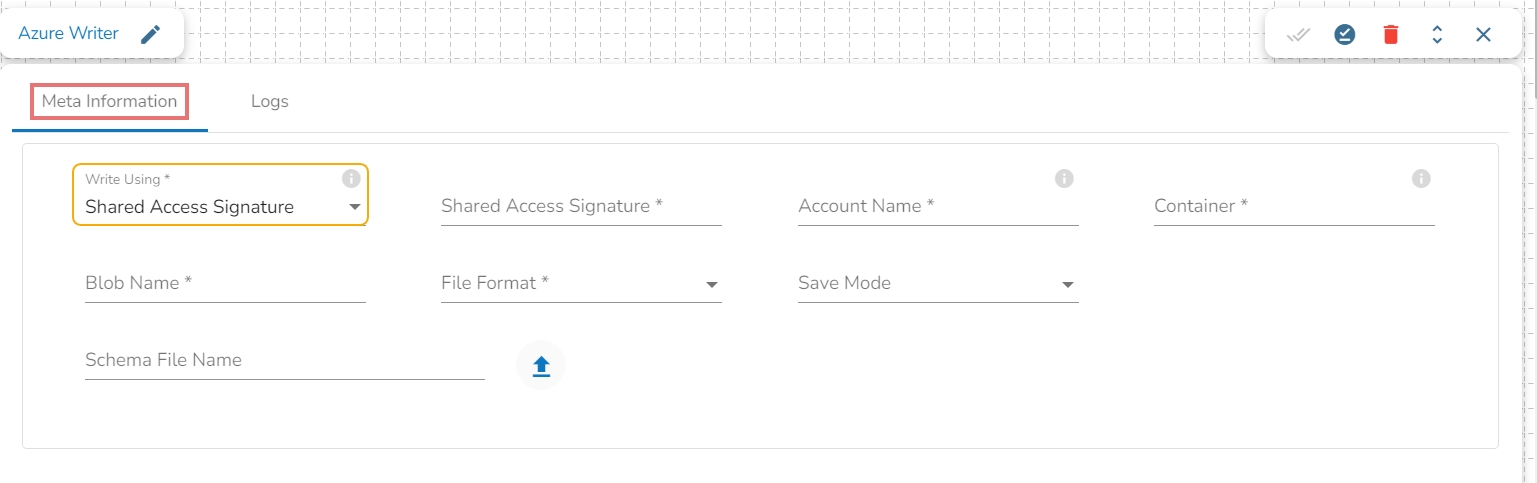

The Azure Writer task writes data to an Azure Blob container.

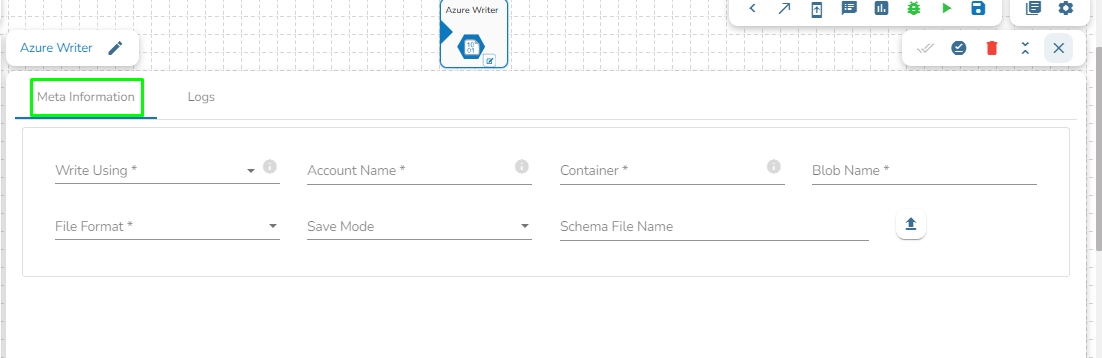

Drag the Azure writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Write Using: Three (3) options are available under this field:

Shared Access Signature

Secret Key

Principal Secret

Provide the following details when writing with the Shared Access Signature option:

Shared Access Signature: This is a URI that grants restricted access rights to Azure Storage resources.

Account Name: Provide the Azure account name.

Container: Provide the name of the container in which the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File Format: Four (4) file formats are available. Select the file format in which the data has to be written:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the save mode from the drop-down menu.

Append

Overwrite

Schema File Name: Upload the Spark schema file in JSON format.

Provide the following details when writing with the Secret Key option:

Account Key: Enter the Azure account key. In Azure, an account key is a security credential that is used to authenticate access to storage resources, such as blobs, files, queues, or tables, in an Azure storage account.

Account Name: Provide the Azure account name.

Container: Provide the name of the container in which the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File Type: Four (4) file types are available:

CSV

JSON

PARQUET

AVRO

Schema File Name: Upload the Spark schema file in JSON format.

Save Mode: Select the save mode from the drop-down menu.

Append

Overwrite

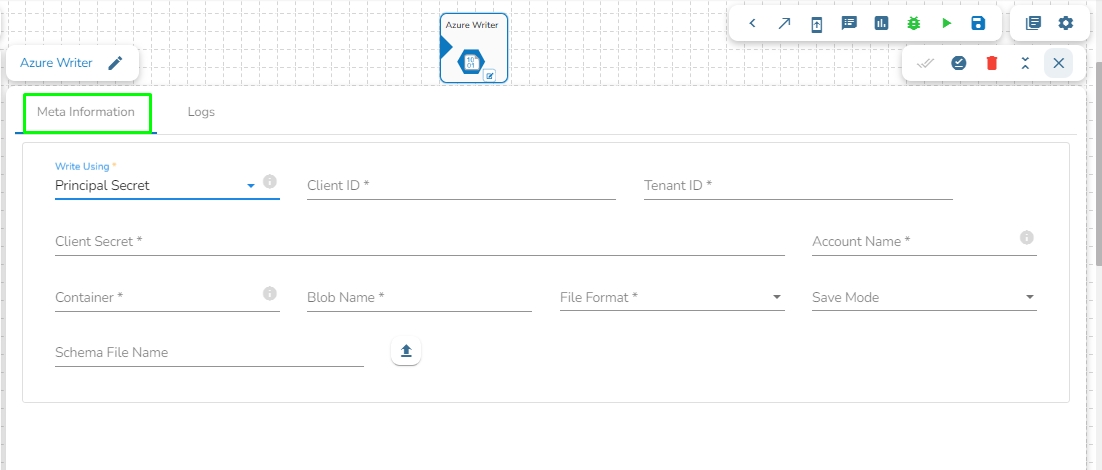

Provide the following details when writing with the Principal Secret option:

Client ID: Provide the Azure Client ID. The client ID is the unique Application (client) ID assigned to your app by Azure AD when the app was registered.

Tenant ID: Provide the Azure Tenant ID. The Tenant ID (also known as Directory ID) is a unique identifier assigned to an Azure AD tenant, which represents an organization or a developer account. It identifies the organization or developer account that the application is associated with.

Client Secret: Enter the Azure Client Secret. The Client Secret (also known as Application Secret or App Secret) is a secure password or key that is used to authenticate an application to Azure AD.

Account Name: Provide the Azure account name.

Container: Provide the name of the container in which the blob is located. A container is a logical unit of storage in Azure Blob Storage that can hold blobs. It is similar to a directory or folder in a file system, and it can be used to organize and manage blobs.

Blob Name: Enter the blob name. A blob is a type of object storage that is used to store unstructured data, such as text or binary data, like images or videos.

File Type: Four (4) file types are available:

CSV

JSON

PARQUET

AVRO

Save Mode: Select the save mode from the drop-down menu.

Append

Overwrite

Schema File Name: Upload the Spark schema file in JSON format.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
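
The three Write Using options each call for a different set of credentials. The sketch below restates the field lists from this section as a lookup table and checks a configuration against it. Treating every listed field as mandatory is an assumption, and the function name and sample values are hypothetical.

```python
# Fields listed in this section for each "Write using" option.
# Treating all of them as mandatory is an assumption made for this sketch.
FIELDS_BY_OPTION = {
    "Shared Access Signature": [
        "Shared Access Signature", "Account Name", "Container", "Blob Name",
    ],
    "Secret Key": [
        "Account Key", "Account Name", "Container", "Blob Name",
    ],
    "Principal Secret": [
        "Client ID", "Tenant ID", "Client Secret",
        "Account Name", "Container", "Blob Name",
    ],
}


def missing_fields(write_using, config):
    """Return the listed fields that are absent or empty in `config`."""
    if write_using not in FIELDS_BY_OPTION:
        raise ValueError(f"unknown option: {write_using}")
    return [name for name in FIELDS_BY_OPTION[write_using] if not config.get(name)]


# Hypothetical, incomplete configuration for the Principal Secret option.
config = {
    "Client ID": "00000000-0000-0000-0000-000000000000",
    "Tenant ID": "11111111-1111-1111-1111-111111111111",
    "Account Name": "examplestorage",
    "Container": "exports",
}
print(missing_fields("Principal Secret", config))
```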

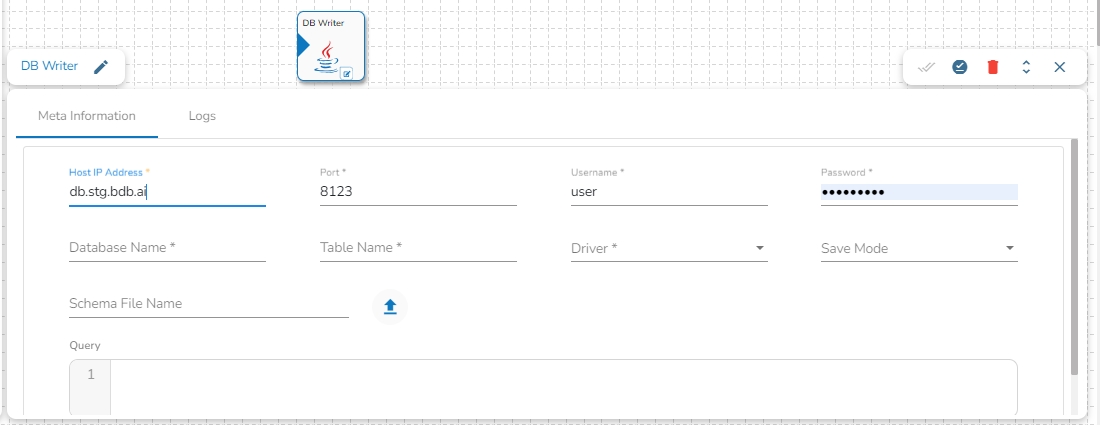

This task writes data to the following databases: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Drag the DB writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Host IP Address: Enter the Host IP Address for the selected driver.

Port: Enter the port for the given IP Address.

Database name: Enter the Database name.

Table name: Provide a single table name or multiple table names. If multiple table names are given, separate them with commas (,).

User name: Enter the user name for the provided database.

Password: Enter the password for the provided database.

Driver: Select the driver from the drop-down menu. Seven (7) drivers are supported here: MYSQL, MSSQL, Oracle, ClickHouse, Snowflake, PostgreSQL, Redshift.

Schema File Name: Upload the Spark schema file in JSON format.

Save Mode: Select the save mode from the drop-down menu.

Append

Overwrite

Query: Write the CREATE TABLE (DDL) query.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
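
As a rough illustration of how a CREATE TABLE query and the two save modes interact, the sketch below uses Python's built-in `sqlite3` module. SQLite is only a stand-in here and is not one of the supported drivers. The table name, columns, and the choice to implement Overwrite by dropping and recreating the table are assumptions; the platform may behave differently, for example by truncating the table instead.

```python
import sqlite3

# Hypothetical DDL of the kind entered in the Query field.
DDL = "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL)"


def write_rows(conn, rows, save_mode):
    """Write rows with 'append' or 'overwrite' semantics."""
    if save_mode == "overwrite":
        # Assumption: overwrite discards the existing table before writing.
        conn.execute("DROP TABLE IF EXISTS orders")
    elif save_mode != "append":
        raise ValueError(f"unsupported save mode: {save_mode}")
    conn.execute(DDL)  # creates the table only if it does not exist yet
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def row_count(conn):
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


conn = sqlite3.connect(":memory:")
write_rows(conn, [(1, 10.0), (2, 20.0)], "append")
write_rows(conn, [(3, 30.0)], "append")
count_after_append = row_count(conn)      # existing rows are kept

write_rows(conn, [(4, 40.0)], "overwrite")
count_after_overwrite = row_count(conn)   # only the new rows remain

print(count_after_append, count_after_overwrite)
```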

Elasticsearch is an open-source search and analytics engine built on top of the Apache Lucene library. It is designed to help users store, search, and analyze large volumes of data in real-time. Elasticsearch is a distributed, scalable system that can be used to index and search structured, semi-structured, and unstructured data.

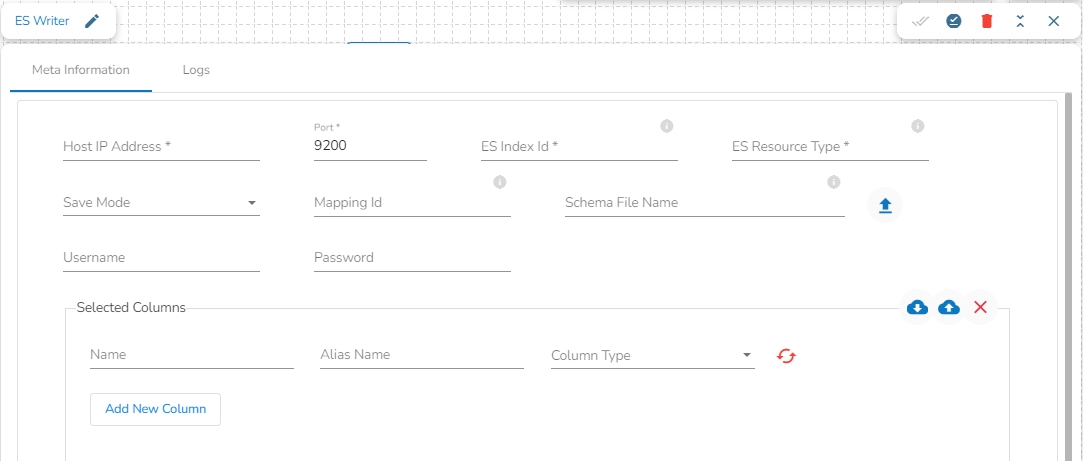

This task writes data to the Elasticsearch engine.

Drag the ES writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Host IP Address: Enter the host IP address for Elasticsearch.

Port: Enter the port to connect with Elasticsearch.

Index ID: Enter the Index ID of the document in Elasticsearch. In Elasticsearch, an index is a collection of documents that share similar characteristics, and each document within an index has a unique identifier, referred to here as the index ID. The index ID is a unique string (generated automatically by Elasticsearch when none is supplied) that is used to identify and retrieve a specific document from the index.

Mapping ID: Provide the Mapping ID. In Elasticsearch, a mapping ID is a unique identifier for a mapping definition that defines the schema of the documents in an index. It is used to differentiate between different types of data within an index and to control how Elasticsearch indexes and searches data.

Resource Type: Provide the resource type. In Elasticsearch, a resource type is a way to group related documents together within an index. Resource types are defined at the time of index creation, and they provide a way to logically separate different types of documents that may be stored within the same index.

Username: Enter the username for Elasticsearch.

Password: Enter the password for Elasticsearch.

Schema File Name: Upload the Spark schema file in JSON format.

Save Mode: Select the save mode from the drop-down menu.

Append

Selected Columns: Select specific columns, provide an alias name for each, and choose the desired data type for each column.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
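
The effect of the Selected Columns option (pick columns, rename them with an alias, and cast them to a data type) can be sketched in plain Python as follows. The function, column names, and aliases are hypothetical, and the sketch uses Python types in place of the data types offered in the task.

```python
def apply_selected_columns(record, selections):
    """Keep only the selected columns, rename them, and cast their values.

    `selections` is a list of (source_column, alias_or_None, caster) tuples.
    """
    document = {}
    for column, alias, caster in selections:
        document[alias or column] = caster(record[column])
    return document


# Hypothetical selection: two aliased columns and one kept under its own name.
selections = [
    ("id", "order_id", int),
    ("price", "unit_price", float),
    ("name", None, str),
]

record = {"id": "7", "price": "19.90", "name": "pen", "internal_flag": "x"}
document = apply_selected_columns(record, selections)
print(document)
```

Columns that are not selected, such as `internal_flag` in this example, are left out of the written document.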

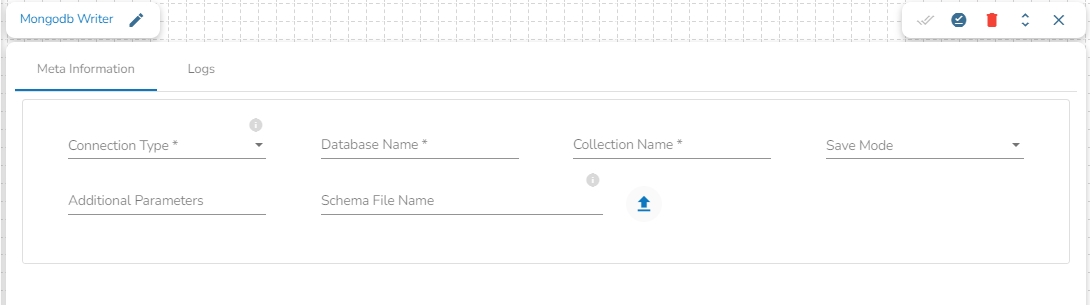

This task writes data to a MongoDB collection.

Drag the MongoDB writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Connection Type: Select the connection type from the drop-down:

Standard

SRV

Connection String

Port (*): Provide the port number (this field appears only with the Standard connection type).

Host IP Address (*): Provide the IP address of the host.

Username (*): Provide a username.

Password (*): Provide a valid password to access MongoDB.

Database Name (*): Provide the name of the database where you wish to write data.

Additional Parameters: Provide details of the additional parameters.

Schema File Name: Upload the Spark schema file in JSON format.

Save Mode: Select the save mode from the drop-down menu.

Append: This operation adds the data to the collection.

Ignore: This operation skips the insertion of a record if a duplicate record already exists in the database. The new record is not added, and the database remains unchanged. Ignore is useful when you want to prevent duplicate entries in a database.

Upsert: A combination of "update" and "insert". This operation updates a record with the new data if it already exists in the database, or inserts a new record if it does not.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
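
The difference between the three save modes can be sketched with an in-memory list standing in for a collection. This is a simplified model rather than MongoDB itself: the `order_id` key used to detect duplicates and all sample records are hypothetical.

```python
def write_records(collection, records, save_mode, key="order_id"):
    """Apply 'append', 'ignore', or 'upsert' semantics to a list of dicts."""
    for record in records:
        existing = next((doc for doc in collection if doc[key] == record[key]), None)
        if save_mode == "append":
            collection.append(dict(record))      # always add the record
        elif save_mode == "ignore":
            if existing is None:                 # skip duplicates
                collection.append(dict(record))
        elif save_mode == "upsert":
            if existing is None:                 # insert if missing
                collection.append(dict(record))
            else:                                # otherwise update in place
                existing.update(record)
        else:
            raise ValueError(f"unsupported save mode: {save_mode}")
    return collection


start = [{"order_id": 1, "status": "new"}]
incoming = [{"order_id": 1, "status": "paid"}, {"order_id": 2, "status": "new"}]

appended = write_records([dict(d) for d in start], incoming, "append")
ignored = write_records([dict(d) for d in start], incoming, "ignore")
upserted = write_records([dict(d) for d in start], incoming, "upsert")

print(len(appended), ignored[0]["status"], upserted[0]["status"])
```

With the same input, Append ends up with three records, Ignore keeps the original record for `order_id` 1 untouched, and Upsert replaces its status with the incoming value.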

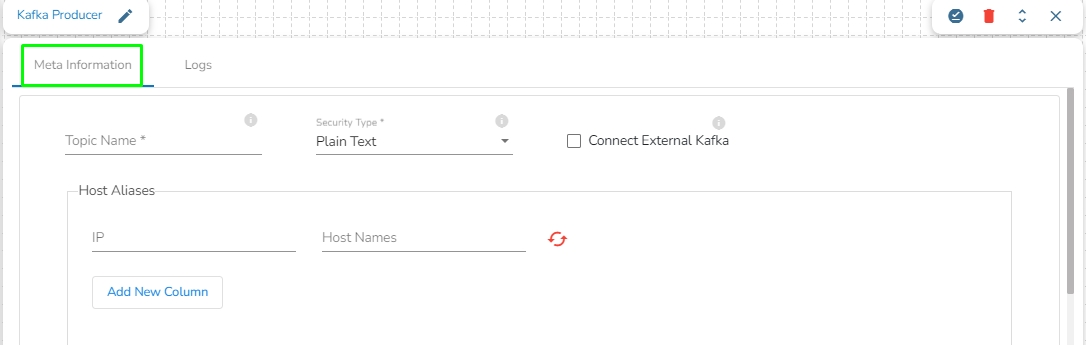

In Apache Kafka, a "producer" is a client application or program that is responsible for publishing (or writing) messages to a Kafka topic.

A Kafka producer sends messages to Kafka brokers, which are then distributed to the appropriate consumers based on the topic, partitioning, and other configurable parameters.

Drag the Kafka Producer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Topic Name: Specify the name of the topic to which the data is to be produced.

Security Type: Select the security type from the drop-down menu:

Plain Text

SSL

Is External: Enable the 'Is External' option to produce data to an external Kafka topic. The 'Bootstrap Server' and 'Config' fields are displayed once the 'Is External' option is enabled.

Bootstrap Server: Enter external bootstrap details.

Config: Enter configuration details.

Host Aliases: In Apache Kafka, a host alias (also known as a hostname alias) is an alternative name that can be used to refer to a Kafka broker in a cluster. Host aliases are useful when you need to refer to a broker using a name other than its actual hostname.

IP: Enter the IP address.

Host Names: Enter the host names.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.
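
As a hedged sketch of how these fields might translate into Kafka client settings, the snippet below builds a properties dictionary using the standard Kafka configuration keys `bootstrap.servers` and `security.protocol`, and renders host aliases in hosts-file style. The mapping from the task's fields to these keys is an assumption, and the broker address, IP, and function names are hypothetical.

```python
# Mapping from the Security Type options to Kafka's `security.protocol` values.
# That the platform uses exactly this mapping is an assumption.
SECURITY_PROTOCOLS = {"Plain Text": "PLAINTEXT", "SSL": "SSL"}


def external_producer_config(bootstrap_server, security_type, extra_config=None):
    """Build client properties for an external cluster ('Is External' enabled)."""
    config = {
        "bootstrap.servers": bootstrap_server,
        "security.protocol": SECURITY_PROTOCOLS[security_type],
    }
    config.update(extra_config or {})  # values entered in the Config field
    return config


def host_alias_lines(host_aliases):
    """Render IP-to-host-name pairs in hosts-file style: '<ip> <name> <name>'."""
    return [f"{ip} {' '.join(names)}" for ip, names in host_aliases.items()]


# Hypothetical values for illustration only.
config = external_producer_config(
    "broker-1.example.com:9093", "SSL", {"acks": "all"}
)
aliases = host_alias_lines({"10.0.0.21": ["broker-1.example.com", "broker-1"]})

print(config)
print(aliases)
```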

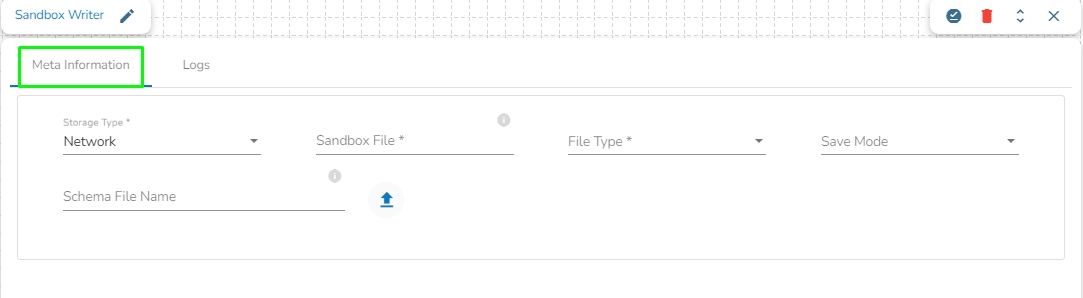

This task writes data to the network pool of the Sandbox.

Drag the Sandbox writer task to the Workspace and click it to open its configuration tabs. The Meta Information tab opens by default.

Storage Type: This field is pre-defined.

Sandbox File: Enter the file name.

File Type: Select the file type in which the data has to be written. There are 4 file types supported here:

CSV

JSON

Save Mode: Select the save mode from the drop-down menu.

Append

Overwrite

Schema File Name: Upload the Spark schema file in JSON format.

Please Note: Click the Save Task In Storage icon to save the configuration for the dragged writer task.