SQL Component

The SQL transformer applies SQL operations to transform and manipulate data, giving data pipelines flexible and expressive transformations.

The SQL component serves as a bridge between the extracted data and the desired transformed data, using SQL queries and database systems to process and manipulate data efficiently.

It can also apply aggregation functions to the complete streaming data processed by the component. With this component, the user can run SQL transformations on Spark data frames.

All component configurations are classified broadly into the following sections:

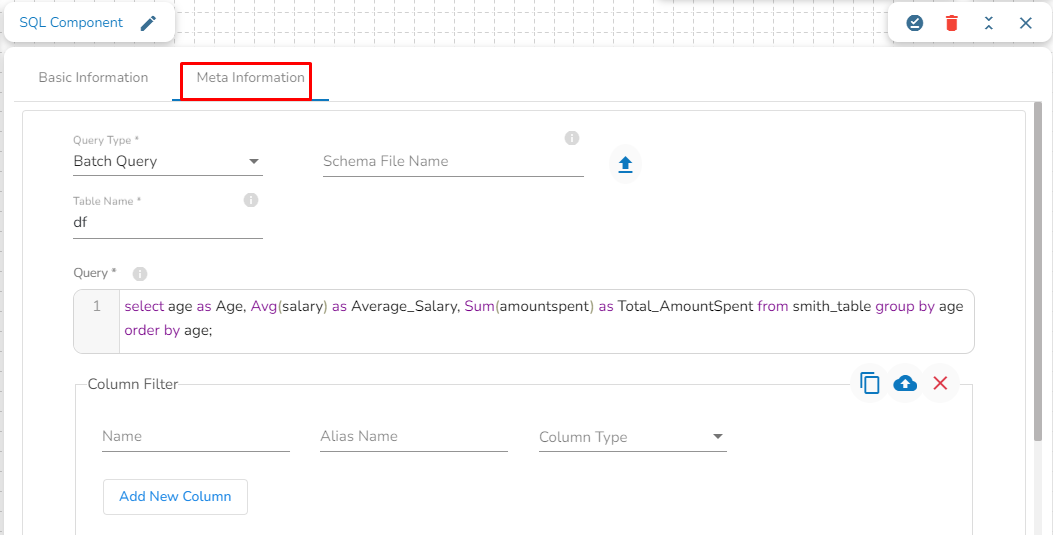

Meta Information

Follow the steps in the demonstration to configure the SQL transformation component.

Please Note: The schema file that can be uploaded here is a Spark schema in JSON format.
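As an illustration of what such a schema file might contain, the sketch below builds a schema in the JSON layout that Spark uses when it serializes a `StructType`. The field names (`order_id`, `amount`, `created_at`) and the helper `build_spark_schema` are hypothetical, and whether the component accepts exactly this layout is an assumption to verify against your own environment.

```python
import json

# Hypothetical in-event fields; replace with the columns of your own data.
fields = [
    ("order_id", "string"),
    ("amount", "double"),
    ("created_at", "timestamp"),
]


def build_spark_schema(fields):
    """Return a dict in the JSON layout Spark uses for a StructType."""
    return {
        "type": "struct",
        "fields": [
            # Every field is marked nullable here; this is an assumption.
            {"name": name, "type": dtype, "nullable": True, "metadata": {}}
            for name, dtype in fields
        ],
    }


schema = build_spark_schema(fields)
schema_json = json.dumps(schema, indent=2)

# Save this output as a .json file to get a candidate schema file.
print(schema_json)
```

If PySpark is available, calling `df.schema.json()` on an existing data frame produces the same layout without writing it by hand.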

Configuring the Meta Information of the SQL Component.

Query Type: There are two options available under this field:

Batch Query: When this option is selected, there is no need to upload a schema file.

Aggregate Query: When this option is selected, it is mandatory to upload the Spark schema file of the in-event data in JSON format.

Schema File name: Upload the Spark schema file in JSON format when Aggregate Query is selected in the Query Type field.

Table name: Provide the table name.

Query: Write an SQL query in this field.

Selected Columns: Select the column name from the table, and provide the alias name and the desired data type for that column.
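To show how the Table name and Query fields relate, here is a minimal sketch of an aggregation query that refers to the data by a table name and aliases its output columns. The component itself runs queries on Spark data frames; `sqlite3` from the Python standard library is used here only as a stand-in so the sketch runs without a Spark installation. The table name `orders`, its columns, and the sample rows are all hypothetical.

```python
import sqlite3

# Hypothetical value for the Table name field.
table_name = "orders"

# Hypothetical value for the Query field: one aggregated row per customer,
# with aliases (AS ...) naming the output columns.
query = f"""
    SELECT customer,
           SUM(amount) AS total_amount,
           COUNT(*)    AS order_count
    FROM {table_name}
    GROUP BY customer
    ORDER BY customer
"""

# Stand-in for the in-event data that the component would receive.
conn = sqlite3.connect(":memory:")
conn.execute(f"CREATE TABLE {table_name} (customer TEXT, amount REAL)")
conn.executemany(
    f"INSERT INTO {table_name} VALUES (?, ?)",
    [("alice", 10.0), ("bob", 5.0), ("alice", 2.5)],
)

rows = conn.execute(query).fetchall()
conn.close()
print(rows)
```

The query must refer to the same name that is entered in the Table name field. On Spark, a comparable setup registers the data frame with `createOrReplaceTempView` and runs the query with `spark.sql`; the SQL dialect may differ slightly from SQLite.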

Please Note: When Using Aggregate Query Mode

Data Writing:

When configured for Aggregate Query mode and connected to DB Sync, the SQL component will not write data to the DB Sync event.

Monitoring:

In Aggregate Query mode, monitoring details for the SQL component will not be available on the monitoring page.

Running Aggregate Queries Afresh:

If the SQL component is set to Aggregate Query mode and you want to run it afresh, it is recommended to clear the existing event data first. To do this:

Copy the component.

Paste the copied component to create a fresh instance.

Running the copied component ensures the query runs without including aggregations from previous runs.