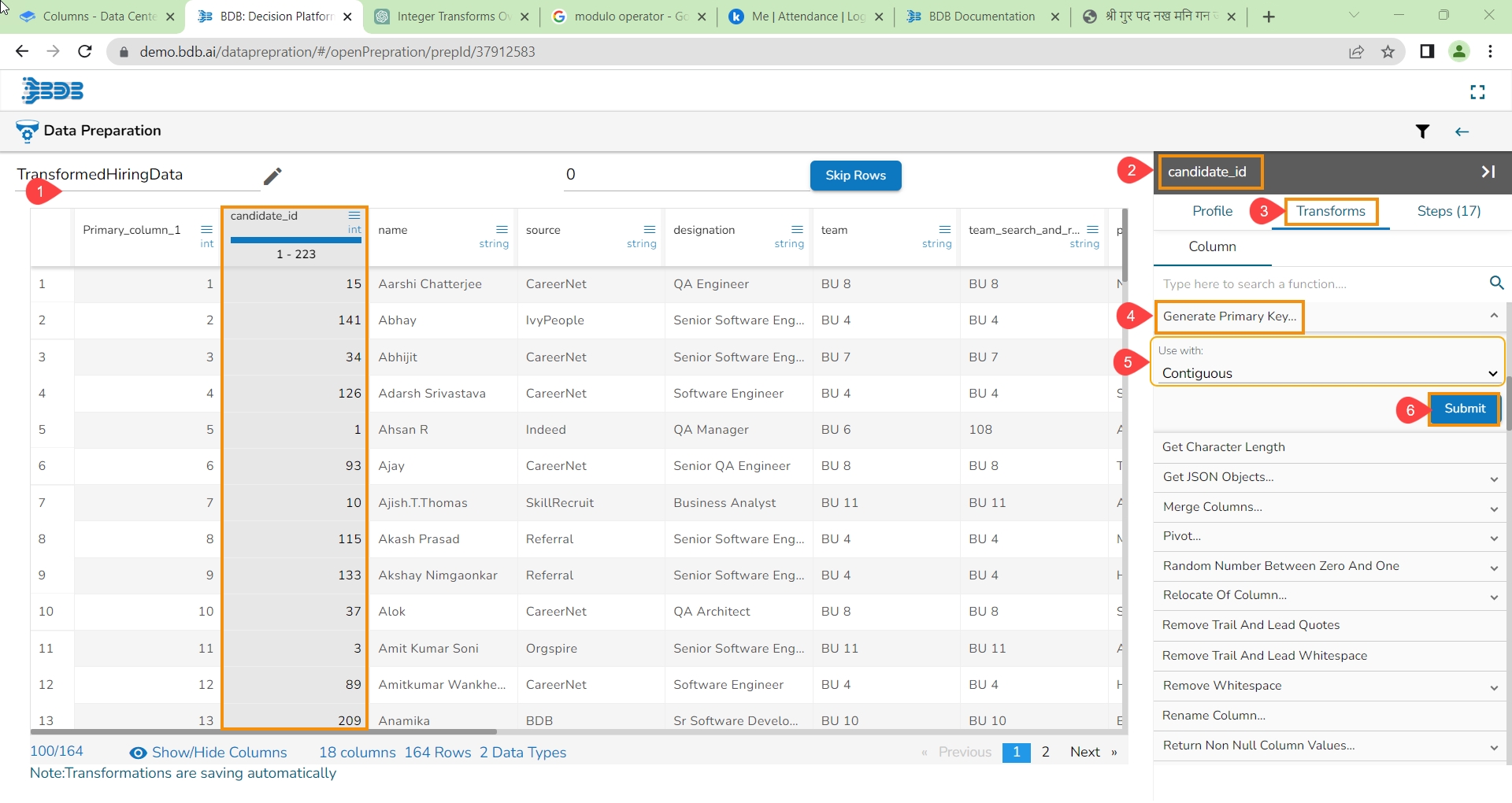

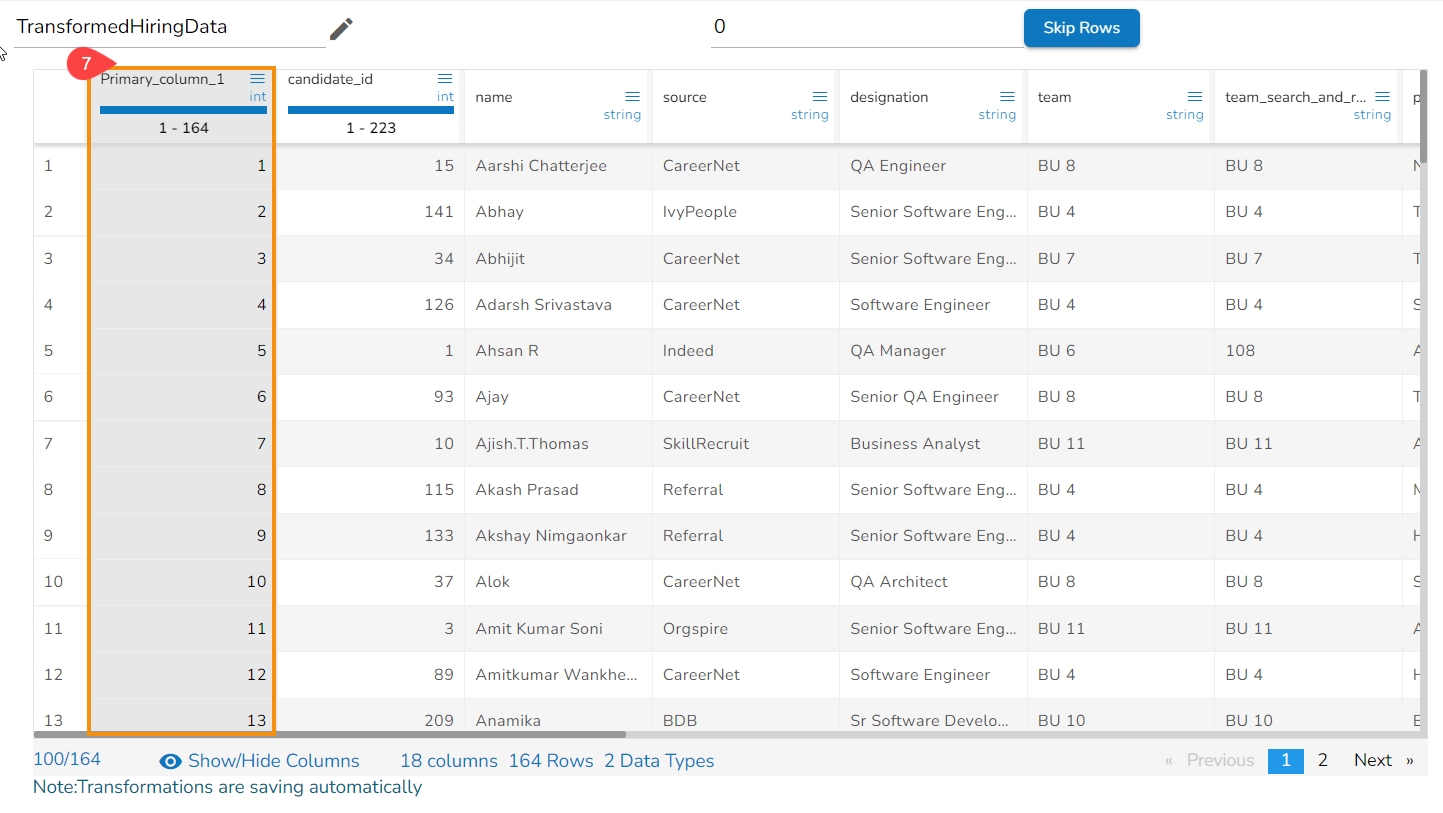

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

The Profile tab gives an overview of the data profile like different patterns of data, distinct values, and occurrences. It also provides Auto-suggested transforms for the selected columns.

The information tab displays the value or statistics of the data. The following aspects are displayed about the chosen data when the column is of string type:

Count: Count of Rows

Valid: Count of Valid Data

Invalid: Count of Invalid Data

Empty: Count of empty cells

Duplicate: Count of Duplicates

Distinct: Distinct Values

When the selected column is of numeric type, other than the details mentioned above the additional displayed information under the ‘Info.’ tab is based on aggregation functions as displayed below:

Minimum

Maximum

Mean

Variance

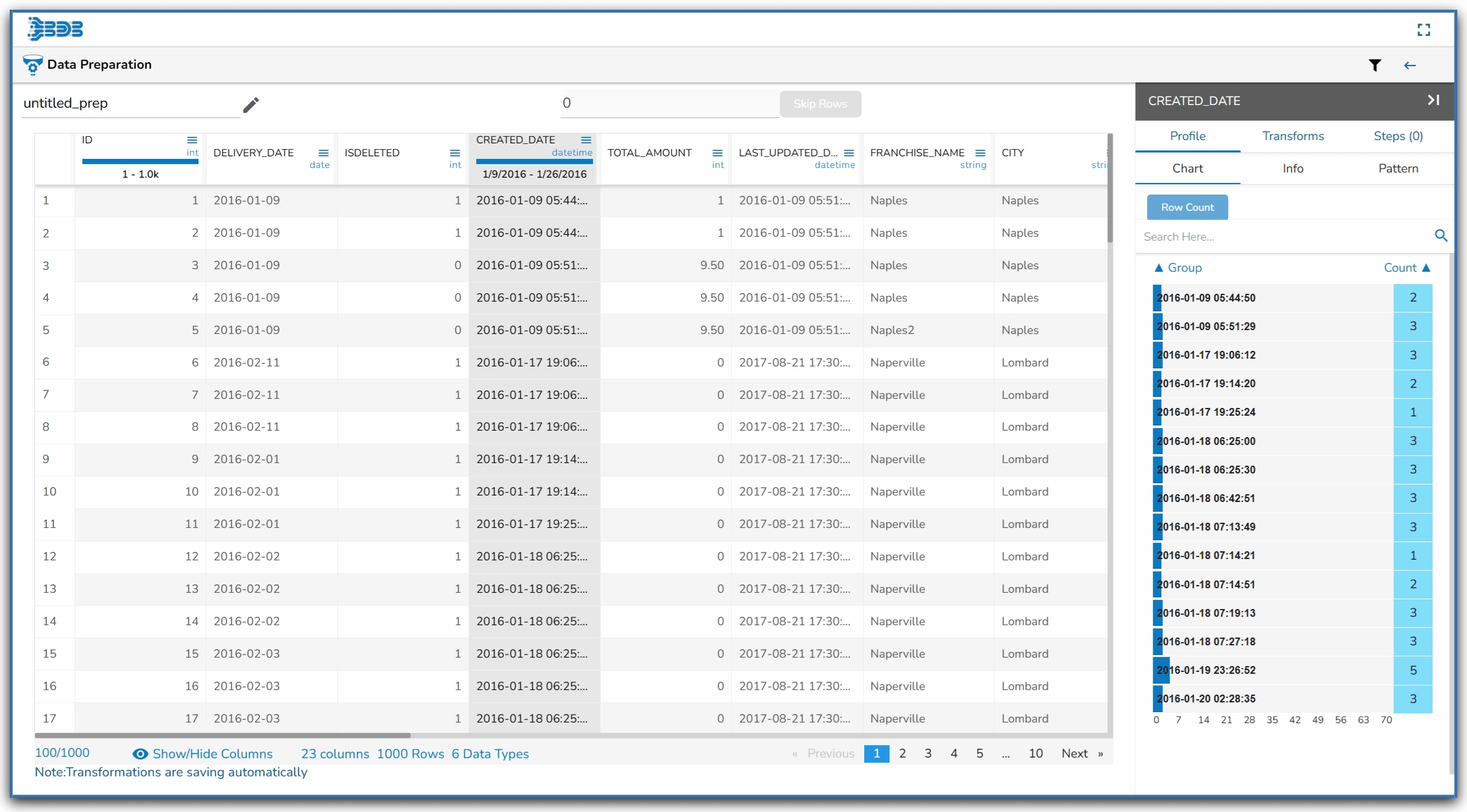

This section focuses on how data patterns and occurrences of each pattern in the dataset sample get plotted in a chart for the selected column.

Please Note: The displayed value is not the actual value; it is just a pattern of the value displayed in the specific column.

The Suggestions option displays a tailor-made list containing suitable transformations from the available comprehensive list for the selected column. These auto-generated Suggestions help the users to clean their desired dataset in a faster and more accurate manner.

Select a column from the dataset.

Open the Profile tab.

Scroll down to see the Suggestions option.

All the Suggestions related to the selected columns are displayed.

Check out the given walk-through on how to use Suggestions for a specific column.

Select a column.

The Suggestions tab will display the auto-suggested transforms for the selected column.

Select a transform using the given checkbox.

Click the Apply option.

The selected transform gets applied to that column (a new column gets added with the applied transform).

This section contains in-built charts (Columns and Bar charts) to display the occurrence of each value for the selected column.

The Bar chart appears to display string value.

The Column chart projects numeric value columns and dates.

The Bar chart can be sorted based on the group or the count of occurrences of a group. The sorted chart displays values in an Ascending or Descending manner.

Use the given Search bar to customize the display of the Bar chart. E.g., By putting the "M" in the Search bar the displayed chart gets customized and displays only the count for the M category.

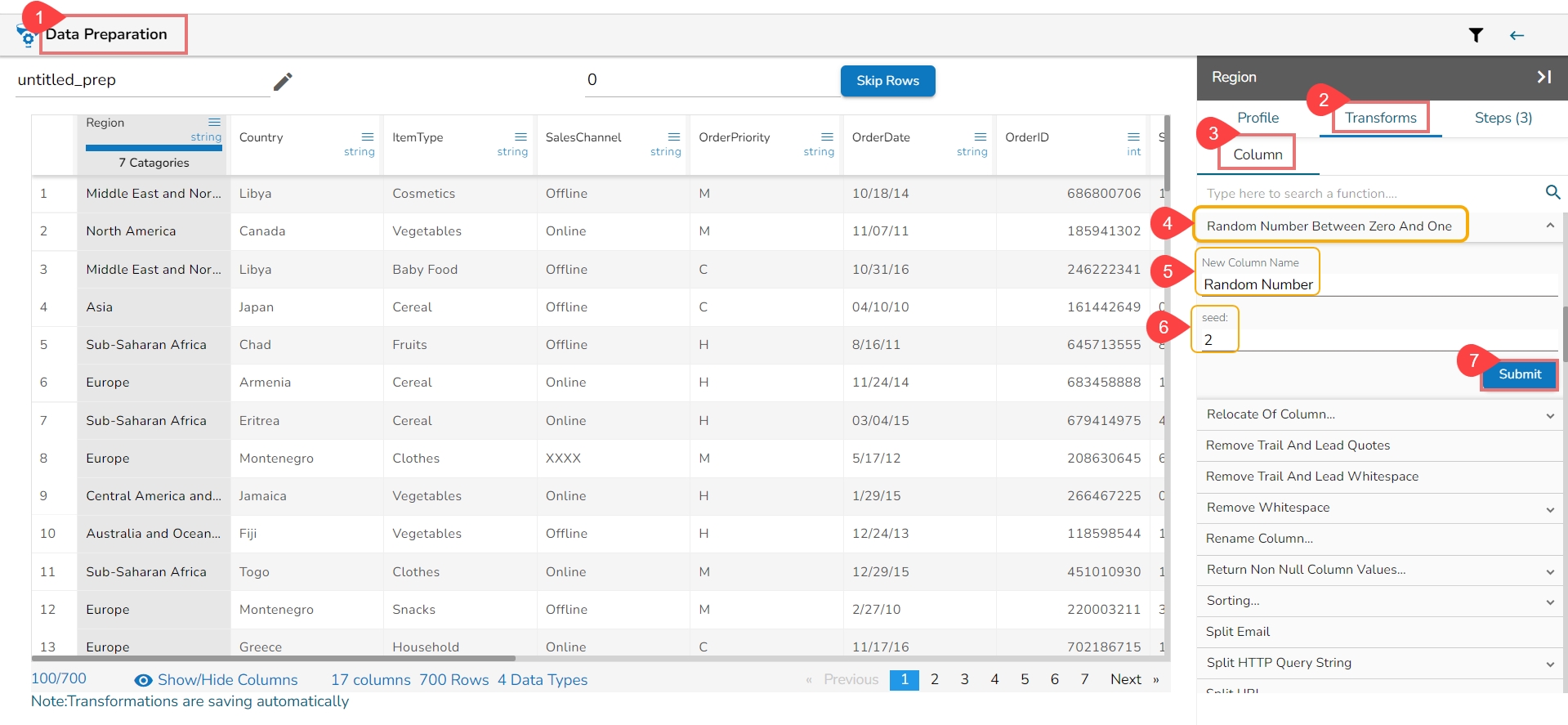

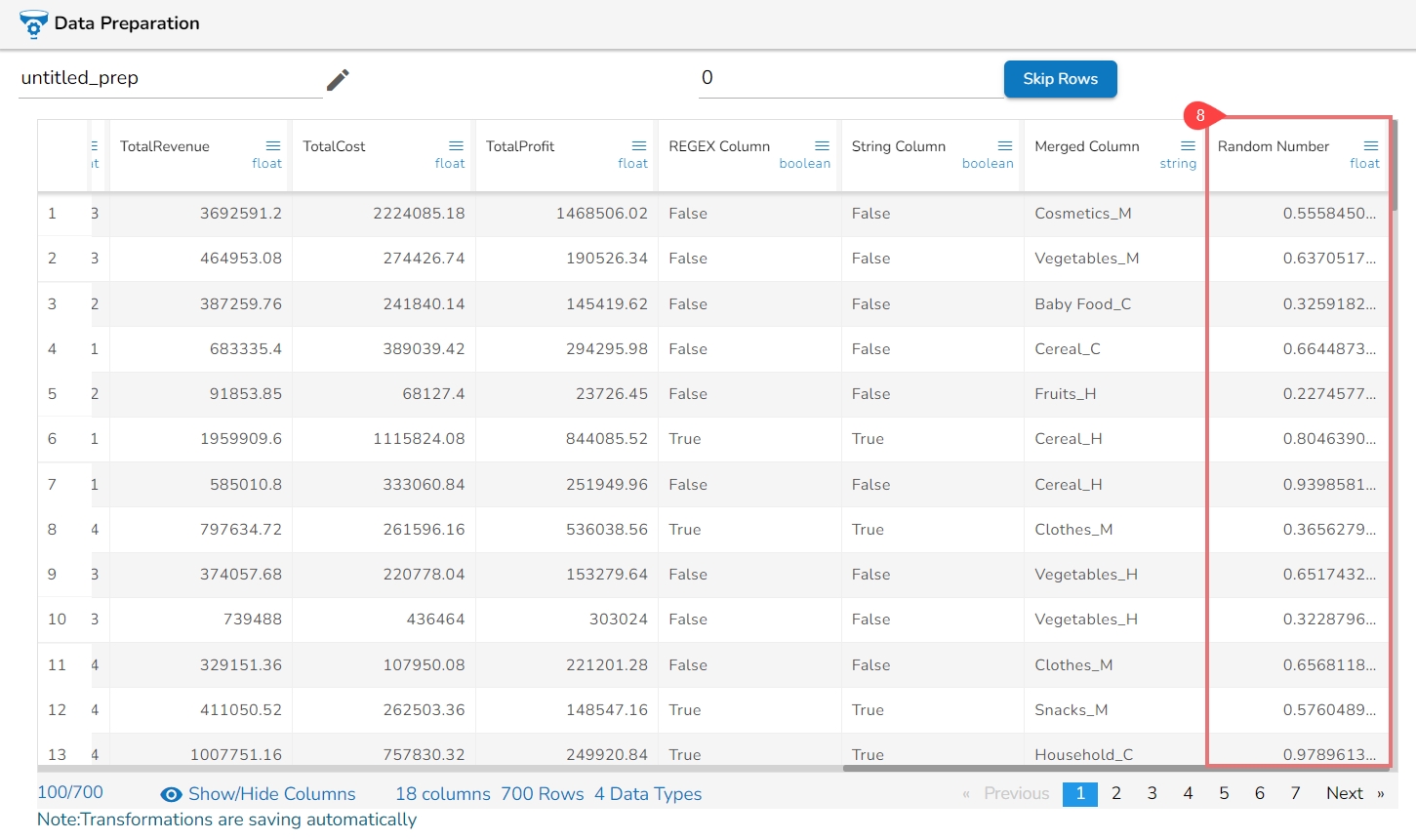

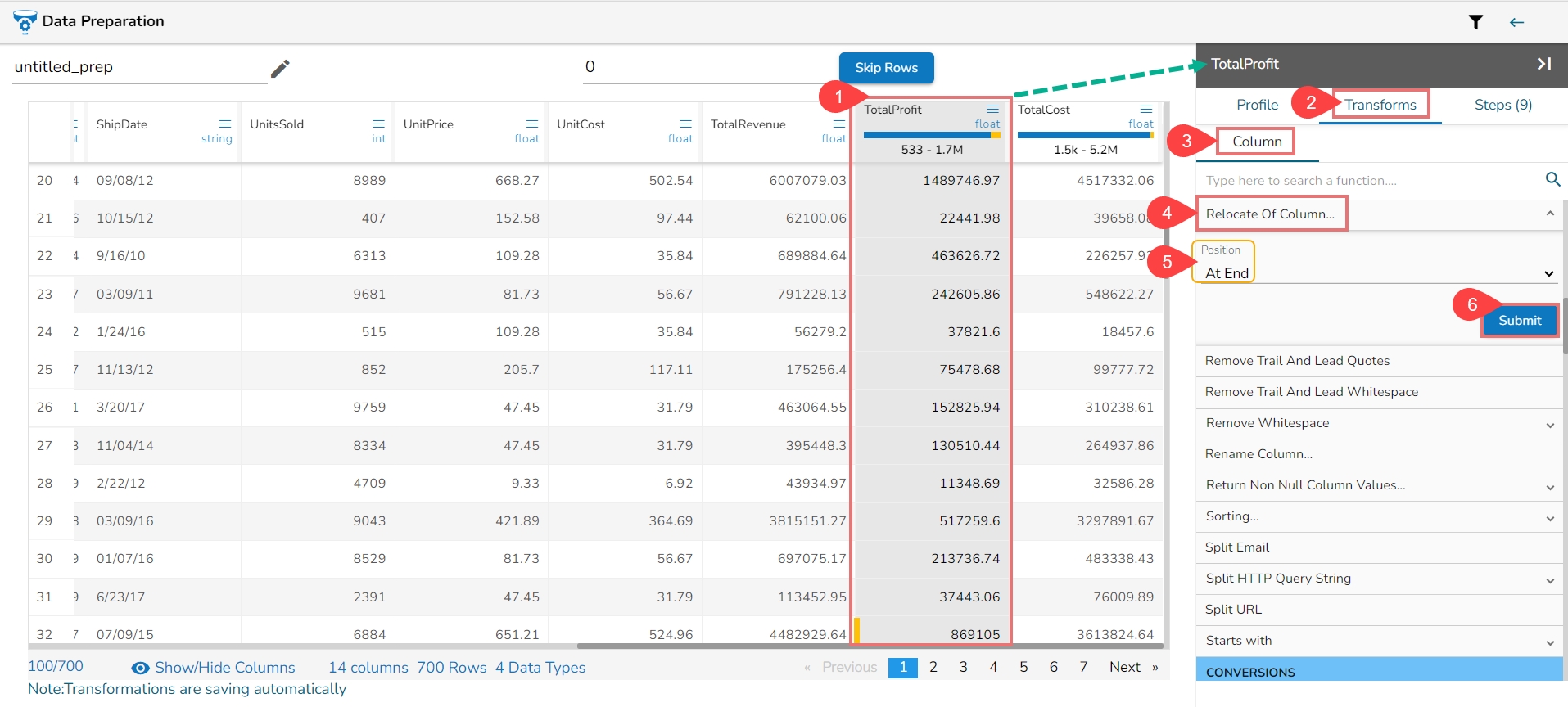

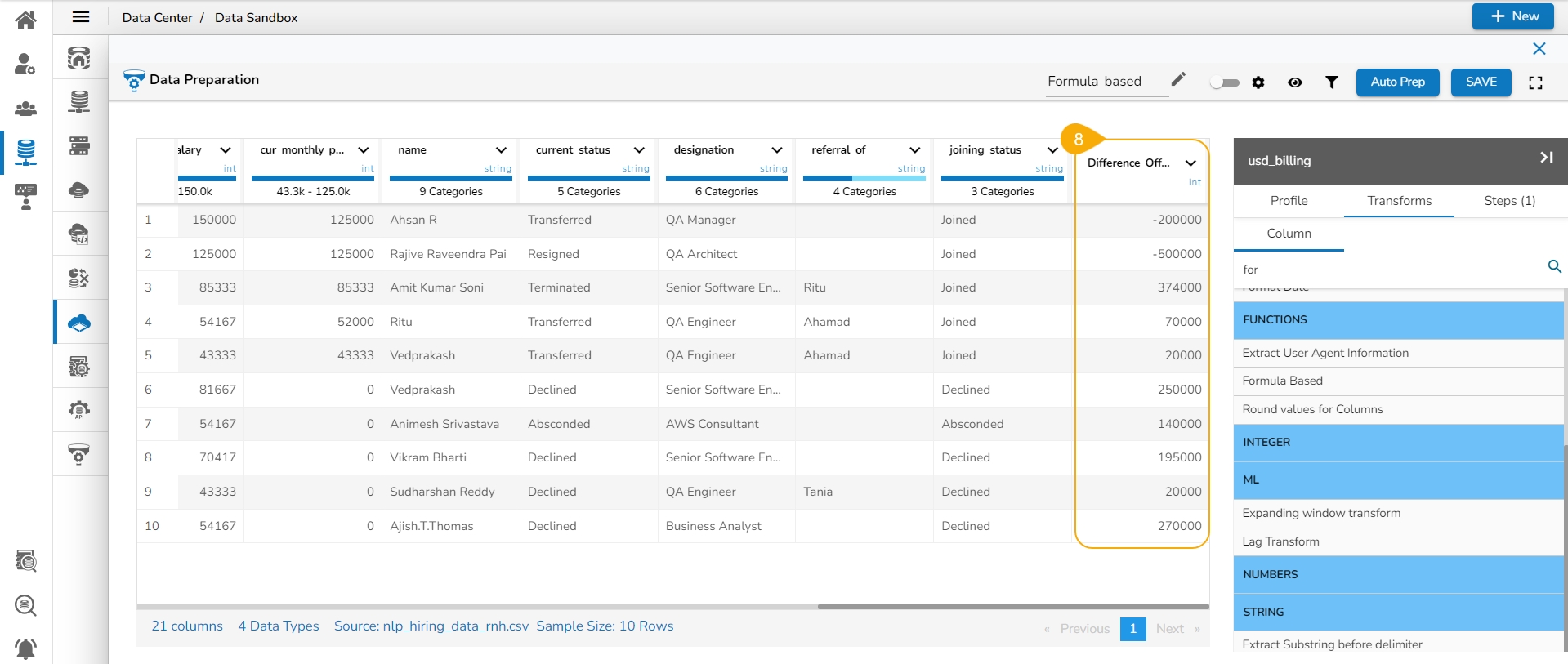

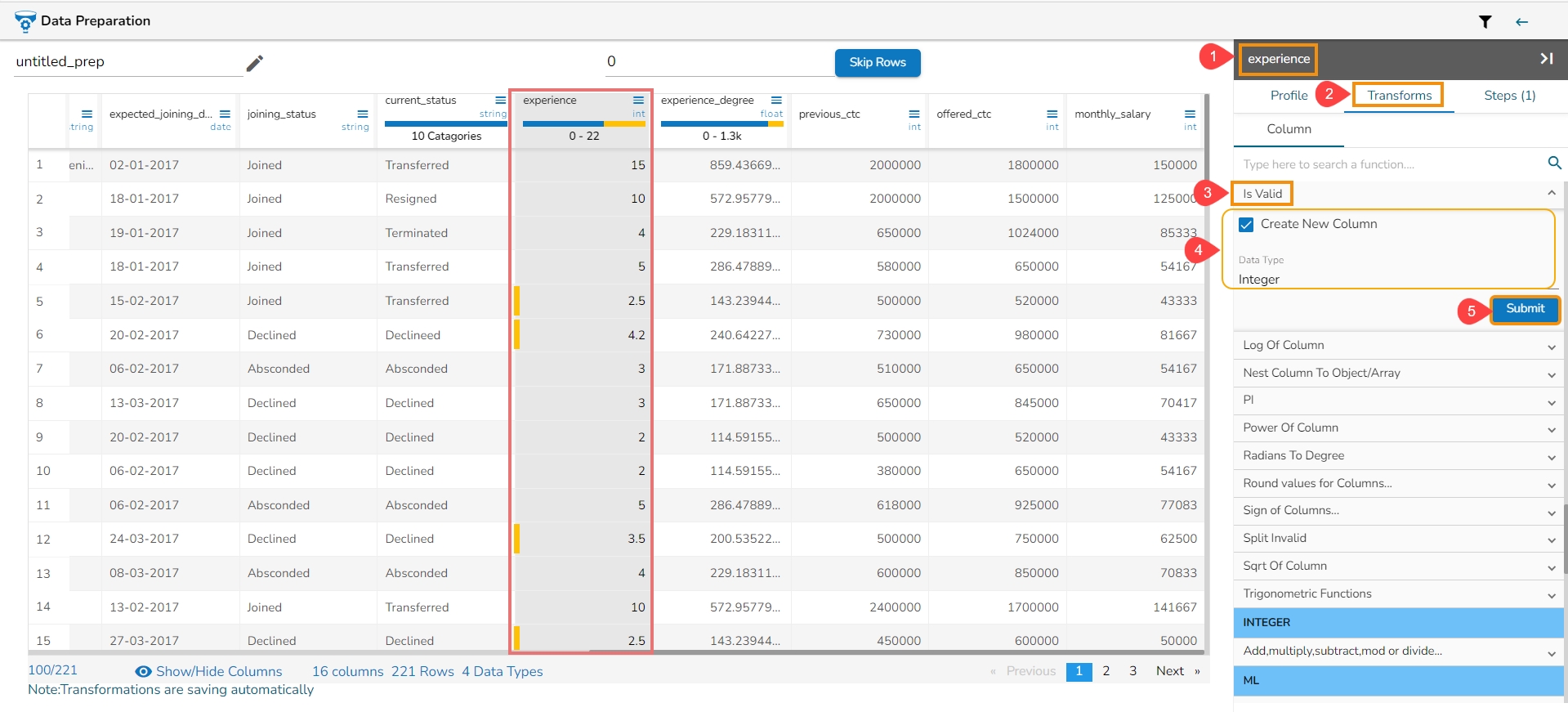

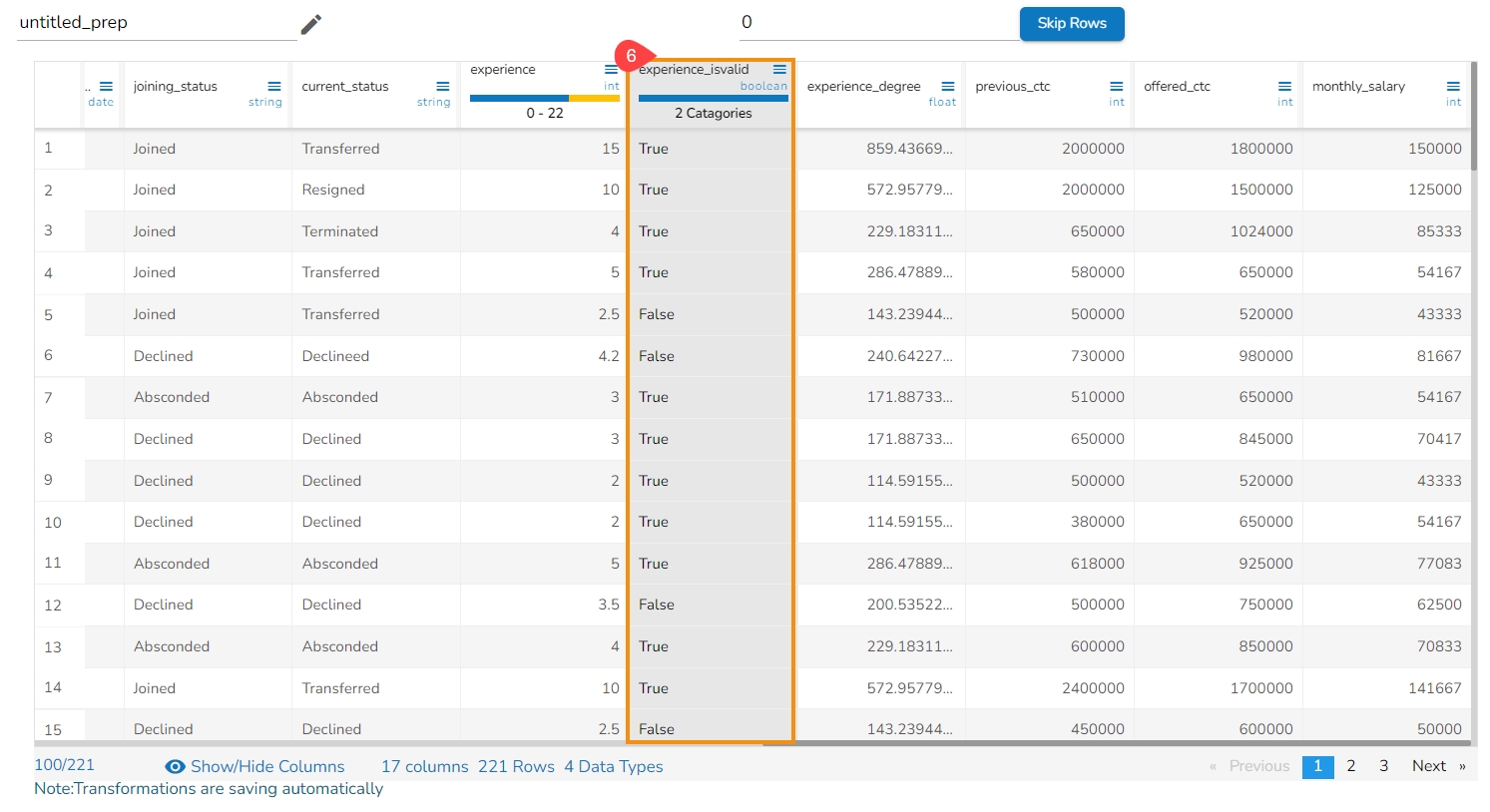

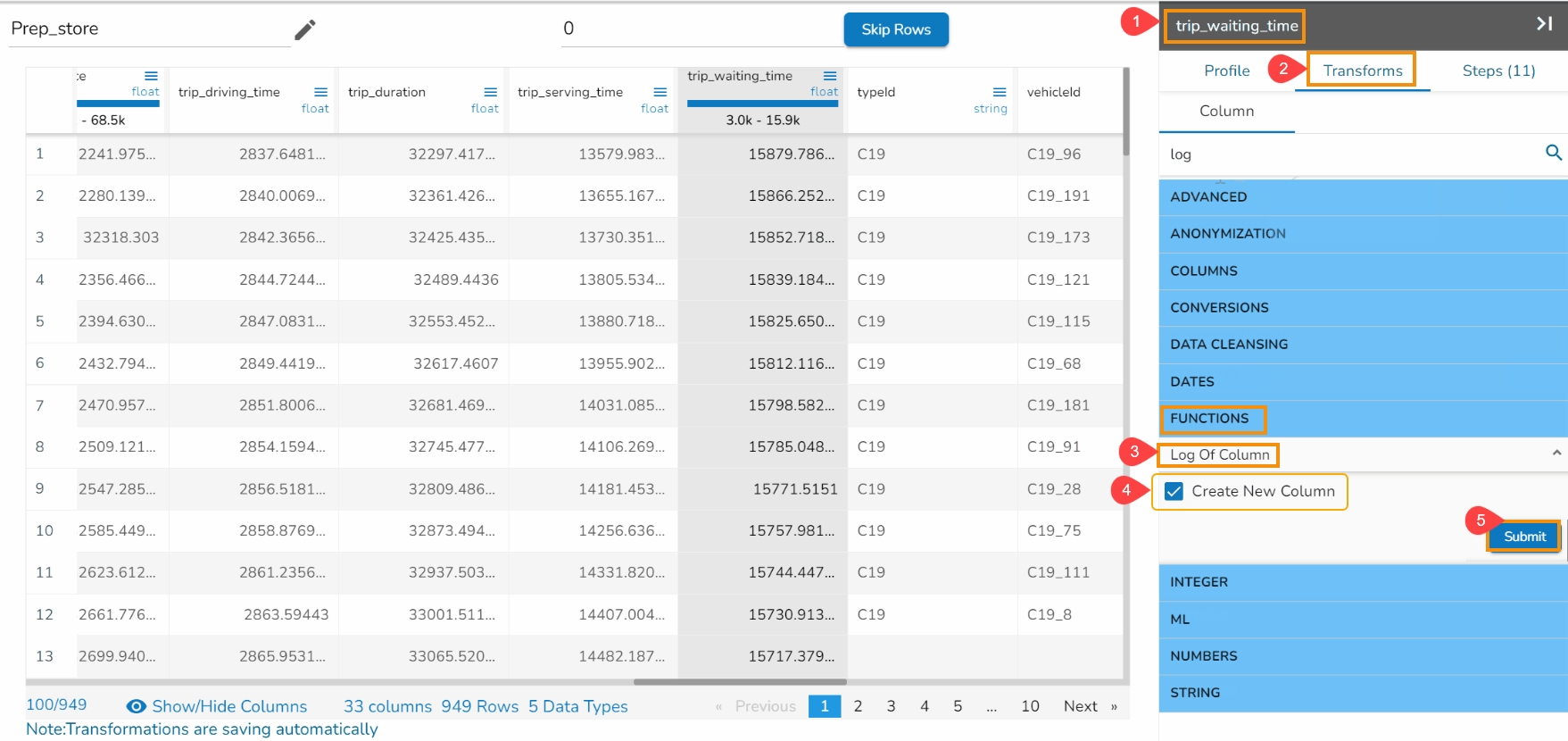

All the available Transforms are explained in this section. Please note that we are in process to update the UI changes for all the Transforms.

The Binarizer transform details are provided under this section.

It converts the value of a numerical column to zero when the value in the column is less than or equals to the threshold value and one if the value in the column is greater than threshold value.

Check out the given illustration on how to apply Binarizer transform.

Steps to apply Binarizer transform:

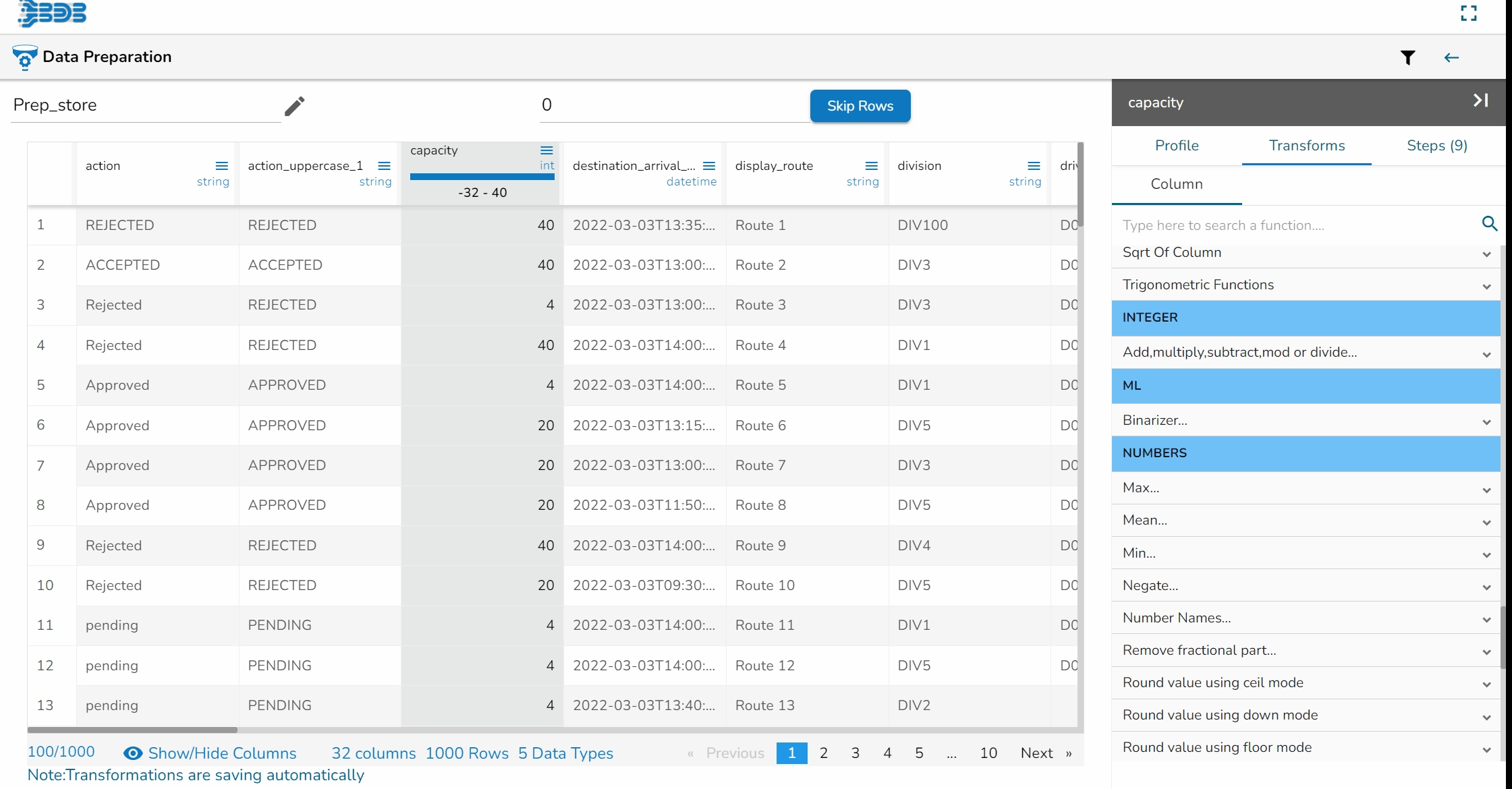

Select a numeric column from the dataset.

Open the Transforms tab.

Select the Binarizer transform from the ML category of transforms.

Provide a Threshold value.

Click the Submit option.

The Dataset gets a new column with the 1 and 0 values by comparing the actual values with the set threshold limit.

Binning, also known as discretization, involves converting continuous data into distinct categories or values. This is commonly done to simplify data analysis, create histogram bins, or prepare data for certain machine learning algorithms. Here are the steps to perform this transformation:

Select a Column: Choose the column containing the continuous data that you want to bin.

Select the Transform: Decide on the method of binning or discretization. This could include equal-width binning, equal-frequency binning, or custom binning based on domain knowledge.

Update the Number of Bin Size: Specify the number of bins or categories you want to create from the continuous data using the Binning/ Discretize values dialog box.

Submit It: Execute the binning process with the chosen column and specified number of bins by clicking the Submit option.

Result: The result will be new columns representing the binned or discretized values of the original continuous data.

By following these steps, you can effectively transform continuous data into discrete categories for further analysis or use in machine learning algorithms.

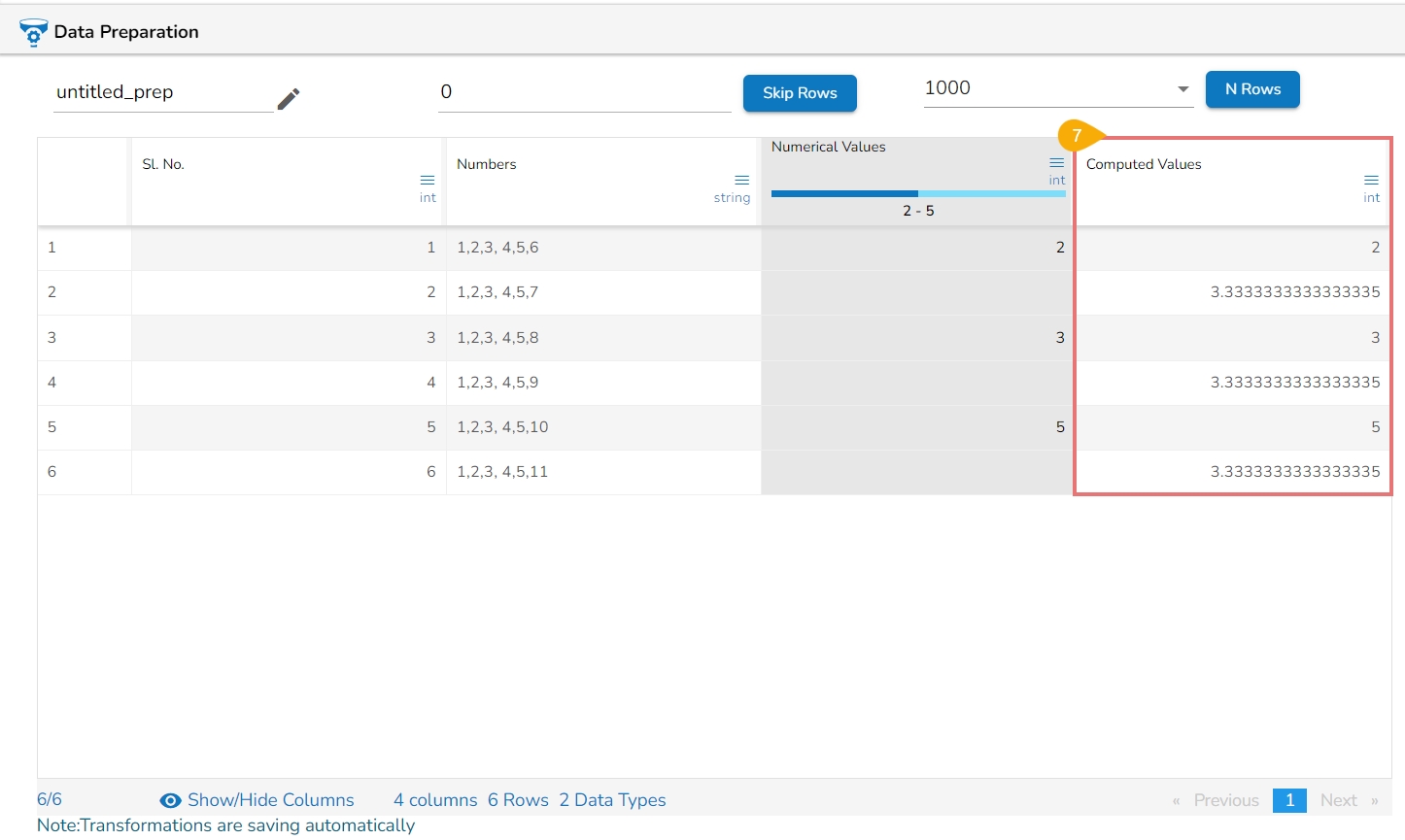

E.g., 1,2,3,4,5,6,7,8,9,10

No. of bins : 3

The result would be 0,0,0,0,1,1,1,2,2,2

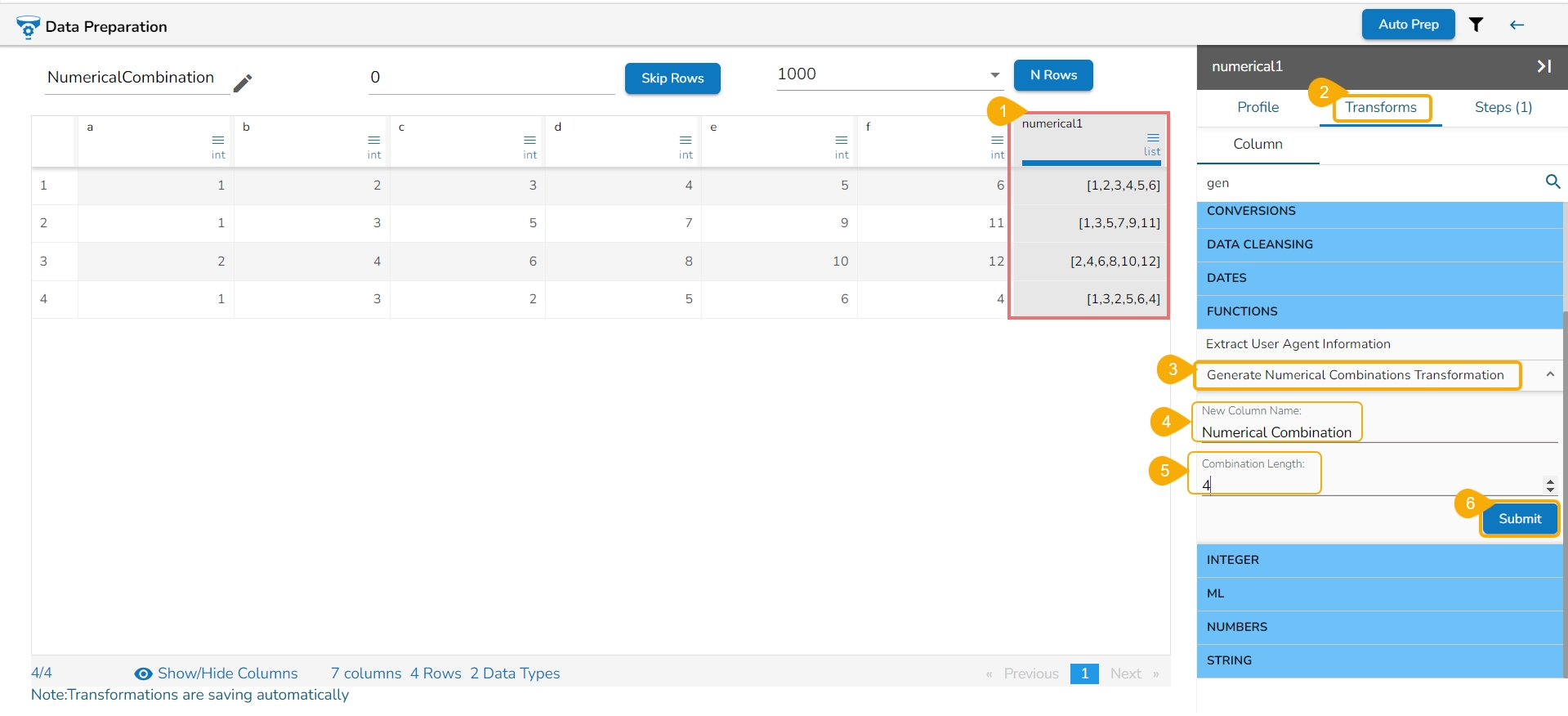

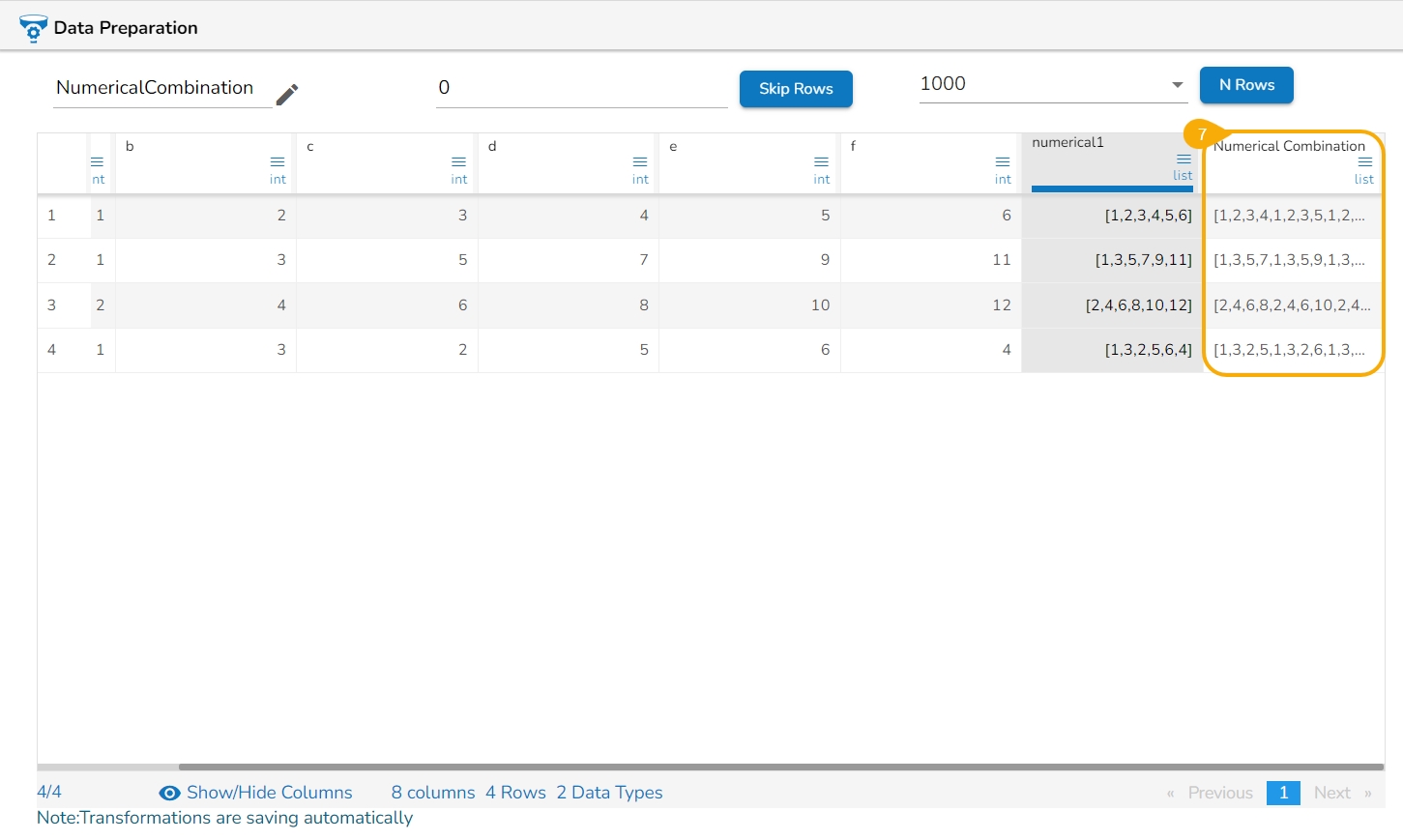

Expanding Window Transform is a common technique used in time series analysis and machine learning for feature engineering. It involves creating new features based on rolling statistics or aggregates calculated over expanding windows of historical data. Here are the steps to perform this transformation:

Select a Numeric Column: Choose a column containing numeric (integer or float) data that you want to transform using the expanding window method.

Select the Expanding Window Transform: Choose the Expanding Window transform option from the available transformations.

The Expanding window transform drawer opens.

Select Method (Min, Max, Mean): Decide on the method you want to apply for calculation within the expanding window. Options typically include Minimum (Min), Maximum (Max), and Mean. User can select multiple columns.

Submit It: Execute the expanding window transformation with the chosen column and method(s) by clicking the Submit option.

The output will be generated as follows:

If multiple methods are selected, new columns will be created with names indicating the method used. For example, if three methods are selected for a column named 'col1', the resulting columns will be named 'col1_Expanding_Min', 'col1_Expanding_Max', and 'col1_Expanding_Mean'.

Calculation:

col1_Expanding_Min: Compares each value to the smallest value from the column and updates the result. The minimum value will always be the least value from the column.

col2_Expanding_Max: Compares each value to the first cell (smallest value) and updates it if a higher value is encountered.

col1_Expanding_Mean: Calculates the mean by adding each value to the first cell value and dividing by the number of elements encountered so far in the expanding window.

The Feature Agglomeration is indeed used in machine learning and dimensionality reduction for combining correlated features into a smaller set of representative features. It's particularly useful when dealing with datasets containing a large number of features, some of which may be redundant or highly correlated with each other.

Here are the steps to perform the transformation:

Navigate to the Data Preparation workspace.

Select the Feature Agglomeration as the transform from the Transforms tab.

The Feature Agglomeration dialog opens.

Choose multiple numerical columns from your dataset.

Update the samples if needed.

Click the Submit option.

The output will contain the transformed features, where the number of resulting columns will be equal to the number of clusters specified or determined by the algorithm.

Each column will represent a cluster, which is a combination of the original features. The clusters are formed based on the similarity or correlation between features.

If the selected numerical columns are 3 and the sample size is 2, the resulting output will have 2 columns labeled as cluster_1 and cluster_2, respectively, representing the two clusters obtained from the Feature Agglomeration transformation.

The Label Encoding is a technique used to convert categorical columns into numerical ones, enabling them to be utilized by machine learning models that only accept numerical data. It's a crucial pre-processing step in many machine learning projects.

Here are the steps to perform Label Encoding:

Select a column containing string or categorical data from the Data Grid display using the Data Preparation workspace.

Choose the Label Encoding transform.

After applying the Label Encoding transform, as a result, a new column will be created where the categorical values are replaced with numerical values.

These numerical values are typically assigned in ascending order starting from 0. Each unique category in the original column is mapped to a unique numerical value.

For example:

If a column contains categories "Tall", "Medium", "Short", and "Tall", after applying Label Encoding, it will show the result as 0, 1, 2, 0, respectively. Each unique category gets a distinct numerical value assigned to it based on its position in the encoding scheme.

The lag transformation involves shifting or delaying a time series by a certain number of time units (lags). This transformation is commonly used in time series analysis to study patterns, trends, or dependencies over time.

Here are the steps to perform a lag transformation:

Navigate to the Data Preparation workspace.

Select the Lag Transform from the Transforms tab.

The Lag Transform dialog box opens.

Choose the numeric-based column representing the time series data.

Update the Lag parameter to specify the number of time units to shift or delay the time series. Provide a number to the Lag field. The Lag value should be 1 or more.

Click the Submit option to submit the transformation.

After applying the lag transformation, the result will be updated with a new column.

This new column represents the original time series data shifted by the specified lag.

The first few cells in the new column will be empty as they correspond to the lag period specified.

The subsequent cells will contain the values of the original time series data shifted accordingly.

For example, if we have a simple time series data representing the monthly sales of a product over a year with a lag of 2, the first two cells in the new column will be empty, and the subsequent cells will contain the sales data shifted by two months.

Month

Sales

Sales_lag_2

Jan

100

Feb

120

Mar

90

100

April

60

120

May

178

90

June

298

60

The Leave One Out Encoding transform is to encode categorical variables in a dataset based on the target variable while avoiding data leakage. It's particularly useful for classification tasks where you want to encode categorical variables without introducing bias or overfitting to the training data.

Here are the steps to perform Leave One Out Encoding transformation:

Select a string column for which the transformation is applied. This column should contain categorical variables.

Choose the Leave One Out Encoding transformation from the Transforms tab.

The Leave One Out Encoding dialog box appears.

Select an integer column which represents the target value used to calculate the mean for category values. This column is usually associated with the target variable in your dataset.

Submit the transformation by using the Submit option.

After applying the Leave One Out Encoding transformation, the result will be displayed as a new column.

This new column will contain the mean values of the occurrences for each record in the selected categorical column, excluding the target value in that record.

This encoding method helps to encode categorical variables based on the target variable while avoiding data leakage, making it particularly useful for classification tasks where you want to encode categorical variables without introducing bias or overfitting to the training data. Refer the following image as an example:

category

target

Result

A

1

0.5

B

0

0.5

A

1

0.5

B

1

0

A

0

1

B

0

0.5

One-Hot Encoding/ Convert Value to Column is a data preparation technique used to convert categorical variables into a binary format, making them suitable for machine learning algorithms that require numerical input. It creates binary columns for each category in the original data, where each column represents one category and has a value of 1 if the category is present in the original data and 0 otherwise.

Here are the steps to perform One-Hot Encoding:

Select Categorical Column: Choose the categorical column(s) from your dataset that you want to encode. These columns typically contain string or categorical values.

Apply One-Hot Encoding: Use the One-Hot Encoding transformation to convert the selected categorical column(s) into a binary format. By clicking the One-Hot Encoding transform, it gets applied to the values of the selected categorical column.

Result Interpretation: The output will be a set of new binary columns, each representing a category in the original categorical column. For each row in the dataset, the value in the corresponding binary column will be 1 if the category is present in that row, and 0 otherwise.

Example: Suppose you have a dataset with a categorical column "Color" containing the following values: "Red", "Blue", "Green", and "Red".

Original Dataset:

Color

Red

Blue

Green

Red

After applying One-Hot Encoding:

Each row represents a category from the original column, and the presence of that category is indicated by a value of 1 in the corresponding binary column. For instance, the first row has "Red" in the original column, hence "Color_Red" is 1, while the others are 0. Like wise "Color_Blue" and "Color_Green" are displayed.

1

0

0

0

1

0

0

0

1

1

0

0

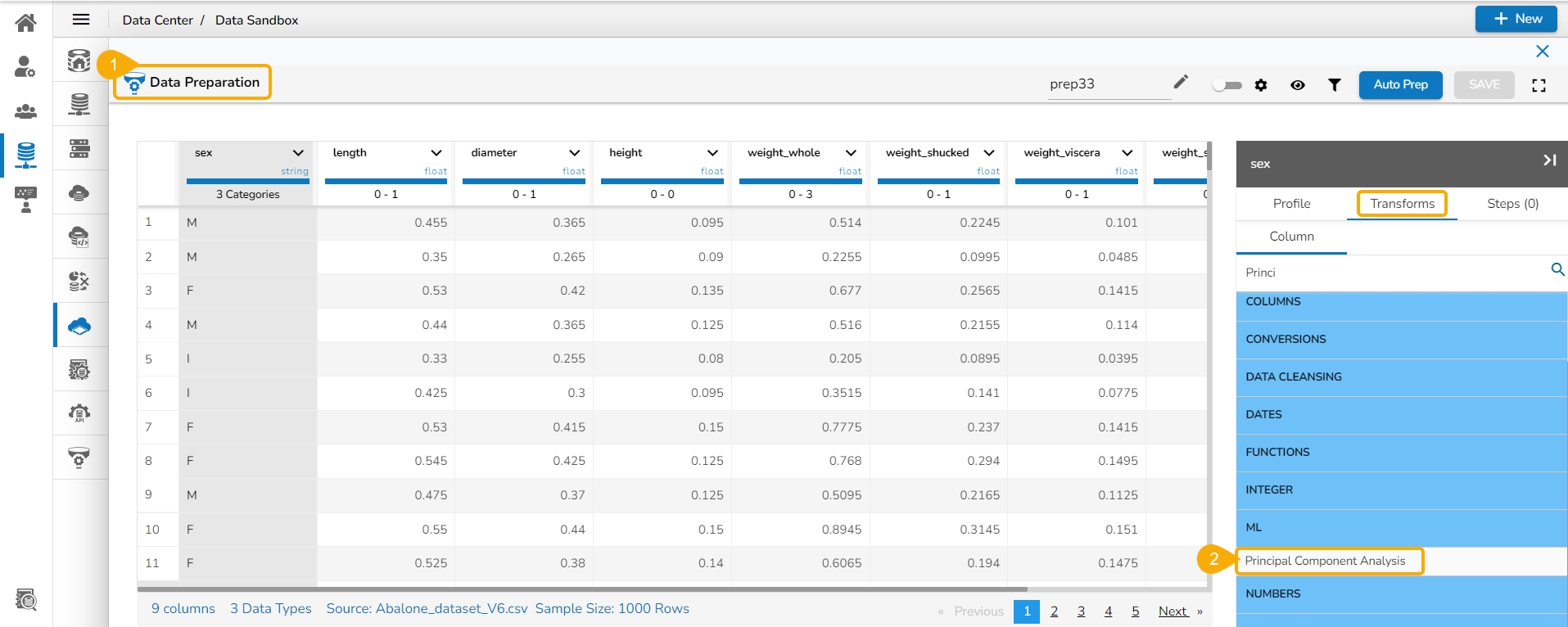

Principal Component Analysis (PCA) is a dimensionality reduction technique used to identify patterns in data by expressing the data in a new set of orthogonal (uncorrelated) variables called principal components. The PCA is widely used in various fields such as data analysis, pattern recognition, and machine learning.

Here are the steps to perform Principal Component Analysis (PCA):

Navigate to the Data Preparation workspace.

Select the Principal Component Analysis transform using the Transforms tab.

The Principal Component Analysis dialog window opens.

Select multiple numerical columns by using the given checkboxes.

The selected columns get displayed separated by commas.

Output Features: Update output features by providing a number based on the number provided for this field, the result columns are inserted in the data set.

Click the Submit option.

Here's an illustration to explain the Principal Component Analysis:

Suppose we have a dataset with two numerical variables, "Height" and "Weight", and we want to perform PCA on this dataset.

Original Dataset:

170

65

165

60

180

70

160

55

After standardization:

0.44

0.50

-0.22

-0.50

1.33

1.00

-1.56

-1.00

Based on the provided update output features, the result column(s) get added.

Please Note: The selected Output Feature for the chosen dataset is 1, therefore in the above given image one column has been inserted displaying the result values.

The Rolling Data transform is used in time series analysis and feature engineering. It involves creating new features by applying transformations to rolling windows of the original data. These rolling windows move through the time series data, and at each step, summary statistics or other transformations are calculated within the window.

Here are the steps to perform the Rolling Data transform:

Select a numeric (int/ float) based column from your dataset. This column represents the time series data on which you want to apply the Rolling Data transformation.

Select the Rolling Data transform.

Update the Window size. Specify the size of the rolling window. This determines the number of consecutive data points included in each window. The window size should be a numeric value of 1 or larger number.

Select a Method out of the given choices (Min, Max, Mean). It is possible to choose all the available methods and apply them on the selected column.

Click the Submit option to apply the rolling window transformation.

Please Note: Window Size can be updated by any numeric values which must be 1 or larger values.

The result will be the creation of new columns based on the selected method and the specified window size. Each new column will contain the summary statistic or transformation calculated within the rolling window as it moves through the time series data.

For Example: Suppose we have a time series dataset with a numeric column named "Value" containing the following values: [1, 2, 3, 4, 5, 6, 7, 8, 9, 10], and we want to apply a rolling window transformation with a window size of 2 and calculate the Min, Max, and Mean within each window.

In this example, each new column represents the summary statistic (Min, Max, or Mean) calculated within the rolling window of size 2 as it moves through the "Value" column.

The Result Columns:

"Value_Min": [null, 1, 2, 3, 4, 5, 6, 7, 8, 9]

"Value_Max": [null, 2, 3, 4, 5, 6, 7, 8, 9, 10]

"Value_Mean": [null, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5]

Please Note: The first cell in each new column is null because there are no previous cells to calculate the summary statistic within the initial window.

The Singular Value Decomposition transform is a powerful linear algebra technique that decomposes a matrix into three other matrices, which can be useful for various tasks, including data compression, noise reduction, and feature extraction. In the context of transformations for data analysis, Singular Value Decomposition (SVD) can be used as a technique for dimensionality reduction or feature extraction. It works by breaking down a matrix into three constituent matrices, representing the original data in a lower-dimensional space.

Here are the steps to perform the Singular Value Decomposition transform:

Select the Singular Value Decomposition transform using the Transforms tab.

The Singular Value Decomposition window opens.

Select multiple numeric types of columns from the dataset using the drop-down menu.

Update the Latent Factors.

Click the Submit option.

The result should be based on the latent factor update size. For example, if it's 2 the result column will be 2.

Target-based Quantile Encoding is particularly useful for regression problems where the target variable is continuous. It helps in encoding categorical variables based in a dataset based on the distribution of the target variable within each category which can potentially improve the predictive performance of regression models.

Here are the steps to perform the Target-based Quantile Encoding transform:

Select a string column from the dataset on which the Target-based Quantile Encoding can be applied.

Select the Target-based Quantile Encoding transformation from the Transforms tab.

The target-based quantile encoding dialog box opens.

Select an integer column from the dataset.

Click the Submit option.

The result will be a new encoded column for each value in the selected column.

E.g.,

category

target

Result

A

1

0.875

B

0

0.125

A

1

0.875

B

1

0.125

A

0

0.875

B

0

0.125

Target Encoding, also known as Mean Encoding or Likelihood Encoding, is a method used to encode categorical variables based on the target variable(or another summary statistic) for each category. It replaces categorical values with the mean of the target variable for each category. This encoding method is widely used in predictive modeling tasks, especially in classification problems, to convert categorical variables into a numerical format that can be used as input to machine learning algorithms.

Here are the steps to perform the Target Encoding transform:

Select a category (string) column type for the transformation.

Select the Target Encoding transformation from the Transforms tab.

The Target Encoding dialog box opens.

Select the Target Column using the drop-down option (it should be numeric/integer column).

Click the Submit option.

The result will be displayed in a new column with the encoded mean values for each category value in the selected column will be displayed.

E.g.,

Category

Target

Result

A

1

0.5257

B

0

0.4247

A

1

0.5257

B

1

0.4247

A

0

0.5257

B

0

0.4247

The Weight of Evidence Encoding is used in binary classification problems to encode categorical variables based on their predictive power to the target variable. It measures the strength of the relationship between a categorical variable and the target variable by examining the distribution of the target variable across different categories.

Here are the steps to perform the Weight of Evidence Encoding transform:

Select a categorical column (string column) from the dataset.

Select the Weight of evidence encoding transform from the Transforms tab.

Select a target column with Binary Variables (like true/false, 0/1). E.g., the selected column in this case, is the target_value column.

Click the Submit option.

The result will be as new column where the distribution of the target variable across different categories.

Find out the clusters based on the pronunciation sound and edit the bulk data in a single click.

Check out the given illustration on the Cluster & Edit transform.

Steps to perform the Cluster & Edit transform.

Select a column from the given dataset.

Open the Transforms tab.

Select the Cluster and Edit transform from the Advanced category.

The Cluster & Edit window opens.

The Method drop-down uses the Soundex phonetic algorithm for indexing names by sound as pronounced in English.

The Values found column lists number of values found from the data set related to a specific sound. E.g., In the given image the Values found display 5 categories.

Select a value by using the checkbox that needs to be modified or changed. E.g., the 'Declineed' has been selected in the given example.

Navigate to the Replace Value list.

Search for a replace value or enter a value that you wish to be used as replace value using the drop-down menu from the Replace Value column.

Click the Submit option.

The selected values from the column get modified in the data set.

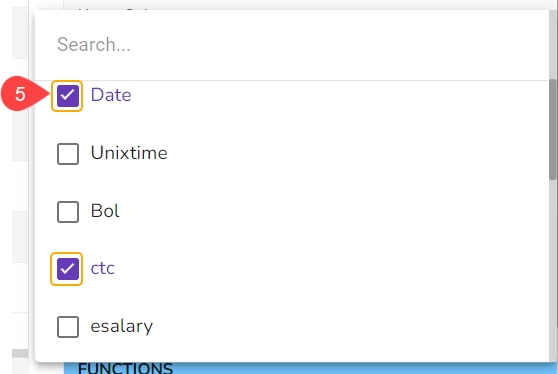

This transform helps to execute expressions.

Check out the given illustration to understand the Expression Editor transform.

Steps to perform the Expression Editor transform:

Navigate to a Dataset within the Data Preparation framework.

Navigate to the Transforms tab.

Open the Expression Editor from the Advanced transforms.

The Expression Editor window opens displaying the following columns:

Functions: The first column contains functions for the user to search for a function. By using the double clicks on a function, it gets added to the given space provided for creating a formula.

Columns: The second column lists all the column names available in the selected dataset.

The Formula space is provided to create and execute various formulas/ executions.

Use either of the following ways to consume the created expression or formula in the dataset.

Update a selected column by using the Update column option.

Create a new column with the created expression, and provide column name for the New Column. A new column has been created in the example given below:

Click the Submit option to either add a new column or update the selected column based on the executed formula/ expression.

The recently created or updated column with Formula gets added to the dataset.

Anomaly detection is used to identify any anomaly present in the data. i.e., Outlier. Instead of looking for usual points in the data, it looks for any anomaly. It uses the Isolation Forest algorithm.

Check out the given walk-through on Find Anomaly transform.

Steps to perform the Find Anomaly transform:

Select a dataset within the Data Preparation framework.

Navigate to the Transforms tab.

Select the Find Anomaly transform from the ADVANCED category.

Configure the following information:

Select Feature Columns: Select one or more columns where you want to find the anomaly.

Maximum Sample Size: The Isolation Forest algorithm takes the training data of a given sample size to find out the normal value in the dataset.

Contamination (%): It is the percentage of observations we believe to be outliers. It varies from 0 to 1 (both inclusive).

Anomaly Flag Name: The result is either -1 or 1. 1 means the data is standard, and -1 means data is an outlier. This information gets stored in the new column given in the anomaly flag name.

Click the Submit option after the required details are provided.

The anomaly gets flagged under the column that has been named using the Anomaly Flag Name option.

Please Note: The other needed parameters such as Estimators and seed values are considered based on their default values to run the Isolation Forest logic on the selected dataset sample.

This transform helps to perform SQL queries.

Check out the given illustration on the SQL Transform.

Steps to perform the SQL transform:

Select a dataset within the Data Preparation framework.

Navigate to the Transforms tab.

Open the SQL Transform from the Advanced transforms.

Please Note: Function syntax and small example comes under the text area by using double-clicks on the functions.

Click the Submit option to add a new column based on the query result.

The SQL Editor page opens.

The First column contains SQL functions, the user can search for a function and add it to the given text space provided for writing query.

The Second column lists all the column names available in the dataset.

The Text area is provided for writing queries.

Based on the selected function an example will be displayed below the text space.

Click the Submit option.

A new column gets added to the dataset reflecting the condition provided through the SQL transform.

Please Note: The SQL Transform & Expression Editor support only Pandas SQL Queries.

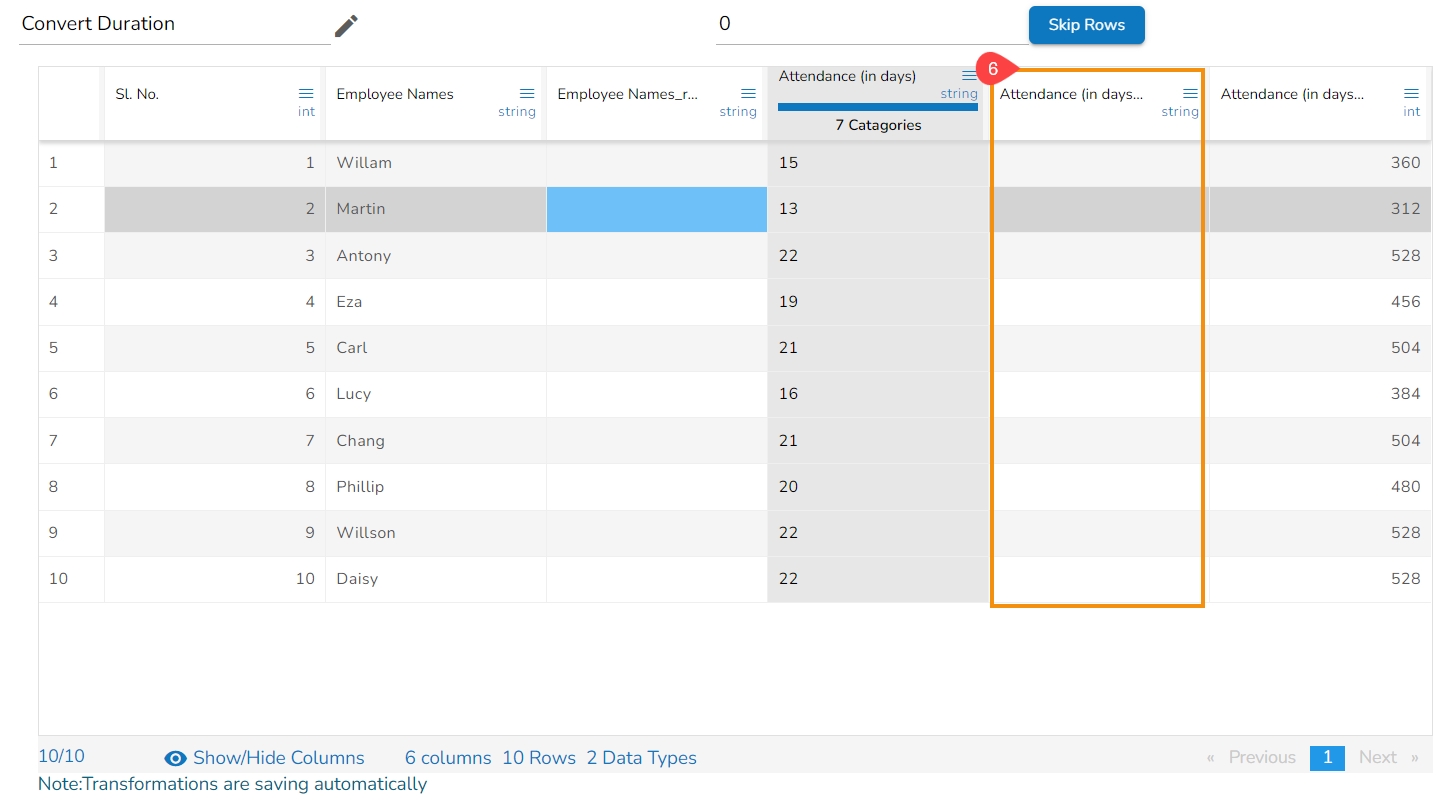

The transform converts any duration (day, hour, minute, seconds, milliseconds) to any specified duration.

To perform the transform, select the column which has the duration to be converted and specify the duration type.

Check out the given illustration on how to apply Convert Duration.

Select a column from the data set with the time duration.

Navigate to the Transforms tab.

Select the Convert Duration transform from the Conversion category.

Enable the Create new column option to create a new column.

From: The type of source interval

To: The type of destination interval

Precision: The decimal points to be retained.

Click the Submit option.

The selected column values undergo a transformation to align with the chosen conversion duration. E.g., For instance, in this scenario, the selected column values undergo a conversion, transitioning from the unit of days to hours.

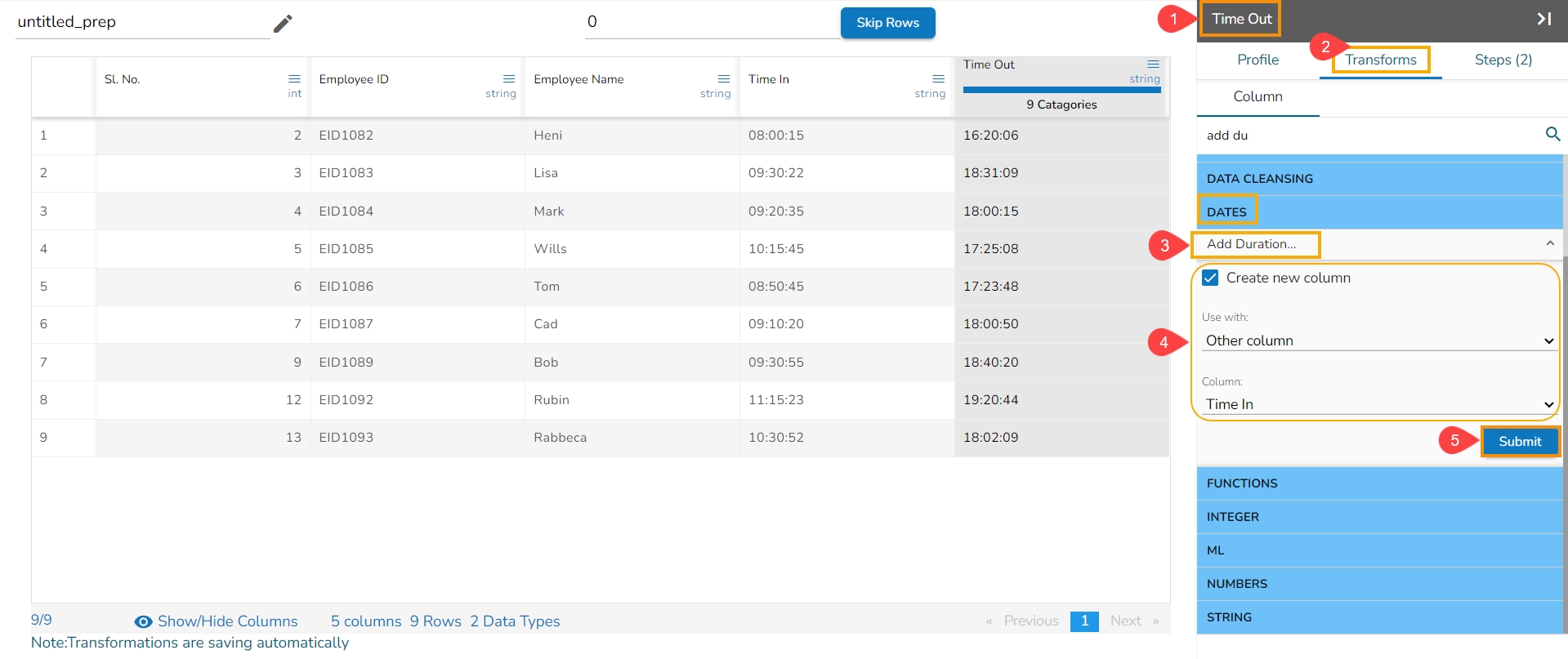

The transform adds two-time values. It can either add the selected column with a time value or time from another column.

Please Note: The transform supports adding time into ‘hh:mm:ss.mmm’ and ‘hh:mm:ss’ formats.

Use with: Specify whether to fill with a value or another column value

Column/ Value: The value with which the column must be added, or the column with which the selected column value must be added.

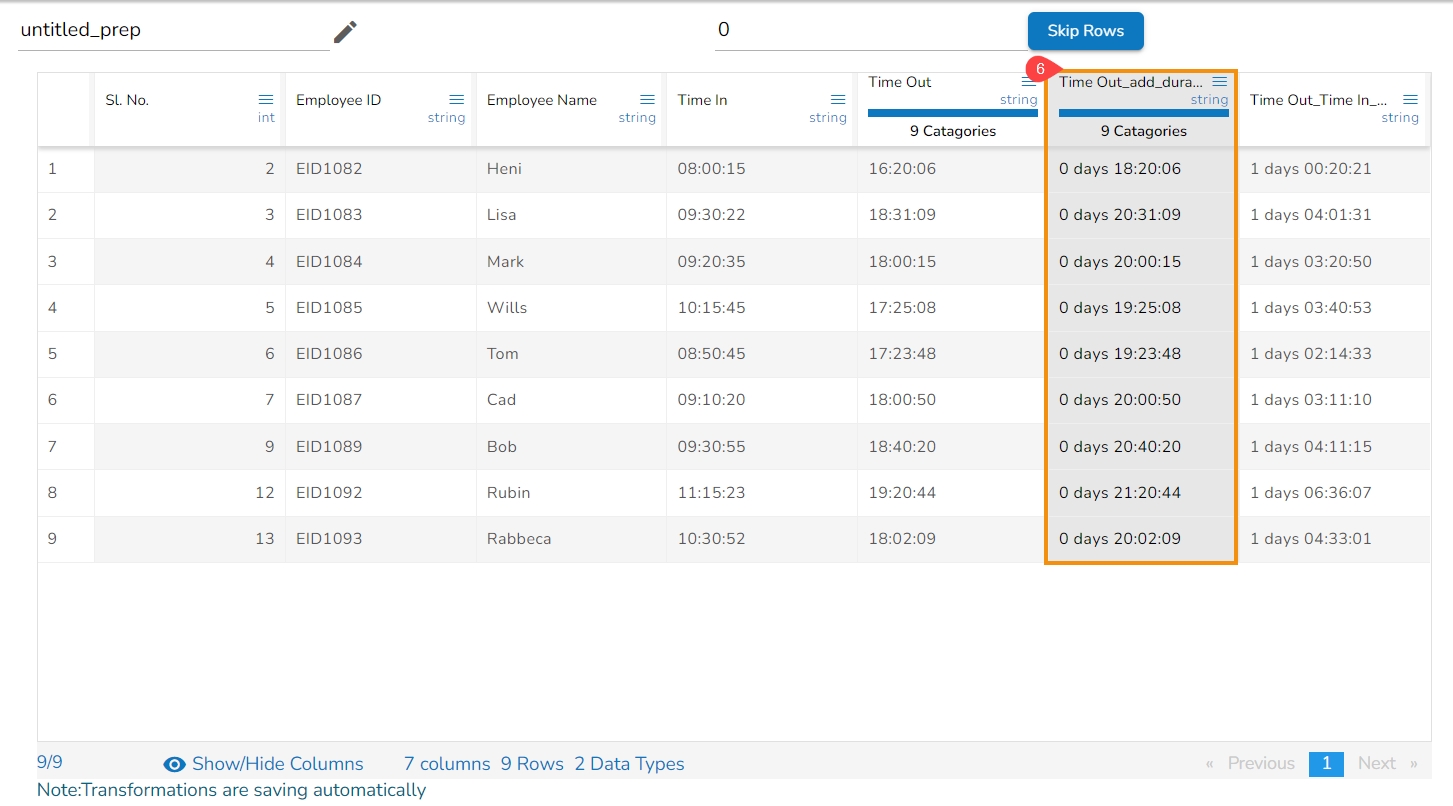

The Add Duration transform is applied to the timecol2, and the selected other column is timecol3 to configure the transform:

Select a column with the time values (In this case, the selected column is Time Out).

Open the Transforms tab.

Select the Add Duration transform from the Date transform category.

Enable the Create new column option, if you wish to display the transformed data in a new column.

Select the Other Column option from the drop-down option.

Select another column using the drop-down option from where the duration values are to be counted (In this case, the selected column is Time In column).

Click the Submit option.

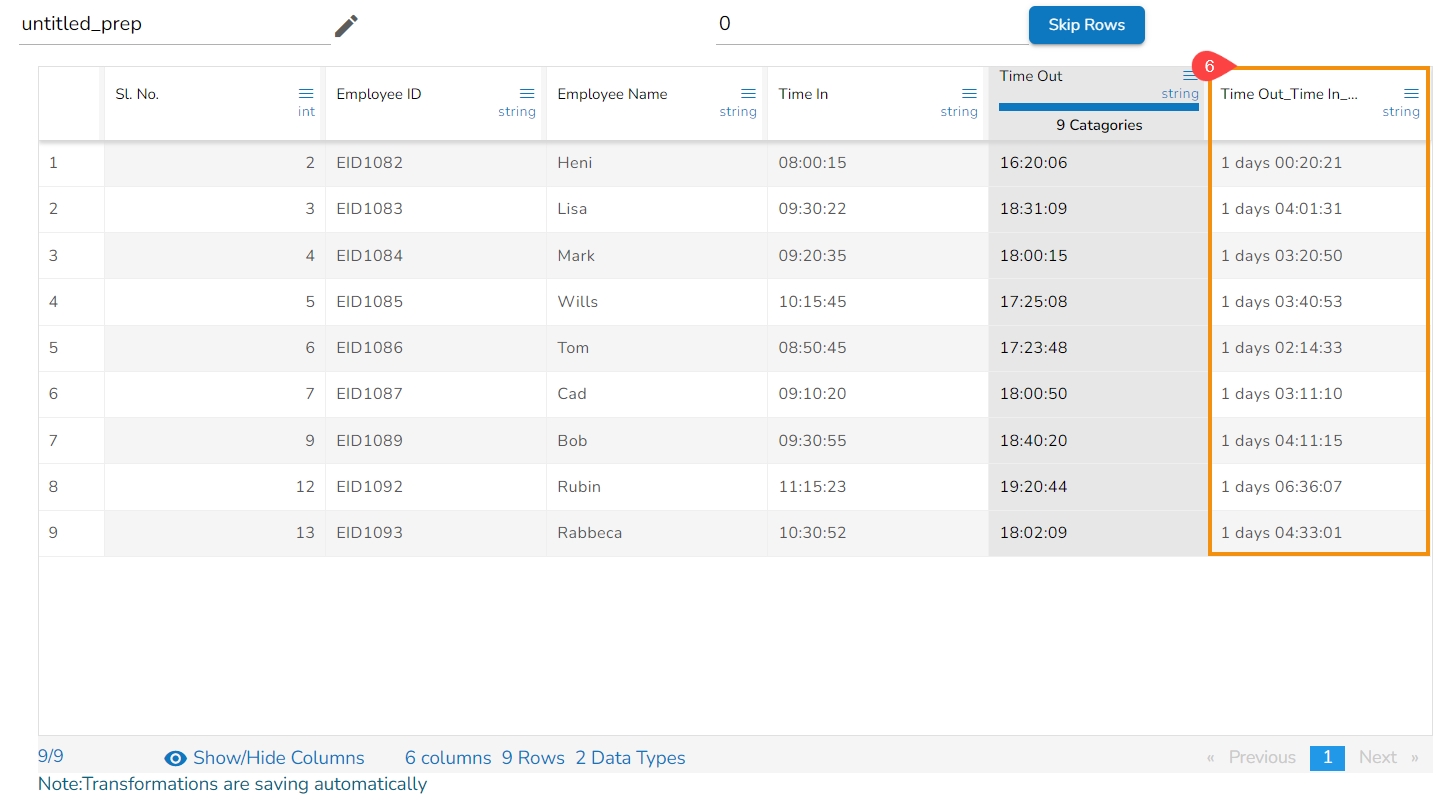

A new column gets created displaying the duration values as displayed below:

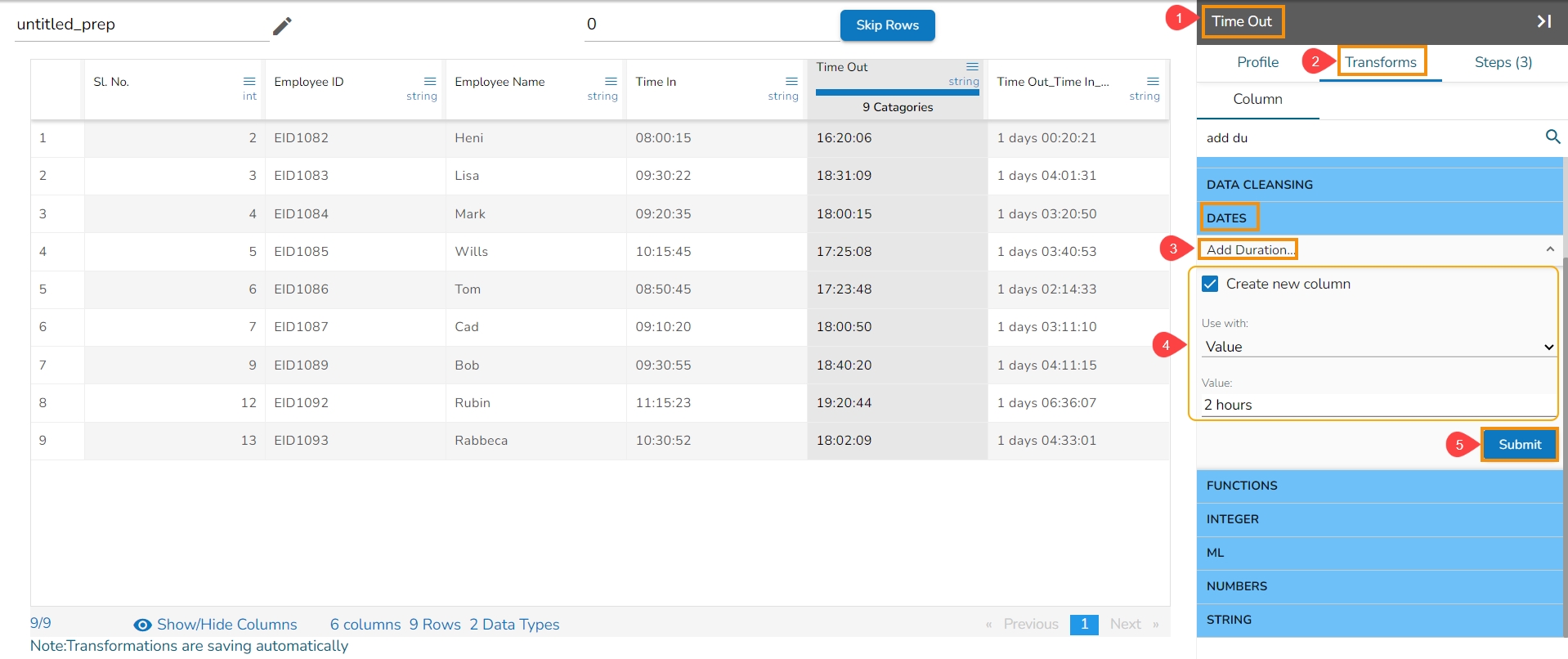

The Add Duration transform has been applied to the Time Out column with Value option where in the set value is 2 hours.

A new column gets added to the Data Grid displaying duration based on the set value as shown in the below-given image:

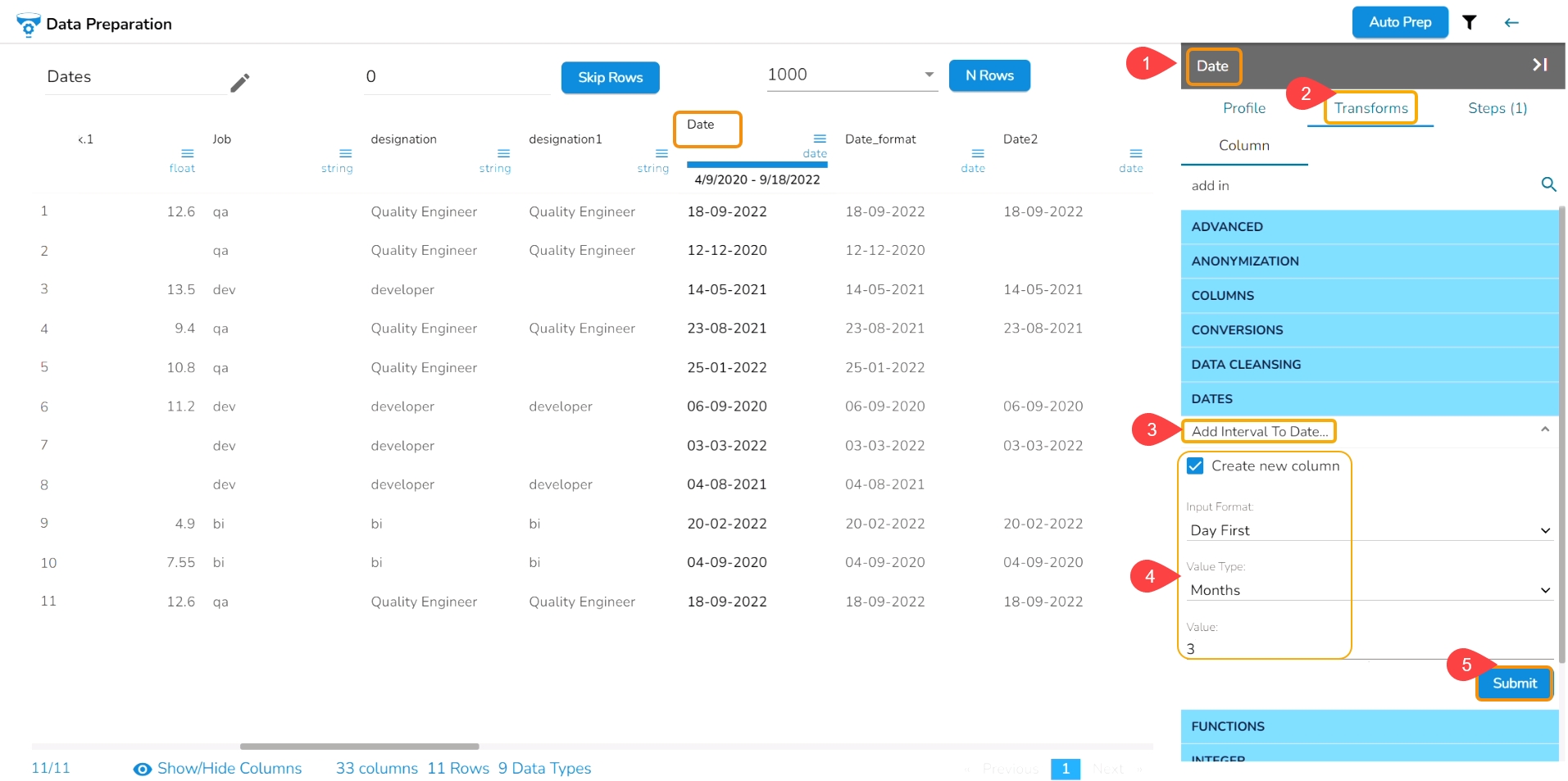

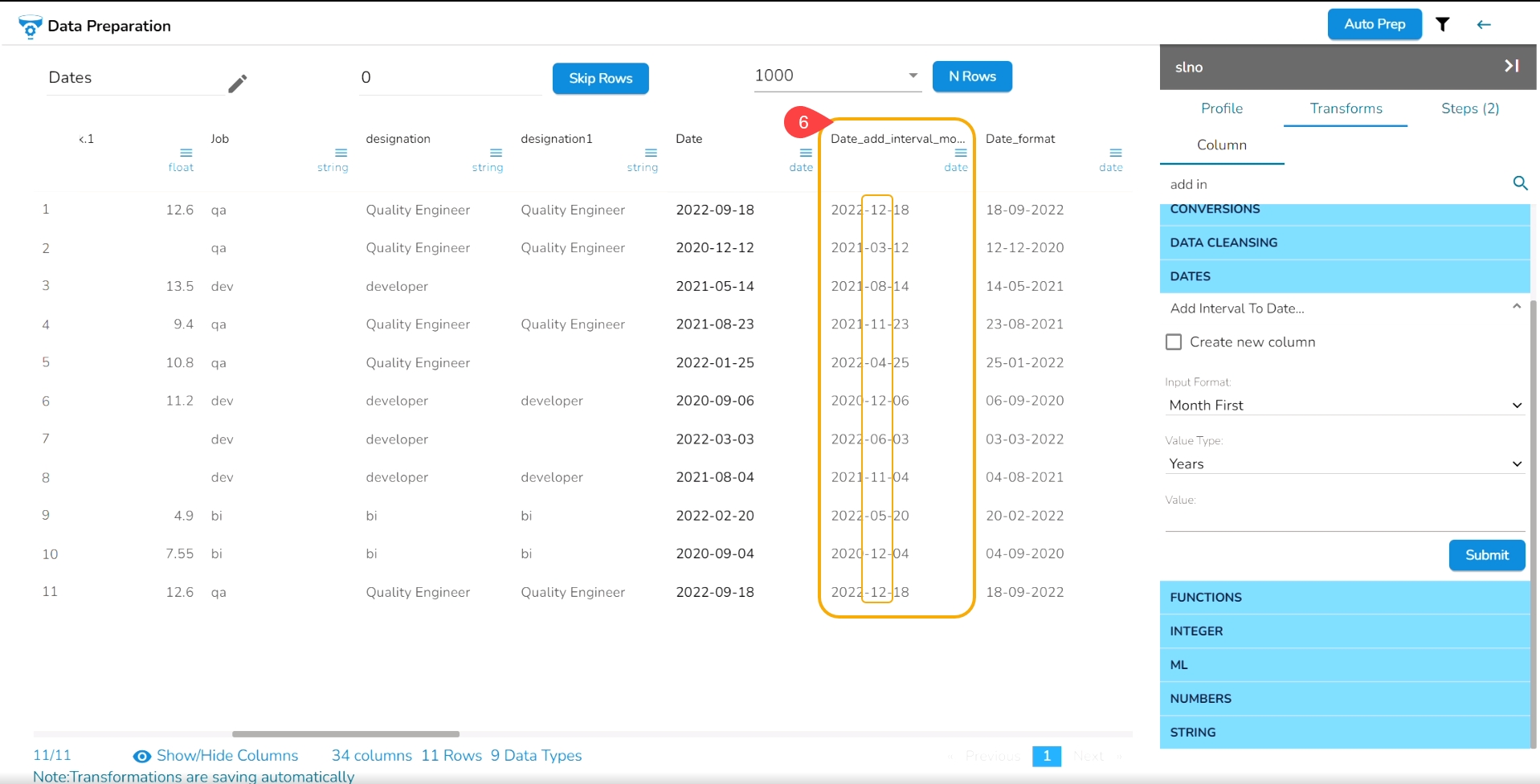

It adds the time duration specified to the selected datetime column.

Check out the given walk-through on how to use the Add Interval to Date transform.

Select a column with Date values from the data grid.

Open the Transforms tab.

Select the Add Interval to Date transform from the Dates category.

Enable the Create new column option to create a new column with the transform result.

Input Format: It is used to specify the format of the selected Date column format. It can have values ‘Year first’, ‘Month first’, and ‘Day first.’

Value Type: It specifies the type of duration which acts as the operand for the addition. The value type can be years, months, days, weeks, hours, minutes or milliseconds

Value: The value or the operand that must be added with the selected column

Click the Submit option.

E.g., The Add Interval to Date transform has been applied to the Date column with the selected value of 3 months,

As a result, a new column gets added to the Data Grid reflecting the transformed data as per the set value.

Please Note: The transform supports the datetime column of ‘yyyy-mm-dd’ into the ‘hh:mm:ss’ format.

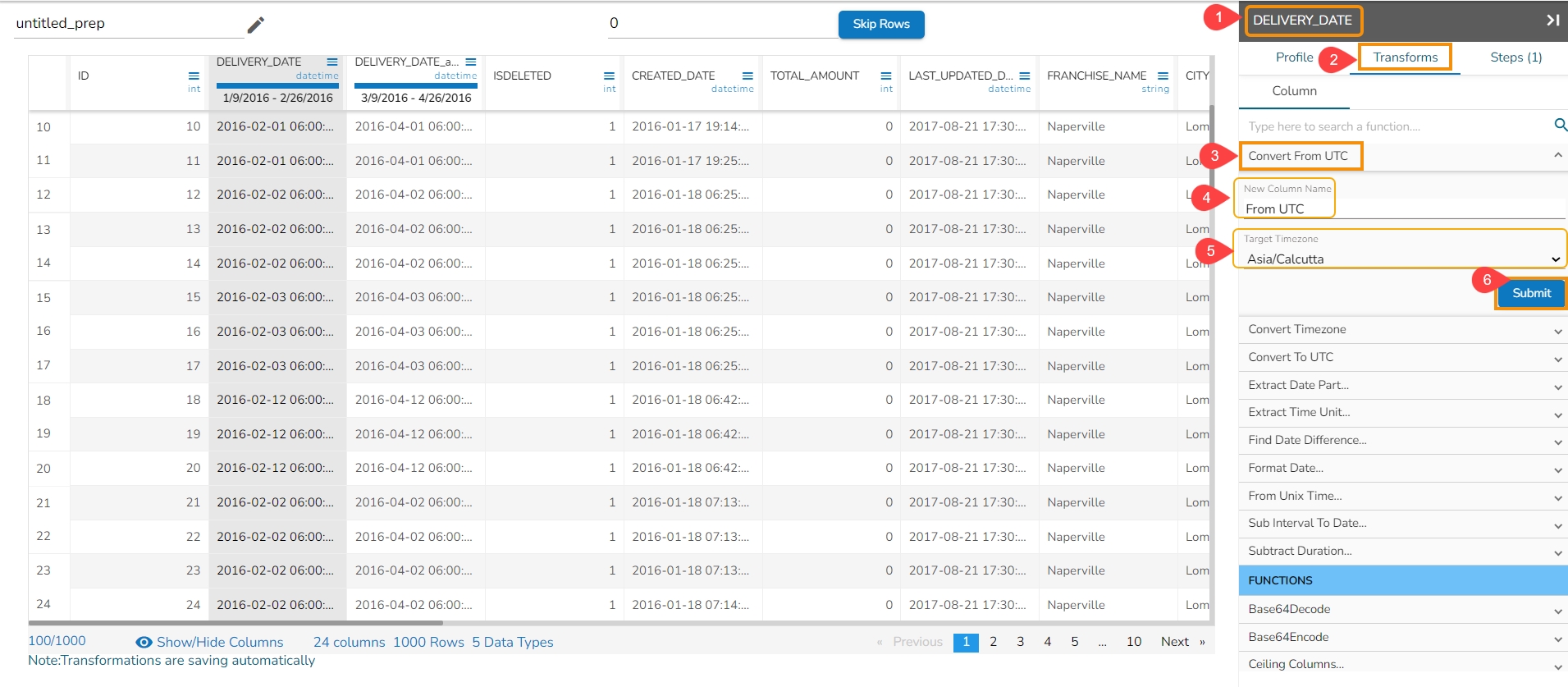

Converts the DateTime value to the corresponding value of the specified time zone. Input can be a column of Datetime values and it’s assumed to be in the UTC time zone.

Please Note: Inputs with time zone offsets are invalid.

Check out the walk-around on the Convert From UTC transform.

Steps to perform the transformation:

Select a DateTime column from the Dataset.

Navigate to the Transforms tab.

Select the Covert From UTC transform from the Dates category

Pass new column name.

Pass the Target Time zone in which the date to be converted.

Click the Submit option.

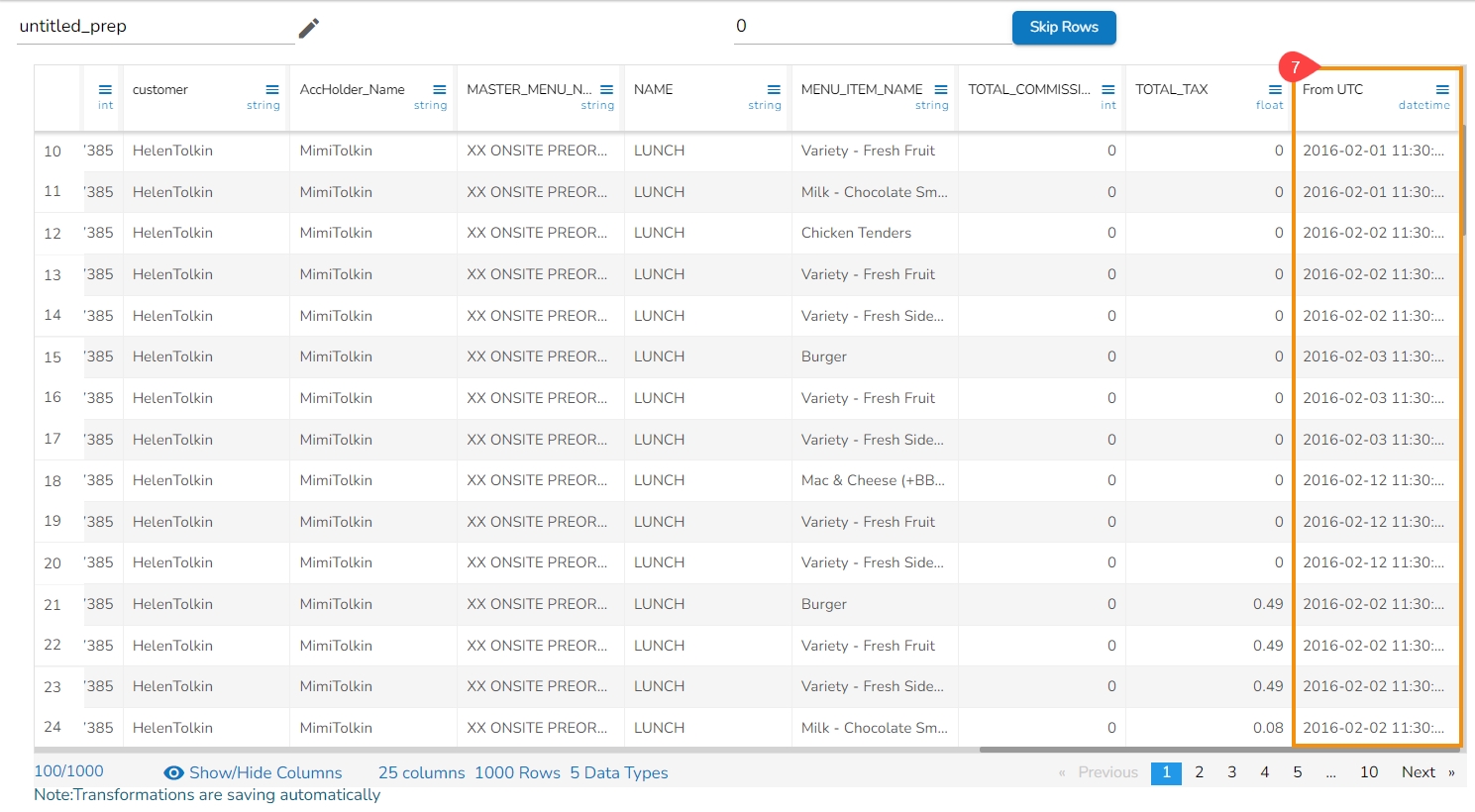

Result will come in a new column with the converted time zone present in it.

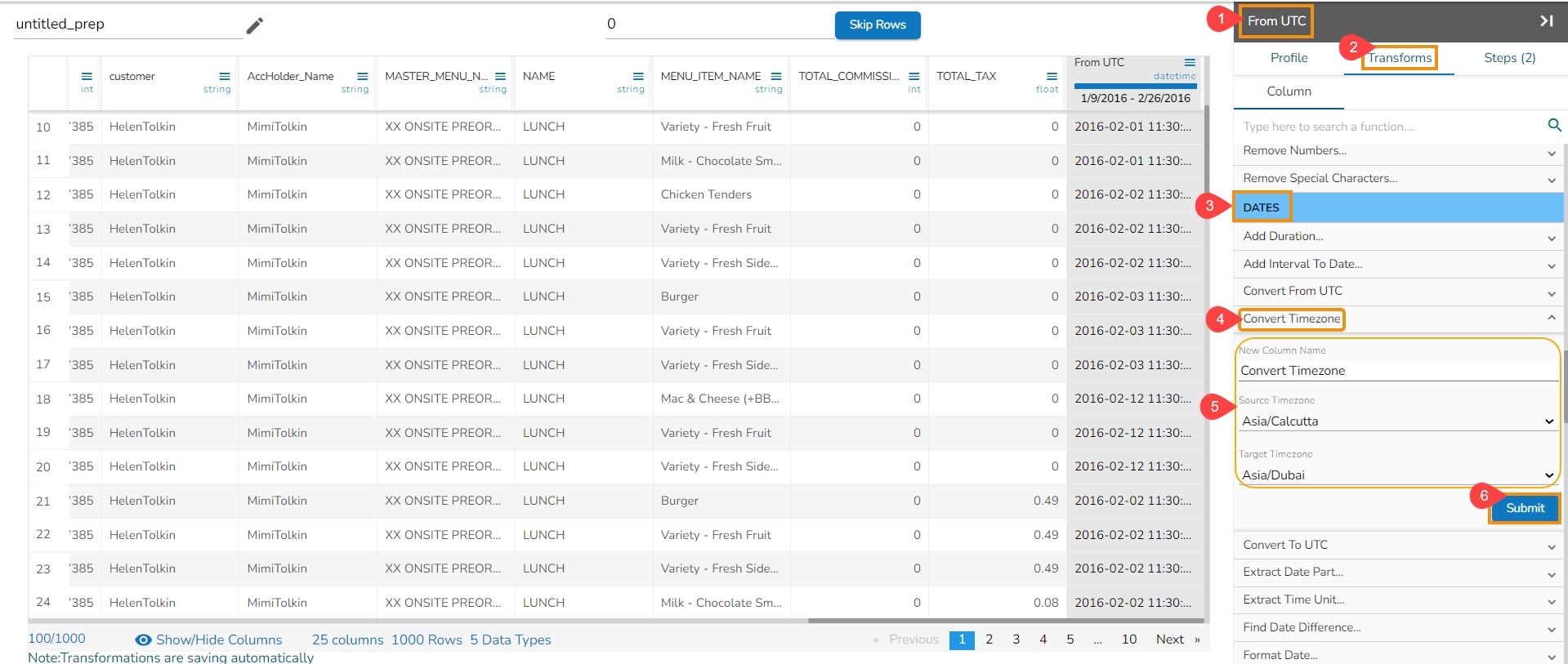

The Convert Timezone transformation converts Datetime value in a specified time zone to corresponding value second specified time zone.

Please Note: Inputs with time zone offsets are invalid.

Steps to perform the transformation:

Select a DateTime column from the Data Grid.

Navigate to the Dates transforms category.

Select the Convert Timezone transform.

Pass new column name.

Pass the Source Time zone for the selected date column.

Pass the Target Time zone in which the date to be converted.

Click the Submit option.

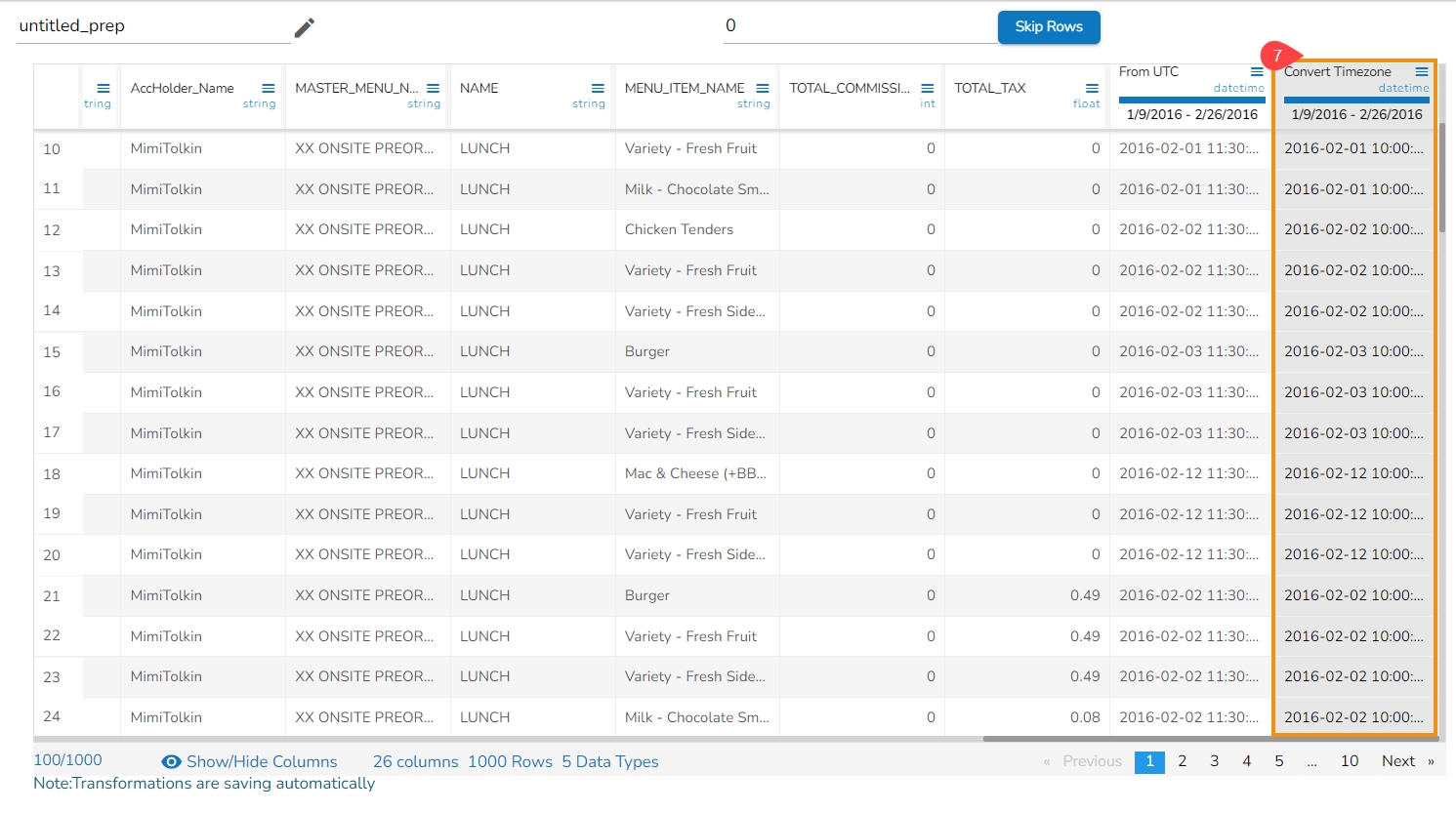

Result will come in a new column with the converted time zone.

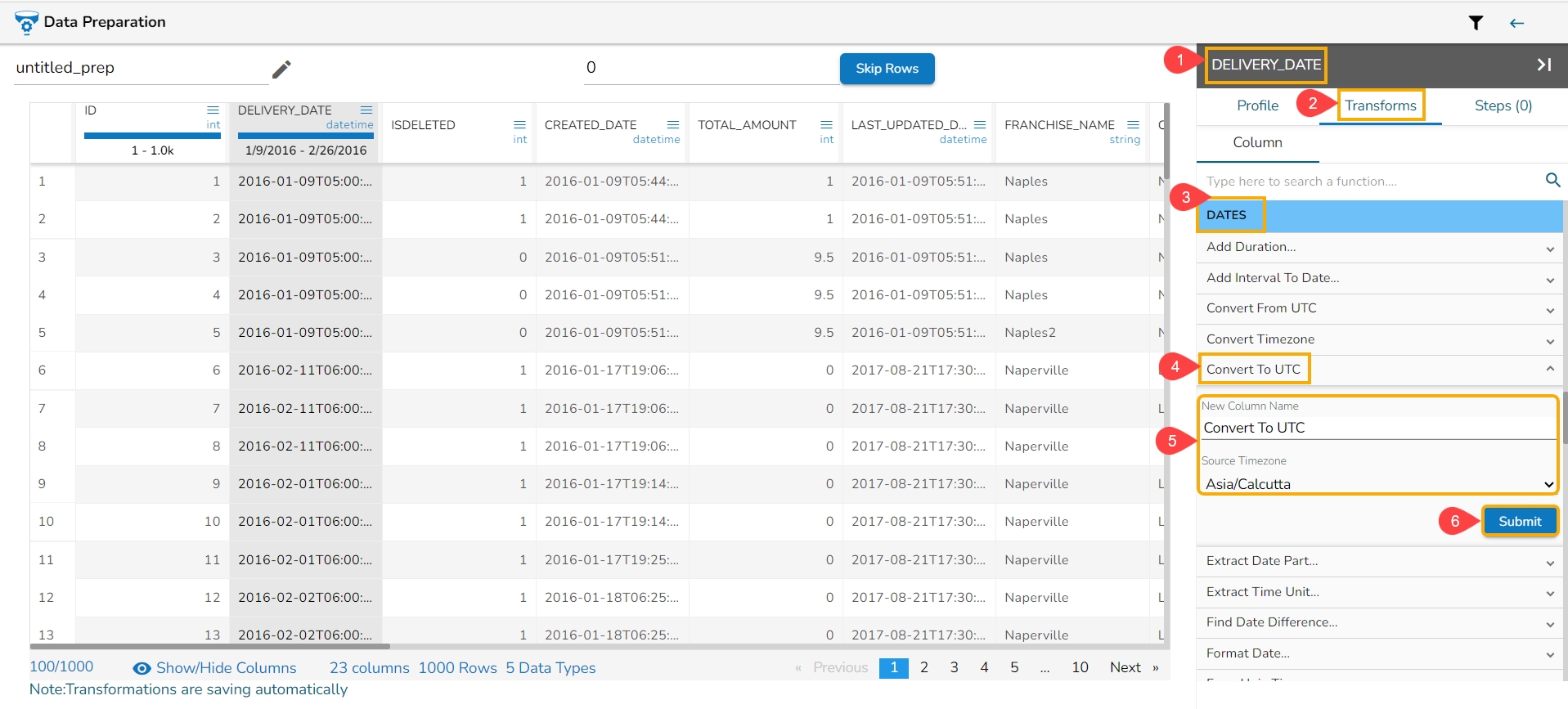

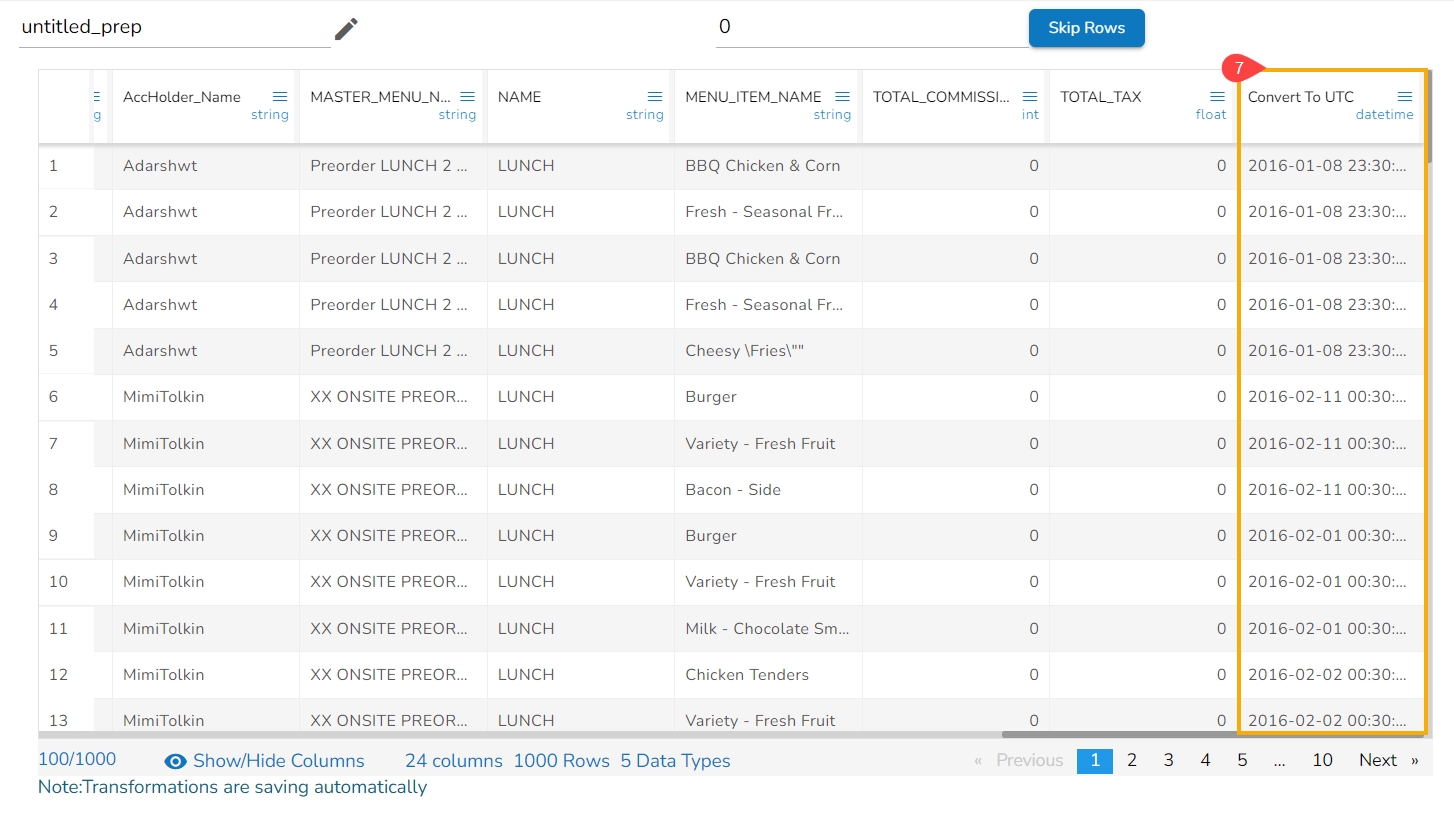

The Convert To UTC transform converts the DateTime value in a specified time zone to the corresponding value in the UTC time zone. Input can be a column of DateTime values.

Check out the given walk-through on how to Convert to UTC.

Please Note: Inputs with time zone offsets are invalid.

Steps to perform the transformation:

Select a DateTime column from the Data Grid.

Open the Transforms tab.

Navigate to the Dates transforms category.

Select the Convert To UTC transform.

Pass new column name.

Pass Source Time zone from which the date is to be converted to UTC.

Click the Submit option.

Result will come in a new column containing the converted UTC format.

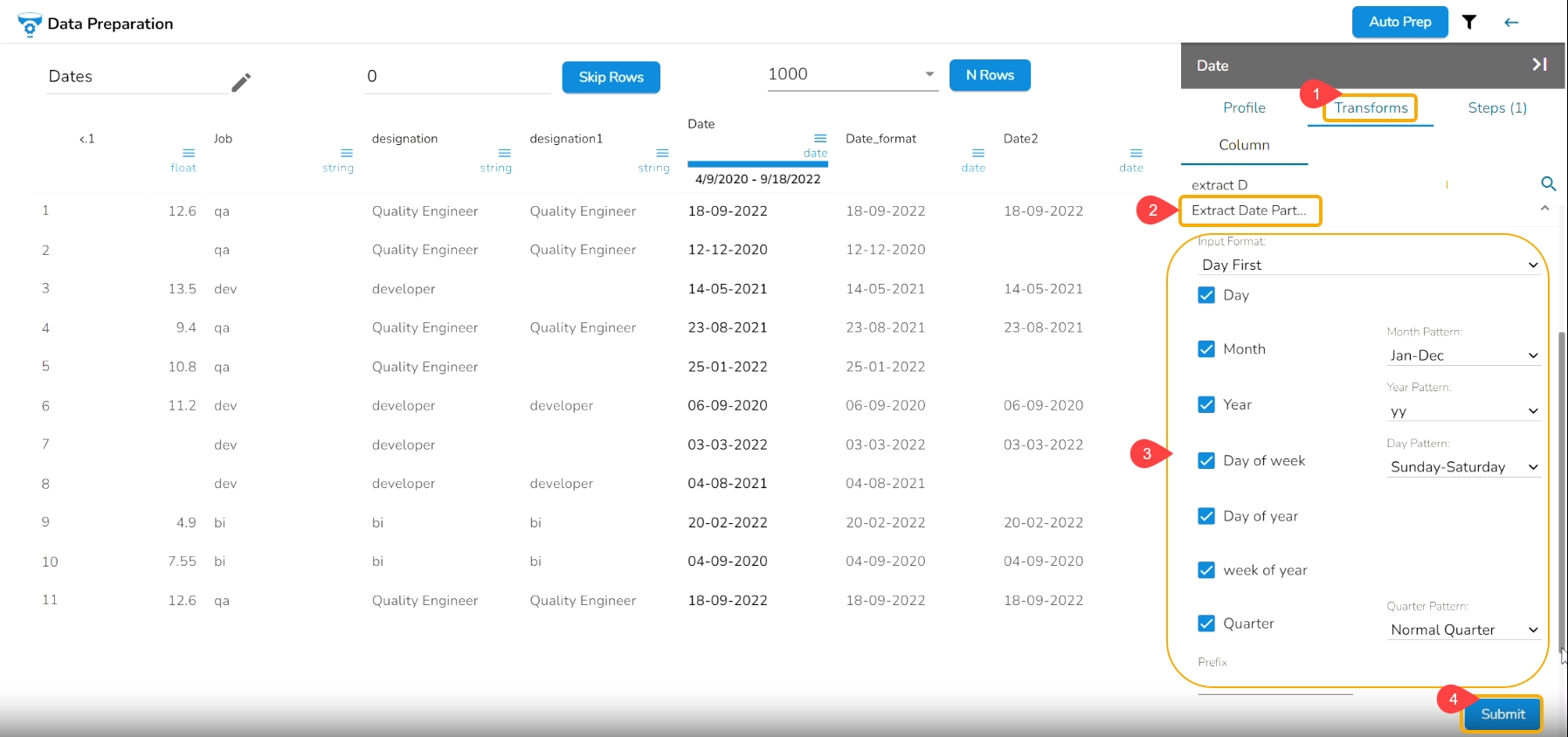

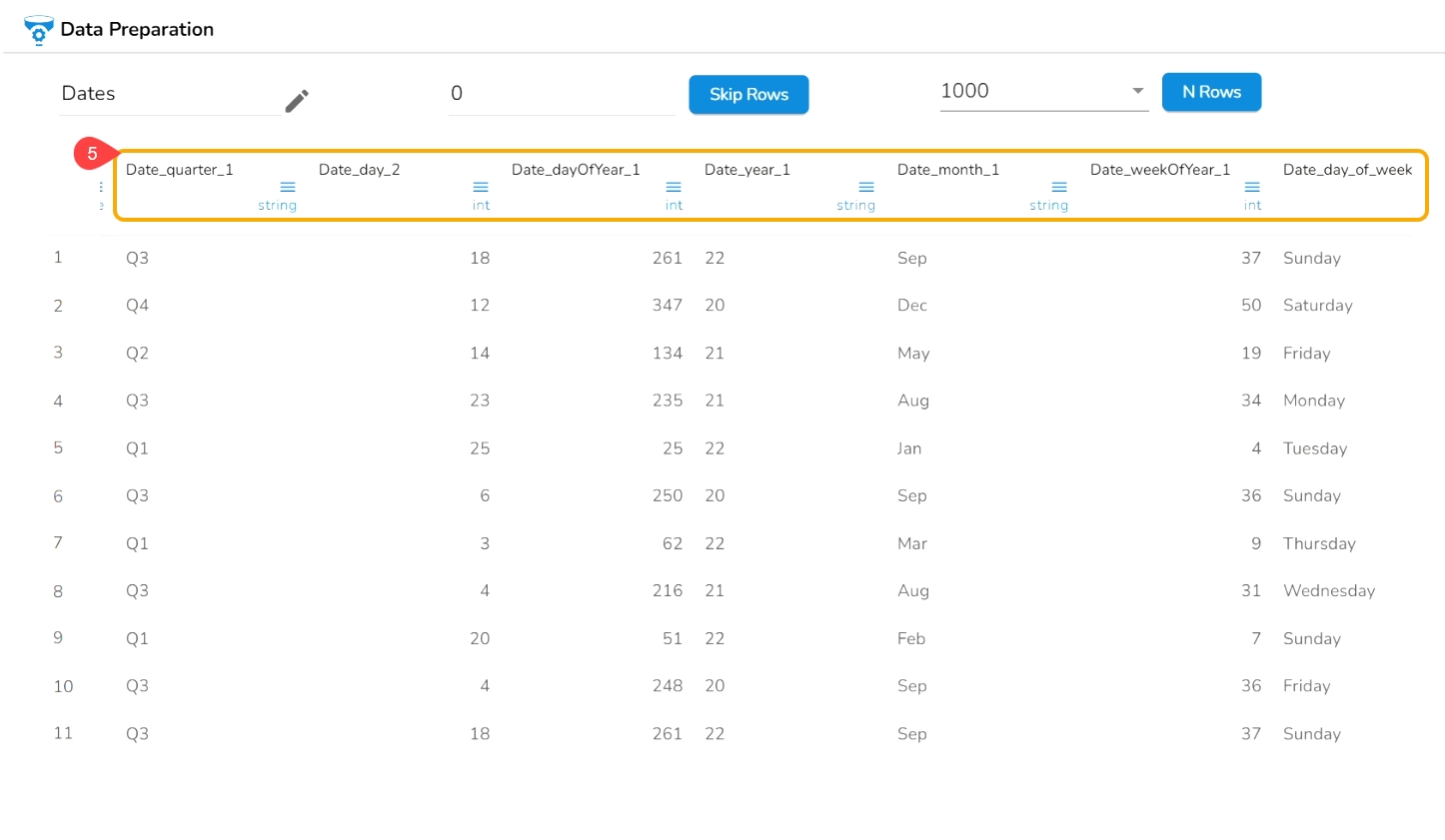

It extracts the date part from a selected column with a date value. The date parts that can be extracted include day, month, year, the day of the week, the day of the year and the week of the year.

Check out the given illustration on how to use the Extract Date Part transform.

Select a Datetime column from the Data Grid.

Open the Transforms tab.

Select the Extract Date Part transform from the Dates transforms category.

Select an Input Format based on the selected column.

Day: It extracts day from a date

Month: It extracts the month from a date/datetime. We can specify the pattern in which the month value has to be returned. Month pattern can be 0-12, Jan - Dec or January - December

Year: It extracts the year from a date. We can specify the pattern in which the year has to be returned. The year pattern can be in the ‘yy’ or ‘yyyy’ format.

Day of Week: It returns the day of the week for the selected date. Day of week pattern can also be specified. The pattern can be 1-7, Sun-Sat or Sunday-Saturday

Day of Year: It returns a number between 1 and 365, which indicates the sequential day number starting with day one on January 1st.

Week of Year: It replaces a number between 1 and 53, which indicates the sequential week number beginning with 1 for the week January 1st falls.

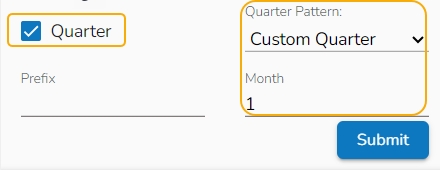

Quarter: It displays the date value based on the quarter, select a quarter pattern using the drop-down option. The supported options are Normal Quarter, Financial Quarter, and Custom Quarter (the user can define the month value for the custom quarter option).

Click the Submit option after selecting all the Date Parts that you wish to extract from the targeted column.

E.g., This transform is applied on the Date column by selecting the Day, Month (Jan-Dec format), Year (in yy pattern), Day of Week (Sunday- Saturday), Day of year, Quarter as a part to be extracted from the selected column values.

As a result, it creates a new columns displaying all the selected transform values.

Please Note: The transform supports Date and DateTimes format (date hh:mm:ss).

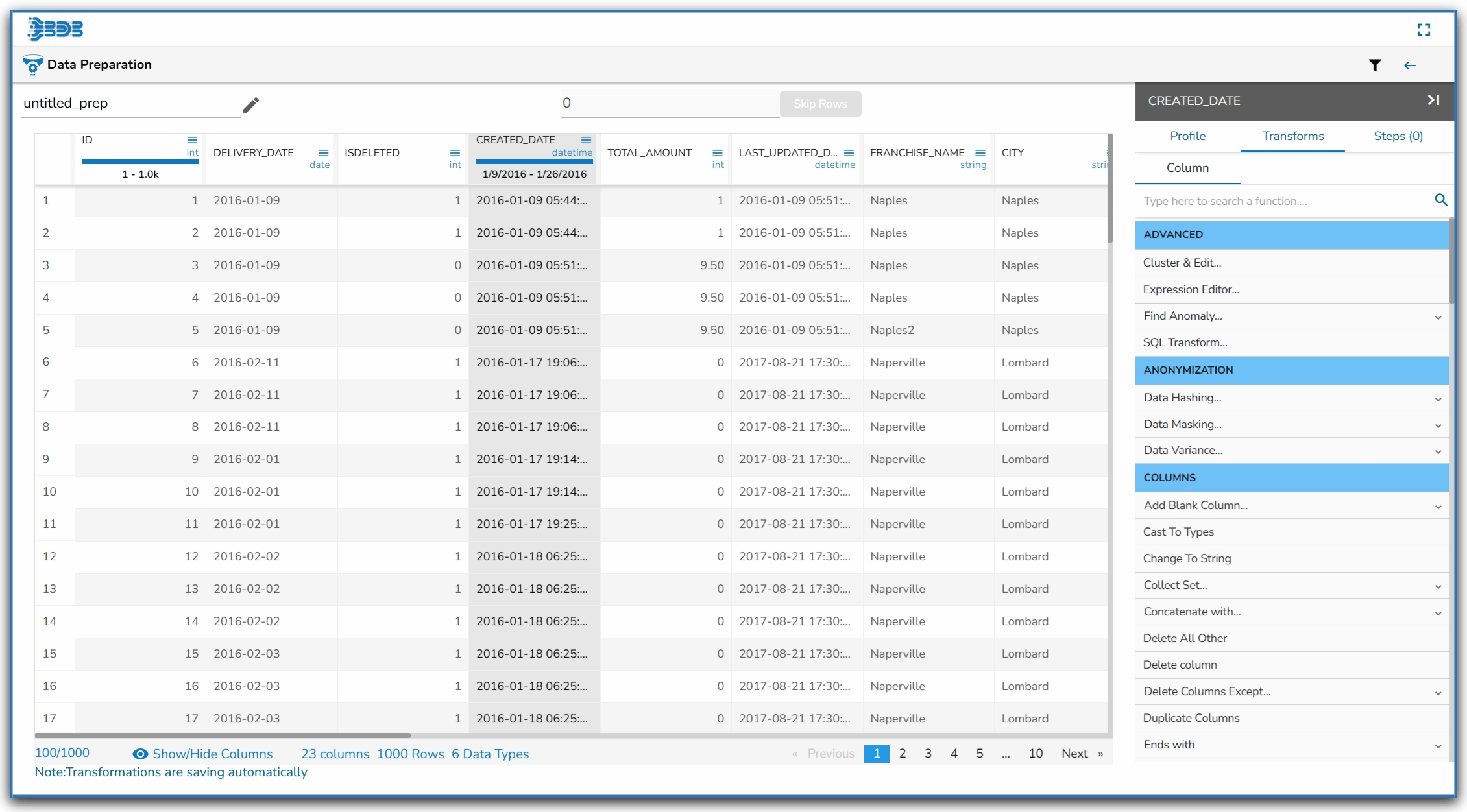

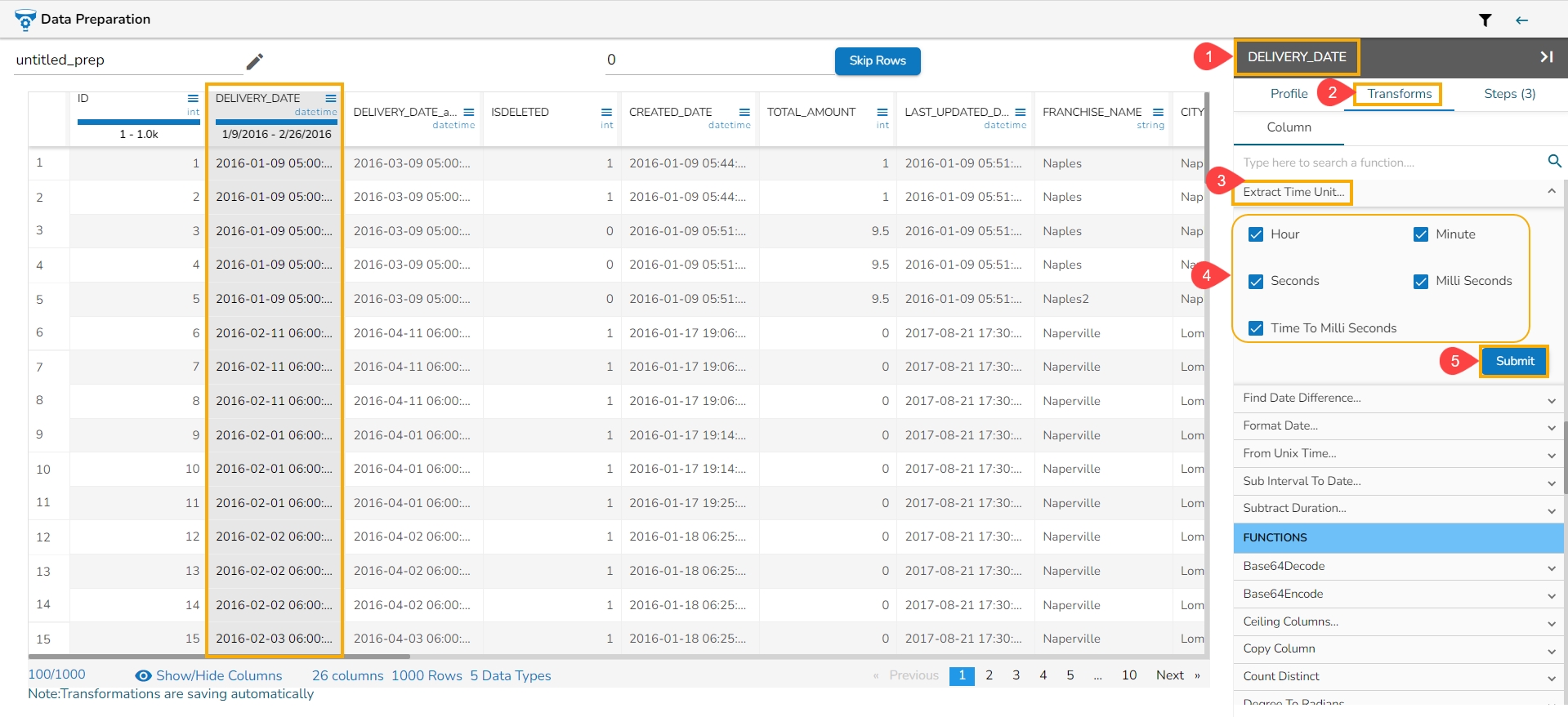

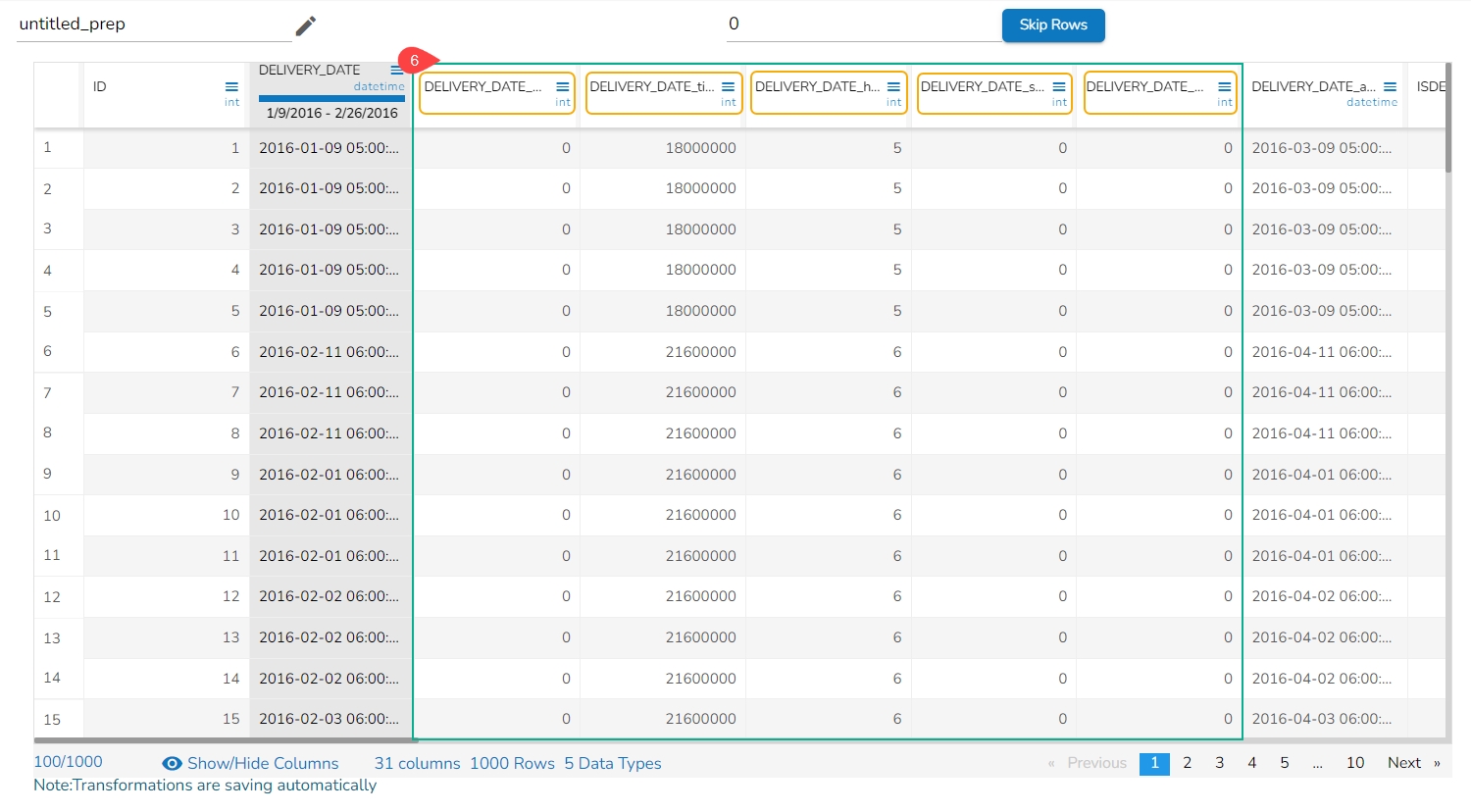

Extract the time units from a selected column with a time value. The time units that get extracted include hours, minutes, seconds, milliseconds, and time to milliseconds.

Select a Datetime column from the Data Grid.

Open the Transforms tab.

Select the Extract Time Unit transform from the Dates transforms category.

Hours: Extracts hours from a time

Minutes: Extracts minutes from a time

Seconds: Extracts seconds from a time

Milliseconds: Extracts milliseconds from a time

Time to Milliseconds: Converts the time given to milliseconds

Click the Submit option.

The Extract Time Unit transform is applied to the DELIVERY_DATE column selecting all the available format types:

As a result, the time gets extracted in the set time units and no. of columns get added based on the selected time unit options. E.g., In this case, 5 new columns get added to the Data Grid displaying the extracted time values.

Please Note: The transform supports time format like- hh:mm:ss:mmm, hh:mm:ss, hh:mm

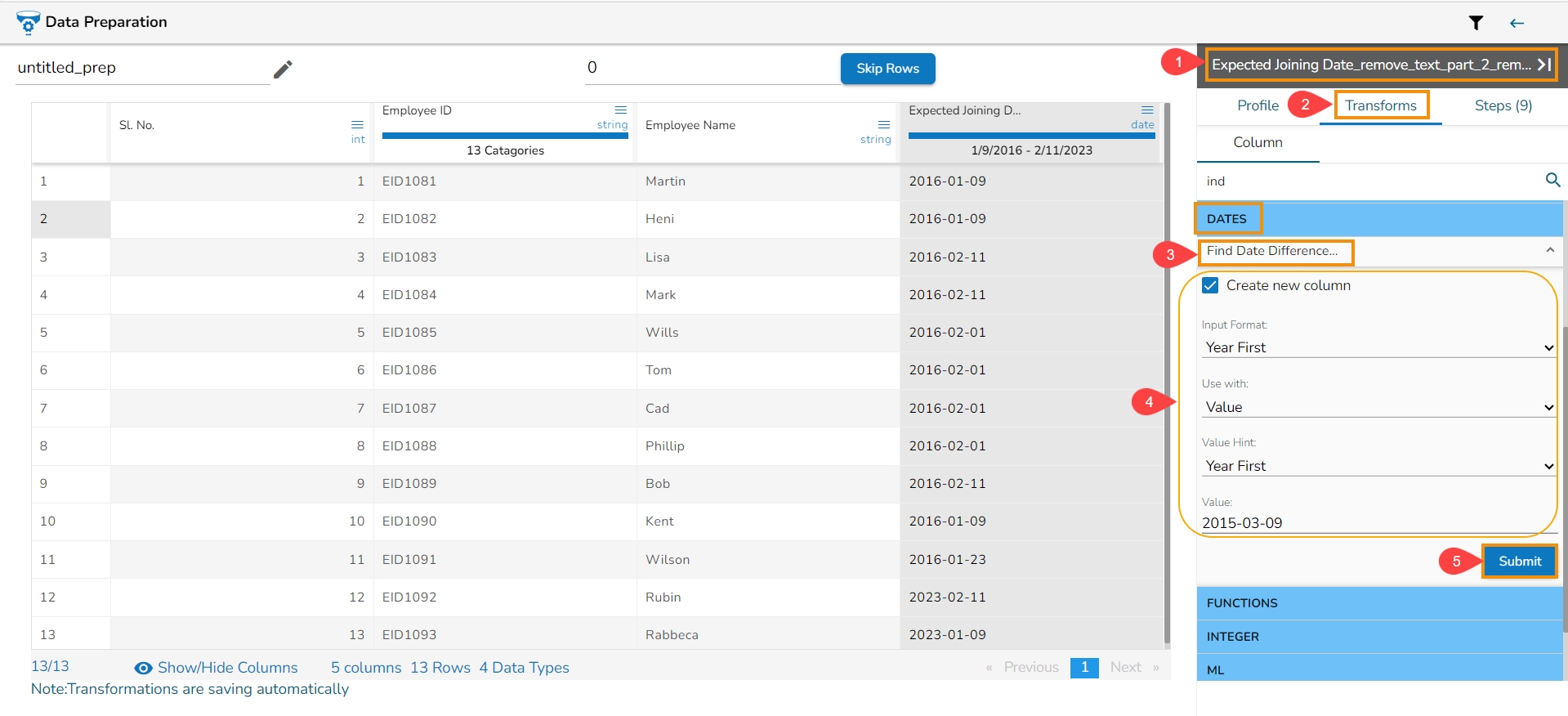

The transform finds the difference between two date values. It can either subtract the selected column with a date value or date from another column. The transformed value can replace the existing column value or can be added as a new column.

Select a Date column from the Data Grid.

Open the Transforms tab.

Select the Find Date Difference transform from the Dates transforms category.

Enable the Create new column option to create a new column with the transformed data.

Input Format: Specifies the format of the given date column.

Use with: Specify whether to fill with a value or another column value.

Value Hint: Specify format of value from which you want to find the difference.

Value: Pass the date value from where you want to find the date difference.

Click the Submit option.

In this case, the Find Date Duration transform has been used with the value 2015-03-09.

As a result, a new column gets created with the set Date Duration value.

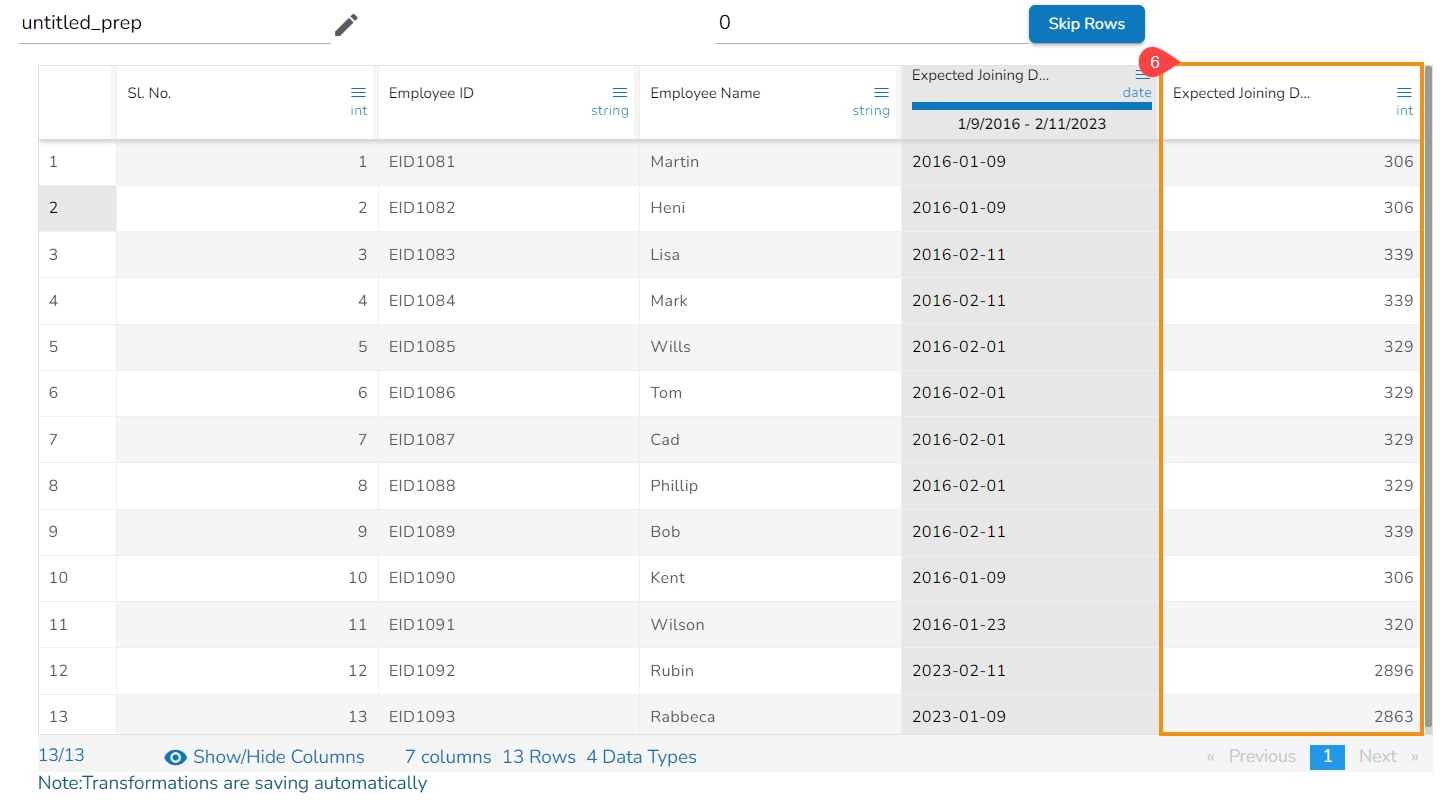

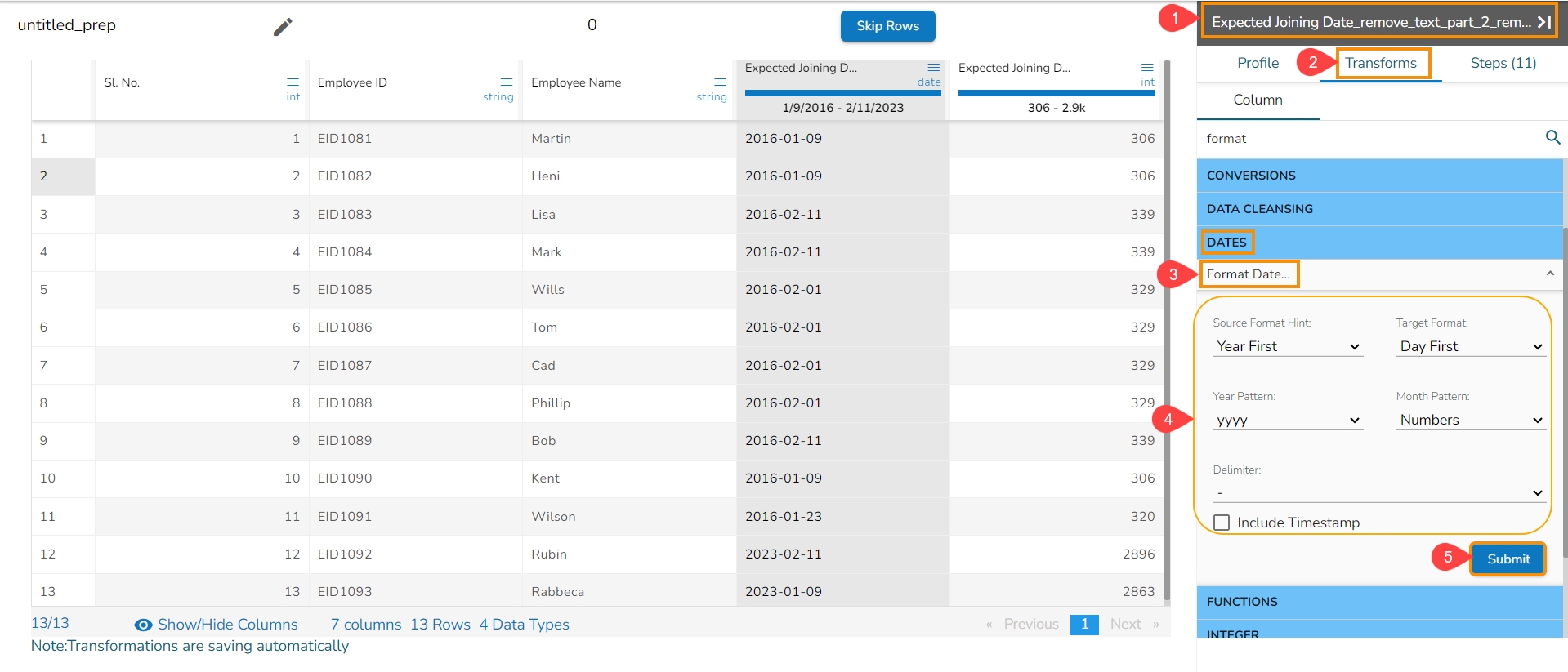

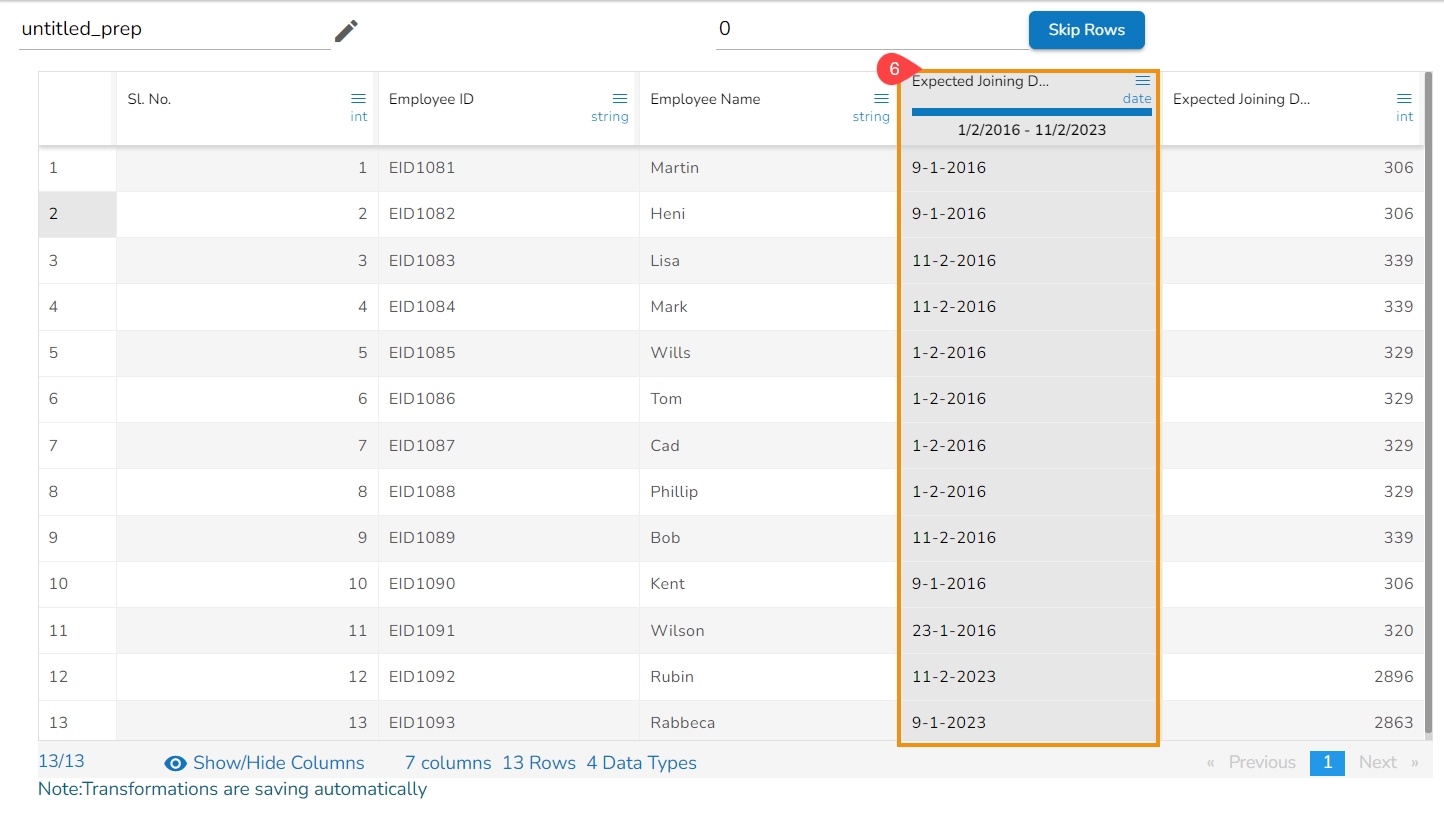

The users can change the format of a date column by using this transform.

Select a Date column from the Data Grid.

Open the Transforms tab.

Select the Format Date transform from the Dates transforms category.

Source Format Hint: Specifies the current format of the date column.

Target Format: Specifies what we want first (Year, Month, Day) in our output format of the date column.

Year Pattern: Specifies the format of the year (yyyy or yy) in the output date column.

Month Pattern: It specifies the format of the month (number, Jan-Dec, January-December) in the output date column.

Delimiter: Specifies Delimiter (like- slash, a hyphen, comma, full stop, space) for the output date column.

Include Timestamp: It adds a timestamp to the current date format if enabled with a tick mark.

Click the Submit option.

As a result, it displays the values of the selected column as per the set transform format of Date:

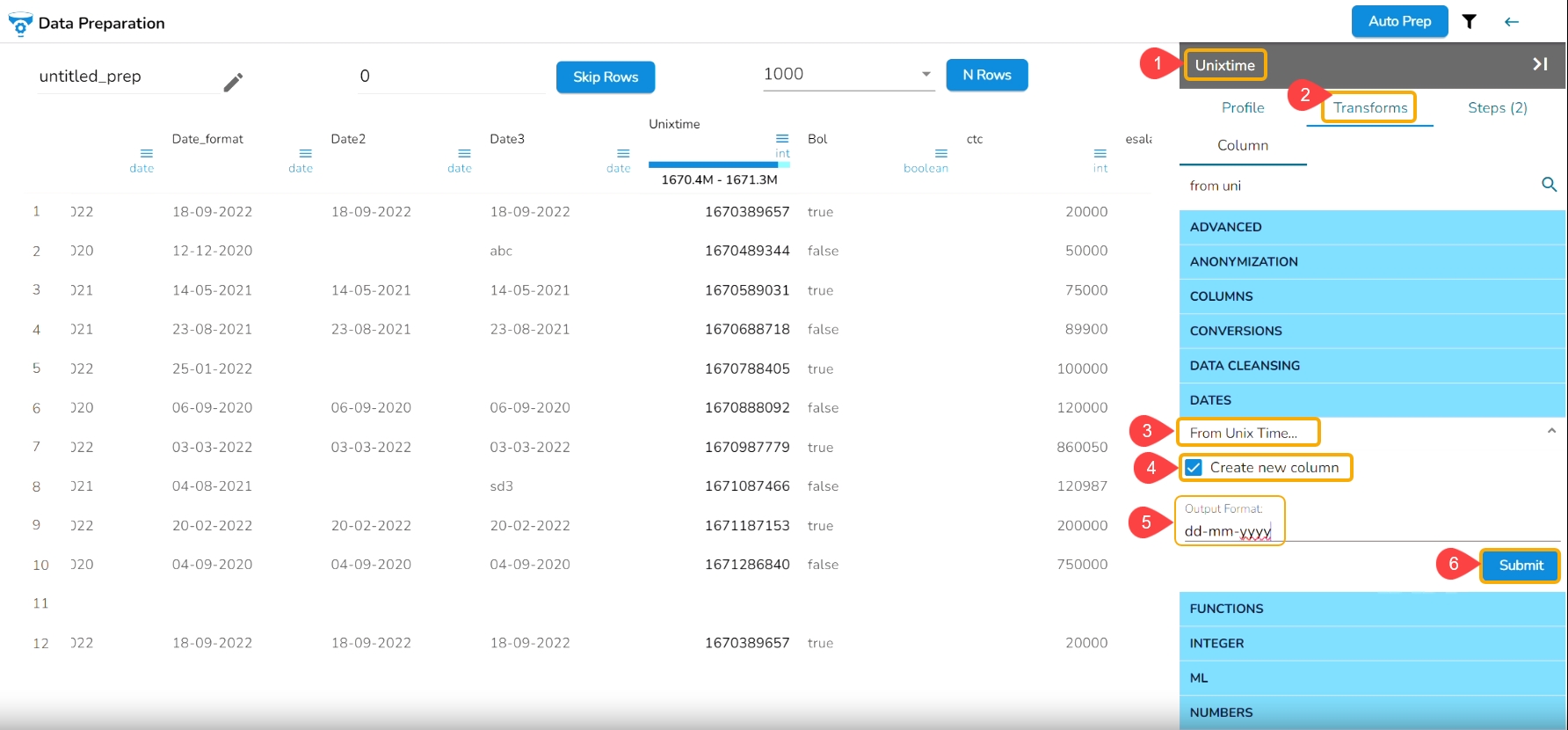

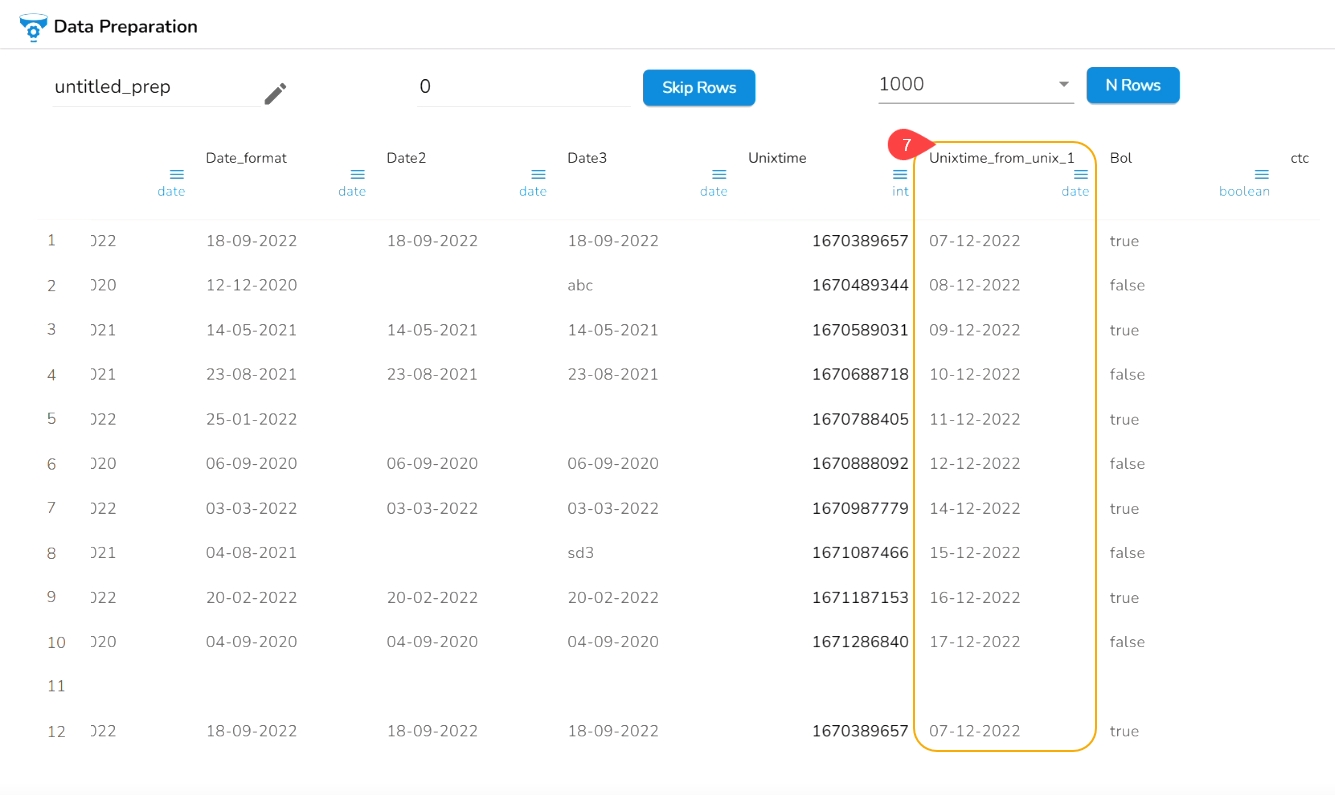

The From Unix Time transform converts the Unix time into a specified format. The From Unix Time transform has been applied on the Date column that contains the values in the Unix format.

Check out the given illustration on how to user From Unix Time transform.

Select a Date column from the Data Grid that contains data in the Unix time.

Open the Transforms tab.

Select the From Unix Time transform from the Dates transforms category.

Enable the Create new column option to create a new column with the transformed data values.

Provide the Output Format in which you want to get the result data.

Click the Submit option.

As a result, a new column gets added to the Data Grid in the set Date format with the converted Unix values:

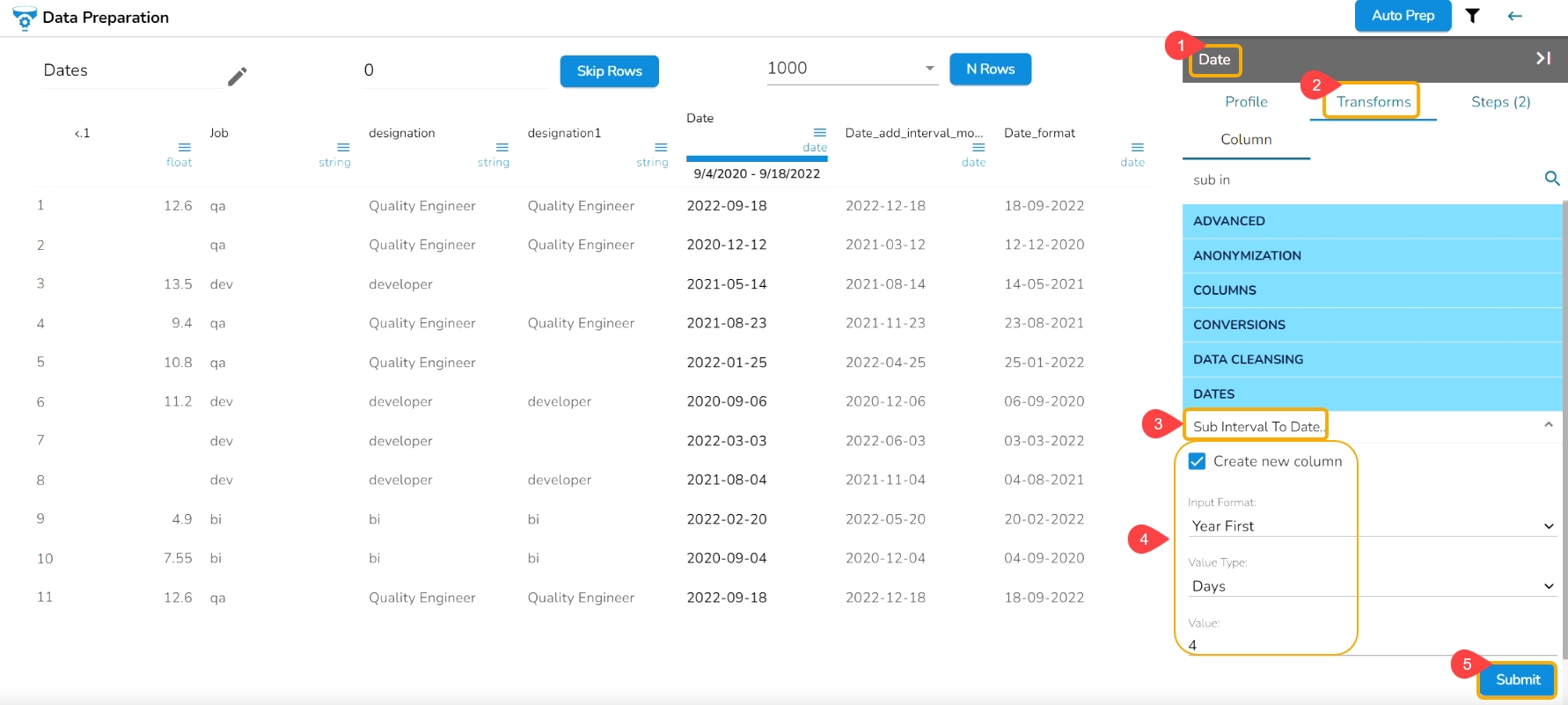

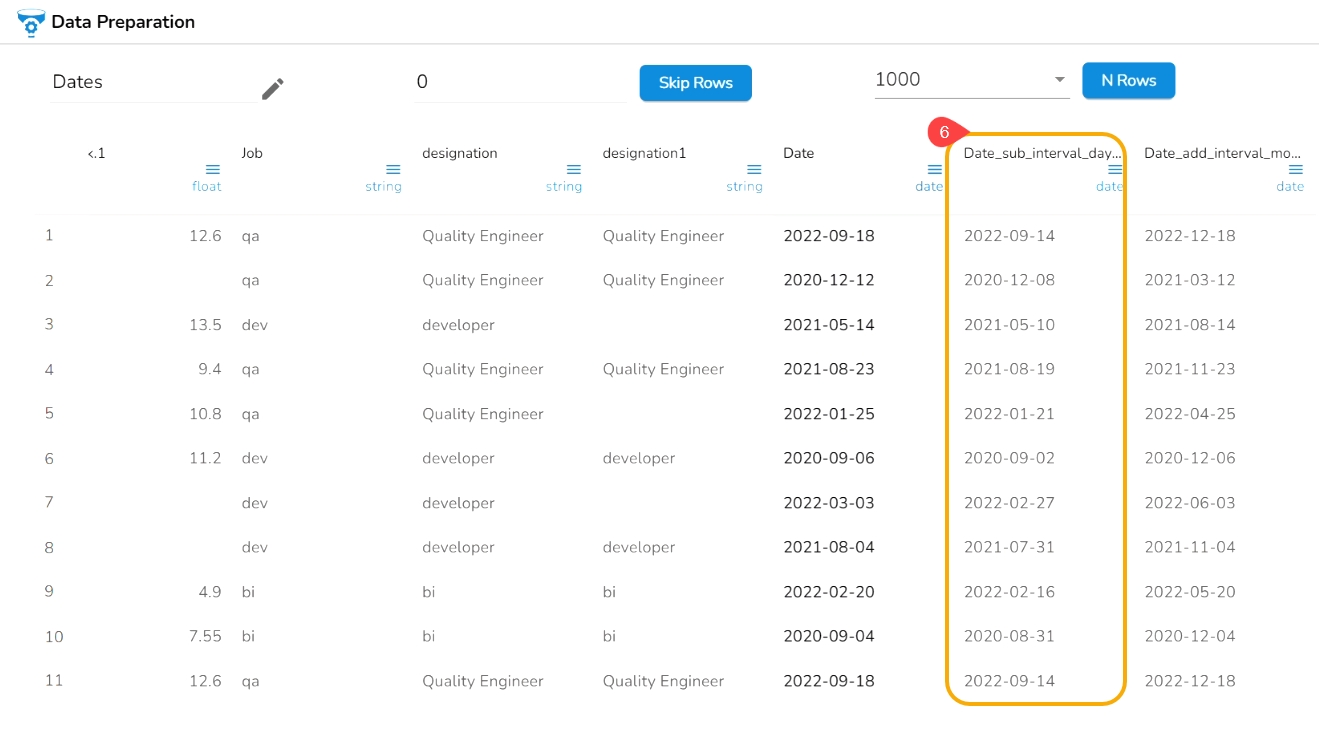

The Sub Interval to Date transform subtracts specified value(interval) from the given date column. The transformed value can replace the existing column value or can be added as a new column.

Check out the given illustration on how to use Sub Interval to Date.

Select a Date column from the Data Grid.

Open the Transforms tab.

Select the Sub Interval to Date transform from the Dates transforms category.

Provide the following information to apply the transform:

Enable the Crate new column option to create a new column with the transformed values.

Input Format: Format of date column(given) should be specified here.

Value Type: It specifies what we want to subtract like years, months, days, weeks, etc.

Value: It specifies how many years/months/days (value type) we want to subtract.

Click the Submit option.

E.g., The Sub Interval to Date transform is applied on the Date column with the 4 days as value to get Sub Interval to the given date.

As a result, a new column gets created with the set sub interval values:

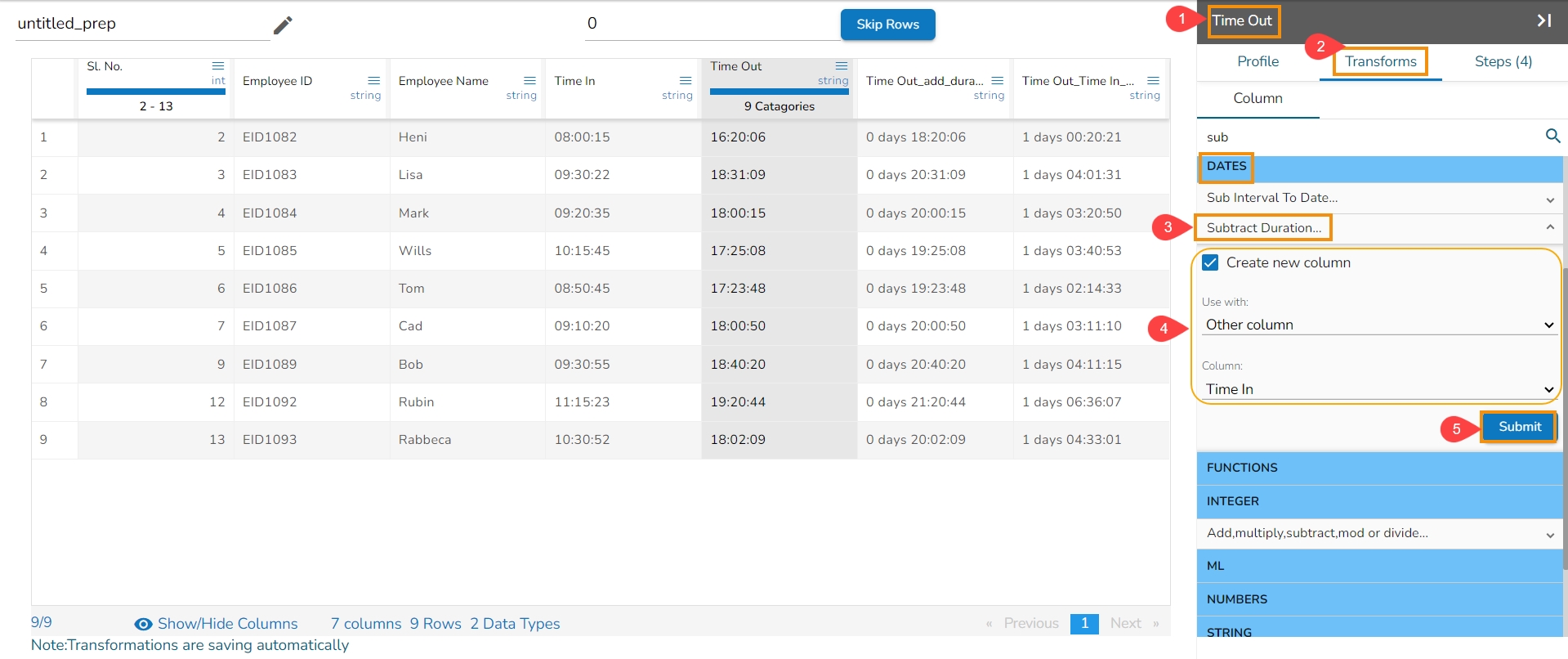

The transform ‘Subtract Duration’ deducts the time values in two ways. It can either subtract the selected column with a time value or time from another column. The transform supports subtracting time into ‘hh:mm:ss.mmm’,‘ hh:mm:ss’, and 'hh:mm’ formats. The transformed value can replace the existing column value or can be added as a new column.

Select a column with the time values from the dataset.

Navigate to the Transforms tab.

Select the Subtract Duration transform from the Dates category.

Enable the Create new column option, if you wish to create a new column with the result data.

Use with: Specify whether to fill with a value or another column value

Column/ Value: The value with which the column must be subtracted, or the column with which the selected column value must be subtracted.

Click the Submit option.

The result will get displayed based on your Use with selection as described below:

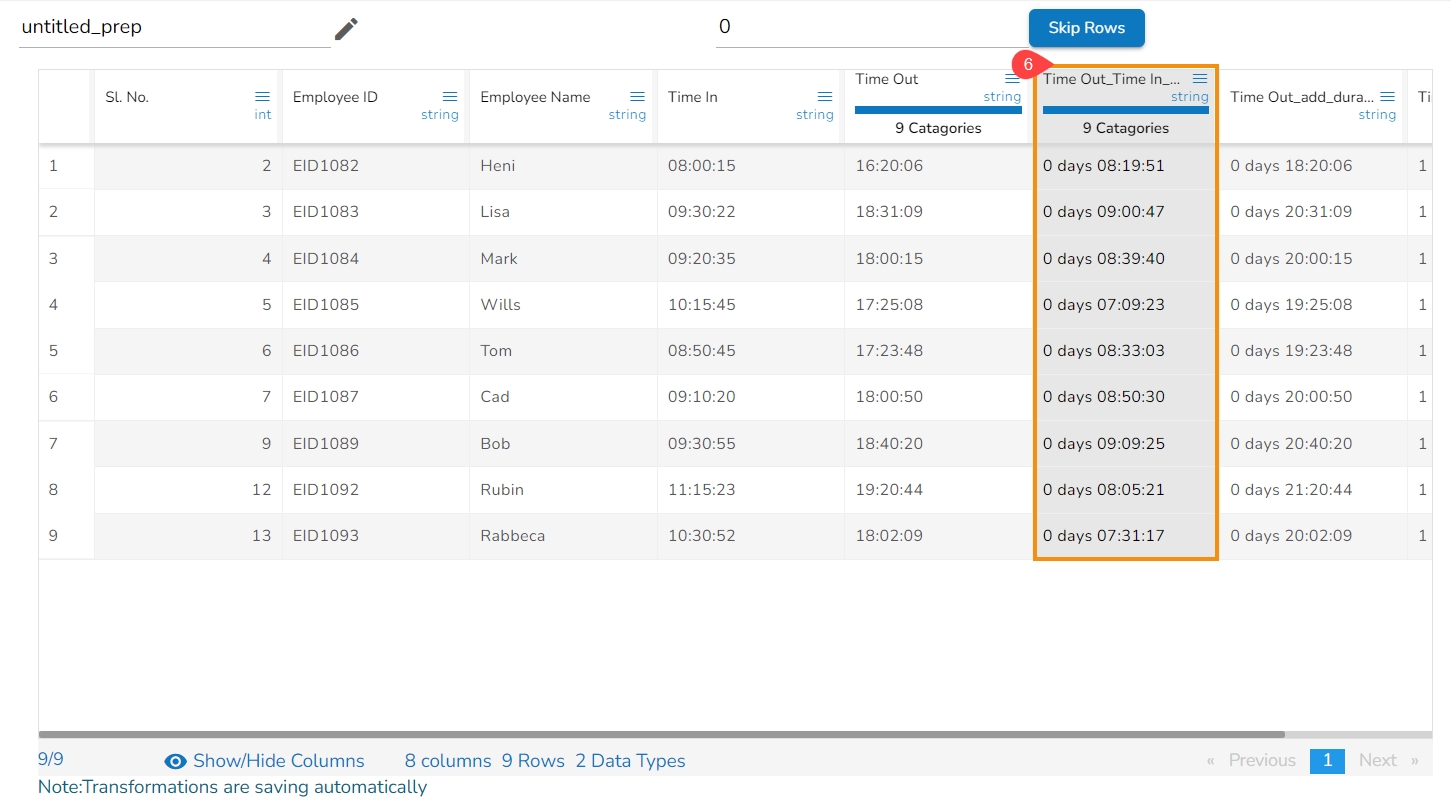

The Subtract Duration transform has been applied to the Time Out column, the selected other column is Time In,

As a result, a new column gets added to the Data Grid with the subtracted duration out of the selected columns:

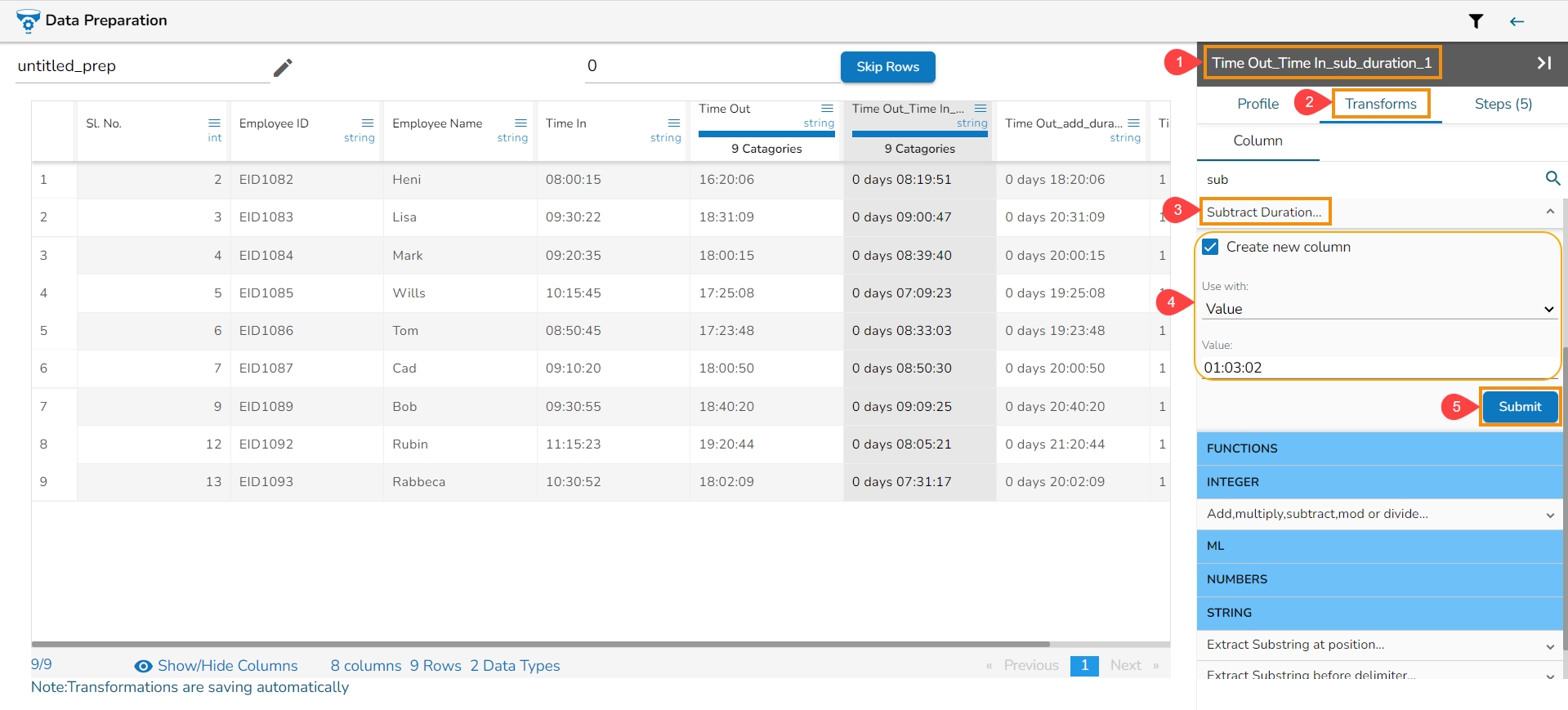

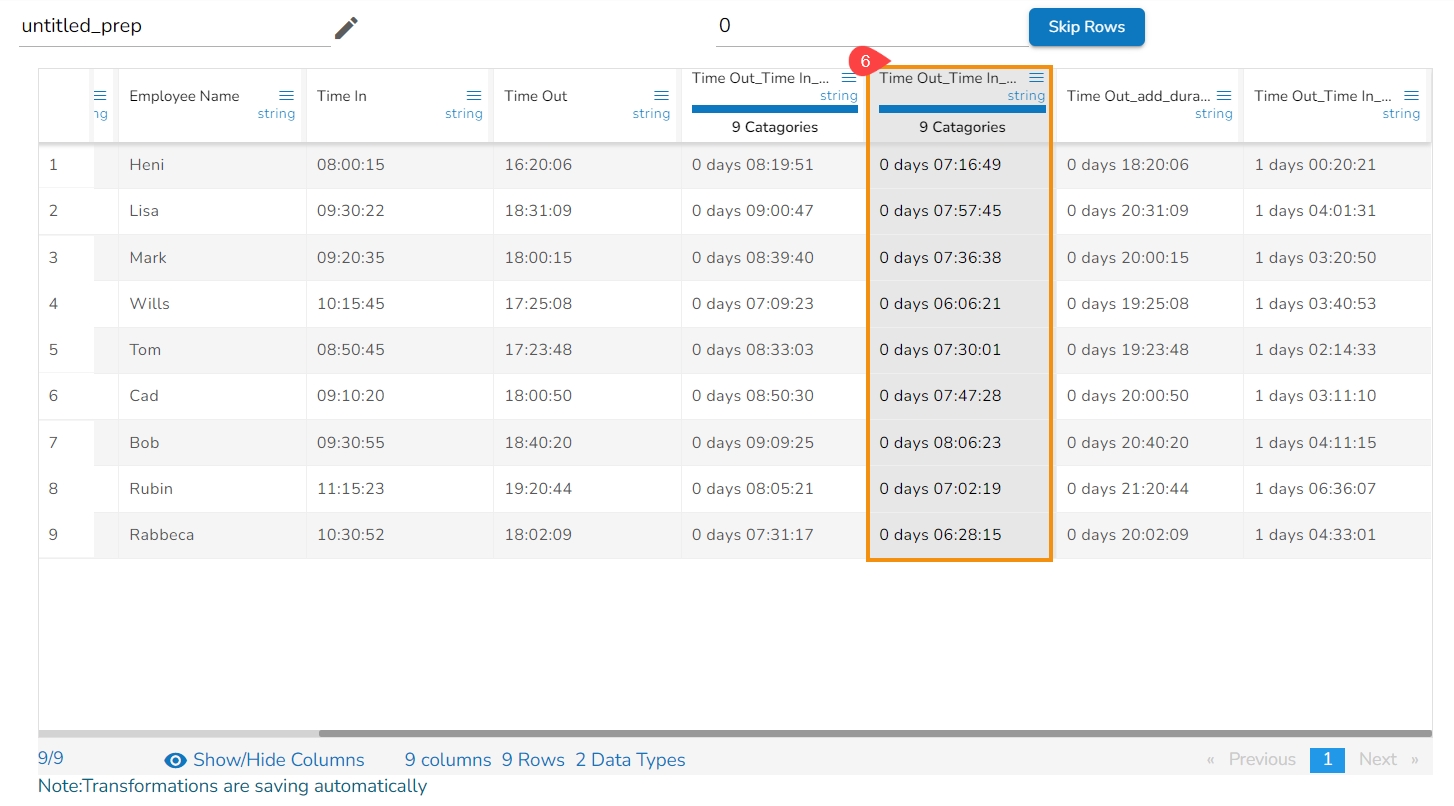

The Subtract Duration transform has been applied to the Time Out_Time In_sub_duration_1, the selected value is 01:03:02,

As a result, a new column gets added to the Data Grid with the remaining values after subtracting the set values from the targeted column:

Anonymization is a type of information sanitization whose intent is privacy protection. It is a data processing technique that removes or modifies personally identifiable information.

The below-mentioned transforms are available under the Dates category:

This transformation using the Salt and Pepper technique is a method to protect sensitive data by introducing random noise or fake data points into a dataset while preserving its statistical properties.

Check out the given illustration on the Anonymization transform.

Steps to perform the Anonymization Transform:

Navigate to a dataset within the Data Preparation framework, and select a column.

Select one column that needs to be protected.

Select the Transforms tab.

Select the Anonymization (Hashing Anonymization) transform from the Anonymization category.

Pass the Set Values (pass any random data as numerical or string values)

Select columns in the Set Fields which can be used in the transformation.

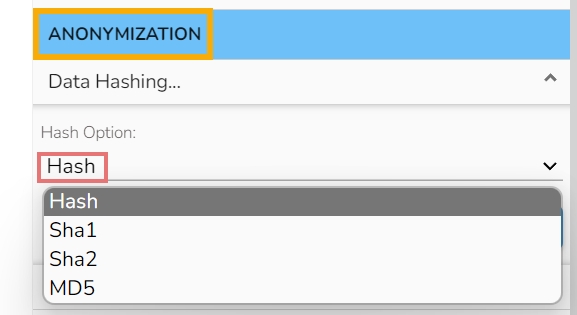

Select a Hash Option using the drop-down menu.

Click the Submit option.

The result will update the selected column by protecting the data in a hashed format.

Please Note:

The first user-provided value (entered in the "Set Values" field) acts as the pepper.

Selected column values will act as the salt.

The hash options displayed in the UI map to the following actual hashing algorithms on the backend:

Sha1 (UI) → SHA-256 (Backend)

Sha2 (UI) → SHA-512 (Backend)

Hash (UI) → MD-5 (Backend)

MD5 (UI) → MD-5 (Backend)

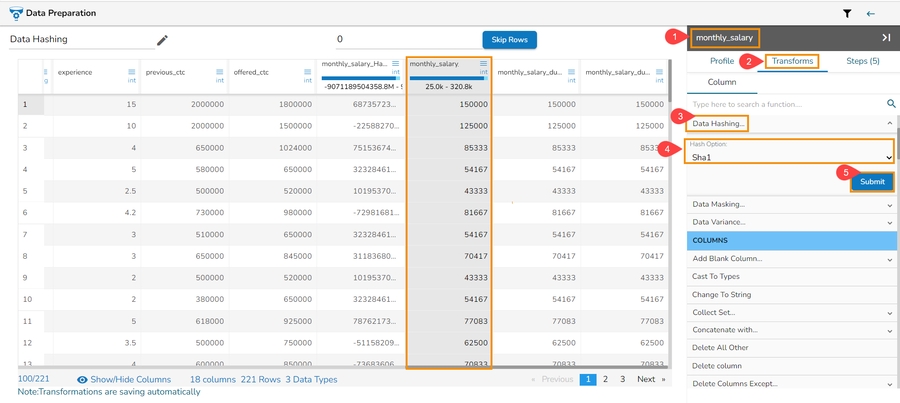

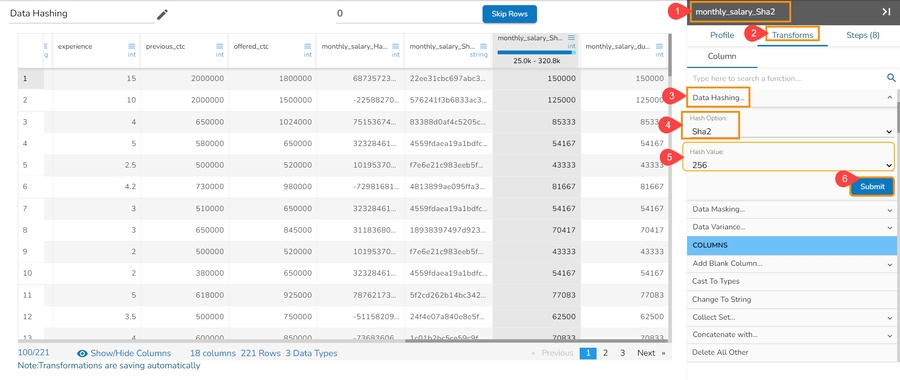

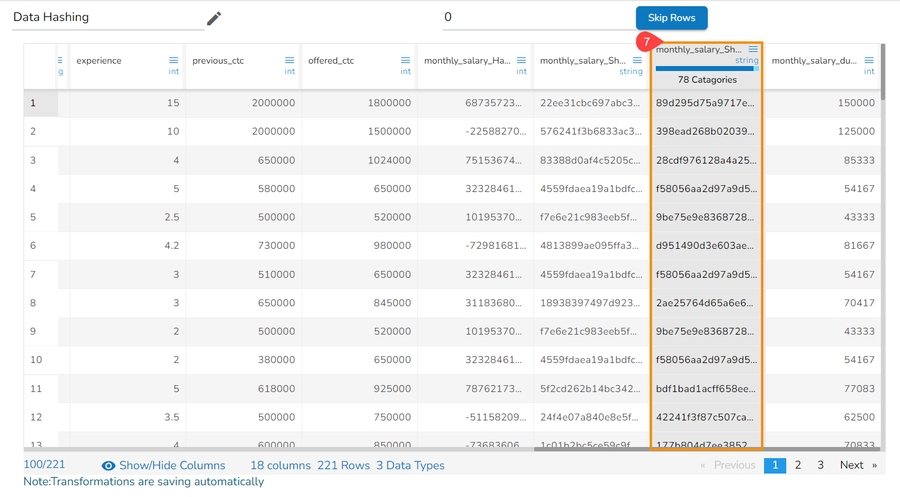

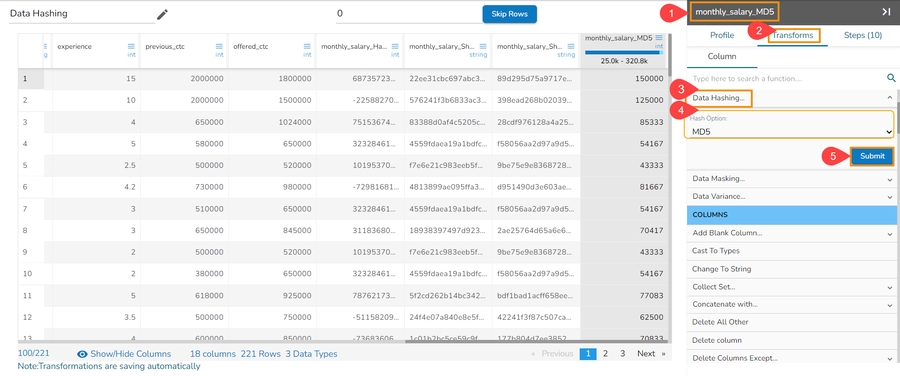

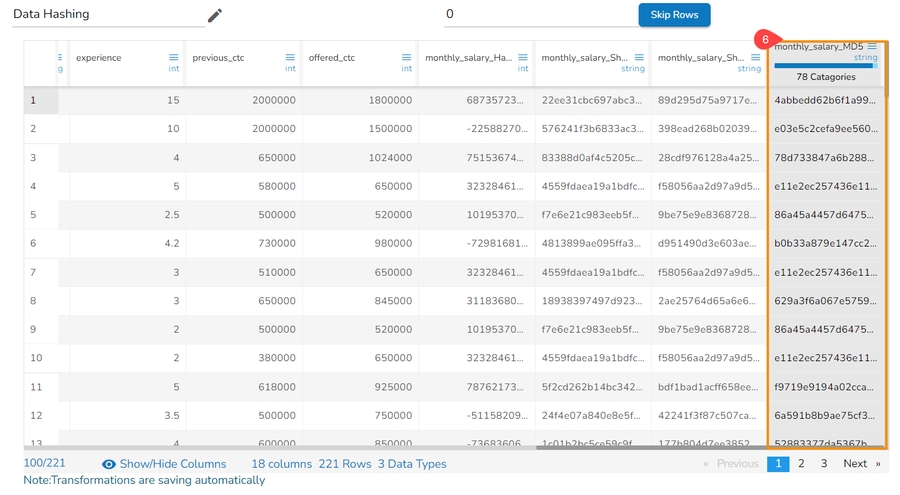

Data Hashing is a technique of using an algorithm to map data of any size to a fixed length. Every hash value is unique.

Data Hashing is a data transformation technique used to convert raw data into a fixed-length representation in the form of a hash value. This transformation is often employed as part of the data preprocessing stage before using the data for various purposes such as analysis, machine learning, or storage. The main objective of data hashing as a data transform is to provide a more efficient and secure way to handle and process sensitive or large datasets.

Check out the given illustration on how to use Data Hashing transform.

Please Note:

A suitable hashing algorithm is chosen based on the specific requirements and security considerations as Hash Options. The supported Hash options are Hash, Sha-1, Sha-2 and MD-5.

The hash options displayed in the UI map to the following actual hashing algorithms on the backend:

Sha1 (UI) → SHA-256 (Backend)

Sha2 (UI) → SHA-512 (Backend)

Hash (UI) → MD-5 (Backend)

MD5 (UI) → MD-5 (Backend)

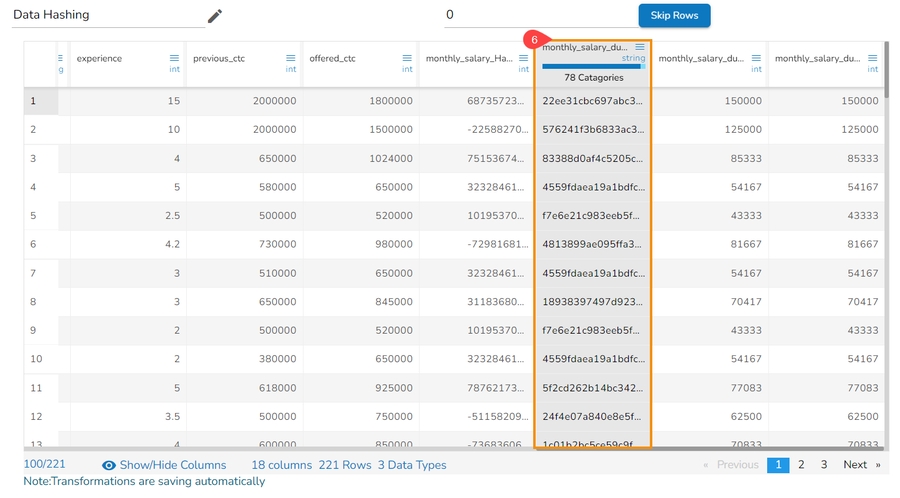

Steps to perform the Data Hashing transform:

Navigate to a dataset within the Data Preparation framework, and select a column.

Open the Transforms tab.

Select the Data Hashing transform from the ANONYMIZATION category.

Select a column from data grid for transformation.

Select the required Hash Option. The supported Data Hashing options are Hash, Sha-1, Sha-2, MD-5.

Click the Submit option.

The selected column gets converted based on the hashing option (In the below-given case, the selected Data Hashing option is Hash).

Data masking transform is the process of hiding original data with modified content. It is a method of creating a structurally similar but inauthentic version of an actual data.

Check out the given walk-through on the Data Masking transform.

Steps to perform the Data Masking Transform:

Select a column within the Data Preparation framework.

Open the Transforms tab.

Select the Data Masking transform from the ANONYMIZATION category.

Provide the Start Index and End Index to mask the selected data.

Click the Submit option.

The below-given image displays how the Data Masking transform (when applied to the selected dataset) converts the selected data:

The Data Variance transform allows the users to apply data variance to Numeric and Date columns.

Check out the given illustration on how to use Data Variance.

Select the Data Variance transform from the Transforms tab.

Select a column from data grid for transformation.

Select the required Value Type-Numeric/Date.

Configure the adequate information based on the Value Type.

Click the Submit option.

The data of the selected column gets modified based on the set value type.

Select a numeric column within the Data Preparation framework.

Open the Transforms tab.

Select the Data Variance transform from the ANONYMIZATION category.

Select Numeric as the Value Type.

Configure the following details:

Select an Operator using the drop-down option.

Set percentage.

Click the Submit option.

The data of the selected column gets transformed based on the set numeric values.

Select a column containing Date values from the given dataset within the Data Preparation framework.

Open the Transforms tab.

Select the Data Variance transform from the ANONYMIZATION category.

Select Date as the Value Type.

Provide the following details:

Start Date

End Date

Click the Submit option.

The selected Date column will display random dates from the selected date range.

Please Note: The Data Variance transform also provides space to add description while configuring the transformation information.

This transform helps to performs arithmetic operation on the selected numerical column.

The Integer data transform performs arithmetic operations on numerical data by applying basic mathematical operations to each data point in a dataset. These operations include addition, subtraction, multiplication, and division. The purpose of these transformations is to modify or manipulate the data in some meaningful way, allowing for easier analysis, visualization, or computation.

Addition (+): This operation involves adding a constant value to each data point in the dataset. It can be useful for tasks such as shifting the data along the axis or adjusting the data to a new reference point.

Subtraction (-): Subtraction subtracts a constant value from each data point. Like addition, it can be used to shift the data, but in the opposite direction.

Multiplication (): Multiplication scales each data point by a constant factor. It can stretch or compress the data along the axis, altering its magnitude.

Division (/): Division divides each data point by a constant value. It can be useful for normalizing the data or expressing it in relative terms.

Modulus(%): Modulus divides the given numerator by the denominator to find a result. In simpler words, it produces a remainder for the integer division.

These arithmetic operations can be performed on individual data points or entire datasets. The operations are straightforward and commonly used in data processing and analysis.

For example,

When dealing with sensor readings, you might add a constant offset to calibrate the measurements.

In financial analysis, you might multiply data by a scaling factor to adjust for inflation or currency conversions.

Check out the given walk-through on how to use the Addition, Subtraction, and Multiplication options under the Integer transform.

Steps to use the Integer Transform:

Select a numerical/ Integer column from the dataset.

Open the Transforms tab.

Select the Add, multiply, subtract, mod, or divide transform provided under the Integer category.

Operator: There are five arithmetic operations to choose ( +, -, / , *, %). Select any one operator.

Use with: The operation can be performed between two columns and to a column based on a value.

Operand/Column: The arithmetic operation needs an operand if it is to be used with a value.

The arithmetic operation needs another column if the selected Use With option is Column.

The first operand is one on which the operation is being performed. The second operand can either be a value or other numerical column based on the choice of use with an option.

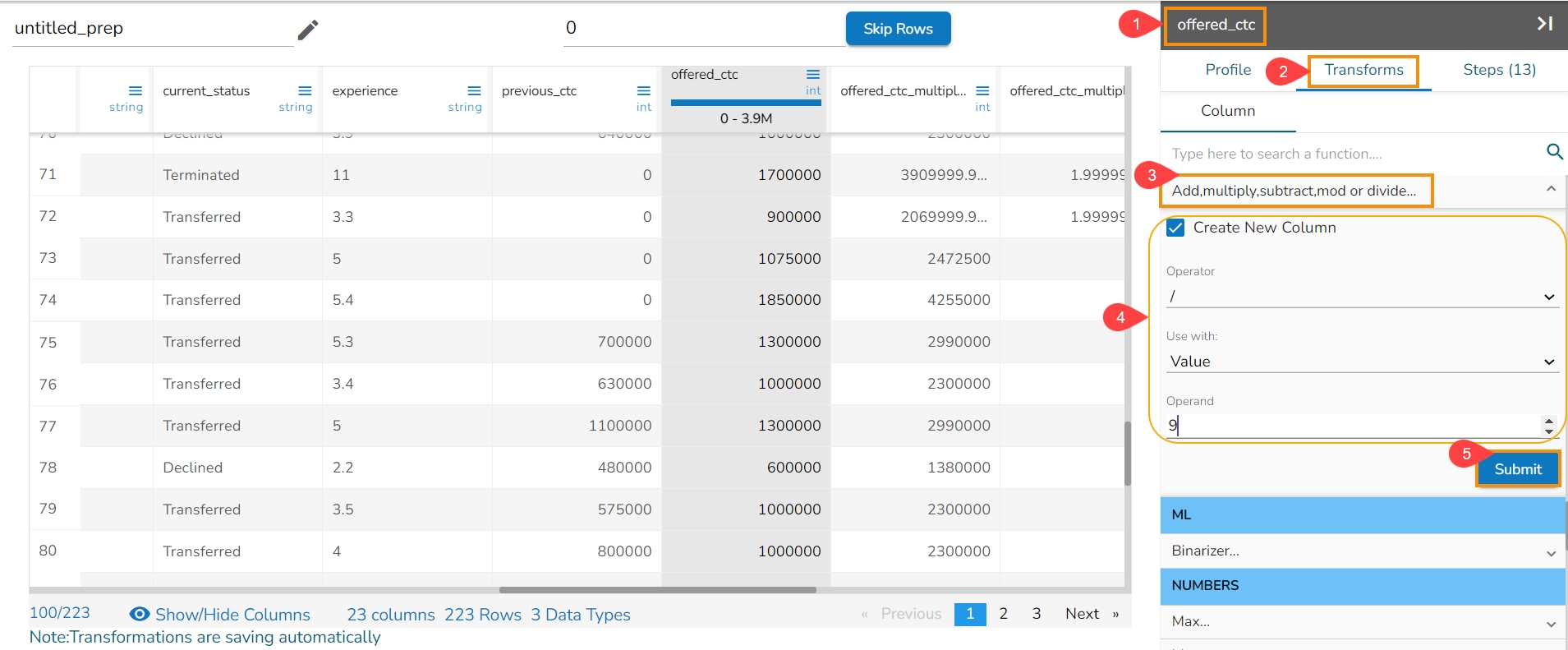

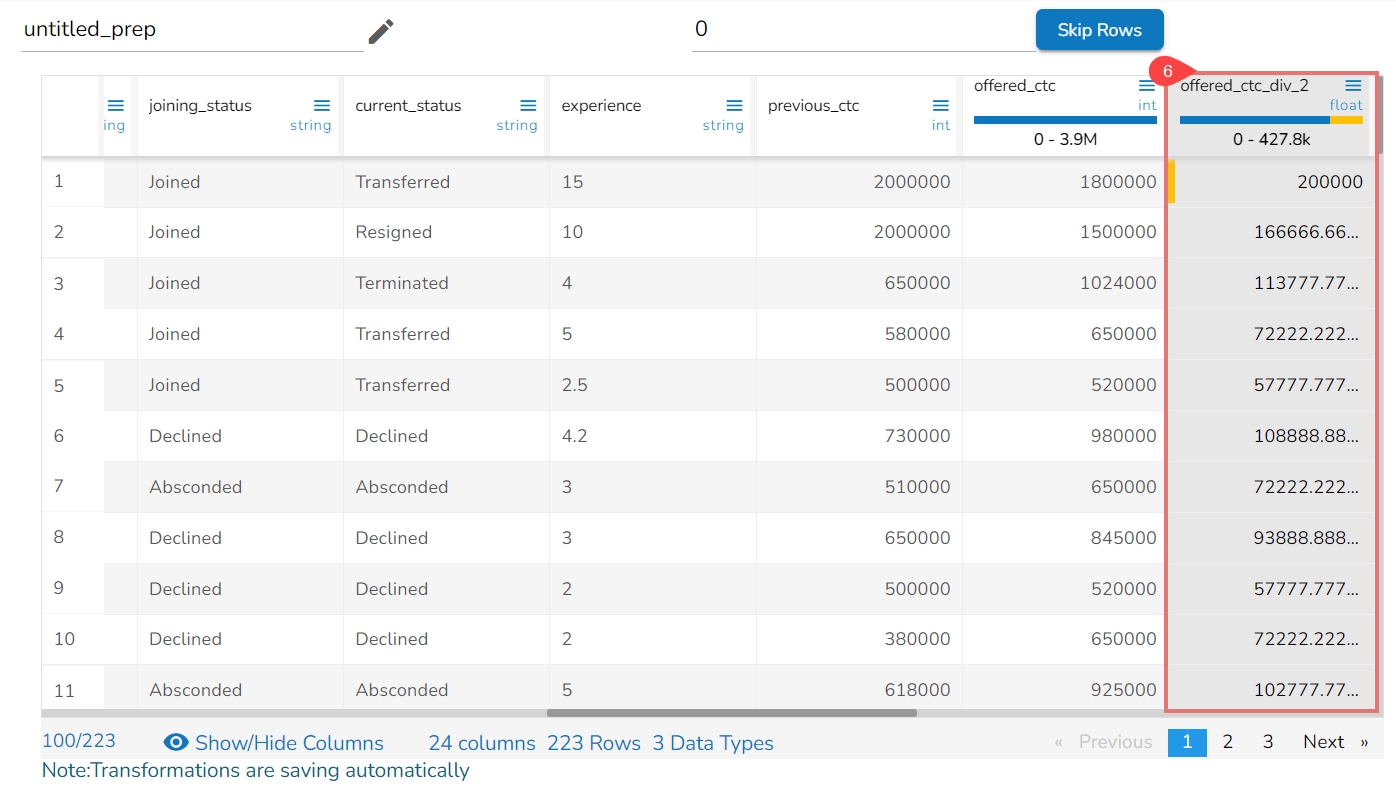

The Integer transform has been applied on the Sales column by choosing division with value where operand is 9.

As a result, a new column gets added with the divided values of the sales.

This transformation also returns the modulo value, which is the remainder of dividing the first argument by the second argument. Equivalent to the Modulus (%) operator.

Steps to perform the transformation with the Modulus (%) operator.

Select the required column in which MOD should calculate.

Select Create New column (optional).

Set the Operator as MOD (%).

Use with Value or Other Column.

Pass the value or select the other column.

Result will come in a new column in which MOD returns the remainder of the value.

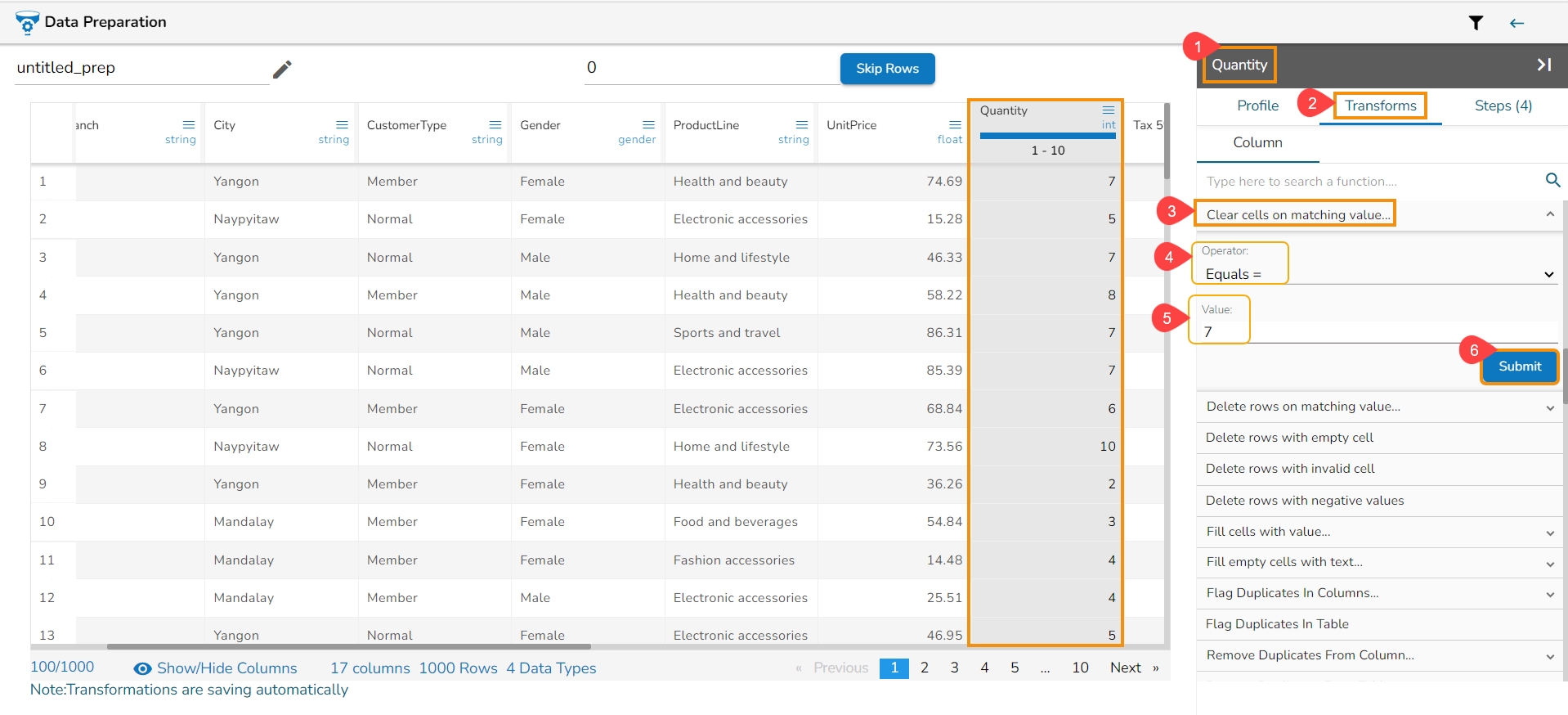

Clear the cell value on matching the condition specified. Operators include contains, equals, starts with, end with, and regex match. Transform applies in the same column.

The Clear Cells on Matching Value data transform is a process used to remove or delete the contents of specific cells in a dataset or spreadsheet based on a given condition or matching value. This transformation is commonly employed to clean or manipulate data by selectively clearing cells that meet certain criteria.

Here's an overview of how the Clear Cells on Matching Value data transform works:

Select a column.

Navigate to the Transforms tab.

Select the Clear Cells on Matching Value transform from the Data Cleansing category.

Operator: Select the operator required for matching from the list.

Value: The value or pattern to be searched for in the selected column.

Click the Submit option.

Please Note: The supported Operators for this Transform are: equals, starts with, end with, and regex.

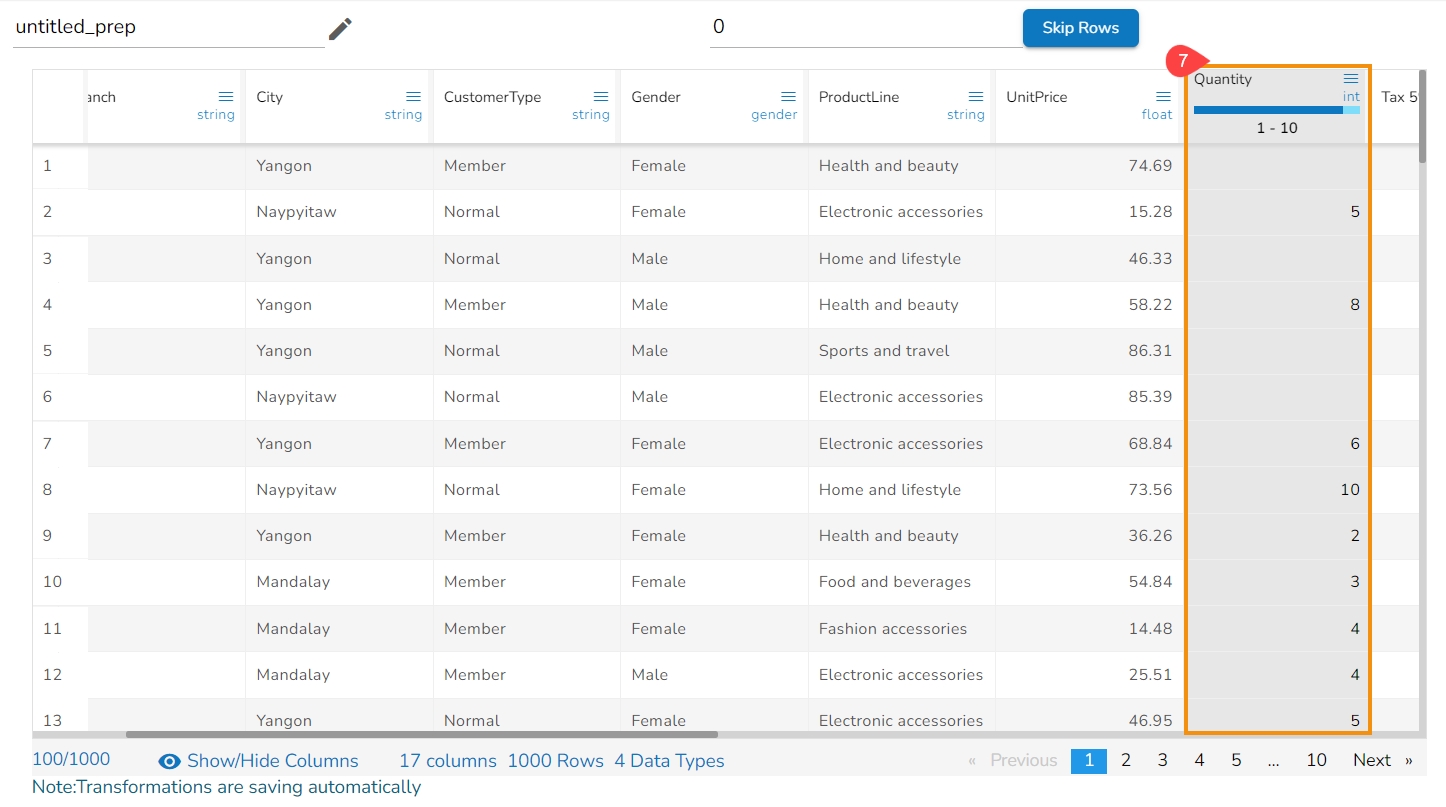

The value selected in the Quantity column clears the cell with 0 value.

It removes the values from the cells with 7 value and returns blanks cells:

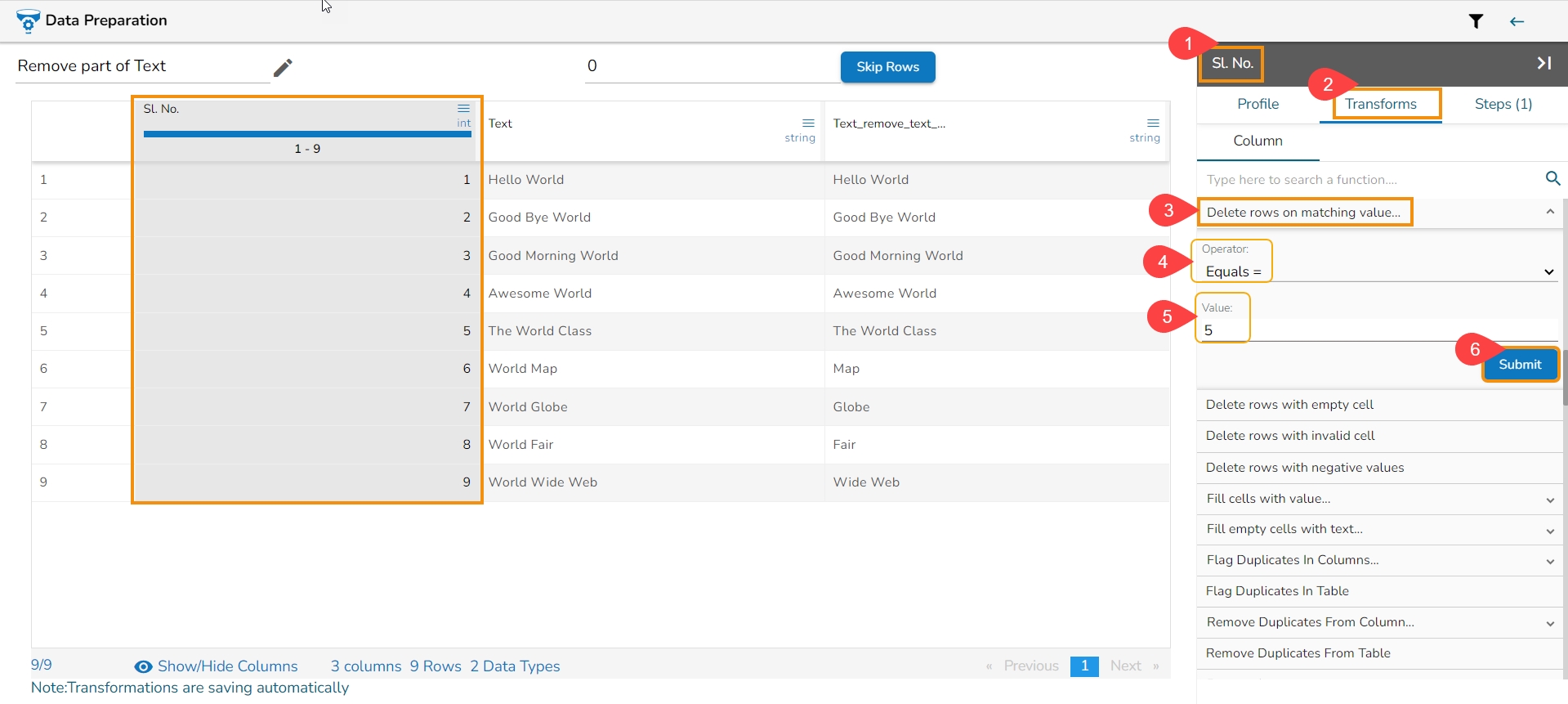

Delete the rows on matching the condition specified for that column.

The Delete Rows on Matching Value transform is a data manipulation process used to remove rows from a dataset based on a specified condition or matching value. This transformation allows the user to selectively delete rows that meet certain criteria.

Here's an overview of how the Delete Rows on Matching Value transform works:

Select a column.

Navigate to the Transforms tab.

Select the Delete Rows on Matching Value transform from the Data Cleansing category.

Operator: Select the operator required for matching from the list.

Value: The value or pattern to be searched for in the selected column.

Click the Submit option.

Please Note: The supported operators are: contains, equals, starts with, ends with, and regex match.

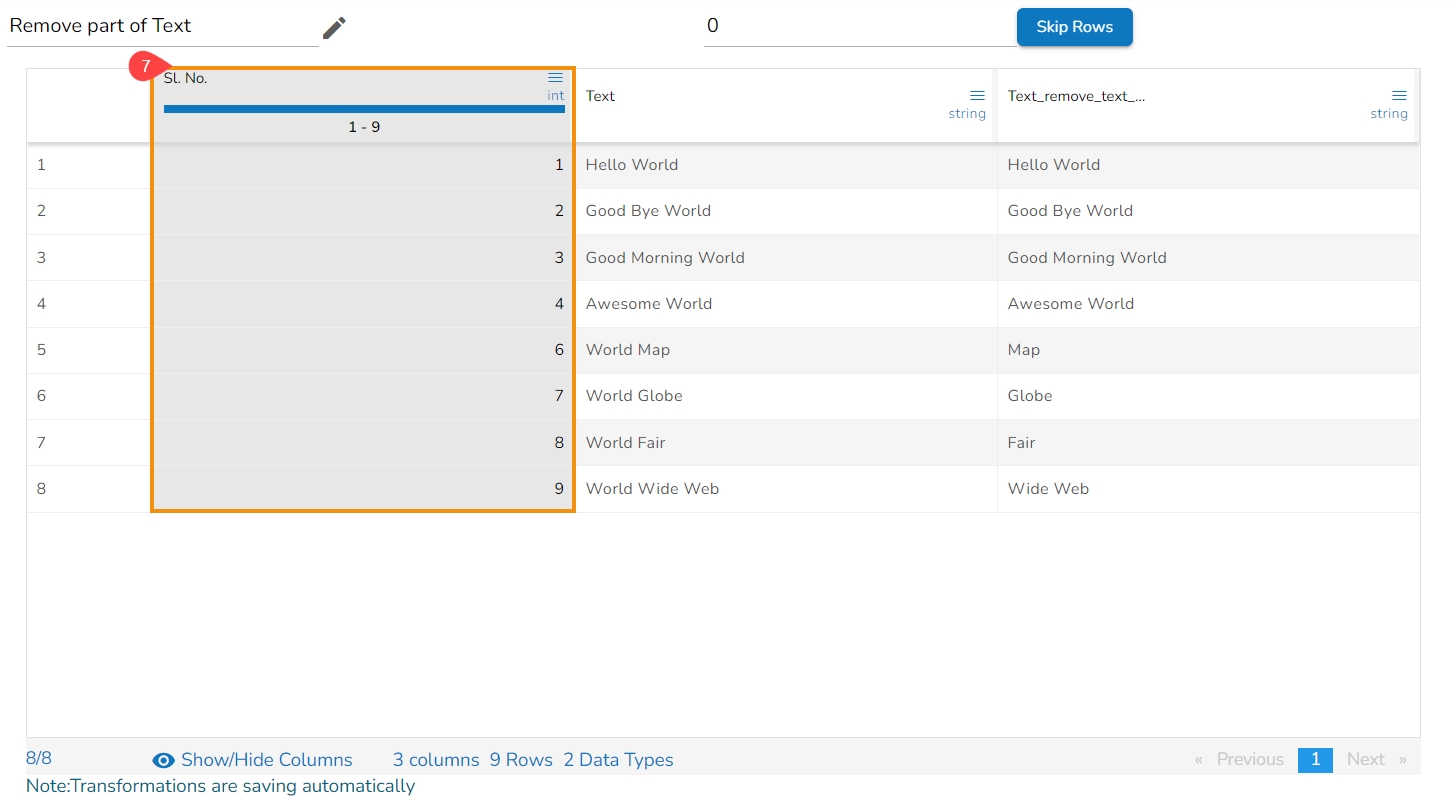

The row with given value from the Sl. No. column gets deleted.

The row with 5 value has got deleted from the id column.

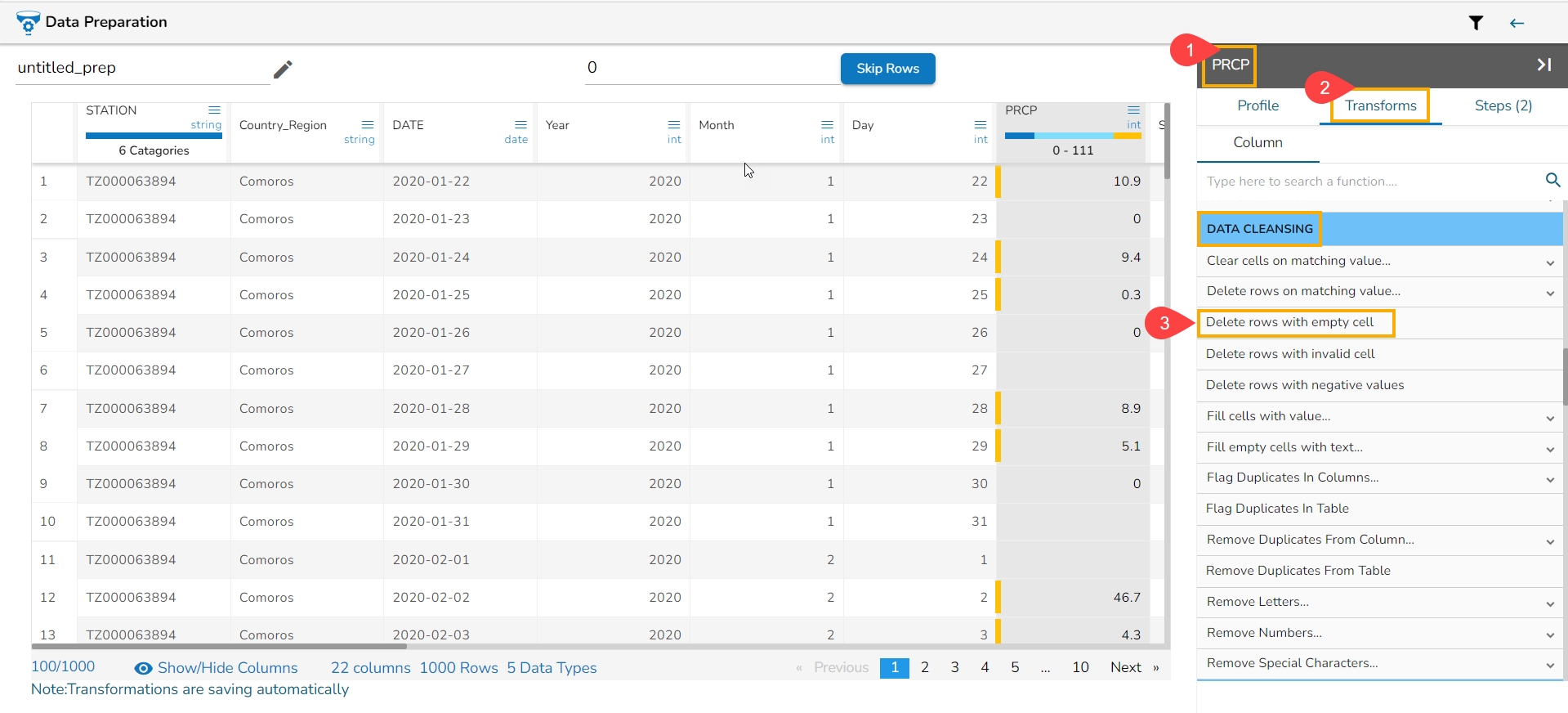

The Delete Rows with Empty Cell transform is a data manipulation operation commonly used in spreadsheet software or data processing tools to remove rows that contain empty cells within a specified column or across multiple columns. The transform helps clean and filter data by eliminating rows that lack essential information or have incomplete records.

Select a column.

Navigate to the Transforms tab.

Click the Delete Rows with Empty Cell transform from the Data Cleansing category.

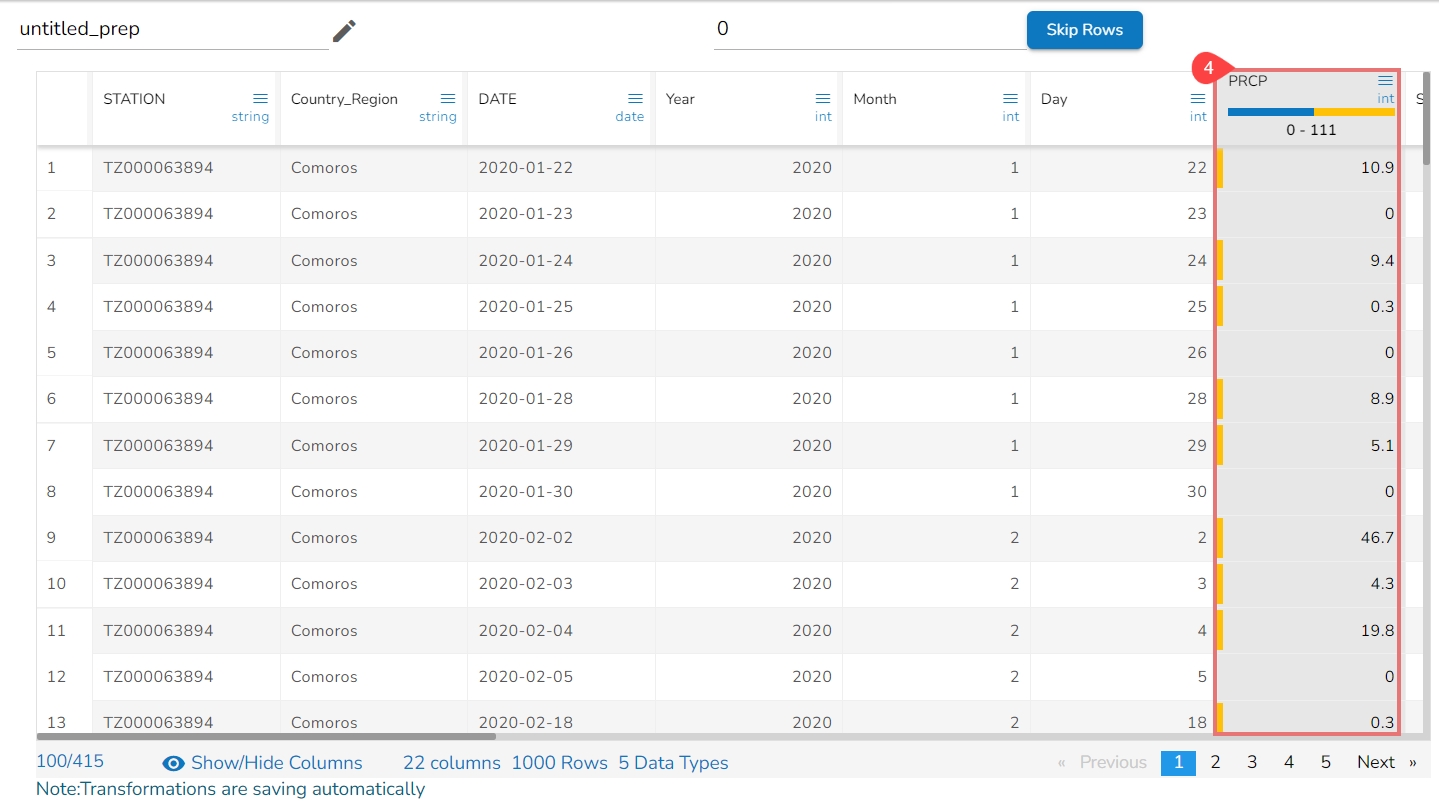

Please Note: This transform does not have a form to configure, it gets applied by clicking the transform name.

It deletes all the rows with empty cell in that column returning the data as below:

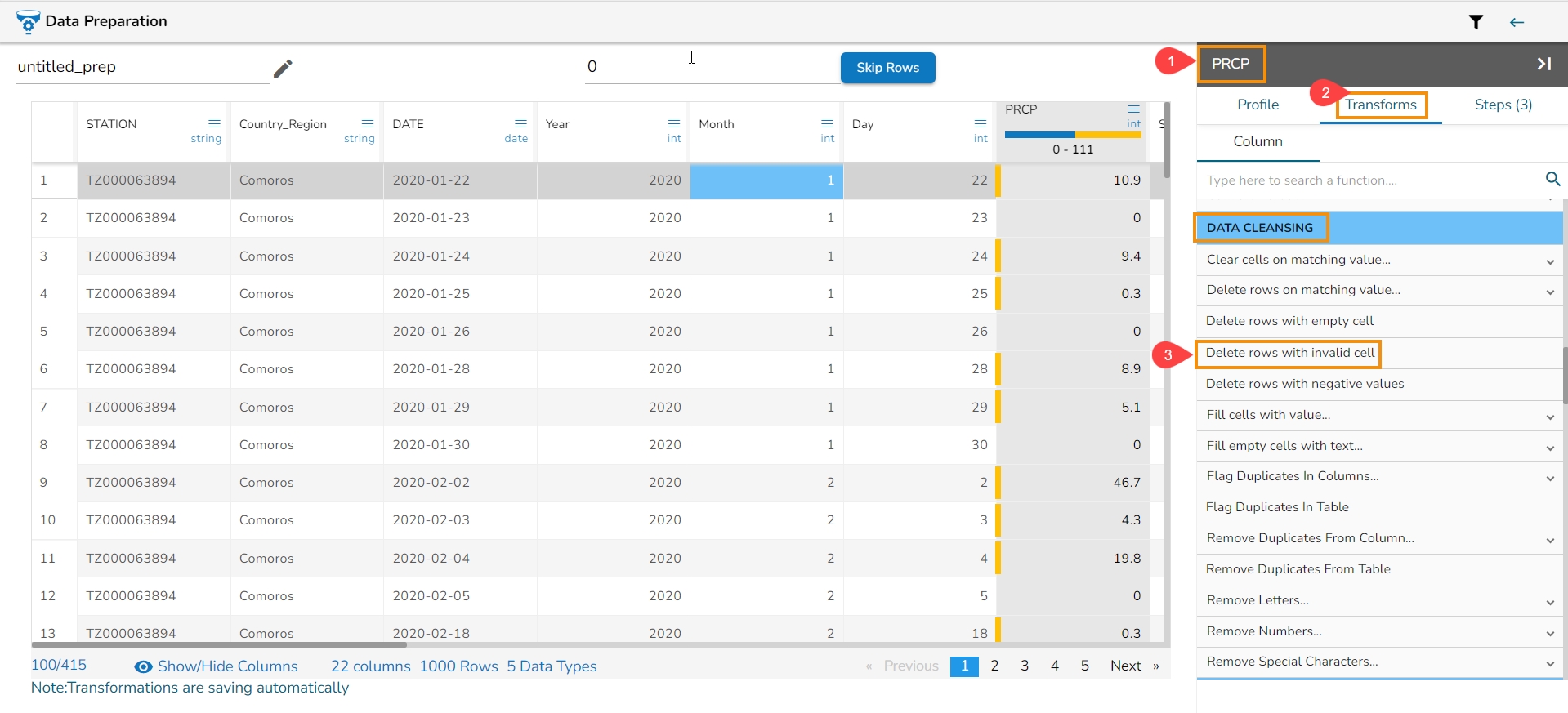

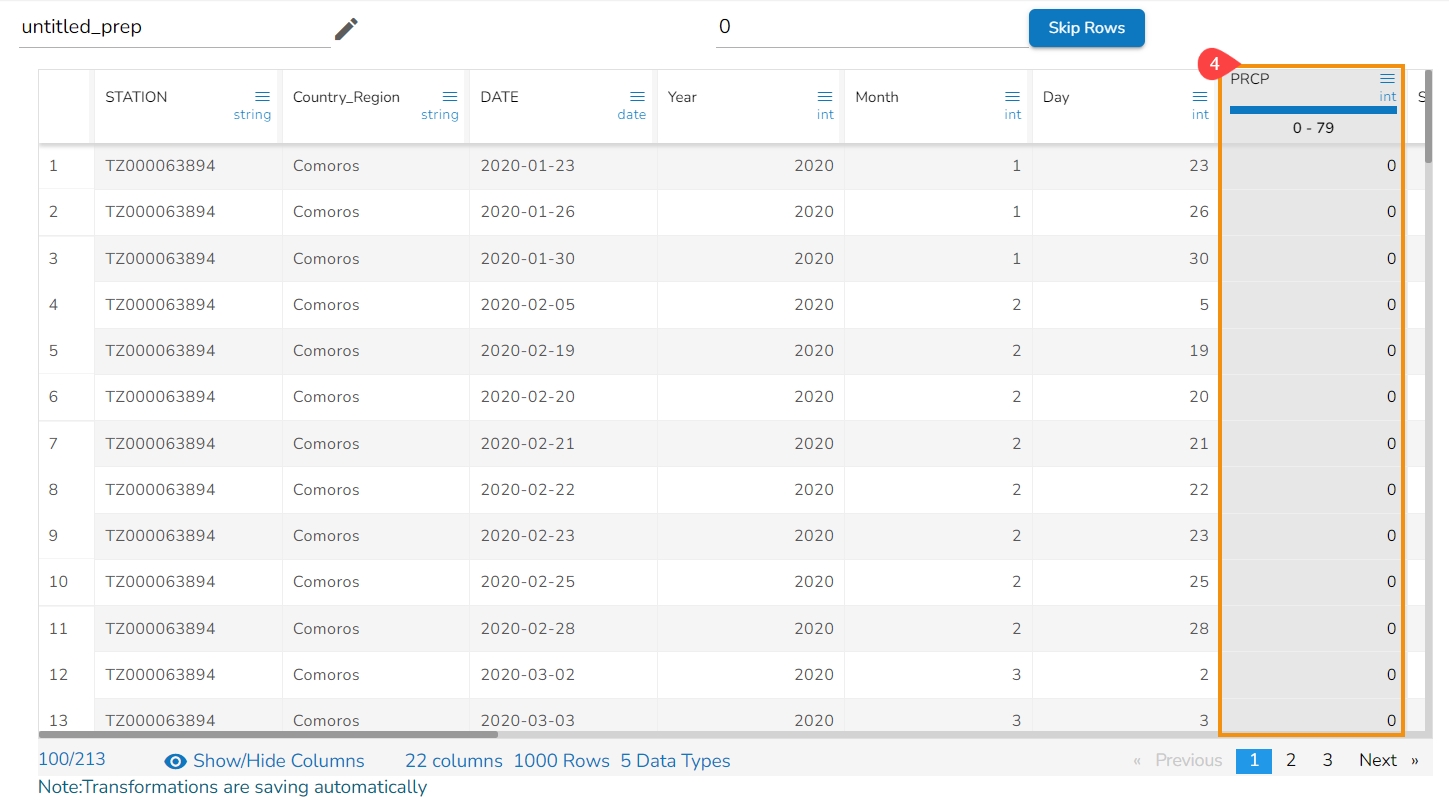

The Delete Rows with Invalid Cell transform is a data manipulation operation used to remove rows that contain invalid or inconsistent data in a specified column or across multiple columns. This transform helps clean and filter data by eliminating rows that do not meet specific validation criteria or fail to comply with predefined rules.

Refer to the following steps on how the Delete Rows with Invalid Cell transform works:

Select a column.

Navigate to the Transforms tab.

Select the Delete Rows with Invalid Cell transform from the Data Cleansing category.

Please Note: This transform does not have a form to configure, it gets applied by clicking the transform name.

The transform deletes rows with an invalid value in the selected column.

The Delete Rows with Negative Values transform is a data manipulation operation used to remove rows that contain negative values in a specified column. This transform helps to filter and clean data by eliminating rows that have undesired negative values.

Select a column.

Navigate to the Transforms tab.

Select the Delete Rows with Negative Values transform from the Data Cleansing category.

Please Note: This transform does not have a form to configure, it gets applied by clicking the transform name.

It deletes the row with the negative value and returns the data as displayed below:

The Fill Cells with Value transform is a data manipulation operation used to replace empty or missing cells with a specified value in a column or across multiple columns. This transform helps to ensure data consistency and completeness by filling in gaps or replacing missing values.

Select a column.

Navigate to the Transforms tab.

Select the Fill Cells with Value transform from the Data Cleansing category.

Use with: Specify whether to fill with a value or another column value

Column/ Value: The value with which the column must be filled, or the column with which the value must be replaced. When the above transform is applied to the below data on the column timecol2, it fills the column with the selected value that is 30.

Click the Submit option.

Please Note: The user can also fill the column value with another column's values if the selected option is Column. E.g., the following image mentions the values of the timecol3 is provided for the timecol2.

It fills the selected column with a value or a value from another column.

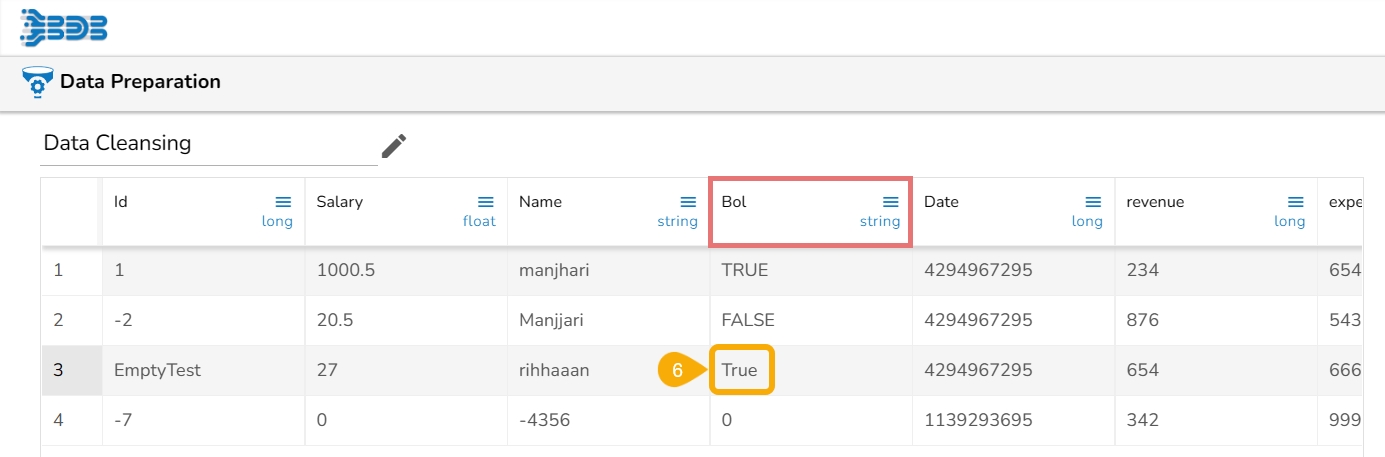

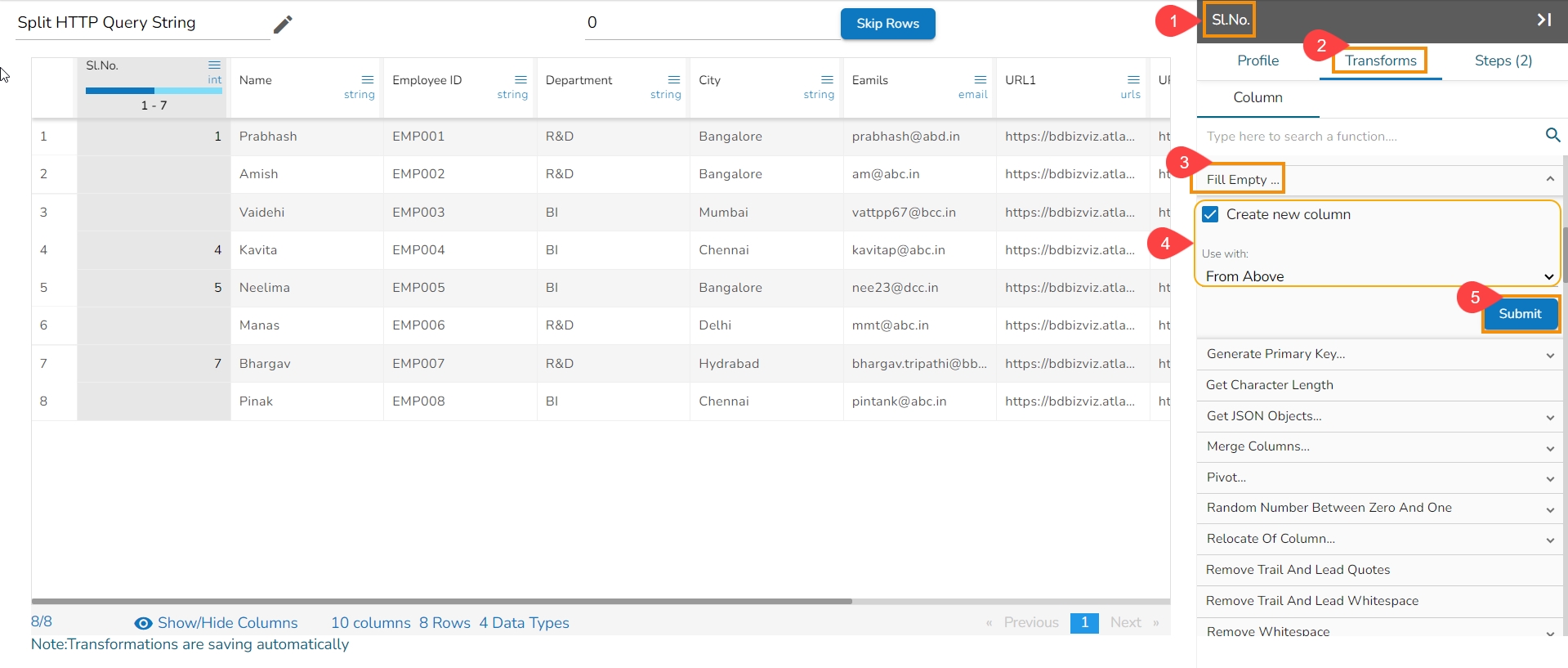

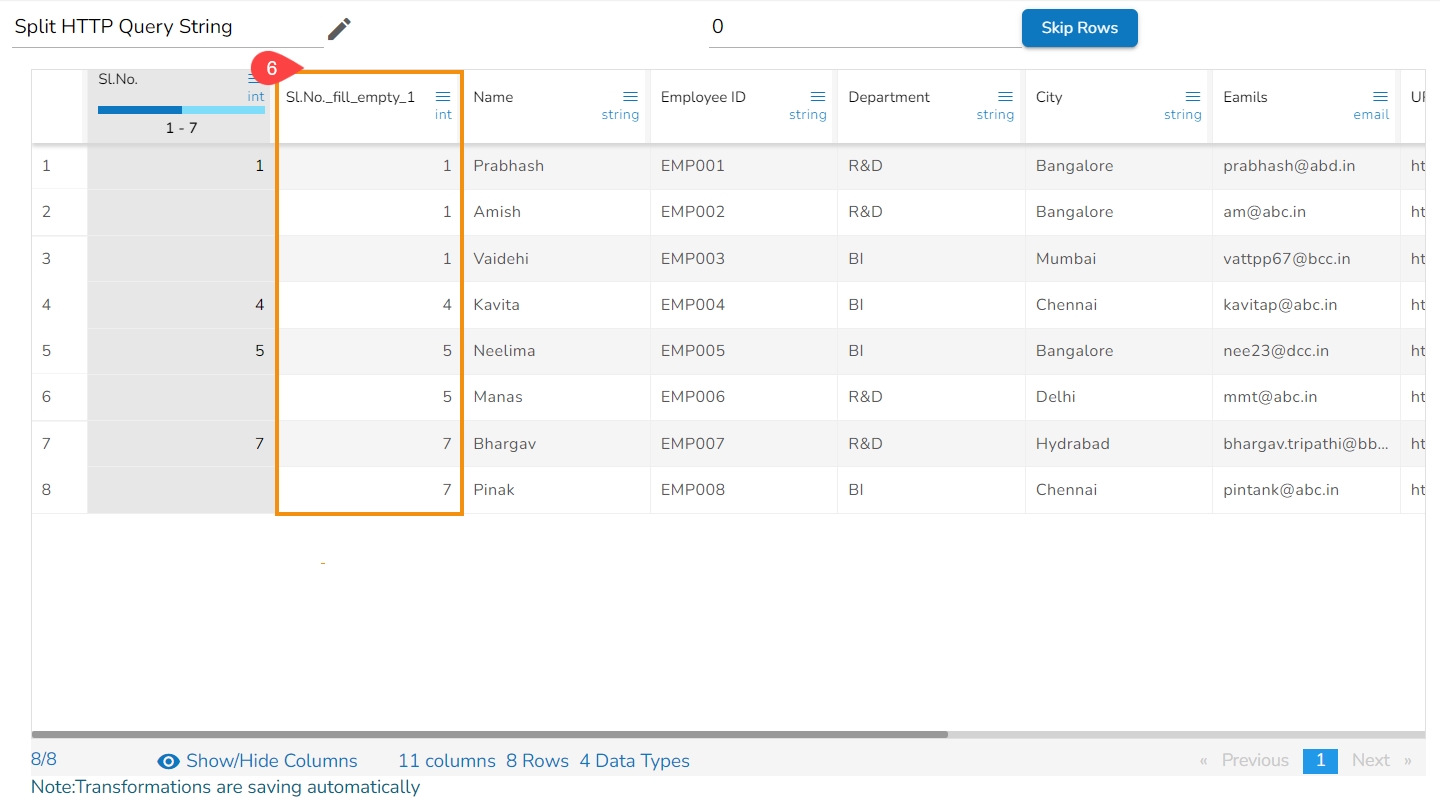

It helps to fill the empty cells of a selected column with a value or a value from another column if the destination column is empty.

Select a column.

Navigate to the Transforms tab.

Select the Fill Empty Cells with Text transform from the Data Cleansing category.

Use with: Specify whether to fill with a value or another column value.

Column/ Value: The value with which the column must be filled, or the column with which the value must be replaced.

Click the Submit option.

When the transform is applied to the below data on the empty cell of the Bol column,

It fills the empty cell with the chosen text:

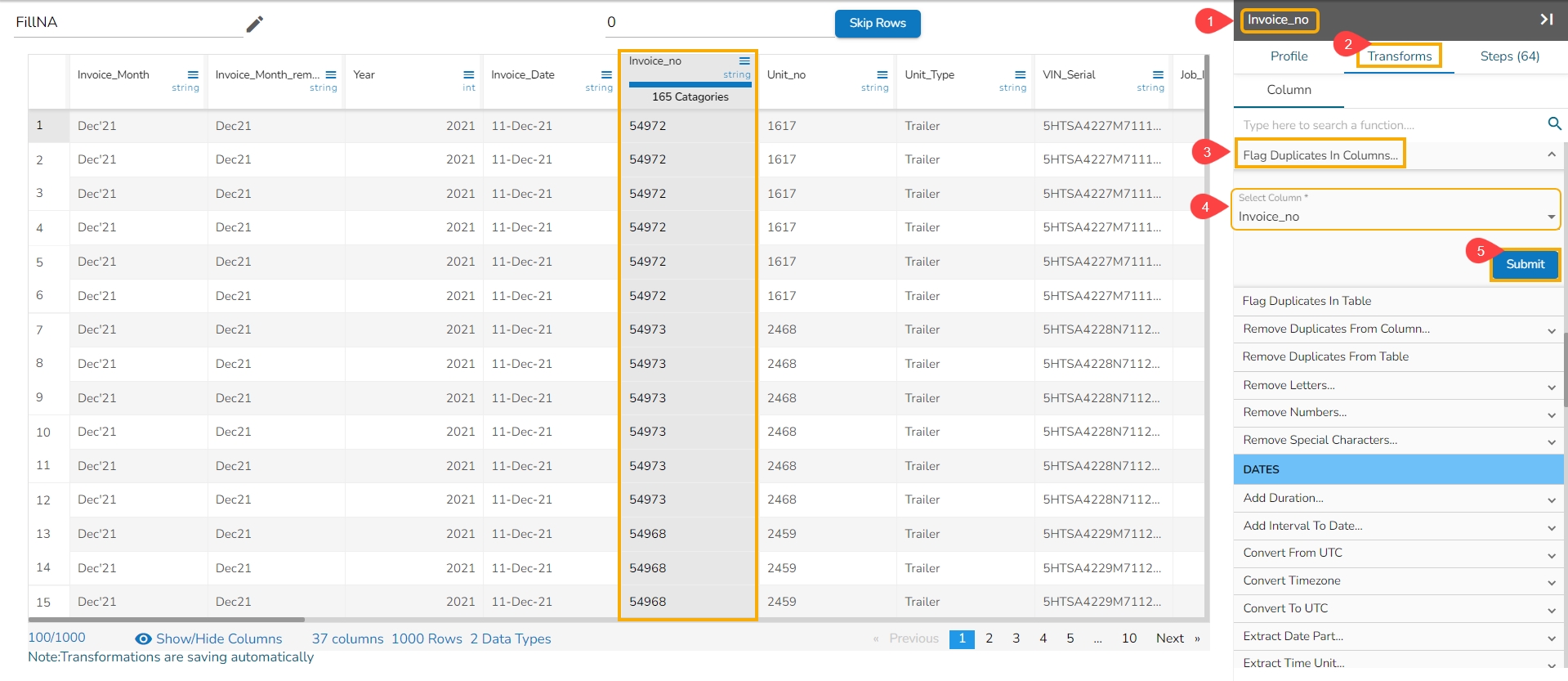

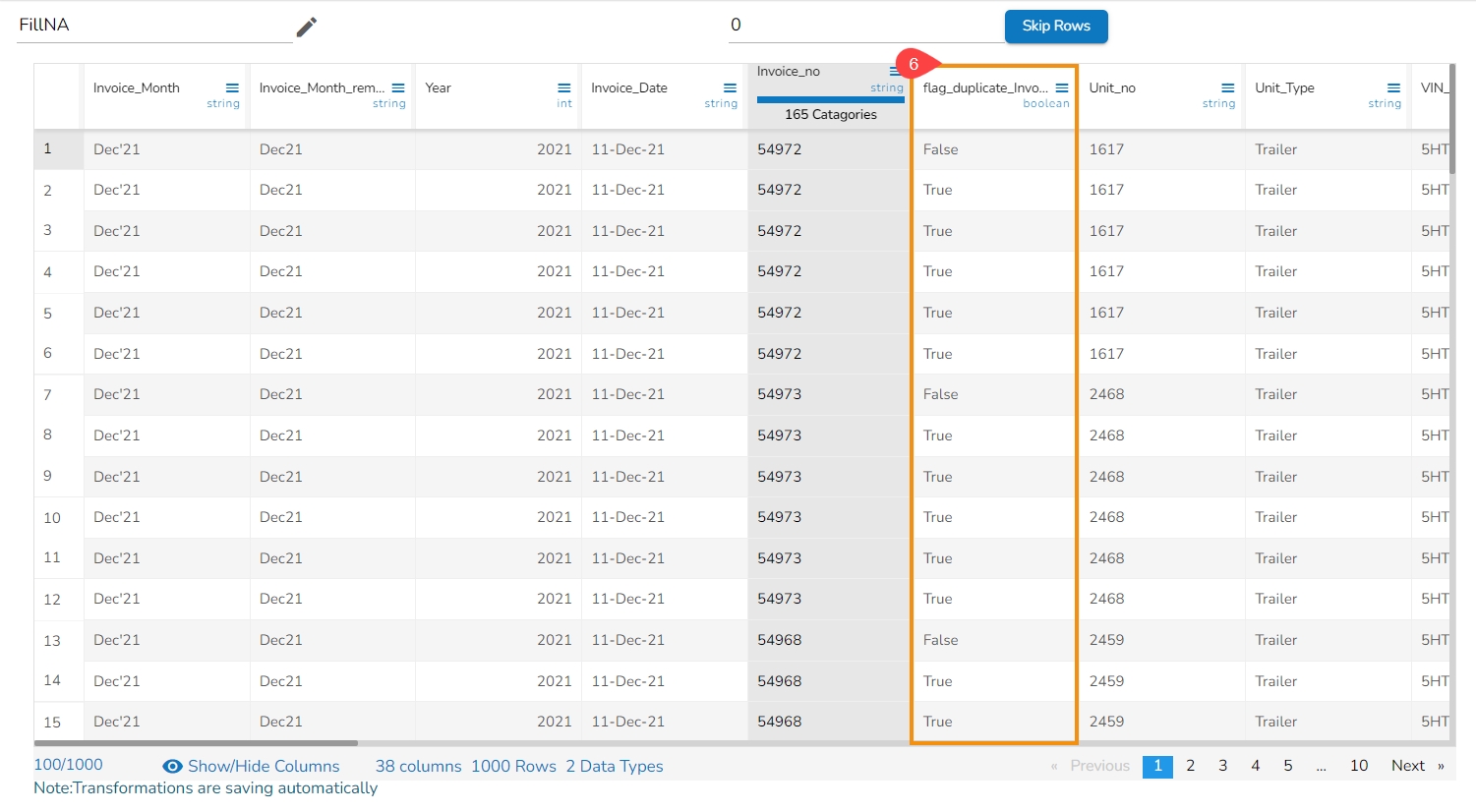

This transform adds a new Boolean column based on duplicate values in the column. For original value it gives false, and for the duplicate value, it provides true value.

Select a column.

Navigate to the Transforms tab.

Select the Flag Duplicates in Columns transform from the Data Cleansing category.

Select the column that contains duplicate values.

Click the Submit option.

It inserts a new column by flagging the duplicated values as true.

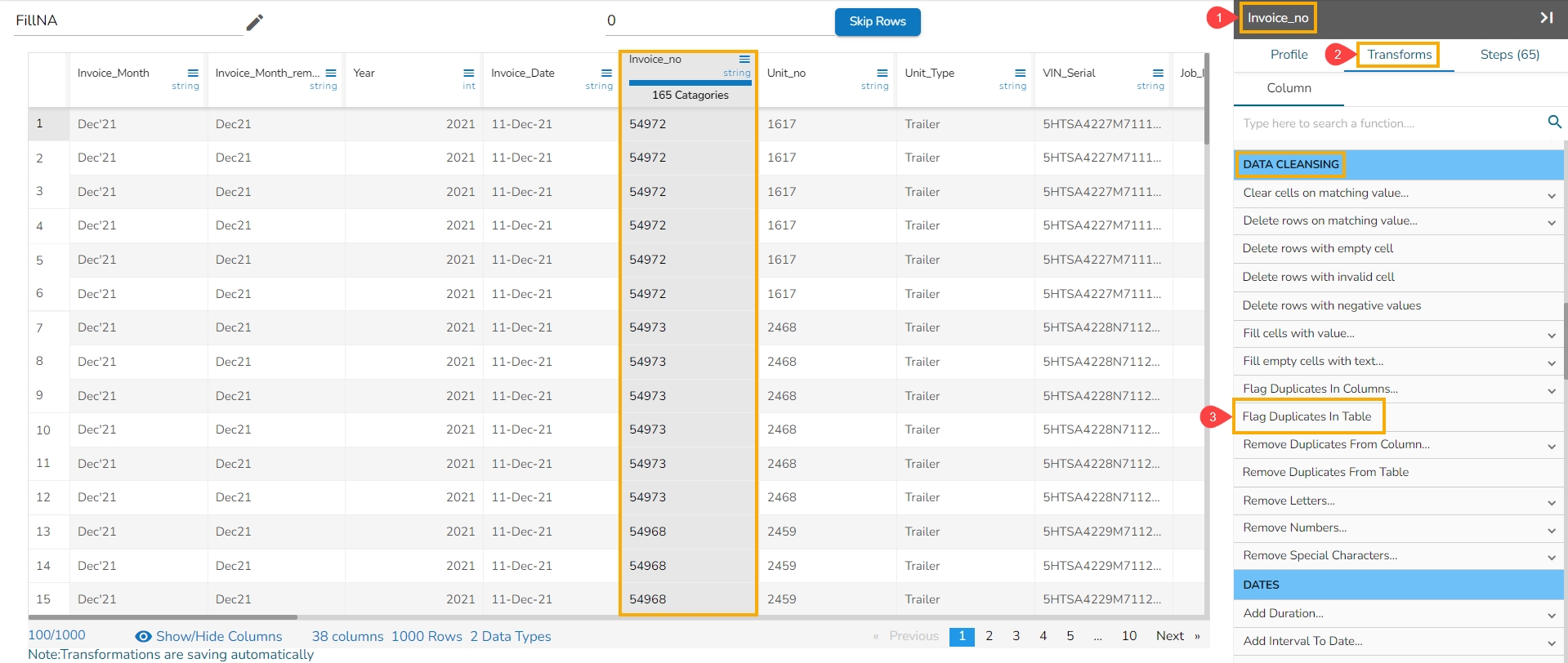

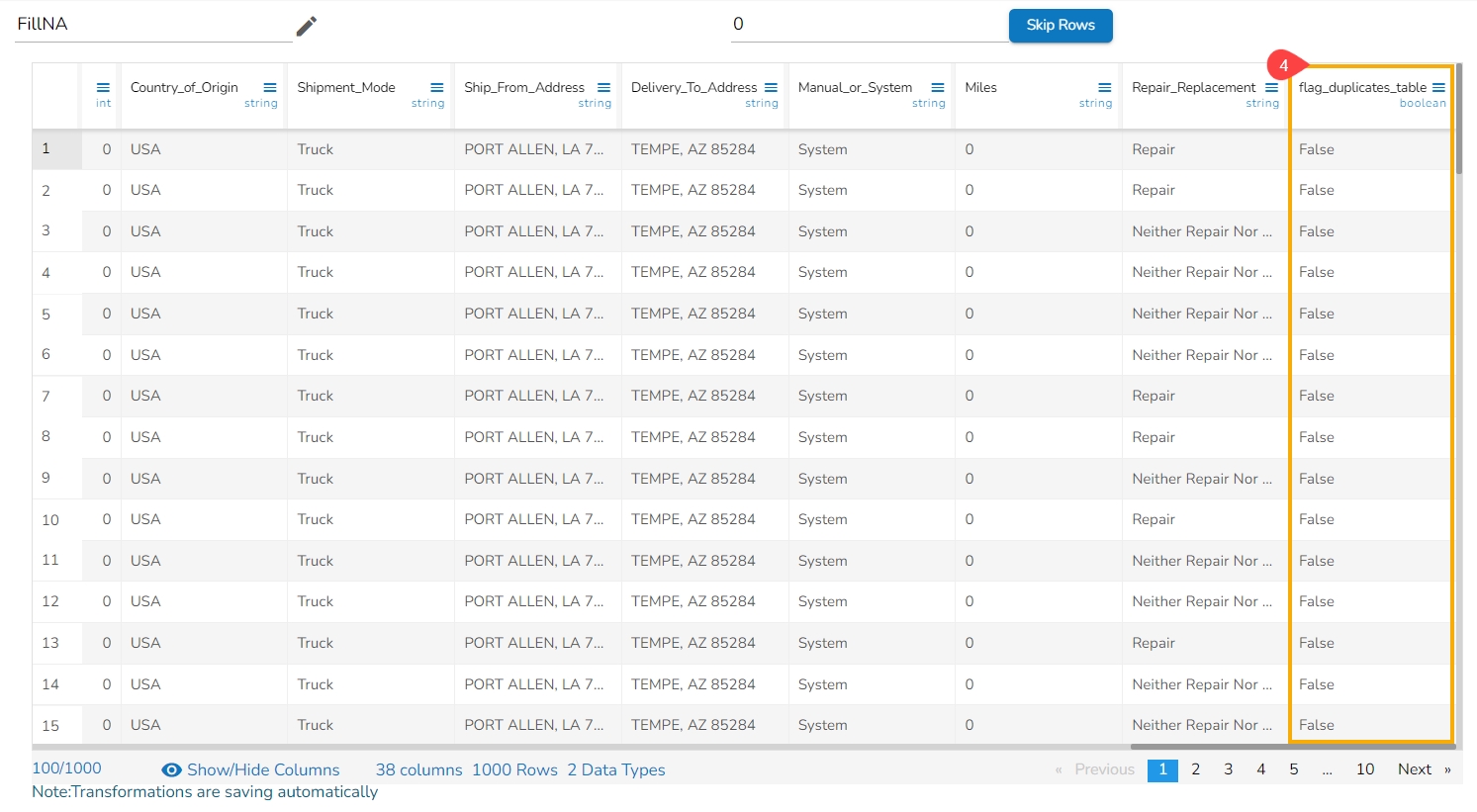

This transform adds a new Boolean column based on duplicate rows in the table. For original value it gives false, and for the duplicate value, it provides true value.

Select a column that contains duplicate values.

Navigate to the Transforms tab.

Click the Flag Duplicates in Table transform from the Data Cleansing category.

When applied on a column it inserts a new column named with the 'flag_' prefix and mentions the duplicated values as true.

The duplicated values get notified as true in the flag_duplicates_table column.

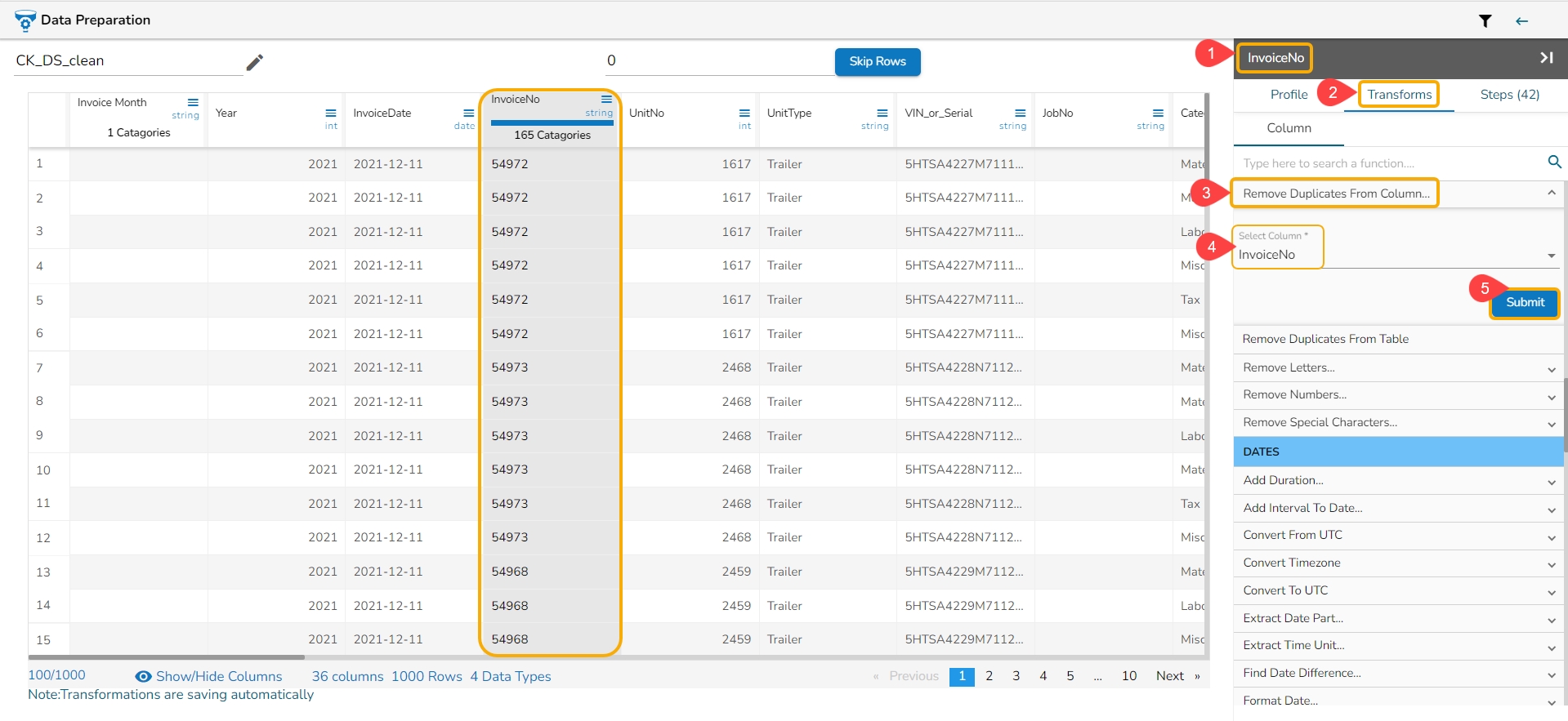

It removes duplicate values from the selected columns. This transform can be performed on a single as well as on multiple columns.

Select a column.

Navigate to the Transforms tab.

Select the Remove Duplicates from Column transform from the Data Cleansing category.

Select the column that contains duplicate values.

Click the Submit option.

The duplicated values get removed from the column.

It removes all duplicate rows from the table.

Select a column.

Navigate to the Transforms tab.

Select the Remove Duplicates from Table transform from the Data Cleansing category.

The duplicated data from the selected column gets deleted.

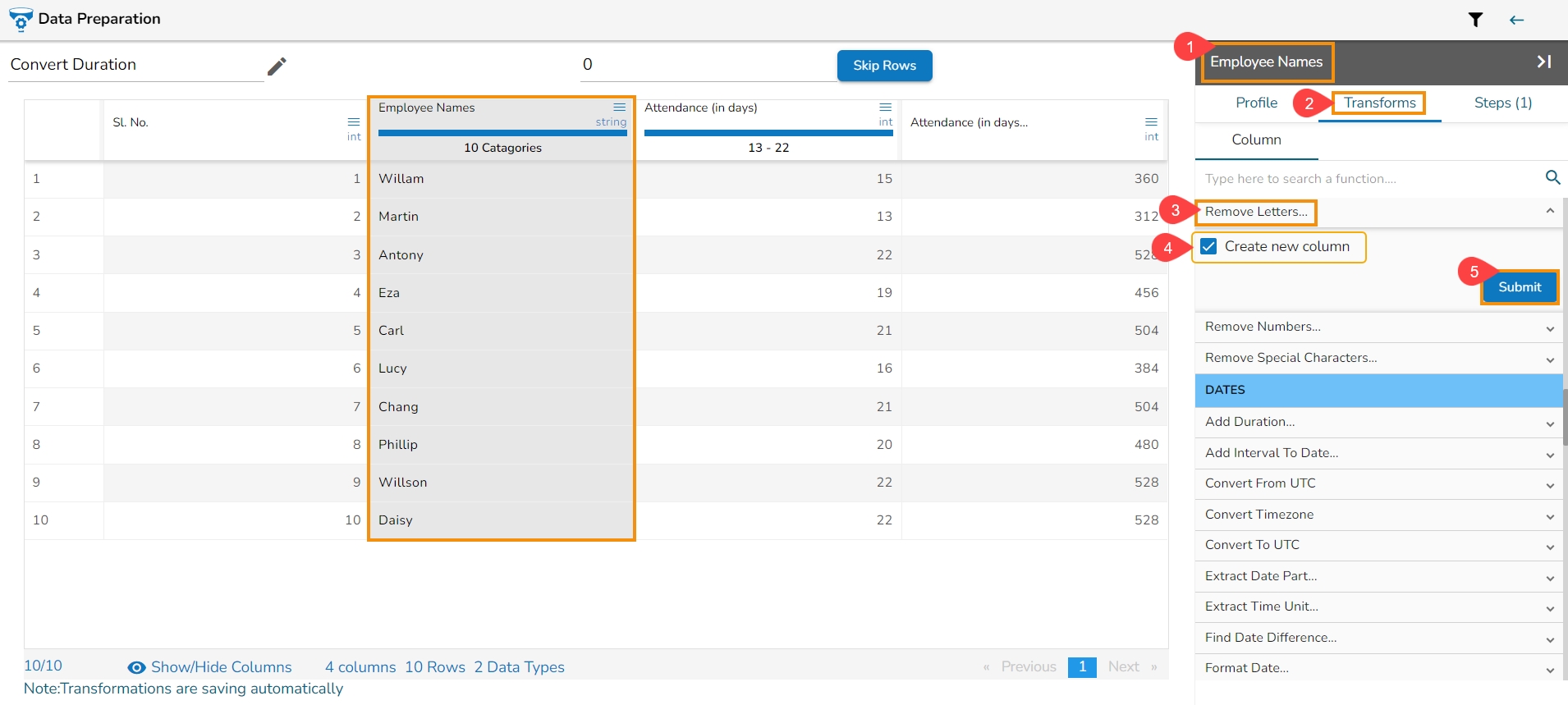

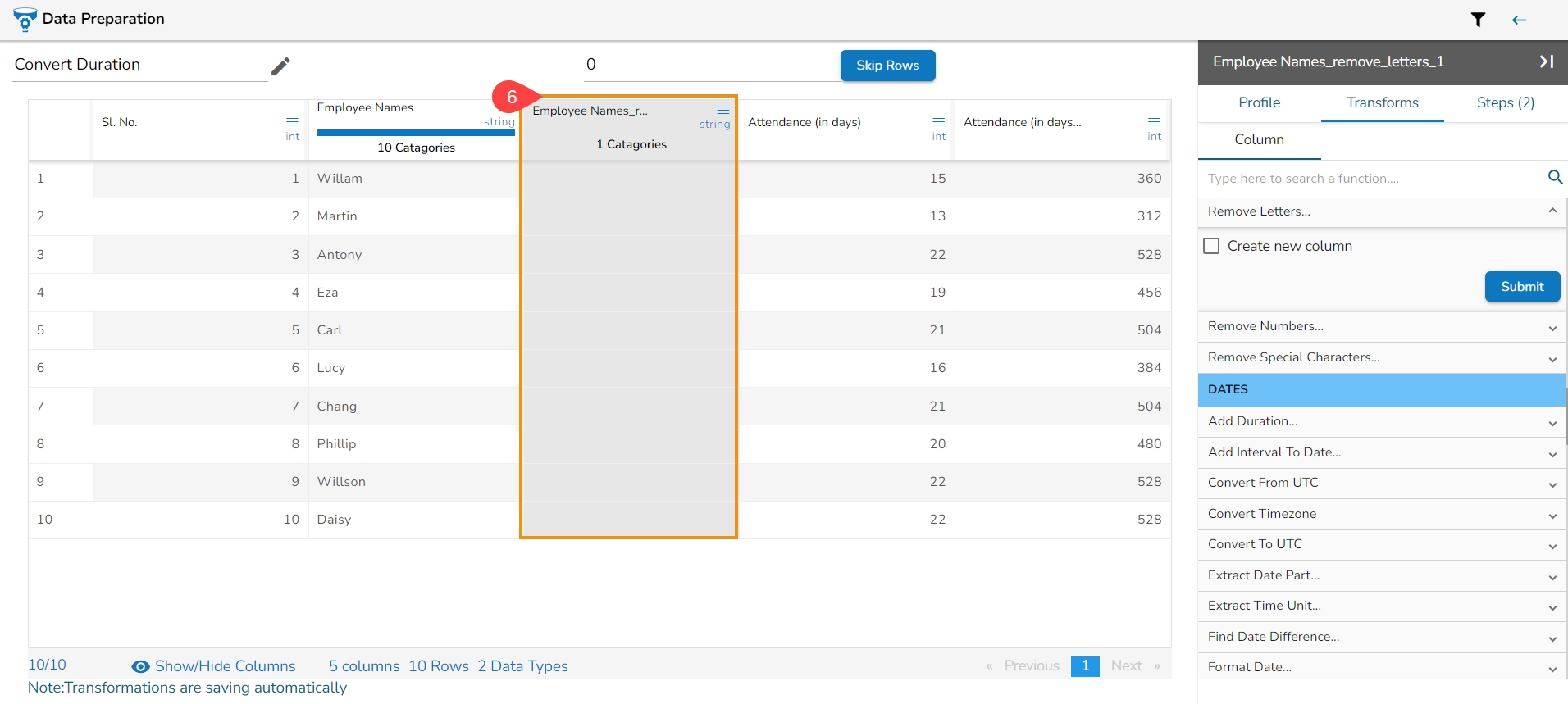

It removes any letter present in the selected column. The users can either add a new column with the transformed value or overwrite the same column.

Select a column.

Navigate to the Transforms tab.

Select the Remove Letter transform from the Data Cleansing category.

Enable create new column to create a new column with the transform result.

Click the Submit option.

It removes the numbers from the newly inserted column.

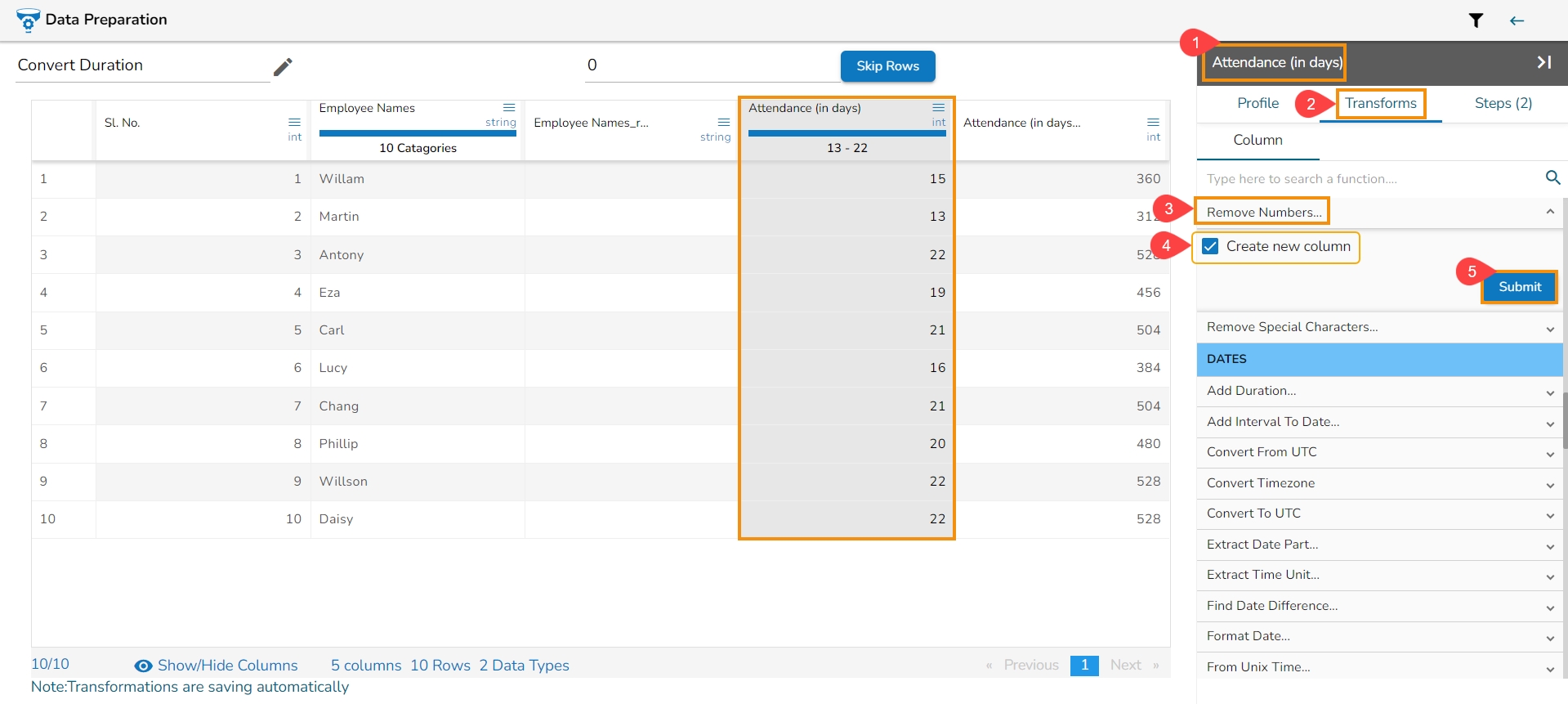

It removes any number present in the selected column. We can either add a new column with the transformed value or overwrite the same column.

Select a column.

Navigate to the Transforms tab.

Select the Remove Letter transform from the Data Cleansing category.

Enable create new column to create a new column with the transform result.

Click the Submit option.

When the Remove Numbers, transform gets performed on a selected column,

It removes numbers from the selected column.

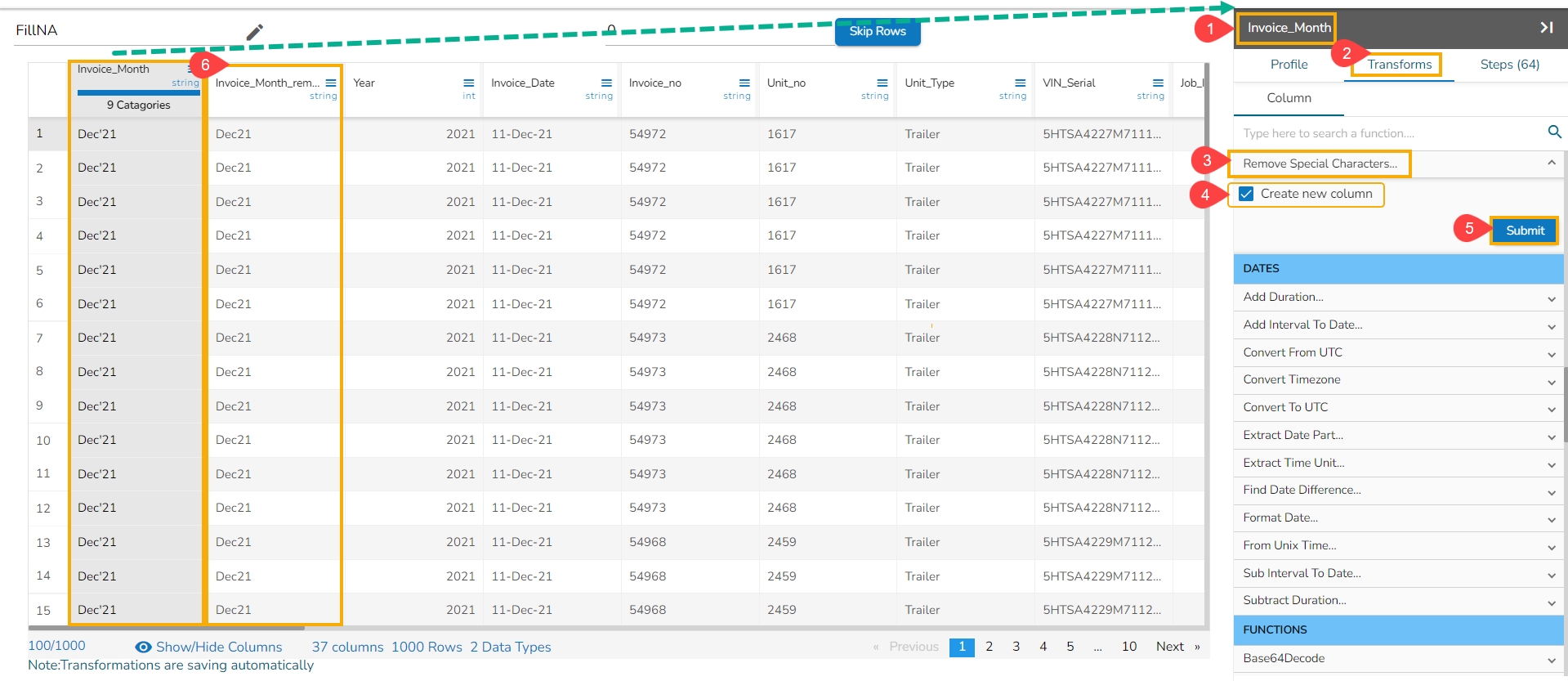

This transform helps to remove the special characters from the metadata (column headers) of a dataset & make it useful in other modules.

Check out the given illustration on how to Remove Special Characters from Metadata.

It removes any special character present in the selected column. Only letters, numbers, and spaces are retained. We can either add a new column with the transformed value or overwrite the same column.

When the transform Remove Special Characters gets performed on the selected column, the punctuations get removed from the column.

Select a column.

Navigate to the Transforms tab.

Select the Remove Special Characters transform from the Data Cleansing category.

Enable the Create new column option.

Click the Submit option.

The special character gets removed from the newly added column.

This transform removes leading and trailing quotes from a text. It can be applied to both single quotes (') and double quotes (").

Steps to perform the transform:

Navigate to the Data Preparation landing page.

Select a String datatype Column where single or double quotes in the values as trail & lead part.

Open the Transform tab.

Navigate to the Data Cleansing transform type.

Click the Remove Trail and Lead Quotes transform.

As a result, the quotes will get removed and changes will be reflected in the same source column.

This transform removes Trail and all Whitespaces found at the beginning and end of the text.

Steps to perform the transform:

Select a String datatype Column where whitespace is present in the values as trail & lead part.

Click on the Remove trail and lead whitespace transform.

Whitespace will get removed from the trail and lead places and changes will be reflected in the same source column.

This transforms removes all whitespace from a string, including leading and trailing whitespace and all whitespace within the string.

Steps to perform the transform:

Navigate to the Data Preparation landing page displaying the Data.

Select a String datatype Column where whitespace is present in the values as trail, between & lead part.

Open the Column

Click the Remove Whitespace transform.

Use checkbox to select the Create New Column option.

Provide a name for the New Column.

Click the Submit option.

Whitespace will get removed from the lead, in-between, and trail spaces of the content of the source column and displayed as a new column in the data set.

The users can avoid creating a new column while using the Remove Whitespace transform in that case, the whitespaces will get removed from the source column itself.

This tab is provided to see all the performed transforms on the selected dataset.

This tab lists all the transformations that were performed on the data. It also gives a count of steps performed.

The user can open any performed transform and edit it using the Steps tab.

The Steps tab also provides the Copy and Paste icons to copy the applied set of transforms and paste them on a new Data Preparation based on the same data scource.

Check out the illustration on copy transformation steps and paste them to a new Data Preparation.

Perform some transformations on one dataset using the Data Preparation workspace. Or Choose a Data Preparation containing some transforms listed under the Steps tab.

Open the Steps tab to see all the performed transforms listed.

Click the Copy icon to copy the available steps.

Choose the same data source from where the copied steps were performed.

Click the Data preparation icon to start a new data preparation.

Since the selected source already contains some Data Preparations, it redirects the user to the Preparation List page. Please Click the Create Preparation option from this page.

Navigate to the Steps tab for the newly created data preparation.

Click the Paste icon.

All the copied transformation steps start applying to the selected data source.

The user may validate once all the transform steps are pasted to the current data.

The user may give an identical name to the preparation.

Click the Save option.

A notification message appears.

The Data Preparation gets saved with the applied transforms steps.

The Steps tab contains the delete option to remove applied transformation steps. The user can follow the below-given steps to use these options:

Navigate to the Steps tab displaying some applied steps.

Click the Delete icon for a listed step.

The selected steps get deleted.

A message appears to notify the same.

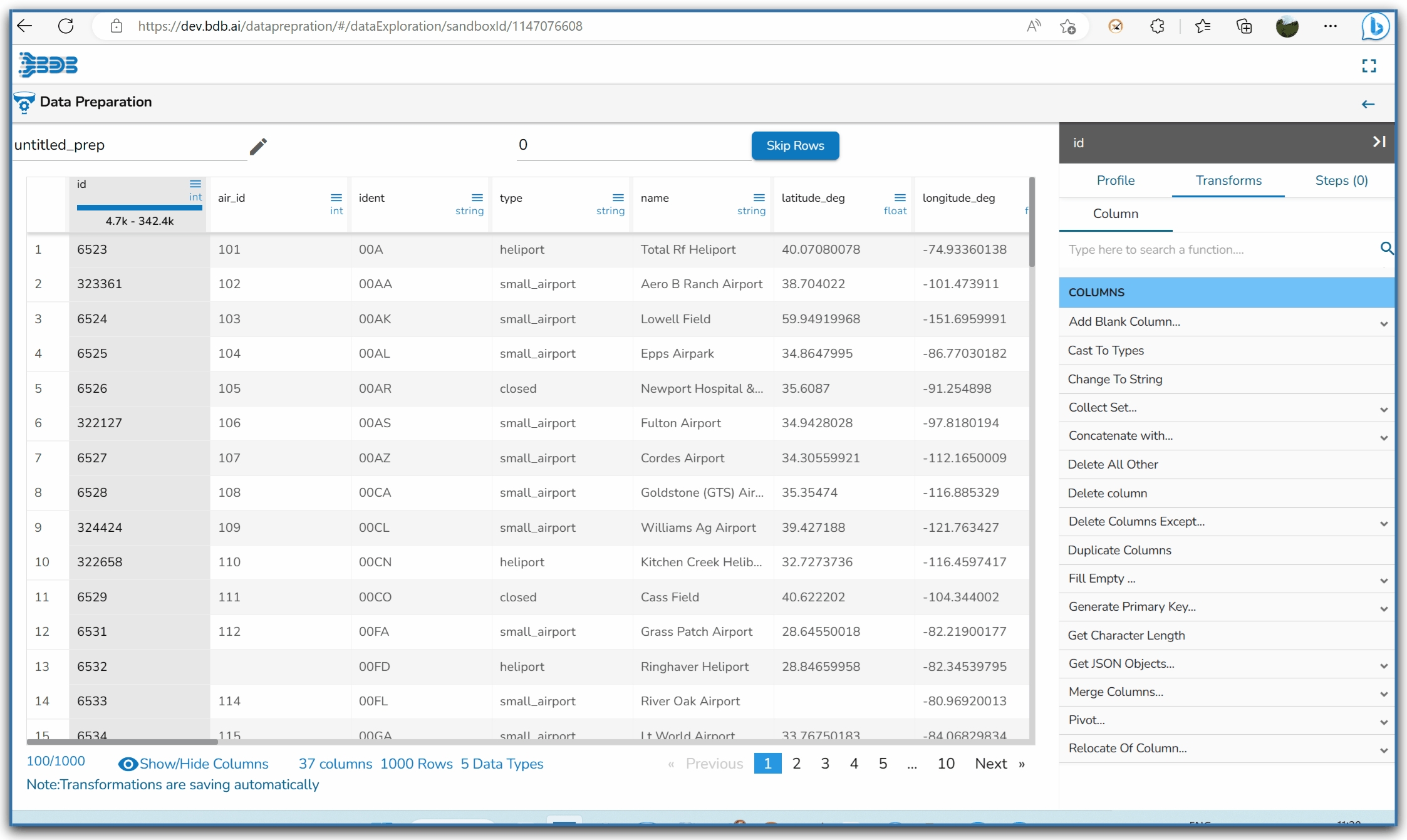

The user can access the Data Grid view of the selected dataset by clicking on the Data Preparation icon. The displayed data in the grid changes based on the number of transforms performed on it.

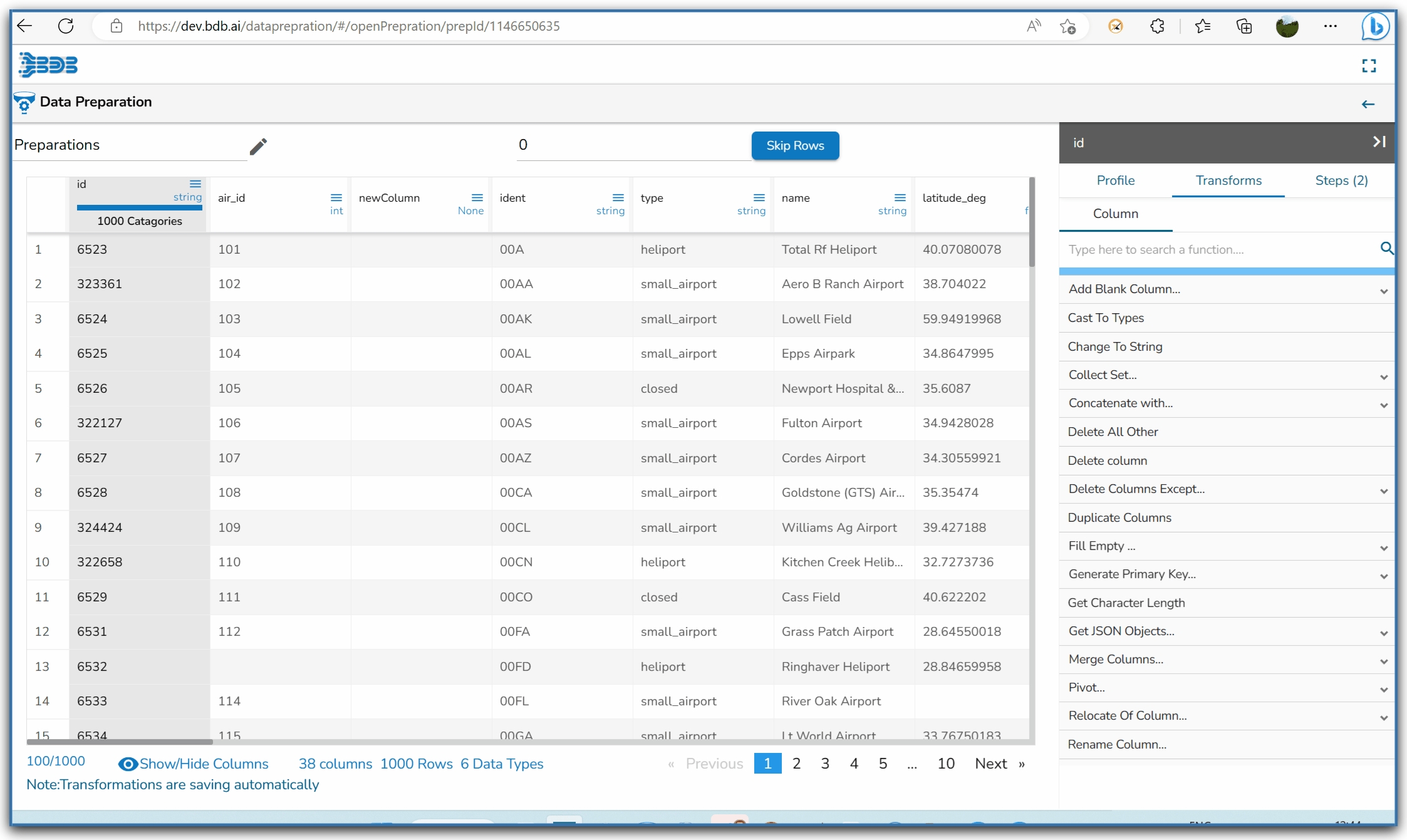

The Data Grid in the BDB Data Preparation displays the data. The data shown in the grid is a sample from the actual data set or complete data based on the data volume.

The Grid format provides easy access to commonly used transformations from the main Transformation categories. To access them, navigate to the header panel on the Data Preparation landing page, where various Transformation categories are listed.

Navigate to the Data Preparation landing page.

In the header panel, locate the various Transformation categories.

Select a Transformation Category and click on it.

A context menu will appear displaying commonly used transforms from the selected category.

Select a transform and click on it to apply the transform.

Based on the selected transform the configuration dialog box will appear. Provide the required information to apply the transform.

The selected transform will be applied to the dataset.

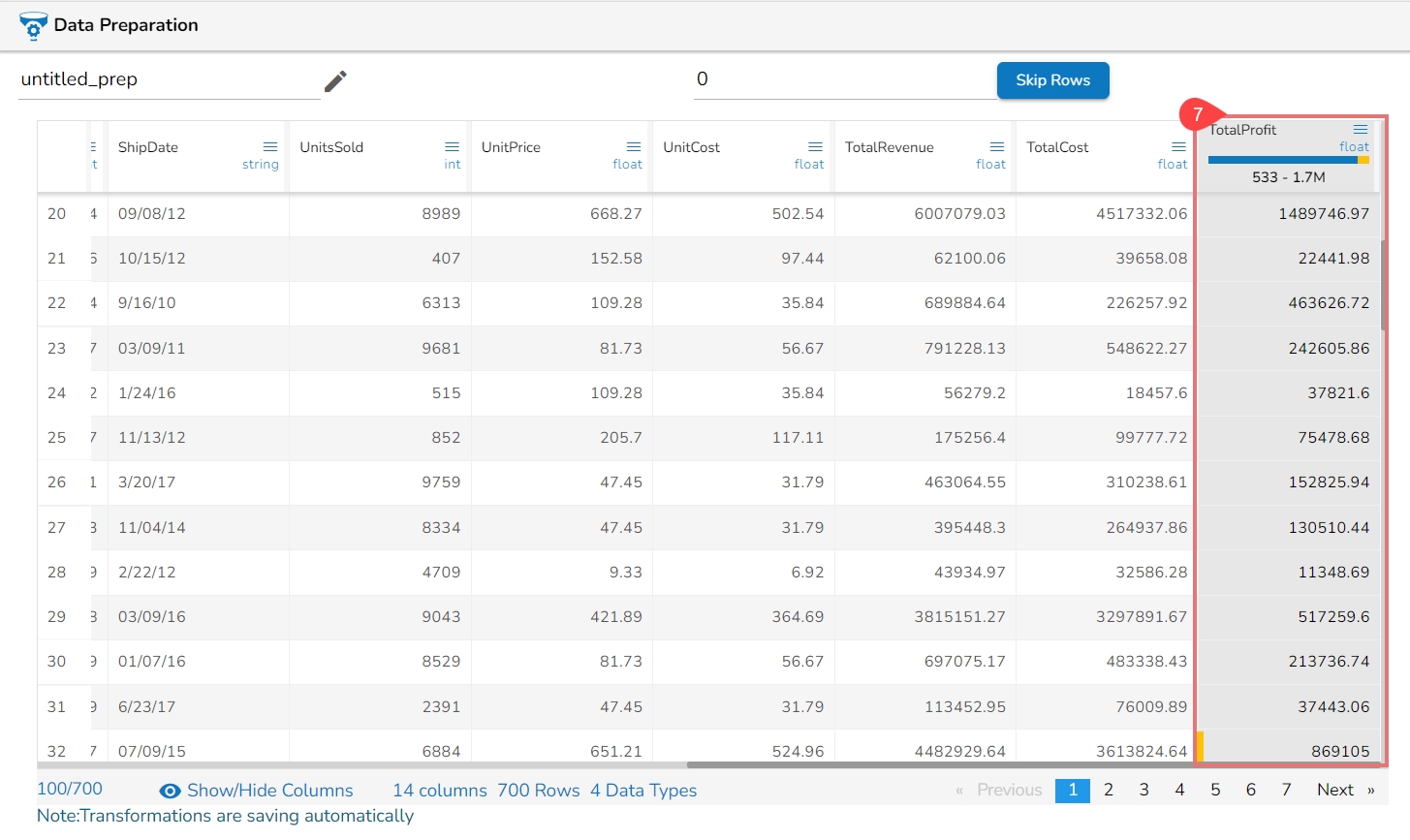

The grid has a header that displays the column name, and column type from the selected dataset. Within this header, a column chart visually represents the data of the selected column.

The column chart can be shown from the Header using the Show Chart option provided on the top right side of the page. The Column chart remains hidden by default in the Data Grid.

Navigate to the Data Preparation workspace.

Click the Show Chart icon.

The column chart for each column will be displayed in the header.

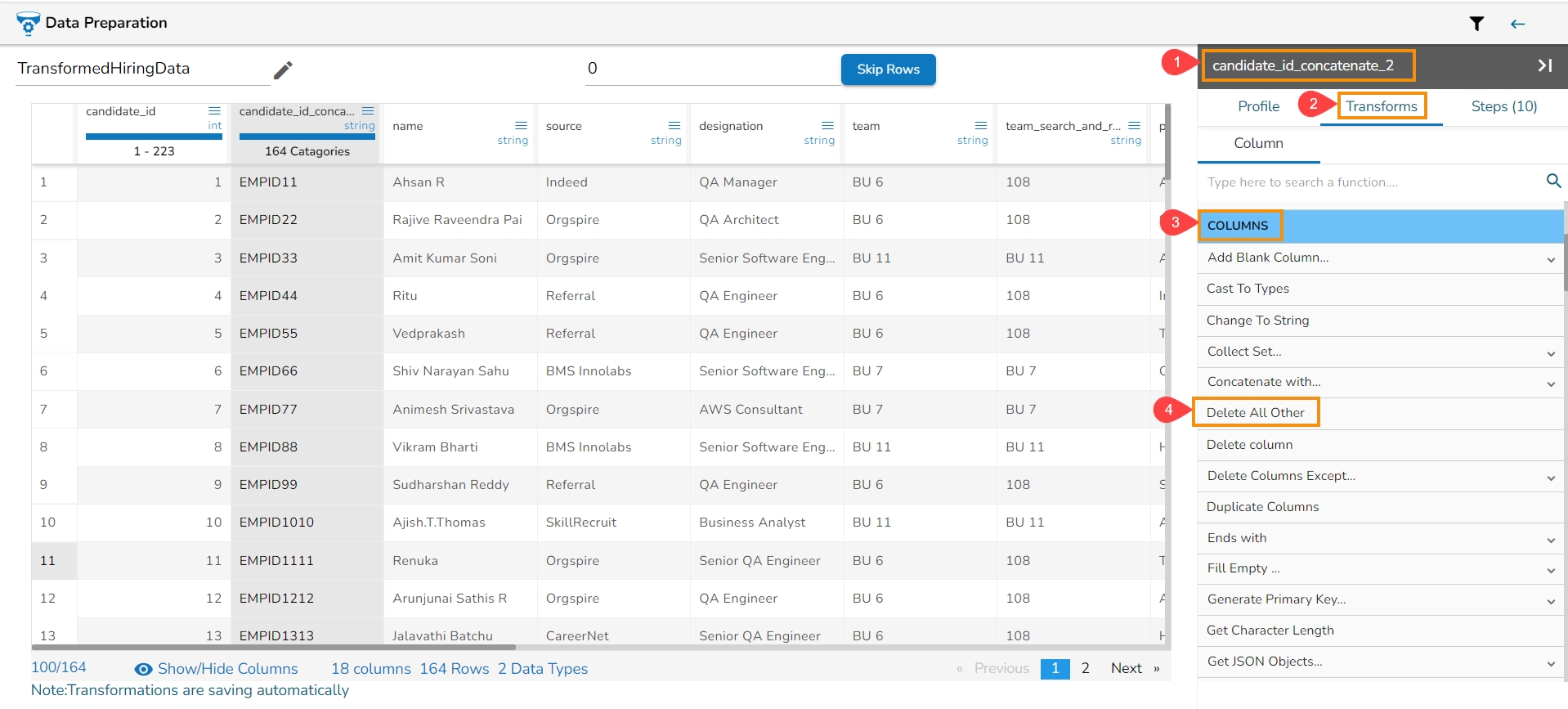

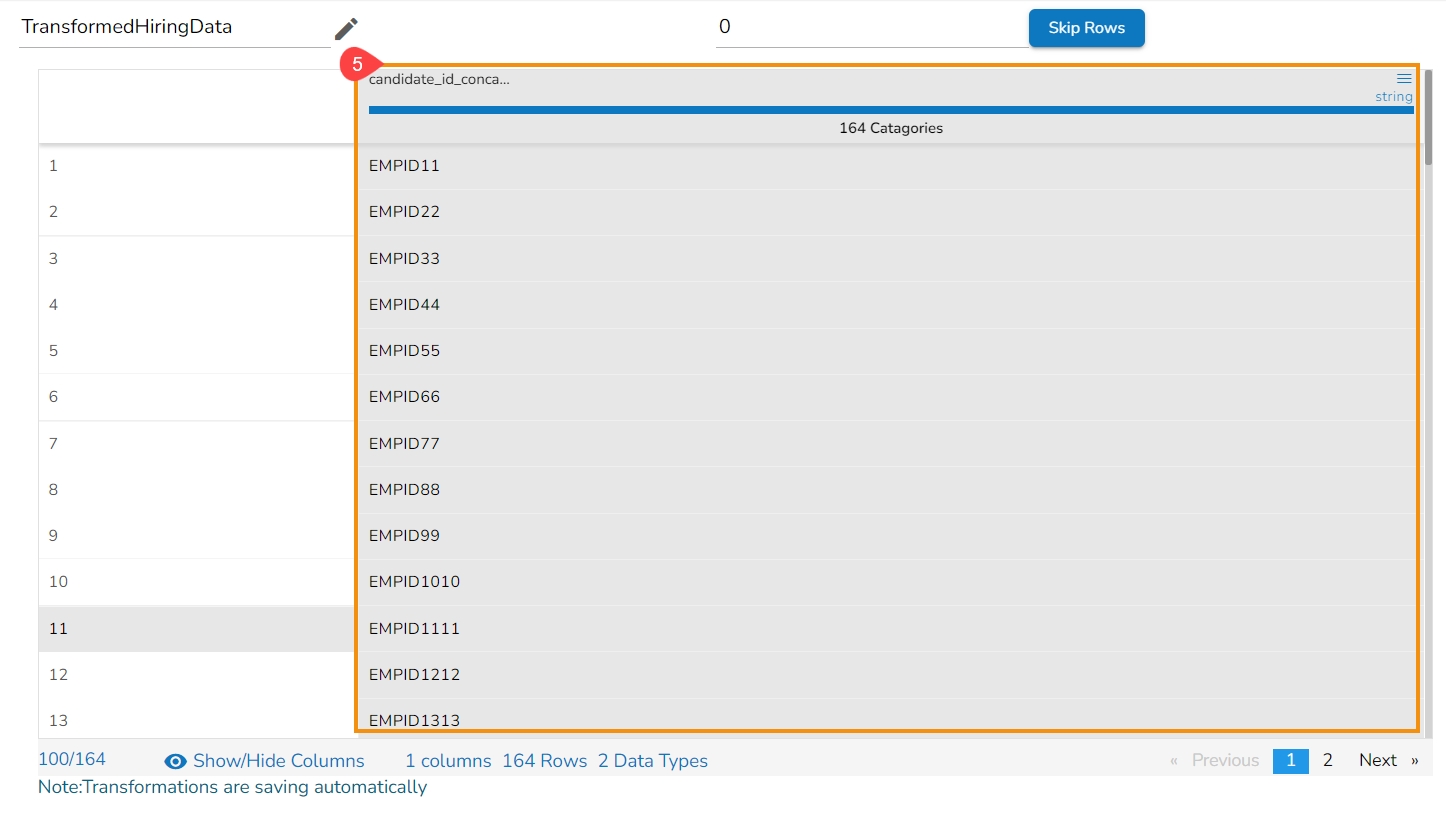

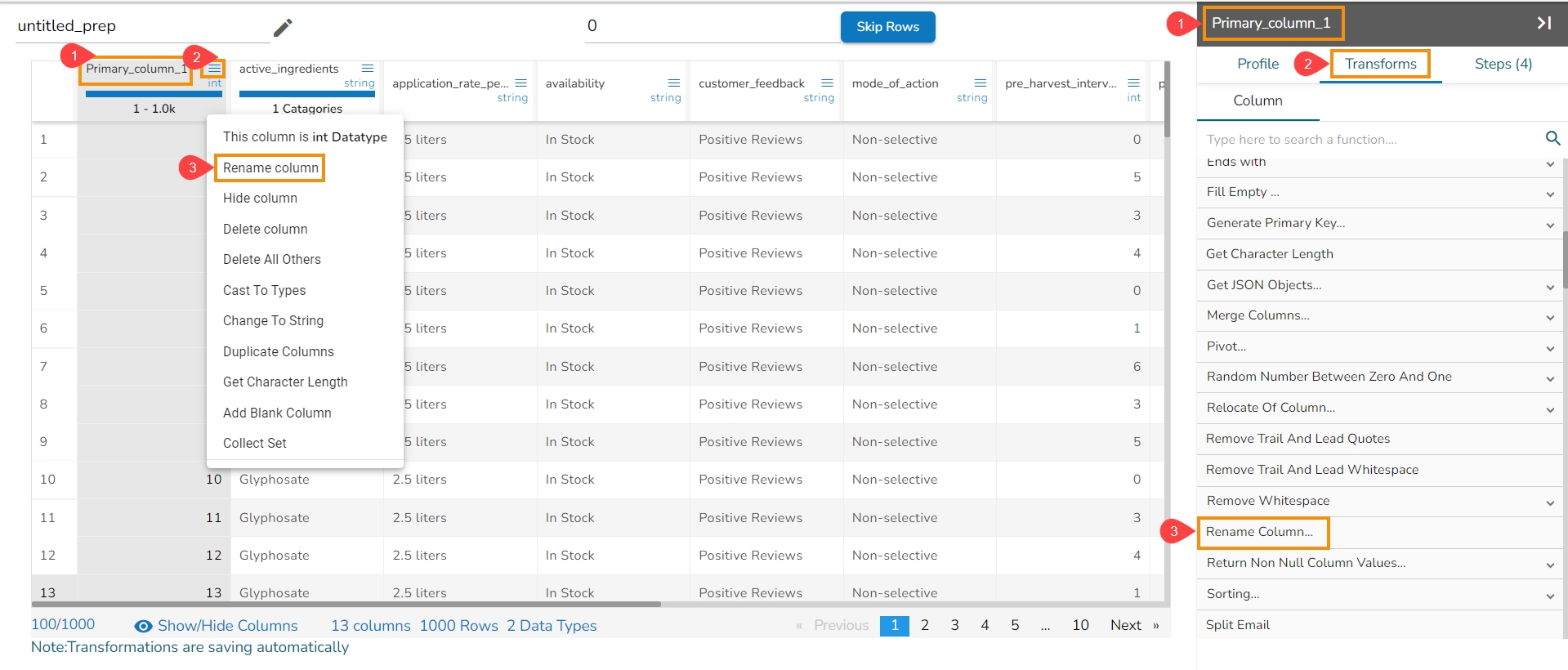

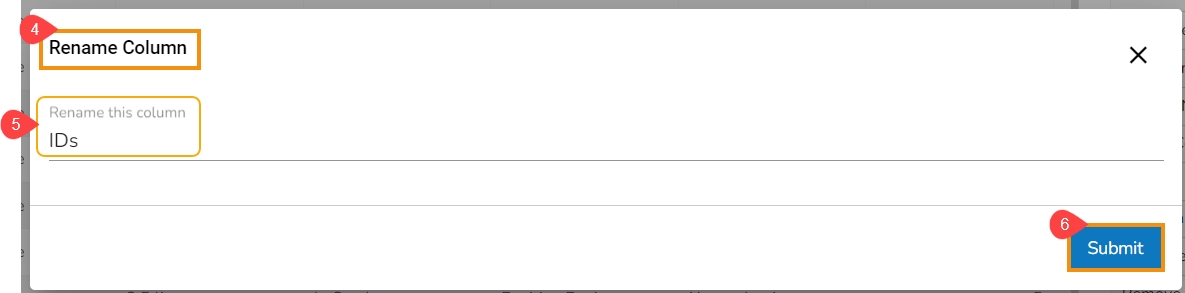

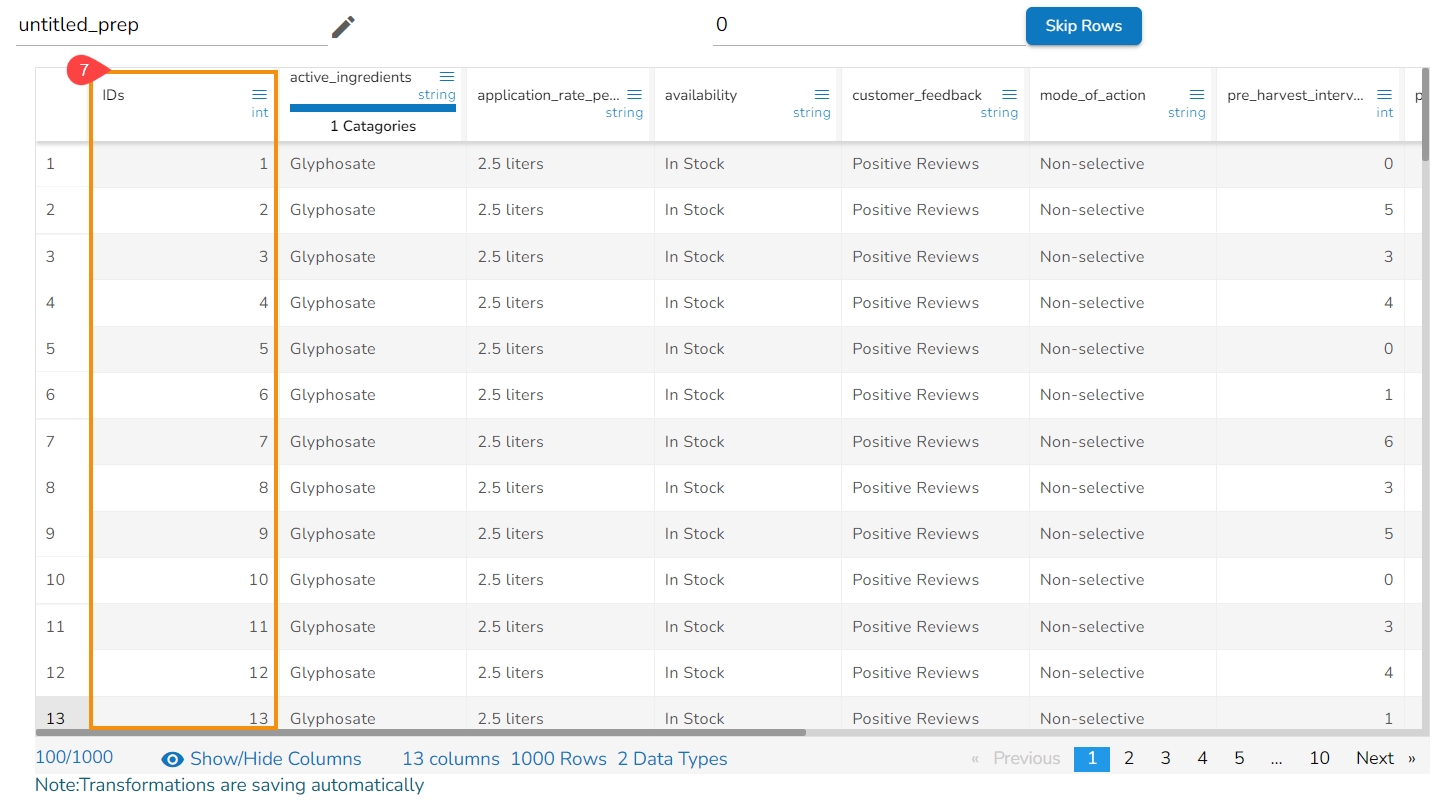

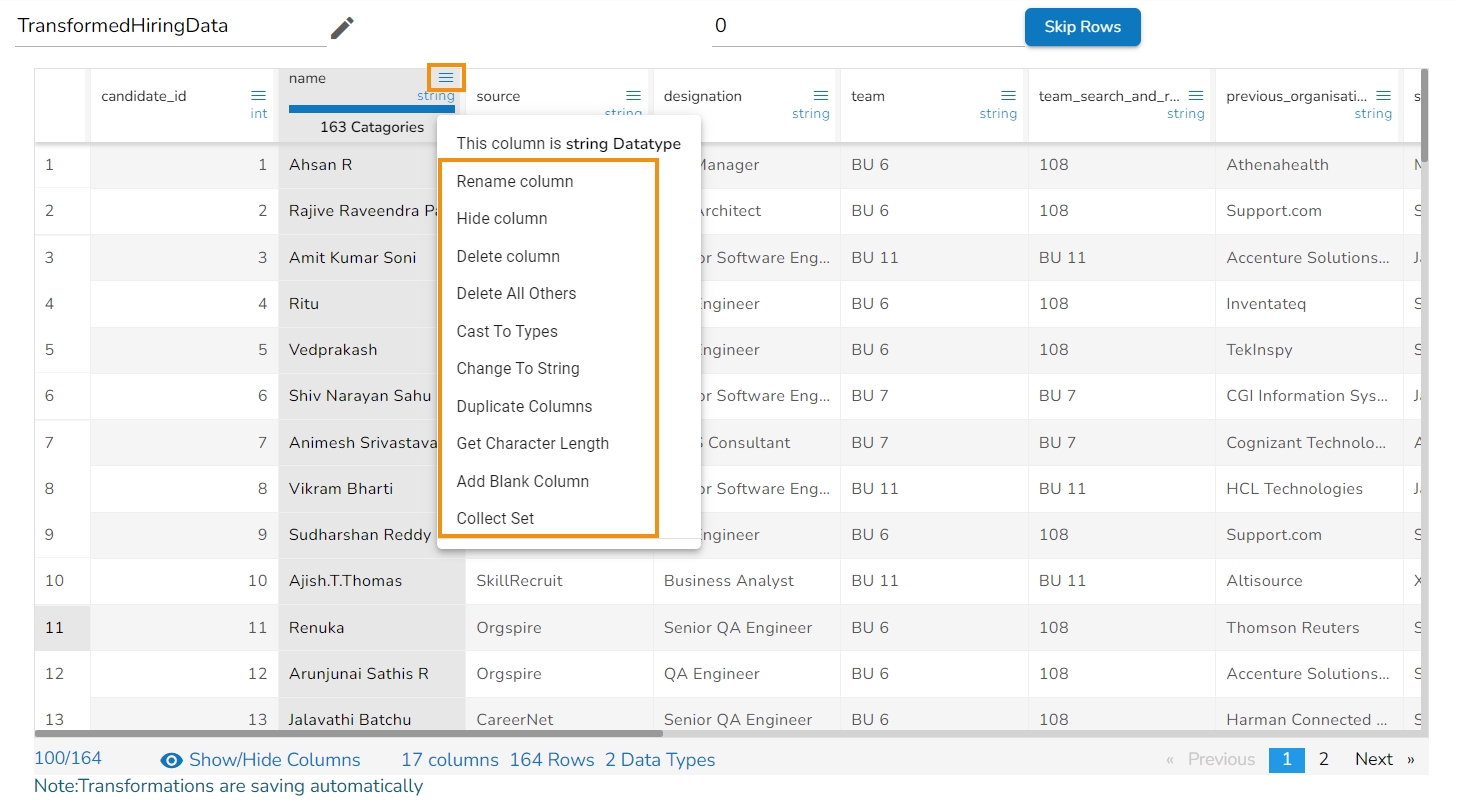

Each Column Header contains a Drop-down icon. By clicking the Drop-down icon, a Context menu is displayed with some options to be applied to that column.

The following options are displayed while clicking on the Context Menu icon:

Rename column

Hide Column

Delete Column

Delete All Others

Duplicate Columns

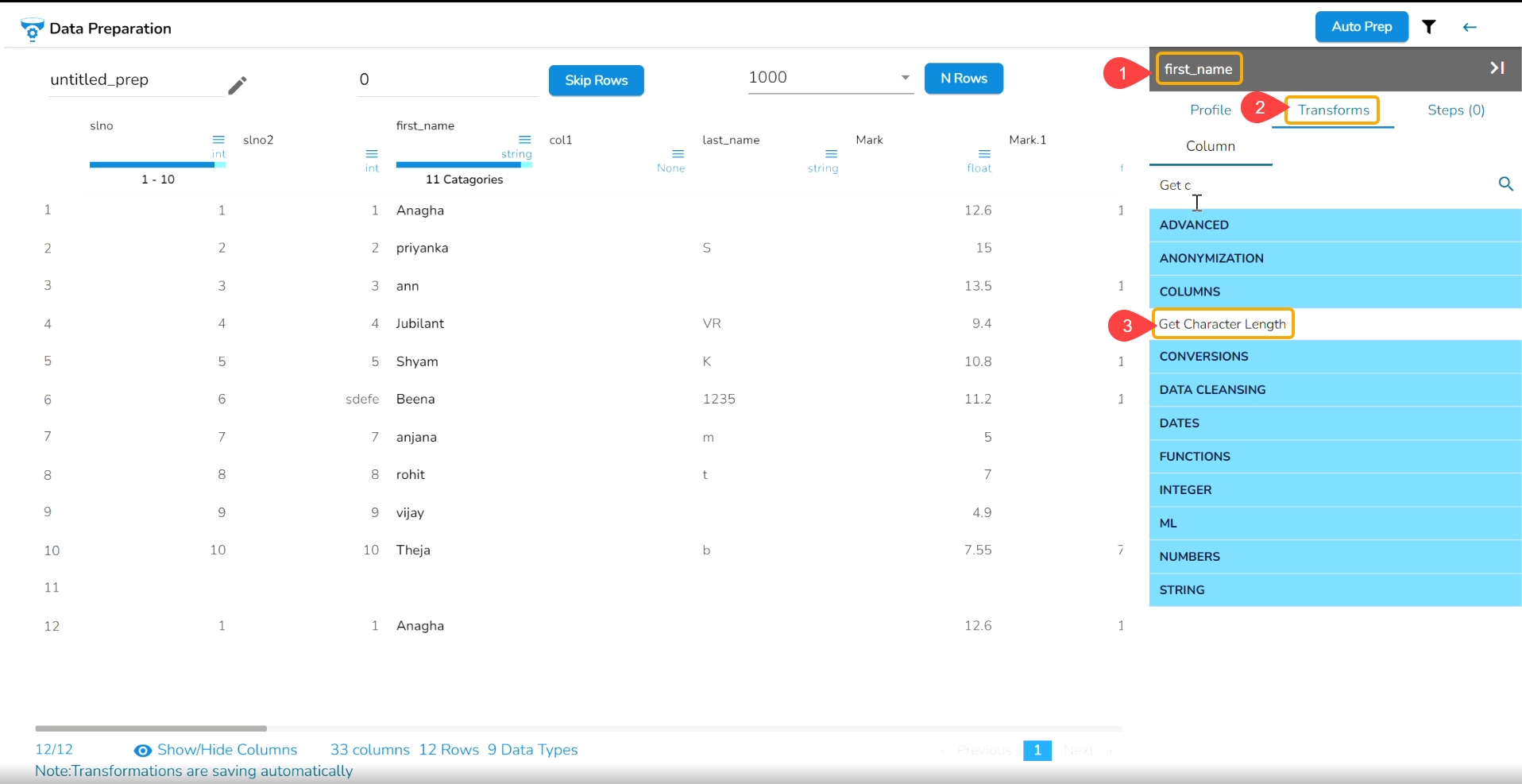

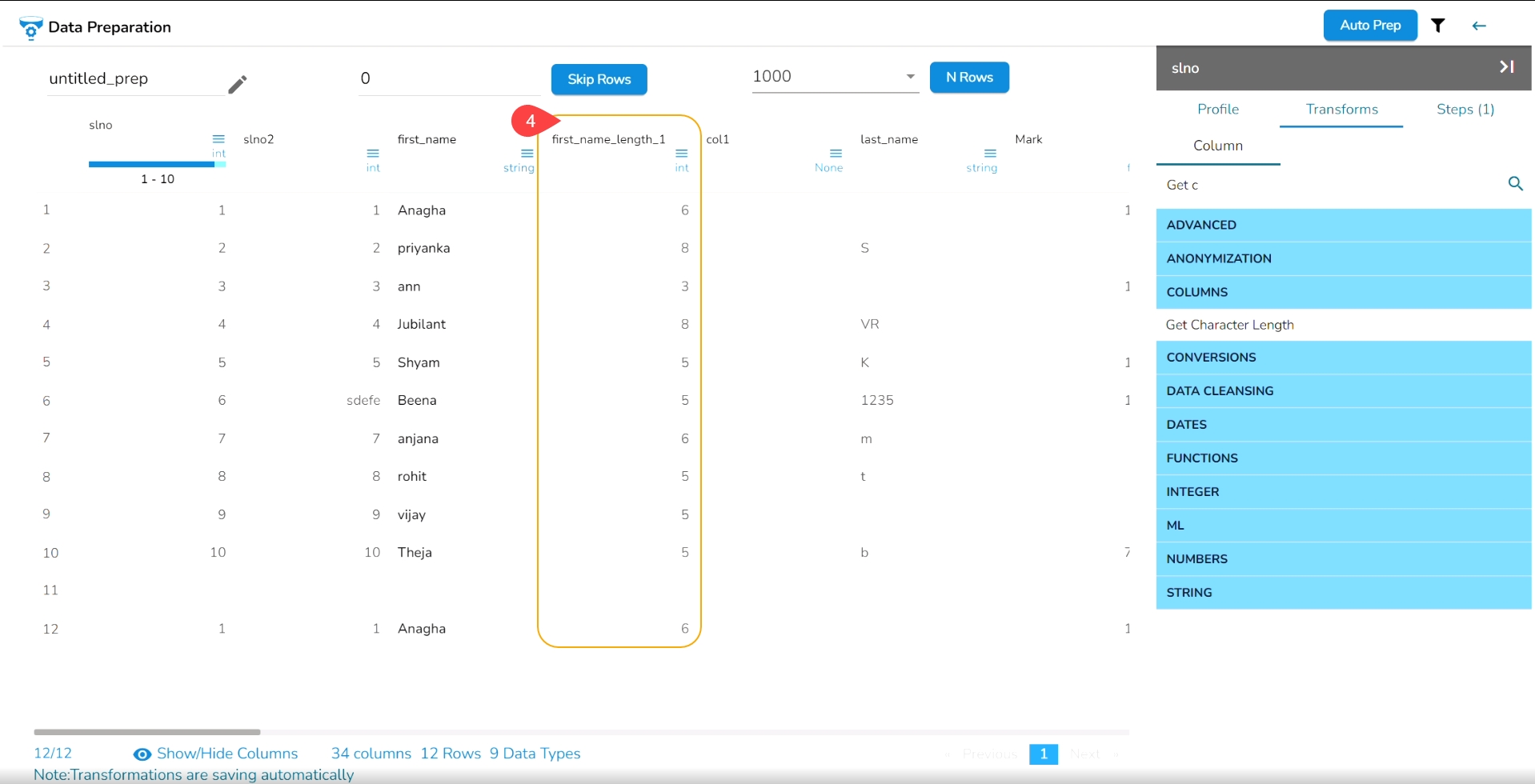

Get Character Length

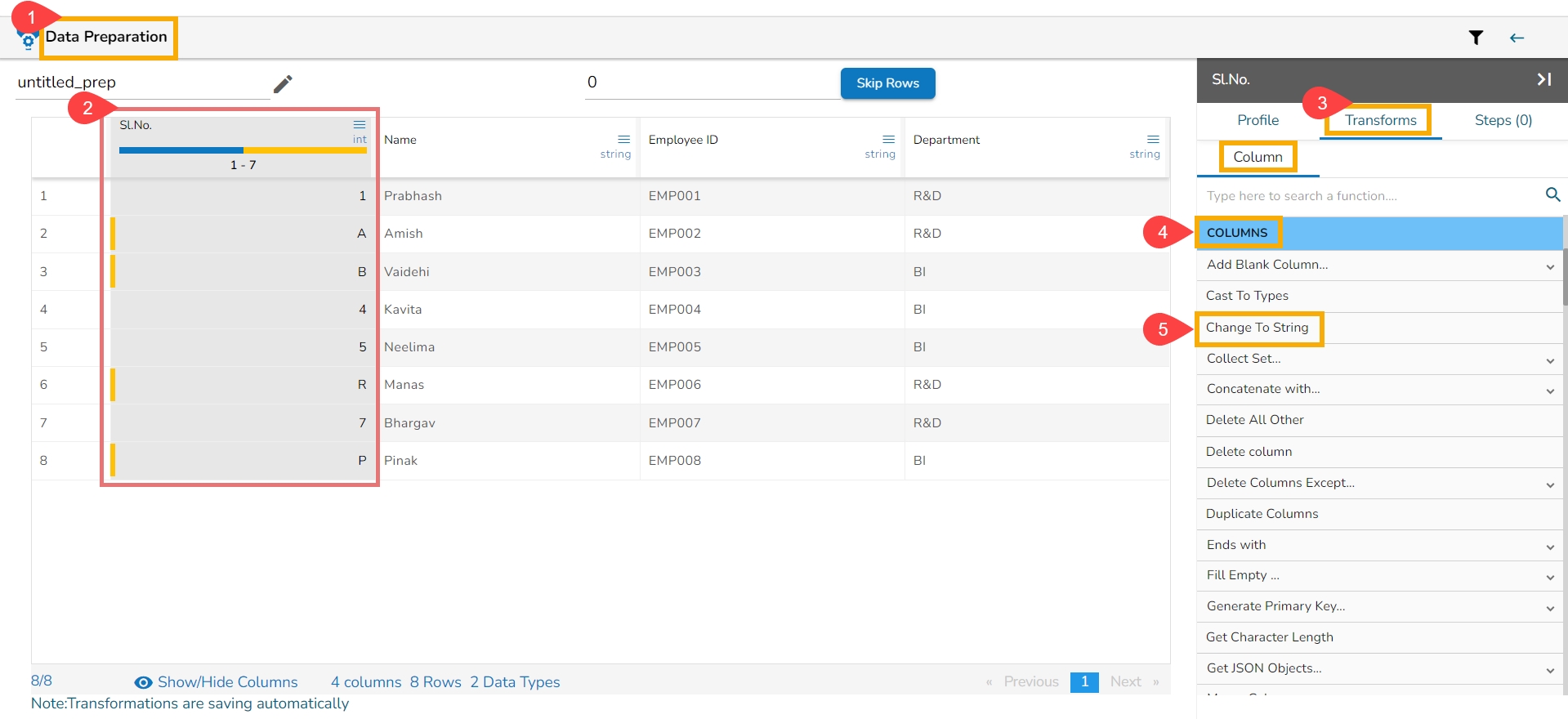

Change to String (appears only for Integer)

From the context menu, select the desired transform. The transform will be applied to your dataset automatically.

It also presents the data type of the column. It is analyzed based on the max match to any data type in the first 10K records. Consider that out of the 10000 rows sample, there are 9000 integers and 1000 string values, the selected data type is Integer. The 1000-string rows get detected as invalid rows.

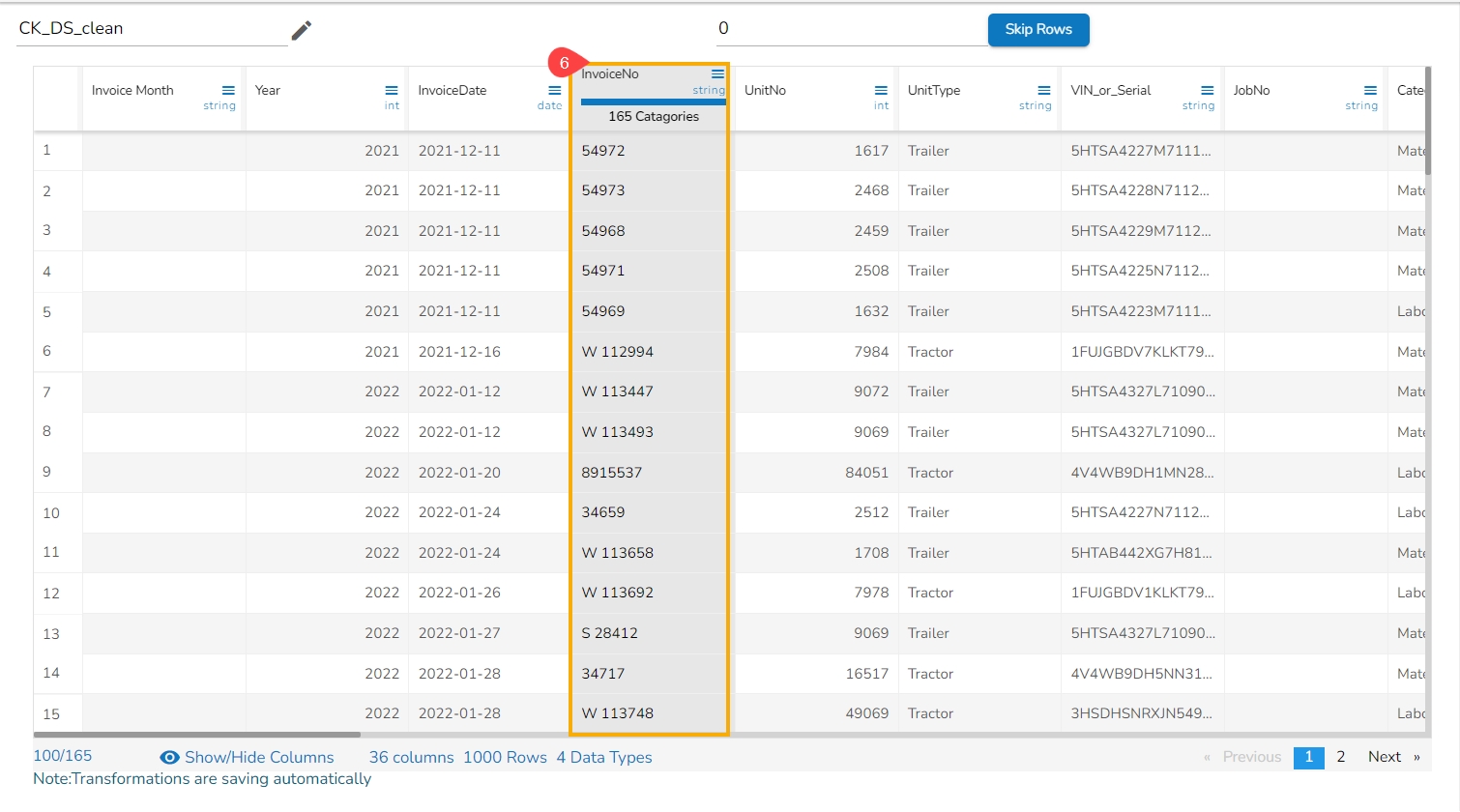

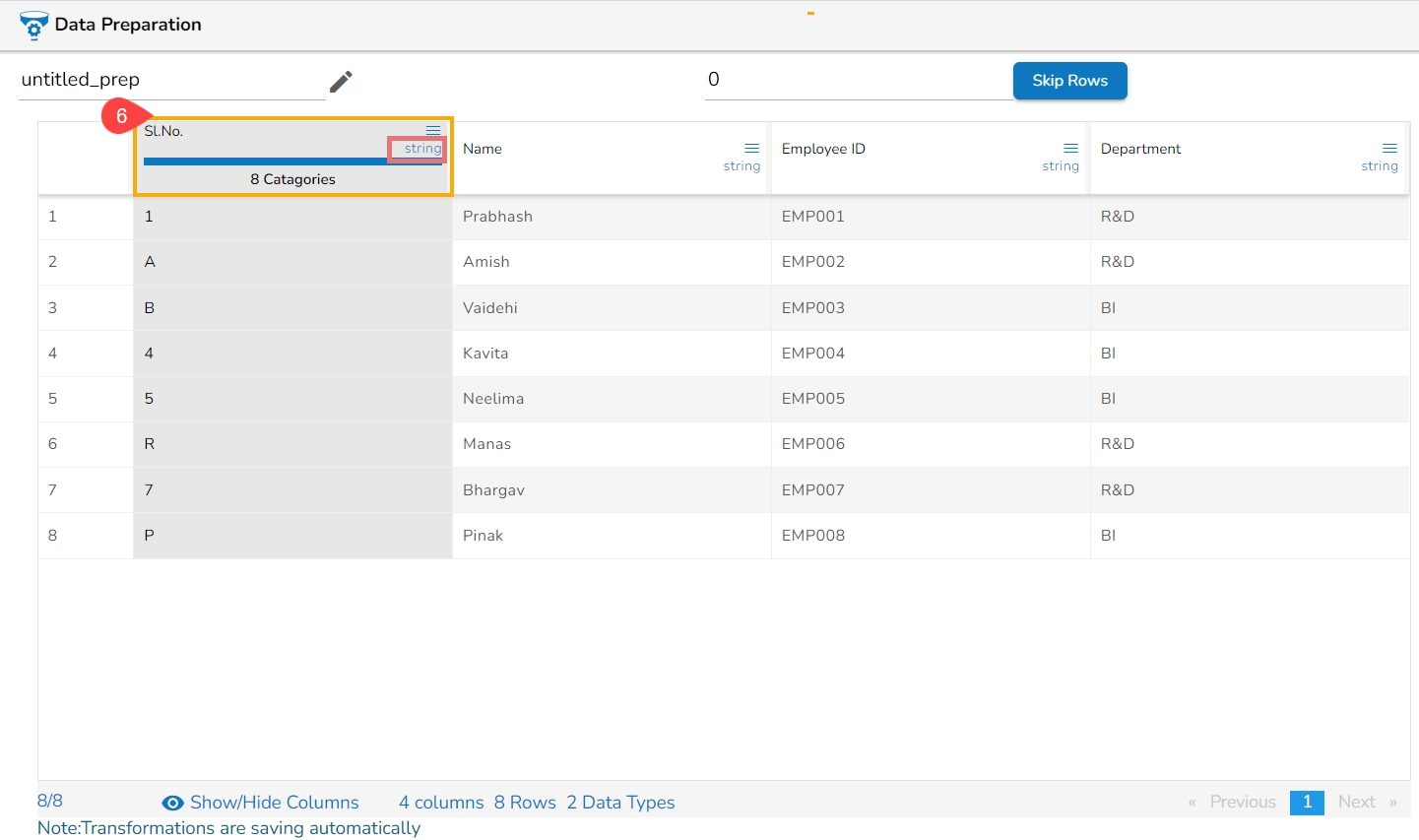

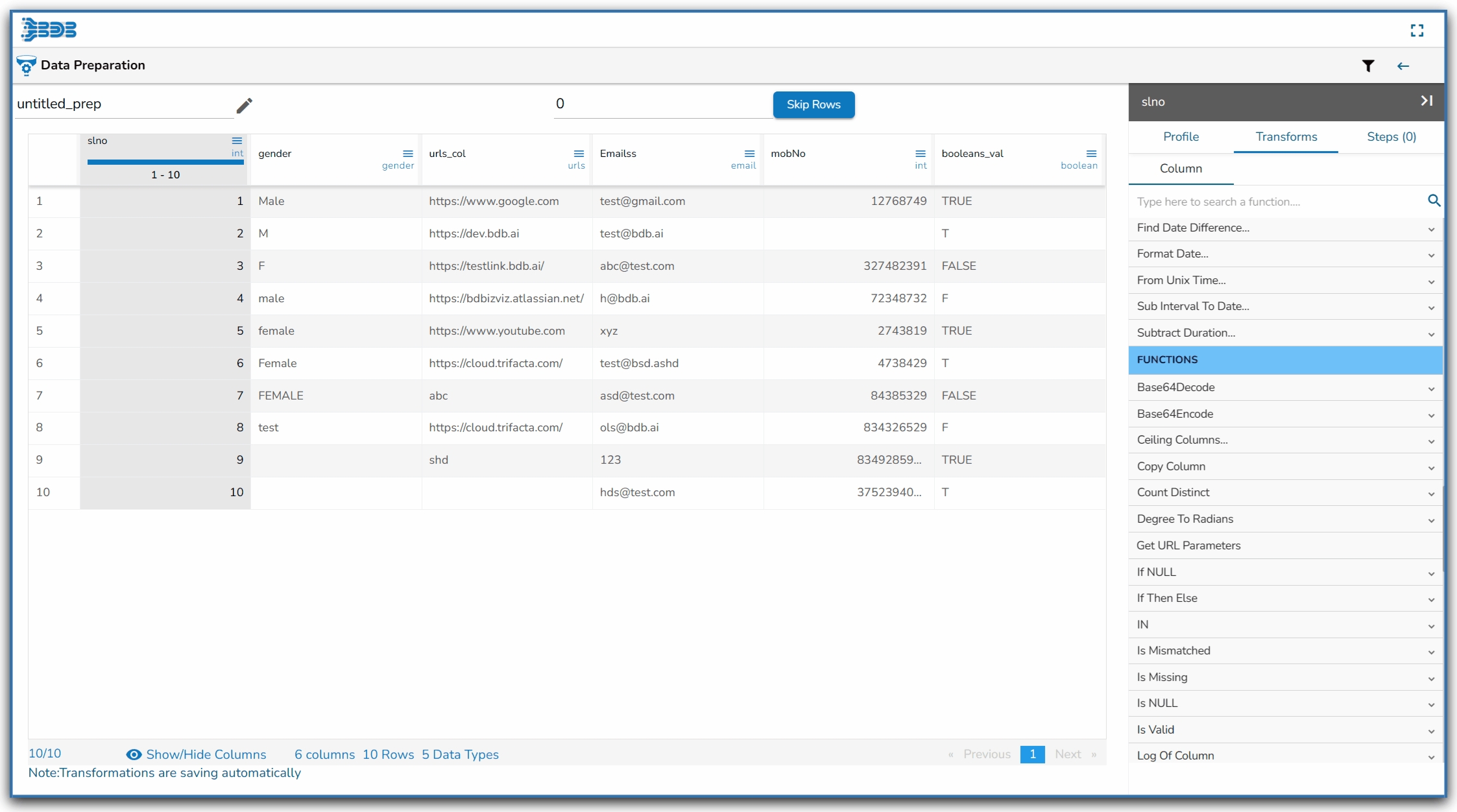

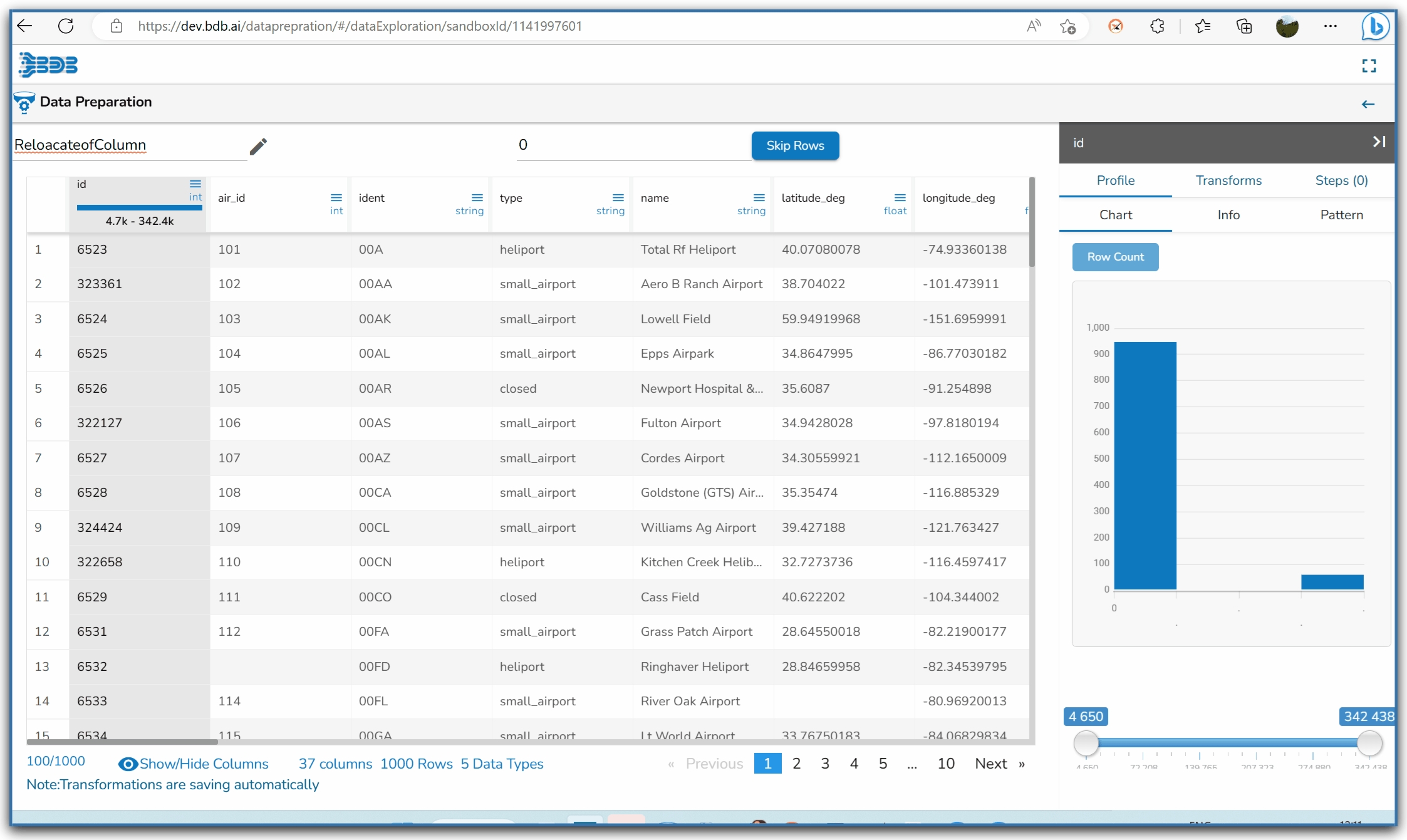

The column header in the Data Grid displays the following information based on the column types:

Columns with Integer values- The Min and Max values

Columns with String values- Total unique count or no. of categories

Columns with Date values- Range of dates including the min-max date

The Data Grid header displays Data Types. Some of the supported Data Types are given below:

Integer

Double

String

Date

Timestamp

Long

Boolean

Gender

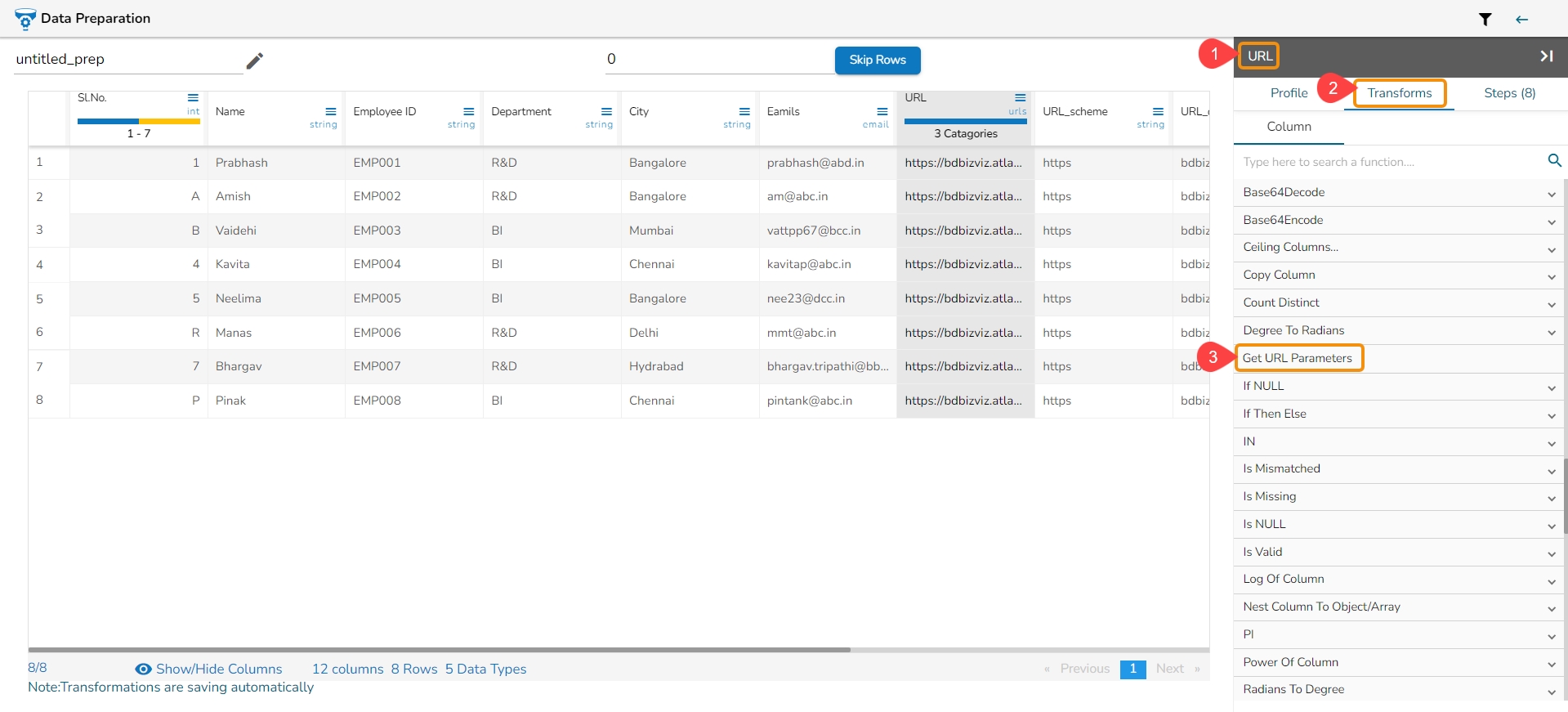

URL

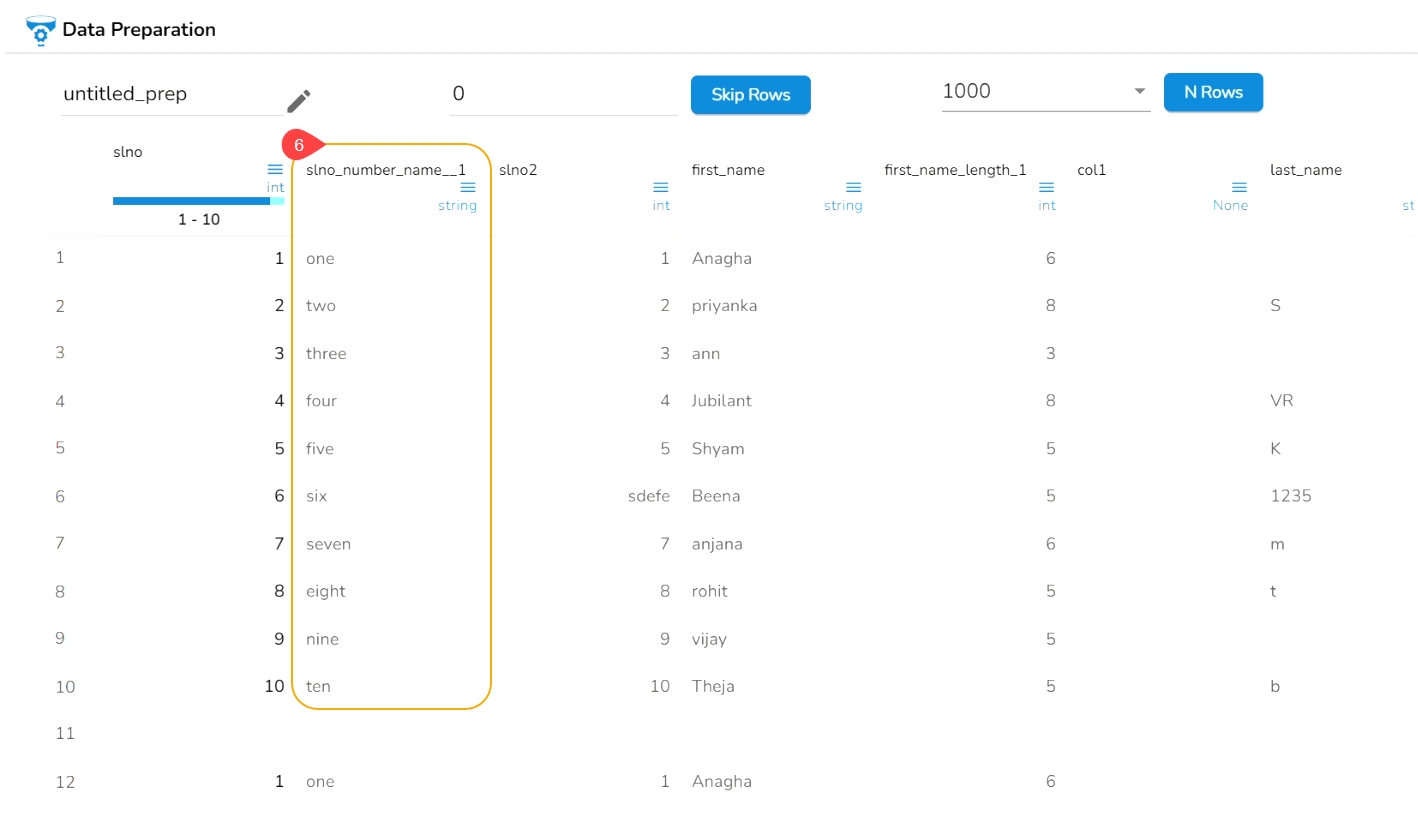

Please Note: Repetitive Column Names Handling under the Data Preparation module is based on the file types (as explained below).

When opening an Excel file with repetitive names of the columns in the Data Preparation framework, the column names will be mentioned with _0, _1 suffix by default.

For Example, if multiple columns with the name ID are present in an Excel file, the Data Preparation will read these columns as ID_0, ID_1, ID_2, and so on.

A CSV file handles such scenarios of repetitive column names by displaying no suffix for the first column and then progressively inserting .1, .2, and .3 suffixes for all the repetitive columns.

For Example, if multiple columns with the name ID are present in a CSV file, the Data Preparation will read these columns as ID, ID.1, ID.2, and so on.

A Data Quality Bar appears in the header of the data grid. The Data Quality is indicated through color-coding by clicking on a particular column.

The Data Quality Bar displays three types of data using 3 different colors.

Dark Blue-Valid Data

Orange-Invalid Data

Light Blue- Blank Data

Please Note: These color-coded bars appear by clicking on a particular column.

The Settings icon on the top of the Data Preparation workspace contains two actions to be applied to the data set rows.

The Skip Rows functionality will help the user to skip the selected records from the specified index. The user can limit the Data Preparation up to some no. of rows by using the Skip Rows option. The skipped rows will be excluded from the original dataset while applying the Data Preparation transformations.

Navigate to the Data Preparation Workspace.

Click the Settings icon.

The Skip & Total Rows drawer opens.

Provide the number till where you want to skip the data.

Click the Apply option.

The dataset grid will exhibit the dataset with the specified rows skipped.

The Sample Size reflects the change after applying the Skip Row.

The Info section also displays changed Count and Valid data.

Please Note:

The default value for Skip Rows functionality is 0.

After saving the Data Preparation with Skipped rows while re-opening the same Data Preparation the Skip rule gets removed.

The Skip Rows functionality does not support the Data Preparations created based on the Data Sets.

In cases where the dataset is extensive, containing more than 1000 rows, it's recommended to use skip row and total row functionalities together for better performance or efficiency.

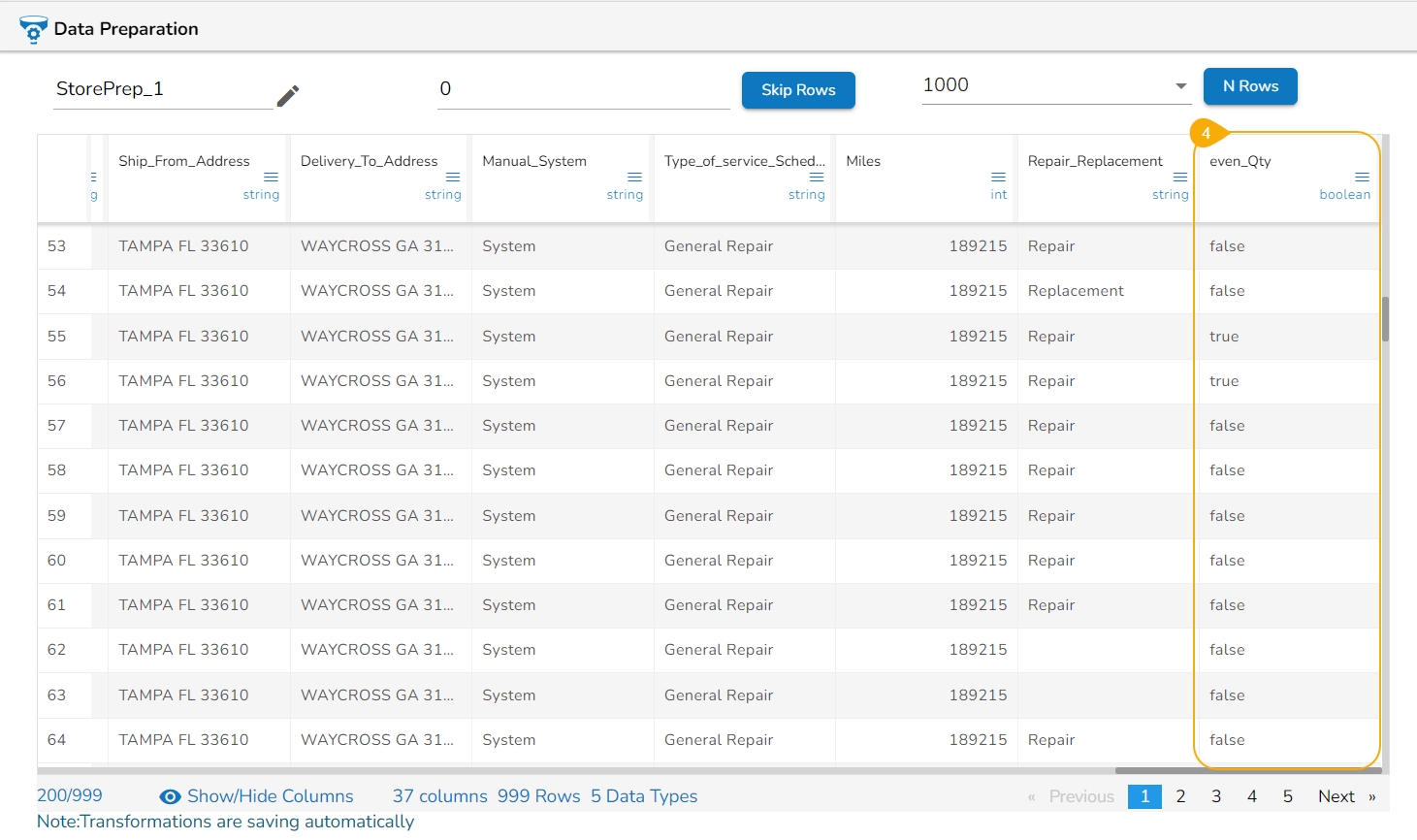

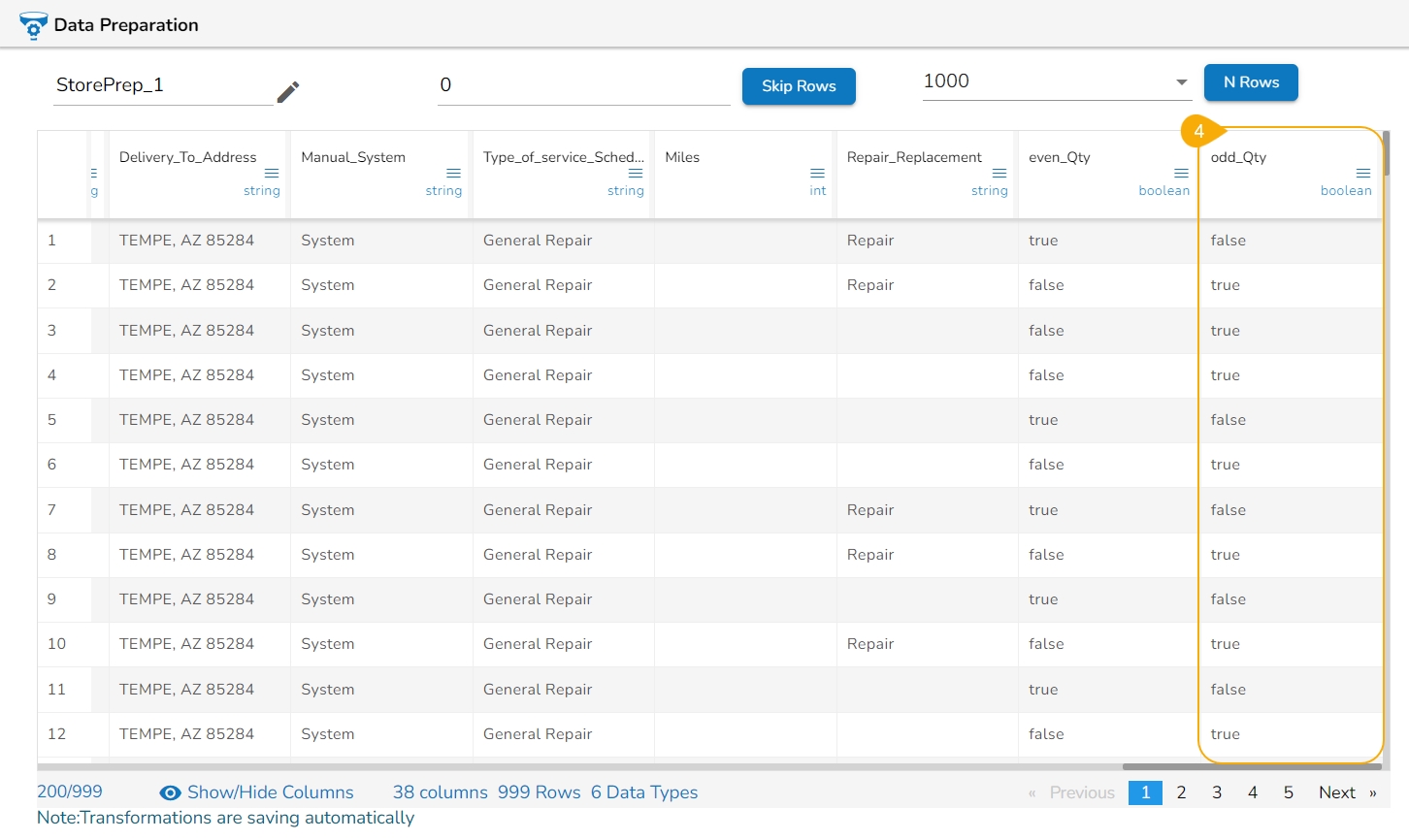

By default, the grid always displays the first 1K rows of the dataset. The user can use the N Rows option to change or modify the limit of the Data set displayed in the Data Grid. The N Rows option is provided on the top of the Data Grid view, the user can change the view to 2K, 3K, 4K up to 5K using this option.

Navigate to the Data Preparation Workspace.

Click the Settings icon.

The Skip & Total Rows drawer opens with the default value for the Total Rows.

Select an option for the Total Rows using the drop-down menu.

Click the Apply option.

The Sample Size number of rows will reflect the selected total number of rows.

The Count and Valid no. of data mentioned under the Info option will also display the changed total data.

Each page displays 200 records by default in the grid display. The pages will be added to the Grid display to accommodate the remaining rows based on the selected no. of the Total row.

Please Note: The Total Rows functionality is not supported for a Data Preparation created based on a Data Set.

This option allows the user to instantly hide or show the rows based on their need to derive meaningful insights from the displayed data.

Check out the illustration to use the Show/Hide option.

Navigate to the Grid view of any selected Data Preparation.

Click the Show/Hide Columns option at the top of the displayed grid view of the data.

The Show/Hide Columns drawer appears displaying the available columns from the selected Data Preparation.

Select the columns using the given checkboxes provided for those columns.

The selected columns will disappear from the Data Grid display.

Un-check the checkboxes for the same column(s).

The column(s) start reflecting in the Grid view.

Please Note: The Hide Columns option can be accessed from the menu icon provided for each column in the Data Grid display of the dataset.

Auto Prep is an automated process to streamline and clean datasets by applying various data-cleaning techniques and transformations. These techniques may include handling missing values, standardizing data formats, removing duplicates, and performing other preprocessing tasks to ensure data is consistent, accurate, and ready for analysis.

Implementing Auto Prep can save time and effort compared to manual data cleaning, especially for large datasets with numerous variables. By automating the cleaning process, data scientists and analysts can focus more on analyzing insights and making informed decisions rather than spending excessive time on data preparation. All the applied steps are neatly mentioned under the Steps tab.

Please Note: Auto Prep will quickly be applied all over the dataset to clean the complete dataset.

A set of transforms is included in this process. The explanation of each Auto Prep transform is given below:

Please Note:

The most important/ significant transform as a part of Auto Prep is Remove Special Character from Metadata which will be useful in the columns present in the dataset with no proper naming convention.

While applying Auto Prep other than the set of transforms provided under the Auto Prep, the trailing leading whitespaces from the dataset are removed if present.

Check out the given video on the Auto Prep functionality.

Navigate to a dataset displayed in the Data Preparation framework.

Click the Auto Prep option from the top right menu panel.

The Transformations List window opens with the list of the suggested Data Preparations.

The user can modify the suggested list by using the checkboxes.

Click the Proceed option after selecting all the required data preparation options from the list.

Open the Steps tab.

All the selected data preparations are applied to the dataset. The Auto Prep entry gets registered as AUTO DATAPREP under the Steps tab.

The applied Transforms are listed below by clicking on the AUTO DATAPREP step from the Steps tab.

Please Note:

The saved Data Preparation using Auto Prep also appears under the Preparation List while opening the data sandbox file from the Data Sandbox List page.

The Remove Special Characters from Metadata will be disabled by default while using Auto Prep. This transform will be enabled only when the headers of the selected dataset contain special characters.

Once the Auto Prep feature has been applied to a Dataset it will be disabled for the dataset. The Auto Prep feature can be enabled in such scenarios only after deleting the applied AUTO DATAPREP step from the Steps tab.

The Filter feature allows the user to customize the display by selecting a specific column/ row or choosing a data type from the listed options.

Check out the illustration to understand the Filter functionality for Columns and Rows.

Navigate to the landing page of the selected Data Preparation.

Click the Filter icon provided on the top right side of the screen.

The Filter drawer window appears displaying the default view for the Filter functionality.

The user can filter the data display based on the following aspects:

Data Types: Select the data types from the available list based on which you wish to filter the data.

Column: Provide the name of a specific column to filter the view by that column. For example, the given image displays data filtered by the columns that contain the "Fir" letters in the titles (There is only one column with these letters in the given dataset).

Row: Provide the value of a specific row to filter data by that value. For example, the following image filters the data by the rows that contain the Indeed value.

Please Note:

The Filter dialog box will display all the applicable data types to the available categories of columns from the selected Data Preparation.

The Filter dialog window displays the data type options selected by default while opening it for the first time. The user can edit the choices after opening it.

Keep the data type option checked that can display multiple columns in the filtered view while applying the Column or Row filtering option.

The user will get a Save option on the Data Preparation workspace screen to save the concerned Data Preparation.

Navigate to the Data Preparation workspace.

Provide a name for the Data Preparation or modify the existing name if needed/ Apply transform.

Click the Save option.

A notification message appears to ensure the user.

The Data Preparation gets saved on the Data Preparation List page.

Please Note:

The Save option gets enabled only after one transform or Auto Prep is applied to the dataset.

If the user fails to save a Data Preparation after applying some transforms, and closes the Data Preparation workspace. It will still be saved with an auto-generated name under the Data Preparation List.

Pagination is implemented in the grid display of data. The tool displays 200 records on each page by default, by changing the Total Row count the no. of pages displayed for the data grid will change.

Key Metrics are displayed at the bottom of the data grid to provide valuable insights into the dataset. These metrics offer essential contextual information, enabling users to make informed decisions, perform data profiling, and gain a deeper understanding of the dataset that is being prepared. This includes:

Column Count: The total number of columns in the dataset to quickly assess the complexity and scope of the data.

Data Type Count: The number of distinct data types present in the dataset, enabling users to understand the variety and diversity of data formats and structures.

Source: It displays the name of the source data.

Sample Row Count: The total number of rows in the dataset, providing an overview of the dataset's size and volume.

Please Note:

The Data Preparation workspace supports more than the listed Data Types.

The user can edit the name for the Data Preparation using the Title bar.

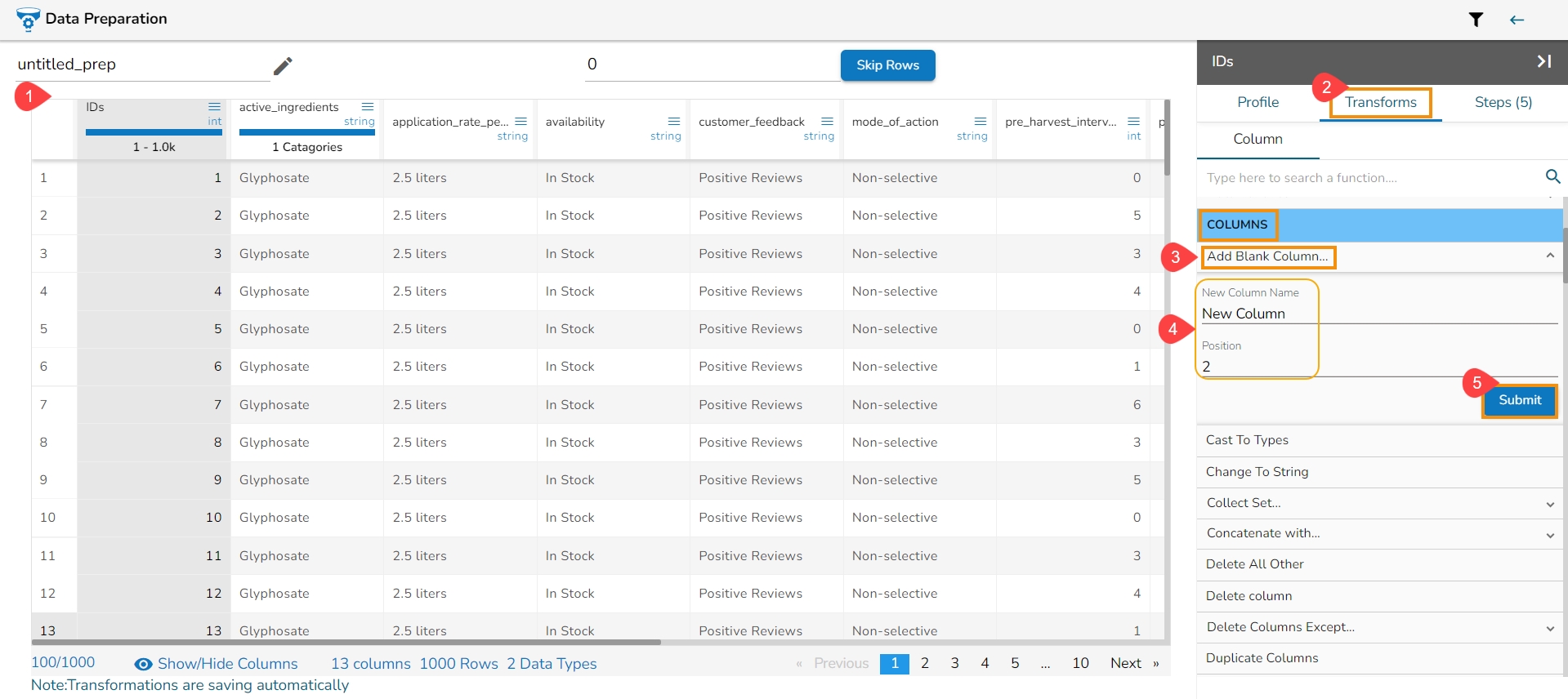

The Add Prefix transform allows you to effortlessly incorporate custom text at the outset of each value. The seamless integration of personalized text at the beginning of each value empowers you to enhance and customize your data in a user-friendly manner.

Check out the given illustration on how to user Add Prefix transform.

Steps to perform the Add Prefix transformation:

Select a column from the Data Grid display of the dataset.

Select the Transforms tab.

Open the Add Prefix transform from the Column category.

Provide the following information:

Enable the Create new column option to add a new column with the transformed values.

Pass the prefix value that is needed.

Select one or multiple columns where the prefix needs to be added.

Click the Submit option.

Result will come as a nw column or on the same column by adding the prefix value on the selected columns.

The implementation of the Add Suffix transform enables the effortless inclusion of custom text at the end of every value. This transformative technique offers a user-friendly approach to enrich and modify the original data, allowing for the seamless integration of personalized text at the end of each value. By leveraging the flexibility of the Add Suffix transform, you can tailor and enhance your data with ease, ensuring it aligns perfectly with your specific requirements and desired outcomes.

Steps to perform the Add Suffix transformation:

Navigate to the Transforms tab.

Select the Add Suffix transform from the String transform category.

Provide the following information:

Enable the Create new Column (Optional) to add a new column with the result data.

Pass the suffix value that is needed.